1. Introduction

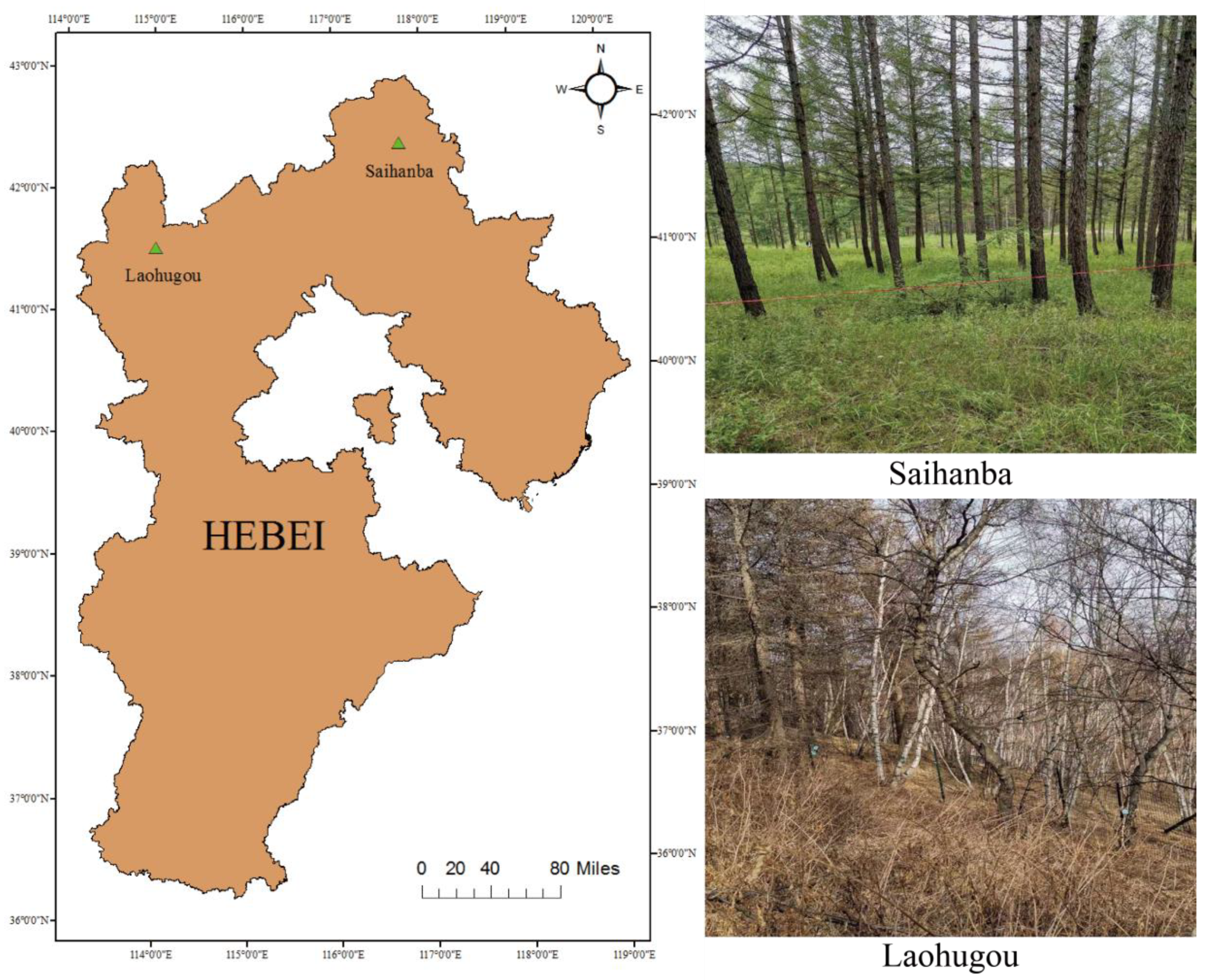

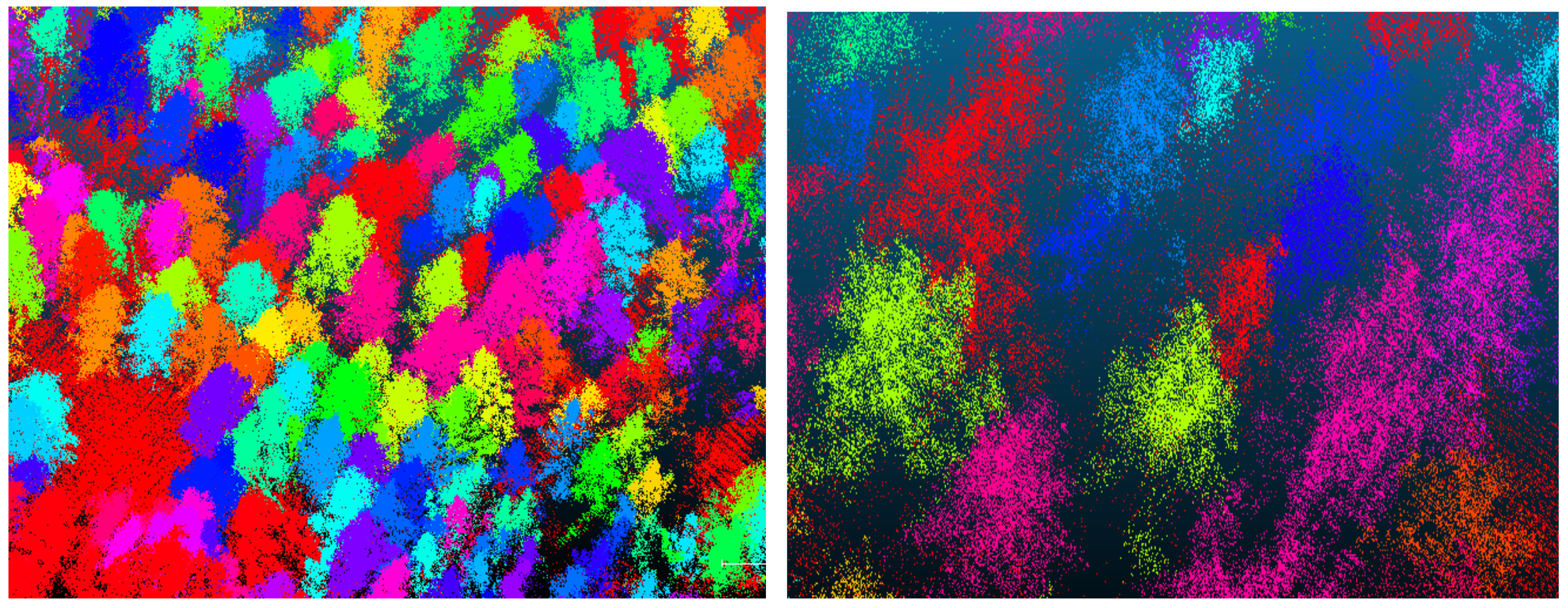

Accurate classification of tree species is essential for forest resource management and ecological conservation [1,2,3]. Remote sensing technologies are widely used in forest surveys because they allow large-scale observations to be gathered rapidly, in a timely and periodic manner [4,5,6]. Light Detection and Ranging (LiDAR) is an active remote sensing technology that can quickly and accurately extract three-dimensional feature information. In addition, compared with optical remote sensing satellites, LiDAR permits all-weather operation and is less affected by the external environment. LiDAR also has powerful low-altitude detection advantages [7,8]. Therefore, LiDAR greatly enhances forest resource investigations.

In recent years, with the continuous improvement of computer hardware performance, massive data calculations and complex neural network model training have evolved from theoretical designs to applications. The winners of the 2012 ImageNet competition gained an unmatched advantage using AlexNet, promoting the boom of deep learning models in the field of image recognition [9]. Compared with two-dimensional image data, point cloud data obtained by LiDAR add information in the vertical dimension. This makes feature extraction and the training of deep learning models on point cloud data considerably more challenging [10,11]. Currently, many scholars are engaged in deep learning research on point clouds, and their methods can be divided into three categories: (1) deep learning models based on multiple views, which convert a point cloud into two-dimensional images from multiple perspectives through projection and then classify them with deep learning models, with good results [12,13,14]; (2) deep learning models based on voxel data, which convert the point cloud into 3D voxel data and then extract 3D features using a convolutional neural network [15,16,17]; (3) deep learning models based directly on the point cloud, which use the point cloud data for end-to-end feature extraction and model training [18,19,20].

The rapid development of 3D point cloud deep learning technology has helped advance research into tree species classification from forest point clouds. Seidel et al. [21] used deep learning methods to predict tree species from point cloud data acquired by a 3D laser scanner. The approach converts the three-dimensional point cloud data of each tree into a two-dimensional image and uses a convolutional neural network to classify the image, thereby completing the tree species classification. The experimental results showed that the classification accuracy of ash, oak, and pine trees was improved by 6%, 13%, 14%, and 24%, respectively. Hamid et al. [22] classified conifer/deciduous airborne point cloud data based on deep learning methods. They converted LiDAR data into digital surface models and 2D views and used deep convolutional neural networks for classification. Experimental results showed that the cross-validation classification accuracies of conifers and deciduous trees were 92.1 ± 4.7% and 87.2 ± 2.2%, respectively; the classification accuracy of understory trees was 69.0 ± 9.8% for conifers and 92.1 ± 1.4% for deciduous trees. Mizoguchi et al. [23] used a convolutional neural network to identify the tree species of a single tree in Terrestrial Laser Scanning (TLS) data. This method converted the point cloud data into a depth map and extracted features through the convolutional neural network to complete tree species recognition. The experimental results showed that the overall accuracy of the classification reached 89.3%. In these studies, the point cloud data of the tree were converted into two-dimensional image data, and deep learning image classification was used to classify the tree species. However, in the process of converting point cloud data into image data, feature information was lost, and the characteristics of the point data were destroyed.

Guan et al. [24] classified tree species based on deep learning applied to point cloud data obtained by a mobile LiDAR. The method converted the point cloud into voxel data to extract a single tree and used the waveform to build the tree structure. They used deep learning to learn high-level features from the tree waveform. The overall accuracy reached 86.1%, and the kappa coefficient was 0.8, which improved the classification accuracy of tree species. Zou et al. [25] classified tree species in TLS data of complex forests using deep learning methods, voxelizing the extracted single tree data and extracting hierarchical features from the voxel data. Finally, they used the deep learning model to complete the tree species classification. Experimental results showed that the average classification accuracies on the two data sets were 93.1% and 95.6%, which were better than those of other algorithms. After converting the point cloud data into voxel data, applying deep learning to classify the voxel data into tree species produced excellent results. However, the process of converting point cloud data into voxel data was complicated and lost local features. Because the voxel data structure was complex, feature extraction and model training were inefficient.

Liu et al. [26] used Unmanned Aerial Vehicle Laser Scanning (UAVLS) and TLS data to classify tree species based on 3D deep learning. They proposed the LayerNet network, which divides each tree into multiple overlapping layers to obtain its local 3D structural characteristics. LayerNet then aggregated the characteristics of each layer to obtain the global characteristics and classified the tree species by convolution. The test results showed that LayerNet could accurately classify 3D data directly, with a classification accuracy of up to 92.5%. Briechle et al. [27] used PointNet++ to directly classify airborne point cloud data. Experiments showed that the accuracy was 90% for coniferous trees and 81% for deciduous trees. Xi et al. [28] used deep learning and machine learning algorithms to classify tree species in TLS data. They evaluated 13 machine learning and deep learning classification methods on nine tree species. The experimental results showed that the classification accuracy of deep learning was 5% higher than that of machine learning, and PointNet++ performed best. These studies conducted end-to-end deep learning model training on point cloud data. The approach did not require the conversion of point clouds into voxels or 2D images, avoiding the loss of features and local information and improving computational efficiency. However, they did not make use of the attribute information of the point cloud data, so the neural network could not extract more features during model training.

To overcome the problems in the above research, we propose using the Point Cloud Tree Species Classification Network (PCTSCN) to classify tree species with high precision for natural resource forest monitoring. Our network includes three main steps. First, the point cloud data of a single tree are down-sampled through geometric sampling to retain more local features. Then, we use the improved Farthest Point Sampling method to further extract the global feature points of every single tree. The sub-sampled single tree point cloud is taken as the training sample. Finally, the neural network is used to extract the characteristics of the spatial and reflection intensity information of the training samples for model training. The model obtained through PCTSCN training not only considers the local features of tree point cloud data but also integrates the global features. The PCTSCN increases the attribute information and information dimensions of each point, avoiding the limitations of the other research approaches.

3. Method

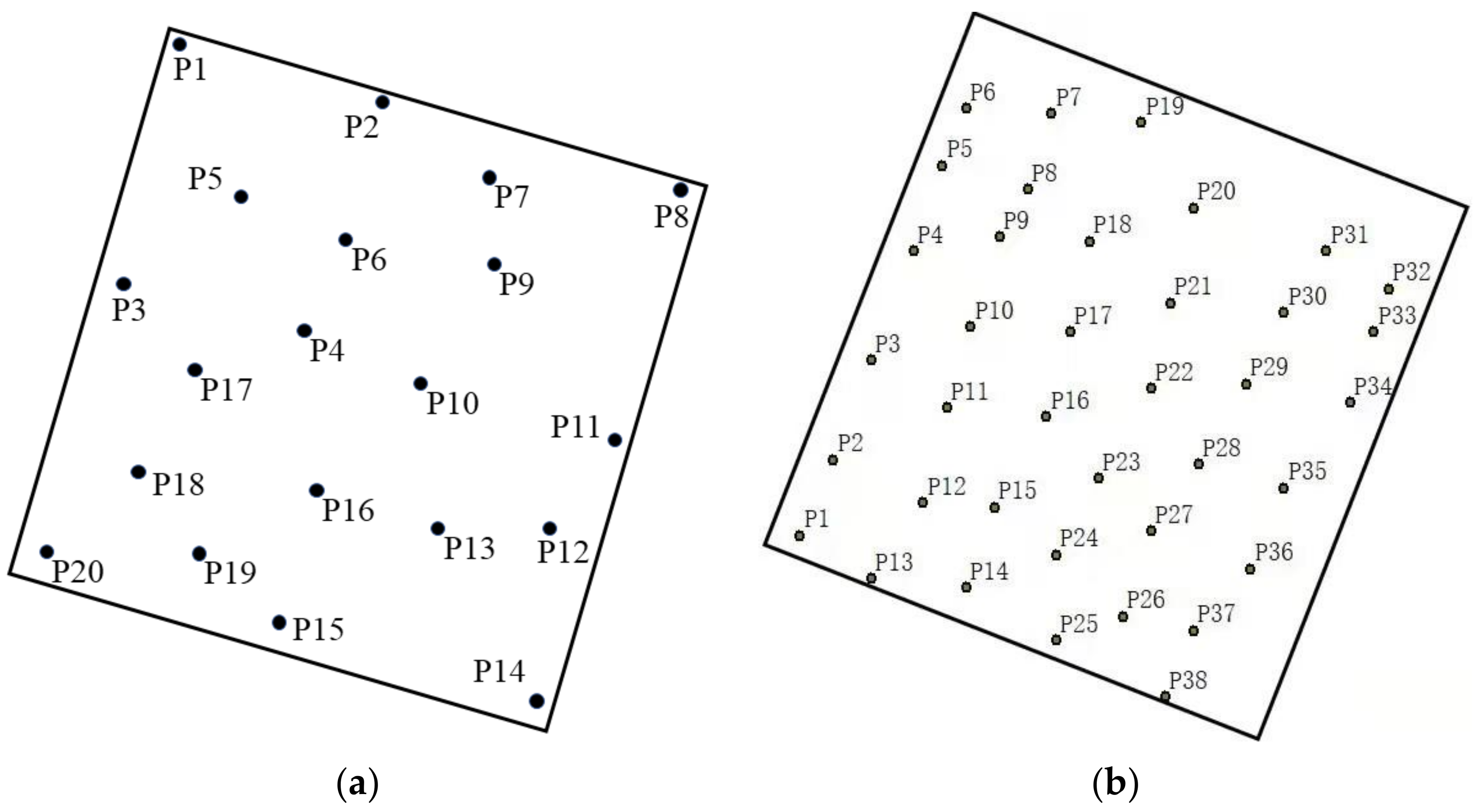

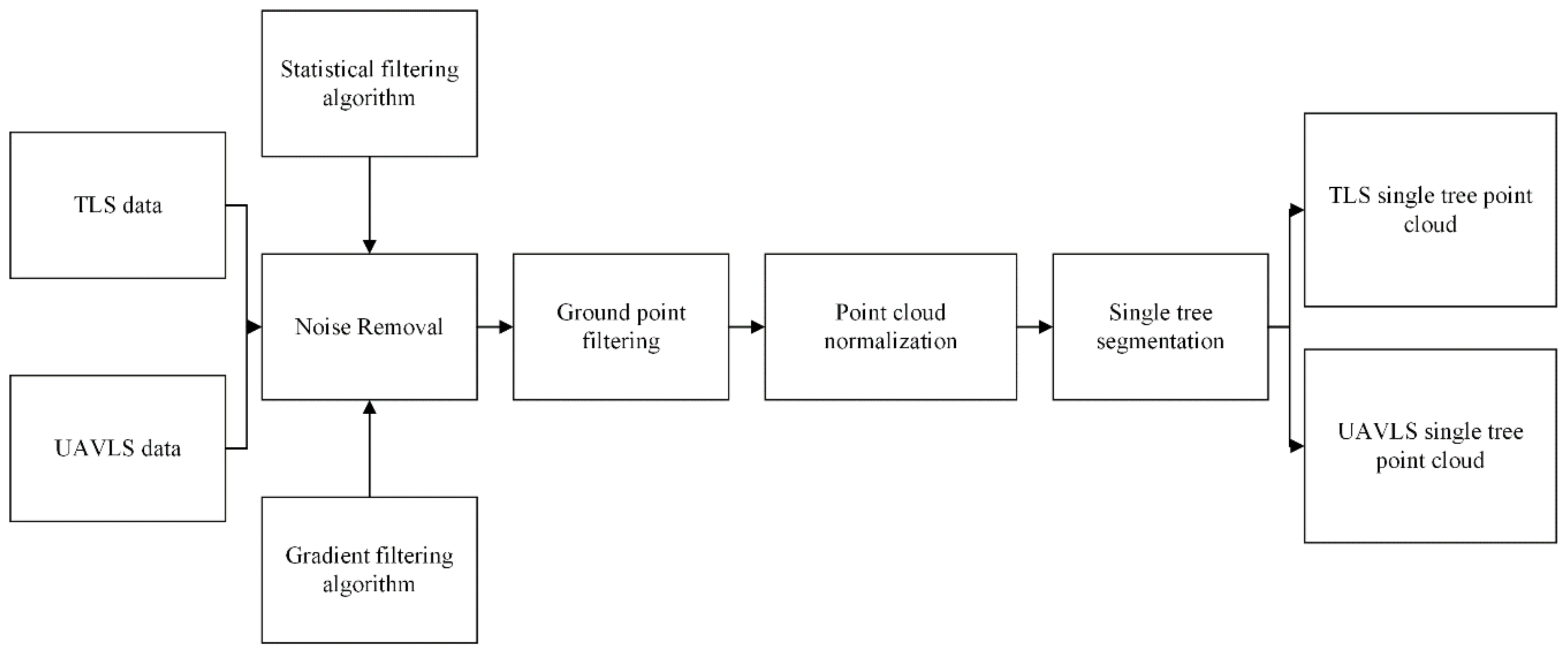

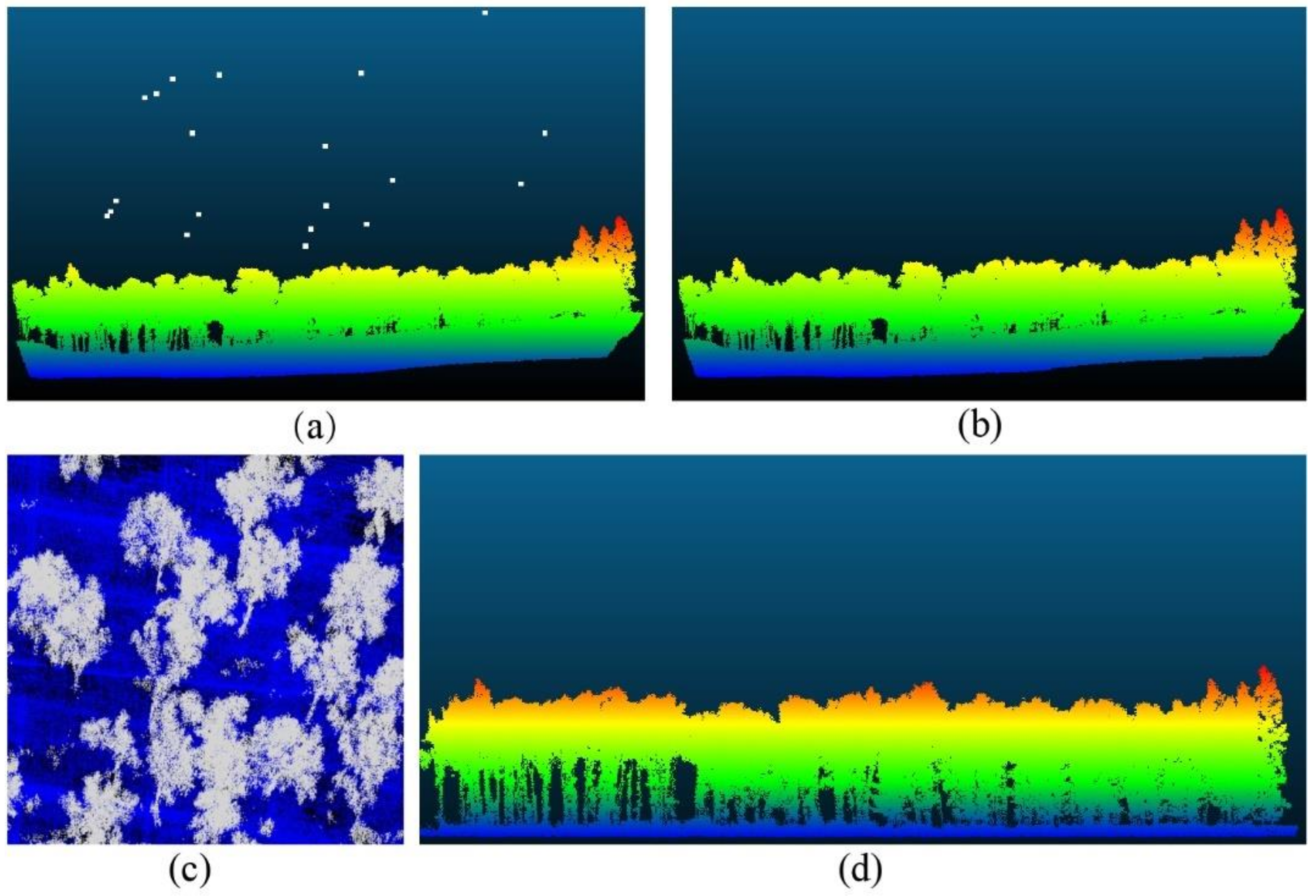

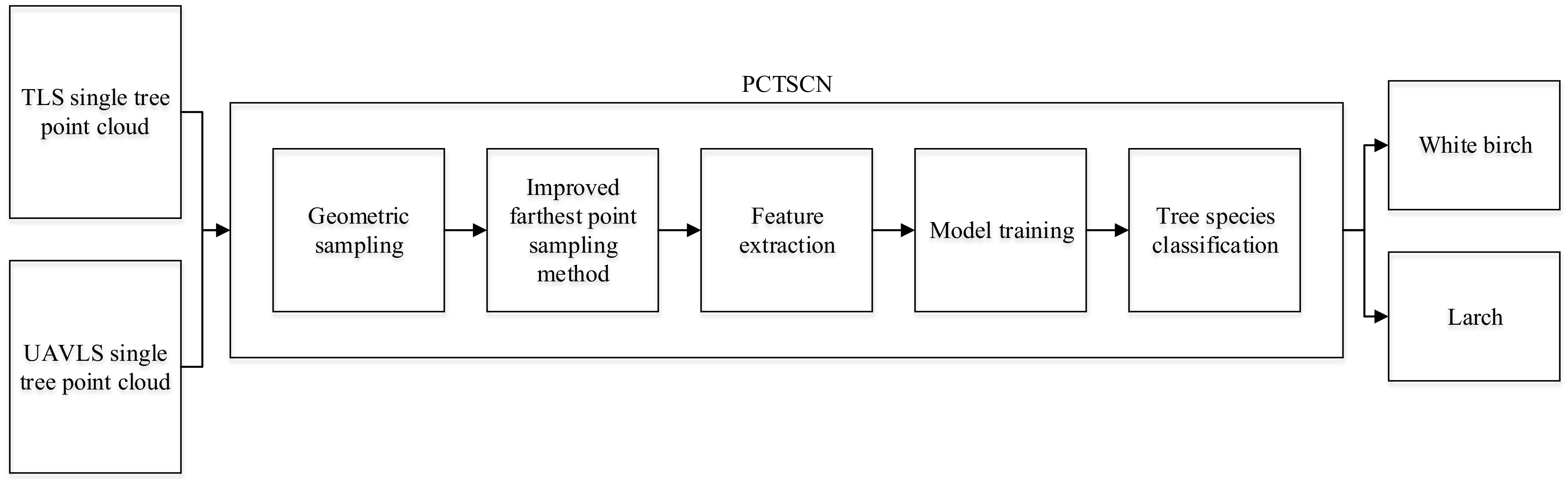

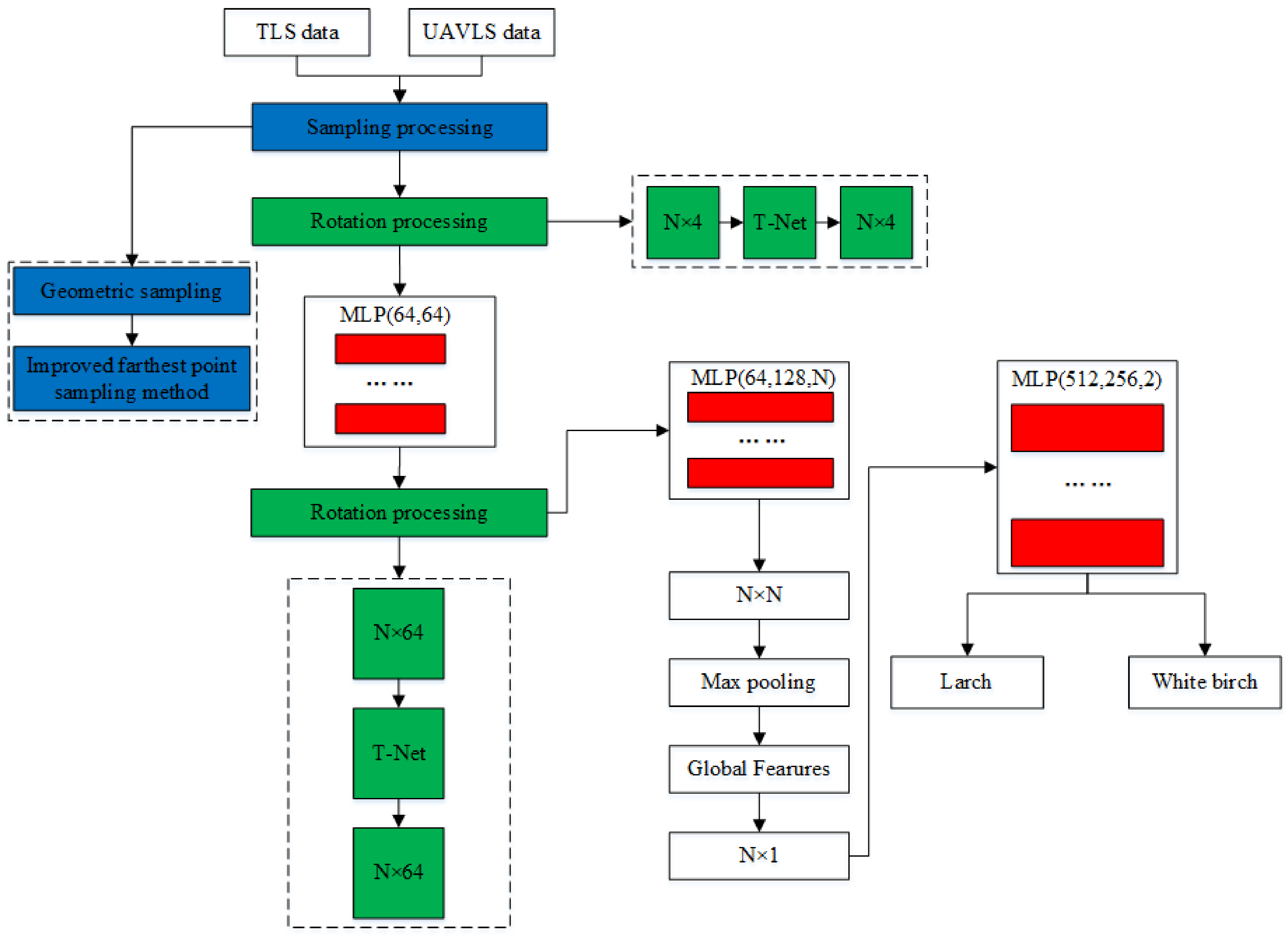

The PCTSCN proposed in this paper can directly classify tree species from point cloud data. We sampled the single tree point cloud twice in different ways to retain more local and global features. We added an attribute dimension to the neural network to extract reflection intensity information. In this way, the neural network could extract more features to improve the classification accuracy of the tree species. To verify the feasibility of the method, we classified the tree point cloud data obtained by TLS and UAVLS separately. First, PCTSCN performed geometric sampling on the point cloud data of single trees. Compared with uniform sampling, geometric sampling preserves more local feature information. Second, PCTSCN applied the improved farthest point sampling to the point cloud data of the tree processed in the previous step. In contrast to the farthest point sampling method, the improved farthest point sampling method sampled from the whole tree. The method completed the sub-sampling according to the set sampling conditions and retained the required number of feature points of the single tree. Third, we used the neural network in PCTSCN to extract high-dimensional features. PCTSCN added a reflection intensity dimension to the initial input feature matrix so that it contained more feature information. Fourth, PCTSCN conducted model training on TLS and UAVLS data with different sampling point densities and highest feature dimensions. Fifth, we used the softmax function to output the classification results in PCTSCN to complete the classification of the tree species. The workflow of PCTSCN is shown in Figure 7.

3.1. Geometric Sampling

Because white birch and larch trees have different shapes and structures, as many features as possible should be retained after sampling the point cloud data. In contrast to the uniform sampling used by Liu et al. [26] and PointNet, PCTSCN used a geometric sampling method to down-sample the point cloud data of larch and white birch. The local features of a single tree point cloud were more obvious where the curvature changed strongly. In the geometric sampling method, more points are extracted from the individual tree point cloud in places with large curvature changes. The specific steps of geometric sampling in PCTSCN are as follows:

- (1) Calculation of the K-nearest neighborhood of each point in the point cloud data of each single tree sample. Because the number of points in each point cloud sample after single tree segmentation was inconsistent, the threshold of the neighborhood range could be set according to the data volume of each single tree point cloud.

- (2) Extraction of the curvature of the area. Assuming that a surface approximated the point cloud data in the neighborhood of the point, the curvature at this point can be expressed by the curvature of the surface in that area.

- (3) Surface fitting with discrete points. We used the standard least squares surface fitting method, which takes the coordinates of the points in the neighborhood and minimizes the squared residual. The approximate equation parameters of the curved surface were obtained by solving this fit, and the mean curvature was obtained from the fitted surface.

- (4) Identification of the range of the curvature threshold. The points with a curvature greater than the threshold were saved; otherwise, they were discarded. In this way, the geometric characteristics of the tree point cloud were preserved.
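Step (3) can be illustrated with one common form of local least squares surface fit. The quadric model below is an assumption made here for illustration, as are the symbols $a$, $b$, $c$, $Q^2$, $H$ and the local coordinates $(x_i, y_i, z_i)$, which are taken to be centered on the query point with $z$ along the estimated surface normal and $i = 1, \ldots, K$ running over the K-nearest neighbors:

$$
z(x, y) = a x^2 + b x y + c y^2, \qquad
Q^2 = \sum_{i=1}^{K} \left( a x_i^2 + b x_i y_i + c y_i^2 - z_i \right)^2 .
$$

Setting $\partial Q^2 / \partial a = \partial Q^2 / \partial b = \partial Q^2 / \partial c = 0$ gives a $3 \times 3$ linear system for the parameters. Under this model the gradient of the surface vanishes at the query point, so the mean curvature there reduces to

$$
H = \tfrac{1}{2}\left( z_{xx} + z_{yy} \right) = a + c ,
$$

and a point would be kept in step (4) when its curvature magnitude exceeds the chosen threshold.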

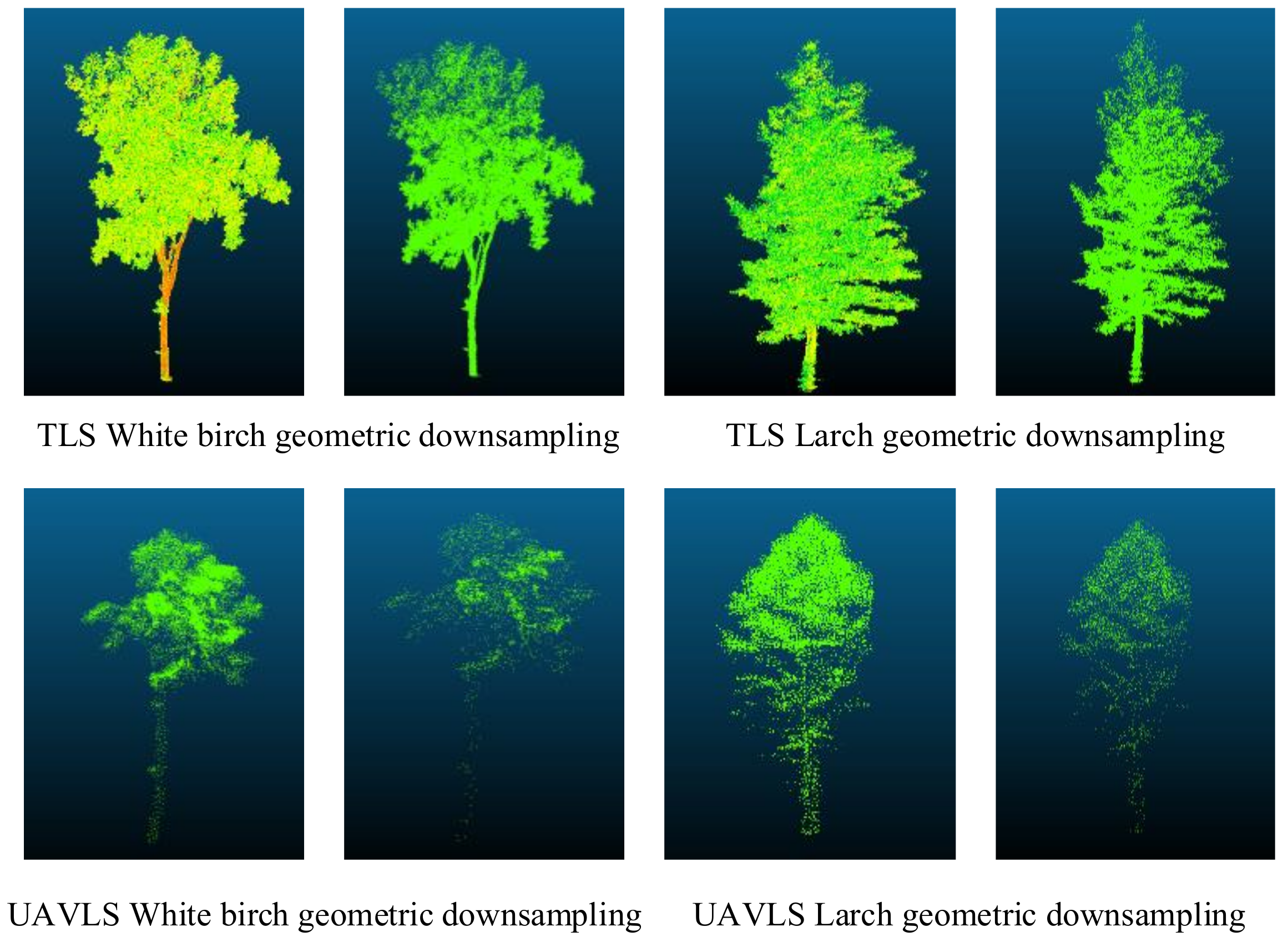

Whether the data were obtained by TLS or UAVLS, the contour structure was retained after processing by the geometric sampling method (Figure 8). The local characteristics of each tree were also preserved.

3.2. Improved Farthest Point Sampling

Farthest Point Sampling is a point cloud down-sampling method commonly found in the literature [18]. The main idea of the algorithm is to sample M points (M < N) from N points. We constructed two sets, A and B: A is the set of selected points, and B is the set of unselected points. At each iteration, we selected the point in set B with the largest distance to set A. The specific process is as follows:

- (1) Randomly select a point in set B and put it into set A.

- (2) Calculate the distance from the point in set A to each point in set B, select the point with the largest distance, and move it into set A.

- (3) Select a point from set B and calculate the distance between this point and each of the two points in set A. The smaller of the two is the distance from that point to set A.

- (4) Calculate the distance from each point in set B to set A in this way, and find the point with the largest distance, which becomes the third point.

- (5) Repeat steps 3 and 4 until set A contains the required number of points.

The Farthest Point Sampling method provides uniform sampling in the extraction of local feature points. However, it suffers from the problem of an uncertain initial point and an uncertain sampling result.
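The standard procedure above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the experiments; the function name `farthest_point_sampling` and the `seed` parameter are hypothetical and only make the dependence on the random initial point explicit.

```python
import math
import random


def farthest_point_sampling(points, m, seed=None):
    """Select m points from `points` (a list of (x, y, z) tuples).

    Set A is `chosen`; set B is every index not yet chosen.
    """
    if not 0 < m <= len(points):
        raise ValueError("m must be between 1 and the number of points")

    rng = random.Random(seed)
    first = rng.randrange(len(points))  # step 1: random initial point
    chosen = [first]

    # min_dist[i] is the distance from point i to its nearest point in set A.
    min_dist = [math.dist(p, points[first]) for p in points]

    while len(chosen) < m:
        # Steps 2 and 4: take the point that is farthest from set A.
        nxt = max(range(len(points)), key=lambda i: min_dist[i])
        chosen.append(nxt)
        # Step 3: refresh each point's distance to set A with the new member.
        for i, p in enumerate(points):
            d = math.dist(p, points[nxt])
            if d < min_dist[i]:
                min_dist[i] = d

    return [points[i] for i in chosen]
```

Calling the function with different `seed` values on the same tree can return different subsets, which is the uncertainty in the initial point and the sampling result noted above.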

Down-sampling of tree point cloud data needs to preserve the overall outline of each tree so that its structural and morphological characteristics are highlighted, which makes it easier for the neural network to extract and integrate high-dimensional features. To better preserve the global features of the sampled data, we improved the Farthest Point Sampling method as follows:

- (1) The single tree data processed by the geometric sampling method in the previous step constituted set C, and the total number of points was N. The point H closest to the center was extracted and stored in set D, and the number of points in set C became N-1.

- (2) C was expressed as $\{(x_k, y_k, z_k)\}$, where k ranged from 1 to N-1. The points corresponding to the maximum and minimum values in the x, y, and z directions were extracted and stored in set D. The number of points in set D became 7, and the number in set C became N-7.

- (3) The distances from each point in set C to each point in set D were calculated. For each point in set C, its distances to the points in set D were subtracted in pairs and the absolute differences were summed. The point in set C with the smallest sum was then stored in set D.

- (4) Step 3 was repeated, collecting one point in every m, until the number of points in set D reached the required number of sampling points; here P represents the number of points input to the network.
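Steps (1) to (3) of the improved method can be sketched as follows. This is a simplified reading of the description under stated assumptions, not the authors' code: the function name `improved_fps_sketch` is hypothetical, the "center" is taken to be the centroid, and the "one point in every m" rule of step (4) is omitted, so the loop simply repeats step (3) until enough points are collected.

```python
import math
from itertools import combinations


def improved_fps_sketch(points, n_samples):
    """Return set D (selected points) from `points`, a list of (x, y, z) tuples."""
    if not 0 < n_samples <= len(points):
        raise ValueError("n_samples must be between 1 and the number of points")

    remaining = list(points)  # set C
    n = len(remaining)

    # Step 1: move the point closest to the centroid (assumed center) into D.
    centroid = tuple(sum(p[axis] for p in remaining) / n for axis in range(3))
    idx = min(range(n), key=lambda i: math.dist(remaining[i], centroid))
    selected = [remaining.pop(idx)]  # set D

    # Step 2: move the minimum and maximum points along x, y, and z into D.
    # Each pick is made from what is left in C, so the six points are distinct.
    for axis in range(3):
        for pick in (min, max):
            if len(selected) >= n_samples or not remaining:
                break
            idx = pick(range(len(remaining)), key=lambda i: remaining[i][axis])
            selected.append(remaining.pop(idx))

    def spread(p):
        # Sum of absolute pairwise differences between p's distances to D.
        dists = [math.dist(p, q) for q in selected]
        return sum(abs(a - b) for a, b in combinations(dists, 2))

    # Step 3, repeated: move the point of C with the smallest sum into D.
    while len(selected) < n_samples and remaining:
        idx = min(range(len(remaining)), key=lambda i: spread(remaining[i]))
        selected.append(remaining.pop(idx))

    return selected
```

Unlike the standard method, nothing here is random: the first seven points are fixed by the geometry of the tree, so repeated runs on the same input give the same sample.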

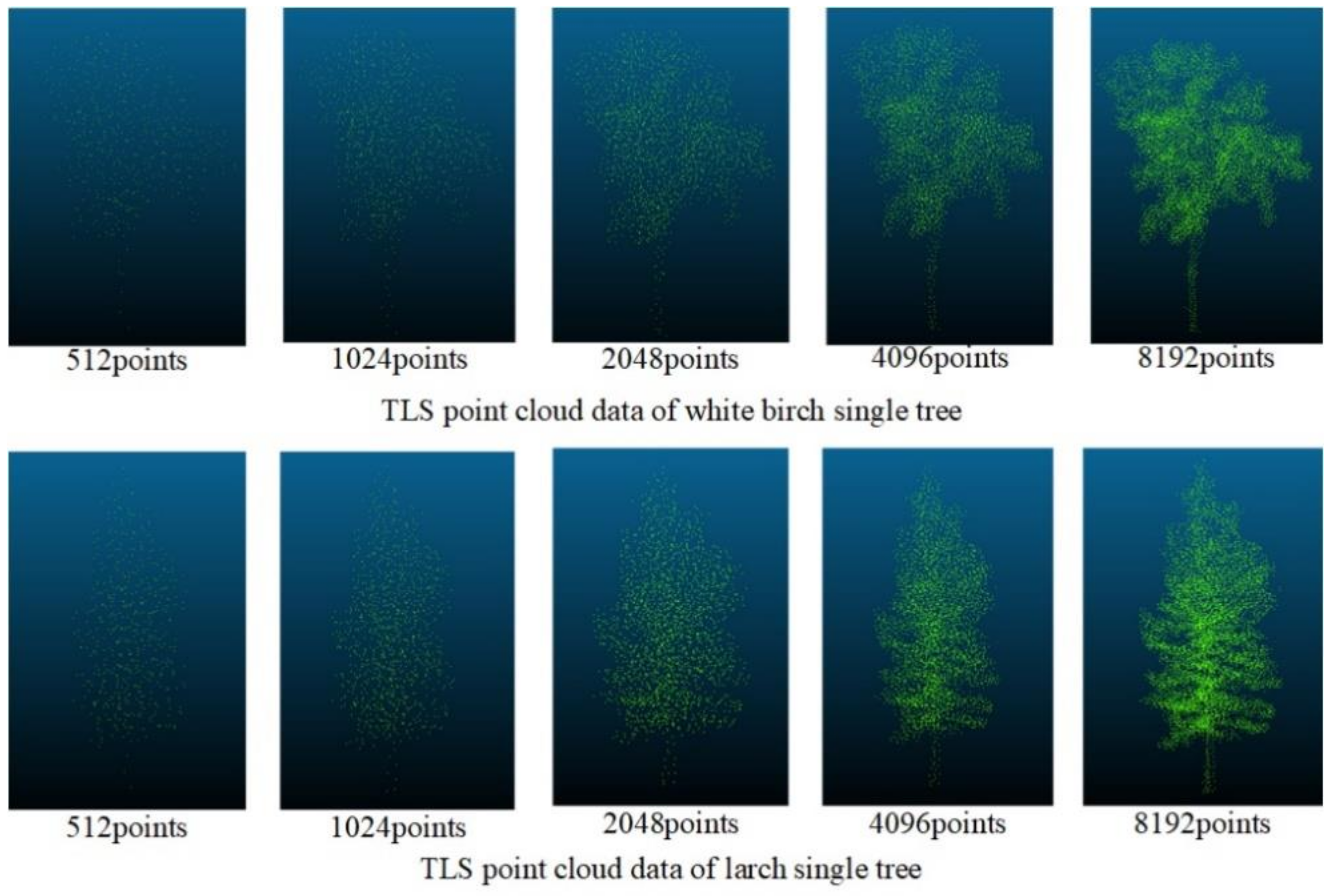

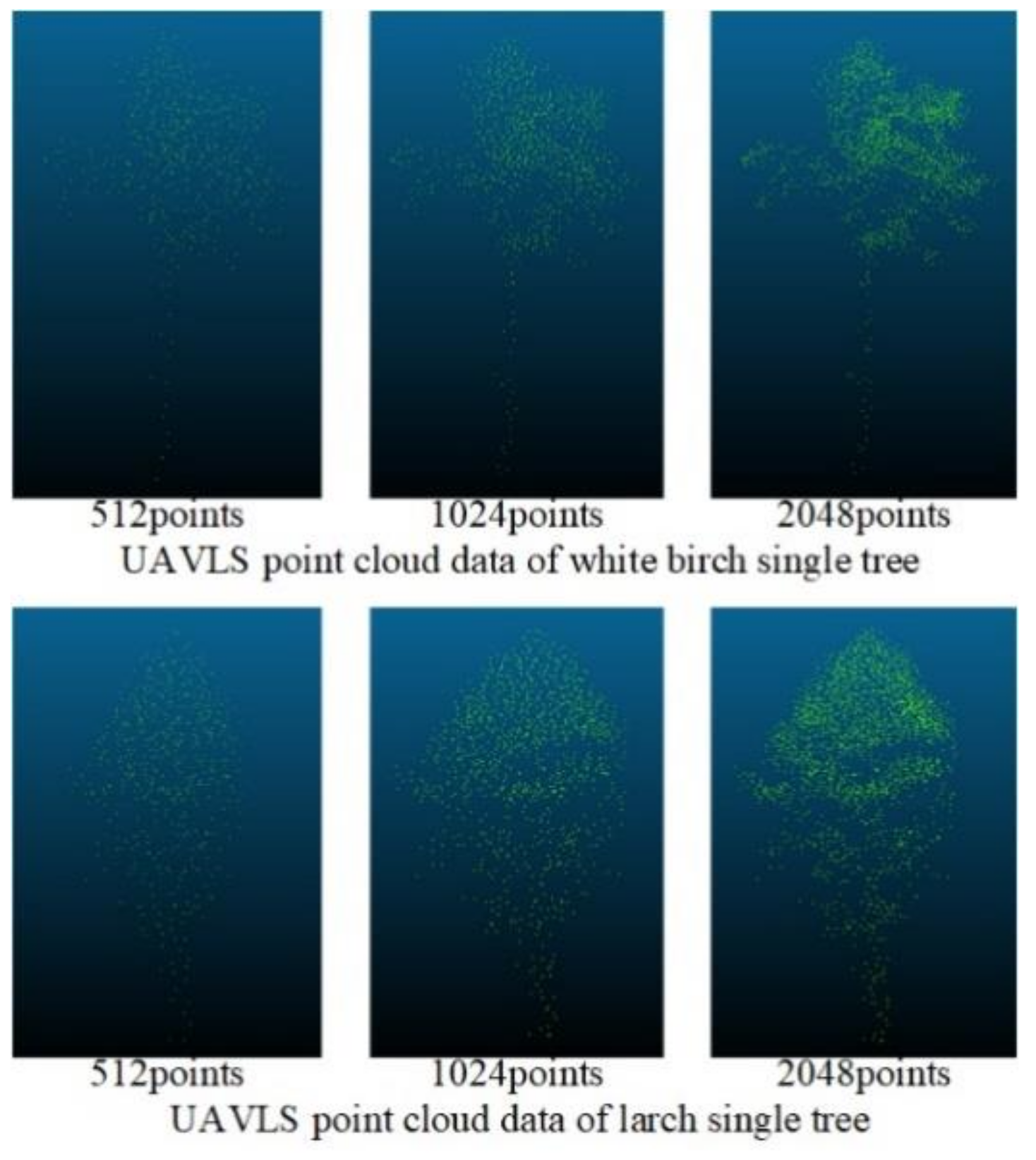

Figure 9 shows the TLS data processed by the improved Farthest Point Sampling method, where 512, 1024, 2048, 4096, and 8192 points were retained, respectively. Although the overall contour information of the tree gradually became blurred as the number of points decreased, obvious contours and local features were still retained with 512 points. Because the data obtained by UAVLS were relatively sparse, we used the improved Farthest Point Sampling method to sample the UAVLS data and retain 512, 1024, and 2048 points, respectively. The sampling effect is shown in Figure 10.

3.3. Network Model Construction

For deep learning model training based on the end-to-end tree species classification of point cloud data, most studies only considered the structural information of a point cloud. However, the attribute information of the point cloud data could also be used to distinguish the tree species, because the intensity information differed depending on the tree species. Therefore, we proposed the PCTSCN, which combines the intensity information of the point cloud as a new feature with its structural information. Each input point was changed from three-dimensional to four-dimensional.

The point cloud data of each tree were an unordered collection of vectors, and the order of the points did not affect the tree shape. The disorder of the point cloud data would make it impossible to train directly on the point cloud. We expanded the amount of data through various arrangements of the point cloud collections. To reduce the loss of geometric point information, a multi-layer perceptron (MLP) was used to extract high-dimensional point features. A symmetric function was used to aggregate the information of each point. The principle of the symmetric function is:

$$
f(x_1, x_2, \ldots, x_n) = \gamma\left( g\left( h(x_1), h(x_2), \ldots, h(x_n) \right) \right)
$$

where $x_1, \ldots, x_n$ are the input points, h stands for high-dimensional mapping, g stands for symmetric function, and γ stands for MLP integration information. The tree point cloud presents a geometric transformation invariance. After a tree point cloud object is rotated by an angle, it still represents the same object, but its coordinates change. The T-Net network was introduced to predict the affine transformation matrix, and this transformation was applied directly to the input point coordinates. The T-Net network structure consisted of point feature extraction, maximum pooling, and fully connected layers. This small neural network generated transformation parameters based on the input data, and the input data were multiplied by the transformation matrix to achieve rotation invariance.
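The role of the symmetric function can be illustrated with a toy example. The sketch below is not the trained network: `h`, `g`, and `gamma` are hand-written stand-ins for the shared MLP, the aggregation, and the final MLP, and the weights are arbitrary values chosen for illustration. It only shows that element-wise maximum pooling makes the output independent of the order of the points.

```python
def h(point):
    """Toy per-point feature map standing in for the shared MLP."""
    x, y, z, i = point
    return (x + y, y * z, z - x, i, x * x + y * y + z * z)


def g(features):
    """Symmetric aggregation: element-wise maximum over all points."""
    return tuple(max(column) for column in zip(*features))


def gamma(global_feature):
    """Toy stand-in for the final MLP: a fixed weighted sum."""
    weights = (0.5, -1.0, 2.0, 1.0, 0.25)  # arbitrary illustrative weights
    return sum(w * v for w, v in zip(weights, global_feature))


def f(points):
    """Composite function gamma(g(h(x_1), ..., h(x_n)))."""
    return gamma(g([h(p) for p in points]))


cloud = [(0.1, 0.2, 0.3, 0.4), (1.0, -1.0, 0.5, 0.2), (0.3, 0.3, 0.9, 0.7)]
same_cloud_reordered = list(reversed(cloud))
order_invariant = f(cloud) == f(same_cloud_reordered)
```

Because `g` looks only at the set of per-point features and not at their order, `order_invariant` is true for any reordering of the same points, which is why the unordered point cloud can be fed to the network directly.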

The specific implementation of PCTSCN is shown in Figure 11. After geometric sampling and improved farthest point sampling, the data obtained by TLS retained 512, 1024, 2048, 4096, and 8192 points, respectively. The data obtained by UAVLS retained 512, 1024, and 2048 points, respectively. The reason the two data sets retained different numbers of sampling points is explained in Section 3.2. Each individual tree point cloud retained the intensity information. Each point was four-dimensional (x, y, z, i), where i represented the reflection intensity. The point cloud of each tree constituted an $N \times 4$ feature matrix. For TLS data, N took the values 512, 1024, 2048, 4096, and 8192; for UAVLS data, N took the values 512, 1024, and 2048. Multiplying the feature matrix formed by each tree point cloud with the $4 \times 4$ matrix generated by the T-Net network produced an $N \times 4$ alignment matrix. After processing by MLP, each feature point was mapped to a 64-dimensional space. At this point, the PCTSCN used T-Net again to generate a $64 \times 64$ matrix. The next step was to multiply the $64 \times 64$ matrix with the mapping matrix to obtain an $N \times 64$ alignment matrix. The feature points were then mapped to N dimensions through another MLP to obtain a feature matrix. The global feature was obtained through the maximum pooling operation, and a global feature matrix was generated. Finally, after another MLP, the softmax function was used to obtain the classification categories of larch and white birch to complete the tree species classification.
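The flow of matrix shapes through the network can be traced with a small pure-Python sketch. It is an illustration under several assumptions, not the trained PCTSCN: weights are random and untrained, each MLP is reduced to a single linear layer with ReLU, both T-Net outputs are replaced by identity matrices, and the names `forward` and `feature_dim` are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def identity(n):
    """Placeholder for a T-Net output (a learned n x n transform)."""
    return [[1.0 if r == c else 0.0 for c in range(n)] for r in range(n)]


def random_layer(rng, n_in, n_out):
    """Untrained weights, for shape bookkeeping only."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_out)] for _ in range(n_in)]


def shared_mlp(rows, weights):
    """Apply the same linear layer and ReLU to every point (row)."""
    return [[max(0.0, v) for v in row] for row in matmul(rows, weights)]


def softmax(logits):
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(points, feature_dim=128, n_classes=2, seed=0):
    """points: N rows of (x, y, z, i). Returns class probabilities."""
    rng = random.Random(seed)
    x = matmul(points, identity(4))                        # N x 4 alignment
    x = shared_mlp(x, random_layer(rng, 4, 64))            # N x 64
    x = matmul(x, identity(64))                            # N x 64 alignment
    x = shared_mlp(x, random_layer(rng, 64, feature_dim))  # N x feature_dim
    global_feature = [max(col) for col in zip(*x)]         # max pooling over points
    logits = matmul([global_feature], random_layer(rng, feature_dim, n_classes))[0]
    return softmax(logits)                                 # e.g. larch vs. white birch
```

With `n_classes=2` the two outputs play the role of the larch and white birch scores; because the only operation across points is the maximum pooling, the result does not depend on the order of the input rows.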

5. Discussion

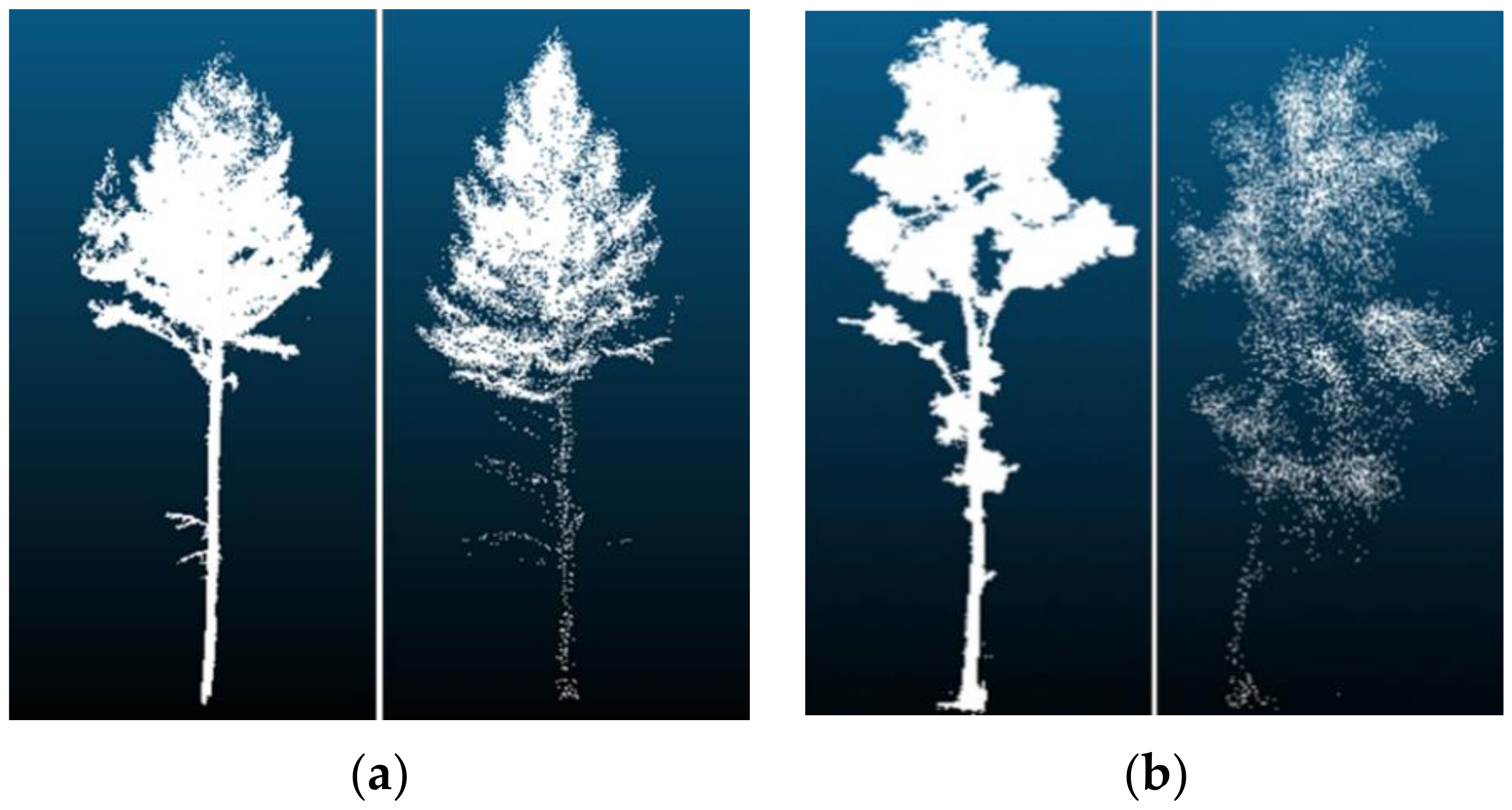

The point density had a strong impact on the accuracy of the tree species classification model. For the data obtained by TLS, the models trained with 512 and 1024 points achieved higher classification accuracy than the models trained with higher point densities. When the point density was too large, the extracted information was redundant, which lowered classification accuracy. A large point density also increased the number of parameters, which slowed the convergence of model training and, in turn, affected the test accuracy of the model. The models trained with 512 and 1024 points achieved the same classification accuracy, and the two models misclassified the same samples. The samples misclassified by the other models included these samples. Apart from limitations of the model itself, classification errors occurred when trees of different species had similar structural characteristics (e.g., Figure 12a, showing larches recognized as white birches, and Figure 12b, with white birches mistaken for larches). For the data obtained by UAVLS, the classification accuracy continued to improve as the point density increased. As shown in Figure 13, the point cloud data at the bottom of the tree could not be obtained by UAVLS because of occlusion between trees, so the overall contour information of the single tree was incomplete. Similar appearance and structure between different tree species could also cause classification errors. Because of the large differences in point density, the time required to train each model varied greatly. Training time increased with point density because of the marked increase in the amount of parameter computation in the neural network.

We mapped the individual tree point cloud training samples acquired by the two LiDAR instruments to various highest feature dimensions in the network. For the TLS data, the highest accuracy was obtained when the highest feature dimension was 512. The classification accuracy of the model decreased with increasing dimensionality. Our analysis suggests that this was because the structural information of single tree data obtained by TLS was complete, so higher dimensionality introduced more redundant information, which resulted in overfitting of the trained model. In addition, the number of model parameters increased with the highest dimensionality. With the same number of training epochs, it was difficult for the high-dimensional model to converge. For the data obtained by UAVLS, the accuracy of the tree species classification model fluctuated as the highest feature dimension in the neural network increased. The reason was that the structural information of single tree data obtained by UAVLS was insufficient. When the highest dimension was low, the characteristics of different tree species could not be fully extracted. When the highest dimension was 2048, the model classification accuracy decreased. This was because the dimensionality was high and the extracted feature information was redundant. Another reason was that the increase in the number of parameters made it difficult for the model to converge. Therefore, appropriately increasing the feature extraction dimension can improve the model classification accuracy.

In the experimental analysis, we found that seasonal changes had a great impact on the overall outline of broad-leaved forest trees. After the deciduous period of the white birch tree, the branches and leaves of the tree fell off. The appearance and structure of trees in this period were significantly different from those in the growth period [39,40]. Larch is a conifer, and its branches and leaves are hardly affected by seasonality [41,42]. At present, some scholars have used branch and leaf separation to extract branches and stems, following two types of approaches. The first type extracts branches and trunks based on the different reflection intensity information and point density of branches and leaves [43,44]. The information extracted by this method is incomplete. The second type labels the branch and leaf points and uses deep learning or machine learning to extract the branches. This type of method requires a lot of work to label samples in the early stage [28,45,46]. We recommend taking some single trees from broad-leaved forests after branch and leaf separation and merging them with the complete single trees as training samples. The completeness of the single tree segmentation directly determines the outline of the tree. In forest areas with complex terrain and environment, the UAVLS data were restricted, resulting in sparse point density. It was difficult to ensure that each individual tree was absolutely complete after segmentation. The sparsity of data affects the classification accuracy of the tree species [26]. In addition, the parameter settings in the model, such as the learning rate and batch size, affect the accuracy of the model [47]. When the learning rate is large, the loss does not decrease or oscillates widely. When the learning rate is small, the loss decreases slowly, which makes the model unable to converge [48,49]. The batch size should be set according to the total amount of data. When the batch size is small, the model has difficulty converging. If the total amount of data is small, all samples can be used as a single batch [50,51].
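The effect of the learning rate described above can be reproduced on a toy problem. The sketch below runs plain gradient descent on the one-parameter loss L(w) = w², which is an illustrative stand-in and unrelated to the PCTSCN loss; the function name and the three learning rates are chosen here only to show the three regimes.

```python
def gradient_descent(learning_rate, steps=100, start=1.0):
    """Minimize the toy loss L(w) = w**2 and return the loss after each step."""
    w = start
    losses = []
    for _ in range(steps):
        grad = 2.0 * w              # dL/dw
        w -= learning_rate * grad   # each step scales w by (1 - 2 * learning_rate)
        losses.append(w * w)
    return losses


large = gradient_descent(1.1)       # scale factor -1.2: w flips sign and grows
small = gradient_descent(0.001)     # scale factor 0.998: loss barely moves
moderate = gradient_descent(0.3)    # scale factor 0.4: loss shrinks quickly
```

With the large rate the parameter overshoots the minimum on every step and the loss increases; with the small rate the loss is still close to its starting value after 100 steps; the moderate rate converges within a few dozen steps.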

6. Conclusions

This paper proposed PCTSCN, a deep learning method that addresses the difficulty of using 3D tree point cloud data directly as training samples for a deep learning model. PCTSCN did not need to convert the point cloud data into other types of data and thus avoided the feature loss that occurs in the data conversion process. Firstly, PCTSCN performed geometric sampling on the data to extract local feature points and then used the improved farthest point sampling method for sub-sampling to retain the global features. Secondly, PCTSCN used neural networks to extract spatial feature information and reflection intensity information from the training samples processed in the previous step. Finally, PCTSCN mapped the extracted spatial features and attribute features to a high-dimensional space and then performed feature extraction to train the tree species classification model and complete the tree species identification. To verify the applicability of the method proposed in this paper, PCTSCN was used to classify the tree species of data obtained by both TLS and UAVLS. In the same experimental environment, the accuracy of the tree species classification model trained with the data obtained by TLS was higher than that of the model trained with the data obtained by UAVLS. The tree species classification accuracy of PCTSCN reached up to 96%, and the kappa coefficient was 0.923. After adding the intensity information to the features extracted by the neural network, PCTSCN improved the classification accuracy of tree species by 2–6% compared with other 3D deep learning models. The PCTSCN proposed in this paper provides a new method for tree species classification of forest LiDAR data. In the future, we will extend this research to the classification of multiple tree species from point cloud data.