1. Introduction

Airborne single-channel light detection and ranging (LiDAR) has been widely used in many applications, such as topographic mapping, urban planning, forest inventory, and environmental monitoring, owing to its ability to quickly acquire large-scale, high-precision information about the Earth’s surface [1,2]. Based on the highly accurate three-dimensional (3-D) height information and single-wavelength infrared intensity information, point cloud classification has become an active research direction in photogrammetry, remote sensing, and computer science [3,4,5]. However, LiDAR data alone yield unsatisfactory fine-grained point cloud classification results because they lack rich spectral information. Therefore, LiDAR data are commonly combined with optical images to better understand the Earth surface mechanics and to monitor ground objects and their changes [6,7,8]. Several competitions and special issues have been organized to promote the fusion of LiDAR with other data sources (e.g., hyperspectral images and ultra-high-resolution images) for urban observation and surveillance, forest inventory, etc. [9,10]. Although integrating LiDAR data with image data improves point cloud classification accuracy, some issues, such as geometric registration and object occlusions, have not been satisfactorily addressed.

Several multispectral LiDAR prototypes, which can simultaneously collect point clouds with multi-wavelength intensities, have been launched in recent years. For example, Wuhan University in China developed a multispectral LiDAR system with four wavelengths of 556 nm, 670 nm, 700 nm, and 780 nm in 2015 [11]. The intensities of multiple wavelengths are independent of illumination conditions (e.g., shading and secondary scatter effects), which effectively reduces shadow occlusions. In December 2014, the first commercial multispectral LiDAR system, which operates at three wavelengths of 532 nm, 1064 nm, and 1550 nm, was released by Teledyne Optech (Toronto, ON, Canada). Multispectral LiDAR data have been used in many fields, such as topographic mapping, land-cover classification, environmental modeling, natural resource management, and disaster response [12,13,14,15].

A multispectral LiDAR system acquires multi-wavelength spectral data simultaneously with 3-D spatial data, providing multiple attribute features for the targets of interest. Studies have demonstrated that multispectral LiDAR point clouds achieve better classification at finer levels of detail [10,16]. Recently, multispectral point cloud data have been increasingly used in classification studies. These methods can be categorized into two groups according to the type of data processed: (1) two-dimensional (2-D) multispectral feature image-based methods [17,18,19,20,21,22,23,24,25] and (2) 3-D multispectral point cloud-based methods [22,26,27,28,29,30]. The former first rasterize multispectral LiDAR point clouds into multispectral feature images with a given raster size according to the height and intensity information of the multi-wavelength point clouds, and then apply a variety of established image processing methods for point cloud classification and object recognition. Zou et al. [17] and Matikainen et al. [18] used an object-based classification method to investigate the feasibility of Optech Titan multispectral LiDAR data for land-cover classification. Bakuła et al. [19] carried out a maximum likelihood land-cover classification by fusing textural, elevation, and intensity information of multispectral LiDAR data. Fernandez-Diaz et al. [20] demonstrated that multispectral LiDAR data achieved promising performance in land-cover classification, canopy characterization, and bathymetric mapping. To improve multispectral LiDAR point cloud classification accuracies, Huo et al. [21] explored a morphological profiles (MP) feature and proposed a novel hierarchical morphological feature (HMP) function by taking full advantage of the normalized digital surface model (nDSM) data of the multispectral LiDAR data. More recently, Matikainen et al. [22] investigated, in combination with single photon LiDAR (SPL) data, the capabilities of multi-channel intensity data for land-cover classification of a suburban area. However, most state-of-the-art studies used only low-level hand-crafted features, such as intensity and height features directly provided by the multispectral LiDAR data, geometric features (e.g., planarity and linearity), and simple feature indices (e.g., vegetation index and water index), in point cloud classification tasks. So far, few classification studies have focused on high-level semantic features learned directly from the multispectral LiDAR data. Deep learning, which learns high-level and representative features from a large number of representative training samples, has attracted increasing attention in a variety of applications, such as medical diagnosis [23] and computer vision [24]. Pan et al. [25] compared a representative deep learning method, the deep Boltzmann machine (DBM), with two widely used machine learning methods, principal component analysis (PCA) and random forest (RF), in multispectral LiDAR classification, and found that the features learned by the DBM improved the overall accuracy (OA) by 8.5% and 19.2%, respectively. Yu et al. [26] performed multispectral LiDAR land-cover classification with a hybrid capsule network, which fused local and global features to obtain better classification accuracies. Although the aforementioned deep learning-based methods achieved promising point cloud classification performance, they were performed on 2-D multispectral feature images converted from 3-D multispectral LiDAR data [25,26]. Rasterizing 3-D multispectral LiDAR point clouds into 2-D multispectral feature images greatly reduces the computational cost of classifying point clouds over large areas; however, such conversion introduces conversion errors and loses spatial information for some objects (e.g., powerlines and fences), leading to incomplete and possibly unreliable point cloud classification results.
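The rasterization step used by the 2-D feature image-based methods can be illustrated with a minimal sketch. It assumes each point is a tuple `(x, y, z, i1, i2, i3)` and that each raster cell keeps the maximum height and the mean intensity per wavelength; the function name `rasterize` and this particular aggregation rule are illustrative assumptions, not the procedure of any cited study.

```python
from collections import defaultdict


def rasterize(points, cell_size):
    """Grid points (x, y, z, i1, i2, ...) into square cells.

    Returns a dict mapping (row, col) to (max_height, mean_i1, mean_i2, ...).
    The aggregation rule (max height, mean intensity) is an assumption.
    """
    x0 = min(p[0] for p in points)
    y0 = min(p[1] for p in points)

    # Assign every point to the raster cell that contains it.
    cells = defaultdict(list)
    for p in points:
        col = int((p[0] - x0) // cell_size)
        row = int((p[1] - y0) // cell_size)
        cells[(row, col)].append(p)

    # Collapse all points of a cell into a single pixel value.
    image = {}
    for key, members in cells.items():
        max_z = max(m[2] for m in members)
        n_bands = len(members[0]) - 3
        means = tuple(
            sum(m[3 + b] for m in members) / len(members) for b in range(n_bands)
        )
        image[key] = (max_z,) + means
    return image


# Two points at different heights fall into the same cell and become one pixel.
cloud = [
    (0.0, 0.0, 1.0, 10.0, 20.0, 30.0),
    (0.4, 0.4, 5.0, 30.0, 40.0, 50.0),
    (1.5, 0.2, 2.0, 1.0, 2.0, 3.0),
]
feature_image = rasterize(cloud, cell_size=1.0)
```

In this toy example the first two points share one pixel, so their separate heights and intensities can no longer be recovered from the image, which is the kind of spatial information loss described above.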

The 3-D multispectral point cloud-based methods directly perform point-wise classification without data conversion. Wichmann et al. [27] first performed data fusion through a nearest neighbor approach, and then analyzed the spectral patterns of several land covers to perform point cloud classification. Morsy et al. [28] separated land-water from vegetation-built-up by using three normalized difference feature indices derived from the three Titan multispectral LiDAR wavelengths. Sun et al. [29] performed 3-D point cloud classification on a small test scene by integrating PCA-derived spatial features with the laser return intensity at different wavelengths. Ekhtari et al. [30,31] proved that multispectral LiDAR data have good feature recognition abilities by directly classifying the data into ten ground object classes (i.e., building 1, building 2, asphalt 1, asphalt 2, asphalt 3, soil 1, soil 2, tree, grass, and concrete) via a support vector machine (SVM) method. Ekhtari et al. [31] performed an eleven-class classification task and achieved an OA of 79.7%. In addition, some multispectral point cloud classification studies have demonstrated that 3-D multispectral LiDAR point cloud-based methods were superior to 2-D multispectral feature image-based methods, with an OA improvement of 10% in [32] and 3.8% in [33]. Recently, Wang et al. [34] extracted geometric and spectral features from multispectral LiDAR point clouds by a tensor representation. Compared with a vector-based feature representation, a tensor preserves more information for point cloud classification due to its high-order data structure. Although most of the aforementioned methods achieved better point cloud classification performance in most cases, even correctly identifying some very long and narrow objects (e.g., powerlines and fences) [2] or some ground objects in complex environments (e.g., roads occluded by tree canopies) [1], they classified objects from multispectral LiDAR data according to the spectral, geometrical, and height-derived features of the data. Feature extraction and selection play an important part in point cloud classification, but there is no simple way to determine the optimal number of features and the most appropriate features in advance to ensure robust point cloud classification accuracy. Therefore, to further improve point cloud classification accuracy, deep learning methods that operate directly on 3-D multispectral LiDAR point clouds are explored in this study.
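As an illustration of the hand-crafted spectral features discussed above, the following sketch computes normalized difference indices from the three Titan wavelengths. The general form (a - b) / (a + b) is the standard normalized difference; the specific channel pairings and the names `normalized_difference` and `ndfi_triplet` are assumptions for illustration and may differ from the indices defined in [28].

```python
def normalized_difference(a, b, eps=1e-12):
    """Standard normalized difference (a - b) / (a + b), guarded against a zero sum."""
    total = a + b
    if abs(total) < eps:
        return 0.0
    return (a - b) / total


def ndfi_triplet(i_532, i_1064, i_1550):
    """Three pairwise indices for one point (the pairing choice is an assumption)."""
    return (
        normalized_difference(i_1064, i_532),
        normalized_difference(i_1550, i_532),
        normalized_difference(i_1550, i_1064),
    )


# Hypothetical intensities of one point at 532 nm, 1064 nm, and 1550 nm.
indices = ndfi_triplet(10.0, 30.0, 50.0)
```

Each index lies in [-1, 1] for non-negative intensities, so a simple threshold per index is enough to build a rule-based separation of broad classes; choosing those thresholds and pairings by hand is the step that learned features aim to avoid.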

To effectively process unstructured, irregularly distributed point clouds, a set of networks/models have been proposed, such as PointNet [35], PointNet++ [36], DGCNN [37], GACNet [38], and RSCNN [39]. Specifically, PointNet [35] used a simple symmetric function and a multi-layer perceptron (MLP) to handle unordered points and achieve permutation invariance for a point cloud. However, PointNet neglected point-to-point spatial neighboring relations, which contain fine-grained structural information for object segmentation. DGCNN [37], via EdgeConv, constructed local neighborhood graphs to effectively capture the local domain information and global shape features of a point cloud. To avoid feature pollution between objects, GACNet [38] used a novel graph attention convolution (GAC) with learnable kernel shapes to dynamically adapt to the structures of the objects concerned. To obtain an inductive local representation, RSCNN [39] encoded the geometric relationship between points by applying a weighted sum of neighboring point features, which yielded strong shape awareness and robustness. However, GACNet and RSCNN have a high cost of data structuring, which limits their ability to generalize to complex scenarios. PointNet++ [36], a hierarchical extension of PointNet, is capable of both extracting local features and dealing with unevenly sampled points through multi-scale grouping (MSG), thereby improving the robustness of the model. Chen et al. [40], based on the PointNet++ network, performed LiDAR point cloud classification by considering both the point-level and global features of the centroid point, and achieved good classification performance for airborne LiDAR point clouds with variable densities in different areas, especially for the powerline category.
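The role of the symmetric function in PointNet can be shown with a minimal sketch: a shared per-point layer followed by channel-wise max pooling produces the same global feature for any ordering of the input points. The single-layer MLP and the hand-picked weights below are assumptions chosen only to keep the example small; they are not the PointNet architecture itself.

```python
def shared_mlp(point, weights, bias):
    """One fully connected layer with ReLU, applied identically to every point."""
    return [
        max(0.0, sum(w * x for w, x in zip(row, point)) + b)
        for row, b in zip(weights, bias)
    ]


def global_feature(points, weights, bias):
    """Channel-wise max over all per-point features (the symmetric function)."""
    per_point = [shared_mlp(p, weights, bias) for p in points]
    return [max(channel) for channel in zip(*per_point)]


# Hypothetical weights mapping (x, y, z) to two feature channels.
W = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]
B = [0.0, -1.0]
cloud = [(0.0, 1.0, 2.0), (3.0, 0.0, 1.0), (1.0, 1.0, 1.0)]

feat = global_feature(cloud, W, B)
feat_reordered = global_feature(cloud[::-1], W, B)
```

Because the maximum does not depend on the order of its arguments, `feat` and `feat_reordered` are identical. The same pooling also discards which neighbors produced each maximum, which is why neighborhood-aware designs such as PointNet++ were introduced.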

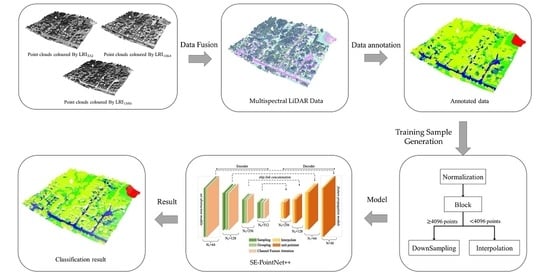

Due to the simplicity and robustness of PointNet++, in this paper, we select it as our backbone for point-wise multispectral LiDAR point cloud classification. The features learned by PointNet++ contain some ineffective channels, which consume substantial computational resources and reduce classification accuracy. Therefore, to emphasize important channels and suppress the channels unconducive to prediction, a Squeeze-and-Excitation block (SE-block) [41] is integrated into PointNet++, and the resulting network is termed SE-PointNet++. The proposed SE-PointNet++ architecture is applied to the Titan multispectral LiDAR data collected in 2014. We select 13 representative regions and label them manually with six categories, taking into account the distribution of ground objects in the study areas and the geometrical and spectral properties of the Titan multispectral LiDAR data. The main contributions of the study include the following:

- (1) A novel end-to-end SE-PointNet++ is proposed for the point-wise multispectral LiDAR point cloud classification task. Specifically, to improve point cloud classification performance, a Squeeze-and-Excitation block (SE-block), which emphasizes important channels and suppresses the channels unconducive to prediction, is embedded into the PointNet++ network.

- (2) Through comprehensive comparisons, we investigate the feasibility of multispectral LiDAR data and the superiority of the proposed architecture for point cloud classification tasks, as well as the influence of the sampling strategy on point cloud classification accuracy.
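The channel reweighting performed by an SE-block can be sketched as follows for a set of point features of size N x C. The sketch follows the generic squeeze (global average pooling), excitation (bottleneck layer with ReLU, then a layer with sigmoid), and scale steps; the function name `se_block`, the weights, and where the block sits inside PointNet++ are assumptions and are not taken from the architecture proposed here.

```python
import math


def se_block(features, w1, b1, w2, b2):
    """Apply squeeze-and-excitation to an N x C list of point features.

    w1 has shape (C // r) x C and w2 has shape C x (C // r), where r is the
    reduction ratio. Returns the rescaled features and the channel gates.
    """
    n = len(features)
    c = len(features[0])

    # Squeeze: average every channel over all points.
    squeezed = [sum(row[j] for row in features) / n for j in range(c)]

    # Excitation: bottleneck layer + ReLU, then expansion layer + sigmoid.
    hidden = [
        max(0.0, sum(w * s for w, s in zip(w_row, squeezed)) + b)
        for w_row, b in zip(w1, b1)
    ]
    gates = [
        1.0 / (1.0 + math.exp(-(sum(w * h for w, h in zip(w_row, hidden)) + b)))
        for w_row, b in zip(w2, b2)
    ]

    # Scale: multiply every channel by its gate in (0, 1).
    scaled = [[v * g for v, g in zip(row, gates)] for row in features]
    return scaled, gates


# Hypothetical two-channel features for two points, reduction ratio r = 2.
feats = [[1.0, 2.0], [3.0, 4.0]]
scaled, gates = se_block(
    feats, w1=[[1.0, 0.0]], b1=[0.0], w2=[[0.0], [10.0]], b2=[0.0, 0.0]
)
```

With these toy weights the first channel is halved while the second passes almost unchanged, which mirrors the intended effect of suppressing less useful channels and keeping informative ones.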

5. Conclusions

Multispectral LiDAR point cloud data contain both geometrical and multi-wavelength information, which contributes to identifying different land-cover categories. In this study, we proposed an improved PointNet++ architecture, named SE-PointNet++, by integrating an SE attention mechanism into PointNet++ for the multispectral LiDAR point cloud classification task. First, data preprocessing was performed for data merging and annotation. A set of samples was then obtained by the FPS sampling method. By embedding the SE-block into PointNet++, the SE-PointNet++ is capable of both extracting local geometrical relationships among points from unevenly sampled data and strengthening important feature channels, which improves multispectral LiDAR point cloud classification accuracy.
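A minimal sketch of the sampling step is given below, assuming FPS denotes farthest point sampling as used in PointNet++. The function name, the choice of starting index, and the use of squared Euclidean distance over all coordinates are assumptions for illustration.

```python
def farthest_point_sampling(points, n_samples, start=0):
    """Return indices of n_samples points chosen by greedy farthest point sampling."""
    n = len(points)
    if not 0 < n_samples <= n:
        raise ValueError("n_samples must be between 1 and len(points)")

    selected = [start]
    # Squared distance from each point to its nearest already-selected point.
    min_d2 = [float("inf")] * n

    for _ in range(n_samples - 1):
        last = points[selected[-1]]
        # Update nearest-selected distances using the most recently added point.
        for i, p in enumerate(points):
            d2 = sum((a - b) ** 2 for a, b in zip(p, last))
            if d2 < min_d2[i]:
                min_d2[i] = d2
        # Pick the point that is farthest from the current selection.
        selected.append(max(range(n), key=min_d2.__getitem__))
    return selected


cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (10.0, 0.0, 0.0),
         (0.5, 0.0, 0.0), (5.0, 0.0, 0.0)]
sample_idx = farthest_point_sampling(cloud, n_samples=3)
```

For the five collinear points above, the selection spreads across the full extent of the data instead of clustering near the dense end, which is the property that makes this strategy suitable for unevenly sampled point clouds.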

We tested the SE-PointNet++ on the Titan airborne multispectral LiDAR data. The dataset was classified into six land-cover categories: road, building, grass, tree, soil, and powerline. Quantitative evaluations showed that the SE-PointNet++ achieved an OA, mIoU, F1-score, and Kappa coefficient of 91.16%, 60.15%, 73.14%, and 0.86, respectively. In addition, comparative studies with five established methods confirmed that the SE-PointNet++ is feasible and effective for 3-D multispectral LiDAR point cloud classification tasks.
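For reference, the four reported measures can all be derived from a confusion matrix, as in the following sketch. It assumes rows are reference classes and columns are predicted classes, and that mIoU and the F1-score are unweighted means over classes; whether the study averages in exactly this way is an assumption. The 2 x 2 matrix is made-up toy data, not a result from the paper.

```python
def classification_metrics(cm):
    """Compute OA, mIoU, mean F1, and Cohen's kappa from a square confusion matrix."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    tp = [cm[i][i] for i in range(k)]
    ref = [sum(cm[i]) for i in range(k)]                        # row sums
    pred = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # column sums

    oa = sum(tp) / total

    # Per-class IoU = TP / (TP + FP + FN); classes with no samples count as 0.
    ious = [
        tp[i] / (ref[i] + pred[i] - tp[i]) if (ref[i] + pred[i] - tp[i]) else 0.0
        for i in range(k)
    ]
    # Per-class F1 = 2 TP / (2 TP + FP + FN).
    f1s = [
        2 * tp[i] / (ref[i] + pred[i]) if (ref[i] + pred[i]) else 0.0
        for i in range(k)
    ]

    # Kappa compares OA with the agreement expected by chance.
    pe = sum(ref[i] * pred[i] for i in range(k)) / (total * total)
    kappa = (oa - pe) / (1.0 - pe) if pe != 1.0 else 0.0

    return {"OA": oa, "mIoU": sum(ious) / k, "F1": sum(f1s) / k, "Kappa": kappa}


# Toy two-class confusion matrix (hypothetical counts).
metrics = classification_metrics([[8, 2], [1, 9]])
```

OA is dominated by the most frequent classes, whereas mIoU and the mean F1-score weight every class equally, so a rare class with few correct points can lower them considerably even when OA is high.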