Winter Wheat Nitrogen Status Estimation Using UAV-Based RGB Imagery and Gaussian Processes Regression

Abstract

1. Introduction

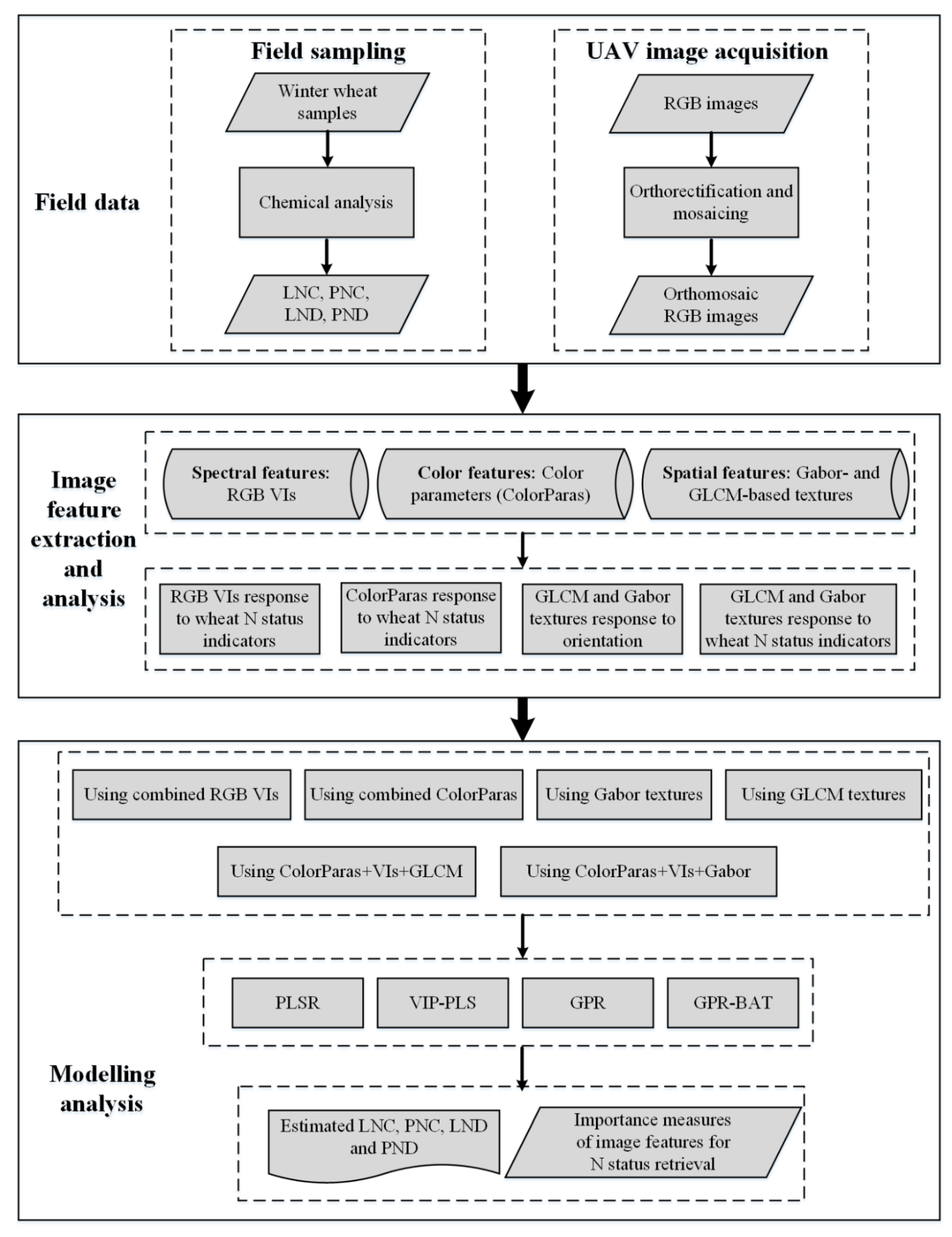

2. Materials and Methods

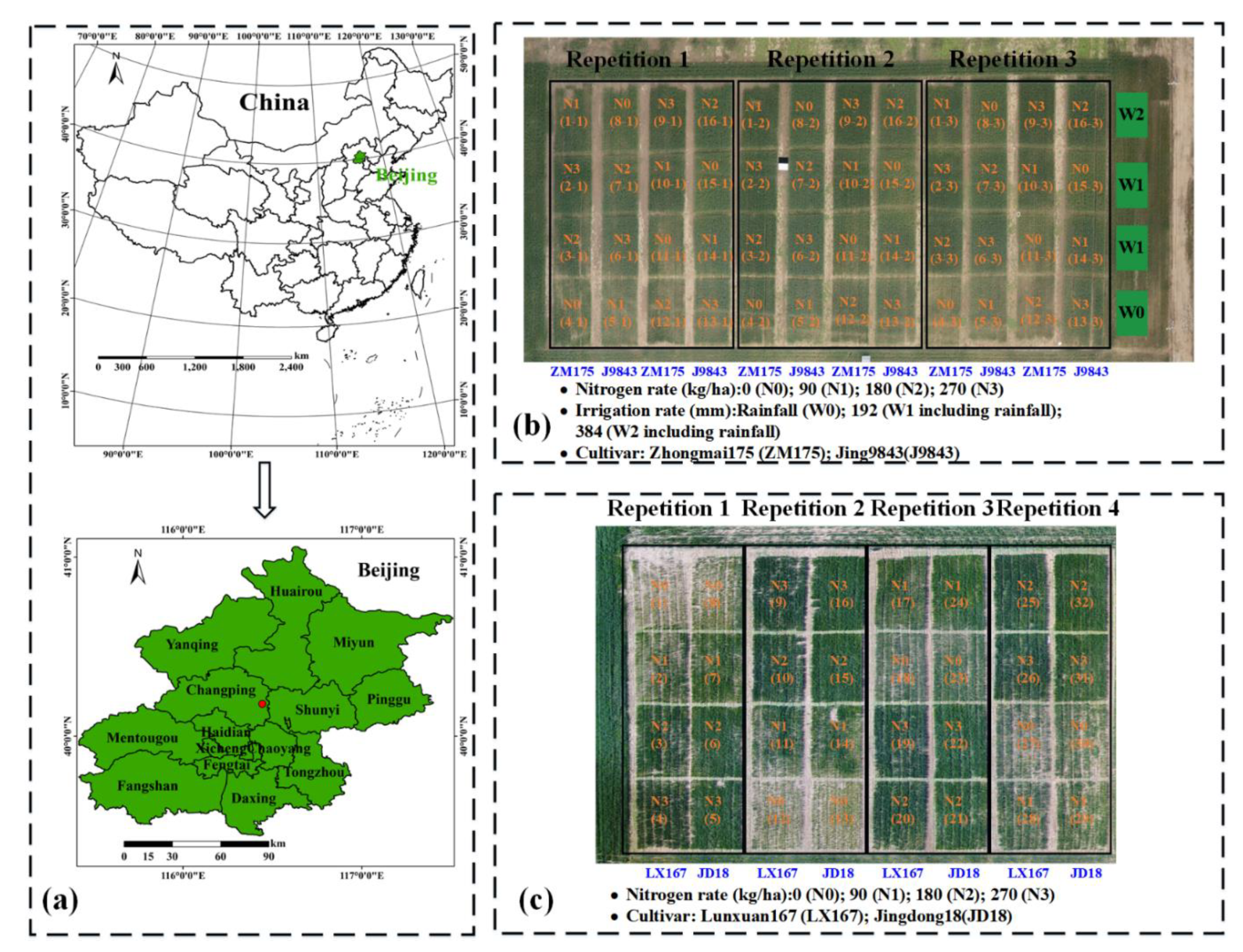

2.1. Experimental Design

2.2. Data Collection

2.2.1. UAV RGB Imagery Acquisition and Pre-Processing

2.2.2. Field Data Acquisition

2.3. RGB Image Feature Extraction and Analysis

2.3.1. Spectral Features: RGB-Based VIs

2.3.2. Color Features: Color Parameters from Different Color Space Models

2.3.3. Spatial Features: Gabor- and GLCM-Based Textures

2.4. Identification of Influential Image Features and Modeling for Winter Wheat N Status Estimation

2.4.1. PLSR Modeling and VIP-PLS

2.4.2. GPR Modeling and GPR-BAT

2.5. Modeling Strategy and Statistical Analyses

3. Results

3.1. Descriptive Statistics

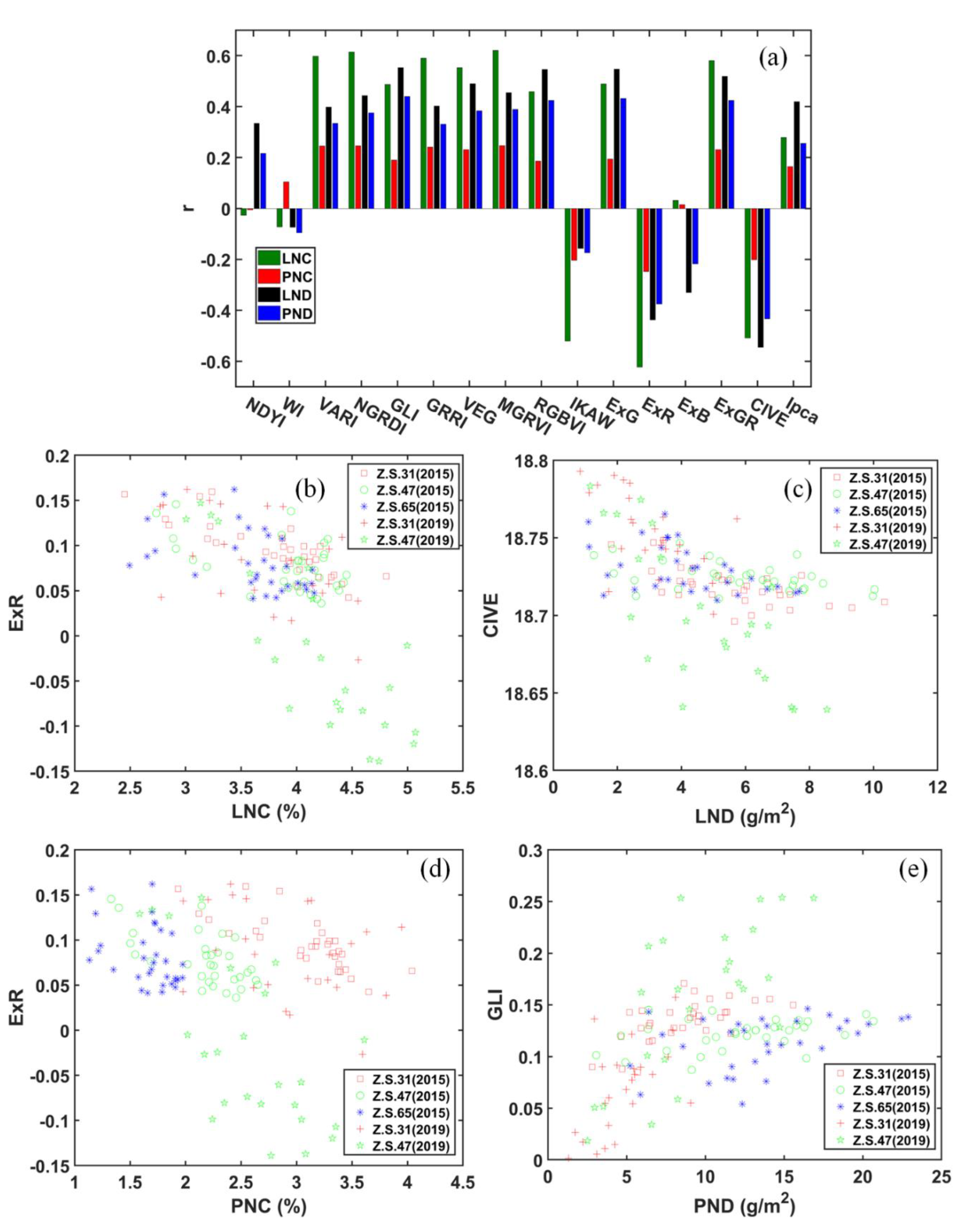

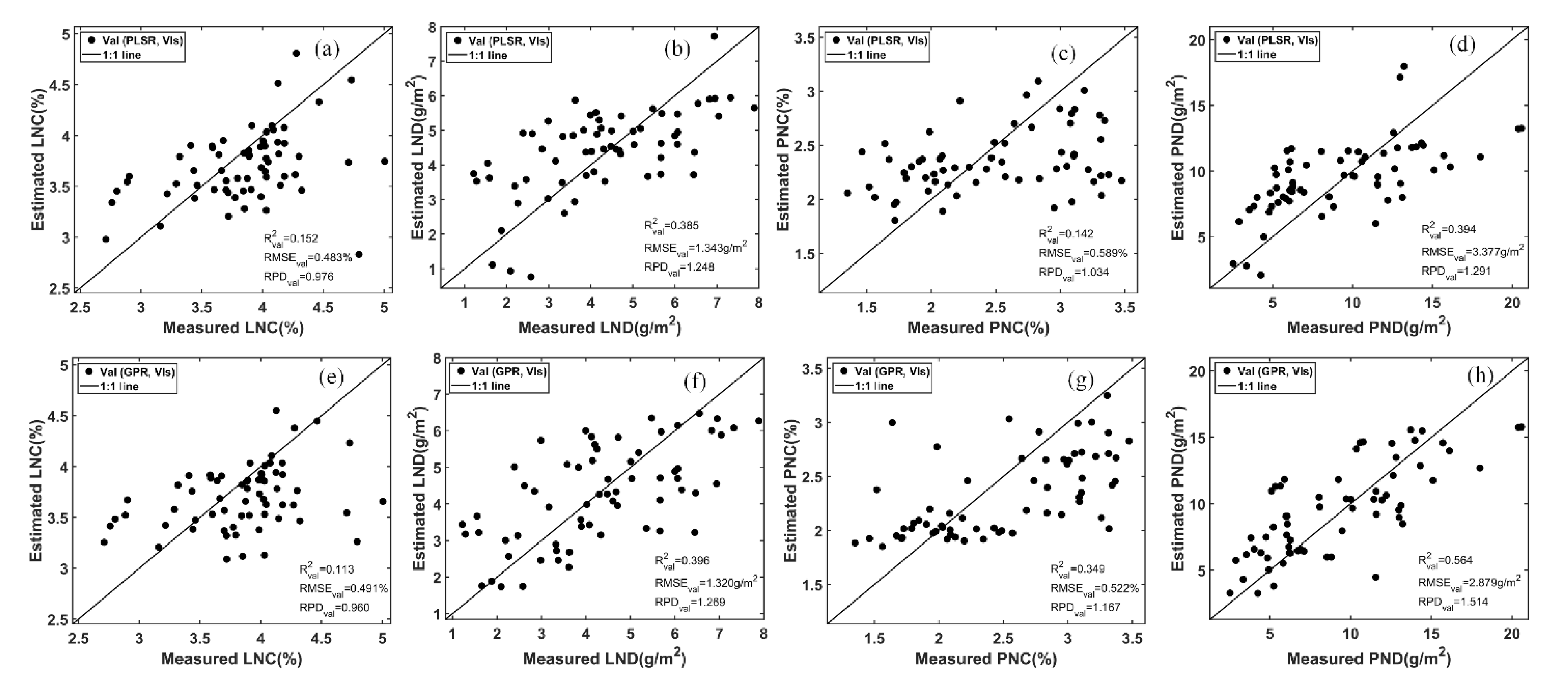

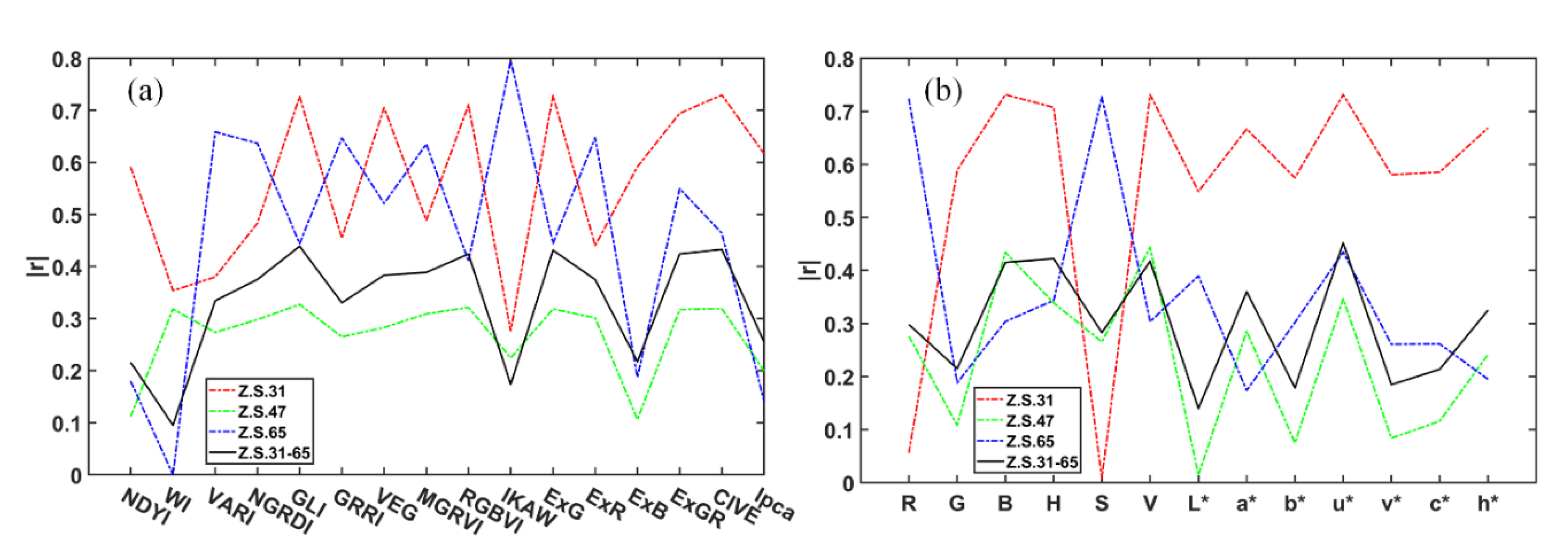

3.2. Using RGB-Based VIs to Estimate the Winter Wheat N Status

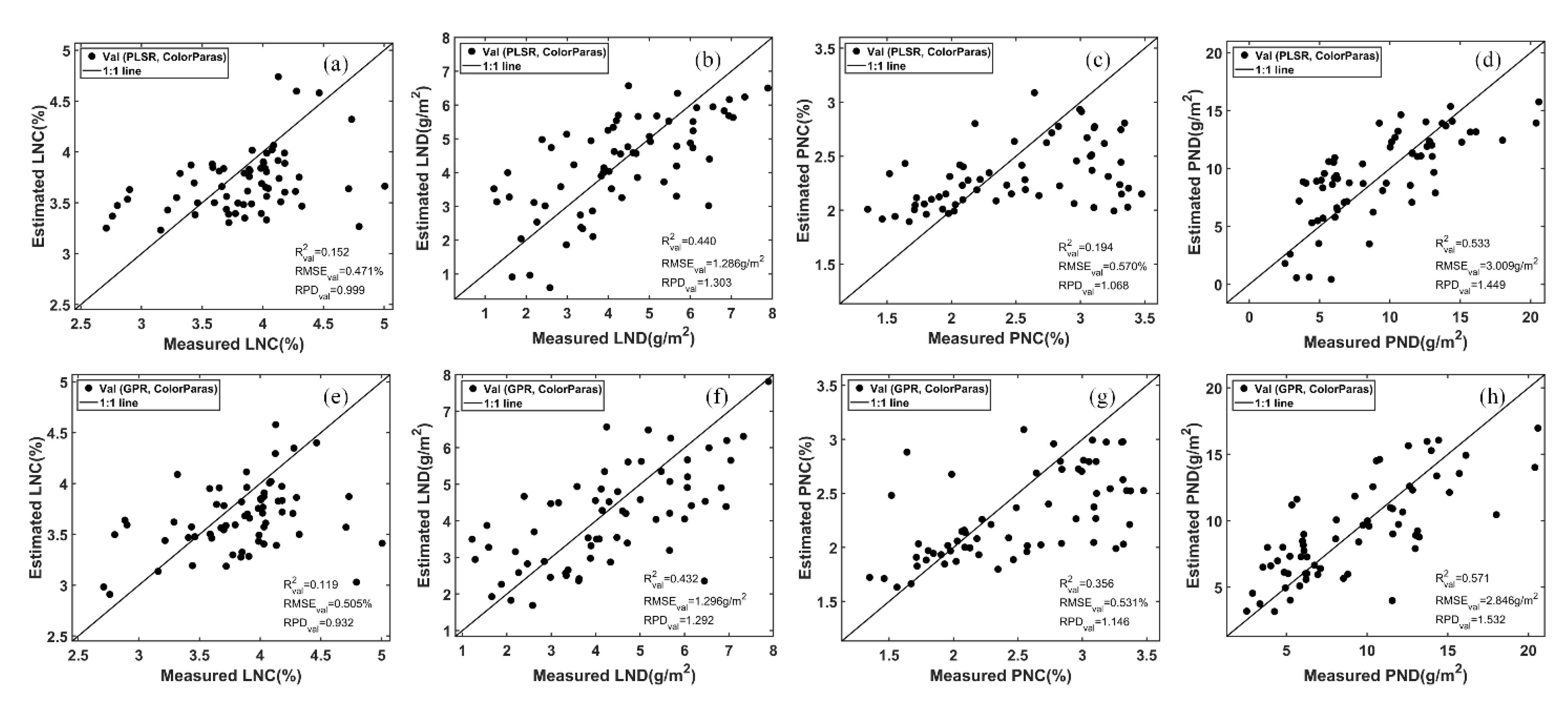

3.3. Using Color Parameters to Estimate the Winter Wheat N Status

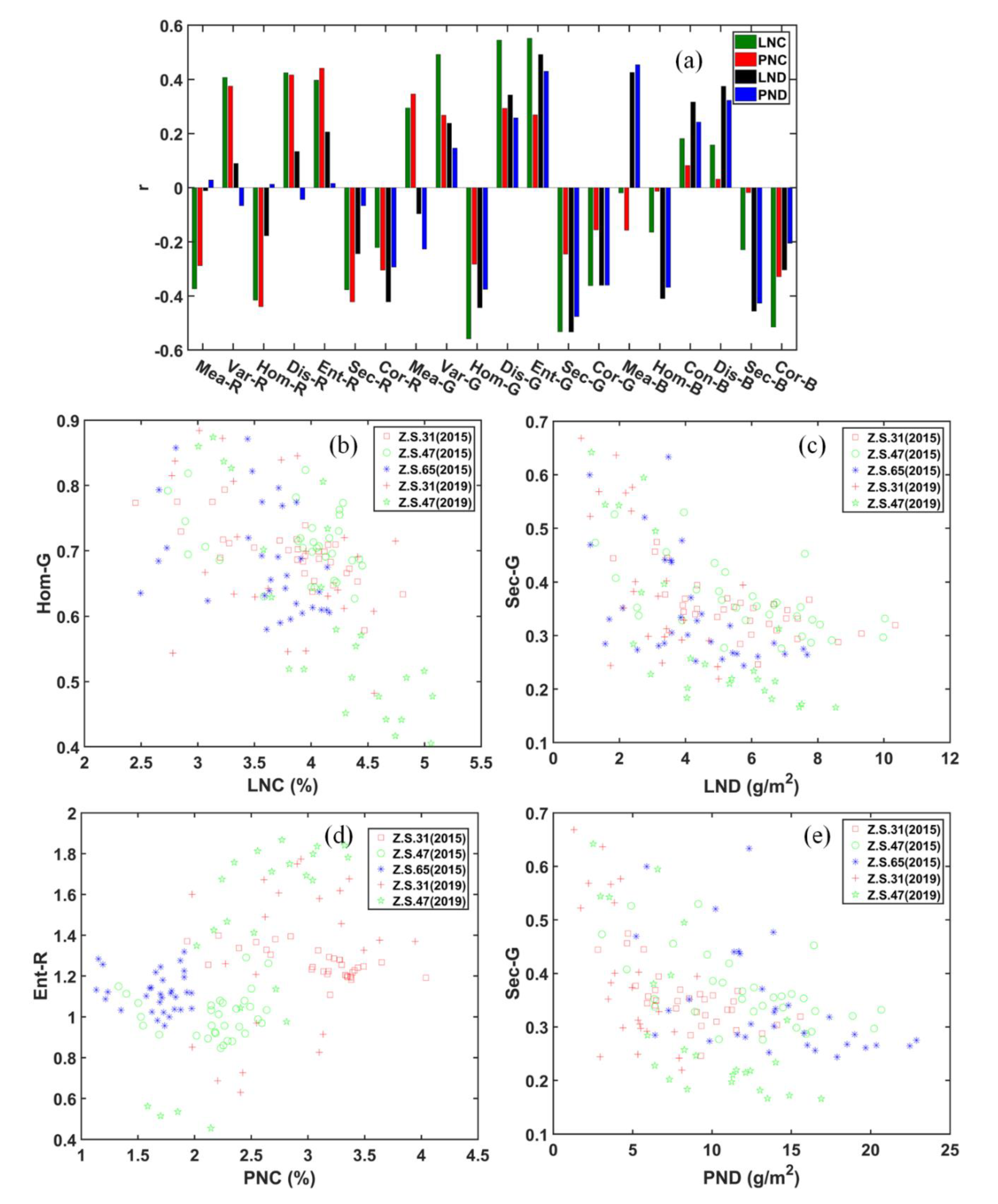

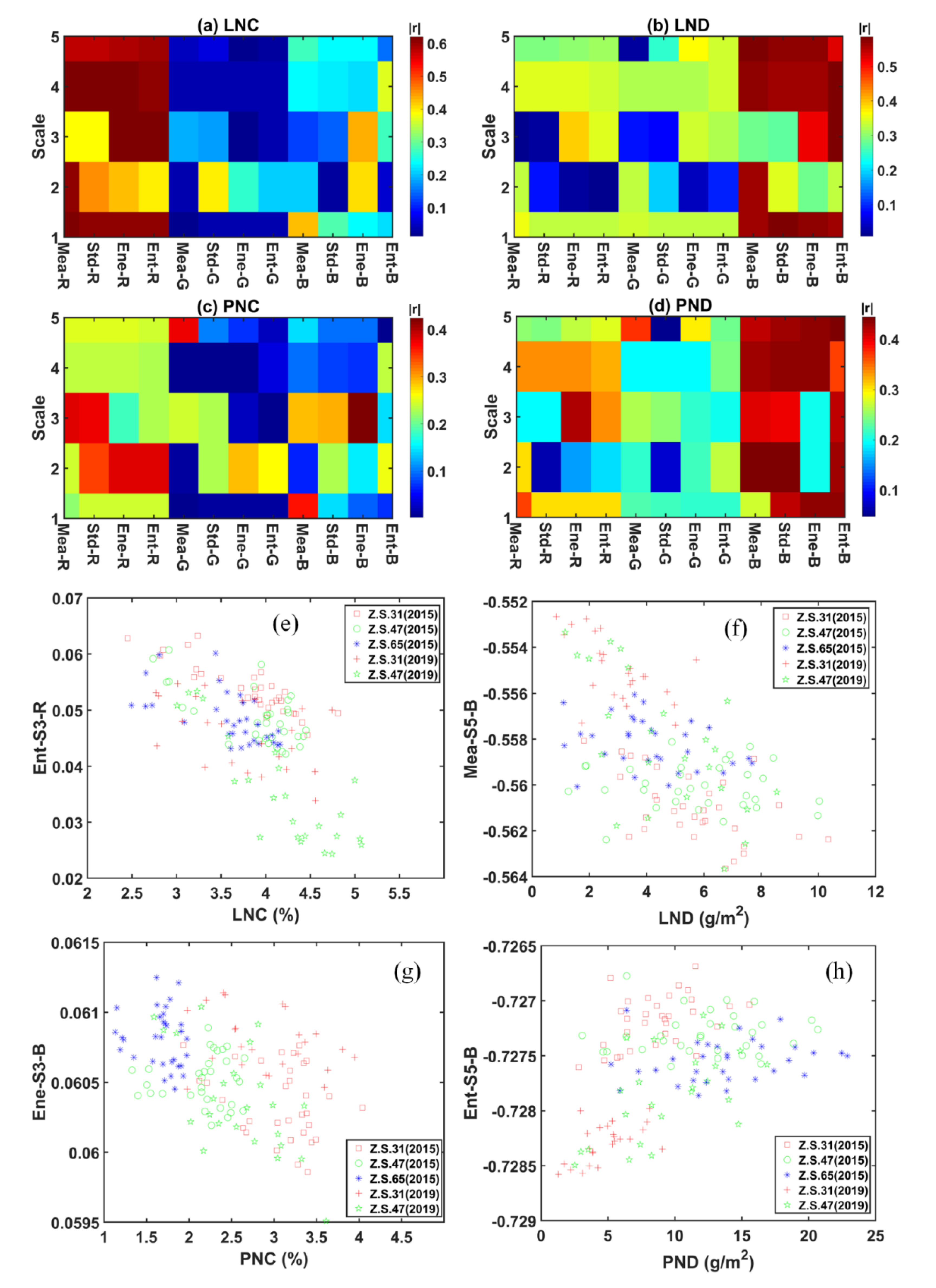

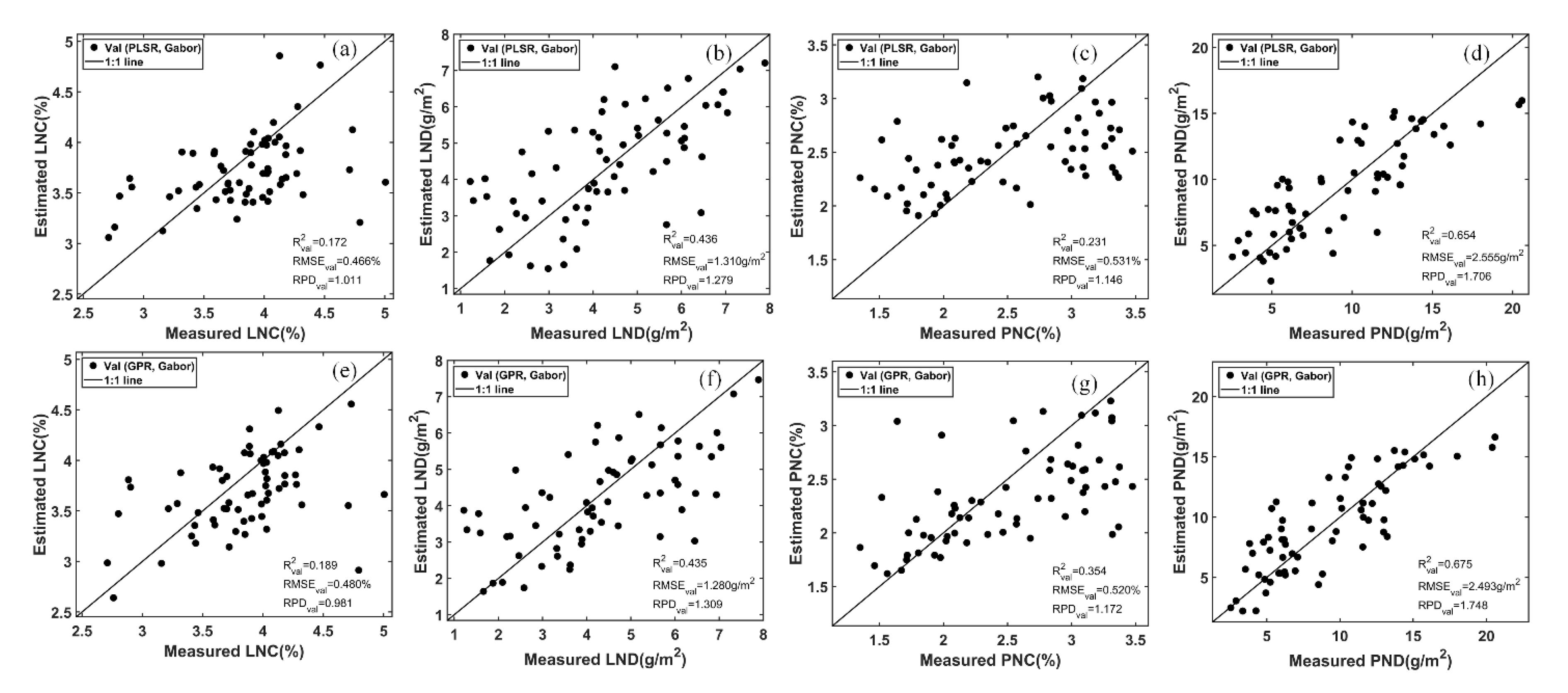

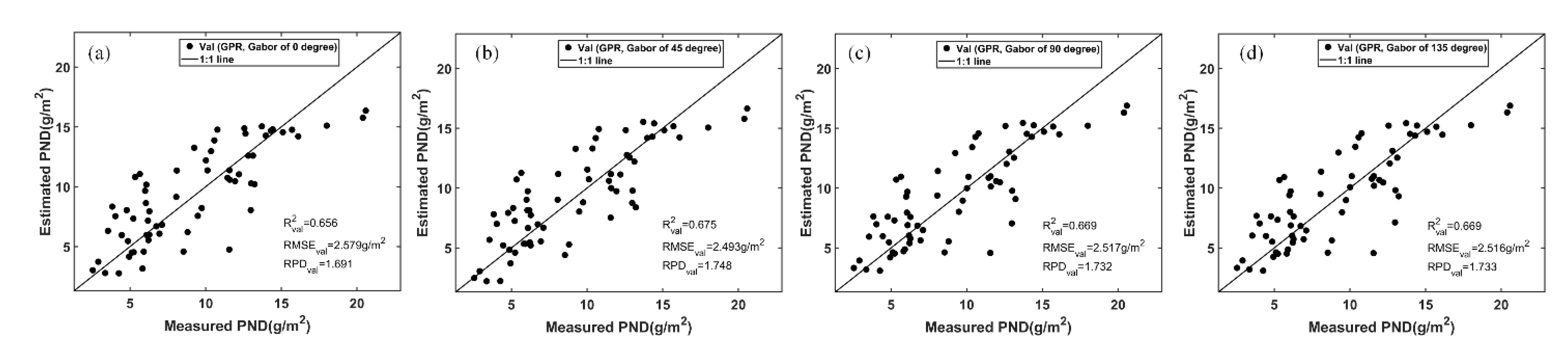

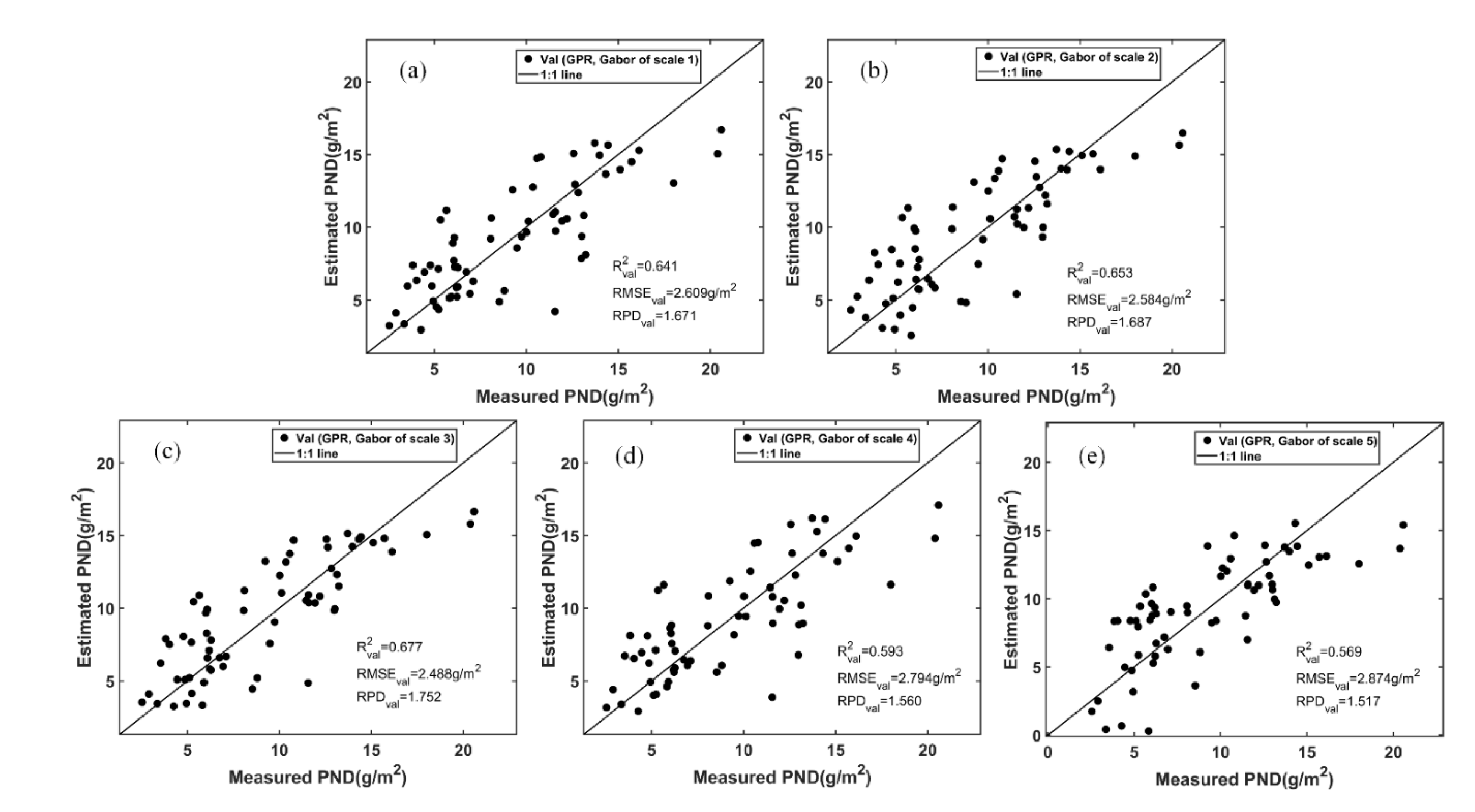

3.4. Using Gabor Textural Features to Estimate the Winter Wheat N Status

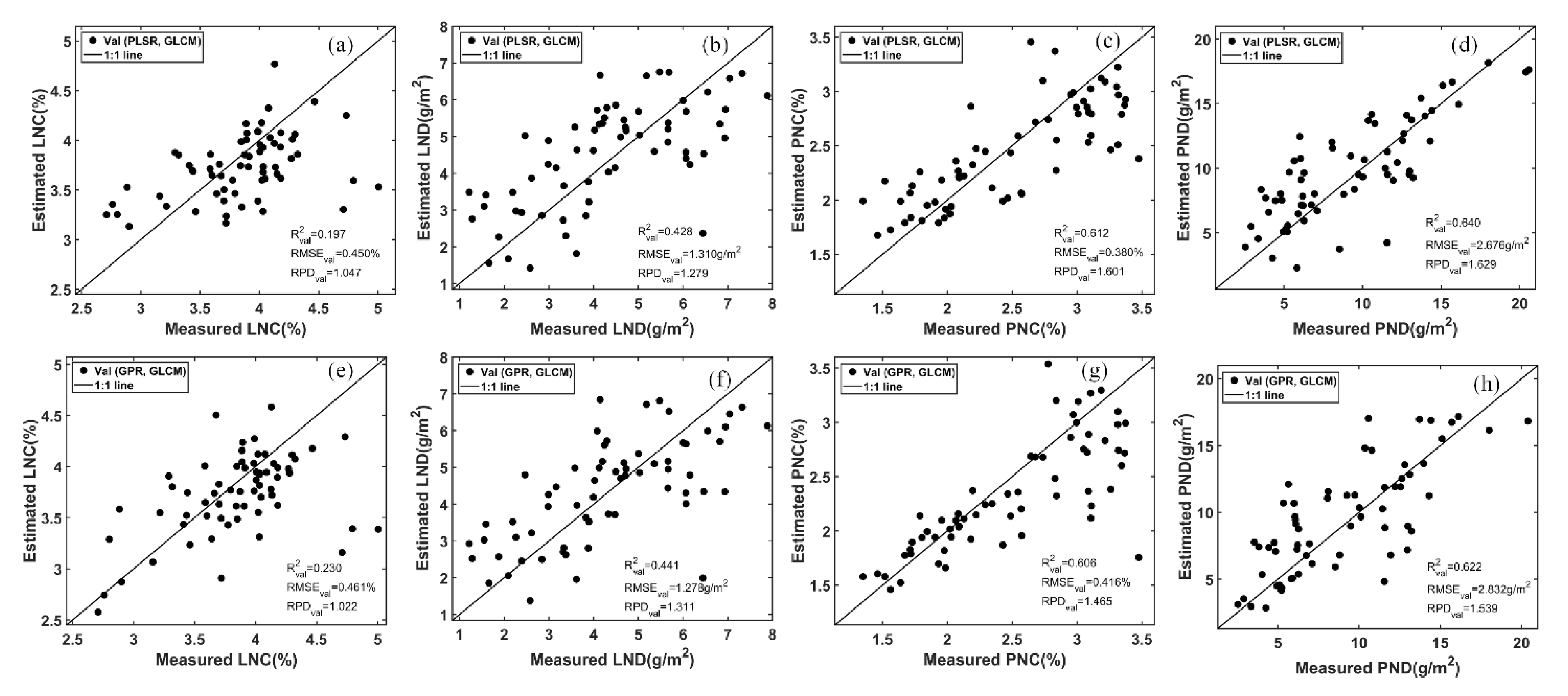

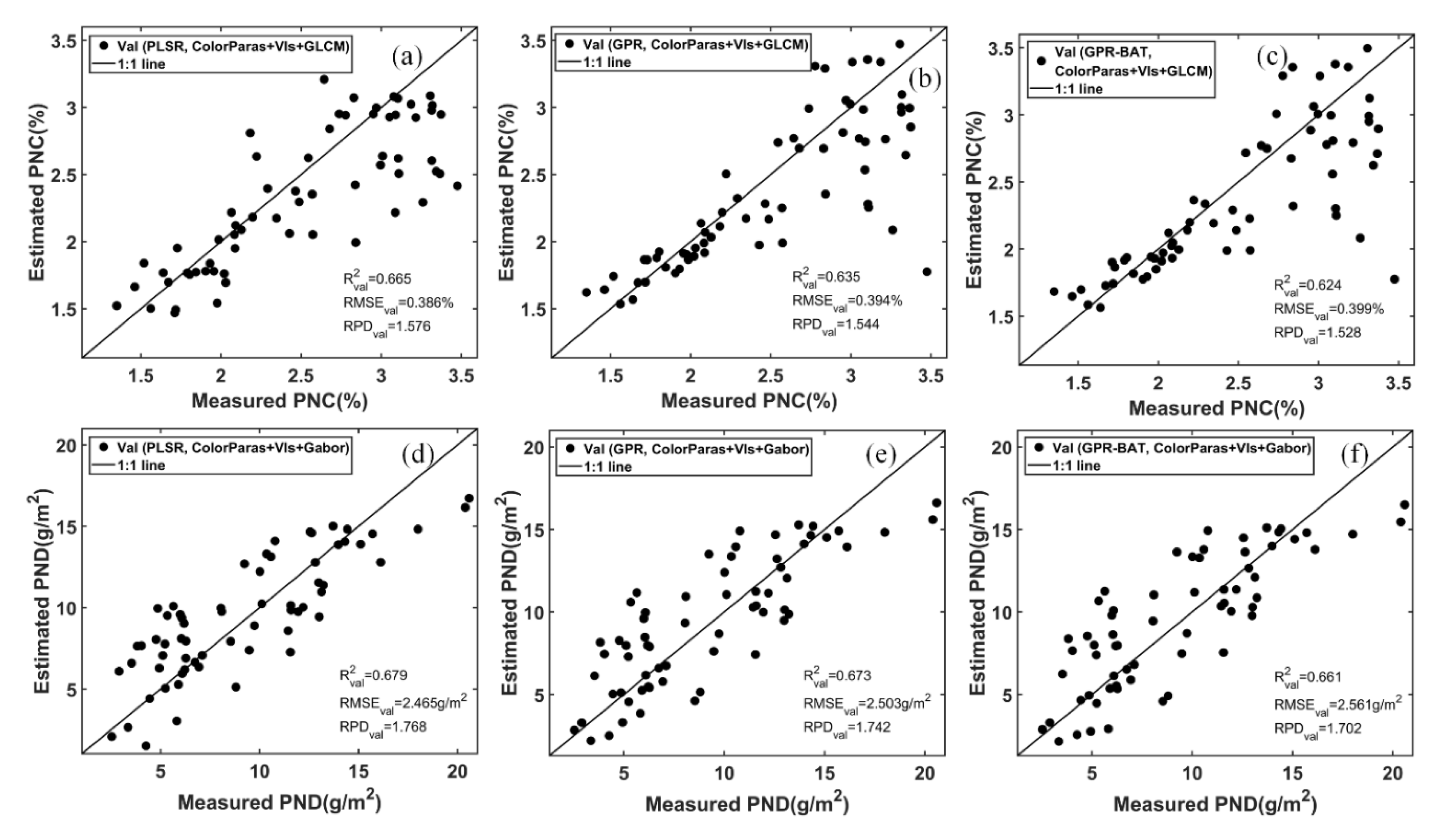

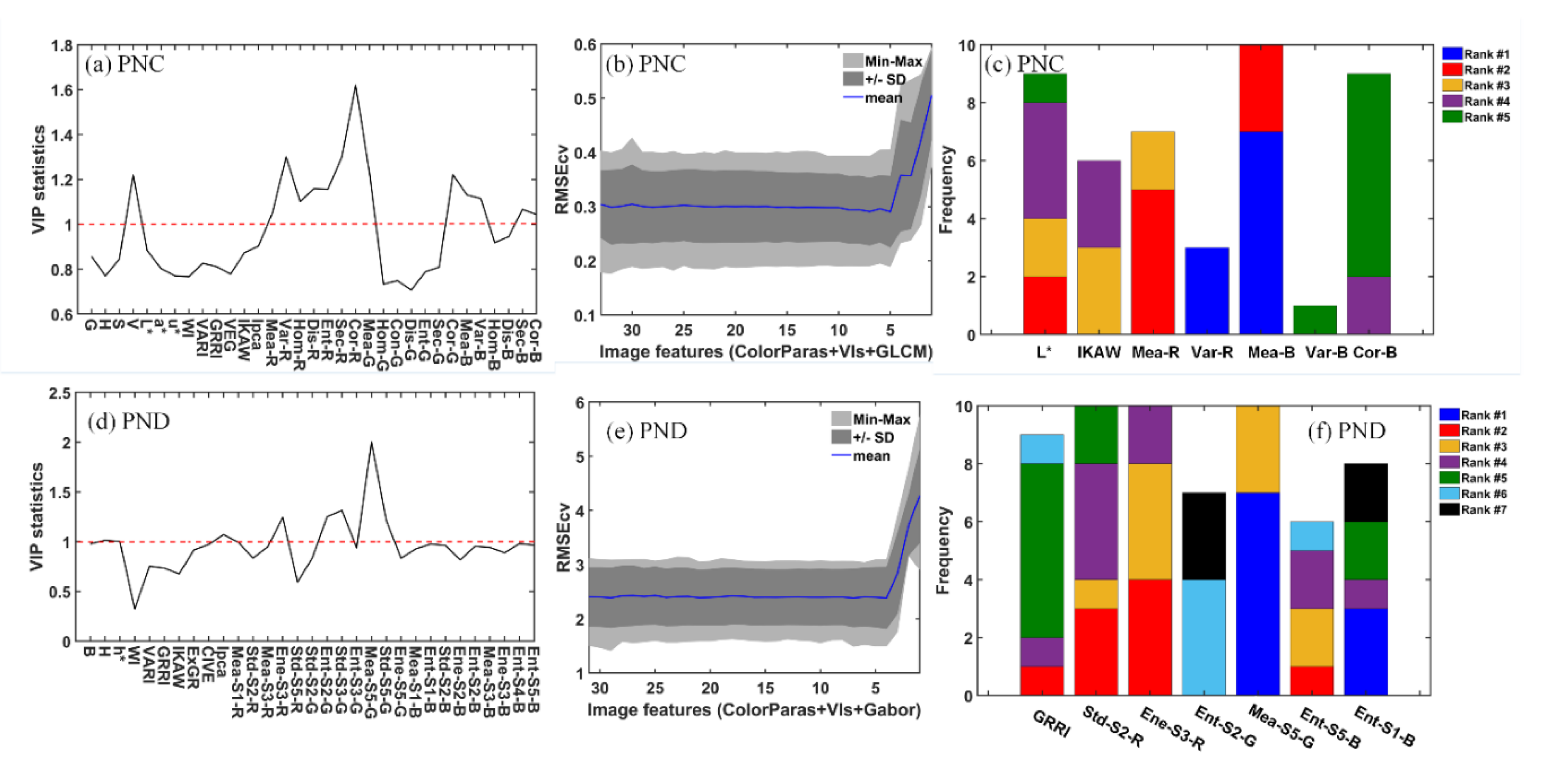

3.5. The Combined Use of RGB-Based VIs, Color Parameters, and Textures for Winter Wheat N Status Estimation

4. Discussion

4.1. Limitations of RGB-Based VIs and Color Parameters in Winter Wheat N Status Estimation

4.2. Ability of Image Textures to Estimate the Winter Wheat N Status

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Diacono, M.; Rubino, P.; Montemurro, F. Precision nitrogen management of wheat. A review. Agron. Sustain. Dev. 2013, 33, 219–241.
2. Ali, M.M.; Al-Ani, A.; Eamus, D.; Tan, D.K.Y. Leaf nitrogen determination using non-destructive techniques—A review. J. Plant Nutr. 2017, 40, 928–953.
3. Fu, Y.; Yang, G.; Li, Z.; Li, H.; Li, Z.; Xu, X.; Song, X.; Zhang, Y.; Duan, D.; Zhao, C.; et al. Progress of hyperspectral data processing and modelling for cereal crop nitrogen monitoring. Comput. Electron. Agric. 2020, 172, 105321.
4. Delloye, C.; Weiss, M.; Defourny, P. Retrieval of the canopy chlorophyll content from Sentinel-2 spectral bands to estimate nitrogen uptake in intensive winter wheat cropping systems. Remote Sens. Environ. 2018, 216, 245–261.
5. Croft, H.; Arabian, J.; Chen, J.M.; Shang, J.; Liu, J. Mapping within-field leaf chlorophyll content in agricultural crops for nitrogen management using Landsat-8 imagery. Precis. Agric. 2019, 21, 856–880.
6. Moharana, S.; Dutta, S. Spatial variability of chlorophyll and nitrogen content of rice from hyperspectral imagery. ISPRS J. Photogramm. Remote Sens. 2016, 122, 17–29.
7. Nigon, T.J.; Mulla, D.J.; Rosen, C.J.; Cohen, Y.; Alchanatis, V.; Knight, J.; Rud, R. Hyperspectral aerial imagery for detecting nitrogen stress in two potato cultivars. Comput. Electron. Agric. 2015, 112, 36–46.
8. Jin, X.; Zarco-Tejada, P.; Schmidhalter, U.; Reynolds, M.P.; Hawkesford, M.J.; Varshney, R.K.; Yang, T.; Nie, C.; Li, Z.; Ming, B.; et al. High-throughput estimation of crop traits: A review of ground and aerial phenotyping platforms. IEEE Geosci. Remote Sens. Mag. 2020.
9. Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X.; et al. Unmanned Aerial Vehicle Remote Sensing for Field-Based Crop Phenotyping: Current Status and Perspectives. Front. Plant Sci. 2017, 8, 1111.
10. Pagola, M.; Ortiz, R.; Irigoyen, I.; Bustince, H.; Barrenechea, E.; Aparicio-Tejo, P.; Lamsfus, C.; Lasa, B. New method to assess barley nitrogen nutrition status based on image colour analysis. Comput. Electron. Agric. 2009, 65, 213–218.
11. Saberioon, M.M.; Amin, M.S.M.; Anuar, A.R.; Gholizadeh, A.; Wayayok, A.; Khairunniza-Bejo, S. Assessment of rice leaf chlorophyll content using visible bands at different growth stages at both the leaf and canopy scale. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 35–45.
12. Zhang, M.; Zhou, J.; Sudduth, K.A.; Kitchen, N.R. Estimation of maize yield and effects of variable-rate nitrogen application using UAV-based RGB imagery. Biosyst. Eng. 2020, 189, 24–35.
13. Graeff, S.; Pfenning, J.; Claupein, W.; Liebig, H.-P. Evaluation of Image Analysis to Determine the N-Fertilizer Demand of Broccoli Plants (Brassica oleracea convar. botrytis var. italica). Adv. Opt. Technol. 2008, 2008, 359760.
14. Li, J.; Zhang, F.; Qian, X.; Zhu, Y.; Shen, G. Quantification of rice canopy nitrogen balance index with digital imagery from unmanned aerial vehicle. Remote Sens. Lett. 2015, 6, 183–189.
15. Liu, S.; Li, L.; Gao, W.; Zhang, Y.; Liu, Y.; Wang, S.; Lu, J. Diagnosis of nitrogen status in winter oilseed rape (Brassica napus L.) using in-situ hyperspectral data and unmanned aerial vehicle (UAV) multispectral images. Comput. Electron. Agric. 2018, 151, 185–195.
16. Yang, B.; Wang, M.; Sha, Z.; Wang, B.; Chen, J.; Yao, X.; Cheng, T.; Cao, W.; Zhu, Y. Evaluation of Aboveground Nitrogen Content of Winter Wheat Using Digital Imagery of Unmanned Aerial Vehicles. Sensors 2019, 19, 4416.
17. Zhou, X.; Huang, W.; Kong, W.; Ye, H.; Luo, J.; Chen, P. Remote estimation of canopy nitrogen content in winter wheat using airborne hyperspectral reflectance measurements. Adv. Space Res. 2016, 58, 1627–1637.
18. Clevers, J.G.P.W.; Kooistra, L. Using Hyperspectral Remote Sensing Data for Retrieving Canopy Chlorophyll and Nitrogen Content. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 574–583.
19. Hunt, E.R.; Cavigelli, M.; Daughtry, C.S.; McMurtrey, J.E.; Walthall, C.L. Evaluation of Digital Photography from Model Aircraft for Remote Sensing of Crop Biomass and Nitrogen Status. Precis. Agric. 2005, 6, 359–378.
20. Kawashima, S. An Algorithm for Estimating Chlorophyll Content in Leaves Using a Video Camera. Ann. Bot. 1998, 81, 49–54.
21. Fu, Y.; Taneja, P.; Lin, S.; Ji, W.; Adamchuk, V.; Daggupati, P.; Biswas, A. Predicting soil organic matter from cellular phone images under varying soil moisture. Geoderma 2020, 361, 114020.
22. Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.
23. Li, S.; Yuan, F.; Ata-Ul-Karim, S.T.; Zheng, H.; Cheng, T.; Liu, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cao, Q. Combining Color Indices and Textures of UAV-Based Digital Imagery for Rice LAI Estimation. Remote Sens. 2019, 11, 1763.
24. Shen, L.; Bai, L. A review on Gabor wavelets for face recognition. Pattern Anal. Appl. 2006, 9, 273–292.
25. Camps-Valls, G.; Verrelst, J.; Muñoz-Marí, J.; Laparra, V.; Mateo-Jimenez, F.; Gomez-Dans, J. A Survey on Gaussian Processes for Earth-Observation Data Analysis: A Comprehensive Investigation. IEEE Geosci. Remote Sens. Mag. 2016, 4, 58–78.
26. Verrelst, J.; Alonso, L.; Camps-Valls, G.; Delegido, J.; Moreno, J. Retrieval of Vegetation Biophysical Parameters Using Gaussian Process Techniques. IEEE Trans. Geosci. Remote Sens. 2012, 50, 1832–1843.
27. Verhoeven, G. Taking computer vision aloft – archaeological three-dimensional reconstructions from aerial photographs with PhotoScan. Archaeol. Prospect. 2011, 18, 67–73.
28. Brown, M.; Lowe, D.G. Recognising panoramas. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; Volume 2, pp. 1218–1225.
29. Yue, J.; Feng, H.; Jin, X.; Yuan, H.; Li, Z.; Zhou, C.; Yang, G.; Tian, Q. A Comparison of Crop Parameters Estimation Using Images from UAV-Mounted Snapshot Hyperspectral Sensor and High-Definition Digital Camera. Remote Sens. 2018, 10, 1138.
30. Cheng, H.; Jiang, X.; Sun, Y.; Wang, J. Color image segmentation: Advances and prospects. Pattern Recognit. 2001, 34, 2259–2281.
31. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color Indices for Weed Identification Under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.
32. Gamon, J.A.; Surfus, J.S. Assessing leaf pigment content and activity with a reflectometer. New Phytol. 1999, 143, 105–117.
33. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.
34. Mao, W.; Wang, Y.; Wang, Y. Real-time Detection of Between-row Weeds Using Machine Vision. In Proceedings of the 2003 ASAE Annual Meeting 2003, Las Vegas, NV, USA, 27–30 July 2003; p. 1.
35. Kataoka, T.; Kaneko, T.; Okamoto, H.; Hata, S. Crop growth estimation system using machine vision. In Proceedings of the 2003 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM 2003), Kobe, Japan, 20–24 July 2003; Volume 2, pp. b1079–b1083.
36. Hague, T.; Tillett, N.D.; Wheeler, H. Automated Crop and Weed Monitoring in Widely Spaced Cereals. Precis. Agric. 2006, 7, 21–32.
37. Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.
38. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially Located Platform and Aerial Photography for Documentation of Grazing Impacts on Wheat. Geocarto Int. 2001, 16, 65–70.
39. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.
40. Sulik, J.J.; Long, D.S. Spectral considerations for modeling yield of canola. Remote Sens. Environ. 2016, 184, 161–174.
41. García-Mateos, G.; Hernández-Hernández, J.L.; Escarabajal-Henarejos, D.; Jaén-Terrones, S.; Molina-Martínez, J. Study and comparison of color models for automatic image analysis in irrigation management applications. Agric. Water Manag. 2015, 151, 158–166.
42. Rossel, R.A.V.; Minasny, B.; Roudier, P.; McBratney, A. Colour space models for soil science. Geoderma 2006, 133, 320–337.
43. Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, 610–621.
44. Jones, J.P.; Palmer, L.A. An evaluation of the two-dimensional Gabor filter model of simple receptive fields in cat striate cortex. J. Neurophysiol. 1987, 58, 1233–1258.
45. Grigorescu, S.E.; Petkov, N.; Kruizinga, P. Comparison of texture features based on Gabor filters. IEEE Trans. Image Process. 2002, 11, 1160–1167.
46. Liu, C.; Wechsler, H. Gabor feature based classification using the enhanced fisher linear discriminant model for face recognition. IEEE Trans. Image Process. 2002, 11, 467–476.
47. Yang, M.; Zhang, L. Gabor Feature Based Sparse Representation for Face Recognition with Gabor Occlusion Dictionary. In Proceedings of the European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; Springer: Berlin, Germany, 2010; pp. 448–461.
48. Gai, S. Efficient Color Texture Classification Using Color Monogenic Wavelet Transform. Neural Process. Lett. 2017, 32, 443–626.
49. Wold, S.; Sjöström, M.; Eriksson, L. PLS-regression: A basic tool of chemometrics. Chemom. Intell. Lab. Syst. 2001, 58, 109–130.
50. Geladi, P.; Kowalski, B.R. Partial least-squares regression: A tutorial. Anal. Chim. Acta 1986, 185, 1–17.
51. Cho, M.A.; Skidmore, A.; Corsi, F.; Van Wieren, S.E.; Sobhan, I. Estimation of green grass/herb biomass from airborne hyperspectral imagery using spectral indices and partial least squares regression. Int. J. Appl. Earth Obs. Geoinf. 2007, 9, 414–424.
52. Kooistra, L.; Salas, E.; Clevers, J.; Wehrens, R.; Leuven, R.; Nienhuis, P.; Buydens, L. Exploring field vegetation reflectance as an indicator of soil contamination in river floodplains. Environ. Pollut. 2004, 127, 281–290.
53. Farrés, M.; Platikanov, S.Y.; Tsakovski, S.L.; Tauler, R. Comparison of the variable importance in projection (VIP) and of the selectivity ratio (SR) methods for variable selection and interpretation. J. Chemom. 2015, 29, 528–536.
54. Chong, I.-G.; Jun, C.-H. Performance of some variable selection methods when multicollinearity is present. Chemom. Intell. Lab. Syst. 2005, 78, 103–112.
55. Verrelst, J.; Rivera, J.P.; Gitelson, A.; Delegido, J.; Moreno, J.; Camps-Valls, G. Spectral band selection for vegetation properties retrieval using Gaussian processes regression. Int. J. Appl. Earth Obs. Geoinf. 2016, 52, 554–567.
56. Chang, C.-W.; Laird, D.A.; Mausbach, M.J.; Hurburgh, C.R. Near-Infrared Reflectance Spectroscopy-Principal Components Regression Analyses of Soil Properties. Soil Sci. Soc. Am. J. 2001, 65, 480–490.
57. Mercado-Luna, A.; Rico-Garcia, E.; Lara-Herrera, A.; Soto-Zarazua, G.; Ocampo-Velazquez, R.; Guevara-Gonzalez, R.; Herrera-Ruiz, G.; Torres-Pacheco, I. Nitrogen determination on tomato (Lycopersicon esculentum Mill.) seedlings by color image analysis (RGB). Afr. J. Biotechnol. 2010, 9, 5326–5332.
58. Oppelt, N.; Mauser, W. Hyperspectral monitoring of physiological parameters of wheat during a vegetation period using AVIS data. Int. J. Remote Sens. 2004, 25, 145–159.
59. He, L.; Zhang, H.-Y.; Zhang, Y.-S.; Song, X.; Feng, W.; Kang, G.-Z.; Wang, C.-Y.; Guo, T.-C. Estimating canopy leaf nitrogen concentration in winter wheat based on multi-angular hyperspectral remote sensing. Eur. J. Agron. 2016, 73, 170–185.
60. Wang, W.; Yao, X.; Yao, X.; Tian, Y.; Liu, X.; Ni, J.; Cao, W.; Zhu, Y. Estimating leaf nitrogen concentration with three-band vegetation indices in rice and wheat. Field Crop. Res. 2012, 129, 90–98.
61. Nichol, J.E.; Sarker, L.R. Improved Biomass Estimation Using the Texture Parameters of Two High-Resolution Optical Sensors. IEEE Trans. Geosci. Remote Sens. 2010, 49, 930–948.
62. Baret, F.; Houles, V.; Guerif, M. Quantification of plant stress using remote sensing observations and crop models: The case of nitrogen management. J. Exp. Bot. 2006, 58, 869–880.
63. Zhao, D.; Reddy, K.R.; Kakani, V.G.; Reddy, V. Nitrogen deficiency effects on plant growth, leaf photosynthesis, and hyperspectral reflectance properties of sorghum. Eur. J. Agron. 2005, 22, 391–403.
64. Lemaire, G.; Jeuffroy, M.-H.; Gastal, F. Diagnosis tool for plant and crop N status in vegetative stage. Eur. J. Agron. 2008, 28, 614–624.
65. Zheng, H.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2018, 20, 611–629.
66. Wood, E.M.; Pidgeon, A.M.; Radeloff, V.C.; Keuler, N.S. Image texture as a remotely sensed measure of vegetation structure. Remote Sens. Environ. 2012, 121, 516–526.

| RGB-Based VI | Formula | Reference |
|---|---|---|
| Woebbecke index (WI) | (g − b)/(r − g) | [31] |
| Excess Green Vegetation Index (ExG) | 2g − r − b | [31] |
| Kawashima index (IKAW) | (r − b)/(r + b) | [20] |
| Green-red ratio index (GRRI) | r/g | [32] |
| Visible atmospherically-resistant index (VARI) | (g − r)/(g + r − b) | [33] |
| Excess Blue Vegetation Index (ExB) | 1.4b − g | [34] |
| Colour Index of Vegetation Extraction (CIVE) | 0.441r − 0.811g + 0.385b + 18.78745 | [35] |
| Normalized green-red difference index (NGRDI) | (g − r)/(g + r) | [19] |
| Vegetative index (VEG) | g/(r^a × b^(1 − a)), a = 0.667 | [36] |
| Excess Red Vegetation Index (ExR) | 1.4r − g | [37] |
| Excess Green minus Excess Red Vegetation Index (ExGR) | 3g − 2.4r − b | [37] |
| Green leaf index (GLI) | (2g − r − b)/(2g + r + b) | [38] |
| Principal component analysis index (IPCA) | 0.994\|r − b\| + 0.961\|g − b\| + 0.914\|g − r\| | [11] |
| Modified green red vegetation index (MGRVI) | (g^2 − r^2)/(g^2 + r^2) | [39] |
| Red green blue vegetation index (RGBVI) | (g^2 − b × r)/(g^2 + b × r) | [39] |
| Normalized difference yellowness index (NDYI) | (g − b)/(g + b) | [40] |
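The formulas in the table above can be evaluated directly once the band values are scaled to [0, 1]. The following is a minimal Python sketch for a subset of the indices, not the authors' processing code. The function name `rgb_vegetation_indices` and the sample colour are hypothetical; VEG is read as g/(r^a × b^(1 − a)) and the `g2`/`r2` terms of MGRVI and RGBVI are read as squares, which are interpretations of the extracted table.

```python
def rgb_vegetation_indices(r, g, b, a=0.667):
    """Evaluate several RGB-based VIs from normalized r, g, b in [0, 1].

    No guard against zero denominators is included; a real pipeline would
    need to mask such pixels (for example, pure black) first.
    """
    return {
        "ExG": 2 * g - r - b,
        "ExR": 1.4 * r - g,
        "ExGR": 3 * g - 2.4 * r - b,  # algebraically ExG minus ExR
        "NGRDI": (g - r) / (g + r),
        "VARI": (g - r) / (g + r - b),
        "GLI": (2 * g - r - b) / (2 * g + r + b),
        "IKAW": (r - b) / (r + b),
        "NDYI": (g - b) / (g + b),
        "MGRVI": (g**2 - r**2) / (g**2 + r**2),
        "RGBVI": (g**2 - b * r) / (g**2 + b * r),
        "VEG": g / (r**a * b ** (1 - a)),  # assumed reading of the VEG formula
        "IPCA": 0.994 * abs(r - b) + 0.961 * abs(g - b) + 0.914 * abs(g - r),
    }


# Hypothetical mean canopy colour of one plot, already scaled from 0-255 to 0-1.
vis = rgb_vegetation_indices(r=0.2, g=0.5, b=0.1)
for name, value in vis.items():
    print(f"{name}: {value:.4f}")
```

Because the function uses only arithmetic operators and `abs`, it should also accept NumPy arrays of per-pixel values, although that is not exercised here.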

| Color Space | Color Parameter | Definition and Conversion Functions |
|---|---|---|
| RGB | R | combination of lightness and chromaticity (hue and chroma), range from 0 (darkness) to 255 (whiteness), here normalized to [0, 1] and expressed as r |
| RGB | G | combination of lightness and chromaticity (hue and chroma), range from 0 (darkness) to 255 (whiteness), here normalized to [0, 1] and expressed as g |
| RGB | B | combination of lightness and chromaticity (hue and chroma), range from 0 (darkness) to 255 (whiteness), here normalized to [0, 1] and expressed as b |
| HSV | H | hue, H = p(g − b) if Cmax = r; p(b − r) + 120 if Cmax = g; p(r − g) + 240 if Cmax = b (Δ = Cmax − Cmin, Cmax = max(r, g, b), Cmin = min(r, g, b), p = 60/Δ) |
| HSV | S | chroma, S = Δ/Cmax |
| HSV | V | lightness, V = Cmax |
| L*a*b* | L* | lightness, range from 0 (black) to 100 (white), L* = 116(Y/Y0)^(1/3) − 16 if Y/Y0 > 0.008856; 903.3(Y/Y0) otherwise (Y = 0.213r + 0.715g + 0.072b, Y0 = 100) |
| L*a*b* | a* | chroma, redness (positive a*) or greenness (negative a*), a* = 500[(X/X0)^(1/3) − (Y/Y0)^(1/3)] (X = 0.412r + 0.358g + 0.180b, X0 = 95.047) |
| L*a*b* | b* | chroma, yellowness (positive b*) or blueness (negative b*), b* = 200[(Y/Y0)^(1/3) − (Z/Z0)^(1/3)] (Z = 0.019r + 0.119g + 0.950b, Z0 = 108.883) |
| L*c*h* | L* | same definition as L* in L*a*b* |
| L*c*h* | c* | chroma, c* = sqrt(a*^2 + b*^2) |
| L*c*h* | h* | hue, h* = arctan(b*/a*) |
| L*u*v* | L* | same definition as L* in L*a*b* |
| L*u*v* | u* | chroma, redness (positive u*) or greenness (negative u*), u* = 13L*[4X/(X + 15Y + 3Z) − 4X0/(X0 + 15Y0 + 3Z0)] |
| L*u*v* | v* | chroma, yellowness (positive v*) or blueness (negative v*), v* = 13L*[9Y/(X + 15Y + 3Z) − 9Y0/(X0 + 15Y0 + 3Z0)] |
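The conversions in the table above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the authors' implementation: the function name `rgb_to_color_params` is hypothetical; X, Y, Z are multiplied by 100 so that they are on the same scale as the white point (X0, Y0, Z0); the Y weights are taken as 0.213, 0.715, 0.072 (the standard sRGB luminance weights, which sum to 1); the standard CIE piecewise function is applied to all three ratios, which reduces to the table's cube-root form above 0.008856; and h* uses `atan2` so that the quadrant is preserved. L*u*v* is omitted for brevity.

```python
import colorsys
import math

WHITE = (95.047, 100.0, 108.883)  # X0, Y0, Z0 as listed in the table


def _f(t):
    # CIE piecewise function: cube root above the threshold, linear below it.
    return t ** (1 / 3) if t > 0.008856 else 7.787 * t + 16 / 116


def rgb_to_color_params(r, g, b):
    """Convert normalized r, g, b in [0, 1] to HSV, L*a*b* and L*c*h* values."""
    # Assumed scaling: multiply by 100 to match the white point magnitudes.
    x = 100 * (0.412 * r + 0.358 * g + 0.180 * b)
    y = 100 * (0.213 * r + 0.715 * g + 0.072 * b)
    z = 100 * (0.019 * r + 0.119 * g + 0.950 * b)
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), WHITE))

    l_star = 116 * fy - 16
    a_star = 500 * (fx - fy)
    b_star = 200 * (fy - fz)
    c_star = math.hypot(a_star, b_star)
    h_star = math.degrees(math.atan2(b_star, a_star))

    # colorsys returns hue in [0, 1]; scale to degrees to match the table.
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return {
        "H": 360 * h, "S": s, "V": v,
        "L*": l_star, "a*": a_star, "b*": b_star,
        "c*": c_star, "h*": h_star,
    }


# Hypothetical example: a saturated green pixel.
green = rgb_to_color_params(0.0, 1.0, 0.0)
print({k: round(val, 2) for k, val in green.items()})
```

For a green pixel the sketch yields a negative a*, in line with the table's description of a* as redness (positive) versus greenness (negative).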

| N Status Indicator | Dataset | Min. | Mean | Max. | Std. | CV (%) | Kurtosis |
|---|---|---|---|---|---|---|---|
| LNC | Calibration | 2.45 | 3.83 | 5.07 | 0.57 | 14.85 | 2.70 |
| LNC | Validation | 2.71 | 3.85 | 5.01 | 0.47 | 12.25 | 3.52 |
| PNC | Calibration | 1.13 | 2.45 | 4.04 | 0.69 | 27.90 | 2.12 |
| PNC | Validation | 1.35 | 2.50 | 3.48 | 0.61 | 24.40 | 1.69 |
| LND | Calibration | 0.84 | 4.73 | 10.34 | 2.13 | 45.08 | 2.46 |
| LND | Validation | 1.22 | 4.33 | 7.89 | 1.68 | 38.74 | 2.18 |
| PND | Calibration | 1.30 | 10.10 | 22.88 | 4.81 | 47.63 | 2.52 |
| PND | Validation | 2.54 | 9.20 | 20.58 | 4.36 | 47.41 | 2.66 |
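The CV column in the table above is the standard deviation expressed as a percentage of the mean. The short Python check below recomputes it from the rounded Mean and Std. values; small differences from the reported figures are expected, since the published values were presumably computed from unrounded statistics (an assumption). The variable names are illustrative.

```python
# (indicator, dataset, mean, std, reported CV %) copied from the table above.
rows = [
    ("LNC", "Calibration", 3.83, 0.57, 14.85),
    ("LNC", "Validation", 3.85, 0.47, 12.25),
    ("PNC", "Calibration", 2.45, 0.69, 27.90),
    ("PNC", "Validation", 2.50, 0.61, 24.40),
    ("LND", "Calibration", 4.73, 2.13, 45.08),
    ("LND", "Validation", 4.33, 1.68, 38.74),
    ("PND", "Calibration", 10.10, 4.81, 47.63),
    ("PND", "Validation", 9.20, 4.36, 47.41),
]

differences = []
for indicator, dataset, mean, std, reported in rows:
    cv = 100 * std / mean  # coefficient of variation in percent
    differences.append(abs(cv - reported))
    print(f"{indicator} {dataset}: CV = {cv:.2f}% (reported {reported:.2f}%)")
```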

| Texture Type | Selected Textures |
|---|---|
| GLCM-based textures | Mea-R, Var-R, Hom-R, Dis-R, Ent-R, Sec-R, Cor-R, Mea-G, Var-G, Hom-G, Dis-G, Ent-G, Sec-G, Cor-G, Mea-B, Hom-B, Con-B, Dis-B, Sec-B, Cor-B |
| Gabor-based textures | Mea-S1-R, Std-S2-R, Std-S3-R, Ene-S3-R, Std-S5-R, Std-S2-G, Mea-S3-G, Ene-S3-G, Mea-S5-G, Std-S5-G, Mea-S1-B, Std-S1-B, Mea-S2-B, Std-S2-B, Ene-S2-B, Ent-S2-B, Mea-S3-B, Ene-S3-B, Ent-S4-B |
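To illustrate what the GLCM-based names in the table above refer to, the following pure-Python sketch builds a symmetric, normalized grey-level co-occurrence matrix for one band and derives eight common statistics from it. It assumes that the abbreviations stand for mean, variance, homogeneity, contrast, dissimilarity, entropy, second moment, and correlation, which is not spelled out in the table. The functions `glcm` and `glcm_features`, the pixel offset, and the toy image are all hypothetical, and the sketch does not reproduce the window size or quantization the authors used. Gabor textures are not covered.

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix for one pixel offset."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                i, j = image[y][x], image[y2][x2]
                counts[i][j] += 1  # count the pair in both directions
                counts[j][i] += 1
    total = sum(map(sum, counts))
    return [[c / total for c in row] for row in counts]


def glcm_features(p):
    """Texture statistics of a normalized GLCM p (assumed abbreviations)."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, _, v in cells)
    var = sum((i - mean) ** 2 * v for i, _, v in cells)
    feats = {
        "Mea": mean,
        "Var": var,
        "Hom": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "Con": sum((i - j) ** 2 * v for i, j, v in cells),
        "Dis": sum(abs(i - j) * v for i, j, v in cells),
        "Ent": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "Sec": sum(v * v for _, _, v in cells),
    }
    # Correlation is undefined for a perfectly uniform window (zero variance).
    cov = sum((i - mean) * (j - mean) * v for i, j, v in cells)
    feats["Cor"] = cov / var if var > 0 else float("nan")
    return feats


# Toy 4 x 4 band quantized to 4 grey levels (illustrative values only).
band = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
features = glcm_features(glcm(band, levels=4))
print({name: round(value, 3) for name, value in features.items()})
```

In practice each statistic would be computed per band (R, G, B) in a moving window and then averaged per plot, which is how names such as Mea-R or Cor-B would arise under this reading.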

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fu, Y.; Yang, G.; Li, Z.; Song, X.; Li, Z.; Xu, X.; Wang, P.; Zhao, C. Winter Wheat Nitrogen Status Estimation Using UAV-Based RGB Imagery and Gaussian Processes Regression. Remote Sens. 2020, 12, 3778. https://doi.org/10.3390/rs12223778