Feasibility of Burned Area Mapping Based on ICESAT−2 Photon Counting Data

Abstract

1. Introduction

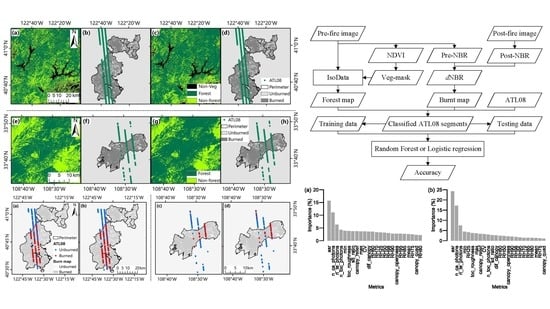

2. Materials and Methods

2.1. Study Area

2.2. Data

2.2.1. ATL08

2.2.2. Sentinel and Landsat Data

2.3. Forest Cover Mapping

2.4. Fitting Models for Burned Area Mapping

2.4.1. Fitting Random Forest Model

2.4.2. Fitting Logistic Regression Model

3. Results

3.1. Burned Area Mapping by Random Forest

3.2. Burned Area Mapping by Logistic Regression

3.3. Fire Severity Prediction Based on Random Forest

4. Discussion

4.1. Comparison of Sentinel−2 and Landsat 8

4.2. Comparison of Classification Methods

4.3. Comparison of LiDAR Metrics

4.4. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Label | Group | Long Name | Description |
|---|---|---|---|
| canopy_h_metrics: RH25, RH50, RH60, RH70, RH75, RH80, RH85, RH90, RH95, RH98 | gtx/land_segments/canopy | Canopy height metrics | Height metrics based on the cumulative distribution of relative canopy heights above the interpolated ground surface. The height metrics are calculated at the following percentiles: 25, 50, 60, 70, 75, 80, 85, 90, 95, and 98% |
| canopy_openness | gtx/land_segments/canopy | Canopy openness | Standard deviation of all photons classified as canopy photons within the segment to provide inference of canopy openness |
| h_canopy_quad | gtx/land_segments/canopy | Canopy quadratic mean | The quadratic mean height of individual classified relative canopy photon heights above the estimated terrain surface |
| h_dif_canopy | gtx/land_segments/canopy | Canopy diff to median height | Difference between RH98 and RH50 |
| h_max_canopy | gtx/land_segments/canopy | Maximum canopy height | Relative maximum of individual canopy heights within segment |
| h_mean_canopy | gtx/land_segments/canopy | Mean canopy height | Mean of individual relative canopy heights within segment |
| h_min_canopy | gtx/land_segments/canopy | Minimum canopy height | The minimum of relative individual canopy heights within segment |
| n_ca_photons | gtx/land_segments/canopy | Number of canopy photons | The number of photons classified as canopy within the segment |
| n_toc_photons | gtx/land_segments/canopy | Number of top of canopy photons | The number of photons classified as top of canopy within the segment |
| toc_roughness | gtx/land_segments/canopy | Top of canopy roughness | Standard deviation of the relative heights of all photons classified as top of canopy within the segment |
| asr | gtx/land_segments | Apparent surface reflectance | Apparent surface reflectance of the 100 m segment |
| n_te_photons | gtx/land_segments/terrain | Number of ground photons | The number of photons classified as terrain within the segment |
| CV | - | Coefficient of variation | canopy_openness / h_mean_canopy |
| sd_ratio | - | Standard deviation ratio | toc_roughness / canopy_openness |
| canopy_relief | - | Canopy relief ratio | (h_mean_canopy − h_min_canopy) / (h_max_canopy − h_min_canopy) |
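
The last three rows of the table are ratios derived from ATL08 fields rather than fields read directly from the product. A minimal Python sketch of those three formulas follows. The `CanopySegment` container, the `safe_ratio` helper, and the sample values are hypothetical and serve only as illustration; how the original study handled zero denominators is not stated, so returning `None` is an assumption.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CanopySegment:
    """Hypothetical container for the ATL08 canopy fields of one segment."""
    canopy_openness: float  # std. dev. of canopy photon heights
    toc_roughness: float    # std. dev. of top-of-canopy photon heights
    h_mean_canopy: float
    h_min_canopy: float
    h_max_canopy: float


def safe_ratio(numerator: float, denominator: float) -> Optional[float]:
    """Return numerator / denominator, or None for a zero denominator (assumed policy)."""
    return numerator / denominator if denominator != 0 else None


def derived_metrics(seg: CanopySegment) -> Dict[str, Optional[float]]:
    """Compute CV, sd_ratio and canopy_relief as defined in the table."""
    return {
        "CV": safe_ratio(seg.canopy_openness, seg.h_mean_canopy),
        "sd_ratio": safe_ratio(seg.toc_roughness, seg.canopy_openness),
        "canopy_relief": safe_ratio(
            seg.h_mean_canopy - seg.h_min_canopy,
            seg.h_max_canopy - seg.h_min_canopy,
        ),
    }


# Made-up segment values, for illustration only.
example = CanopySegment(
    canopy_openness=4.0,
    toc_roughness=2.0,
    h_mean_canopy=10.0,
    h_min_canopy=2.0,
    h_max_canopy=18.0,
)
metrics = derived_metrics(example)
```

With these made-up inputs, `CV` is 4/10, `sd_ratio` is 2/4, and `canopy_relief` is 8/16.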

| Sentinel−2 Band | Center Wavelength (µm) | Lower–Upper (µm) | Landsat 8 Band | Center Wavelength (µm) | Lower–Upper (µm) |
|---|---|---|---|---|---|
| - | - | - | 1 | 0.443 | 0.435–0.451 |
| 2 | 0.494 | 0.439–0.535 | 2 | 0.482 | 0.452–0.512 |
| 3 | 0.560 | 0.537–0.582 | 3 | 0.561 | 0.533–0.590 |
| 4 | 0.665 | 0.646–0.685 | 4 | 0.655 | 0.636–0.673 |
| 8 | 0.834 | 0.767–0.908 | 5 | 0.865 | 0.851–0.879 |
| 11 | 1.612 | 1.539–1.681 | 6 | 1.609 | 1.567–1.651 |
| 12 | 2.194 | 2.072–2.312 | 7 | 2.201 | 2.107–2.294 |
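
The near-infrared and shortwave-infrared bands in this table are the usual inputs to the Normalized Burn Ratio, NBR = (NIR − SWIR) / (NIR + SWIR), and to its pre-fire minus post-fire difference, dNBR. The Python sketch below illustrates that arithmetic. It assumes the NIR and SWIR inputs are Sentinel−2 bands 8 and 12 or Landsat 8 bands 5 and 7; that pairing is an assumption, not something the table states, and the function names and reflectance values are hypothetical.

```python
def nbr(nir: float, swir: float) -> float:
    """Normalized Burn Ratio from NIR and SWIR surface reflectance."""
    total = nir + swir
    if total == 0:
        raise ValueError("NIR + SWIR reflectance must be non-zero")
    return (nir - swir) / total


def dnbr(pre_nir: float, pre_swir: float, post_nir: float, post_swir: float) -> float:
    """Differenced NBR: pre-fire NBR minus post-fire NBR."""
    return nbr(pre_nir, pre_swir) - nbr(post_nir, post_swir)


# Made-up reflectances for one pixel, for illustration only.
pre_fire_nbr = nbr(0.40, 0.10)    # healthy vegetation: high NIR, low SWIR
post_fire_nbr = nbr(0.20, 0.30)   # burned surface: lower NIR, higher SWIR
change = dnbr(0.40, 0.10, 0.20, 0.30)
```

A positive `change` indicates a drop in NBR after the fire, which is the direction associated with burning.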

| dNBR Value | Severity Level |
|---|---|
| −0.5 ≤ dNBR < −0.25 | High Regrowth |
| −0.25 ≤ dNBR < −0.1 | Low Regrowth |
| −0.1 ≤ dNBR < 0.1 | Unburned |
| 0.1 ≤ dNBR < 0.27 | Low |
| 0.27 ≤ dNBR < 0.44 | Moderate-Low |
| 0.44 ≤ dNBR < 0.66 | Moderate-High |
| 0.66 ≤ dNBR < 1.33 | High |
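
The thresholds in this table amount to a lookup over half-open intervals. As an illustration, the Python sketch below copies the intervals from the table and returns the matching severity level. The names `DNBR_CLASSES` and `severity_level` are hypothetical, and returning `None` for values outside the tabulated range is an assumption, since the table does not say how such values are treated.

```python
from typing import List, Optional, Tuple

# (inclusive lower bound, exclusive upper bound, label), copied from the table.
DNBR_CLASSES: List[Tuple[float, float, str]] = [
    (-0.5, -0.25, "High Regrowth"),
    (-0.25, -0.1, "Low Regrowth"),
    (-0.1, 0.1, "Unburned"),
    (0.1, 0.27, "Low"),
    (0.27, 0.44, "Moderate-Low"),
    (0.44, 0.66, "Moderate-High"),
    (0.66, 1.33, "High"),
]


def severity_level(dnbr: float) -> Optional[str]:
    """Map a dNBR value to its severity level, or None if outside the table."""
    for lower, upper, label in DNBR_CLASSES:
        if lower <= dnbr < upper:
            return label
    return None


labels = [severity_level(v) for v in (-0.3, 0.0, 0.3, 0.7)]
```

Because each lower bound is inclusive and each upper bound exclusive, a value that sits exactly on a threshold, such as 0.27, falls into the higher class.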

| Sentinel−2 map | Reference: Unburned | Reference: Burned | R_Sum | U_Acc | Landsat 8 map | Reference: Unburned | Reference: Burned | R_Sum | U_Acc |
|---|---|---|---|---|---|---|---|---|---|
| Unburned | 95 | 18 | 113 | 84.07% | Unburned | 95 | 34 | 129 | 73.64% |
| Burned | 12 | 53 | 65 | 81.54% | Burned | 19 | 75 | 94 | 79.79% |
| C_Sum | 107 | 71 | 178 | | C_Sum | 114 | 109 | 223 | |
| P_Acc | 88.79% | 74.65% | | | P_Acc | 83.33% | 68.81% | | |
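
The U_Acc and P_Acc entries follow from the counts by row-wise and column-wise division. The Python sketch below shows one way to reproduce them for the Sentinel−2 half of the matrix above. The function name `accuracy_report` is hypothetical, and the overall accuracy it returns is computed here from the counts; it is not a figure listed in the table.

```python
from typing import Dict, List


def accuracy_report(matrix: List[List[int]]) -> Dict[str, object]:
    """Accuracy measures for a square confusion matrix.

    matrix[i][j] is the number of samples mapped as class i (row)
    whose reference class is j (column).
    """
    n = len(matrix)
    row_sums = [sum(row) for row in matrix]                                # R_Sum
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]     # C_Sum
    correct = [matrix[k][k] for k in range(n)]
    total = sum(row_sums)
    return {
        "overall": sum(correct) / total,
        "users": [correct[k] / row_sums[k] for k in range(n)],             # U_Acc
        "producers": [correct[k] / col_sums[k] for k in range(n)],         # P_Acc
    }


# Rows: mapped Unburned, mapped Burned; columns: reference Unburned, reference Burned.
sentinel2_counts = [[95, 18], [12, 53]]
report = accuracy_report(sentinel2_counts)
users_pct = [round(100 * u, 2) for u in report["users"]]
producers_pct = [round(100 * p, 2) for p in report["producers"]]
```

User's accuracy divides each diagonal count by its row sum (how often a mapped class is right), while producer's accuracy divides by the column sum (how often a reference class is found).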

| Sentinel−2 map | Reference: Unburned | Reference: Burned | R_Sum | U_Acc | Landsat 8 map | Reference: Unburned | Reference: Burned | R_Sum | U_Acc |
|---|---|---|---|---|---|---|---|---|---|
| Unburned | 85 | 24 | 109 | 77.98% | Unburned | 83 | 37 | 120 | 69.17% |
| Burned | 22 | 47 | 69 | 68.12% | Burned | 31 | 72 | 103 | 69.90% |
| C_Sum | 107 | 71 | 178 | | C_Sum | 114 | 109 | 223 | |
| P_Acc | 79.44% | 66.20% | | | P_Acc | 72.81% | 66.06% | | |

| Metrics for Random Forest | Total OOB Error | OOB of Unburned | OOB of Burned |
|---|---|---|---|
| Use 24 metrics | 18.24% | 13.52% | 24.60% |
| Remove asr | 22.80% | 18.23% | 28.96% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, M.; Popescu, S.; Malambo, L. Feasibility of Burned Area Mapping Based on ICESAT−2 Photon Counting Data. Remote Sens. 2020, 12, 24. https://doi.org/10.3390/rs12010024
