1. Introduction

Hyperspectral images (HSIs), which cover hundreds of contiguous spectral bands, have been widely used in many applications [1,2,3]. Due to limited spatial resolution, pixels in remote sensing HSIs often consist of mixtures of different classes of land cover (known as endmembers) [4,5]. This mixing phenomenon poses great challenges to HSI processing problems such as segmentation, classification, location estimation, and recognition [6,7]. Therefore, many researchers focus on hyperspectral unmixing, the aim of which is to estimate the endmembers and their abundances [8,9]. The major challenges include a limited availability of training samples, different classes of samples with similar spectral features, and varying spectral features within the same class of samples [10,11].

The linear spectral mixture model and the nonlinear spectral mixture model are the two approaches for addressing these difficulties and have been discussed in [12]. Many methods, including statistics-based methods, geometry-based methods, and nonnegative matrix factorization, have been used to solve the linear model, and they perform well in certain situations [13,14,15,16,17,18]. These linear spectral unmixing (LSU) algorithms usually involve endmember extraction and abundance estimation. However, because the linear model does not exactly match real scenarios, it cannot obtain appropriate unmixing results in most cases [2,19,20]. Therefore, it is necessary to develop nonlinear models for unmixing [21,22,23].

In recent years, artificial neural networks (ANNs) and deep learning (DL) have achieved great success in HSI processing, including unmixing. In [24], artificial neural network classification was discussed for mixed pixels with land cover composition, and both re-scaled activation levels and percentage cover were obtained. In [25], the auto-associative neural network (AANN) was proposed to compute pixel abundances by using the NN structure for nonlinear unmixing. This algorithm first extracts features from pixels and then, in a second stage, maps these features to abundances; however, the feature extraction process has to be trained separately, and spatial information is not used. A special case of the ANN, called the nonnegative sparse autoencoder (NNSAE) [26,27,28], was introduced to achieve hyperspectral unmixing for pixels with outliers and a low signal-to-noise ratio. In [29,30], pixel-based and three-dimensional (3D)-based methods utilizing a deep convolutional neural network (CNN) were presented to explore contextual features of HSI and then obtain the abundances of a pixel by using the multilayer perceptron (MLP) architecture. As the MLP can obtain rich, high-order features from the original hyperspectral images, DL algorithms can achieve excellent unmixing performance. However, they require a great number of training samples and parameters to be trained, which leads to long training durations.

Considering the above problems, the scattering transform structure, which is similar to the CNN structure [31] but uses known rather than learnt filters, shows potential for scenarios with limited or low-quality training data, and it has been effectively applied to feature extraction, classification, and recognition in audio and image processing [32,33,34,35]. According to [36], the scattering transform is computed iteratively with a cascade of unitary wavelet modulus operators to obtain deep invariances, and it delocalizes signal information into scattering decomposition paths. At the same time, each deformation layer does not change the content of the signals. Both deep neural networks and scattering transforms can provide high-order coefficients for stable and invariant feature representations [37]. Therefore, the scattering transform can be thought of as a simplified deep learning model. The main difference between the scattering transform and the CNN is described in [38]: the CNN learns its convolutional kernels through a training process, which can be computationally expensive, while the scattering transform uses fixed convolution functions. In addition, the use of fixed convolution functions within the scattering transform framework has a good theoretical foundation and shows better performance than the CNN when the training data are limited and of low quality. For hyperspectral image processing, as shown in [39], the three-dimensional scattering transform can provide rich descriptors of complex structures with fewer training samples, reducing the feature variability and keeping the local consistency of labels in the spatial neighborhood. Compared with the CNN approach in [40], the three-dimensional scattering transform is more effective for both spectral representation and classification.

In this paper, an end-to-end pixel-based scattering transform framework and a preliminary 3D-based scattering transform framework are proposed for remote sensing hyperspectral unmixing; the scattering transform framework for hyperspectral unmixing is called STFHU. In this approach, the scattering transform is first used to extract high-order features of the HSI, and then the k-nearest neighbor (k-NN) regressor is used to obtain the abundances. The main characteristics of the proposed framework are briefly summarized as follows:

- (1) A framework based on the scattering transform is employed for the first time to solve the hyperspectral unmixing problem. The STFHU framework extracts high-level information by using a multilayer network to decompose the hyperspectrum and then utilizes the k-NN regressor to relate the feature vectors to their abundances.

- (2) The proposed method can obtain equivalent performance using fewer training samples than CNN-based approaches. Meanwhile, the parameter setting of the scattering transform framework is less complicated than that of the CNN.

- (3) The scattering transform features are well suited to suppressing the effects of Gaussian white noise. A model trained on non-noisy data can also achieve satisfactory unmixing results when applied to noisy data.

The rest of this paper is organized as follows. The proposed scattering transform framework for hyperspectral unmixing is presented in Section 2. Section 3 and Section 4 describe and discuss the experimental results on simulated and real-world hyperspectral data and compare the proposed approach with state-of-the-art algorithms. Conclusions are drawn in Section 5.

2. Methodology

In this section, the scattering transform framework is proposed for hyperspectral image unmixing. The spectral mixture model f describes the vth pixel spectrum as a function of the endmember matrix and the abundance fractions of that pixel, plus an error vector. The abundance fractions collect the abundance of each kth endmember, and the endmember matrix holds the spectrum of every endmember over all bands of the hyperspectral data. Two constraints apply to the abundances: the abundance non-negativity constraint, which requires every abundance to be non-negative, and the sum-to-one constraint, which requires the abundances of a pixel to sum to one.
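For readers who want the model in symbols, one common way to write it is sketched below. The symbols are assumed notation chosen for illustration ($\mathbf{x}_v$ for the vth pixel spectrum, $\mathbf{E}$ for the endmember matrix, $\mathbf{a}_v$ for the abundance vector, $\mathbf{n}$ for the error vector, $K$ endmembers, $B$ bands) and may differ from the symbols used elsewhere in this paper.

```latex
\begin{aligned}
\mathbf{x}_v &= f(\mathbf{E}, \mathbf{a}_v) + \mathbf{n},
  \qquad \mathbf{x}_v, \mathbf{n} \in \mathbb{R}^{B}, \quad \mathbf{E} \in \mathbb{R}^{B \times K}, \\
a_{v,k} &\ge 0, \quad k = 1, \dots, K
  \qquad \text{(abundance non-negativity constraint)}, \\
\sum_{k=1}^{K} a_{v,k} &= 1
  \qquad \text{(sum-to-one constraint)}.
\end{aligned}
```

In the linear special case, $f(\mathbf{E}, \mathbf{a}_v) = \mathbf{E}\,\mathbf{a}_v$, so the pixel spectrum is a convex combination of the endmember spectra plus the error term.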

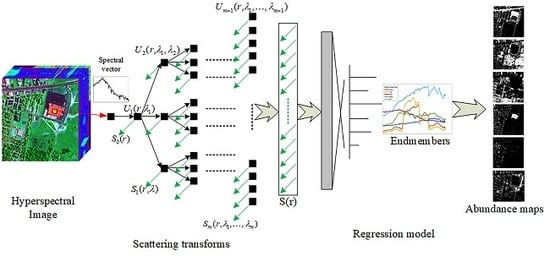

The proposed STFHU framework is illustrated in Figure 1, in which the notations are used to clearly illustrate the structure of the proposed method and are discussed in Section 2. The spectral vectors are processed by the scattering transform network, which aims at extracting a high-level feature representation of the spectrum. This is achieved by a cascade of transforms applied first to the input spectral vectors, which comprise a single pixel vector or the neighborhood of a pixel vector, and then sequentially to the outputs of each transform stage. Subsequently, the features are used as the input to the regression model, and the abundances of the spectrum are obtained. Scattering transforms are typically implemented using wavelet functions (known as wavelet scattering transforms), but other transforms are also possible (for example, the Fourier scattering transform).

2.1. Pixel-Based Wavelet Scattering Transform

Let a wavelet cluster be obtained by scaling the mother wavelet. A wavelet modulus operator is then defined, which combines the wavelet operation with the modulus operation. The first part of this operator is called the scattering coefficient and represents the main information in the low-frequency bands of the input signal, while the second part is the scattering propagator, which models the nonlinear wavelet transform. The scattering coefficient is the output of each order, and the scattering propagator is the input to the transform of the next order, which is used to regain the high-frequency information. The operator is applied to the spectral vector input, and a scaling function is used as the low-pass filter. Figure 1 shows the case J = 3.

In this way, the scattering transform constructs invariant, stable, and rich signal information by utilizing iterative wavelet decomposition, a modulus operation, and a low-pass filter. The zero-order scattering transform output is obtained by low-pass filtering the input spectrum, and the modulus of the wavelet coefficients of the input is used as the input to the first-order transform. The first-order scattering transform output and the corresponding input to the next order are obtained in the same way. Finally, the scattering transform coefficient outputs from zero order to mth order are collected into the scattering transform feature vector for the hyperspectral pixel. The main advantages of scattering transforms include translation invariance, local deformation stability, energy conservation, and strong anti-noise ability.
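The cascade described above (wavelet filtering, modulus, low-pass filtering, repeated per order) can be sketched as follows. This is a minimal illustration and not the authors' implementation: the Morlet-like band-pass filters, their centre frequencies and bandwidths, the Gaussian low-pass filter, the absence of subsampling, and all function names are assumptions made for the example.

```python
import numpy as np


def morlet_filter_bank(n, J):
    """Build J band-pass filters and one low-pass filter in the Fourier domain."""
    omega = 2 * np.pi * np.fft.fftfreq(n)  # angular frequency of each FFT bin
    psis = []
    for j in range(J):
        xi = 0.75 * np.pi / 2 ** j  # assumed centre frequency, halved at each scale
        sigma = xi / 2  # assumed bandwidth
        gabor = np.exp(-(omega - xi) ** 2 / (2 * sigma ** 2))
        # Subtract a scaled Gaussian so the filter has zero response at omega = 0.
        correction = np.exp(-xi ** 2 / (2 * sigma ** 2)) * np.exp(-omega ** 2 / (2 * sigma ** 2))
        psis.append(gabor - correction)
    sigma_phi = 0.75 * np.pi / 2 ** J
    phi = np.exp(-omega ** 2 / (2 * sigma_phi ** 2))  # Gaussian low-pass, equal to 1 at omega = 0
    return psis, phi


def _convolve(u_hat, filt_hat):
    """Circular convolution, given the FFT of the signal and of the filter."""
    return np.fft.ifft(u_hat * filt_hat)


def scattering_features(x, J=3, order=2):
    """Concatenate scattering coefficients of orders 0..order for a 1D spectrum."""
    x = np.asarray(x, dtype=float)
    psis, phi = morlet_filter_bank(x.size, J)
    # Each entry is (index of the last wavelet scale used, propagated signal U).
    layer = [(-1, x)]
    outputs = []
    for m in range(order + 1):
        next_layer = []
        for last_j, u in layer:
            u_hat = np.fft.fft(u)
            outputs.append(_convolve(u_hat, phi).real)  # scattering coefficient S = U * phi
            if m < order:
                for j in range(last_j + 1, J):  # propagate only to coarser scales
                    next_layer.append((j, np.abs(_convolve(u_hat, psis[j]))))
        layer = next_layer
    return np.concatenate(outputs)
```

With `J=3` and `order=2`, the sketch produces one zero-order, three first-order, and three second-order coefficient vectors, each as long as the input spectrum. A dedicated scattering library would normally be preferable to hand-built filters in practice.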

2.2. 3D-Based Scattering Transform

As the mixture in one pixel usually includes land cover around that pixel, spatial information is crucial for hyperspectral image processing and should be taken into account in spectral unmixing.

Consider the spectral vector at a given location in spatial coordinates. In order to extract the spectral–spatial information, a 3D filter is applied to the data cube of the hyperspectral image. Because the spectral information is the major information for unmixing, the filter is first assumed to be independent of the spectral index o, so that f(p, q, o) reduces to f(p, q). As shown in Figure 2, the filter covers a spatial window, and each pixel of the new data cube is a weighted combination of the neighbors of that pixel, with the weights given by the coefficients of the filter. In this paper, the average filter is used to construct the new cube.

Compared with the original data cube, the filtered cube includes both spectral information and spatial information. Therefore, one of the key factors in the 3D scattering transform framework for hyperspectral unmixing is the design of the filter function.
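The average filtering step can be sketched as below. This is an illustrative reading, not the authors' code: the 3 × 3 window size, the edge-replication padding at the image borders, the `(rows, cols, bands)` array layout, and the function name are all assumptions.

```python
import numpy as np


def spatial_average_filter(cube, size=3):
    """Average each pixel spectrum with its size x size spatial neighbours.

    cube has shape (rows, cols, bands); the same weights are used for every band,
    and size is expected to be odd.
    """
    cube = np.asarray(cube, dtype=float)
    pad = size // 2
    # Edge replication at the borders is an assumption of this sketch.
    padded = np.pad(cube, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    rows, cols, _ = cube.shape
    out = np.zeros_like(cube)
    # Accumulate the shifted copies of the cube that cover the window.
    for dp in range(size):
        for dq in range(size):
            out += padded[dp:dp + rows, dq:dq + cols, :]
    return out / size ** 2
```

Each spectrum of the filtered cube can then be passed to the same pixel-based scattering transform, which is how the spatial neighbourhood enters the features in this sketch.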

From Equations (2)–(10), the scattering transform coefficients of the filtered data cube can be retrieved in the same way as for a single pixel.

It can be seen that the scattering transform processing framework derives features in a similar way to a CNN processing framework.

The hierarchical example spectra of the scattering transform network are demonstrated in Figure 3 and in the “scattering transform” part of Figure 1. The original spectrum of one pixel in a hyperspectral image with 156 bands is shown in the first row, while the other rows illustrate the scattering transform coefficients of this spectrum. The coefficients in the second row, which are obtained by applying the low-pass filter, are very smooth and consistent with the original spectrum. In the third and fourth rows, the high-frequency information is separated out. For the fourth row, the high-pass scattering transform value is very low; therefore, only the three low-pass outputs are kept. Then, the scattering transform feature vector with 1092 dimensions, which comprises the seven scattering transform coefficient vectors in the above rows, is shown in the fifth row. Thus, the feature vector has richer, smoother information than the original spectrum, while maintaining a spectral envelope similar to that of the original spectrum.
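The figures quoted above (seven coefficient vectors, 1092 dimensions, 156 bands) are consistent with one simple reading, sketched here. The settings J = 3 and two scattering orders, the restriction to paths with strictly increasing scale, and the absence of subsampling are assumptions of this example.

```python
from itertools import combinations


def scattering_paths(J, order):
    """List scale paths of length 0..order with strictly increasing scale indices."""
    paths = []
    for m in range(order + 1):
        paths.extend(combinations(range(1, J + 1), m))
    return paths


paths = scattering_paths(J=3, order=2)
n_bands = 156
feature_dim = len(paths) * n_bands  # one coefficient vector of n_bands values per path
print(len(paths), feature_dim)  # prints: 7 1092
```

Under these assumptions there is one zero-order path, three first-order paths, and three second-order paths, which matches the row structure described for Figure 3.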

2.3. Regression Model of Scattering Transform Features

According to the scattering transform hyperspectral unmixing structure in Figure 1, the input is the spectral vector of a pixel, and the outputs are the abundances of the endmembers. After the pixel-based or 3D-based scattering transform results are obtained, the scattering coefficients of each pixel can be used as scattering transform features. Therefore, the mixture model can be rewritten in terms of these features instead of the original spectrum.

After that, the regression model is used to predict the abundances of the endmembers in each pixel from the feature vectors. For the regression model, k-nearest neighbor (k-NN) is applied to learn a regressor based on the scattering transform features of the training samples. k-NN predicts the values for the spectra in the test set based on how closely their features resemble those of the spectra in the training set. As the endmembers are often represented by the pure pixels in hyperspectral images, the operating principle of the k-NN regressor is illustrated in Figure 4. The inputs are scattering transform features, and the outputs are abundance maps.
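The regression step can be sketched as a plain k-NN regressor over feature vectors. This is a minimal illustration under stated assumptions: Euclidean distance, uniform weighting of the neighbours, the value of `k`, the toy data, and the function name are chosen for the example and are not taken from the paper.

```python
import math


def knn_regress(train_features, train_abundances, query, k=5):
    """Predict an abundance vector as the mean of the k nearest training abundances."""
    # Euclidean distance from the query feature vector to every training feature vector.
    distances = [math.dist(f, query) for f in train_features]
    nearest = sorted(range(len(distances)), key=distances.__getitem__)[:k]
    n_endmembers = len(train_abundances[0])
    return [
        sum(train_abundances[i][e] for i in nearest) / len(nearest)
        for e in range(n_endmembers)
    ]


# Toy example with two-dimensional features and two endmembers.
train_x = [[0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [0.9, 1.0]]
train_a = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0], [0.2, 0.8]]
pred = knn_regress(train_x, train_a, [0.05, 0.0], k=2)
```

Because the prediction is an average of training abundance vectors, it stays non-negative and sums to one whenever the training abundances do, so both abundance constraints are preserved without an extra projection step.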

4. Discussion

The above experimental results show that the proposed pixel-based STFHU method can obtain good performance in HSI unmixing. In this section, we discuss the robustness to noise, the effect of limited training samples, the computational complexity of the proposed method, and the preliminary 3D-based STFHU.

- (1) Robustness to noise

According to the results in Section 3, the method proposed in this paper is more robust to random noise interference in the remote sensing image and more adaptable to the environment, which helps to address the spectral variability caused by the variety of endmembers. Figure 19 illustrates the advantages of the scattering transform approach in extracting reliable features from noisy hyperspectral data. Figure 19a,c plot the original spectral data and the Noise2 spectral data, respectively, where the noise has a mathematical expectation of zero and a variance of 0.005. Figure 19b,d show the curves of the scattering transform features extracted from the original data and from Noise2, respectively. Comparing Figure 19b,d, it can be seen that the overall information carried by the noisy data and by the original data is highly consistent, which means that the transformed noisy spectral data reflect the information of the non-noisy component of the mixed signal well. Therefore, the scattering transform features help to reduce the effects of white noise, so that the accuracy of hyperspectral unmixing can be improved.
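A noisy test spectrum of the kind discussed above can be produced as sketched below. The function name, the fixed seed, and the choice to add the noise directly to the spectral values are assumptions of this example; only the zero mean and the variance of 0.005 come from the text.

```python
import random


def add_white_noise(spectrum, variance=0.005, seed=0):
    """Return a copy of the spectrum with zero-mean Gaussian noise of the given variance."""
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    std = variance ** 0.5  # random.gauss expects a standard deviation, not a variance
    return [value + rng.gauss(0.0, std) for value in spectrum]
```

A variance of 0.005 corresponds to a standard deviation of about 0.071, which gives a sense of the perturbation size relative to the spectral values.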

- (2) Effect of limited training samples

When the number of training samples is relatively high, it is easier to achieve excellent unmixing results. In real-world scenarios, however, training samples can be difficult to obtain, which makes it meaningful to compare performance when only limited numbers of training samples are available. Table 2 compares the RMSE values obtained when estimating the abundance maps of eight endmembers from non-noisy simulated hyperspectral data with three algorithms at training ratios of 10% and 5%. At both training ratios, the average RMSE of the CNN algorithm is higher than that of the other two methods, indicating that the CNN demands a large amount of training data. When 5% of the samples are used for training, the performance of the CNN is worse than at 10%. Moreover, compared with the ANN method, the algorithm proposed in this paper presents lower average RMSE and rms-AAD results at both training ratios. When the training ratio decreases from 10% to 5%, the average RMSE of STFHU increases by 20%, from 0.0340 to 0.0408, while the average RMSE of ANN increases by 43.47%, from 0.0421 to 0.0604.
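The two percentage increases quoted above follow directly from the reported average RMSE values, as this short check shows. The helper name is hypothetical; the four RMSE values are the ones given in the text.

```python
def relative_increase(before, after):
    """Percentage increase from before to after."""
    return (after - before) / before * 100.0


stfhu = relative_increase(0.0340, 0.0408)
ann = relative_increase(0.0421, 0.0604)
print(f"STFHU: {stfhu:.2f}%  ANN: {ann:.2f}%")  # prints: STFHU: 20.00%  ANN: 43.47%
```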

In addition, from the results for the 10% and 5% training ratios in Table 3, the proposed method achieves an average RMSE of 0.0790 at a training ratio of 5%, which is better than the CNN average RMSE of 0.0818 at a training ratio of 10%. It is also better than the 0.1165 of LSU and the 0.1415 of ANN obtained using 50% of the samples for training. These results demonstrate the unmixing ability of the proposed method when the proportion of training samples is small: it achieves equivalent or better results than the comparison approaches that use 50% of the samples for training.

This indicates that, when training samples are limited, the proposed approach shows clearer advantages in hyperspectral unmixing than the other comparison methods.

- (3) Discussion of computational complexity

Learning-based regression models must be trained on a considerable number of samples, whereas the proposed method only needs to compute the feature coefficients. Therefore, this framework can reduce the time cost. In this part, we mainly discuss the computational complexity of STFHU, LSU, ANN, and CNN.

The cost of computing the scattering coefficients is given in [49], and the complexity of k-NN is given in [50] in terms of n, the cardinality of the training set, and d, the dimension of each sample. As the proposed STFHU is composed of scattering transforms and k-NN regression, its total computational complexity combines these two costs.

The computational complexity of least square regression is given in [51].

The time complexity of the CNN is obtained by summing, over the D convolution layers, the product of the squared feature-map side length m, the squared convolution-kernel side length, the number of input channels, and the number of output channels of each layer. According to [52], the resulting computational complexity of the CNN is the same as the complexity of the ANN method.
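The per-layer quantities listed above correspond to a commonly used estimate of the time cost of convolution layers, which can be sketched as a simple count. The class and function names and the example layer sizes are hypothetical, and the estimate ignores biases, pooling, and fully connected layers.

```python
from dataclasses import dataclass


@dataclass
class ConvLayer:
    feature_map_side: int  # m: side length of the output feature map
    kernel_side: int       # side length of the convolution kernel
    in_channels: int       # number of input channels
    out_channels: int      # number of output channels


def conv_multiply_adds(layers):
    """Sum m^2 * k^2 * C_in * C_out over all convolution layers."""
    return sum(
        layer.feature_map_side ** 2
        * layer.kernel_side ** 2
        * layer.in_channels
        * layer.out_channels
        for layer in layers
    )


# Hypothetical two-layer network, for illustration only.
layers = [ConvLayer(8, 3, 1, 4), ConvLayer(4, 3, 4, 8)]
print(conv_multiply_adds(layers))  # prints: 6912
```

The count grows with every layer and channel, and it has to be paid for every training sample in every epoch, which is the cost that a transform with fixed filters avoids.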

Therefore, it can be seen that the computational complexity of STFHU is much smaller than that of the least square regression and that of the CNN and ANN. Hence, the proposed method is significantly more computationally efficient than the comparative methods when using the same amount of training samples.

Furthermore, using the CNN requires modification of the network structure and parameters, such as the convolution layers, pooling layers, learning rate, and epochs, to optimize the performance for different training datasets, resulting in time-consuming training phases. In comparison, the proposed algorithm requires fewer parameters, including J and m, and the k-NN regressor achieves satisfactory performance with default settings, while different settings do not lead to significant changes in performance. This means that STFHU is less dependent on parameter choices. Thus, the method proposed in this paper shows advantages in simplifying the network structure and increasing the efficiency of computation.

- (4) Discussion of the preliminary 3D-based STFHU results

3D-based approaches have been shown to be useful for HSI processing and are preliminarily taken into account in this paper. Table 6 shows the average RMSE (Avg-RMSE) and rms-AAD results of unmixing with both the pixel-based and the 3D-based scattering transform approaches, using small amounts of urban image samples for training. It can be observed that, for the same method, the average RMSE and rms-AAD of the unmixing results become larger as the training ratio decreases. To address the problems caused by using a small proportion of samples for training, the 3D-based scattering transform is proposed, especially for real-world hyperspectral unmixing. Considering the effects of the environment on a single-pixel spectral curve, the 3D spatial information can enrich the information included in the curve, which makes it practical for real-world applications. In Table 6, the average RMSE of the 3D-based scattering transform at a training ratio of 0.5% is 0.1584, while the value for the pixel-based approach is 0.2148, which is larger than 0.1912, the result of the 3D-based method at a training ratio of 0.3%. Likewise, the 3D-based approach at a training ratio of 0.5% achieves an rms-AAD equivalent to that of the pixel-based method at a training ratio of 2%. The aforementioned results verify that the 3D spatial information can provide effective cues when using low training ratios.

In future research, this spatial information will be combined with the scattering transform features to develop new algorithms that further improve the performance of hyperspectral unmixing.

5. Conclusions

In this paper, a novel scattering transform framework is proposed to improve the accuracy of hyperspectral unmixing. The STFHU method has a multilayer structure for extracting features, which is similar to the structure of the CNN and can increase the accuracy of describing the desired features. The pixel-based and 3D-based scattering transforms are well suited to hyperspectral unmixing, since they not only capture sufficient information in the extracted features but also suppress the interference of noise. In particular, this approach is robust to Gaussian white noise and to small numbers of training samples. These scattering transform techniques are combined with the k-NN regressor to form an end-to-end framework in which a deep scattering network extracts the features and the regressor estimates the abundances. Compared with the CNN, the proposed solution has a clear structure and few parameters, leading to better viability and computational efficiency. Experimental results based on simulated data and three real-world hyperspectral remote sensing image datasets provide ample evidence of the robustness and adaptiveness of the proposed approach. Under the interference of Gaussian white noise with a variance of 0.005, the scattering transform features help to produce abundance map predictions closer to the ground truth, and thus better unmixing results than the other comparison approaches. Moreover, based on identical data for training and testing, the proposed algorithm achieves the lowest RMSE and rms-AAD among all investigated methods, and it can also accurately complete the unmixing task when using 5% of the samples for training. In addition, this paper presents a preliminary verification of the performance of the 3D-based scattering transform with a small number of samples, which is better than that of the pixel-based approach.

Although the proposed scattering transform framework can obtain desirable performance for hyperspectral unmixing, there is still a need to further improve the utilization of spatial correlation. In the preliminary 3D-based framework of this paper, the spatial information is computed first, and the scattering transform is then applied mainly to the spectral information. In future research, we plan to investigate a fully joint spectral–spatial scattering transform framework for hyperspectral image unmixing.