1. Scenario and Motivations

For several decades, satellite systems for Earth observation (EO) have acquired a huge number of images of crucial importance for many human tasks. These data are used for visual interpretation or as intermediate products for subsequent processing and information extraction. The growing demand for very high resolution products has pushed toward the design of high-performance acquisition devices. Nevertheless, very fine spatial and spectral resolutions cannot be achieved with a single instrument [1]. This is due to physical constraints on the number of photons per pixel per band and to sensor noise, both photon and electronic, which together determine the signal-to-noise ratio (SNR). Image fusion techniques therefore represent a viable solution to this issue: they fuse broad-spectrum images with higher spatial resolution and multichannel data with lower spatial resolution.

The word pansharpening usually refers to enhancing the spatial resolution of a multispectral (MS) image by exploiting a panchromatic (Pan) image that has higher spatial resolution and was acquired almost simultaneously. Pansharpening methods thus leverage the complementary resolutions of the MS and Pan images to synthesize a fused product with the same spectral resolution as the MS image and the same spatial resolution as the Pan data [1,2]. Generally speaking, pansharpening increases the spatial resolution of the MS image at the price of introducing some spectral distortion; nevertheless, the fused product is often useful for visual or automated analysis tasks. A Pan image may be unavailable while MS and hyperspectral (HS) bands with different resolutions are, e.g., for Sentinel-2 [3]. In that case, a sharpening image can be synthesized from the available higher spatial resolution MS/HS bands by following the so-called hypersharpening paradigm [4,5].

New achievements in pansharpening often exploit the concept of super-resolution [6]. In spite of their mathematical elegance, many of these methods offer only small performance gains over the state of the art [7,8]. These gains come at the price of a strongly increased computational cost [9] and a heavy parameter-tuning phase. Super-resolution or, more generally, variational optimization-based methods, either model-based [10,11] or not [12], usually suffer from a higher computational burden than traditional approaches. For instance, image fusion techniques based on deep learning architectures require huge amounts of images to train the underlying model. This leads to a high computational burden and calls for special hardware (e.g., GPUs) to train the networks for the specific task. Furthermore, the performance of super-resolution methods often depends on an optimization of the parameters, which is frequently dataset-dependent. For deep learning-based approaches, it depends instead on a proper selection of the training set. These methods are referred to as variational optimization-based (VO) [8]. Despite their high performance, comparing against them is considered out of the scope of this paper because of their limited reproducibility: even starting from the same code, two distinct users can obtain different fused products from the same data.

After a first generation of methods, unconstrained by objective quality evaluation issues [13], the current second generation of pansharpening methods was established ten years later [2]. These methods rely on approaches that all share the same steps:

(i) the MS bands are superimposed onto the Pan image, i.e., interpolated and co-registered to it;

(ii) the spatial details are extracted from the Pan image;

(iii) the details are injected into the original MS image according to a proper injection model.

The detail extraction can be spectral-based, originally known as component substitution (CS), or spatial-based, which gives rise to the multi-resolution analysis (MRA) class [14]. The Pan image is usually histogram-matched beforehand. This is done by equalizing, through a linear model, the mean and standard deviation of its low-pass spatial version to those of the spectral component to be replaced. That component is the intensity for CS and the MS band to be sharpened for MRA [15,16,17].

The injection model governs the combination of the MS image with the spatial details extracted from the Pan image. Two widespread models are:

the projective model, which is derived from the Gram-Schmidt (GS) orthogonalization procedure and represents the basis of many state-of-the-art pansharpening approaches, such as GS spectral sharpening [18], context-based decision (CBD) [19], and the regression-based injection model [20]. This model can be either global, as for GS, or local [21], as for CBD [19];

the multiplicative (or contrast-based) model. High-pass modulation (HPM), the Brovey transform (BT) [1], smoothing filter-based intensity modulation (SFIM) [22], and the spectral distortion minimizing (SDM) injection model [23] are all based on this injection model. This model is inherently local, since the injection gain changes pixel by pixel. Furthermore, it is at the basis of the raw data fusion of physically heterogeneous data, such as MS and synthetic aperture radar (SAR) images [24,25].

Focusing on the multiplicative injection model, SFIM [22] provided its first interpretation in terms of the radiative transfer model that rules the acquisition of an MS image from a real-world scene [26]. In this interpretation, a low spatial resolution spectral reflectance is first estimated from the MS bands and a low-pass spatial version of the Pan image. It is then sharpened through multiplication by a high spatial resolution solar irradiance, represented by the Pan image. In the last few years, some authors [28,29] have also taken into account the atmospheric path radiance of the MS bands [27]. Following the radiative transfer model [26], the atmospheric path radiance, which appears as haze in a true-color image, should be estimated and subtracted from each band before the spatial modulation is performed. Recently, haze-corrected versions of contrast-based CS and MRA pansharpening methods have been derived, and many methods for estimating the path radiances of the MS bands have been tested [30]. Both image-based and model-based methods have been considered to this aim. It has been proven that image-based haze-estimation approaches can reach the same fusion performance as the theoretical modeling of the atmosphere and as an exhaustive search performed at degraded spatial scale [29].

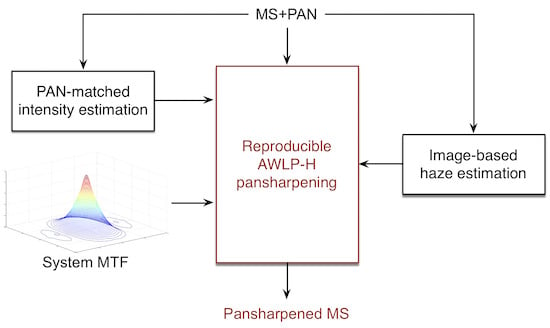

In this paper, the most popular hybrid (i.e., CS followed by MRA) pansharpening method, namely the additive wavelet luminance proportional (AWLP) method [31], has been revisited and optimized. The optimization follows the same criteria used for individually optimizing CS and MRA fusion methods [2] and exploits the haze correction for the multiplicative injection model peculiar to AWLP. The result is an extremely fast and high-performing method that provides fully reproducible results without manual adjustments, thanks to optimizations implicitly embedded in its flowchart. A comparative assessment was carried out on IKONOS, QuickBird, and WorldView-2 datasets against the baseline AWLP and the most powerful methods of the Pansharpening Toolbox [32]. It highlights that the revised AWLP represents the epitome of second-generation methods. It also exhibits all the favorable characteristics for the routine processing of large image datasets and for a fair and accurate benchmarking of newly developed pansharpening methods.

The remainder of this paper is organized as follows. Section 2 concisely illustrates the spectral and spatial models of the imaging instruments and the radiative transfer model of imaging through the atmosphere. In Section 3, CS, MRA, and hybrid methods are reviewed, with a focus on AWLP. An instrument-optimized, haze-corrected version of AWLP is proposed in Section 4. The procedures for the assessment of pansharpened images, both at full resolution and at reduced resolution, are reviewed in Section 5. Section 6 is devoted to the experimental results and comparisons on datasets from three different satellite instruments. Finally, conclusions are drawn in Section 7.

3. A Review of Pansharpening Methods

The second-generation pansharpening methods can be divided into CS, MRA, and hybrid methods [14]. The only difference between CS and MRA is the way the Pan details are extracted, which strongly characterizes the fused images [2,44]. Hybrid methods are the cascade of a CS and an MRA, either CS followed by MRA or, more rarely, MRA followed by CS [15]. The notation used in this paper is illustrated first. Then, a brief review of CS and MRA introduces hybrid methods, with a focus on AWLP.

3.1. Notation

The math notation is as follows. Vectors are in bold lowercase (e.g., $\mathbf{x}$), with the i-th element denoted as $x_i$. 2-D and 3-D arrays are indicated in bold uppercase (e.g., $\mathbf{X}$). An MS image $\mathbf{MS}$ is a 3-D array composed of N bands indexed by the subscript k; hence, $\mathbf{MS}_k$ denotes the k-th spectral band of $\mathbf{MS}$. The Pan image is indicated as $\mathbf{P}$. The pansharpened MS image and the upsampled MS image are indicated as $\widehat{\mathbf{MS}}$ and $\widetilde{\mathbf{MS}}$, respectively. Matrix product and ratio are intended as element-wise operations.

3.2. CS

The component substitution (CS) family relies on a forward transformation of the MS data that separates the spatial component from the spectral components. In this new domain, the Pan image can safely replace the spatial component. The spatially enhanced MS product is then obtained through the backward transformation. When a linear transformation with a single substituted component is adopted, this process can be summarized by the following fusion rule, which leads to fast implementations of CS methods [1,2]:

$$\widehat{\mathbf{MS}}_k = \widetilde{\mathbf{MS}}_k + \mathbf{G}_k \cdot \left(\mathbf{P} - \mathbf{I}_L\right), \qquad k = 1, \dots, N, \tag{2}$$

where k indicates the spectral band and $\mathbf{G}_k$ are the injection gains, which can be pixel- and band-dependent. The term $\mathbf{I}_L$, often called the intensity component, is given by

$$\mathbf{I}_L = \sum_{i=1}^{N} w_i \, \widetilde{\mathbf{MS}}_i, \tag{3}$$

where the $w_i$ are spectral weights. They are optimally estimated by multivariate linear regression between the MS spectral bands and a low-pass spatial version of the Pan image, $\mathbf{P}_L$ [18]. Finally, $\mathbf{P}$ is histogram-matched to $\mathbf{I}_L$, i.e.,

$$\mathbf{P} \leftarrow \left(\mathbf{P} - \mu(\mathbf{P})\right) \cdot \frac{\sigma(\mathbf{I}_L)}{\sigma(\mathbf{P}_L)} + \mu(\mathbf{I}_L), \tag{4}$$

where $\mu(\cdot)$ and $\sigma(\cdot)$ indicate the mean and standard deviation operators, respectively [15]. It is worth remarking that CS methods are robust to aliasing and spatial misalignments [45].
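The preliminary CS steps in Equations (3) and (4) can be sketched in NumPy as follows. This is a minimal sketch, not the authors' code. It assumes that the MS bands are already upsampled to the Pan grid and stored with shape (N, H, W), that $\mathbf{P}_L$ is supplied by the caller, and that the regression has no constant term. The function names are hypothetical.

```python
import numpy as np


def regression_weights(ms_up, pan_low):
    """Least-squares weights w_i such that sum_i w_i * MS_i approximates P_L (Eq. 3).

    ms_up: upsampled MS image, shape (N, H, W); pan_low: low-pass Pan, shape (H, W).
    Assumption of this sketch: no constant (bias) term in the regression.
    """
    n_bands = ms_up.shape[0]
    design = ms_up.reshape(n_bands, -1).T  # one row per pixel, one column per band
    w, *_ = np.linalg.lstsq(design, pan_low.ravel(), rcond=None)
    return w


def intensity(ms_up, w):
    """Eq. (3): I_L as the weighted sum of the upsampled MS bands."""
    return np.tensordot(w, ms_up, axes=1)


def histogram_match(pan, pan_low, target):
    """Eq. (4): match the Pan mean and std (std taken on its low-pass version) to `target`."""
    return (pan - pan.mean()) * (target.std() / pan_low.std()) + target.mean()


# Usage on synthetic data: P_L is built as an exact mix of bands 0 and 2.
rng = np.random.default_rng(0)
ms = rng.random((3, 8, 8))
pan_low = 0.3 * ms[0] + 0.7 * ms[2]
w = regression_weights(ms, pan_low)
print(np.round(w, 6))  # approximately [0.3, 0.0, 0.7]
```

Because the synthetic $\mathbf{P}_L$ is an exact linear mix of the bands, the regression recovers the mixing weights. On real data, the fit is only approximate.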

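Regarding the injection gains in Equation (2), different rules have been proposed in the literature. Spatially uniform gains for each band, $\mathbf{G}_k = g_k$, $k = 1, \dots, N$, are calculated as follows [18]:

$$g_k = \frac{\mathrm{cov}\left(\mathbf{I}_L, \widetilde{\mathbf{MS}}_k\right)}{\mathrm{var}\left(\mathbf{I}_L\right)}, \tag{5}$$

where $\mathrm{cov}(\cdot,\cdot)$ and $\mathrm{var}(\cdot)$ are the covariance and variance operators, respectively. Instead, the multiplicative (or contrast-based) injection rule defines space-varying injection gains for each band, $k = 1, \dots, N$, as

$$\mathbf{G}_k = \frac{\widetilde{\mathbf{MS}}_k}{\mathbf{I}_L}. \tag{6}$$

In this latter case, substituting Equation (6) into Equation (2), the fusion rule for each band becomes

$$\widehat{\mathbf{MS}}_k = \widetilde{\mathbf{MS}}_k \cdot \frac{\mathbf{P}}{\mathbf{I}_L}. \tag{7}$$

We wish to highlight that the multiplicative injection model is perhaps the only means of merging heterogeneous datasets, such as optical and synthetic aperture radar (SAR) data [25].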
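The two injection rules can be contrasted in a short NumPy sketch. It assumes co-registered arrays: shape (N, H, W) for the upsampled MS bands and (H, W) for the histogram-matched Pan and the intensity. The helper names and the small `eps` guard against division by zero are our own additions, not part of the original formulation.

```python
import numpy as np


def gs_gains(ms_up, intensity):
    """Eq. (5): one spatially uniform gain per band, g_k = cov(I_L, MS_k) / var(I_L)."""
    ic = intensity - intensity.mean()
    var_i = np.mean(ic ** 2)
    return np.array([np.mean(ic * (band - band.mean())) / var_i for band in ms_up])


def cs_fuse(ms_up, pan_hm, intensity, gains):
    """Eq. (2): MS_k + G_k * (P - I_L); each gain may be a scalar or a per-pixel array."""
    detail = pan_hm - intensity
    return np.stack([band + g * detail for band, g in zip(ms_up, gains)])


def cs_multiplicative(ms_up, pan_hm, intensity, eps=1e-12):
    """Eqs. (6)-(7): with G_k = MS_k / I_L, Eq. (2) reduces to MS_k * P / I_L."""
    gains = [band / (intensity + eps) for band in ms_up]
    return cs_fuse(ms_up, pan_hm, intensity, gains)


rng = np.random.default_rng(1)
ms = rng.random((4, 6, 6)) + 0.5            # strictly positive synthetic bands
I = ms.mean(axis=0)                         # stand-in for the MMSE intensity
P = I + 0.1 * rng.standard_normal((6, 6))   # stand-in for the histogram-matched Pan
fused_gs = cs_fuse(ms, P, I, gs_gains(ms, I))
fused_mult = cs_multiplicative(ms, P, I)
```

Routing both rules through the same additive form makes it explicit that Equation (7) is just Equation (2) with the gains of Equation (6).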

3.3. MRA

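The multi-resolution analysis (MRA) approaches are based on the injection of the high-pass spatial details of the Pan image into the upsampled MS image [14]. The general MRA fusion rule for each $k = 1, \dots, N$ is

$$\widehat{\mathbf{MS}}_k = \widetilde{\mathbf{MS}}_k + \mathbf{G}_k \cdot \left(\mathbf{P}_k - \mathbf{P}_{L,k}\right), \tag{8}$$

where $\mathbf{P}_k$ is the Pan image preliminarily histogram-matched to the upsampled k-th MS band [15],

$$\mathbf{P}_k = \left(\mathbf{P} - \mu(\mathbf{P})\right) \cdot \frac{\sigma(\widetilde{\mathbf{MS}}_k)}{\sigma(\mathbf{P}_L)} + \mu(\widetilde{\mathbf{MS}}_k), \tag{9}$$

and $\mathbf{P}_{L,k}$ is the low-pass filtered version of $\mathbf{P}_k$.

It is worth pointing out that the different MRA approaches are uniquely characterized by (i) the low-pass filter used to obtain the $\mathbf{P}_{L,k}$ image, (ii) the presence or absence of a decimator/interpolator pair [14], and (iii) the set of injection coefficients. Again, these coefficients can be either spatially uniform, $\mathbf{G}_k = g_k$, or space-varying, i.e., $\mathbf{G}_k$ changes pixel by pixel. Moreover, MRA methods are robust to temporal misalignments [46].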
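A minimal NumPy sketch of Equations (8) and (9) with spatially uniform gains follows. The 3-tap box filter, the symmetric boundary extension, and the helper names are illustrative assumptions only. Actual MRA methods use their own (e.g., MTF-matched) filters.

```python
import numpy as np


def sep_filter(img, kernel):
    """Separable 2-D low-pass filtering with symmetric boundary extension (odd-length kernel)."""
    k = np.asarray(kernel, dtype=float)
    r = len(k) // 2
    padded = np.pad(img, r, mode="symmetric")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def hm_to_band(pan, pan_low, band):
    """Eq. (9): match the Pan mean/std (std of its low-pass version) to one upsampled MS band."""
    return (pan - pan.mean()) * (band.std() / pan_low.std()) + band.mean()


def mra_fuse(ms_up, pan, kernel, gains=None):
    """Eq. (8) with spatially uniform gains (all equal to 1 by default)."""
    pan_low = sep_filter(pan, kernel)
    fused = []
    for k, band in enumerate(ms_up):
        p_k = hm_to_band(pan, pan_low, band)
        p_lk = sep_filter(p_k, kernel)  # P_{L,k}
        g = 1.0 if gains is None else gains[k]
        fused.append(band + g * (p_k - p_lk))
    return np.stack(fused)


box3 = np.ones(3) / 3.0  # hypothetical low-pass kernel, chosen only for illustration
rng = np.random.default_rng(2)
ms = rng.random((3, 16, 16)) + 1.0
pan = rng.random((16, 16))
fused = mra_fuse(ms, pan, box3)
```

Swapping `kernel` or `gains` is all it takes to move between MRA variants, which mirrors points (i) and (iii) above.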

The multiplicative version of MRA methods, for each $k = 1, \dots, N$, is

$$\widehat{\mathbf{MS}}_k = \widetilde{\mathbf{MS}}_k \cdot \frac{\mathbf{P}_k}{\mathbf{P}_{L,k}}. \tag{10}$$

Equation (10) accommodates high-pass modulation (HPM) [1], smoothing filter-based intensity modulation (SFIM) [22], and the spectral distortion minimizing (SDM) technique [23]. These methods differ from one another in the spatial filtering used to obtain $\mathbf{P}_{L,k}$.
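Equation (10) can be sketched in the same way. The filter passed as `kernel` is the only thing that distinguishes the HPM/SFIM/SDM-like variants. The 5-tap box filter in the test is a hypothetical stand-in, not the filter of any specific method, and the `eps` guard is our own addition.

```python
import numpy as np


def sep_filter(img, kernel):
    """Separable 2-D filtering with symmetric boundary extension (odd-length kernel)."""
    k = np.asarray(kernel, dtype=float)
    r = len(k) // 2
    padded = np.pad(img, r, mode="symmetric")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def hm_to_band(pan, pan_low, band):
    """Eq. (9): Pan histogram-matched to one upsampled MS band."""
    return (pan - pan.mean()) * (band.std() / pan_low.std()) + band.mean()


def mra_multiplicative(ms_up, pan, kernel, eps=1e-12):
    """Eq. (10): MS_k * P_k / P_{L,k}, i.e., Eq. (8) with gains MS_k / P_{L,k}."""
    pan_low = sep_filter(pan, kernel)
    out = []
    for band in ms_up:
        p_k = hm_to_band(pan, pan_low, band)
        p_lk = sep_filter(p_k, kernel)
        out.append(band * p_k / (p_lk + eps))
    return np.stack(out)
```

With an identity kernel, $\mathbf{P}_{L,k} = \mathbf{P}_k$ and the MS bands pass through unchanged, which is a quick sanity check of the modulation form.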

3.4. Hybrid Methods

Hybrid methods have also been presented in the literature. In this case, the spectral transformation of CS methods is cascaded with MRA to extract the spatial details, which leads to the so-called hybrid methods [47,48]. The most widespread hybrid approach using a multiplicative injection model is AWLP [31]. Its fusion rule for each $k = 1, \dots, N$ is

$$\widehat{\mathbf{MS}}_k = \widetilde{\mathbf{MS}}_k + \frac{\widetilde{\mathbf{MS}}_k}{\frac{1}{N}\sum_{i=1}^{N}\widetilde{\mathbf{MS}}_i} \cdot \left(\mathbf{P} - \mathbf{P}_L\right), \tag{11}$$

in which $\mathbf{P}$ is histogram-matched as in Equation (4) and the low-pass filter is a separable 5×5 spline kernel. Note that Equation (11) cannot be written as a product, unlike Equations (7) and (10).

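According to a recent study [14], hybrid methods are equivalent either to spectral or to spatial methods, depending on whether the injected detail is $\mathbf{P} - \mathbf{I}_L$ or $\mathbf{P} - \mathbf{P}_L$. Thus, AWLP is equivalent to an MRA method, and its histogram matching should follow Equation (9) instead of Equation (4).

The correct histogram matching was introduced in [15] and found to be beneficial for performance:

$$\widehat{\mathbf{MS}}_k = \widetilde{\mathbf{MS}}_k + \frac{\widetilde{\mathbf{MS}}_k}{\frac{1}{N}\sum_{i=1}^{N}\widetilde{\mathbf{MS}}_i} \cdot \left(\mathbf{P}_k - \mathbf{P}_{L,k}\right). \tag{12}$$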

In Section 4, the improved AWLP of Equation (12) will be further enhanced by means of a haze correction, analogously to what has been done for pure CS and MRA methods [29,30]. To do so, the intensity component is computed as the minimum mean square error (MMSE) intensity [18], i.e., with regression weights. This differs from the plain band average used in the original publication [31].
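The difference between Equations (11) and (12) lies only in the histogram matching. The following NumPy sketch makes this difference concrete. We assume that the 5×5 spline kernel is the separable B3 cubic spline [1, 4, 6, 4, 1]/16 of the "à trous" wavelet transform. That choice, the symmetric boundary handling, the matching of Pan to the band average in the first variant, and the function names are all assumptions of this sketch.

```python
import numpy as np

# Assumed separable 5x5 B3 cubic-spline kernel ("a trous" wavelet transform).
B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def sep_filter(img, kernel):
    """Separable 2-D filtering with symmetric boundary extension (odd-length kernel)."""
    k = np.asarray(kernel, dtype=float)
    r = len(k) // 2
    padded = np.pad(img, r, mode="symmetric")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def awlp_original(ms_up, pan):
    """Eq. (11): Pan matched to the band-average intensity, detail P - P_L."""
    avg = ms_up.mean(axis=0)  # (1/N) * sum_i MS_i
    pan_low = sep_filter(pan, B3)
    p = (pan - pan.mean()) * (avg.std() / pan_low.std()) + avg.mean()
    detail = p - sep_filter(p, B3)
    return ms_up + ms_up / avg * detail


def awlp_hm(ms_up, pan):
    """Eq. (12): same ratio, but Pan histogram-matched to each band as in Eq. (9)."""
    avg = ms_up.mean(axis=0)
    pan_low = sep_filter(pan, B3)
    out = []
    for band in ms_up:
        p_k = (pan - pan.mean()) * (band.std() / pan_low.std()) + band.mean()
        out.append(band + band / avg * (p_k - sep_filter(p_k, B3)))
    return np.stack(out)
```

When all bands are identical, the two variants coincide. They diverge as soon as the bands have different statistics, because Equation (12) scales the Pan detail to each band separately.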

4. AWLP Pansharpening with Haze Correction

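Let us start by recalling some recent achievements regarding the haze correction of multiplicative CS methods [29]. Particularizing the general CS rule of Equation (2) with the injection gains of Equation (6) yields

$$\widehat{\mathbf{MS}}_k = \widetilde{\mathbf{MS}}_k \cdot \frac{\mathbf{P}}{\mathbf{I}_L}, \tag{13}$$

whose haze-corrected version is

$$\widehat{\mathbf{MS}}_k = \left(\widetilde{\mathbf{MS}}_k - H_k\right) \cdot \frac{\mathbf{P} - H_P}{\mathbf{I}_L - H_P} + H_k, \tag{14}$$

where $H_k$ denotes the constant haze value of the k-th MS band, $\mathbf{P}$ is given by Equation (4), and $\mathbf{I}_L$ is the MMSE intensity [18]. The term $H_P$ is the haze of both the MMSE intensity and the Pan image. Since $\mathbf{I}_L$ is a linear combination of the MS bands and $\mathbf{P}$ is histogram-matched to $\mathbf{I}_L$, this term is

$$H_P = \sum_{i=1}^{N} w_i \, H_i. \tag{15}$$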

This correction was derived in [29] from the interpretation of multiplicative pansharpening in terms of the radiative transfer model of Equation (1). It is worth remarking that the weights $w_i$ must be estimated via linear multivariate regression [18], so that $\mathbf{I}_L$ is as close as possible to $\mathbf{P}_L$.
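Equations (14) and (15) translate almost literally into NumPy, as the minimal sketch below shows. It assumes one scalar haze value per band and regression weights from Equation (3). The `eps` guard and the function name are hypothetical additions.

```python
import numpy as np


def haze_corrected_cs(ms_up, pan_hm, intensity, w, haze, eps=1e-12):
    """Eqs. (14)-(15): multiplicative CS fusion with per-band path-radiance (haze) offsets.

    ms_up: (N, H, W) upsampled MS; pan_hm: Pan matched to I_L (Eq. 4);
    intensity: MMSE intensity I_L (Eq. 3); w: regression weights; haze: H_k per band.
    """
    h_p = float(np.dot(w, haze))                      # Eq. (15): H_P = sum_i w_i H_i
    ratio = (pan_hm - h_p) / (intensity - h_p + eps)  # modulation computed on haze-free terms
    return np.stack([(band - h) * ratio + h for band, h in zip(ms_up, haze)])


rng = np.random.default_rng(5)
ms = rng.random((3, 8, 8)) + 1.0
w = np.array([0.2, 0.3, 0.5])
I = np.tensordot(w, ms, axes=1)
P = I * (1.0 + 0.1 * rng.standard_normal((8, 8)))
haze = ms.reshape(3, -1).min(axis=1)
fused = haze_corrected_cs(ms, P, I, w, haze)
```

Setting all $H_k$ to zero recovers Equation (13). The haze is removed before the modulation and added back afterwards.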

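Under this hypothesis, we can obtain the haze-corrected version of the AWLP method starting from Equations (12) and (14):

$$\widehat{\mathbf{MS}}_k = \widetilde{\mathbf{MS}}_k + \frac{\widetilde{\mathbf{MS}}_k - H_k}{\mathbf{I}_L - H_P} \cdot \left(\mathbf{P}_k - \mathbf{P}_{L,k}\right). \tag{16}$$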

It is worth remarking that the proposed approach is optimized with respect to both the sensor and the atmosphere. Indeed, the spatial response of the sensor is taken into account by the low-pass filter applied to $\mathbf{P}_k$. This filter is designed to match the MTF of the MS sensor: a Gaussian-shaped filter whose gain at the Nyquist frequency is set equal to that of the MS sensor's MTF. The spectral response, instead, is implicitly considered when the weights used to calculate the intensity component $\mathbf{I}_L$ are estimated according to the linear model of Equation (3). Finally, the atmospheric optimization is achieved by accounting for the path radiance in the proposed haze-corrected AWLP method.
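The text does not detail how the Gaussian filter is built. The sketch below shows one common way to obtain a Gaussian whose response at the MS Nyquist frequency equals a prescribed gain, and it rests on three assumptions: a spatial-domain design, a truncation at about ±4σ, and the Nyquist frequency taken as 1/(2·ratio) cycles per Pan pixel. The gain value 0.3 in the usage lines is purely illustrative, not a sensor specification. The function names are hypothetical.

```python
import numpy as np


def mtf_gaussian_kernel(gnyq, ratio, size=None):
    """1-D Gaussian low-pass kernel whose frequency response equals `gnyq`
    at the MS Nyquist frequency f_N = 1 / (2 * ratio) cycles per Pan pixel.

    Uses H(f) = exp(-2 * pi^2 * sigma^2 * f^2), so H(f_N) = gnyq gives
    sigma = ratio * sqrt(-2 * ln(gnyq)) / pi.
    """
    if not 0.0 < gnyq < 1.0:
        raise ValueError("gnyq must lie in (0, 1)")
    sigma = ratio * np.sqrt(-2.0 * np.log(gnyq)) / np.pi
    if size is None:
        size = 2 * int(np.ceil(4.0 * sigma)) + 1  # odd length, about +/- 4 sigma
    x = np.arange(size) - size // 2
    kernel = np.exp(-x ** 2 / (2.0 * sigma ** 2))
    return kernel / kernel.sum(), sigma


def response_at(kernel, f):
    """Frequency response of a symmetric, zero-centred kernel at f cycles/pixel."""
    x = np.arange(len(kernel)) - len(kernel) // 2
    return float(np.sum(kernel * np.cos(2.0 * np.pi * f * x)))


# Hypothetical example: Nyquist gain 0.3 and a Pan-to-MS scale ratio of 4.
k, sigma = mtf_gaussian_kernel(0.3, 4)
# sigma is about 1.98 Pan pixels; the response at f = 1/8 is about 0.3.
```

Being separable, the 1-D kernel can be applied along rows and then columns to obtain the 2-D filter for $\mathbf{P}_{L,k}$.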

The image-based dark-object subtraction (DOS) method [35] is exploited to estimate the haze (atmospheric path radiance) of each MS spectral band. It assumes that dark pixels exist within an image and have zero (or 1%) reflectance. Under this assumption, the radiance measured at such dark pixels is mainly due to the atmospheric path radiance [35]. Thus, the coefficient $H_k$ of the k-th spectral band is estimated as the minimum of the k-th MS spectral band [28], i.e., $H_k = \min(\mathbf{MS}_k)$. Consequently, Equation (16) requires no parametric adjustment to find the haze values.
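Putting the DOS estimate and Equations (15) and (16) together gives the minimal NumPy sketch of AWLP-H below. It is not the authors' implementation. The `kernel` argument stands in for the MTF-matched Gaussian. The weights are passed in rather than estimated; the usage lines use placeholder equal weights. The nearest-neighbour upsampling is only for illustration. All function names are hypothetical.

```python
import numpy as np


def sep_filter(img, kernel):
    """Separable 2-D filtering with symmetric boundary extension (odd-length kernel)."""
    k = np.asarray(kernel, dtype=float)
    r = len(k) // 2
    padded = np.pad(img, r, mode="symmetric")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def dos_haze(ms):
    """Dark-object subtraction: haze H_k taken as the minimum of each MS band."""
    return ms.reshape(ms.shape[0], -1).min(axis=1)


def awlp_h(ms_up, pan, kernel, w, haze, eps=1e-12):
    """Eq. (16): AWLP with MMSE intensity, per-band histogram matching and haze correction."""
    intensity = np.tensordot(w, ms_up, axes=1)  # I_L, Eq. (3)
    h_p = float(np.dot(w, haze))                # H_P, Eq. (15)
    pan_low = sep_filter(pan, kernel)
    out = []
    for band, h in zip(ms_up, haze):
        p_k = (pan - pan.mean()) * (band.std() / pan_low.std()) + band.mean()  # Eq. (9)
        detail = p_k - sep_filter(p_k, kernel)                                  # P_k - P_{L,k}
        out.append(band + (band - h) / (intensity - h_p + eps) * detail)
    return np.stack(out)


rng = np.random.default_rng(6)
ms_low = rng.random((4, 8, 8)) + 0.2                          # hypothetical low-resolution MS
ms_up = np.repeat(np.repeat(ms_low, 4, axis=1), 4, axis=2)    # crude nearest-neighbour upsampling
pan = rng.random((32, 32))
w = np.full(4, 0.25)                                          # placeholder; Eq. (3) would estimate these
haze = dos_haze(ms_low)
fused = awlp_h(ms_up, pan, np.array([1, 4, 6, 4, 1]) / 16.0, w, haze)
```

No parameter is tuned by hand. The haze comes from the band minima, and the remaining inputs (weights and filter) are fixed by the instrument models.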

5. Assessment Protocols and Indices

The assessment of pansharpening products is a critical issue [34]. Two validation procedures are commonly applied. The first one is the so-called validation at reduced scale [49]. It relies on reducing, through spatial filters, the spatial resolution of both the MS and Pan images, so that the original MS image can be used as the reference [49]. Multidimensional indexes are then used to compare the fused product with the reference image. This work uses three of them:

- the relative dimensionless global error in synthesis (ERGAS) [1,34];
- the spectral angle mapper (SAM) [2];
- the multispectral extension of the universal image quality index, generally called $Q2^n$ (Q4 for four-band and Q8 for eight-band products) [50,51].
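Two of the reduced-scale indexes can be sketched in a few lines of NumPy. The sketch follows common conventions: SAM is the per-pixel spectral angle averaged over the image, in degrees, and ERGAS uses `ratio` as the Pan-to-MS resolution ratio, so that 100/ratio equals 100·(h/l). The exact conventions of the toolbox used in the paper may differ, and $Q2^n$ is not reproduced here.

```python
import numpy as np


def sam_degrees(ref, fused, eps=1e-12):
    """Spectral angle mapper: per-pixel angle between spectral vectors, averaged, in degrees.

    ref, fused: arrays of shape (N, H, W)."""
    dot = np.sum(ref * fused, axis=0)
    norms = np.linalg.norm(ref, axis=0) * np.linalg.norm(fused, axis=0)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.degrees(np.mean(np.arccos(cos))))


def ergas(ref, fused, ratio):
    """ERGAS = (100 / ratio) * sqrt(mean_k (RMSE_k / mean_k)^2).

    `ratio` is the Pan-to-MS resolution ratio (e.g., 4)."""
    rmse = np.sqrt(np.mean((ref - fused) ** 2, axis=(1, 2)))
    mu = ref.mean(axis=(1, 2))
    return float(100.0 / ratio * np.sqrt(np.mean((rmse / mu) ** 2)))


rng = np.random.default_rng(7)
ref = rng.random((4, 16, 16)) + 0.1
print(sam_degrees(ref, 2.0 * ref) < 1e-3, ergas(ref, ref, 4))  # True 0.0
```

The usage line shows the complementary nature of the two indexes. SAM ignores a pure scaling of the spectra, while ERGAS penalizes it.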

Despite its accuracy (thanks to the presence of a reference image), the above-mentioned procedure relies on an implicit assumption of invariance across scales that may not hold. For this reason, several full-resolution protocols have been proposed in the literature [52,53,54,55,56,57]. These protocols validate the products obtained by fusing the original MS and Pan images. In this work, the hybrid quality with no reference (HQNR) index [58] is selected. It consists of two indexes: the spatial distortion, $D_S$, borrowed from the QNR index [59], and the spectral distortion, $D_\lambda^{(K)}$, borrowed from Khan's protocol [60]. The HQNR is obtained by combining both distortion indexes as follows:

$$\mathrm{HQNR} = \left(1 - D_\lambda^{(K)}\right)^{\alpha} \left(1 - D_S\right)^{\beta},$$

where $\alpha$ and $\beta$ are two coefficients that weight the spectral and spatial contributions, respectively; both are set to 1. Since the two distortion indexes $D_\lambda^{(K)}$ and $D_S$ range from 0 to 1, the HQNR also takes values in $[0, 1]$. Its ideal value is 1.
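The combination step of the HQNR is simple enough to state directly in Python. Computing $D_\lambda^{(K)}$ and $D_S$ themselves is out of the scope of this sketch. The distortion values in the test are hypothetical inputs, and the function name is our own.

```python
def hqnr(d_lambda_k, d_s, alpha=1.0, beta=1.0):
    """HQNR = (1 - D_lambda^(K))^alpha * (1 - D_S)^beta, with both distortions in [0, 1]."""
    for name, d in (("d_lambda_k", d_lambda_k), ("d_s", d_s)):
        if not 0.0 <= d <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {d}")
    return (1.0 - d_lambda_k) ** alpha * (1.0 - d_s) ** beta
```

With $\alpha = \beta = 1$, as in this work, a spectral distortion of 0.1 and a spatial distortion of 0.2 give an HQNR of about 0.72.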

7. Conclusions

In this study, we pointed out the need for a fast and high-performing pansharpening method that provides reproducible results on any dataset of any size. On the one hand, such a method should expedite the routine fusion of large datasets (e.g., Google Earth); on the other hand, it should become an essential tool for a fair benchmarking of newly developed methods. Starting from a popular hybrid method developed in 2005, we introduced a series of modifications that achieve an implicit optimization. This optimization targets the spectral and spatial responses of the imaging instrument and the radiative transfer through the atmosphere.

The spectral response is captured by a multivariate regression of the interpolated MS bands, which provides an intensity component that is least-squares-matched to a low-pass version of the Pan image. The spatial response is accounted for by Gaussian-like analysis filters matched to the MTF of the MS channels, a solution widely established in the literature. Finally, the imaging mechanism through the atmosphere is exploited by correcting the multiplicative detail-injection model for the MS path-radiance terms. These terms are estimated by the image-based dark-object method as the minimum values attained in each spectral band. Indeed, estimating and correcting path radiances is essential to obtain high performance from pansharpening methods based on spatial modulation.

The proposed AWLP with haze correction, referred to as AWLP-H, is fast and computationally comparable with classical methods (see, e.g., GS). At the same time, AWLP-H achieves the best performance among the most powerful methods of the Pansharpening Toolbox [2]. This result comes from a fair and reproducible assessment, carried out both at reduced and at full scale on three different datasets from as many instruments. The procedure needs no adjustment even for WorldView data, in which some MS bands do not overlap with the Pan channel.