A Gray Scale Correction Method for Side-Scan Sonar Images Based on Retinex

Abstract

1. Introduction

2. Current Gray Scale Correction Methods for Side-Scan Sonar Images

2.1. Time Variant Gain (TVG)
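As a rough illustration, a TVG law can be expressed as a range-dependent gain in decibels that offsets geometric spreading and absorption. The sketch below assumes a gain of `k * log10(r) + 2 * alpha * r` dB, where `r` is the slant range in metres, `k` is a spreading coefficient (40 corresponds to two-way spherical spreading) and `alpha` is an absorption coefficient in dB/m. The function names and default values are hypothetical placeholders, not parameters taken from this paper, and the samples are assumed to be echo amplitudes rather than intensities.

```python
import numpy as np


def tvg_gain_db(slant_range, k=40.0, alpha=0.05):
    """Range-dependent TVG gain in dB (illustrative, uncalibrated defaults).

    k * log10(r) compensates geometric spreading (k = 40 for two-way spherical
    spreading); 2 * alpha * r compensates two-way absorption, alpha in dB/m.
    """
    r = np.asarray(slant_range, dtype=float)
    if np.any(r <= 0):
        raise ValueError("slant range must be positive")
    return k * np.log10(r) + 2.0 * alpha * r


def apply_tvg(ping, slant_range, k=40.0, alpha=0.05):
    """Apply TVG to one ping of echo amplitudes (dB to amplitude uses /20)."""
    gain = 10.0 ** (tvg_gain_db(slant_range, k, alpha) / 20.0)
    return np.asarray(ping, dtype=float) * gain
```

With `alpha = 0`, a sample at 10 m receives 40 dB of gain, i.e. its amplitude is multiplied by 100. A fixed law of this kind cannot account for seabed sediment or beam-pattern effects, which is one reason the residual brightness variation discussed in this section persists after TVG.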

2.2. Histogram Equalization (HE)
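For reference, global histogram equalization maps each gray level through the normalized cumulative histogram so that the output levels spread across the full 0 to 255 range. The following is a minimal NumPy sketch for 8-bit grayscale images; the function name is hypothetical and the exact HE variant evaluated in this paper may differ.

```python
import numpy as np


def histogram_equalize(img):
    """Global histogram equalization for an 8-bit grayscale image."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]          # cumulative count at the darkest occupied level
    total = img.size
    if total == cdf_min:               # constant image: nothing to stretch
        return img.copy()
    # Look-up table from the normalized CDF; unused levels below the minimum clip to 0.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]
```

Because the mapping depends only on the global histogram, it ignores where a pixel lies across track, so it can over-brighten the near-nadir region while leaving far-range samples dark.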

2.3. Nonlinear Compensation

2.4. Function Fitting
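One plausible form of function fitting models the mean across-track intensity profile (the column means over many pings) with a low-order polynomial and divides every ping by the fitted curve. The sketch below assumes rows are pings and columns are range bins; the polynomial model, its degree and the function name are illustrative assumptions, not necessarily the fitting method surveyed here.

```python
import numpy as np


def fit_normalize(img, degree=3, eps=1e-6):
    """Flatten across-track brightness using a polynomial fit of column means.

    Assumes rows = pings (along-track) and columns = range bins (across-track).
    """
    img = np.asarray(img, dtype=float)
    x = np.arange(img.shape[1])
    profile = img.mean(axis=0)                         # mean intensity per range bin
    coeffs = np.polynomial.polynomial.polyfit(x, profile, degree)
    trend = np.polynomial.polynomial.polyval(x, coeffs)
    trend = np.maximum(trend, eps)                     # guard against division by ~0
    # Divide out the fitted trend, then rescale so the global mean is preserved.
    return img / trend * profile.mean()
```

If the image brightness follows the fitted trend exactly, the output is flat at the original mean; real seabed texture remains as deviations around it.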

2.5. Sonar Propagation Attenuation Model

2.6. Beam Pattern

3. Gray Scale Correction Method Based on Retinex

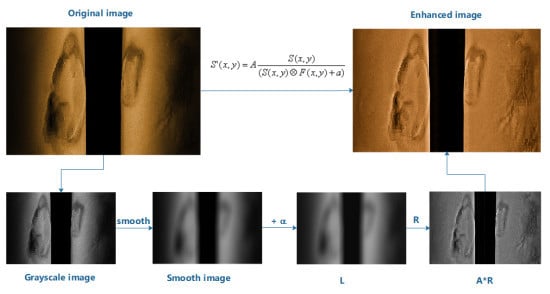
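Retinex models an observed image as the product of reflectance and illumination, estimates the illumination by smoothing, and removes it in the log domain. The sketch below shows the classical single-scale Retinex (SSR), `log(I) - log(G * I)` with a Gaussian surround `G`, and multi-scale Retinex (MSR) as an equal-weight average of SSR outputs. It is a generic NumPy-only illustration, not the method proposed in this paper; the `sigma` values, the `eps` offset and the final linear stretch are assumptions. The comparison tables also report variants whose smoothing function is a mean filter or a bilateral filter; such a filter would take the place of `gaussian_blur`.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge-replicating padding (NumPy only)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-x ** 2 / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(np.asarray(img, dtype=float), radius, mode="edge")

    def conv1d(v):
        # 'valid' removes exactly the padded samples, restoring the original length.
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv1d, 1, padded)   # filter along each row
    return np.apply_along_axis(conv1d, 0, rows)     # then along each column


def single_scale_retinex(img, sigma=15.0, eps=1.0):
    """SSR: log(I) - log(G_sigma * I), the log reflectance estimate."""
    f = np.asarray(img, dtype=float) + eps          # eps avoids log(0) on black pixels
    return np.log(f) - np.log(gaussian_blur(f, sigma))


def multi_scale_retinex(img, sigmas=(15.0, 80.0, 250.0), eps=1.0):
    """MSR: equal-weight average of SSR outputs over several surround scales."""
    return np.mean([single_scale_retinex(img, s, eps) for s in sigmas], axis=0)


def stretch_to_uint8(r):
    """Linearly map a log-domain result onto the 0-255 display range."""
    lo, hi = float(r.min()), float(r.max())
    if hi - lo < 1e-12:
        return np.zeros(r.shape, dtype=np.uint8)
    return np.round((r - lo) / (hi - lo) * 255.0).astype(np.uint8)
```

A typical call would be `stretch_to_uint8(multi_scale_retinex(sonar_img, sigmas=(5.0, 20.0, 60.0)))`, where `sonar_img` is a hypothetical 2-D gray-scale array. A uniformly lit region maps to zero in the log domain, so only local contrast relative to the estimated illumination survives.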

4. Our Method

5. Experiments and Analysis

5.1. Experiments and Analysis of Parameter A

5.2. Experiments and Analysis of Parameter a

5.3. Experiments and Analysis of Smoothing Function

5.4. Comparative Experiments and Analysis with Other Retinex-Based Methods

5.5. Comparative Experiments and Analysis with Gray Scale Correction Methods for Side-Scan Sonar Images

6. Extension of Our Method

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B


| Method | PSNR | Information Entropy | Standard Deviation | Average Gradient |
|---|---|---|---|---|
| Original image | | 6.81746 | 42.1187 | 5.89745 |
| SSR | 7.50039 | 6.80343 | 74.8485 | 6.4373 |
| MSR | 8.42826 | 5.8048 | 54.2663 | 9.2899 |
| MSRCR | 6.48304 | 6.54661 | 54.3578 | 6.29672 |
| MSRCP | 7.22485 | 5.66164 | 43.4622 | 5.42281 |
| Ours | 14.5954 | 7.58250 | 43.8442 | 9.48556 |
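The tables report four quality measures. The sketch below uses common textbook definitions, which are assumptions here since the exact formulas are not reproduced in this section: PSNR with a peak value of 255 against a reference image (which image serves as the reference is not stated here), Shannon entropy in bits of the 256-bin gray-level histogram (at most 8 bits for 8-bit images), the standard deviation of gray values, and the average gradient as the mean of `sqrt((dx^2 + dy^2) / 2)` over forward differences. All function names are hypothetical.

```python
import numpy as np


def psnr(img, ref, peak=255.0):
    """Peak signal-to-noise ratio in dB between img and a reference image."""
    mse = np.mean((np.asarray(img, float) - np.asarray(ref, float)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))


def information_entropy(img):
    """Shannon entropy (bits) of the 256-bin gray-level histogram of a uint8 image."""
    hist = np.bincount(np.asarray(img, np.uint8).ravel(), minlength=256)
    p = hist[hist > 0] / hist.sum()
    return float(-np.sum(p * np.log2(p)))


def standard_deviation(img):
    """Standard deviation of gray values, a proxy for global contrast."""
    return float(np.std(np.asarray(img, float)))


def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) using forward differences."""
    f = np.asarray(img, float)
    dx = f[:-1, 1:] - f[:-1, :-1]   # horizontal difference
    dy = f[1:, :-1] - f[:-1, :-1]   # vertical difference
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))
```

Under these definitions, higher entropy indicates a more evenly used gray-level range and a higher average gradient indicates sharper local detail, while PSNR measures how far the corrected image departs from its reference.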

| Original Image | Algorithm | PSNR | Information Entropy | Standard Deviation | Average Gradient | Running Time (s) |
|---|---|---|---|---|---|---|
| Image1 | LIME | 12.8283 | 7.59424 | 54.9971 | 11.3484 | 2.15712 |
| | NPE | 16.9553 | 7.21194 | 45.2014 | 8.33524 | 20.9530 |
| | SRIE | 19.1414 | 7.28538 | 46.8345 | 7.37814 | 35.7950 |
| | MF | 19.3875 | 7.23853 | 46.1300 | 8.39727 | 3.0470 |
| | Our method (mean filter) | 14.8291 | 7.50084 | 45.5599 | 9.76665 | 0.5430 |
| | Our method (bilateral filter) | 15.0356 | 7.50243 | 44.6718 | 9.06058 | 1.7860 |
| Image2 | LIME | 12.5039 | 7.55372 | 53.4062 | 11.6171 | 2.0779 |
| | NPE | 17.4068 | 7.17702 | 14.9912 | 8.01561 | 20.6560 |
| | SRIE | 18.9174 | 7.26646 | 43.9448 | 7.51642 | 33.6090 |
| | MF | 18.6418 | 7.25066 | 42.7528 | 8.66020 | 1.5150 |
| | Our method (mean filter) | 14.3681 | 7.56064 | 44.7653 | 10.3218 | 0.5440 |
| | Our method (bilateral filter) | 14.5954 | 7.58250 | 43.8442 | 9.48556 | 1.6880 |
| Image3 | LIME | 12.9156 | 7.52301 | 56.4356 | 11.98260 | 2.40157 |
| | NPE | 18.0214 | 7.36287 | 45.1654 | 8.42337 | 20.5220 |
| | SRIE | 19.3221 | 7.37251 | 47.1540 | 7.91938 | 35.2410 |
| | MF | 19.0227 | 7.33752 | 46.9760 | 8.49770 | 1.4690 |
| | Our method (mean filter) | 14.9149 | 7.57515 | 46.7814 | 10.30420 | 0.6860 |
| | Our method (bilateral filter) | 15.1388 | 7.61042 | 45.8554 | 9.41506 | 1.5670 |

| Method | PSNR | Information Entropy | Standard Deviation | Average Gradient |
|---|---|---|---|---|
| Original image | | 6.1333 | 11.0744 | 2.55545 |
| HE | 9.82301 | 7.26025 | 45.695 | 9.87564 |
| Non-linear compensation | 11.4153 | 7.17749 | 45.9537 | 8.71157 |
| Function fitting | 9.91988 | 6.67453 | 48.7124 | 8.34709 |
| Our method | 8.41441 | 7.41057 | 38.7549 | 13.6594 |

| Scene | Image Size (pixels) | File Size | Running Time (s) |
|---|---|---|---|
| 1 | 370 × 415 | 450 KB | 0.035 |
| 2 | 560 × 420 | 689 KB | 0.061 |
| 3 | 720 × 610 | 1.4 MB | 0.104 |
| 4 | 1024 × 683 | 963 KB | 0.206 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ye, X.; Yang, H.; Li, C.; Jia, Y.; Li, P. A Gray Scale Correction Method for Side-Scan Sonar Images Based on Retinex. Remote Sens. 2019, 11, 1281. https://doi.org/10.3390/rs11111281