Hyperspectral and Multispectral Image Fusion Using Cluster-Based Multi-Branch BP Neural Networks

Abstract

1. Introduction

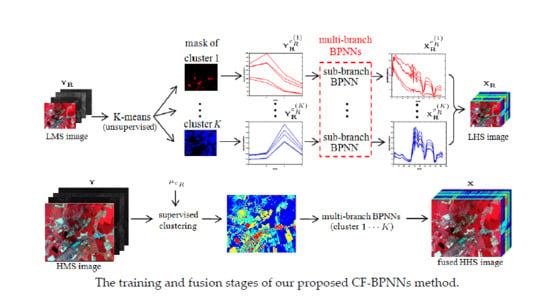

- The fusion of a high-resolution multispectral (HMS) image and a low-resolution hyperspectral (LHS) image is formulated, for the first time, as a nonlinear spectral mapping from the HMS image to the high-resolution hyperspectral (HHS) image, learned with the help of the LHS image.

- A cluster-based learning method using multi-branch back-propagation neural networks (BPNNs) is proposed, so that each cluster is assigned its own, better-suited spectral mapping.

- An associative spectral clustering method is proposed to ensure that the clusters used in the training and fusion stages are consistent.
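The three contributions above describe a learned, per-cluster spectral mapping. The following is a minimal NumPy sketch of that idea under stated assumptions, not the authors' implementation: the low-resolution multispectral (LMS) image is assumed to be the HMS image degraded to the LHS grid by r × r block averaging; clusters come from plain k-means on the LMS spectra (without the k-means++ seeding cited in the references); HMS pixels are assigned to the nearest LMS centroid as a simple stand-in for the paper's associative spectral clustering; and each branch is a one-hidden-layer tanh network trained by back-propagation. All names (`block_average`, `assign`, `kmeans`, `BPNet`, `fuse`) and default values are hypothetical.

```python
import numpy as np


def block_average(img, r):
    """Degrade an (H, W, B) image to (H/r, W/r, B) by averaging r x r blocks (assumed degradation)."""
    h, w, b = img.shape
    return img.reshape(h // r, r, w // r, r, b).mean(axis=(1, 3))


def assign(x, centroids):
    """Label each row (spectrum) of x with the index of its nearest centroid."""
    d = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)


def kmeans(x, k, iters=50, seed=0):
    """Plain Lloyd's k-means with random initialisation; returns (k, d) centroids."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)].copy()
    for _ in range(iters):
        labels = assign(x, centroids)
        for j in range(k):
            if np.any(labels == j):  # keep the old centroid if a cluster empties
                centroids[j] = x[labels == j].mean(axis=0)
    return centroids


class BPNet:
    """One-hidden-layer tanh network trained by back-propagation (full-batch gradient descent)."""

    def __init__(self, n_in, n_hidden, n_out, lr=0.1, seed=0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(0.0, 0.5, (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.w2 = rng.normal(0.0, 0.5, (n_hidden, n_out))
        self.b2 = np.zeros(n_out)
        self.lr = lr

    def forward(self, x):
        self.h = np.tanh(x @ self.w1 + self.b1)  # hidden activations, cached for backprop
        return self.h @ self.w2 + self.b2        # linear output layer (HS spectrum)

    def fit(self, x, y, epochs=2000):
        n = len(x)
        for _ in range(epochs):
            err = self.forward(x) - y                     # gradient of 0.5 * squared error
            dh = (err @ self.w2.T) * (1.0 - self.h ** 2)  # back-propagate through tanh
            self.w2 -= self.lr * self.h.T @ err / n
            self.b2 -= self.lr * err.mean(axis=0)
            self.w1 -= self.lr * x.T @ dh / n
            self.b1 -= self.lr * dh.mean(axis=0)
        return self


def fuse(hms, lhs, r, k=2, n_hidden=16):
    """Hypothetical multi-branch fusion: HMS (H, W, b) + LHS (H/r, W/r, B) -> HHS estimate (H, W, B)."""
    lms = block_average(hms, r)                  # LMS on the LHS grid (assumed)
    x_train = lms.reshape(-1, lms.shape[2])      # training inputs: LMS spectra
    y_train = lhs.reshape(-1, lhs.shape[2])      # training targets: co-located LHS spectra
    x_fuse = hms.reshape(-1, hms.shape[2])       # fusion inputs: HMS spectra

    centroids = kmeans(x_train, k)
    train_labels = assign(x_train, centroids)
    fuse_labels = assign(x_fuse, centroids)      # same centroids in both stages

    out = np.zeros((len(x_fuse), y_train.shape[1]))
    for j in range(k):
        tr = train_labels == j
        fu = fuse_labels == j
        if not fu.any():
            continue
        if not tr.any():                         # fallback: no training pixels in this cluster
            tr = np.ones(len(x_train), dtype=bool)
        net = BPNet(x_train.shape[1], n_hidden, y_train.shape[1], seed=j)
        net.fit(x_train[tr], y_train[tr])
        out[fu] = net.forward(x_fuse[fu])        # one branch per cluster
    return out.reshape(hms.shape[0], hms.shape[1], -1)
```

Training uses co-located (LMS, LHS) spectrum pairs on the coarse grid, and the trained branches are then applied to the fine-grid HMS spectra; this is why both stages must share one cluster definition. A call looks like `fuse(hms, lhs, r=4, k=2)` for an HMS cube whose height and width are divisible by `r`.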

2. Problem Formulation

3. Proposed Method

3.1. Associative Spectral Clustering for LMS and HMS Images

3.2. Multi-Branch BPNNs and Spectral Mapping

4. Experimental Results and Discussion

4.1. Comparison Methods and Quality Metrics

4.2. Comparison on the First Dataset

4.3. Comparison on the Second Dataset

4.4. Discussions on Parameters Selection

4.5. Comparison with and without Clustering

5. Conclusions

Funding

Conflicts of Interest

References

- Akhtar, N.; Shafait, F.; Mian, A. Sparse Spatio-Spectral Representation for Hyperspectral Image Super-Resolution. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 63–78.

- Carper, W.J.; Lillesand, T.M.; Kiefer, R.W. The use of intensity-hue-saturation transformations for merging SPOT panchromatic and multispectral image data. Photogramm. Eng. Remote Sens. 1990, 56, 457–467.

- Jiang, C.; Zhang, H.; Shen, H.; Zhang, L. A practical compressed sensing-based pan-sharpening method. IEEE Geosci. Remote Sens. Lett. 2012, 9, 629–633.

- Loncan, L.; de Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M.; et al. Hyperspectral pansharpening: A review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

- Hardie, R.C.; Eismann, M.T.; Wilson, G.L. MAP estimation for hyperspectral image resolution enhancement using an auxiliary sensor. IEEE Trans. Image Process. 2004, 13, 1174–1184.

- Paris, C.; Bioucas-Dias, J.; Bruzzone, L. A Novel Sharpening Approach for Superresolving Multiresolution Optical Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1545–1560.

- Selva, M.; Santurri, L.; Baronti, S. Improving Hypersharpening for WorldView-3 Data. IEEE Geosci. Remote Sens. Lett. 2018, 1–5.

- Zhu, X.X.; Grohnfeldt, C.; Bamler, R. Exploiting joint sparsity for pansharpening: The J-SparseFI algorithm. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2664–2681.

- Kwan, C.; Choi, J.H.; Chan, S.H.; Zhou, J.; Budavari, B. A super-resolution and fusion approach to enhancing hyperspectral images. Remote Sens. 2018, 10, 1416.

- Wei, Q.; Bioucas-Dias, J.; Dobigeon, N.; Tourneret, J.Y. Hyperspectral and Multispectral Image Fusion Based on a Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3658–3668.

- Wei, Q.; Dobigeon, N.; Tourneret, J.Y. Fast fusion of multi-band images based on solving a Sylvester equation. IEEE Trans. Image Process. 2015, 24, 4109–4121.

- Huang, B.; Song, H.; Cui, H.; Peng, J.; Xu, Z. Spatial and spectral image fusion using sparse matrix factorization. IEEE Trans. Geosci. Remote Sens. 2014, 52, 1693–1704.

- Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled nonnegative matrix factorization unmixing for hyperspectral and multispectral data fusion. IEEE Trans. Geosci. Remote Sens. 2012, 50, 528–537.

- Simoes, M.; Bioucas-Dias, J.; Almeida, L.; Chanussot, J. A convex formulation for hyperspectral image superresolution via subspace-based regularization. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3373–3388.

- Han, X.; Luo, J.; Yu, J.; Sun, W. Hyperspectral image fusion based on non-factorization sparse representation and error matrix estimation. In Proceedings of the 2017 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Montreal, QC, Canada, 14–16 November 2017; pp. 1155–1159.

- Nezhad, Z.H.; Karami, A.; Heylen, R.; Scheunders, P. Fusion of hyperspectral and multispectral images using spectral unmixing and sparse coding. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 2377–2389.

- Mianji, F.A.; Zhang, Y.; Babakhani, A. Superresolution of hyperspectral images using backpropagation neural networks. In Proceedings of the 2009 2nd International Workshop on Nonlinear Dynamics and Synchronization, Klagenfurt, Austria, 20–21 July 2009; pp. 168–174.

- Xiu, L.; Zhang, H.; Guo, Q.; Wang, Z.; Liu, X. Estimating nitrogen content of corn based on wavelet energy coefficient and BP neural network. In Proceedings of the 2015 2nd International Conference on Information Science and Control Engineering, Shanghai, China, 24–26 April 2015; pp. 212–216.

- Yang, J.; Zhao, Y.Q.; Chan, J. Hyperspectral and Multispectral Image Fusion via Deep Two-Branches Convolutional Neural Network. Remote Sens. 2018, 10, 800.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O. Multispectral and hyperspectral image fusion using a 3-D-convolutional neural network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 639–643.

- Spath, H. Cluster Dissection and Analysis: Theory, Fortran Programs, Examples; Prentice-Hall, Inc.: Bergen, NJ, USA, 1985.

- Arthur, D.; Vassilvitskii, S. k-means++: The advantages of careful seeding. In Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2007; pp. 1027–1035.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Wald, L. Quality of high resolution synthesised images: Is there a simple criterion? In Proceedings of the Third Conference Fusion of Earth data: Merging Point Measurements, Raster Maps and Remotely Sensed Images, Sophia Antipolis, France, 26–28 January 2000; pp. 99–103.

- AVIRIS Airborne System. Available online: http://aviris.jpl.nasa.gov (accessed on 10 September 2018).

- Aiazzi, B.; Selva, M.; Arienzo, A.; Baronti, S. Influence of the System MTF on the On-Board Lossless Compression of Hyperspectral Raw Data. Remote Sens. 2019, 11, 791.

- ROSIS Pavia Center Dataset. Available online: http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes#Pavia_Centre_scene (accessed on 10 September 2018).

| Method | MSE | PSNR (dB) | UIQI | SAM | ERGAS | SSIM |
|---|---|---|---|---|---|---|
| CNMF | 1.6186 | 46.0395 | 0.9866 | 1.4593 | 1.4021 | 0.9951 |
| G-SOMP+ | 1.0728 | 47.8255 | 0.9911 | 1.9503 | 1.2268 | 0.9962 |
| Hysure | 0.4499 | 51.5995 | 0.9933 | 1.0011 | 0.8411 | 0.9979 |
| FUSE | 0.5256 | 50.9242 | 0.9934 | 1.1477 | 0.8584 | 0.9975 |
| LACRF | 1.1300 | 47.6001 | 0.9893 | 1.8461 | 1.2623 | 0.9922 |
| NSFREE | 0.4426 | 51.6707 | 0.9953 | 1.1503 | 0.7849 | 0.9970 |
| CF-BPNNs | 0.2764 | 53.7162 | 0.9970 | 0.8229 | 0.6259 | 0.9989 |
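The table above reports six standard fusion-quality metrics, but this excerpt does not give their exact definitions. As a hedged sketch, common formulations of MSE, PSNR, SAM and ERGAS can be written in NumPy as follows. The reported values appear to satisfy PSNR = 10 log10(255² / MSE) (for CF-BPNNs, 10 log10(65025 / 0.2764) ≈ 53.72 dB), so the sketch assumes a peak value of 255; reporting SAM in degrees and the ERGAS resolution-ratio convention are assumptions. UIQI and SSIM are omitted for brevity. The function names are hypothetical.

```python
import numpy as np


def mse(ref, est):
    """Mean squared error over all pixels and bands."""
    return float(np.mean((np.asarray(ref, float) - np.asarray(est, float)) ** 2))


def psnr_from_mse(m, peak=255.0):
    """PSNR in dB; peak=255 is inferred from the table values, not stated in the text."""
    return float(10.0 * np.log10(peak ** 2 / m))


def sam_degrees(ref, est, eps=1e-12):
    """Mean spectral angle (degrees) between co-located spectra of two (H, W, B) images."""
    r = np.asarray(ref, float).reshape(-1, np.shape(ref)[-1])
    e = np.asarray(est, float).reshape(-1, np.shape(est)[-1])
    cos = (r * e).sum(axis=1) / (np.linalg.norm(r, axis=1) * np.linalg.norm(e, axis=1) + eps)
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))).mean())


def ergas(ref, est, ratio):
    """ERGAS = (100 / ratio) * sqrt(mean over bands of (RMSE_b / mean_b)^2).

    `ratio` is the spatial resolution factor between the HS and MS images (e.g. 4);
    this is one common convention and is assumed here.
    """
    r = np.asarray(ref, float).reshape(-1, np.shape(ref)[-1])
    e = np.asarray(est, float).reshape(-1, np.shape(est)[-1])
    rmse_b = np.sqrt(((r - e) ** 2).mean(axis=0))
    mean_b = r.mean(axis=0)
    return float(100.0 / ratio * np.sqrt(np.mean((rmse_b / mean_b) ** 2)))
```

For example, `psnr_from_mse(0.2764)` gives about 53.72, in line with the CF-BPNNs row; the small residual difference comes from the four-decimal rounding of the MSE column.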

| Method | Bicubic ERGAS | Bicubic SAM | Bicubic SSIM | Bilinear ERGAS | Bilinear SAM | Bilinear SSIM | Nearest ERGAS | Nearest SAM | Nearest SSIM |
|---|---|---|---|---|---|---|---|---|---|
| CNMF | 2.007 | 3.319 | 0.980 | 1.767 | 2.978 | 0.984 | 4.951 | 6.965 | 0.923 |
| G-SOMP+ | 2.246 | 3.949 | 0.979 | 2.350 | 4.184 | 0.978 | 2.433 | 5.049 | 0.966 |
| Hysure | 1.757 | 3.000 | 0.982 | 1.984 | 3.142 | 0.978 | 3.051 | 5.170 | 0.961 |
| FUSE | 1.969 | 3.398 | 0.986 | 1.845 | 2.990 | 0.984 | 2.334 | 4.587 | 0.981 |
| LACRF | 3.126 | 5.587 | 0.945 | 3.242 | 5.701 | 0.939 | 3.668 | 6.344 | 0.941 |
| NSFREE | 1.730 | 2.977 | 0.983 | 1.743 | 3.077 | 0.982 | 1.720 | 3.079 | 0.983 |
| 3D-CNN | 1.676 | 2.730 | 0.988 | 2.069 | 3.022 | - | 3.104 | 3.858 | - |
| CF-BPNNs | 1.710 | 2.882 | 0.992 | 1.737 | 2.902 | 0.992 | 1.665 | 2.812 | 0.992 |
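The table above also allows a quick check of how sensitive each method is to the three settings in its column groups (Bicubic, Bilinear, Nearest). The short Python sketch below is simple arithmetic on the reported numbers, not an analysis taken from the paper: it computes the spread (maximum minus minimum) of each method's ERGAS across the three settings, so a smaller spread means less sensitivity.

```python
# ERGAS values copied from the table above, in the order (Bicubic, Bilinear, Nearest)
ergas_by_setting = {
    "CNMF": (2.007, 1.767, 4.951),
    "G-SOMP+": (2.246, 2.350, 2.433),
    "Hysure": (1.757, 1.984, 3.051),
    "FUSE": (1.969, 1.845, 2.334),
    "LACRF": (3.126, 3.242, 3.668),
    "NSFREE": (1.730, 1.743, 1.720),
    "3D-CNN": (1.676, 2.069, 3.104),
    "CF-BPNNs": (1.710, 1.737, 1.665),
}

# Spread = worst minus best ERGAS across the three settings
spread = {method: max(v) - min(v) for method, v in ergas_by_setting.items()}

# Print methods from least to most sensitive
for method, s in sorted(spread.items(), key=lambda kv: kv[1]):
    print(f"{method:10s} {s:.3f}")
```

On these values the spread is 0.023 for NSFREE, 0.072 for CF-BPNNs and 3.184 for CNMF: NSFREE and CF-BPNNs are comparatively insensitive to the setting, while CNMF degrades sharply in the Nearest case.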

| Method | MSE | PSNR (dB) | UIQI | SAM | ERGAS | SSIM |
|---|---|---|---|---|---|---|
| F-BPNN | 0.6533 | 49.9798 | 0.9929 | 1.2907 | 0.9954 | 0.9980 |
| CF-BPNNs | 0.2764 | 53.7162 | 0.9970 | 0.8229 | 0.6259 | 0.9989 |
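Assuming, as the heading of Section 4.5 suggests, that F-BPNN is the variant without clustering, the gain from clustering can be quantified directly from the table above. This arithmetic sketch is not from the paper; it reports relative reductions for the lower-is-better metrics and the absolute PSNR gain.

```python
# Values copied from the table above (F-BPNN assumed to be the no-clustering variant)
f_bpnn = {"MSE": 0.6533, "PSNR": 49.9798, "SAM": 1.2907, "ERGAS": 0.9954}
cf_bpnns = {"MSE": 0.2764, "PSNR": 53.7162, "SAM": 0.8229, "ERGAS": 0.6259}

# Lower is better for MSE, SAM and ERGAS: relative reduction in percent
reductions = {
    k: 100.0 * (f_bpnn[k] - cf_bpnns[k]) / f_bpnn[k] for k in ("MSE", "SAM", "ERGAS")
}

# PSNR is already logarithmic, so report the absolute gain in dB
psnr_gain = cf_bpnns["PSNR"] - f_bpnn["PSNR"]

for k, v in reductions.items():
    print(f"{k}: {v:.1f}% lower with clustering")
print(f"PSNR: +{psnr_gain:.2f} dB with clustering")
```

With these values, clustering lowers MSE by about 57.7%, SAM by about 36.2% and ERGAS by about 37.1%, and raises PSNR by about 3.74 dB. The PSNR gain matches 10 log10(0.6533 / 0.2764) ≈ 3.74 dB, as expected when PSNR is derived from MSE with a fixed peak value.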

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Han, X.; Yu, J.; Luo, J.; Sun, W. Hyperspectral and Multispectral Image Fusion Using Cluster-Based Multi-Branch BP Neural Networks. Remote Sens. 2019, 11, 1173. https://doi.org/10.3390/rs11101173
