Automatic Ship Detection in Optical Remote Sensing Images Based on Anomaly Detection and SPP-PCANet

Abstract

1. Introduction

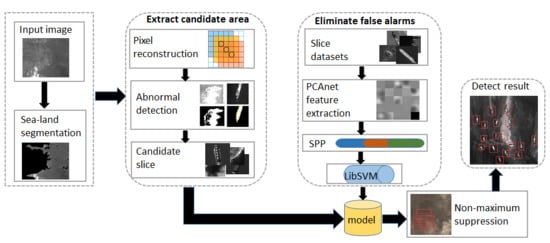

2. Methodology

2.1. Preprocessing: Sea and Land Segmentation

2.2. Pre-Screening: Anomaly Detection Algorithm

2.3. Discrimination: Fine Detection

3. Experimental Results and Analysis

3.1. Dataset Description

3.2. Contrastive Experiments

4. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

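| Algorithm | P | R | MR | FR | C_s | S_s |
|---|---|---|---|---|---|---|
| CNN | 0.83 | 0.84 | 0.16 | 0.17 | 361 | 430 |
| HOG | 0.86 | 0.73 | 0.27 | 0.14 | 314 | 430 |
| LBP | 0.82 | 0.64 | 0.36 | 0.18 | 275 | 430 |
| Our method | 0.85 | 0.97 | 0.03 | 0.15 | 417 | 430 |

P, R, MR and FR are given as fractions, not percentages. The reported values satisfy R = C_s / S_s, MR = 1 - R and FR = 1 - P (to two decimals). This is consistent with C_s being the number of correctly detected ships, S_s the total number of ships, MR a missing rate and FR a false-alarm rate.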
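A short Python sketch can recompute the derived columns of the comparison table from the counts C_s and S_s as a consistency check. It assumes R = C_s / S_s, MR = 1 - R and FR = 1 - P. These relations are inferred from the reported numbers, not taken from stated definitions. The names `TABLE`, `derived_rates` and `check_row` are illustrative only.

```python
# Values copied from the comparison table: (algorithm, P, R, MR, FR, C_s, S_s).
TABLE = [
    ("CNN", 0.83, 0.84, 0.16, 0.17, 361, 430),
    ("HOG", 0.86, 0.73, 0.27, 0.14, 314, 430),
    ("LBP", 0.82, 0.64, 0.36, 0.18, 275, 430),
    ("Our method", 0.85, 0.97, 0.03, 0.15, 417, 430),
]


def derived_rates(precision: float, correct: int, total: int) -> tuple[float, float, float]:
    """Recompute (R, MR, FR) under the ASSUMED definitions
    R = C_s / S_s, MR = 1 - R and FR = 1 - P."""
    recall = correct / total
    return recall, 1.0 - recall, 1.0 - precision


def check_row(row, tol: float = 0.005) -> bool:
    """Return True if the reported R, MR and FR agree with the
    recomputed values within the table's two-decimal rounding."""
    _, p, r, mr, fr, c_s, s_s = row
    rec, miss, false_alarm = derived_rates(p, c_s, s_s)
    pairs = ((rec, r), (miss, mr), (false_alarm, fr))
    return all(abs(computed - reported) <= tol for computed, reported in pairs)


if __name__ == "__main__":
    for row in TABLE:
        name, p, _, _, _, c_s, s_s = row
        rec, miss, fa = derived_rates(p, c_s, s_s)
        print(f"{name:<10} R={rec:.3f} MR={miss:.3f} FR={fa:.3f} consistent={check_row(row)}")
```

Every row passes the check. Because all methods were evaluated on the same 430 ships, recall and missing rate are directly comparable across rows.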

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, N.; Li, B.; Xu, Q.; Wang, Y. Automatic Ship Detection in Optical Remote Sensing Images Based on Anomaly Detection and SPP-PCANet. Remote Sens. 2019, 11, 47. https://doi.org/10.3390/rs11010047
