A Super-Resolution and Fusion Approach to Enhancing Hyperspectral Images

Abstract

1. Introduction

- Group 1 [9,10,11]: Group 1 methods require knowledge of the point spread function (PSF) that causes the blur in the LR HS images. Representative Group 1 methods include coupled non-negative matrix factorization (CNMF) [9], Bayesian naïve (BN) [10], and Bayesian sparse (BS) [11]. Because they incorporate the PSF, these methods produce good results on some images.

- Group 2 [12,13,14,15,16,17,18,19,20]: Unlike Group 1 methods, which require knowledge of the PSF, Group 2 methods require only an HR pan band. Because they do not exploit the PSF, Group 2 methods perform slightly worse than Group 1 methods in some cases. This group contains Principal Component Analysis (PCA) [12], Guided Filter PCA (GFPCA) [13], Gram Schmidt (GS) [14], GS Adaptive (GSA) [15], Modulation Transfer Function Generalized Laplacian Pyramid (MTF-GLP) [16], MTF-GLP with High Pass Modulation (MTF-GLP-HPM) [17], Hysure [18,19], and Smoothing Filter-based Intensity Modulation (SFIM) [20]. As Section 3 shows, some methods in this group perform excellently even without incorporating the PSF.

- Group 3 [7,21,22,23]: This group contains single-image super-resolution methods; that is, no pan band or other HR bands are needed. The simplest method is the bicubic algorithm [22]. The key difference between the methods in [21,22] and the Plug-and-Play Alternating Direction Method of Multipliers (PAP-ADMM) algorithm [7] is that PAP-ADMM uses a PSF to improve the enhancement performance.

- Group 4 [24,25,26,27,28]: Like Group 3, this group consists of single-image super-resolution methods. The key difference is that the methods here require training images and a training step: for example, dictionary-based methods [24,25,28] and deep learning methods [26,27] are used to perform the enhancement. Moreover, no PSF is required.
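Because the grouping above hinges on whether the PSF is known, a toy 1-D sketch may help make the idea concrete. It models an LR signal as "blur with the PSF, then decimate" (y = S H x), which is the kind of PSF knowledge that Group 1 methods and PAP-ADMM [7] exploit, and then inverts that model with the plug-and-play ADMM iteration: a least-squares data step, a denoising step in place of a proximal operator, and a dual update. Everything here is an assumption for illustration: the Gaussian PSF, the decimation factor of 4, the [1, 2, 1]/4 smoothing filter standing in for a real denoiser, and the hypothetical names `gaussian_psf`, `forward_matrix`, `smooth_denoiser`, and `pnp_admm`. It is not the authors' implementation, which works on 2-D bands and would typically use a much stronger denoiser.

```python
import numpy as np


def gaussian_psf(size: int = 7, sigma: float = 1.5) -> np.ndarray:
    """Normalized 1-D Gaussian kernel, used here as a hypothetical PSF."""
    t = np.arange(size) - size // 2
    k = np.exp(-0.5 * (t / sigma) ** 2)
    return k / k.sum()


def forward_matrix(n: int, psf: np.ndarray, factor: int) -> np.ndarray:
    """Dense A = S H: blur with the PSF (H), then keep every `factor`-th sample (S)."""
    H = np.zeros((n, n))
    half = len(psf) // 2
    for i in range(n):
        for j, w in enumerate(psf):
            col = min(max(i + j - half, 0), n - 1)  # replicate the border samples
            H[i, col] += w
    S = np.eye(n)[::factor]
    return S @ H


def smooth_denoiser(v: np.ndarray) -> np.ndarray:
    """Stand-in denoiser: [1, 2, 1]/4 smoothing with edge padding (hypothetical)."""
    padded = np.pad(v, 1, mode="edge")
    return np.convolve(padded, np.array([1.0, 2.0, 1.0]) / 4.0, mode="valid")


def pnp_admm(y: np.ndarray, A: np.ndarray, rho: float = 1.0, iters: int = 50) -> np.ndarray:
    """Plug-and-play ADMM: data step, denoising step, scaled dual update."""
    n = A.shape[1]
    x = A.T @ y  # crude initial guess
    v = x.copy()
    u = np.zeros(n)
    lhs = A.T @ A + rho * np.eye(n)
    for _ in range(iters):
        # x-step: argmin_x 0.5*||A x - y||^2 + (rho/2)*||x - (v - u)||^2
        x = np.linalg.solve(lhs, A.T @ y + rho * (v - u))
        # v-step: the prior's proximal operator is replaced by a denoiser
        v = smooth_denoiser(x + u)
        # u-step: scaled dual variable update
        u = u + x - v
    return v


# Usage: simulate an LR observation of a smooth signal with a step, then restore it.
rng = np.random.default_rng(0)
n, factor = 64, 4
x_true = np.sin(np.linspace(0, 3 * np.pi, n)) + 0.5 * (np.arange(n) >= 32)
A = forward_matrix(n, gaussian_psf(), factor)
y = A @ x_true + 0.01 * rng.standard_normal(A.shape[0])
x_hat = pnp_admm(y, A)
```

The dense matrix keeps the data step a single `np.linalg.solve`; at image scale one would instead solve that step with FFTs or an iterative method.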

- Q1.

- Given recent advances in single-image super-resolution, is a single-image super-resolution algorithm alone sufficient to produce a good HR image? If so, other fusion algorithms would be unnecessary. In Section 3.2, Section 3.4 and Section 3.5, we answer this question in the negative and show that single-image super-resolution alone is insufficient.

- Q2.

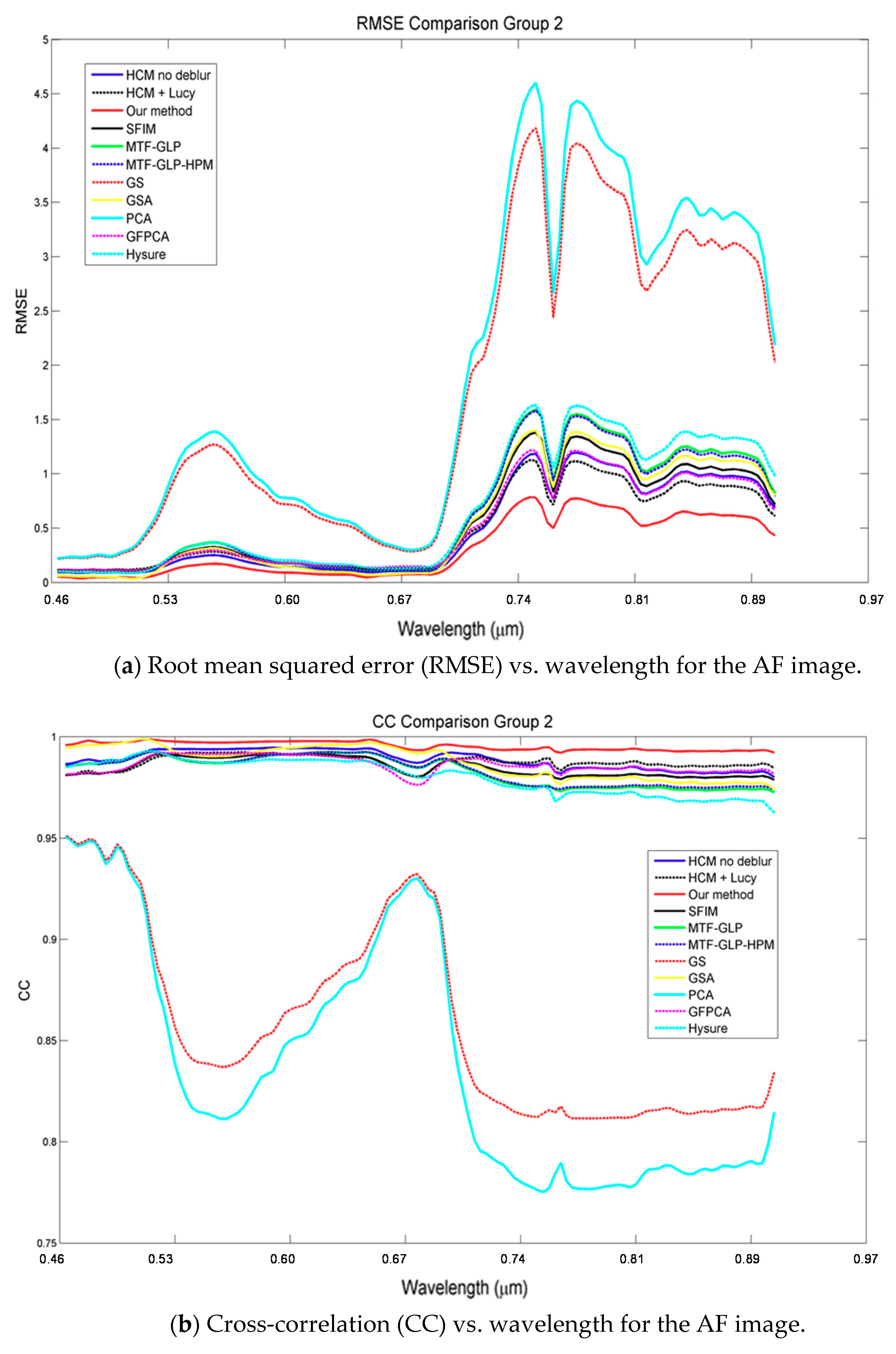

- How much will single-image super-resolution improve the hybrid color mapping (HCM) algorithm? As reported in [8], HCM already performs comparably to Group 1 methods without requiring a PSF. Thus, incorporating the PSF may yield even better results. In Section 3.3 and Section 3.7, we provide evidence to support this claim.

- Q3.

- Will a single-image super-resolution algorithm help improve Group 2’s performance? In Section 3.3, we will demonstrate that Group 2’s performance cannot be improved with this approach.

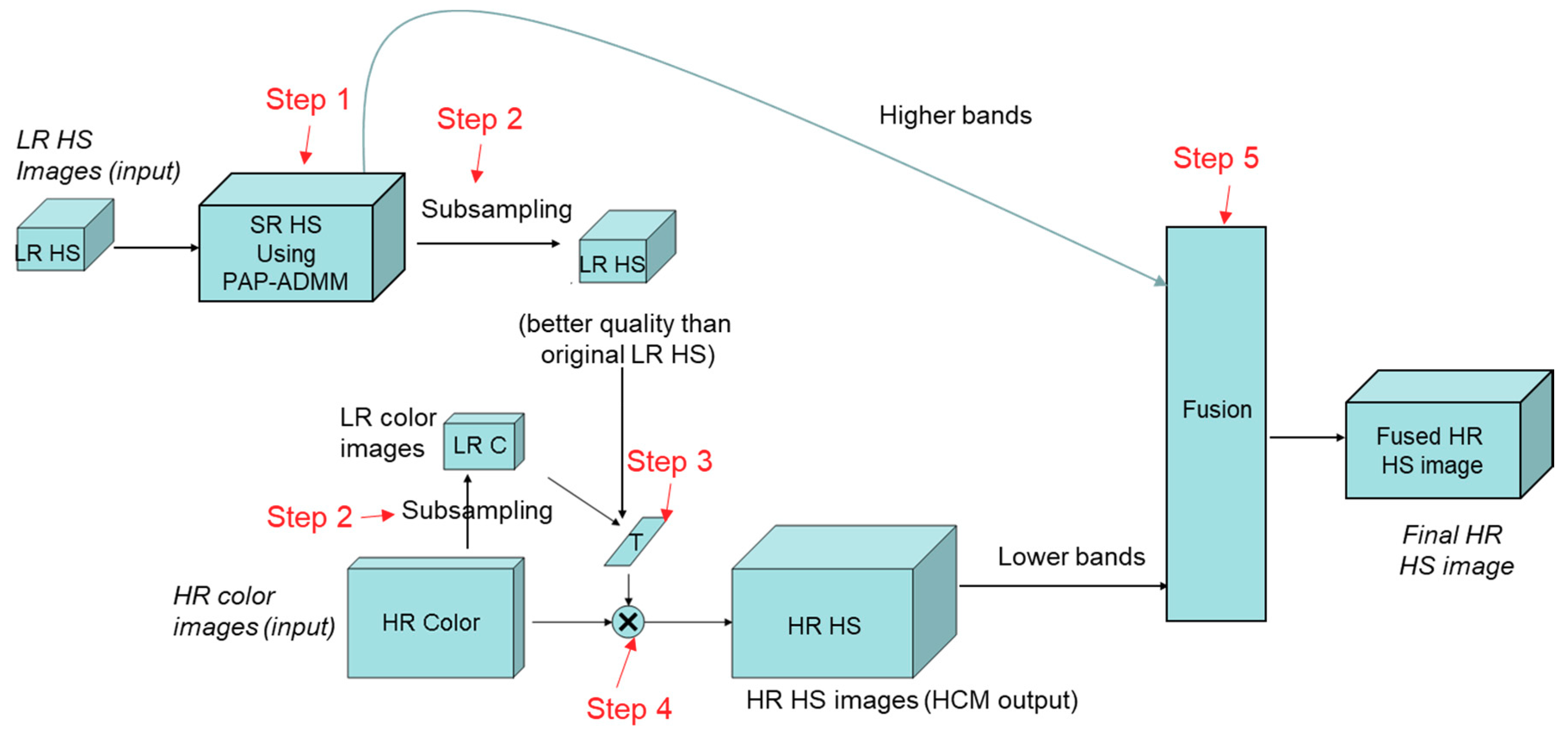

2. Proposed New Algorithm

2.1. Hybrid Color Mapping

2.2. Plug-and-Play ADMM

2.3. Performance Metrics

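- CC (Cross-Correlation). The cross-correlation between the reference image $\mathbf{z}$ and the reconstructed image $\hat{\mathbf{z}}$ (both arranged as vectors) is defined as

  $$\mathrm{CC}(\mathbf{z},\hat{\mathbf{z}}) = \frac{\sum_{i}(z_i-\mu_{\mathbf{z}})(\hat{z}_i-\mu_{\hat{\mathbf{z}}})}{\sqrt{\sum_{i}(z_i-\mu_{\mathbf{z}})^2\,\sum_{i}(\hat{z}_i-\mu_{\hat{\mathbf{z}}})^2}},$$

  with $\mu_{\mathbf{z}}$ and $\mu_{\hat{\mathbf{z}}}$ being the means of the vectors $\mathbf{z}$ and $\hat{\mathbf{z}}$. The ideal value of CC is 1, attained with perfect reconstruction. We also used $\mathrm{CC}(\lambda)$, the CC value between $\mathbf{z}$ and $\hat{\mathbf{z}}$ at each band $\lambda$, to evaluate the performance of different algorithms across individual bands.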

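- SAM (Spectral Angle Mapper). The spectral angle mapper measures the angle between the reference and reconstructed spectra at each pixel $j$:

  $$\mathrm{SAM}(\mathbf{z}_j,\hat{\mathbf{z}}_j) = \arccos\left(\frac{\langle \mathbf{z}_j,\hat{\mathbf{z}}_j\rangle}{\lVert\mathbf{z}_j\rVert_2\,\lVert\hat{\mathbf{z}}_j\rVert_2}\right),$$

  where $\mathbf{z}_j$ and $\hat{\mathbf{z}}_j$ are the spectral vectors of pixel $j$ in the reference and reconstructed images. The ideal value of SAM is 0.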
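The two metrics above can be computed as in the following minimal sketch. It assumes images are NumPy arrays of shape (rows, cols, bands); the function names `cc`, `cc_per_band`, and `sam_degrees` are hypothetical. The text above does not say how per-pixel angles are aggregated, so averaging over pixels and reporting in degrees is an assumption here (a common convention in the pansharpening literature).

```python
import numpy as np


def cc(z: np.ndarray, z_hat: np.ndarray) -> float:
    """Cross-correlation of two arrays, flattened to vectors (ideal value 1)."""
    dz = z.ravel() - z.mean()
    dh = z_hat.ravel() - z_hat.mean()
    return float(np.sum(dz * dh) / np.sqrt(np.sum(dz ** 2) * np.sum(dh ** 2)))


def cc_per_band(Z: np.ndarray, Z_hat: np.ndarray) -> np.ndarray:
    """CC(lambda): one CC value per band for cubes of shape (rows, cols, bands)."""
    return np.array([cc(Z[..., b], Z_hat[..., b]) for b in range(Z.shape[-1])])


def sam_degrees(Z: np.ndarray, Z_hat: np.ndarray, eps: float = 1e-12) -> float:
    """Spectral angle per pixel, averaged over pixels (assumed) and given in degrees."""
    a = Z.reshape(-1, Z.shape[-1])
    b = Z_hat.reshape(-1, Z_hat.shape[-1])
    denom = np.maximum(np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1), eps)
    cos = np.sum(a * b, axis=1) / denom
    # clip guards against |cos| slightly above 1 caused by rounding
    return float(np.degrees(np.mean(np.arccos(np.clip(cos, -1.0, 1.0)))))


# Usage on a small random cube.
rng = np.random.default_rng(0)
Z = rng.random((4, 5, 3)) + 0.1
cc_affine = cc(Z, 2.0 * Z + 3.0)   # CC ignores gain and offset
sam_scaled = sam_degrees(Z, 2.0 * Z)  # SAM ignores a global scale factor
```

Both invariances are worth keeping in mind when reading the tables: CC is insensitive to gain and offset, and SAM is insensitive to spectral magnitude, which is why RMSE and ERGAS are reported alongside them.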

3. Results

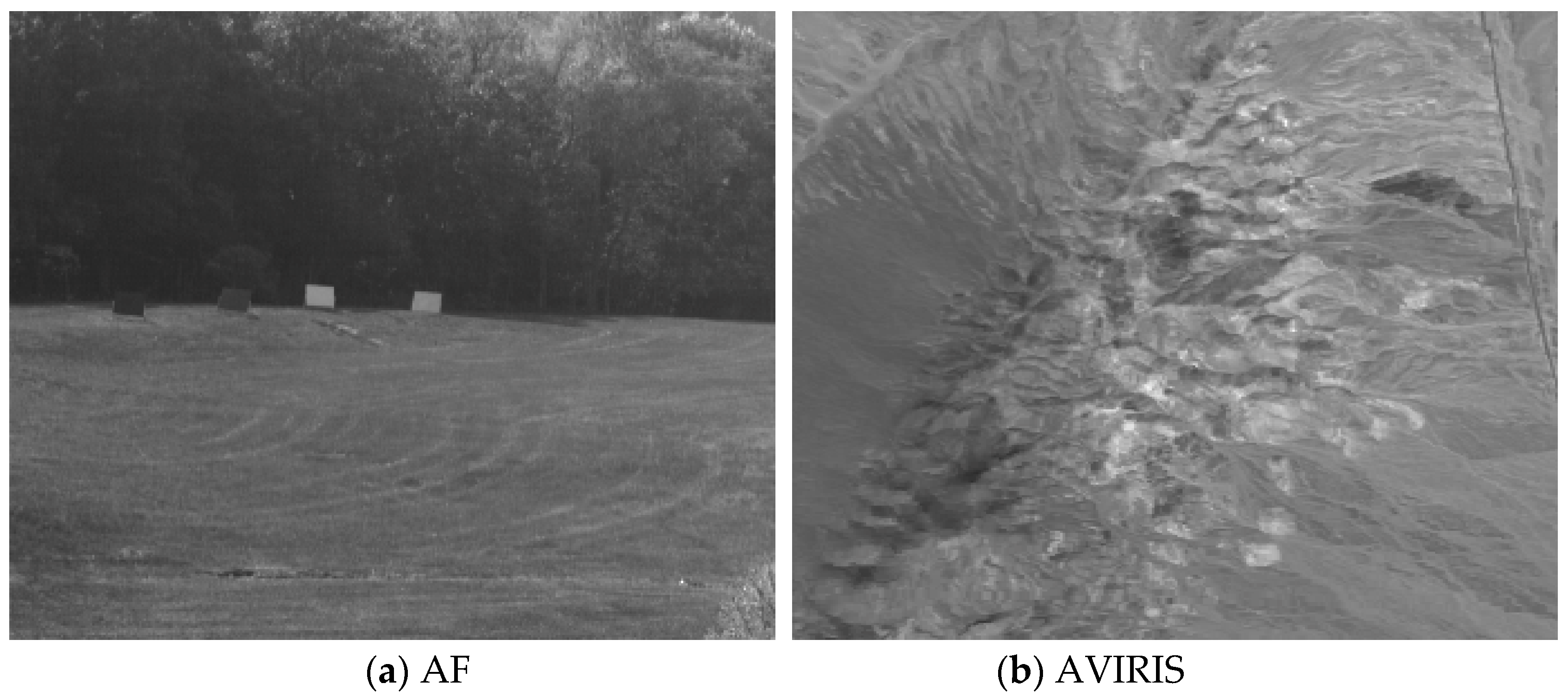

3.1. Data

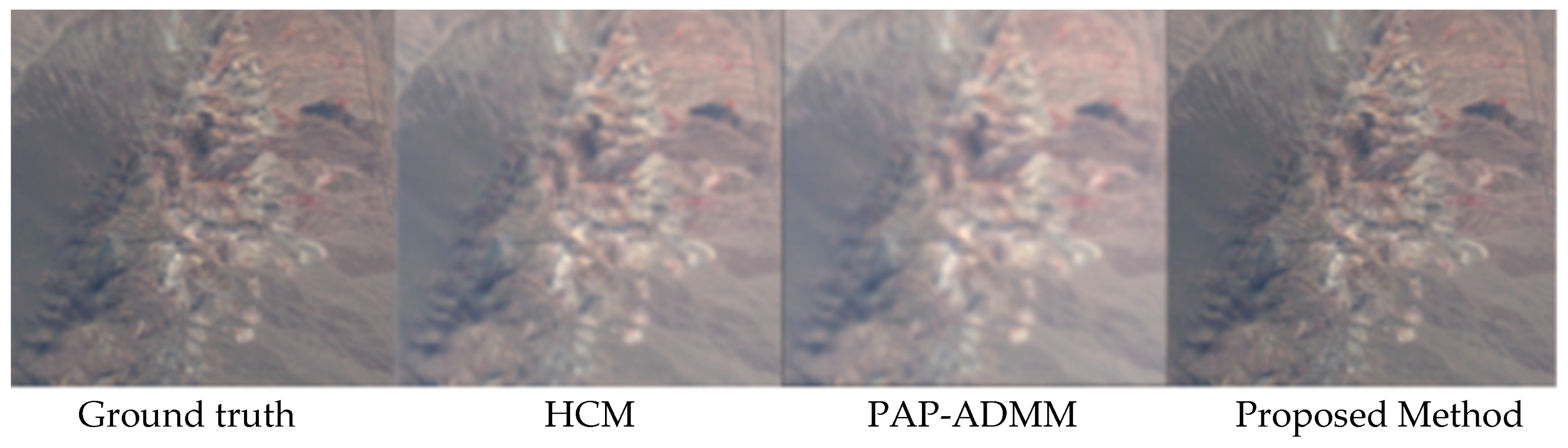

3.2. Comparison between HCM and PAP-ADMM

| Algorithm 1 |
|---|
| Input: LR HS data, PSF for the hyperspectral imager, and HR color image |
| Output: HR HS data cube |
| Procedures: |
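The procedure steps of Algorithm 1 are not recoverable in this copy. As a loosely hedged illustration of the two-stage idea suggested by its inputs and outputs (first super-resolve the LR HS cube, then fuse it with the HR color image through a learned color-to-HS mapping), the sketch below uses pixel replication as a placeholder for PAP-ADMM and a plain least-squares linear mapping with an appended constant band, a simplified mapping in the spirit of HCM [8] that omits whatever refinements the full method adds. All function names (`upsample_nearest`, `fit_color_mapping`, `apply_color_mapping`, `fuse`) and the choice to learn the mapping at HR are assumptions; this is not Algorithm 1 itself.

```python
import numpy as np


def upsample_nearest(cube: np.ndarray, factor: int) -> np.ndarray:
    """Placeholder super-resolution step (pixel replication), NOT PAP-ADMM."""
    return np.repeat(np.repeat(cube, factor, axis=0), factor, axis=1)


def _with_constant_band(color: np.ndarray) -> np.ndarray:
    """Flatten (rows, cols, c) to (pixels, c + 1), appending a band of ones."""
    c = color.reshape(-1, color.shape[-1])
    return np.hstack([c, np.ones((c.shape[0], 1))])


def fit_color_mapping(color: np.ndarray, hs: np.ndarray) -> np.ndarray:
    """Least-squares T (bands x (c + 1)) such that hs_pixel ~= T @ [color_pixel, 1]."""
    h = hs.reshape(-1, hs.shape[-1])
    sol, *_ = np.linalg.lstsq(_with_constant_band(color), h, rcond=None)
    return sol.T


def apply_color_mapping(T: np.ndarray, color: np.ndarray) -> np.ndarray:
    """Map every color pixel to an HS spectrum with the learned T."""
    rows, cols = color.shape[:2]
    return (_with_constant_band(color) @ T.T).reshape(rows, cols, -1)


def fuse(lr_hs: np.ndarray, hr_color: np.ndarray, factor: int) -> np.ndarray:
    """Hypothetical two-stage pipeline: super-resolve HS, then map color -> HS at HR."""
    sr_hs = upsample_nearest(lr_hs, factor)  # stage 1 (placeholder for PAP-ADMM)
    T = fit_color_mapping(hr_color, sr_hs)   # stage 2: learn the color-to-HS mapping
    return apply_color_mapping(T, hr_color)  # apply it to the HR color image


# Usage: synthetic data where the HS cube is an exact linear function of color.
rng = np.random.default_rng(1)
hr_color = rng.random((8, 8, 3))
T_true = rng.random((5, 4))
hr_hs = apply_color_mapping(T_true, hr_color)
lr_hs = hr_hs[::2, ::2]
fused = fuse(lr_hs, hr_color, factor=2)
```

The constant band lets the mapping absorb per-band offsets between the two sensors; swapping the placeholder upsampler for a PSF-aware method is where a super-resolution stage would enter.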

3.3. Comparison with Group 2 Methods

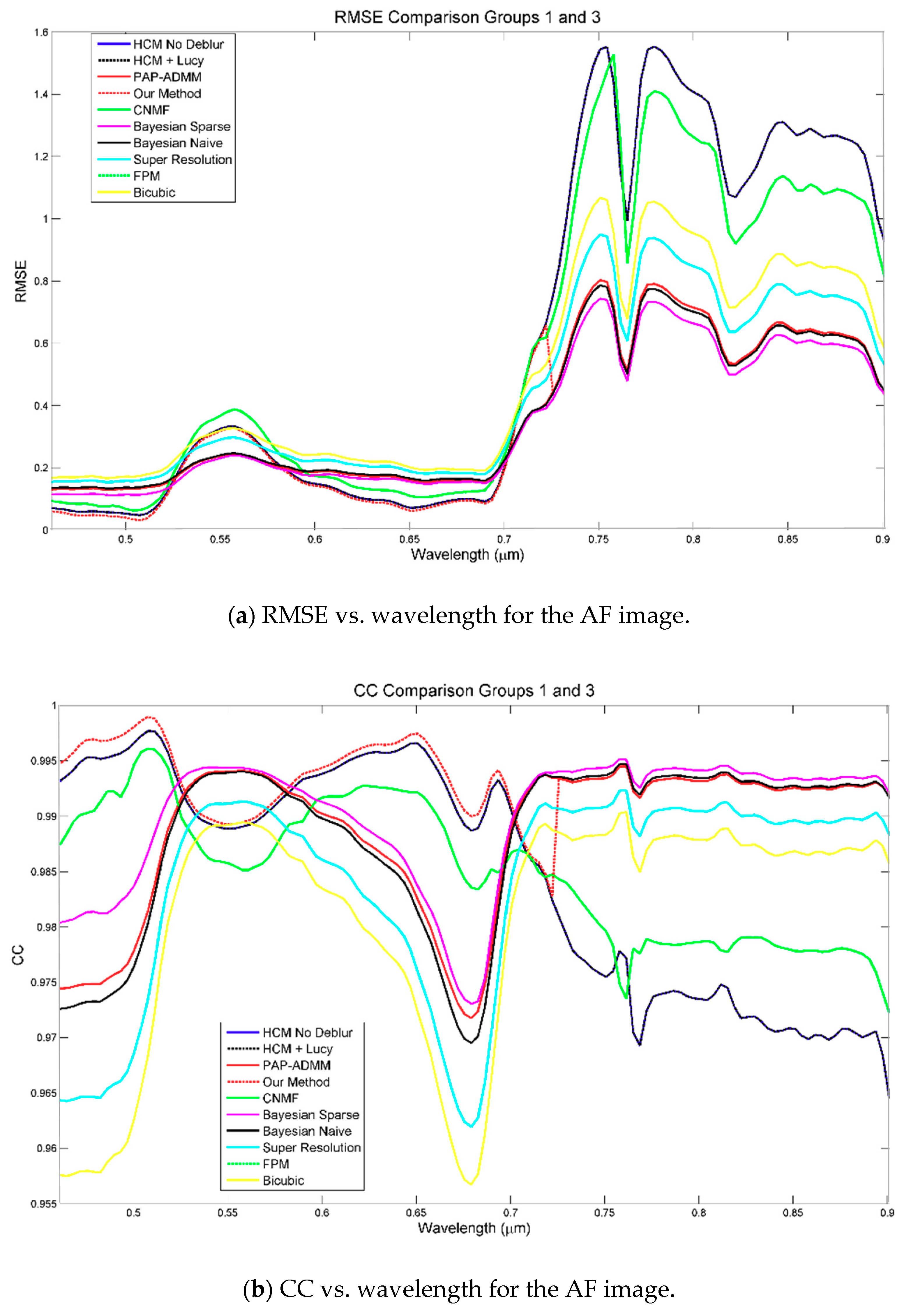

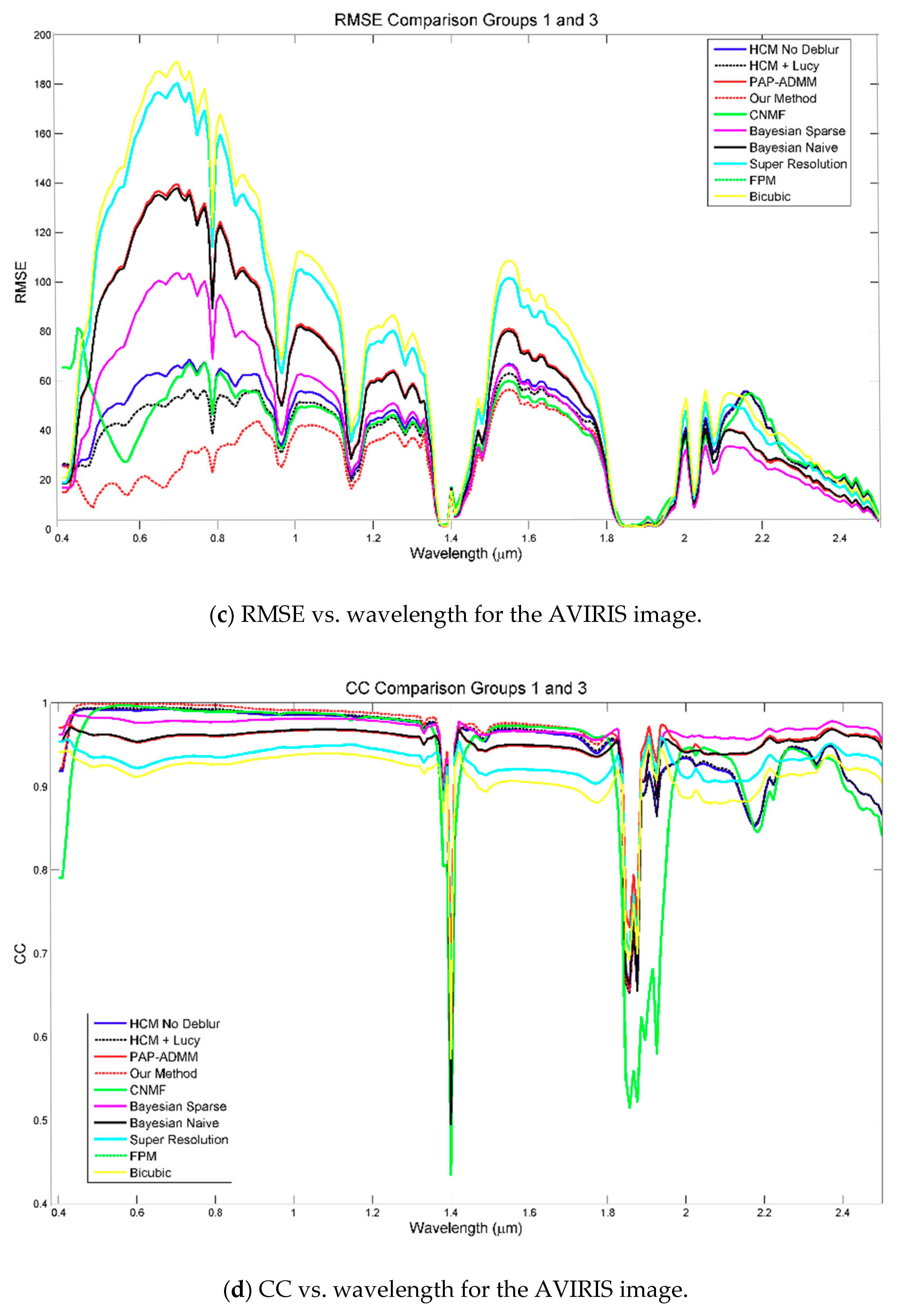

3.4. Comparison with Group 1 and Group 3

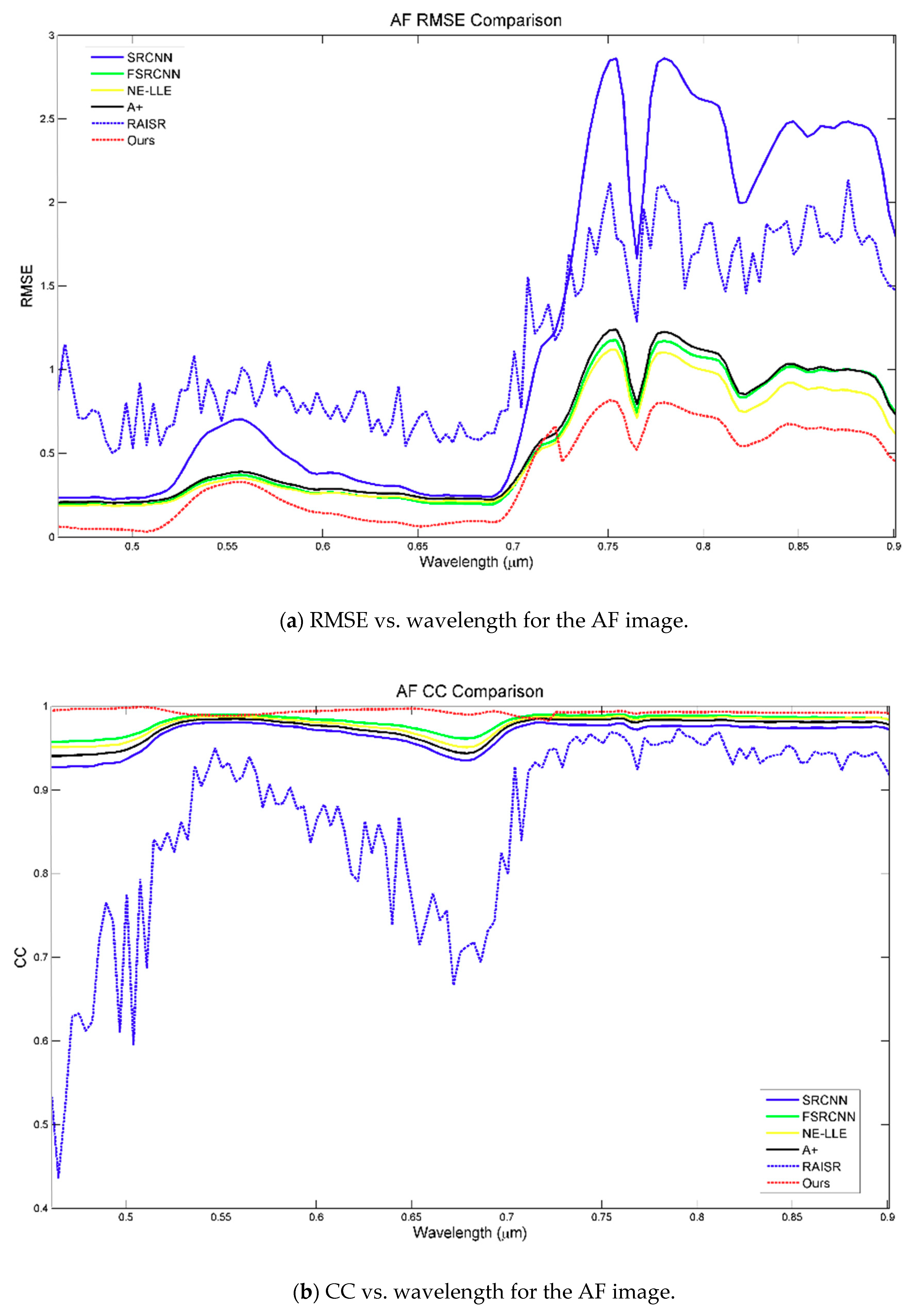

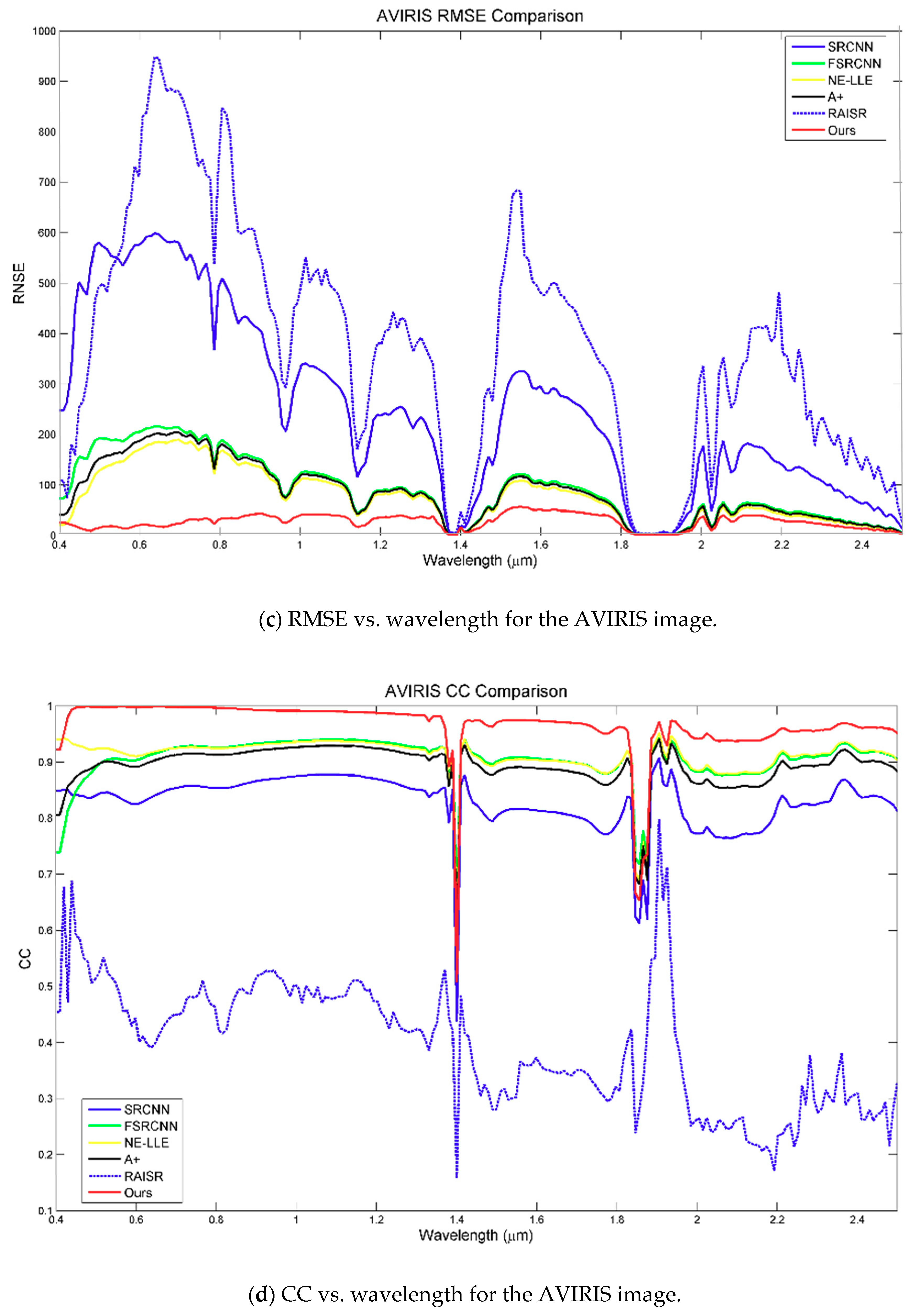

3.5. Comparison with Group 4

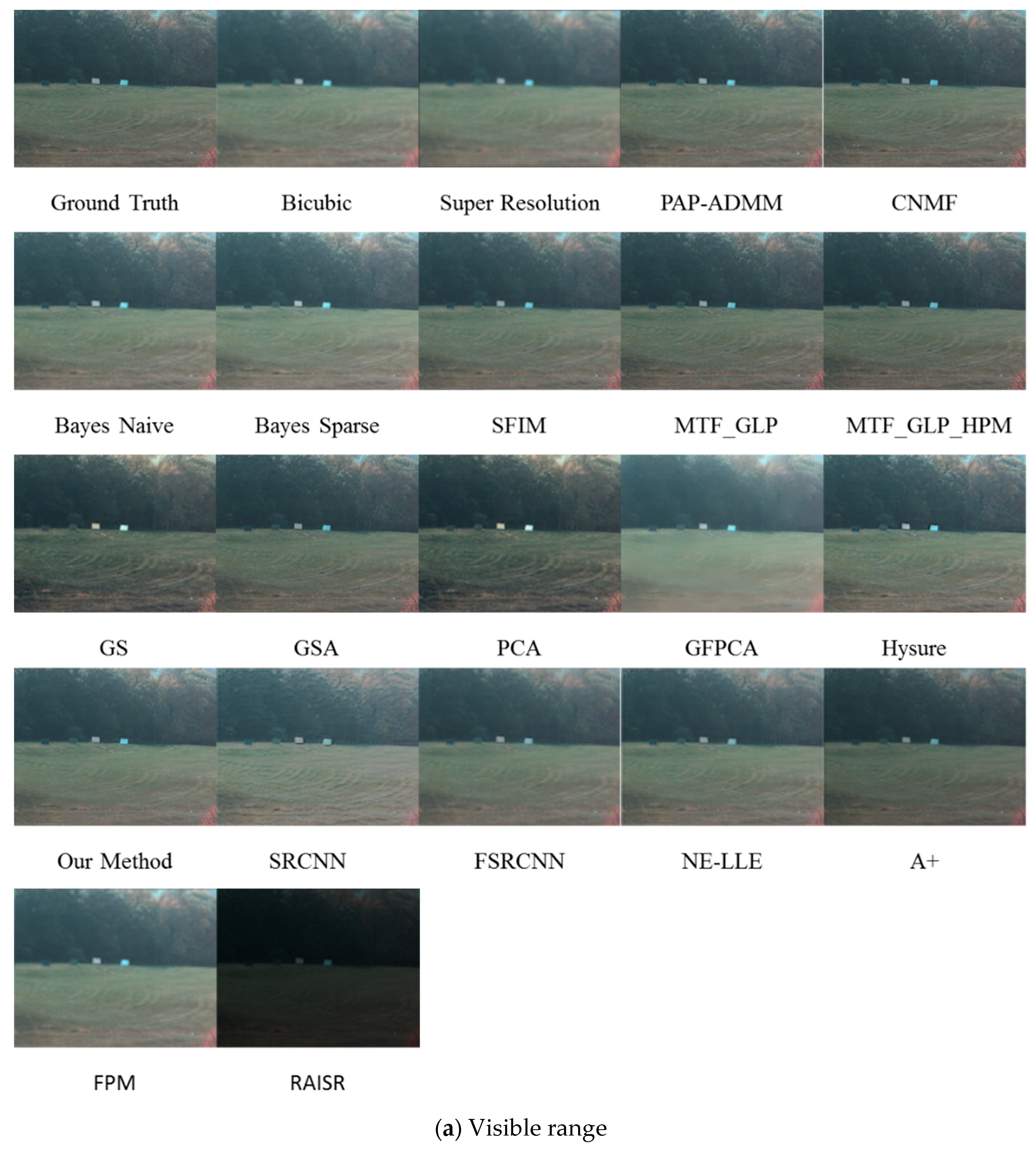

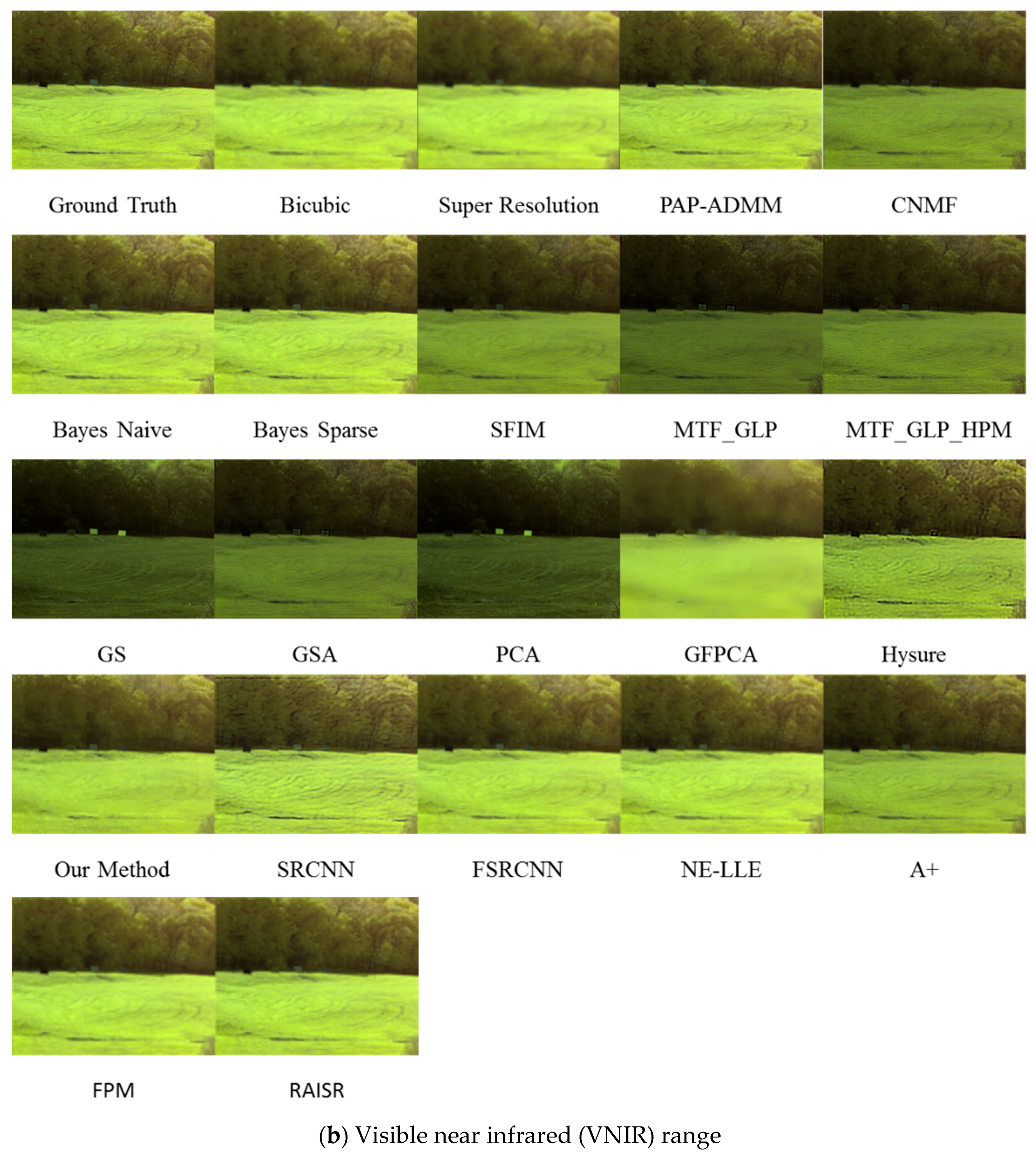

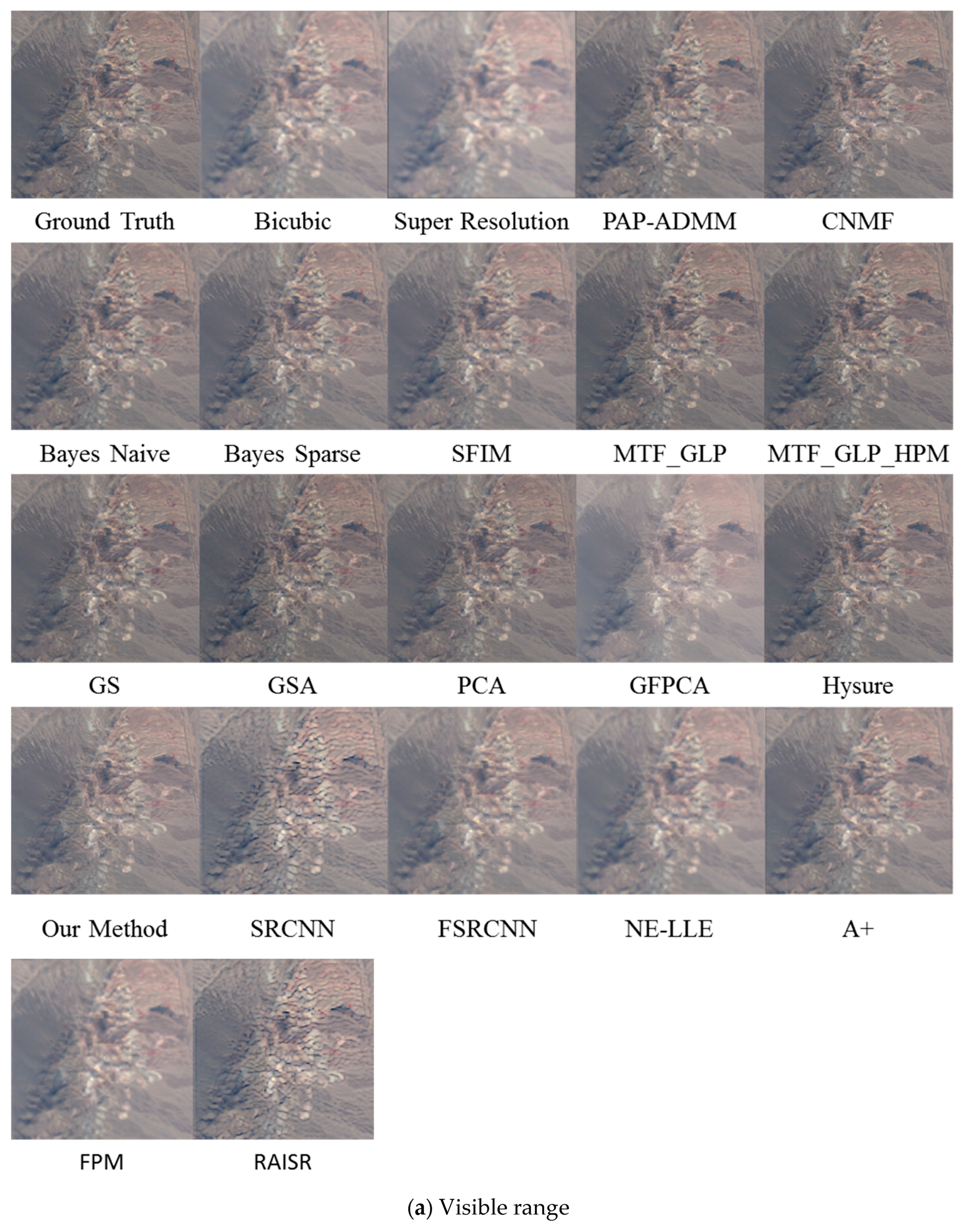

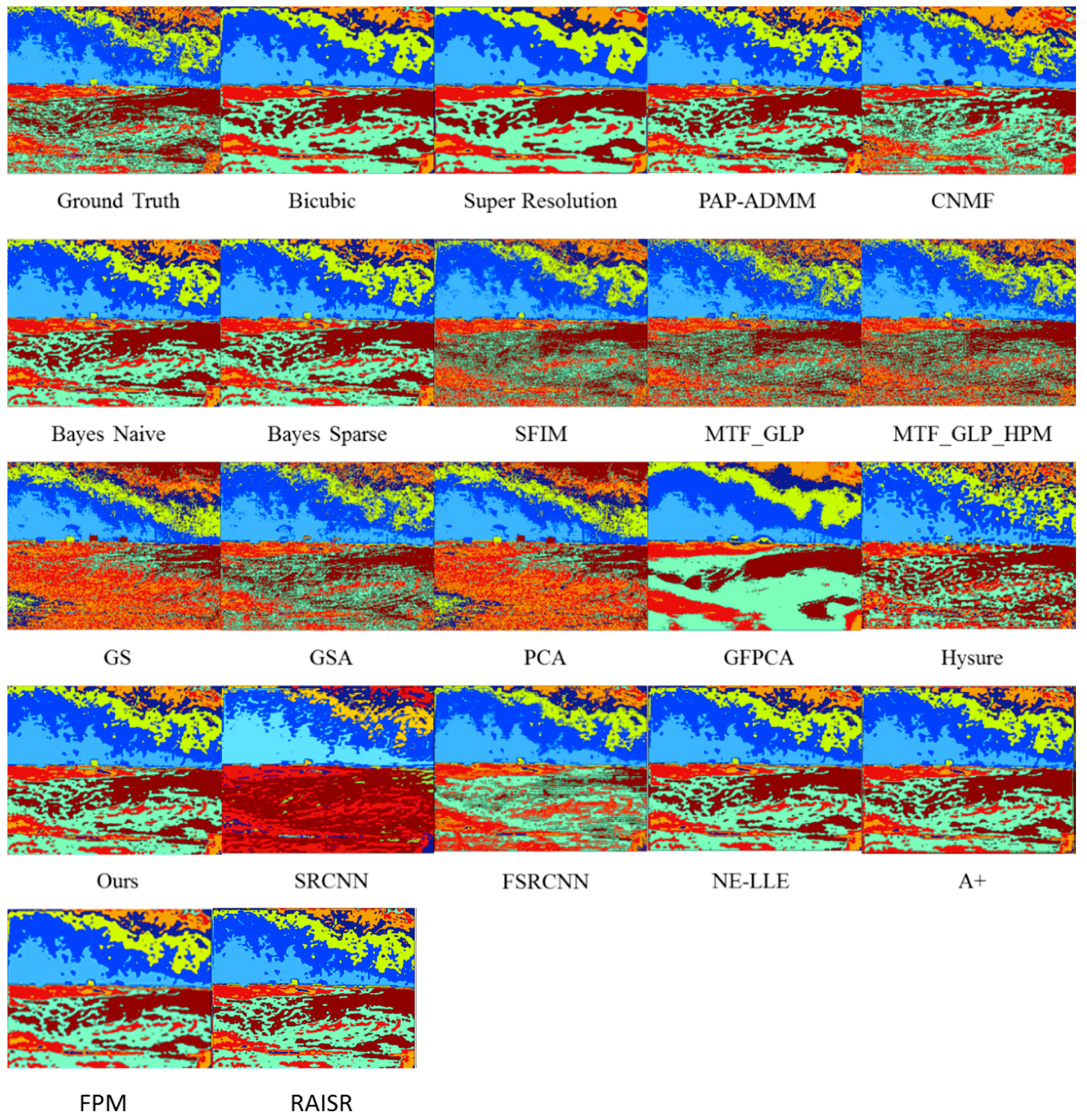

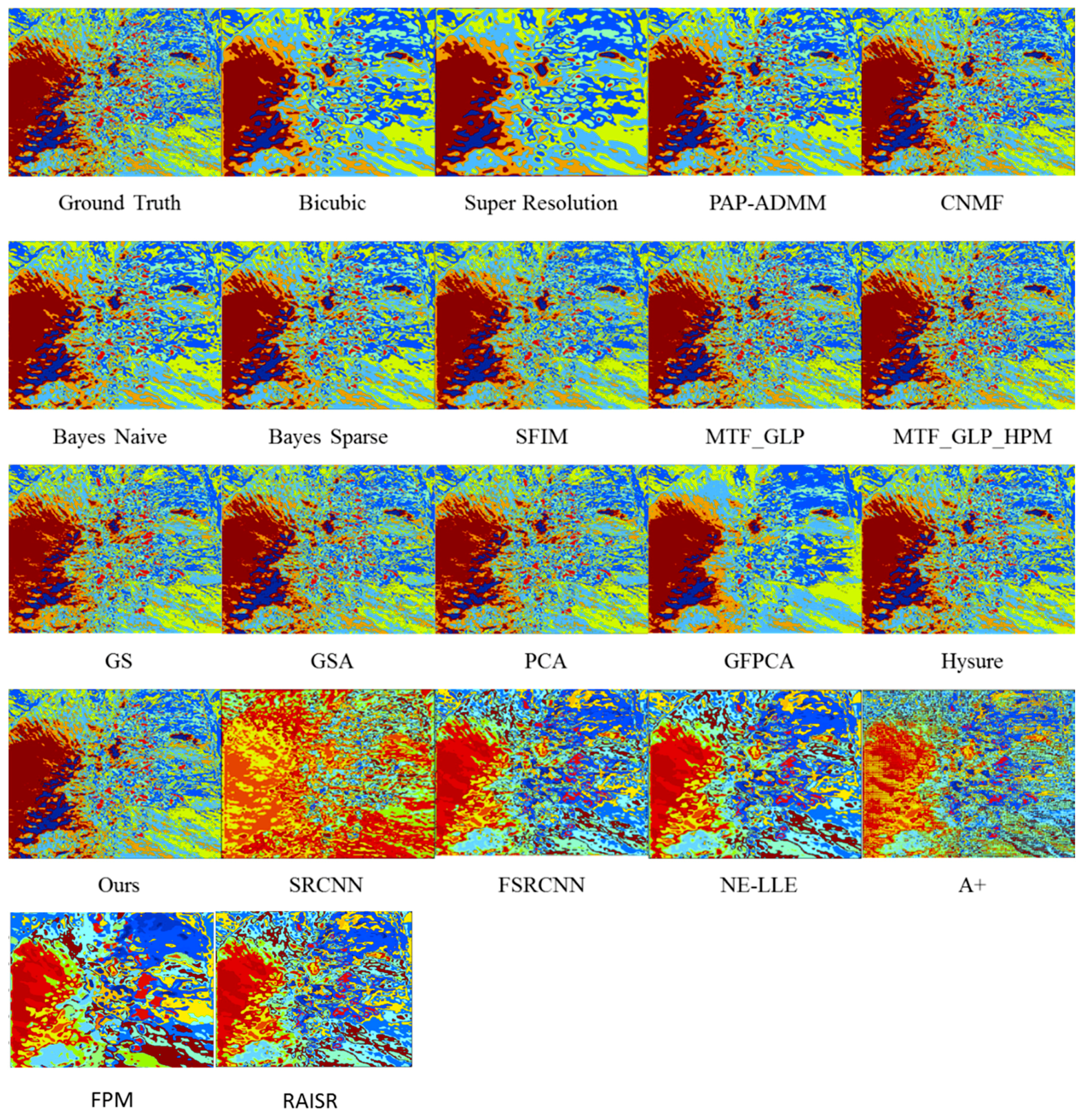

3.6. Visualization of Fused Images Using Different Methods

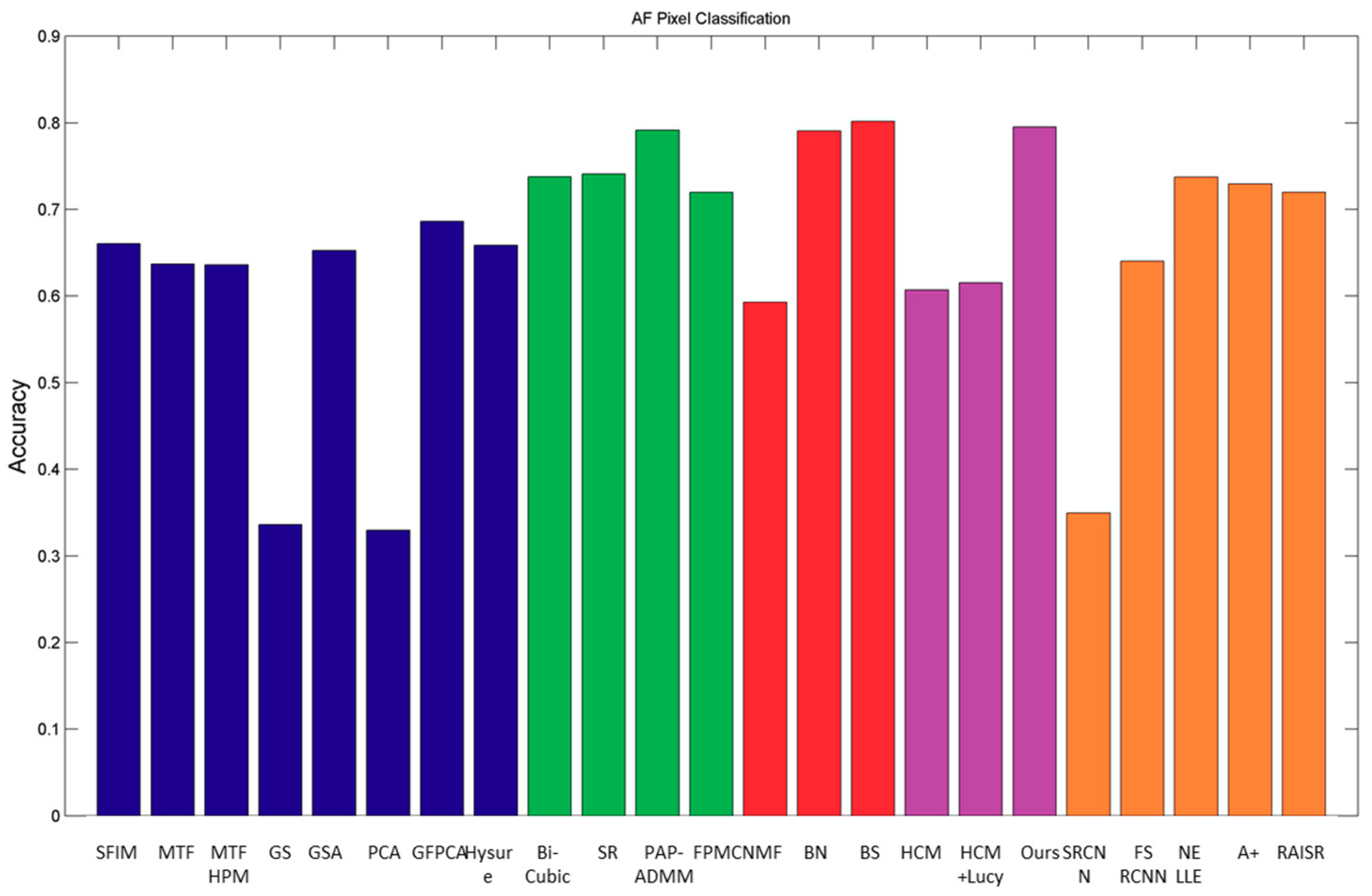

3.7. Performance Comparison of Different Algorithms Using Pixel Clustering

- This study concerns pixel clustering, not land cover classification. Land cover classification normally requires reflectance signatures of the different land covers, and the raw radiance images must be atmospherically compensated to remove atmospheric effects.

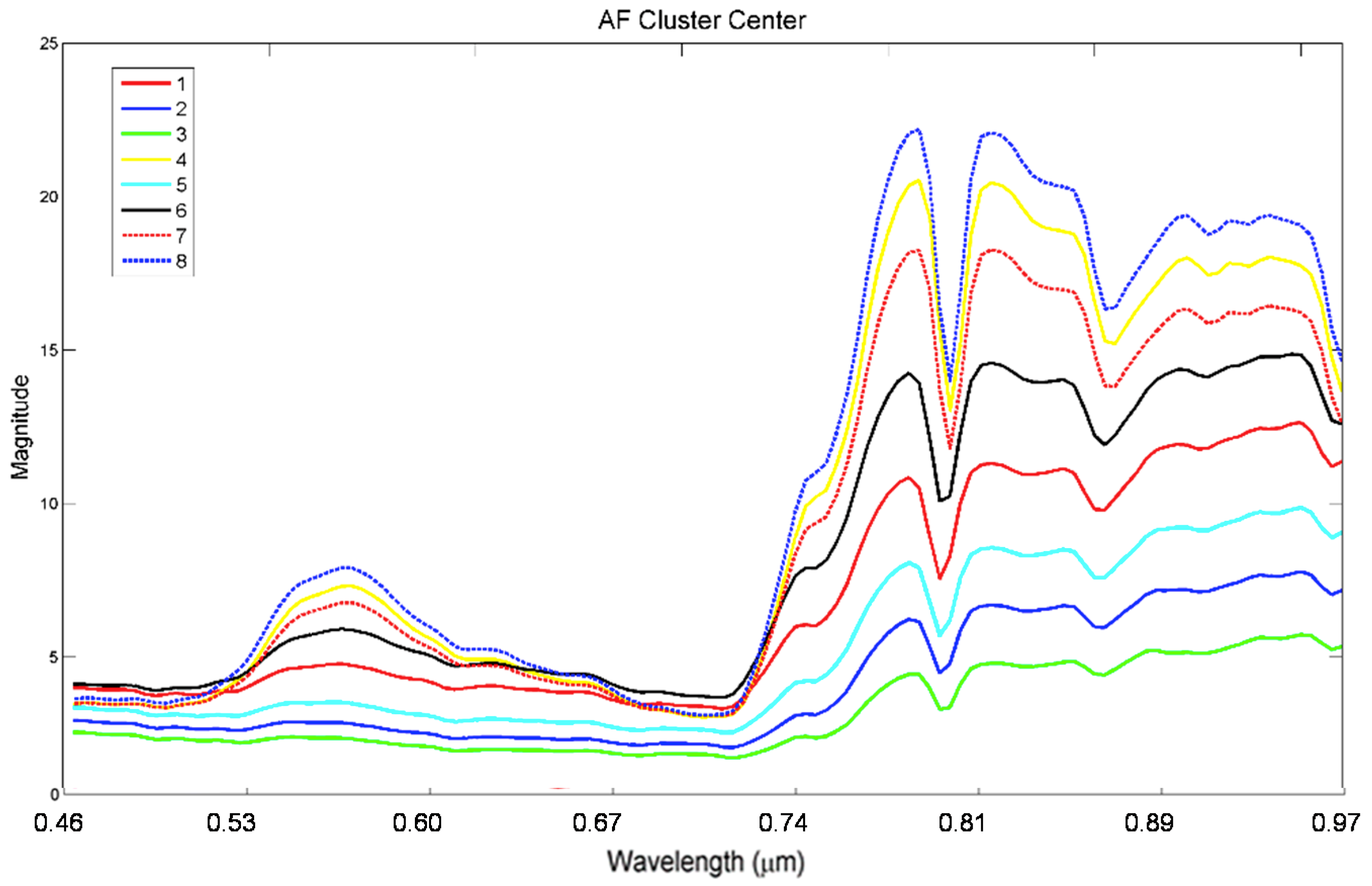

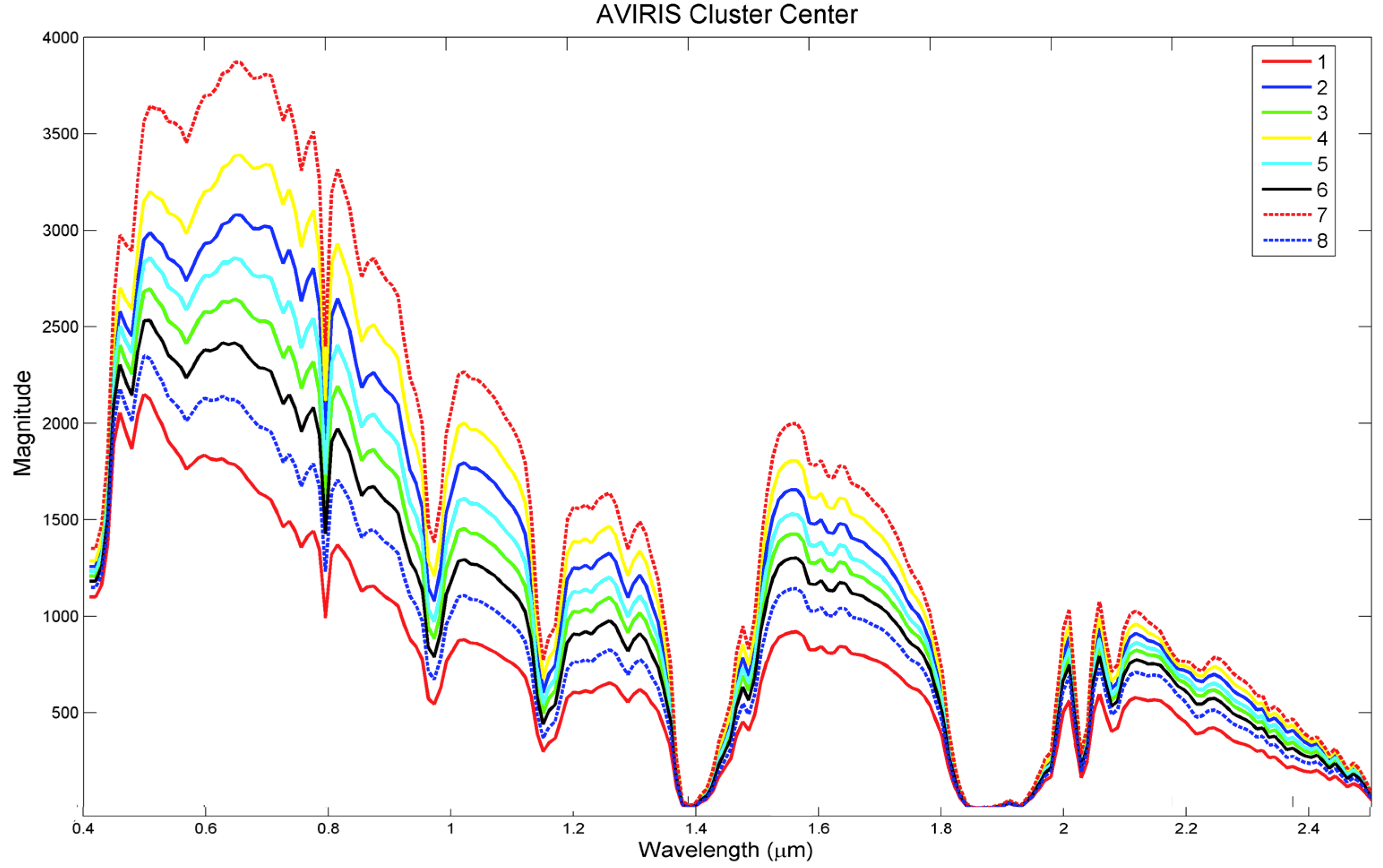

- Because our goal is pixel clustering, we worked directly in the radiance domain without any atmospheric compensation. We performed the clustering with the k-means algorithm, using eight clusters for both the AF and the AVIRIS data sets. Although other numbers could be chosen, we judged that eight clusters adequately represent the variation of pixels in these images. The eight signatures (cluster means) of the AF and AVIRIS data sets are shown in Figure 10 and Figure 11, respectively. As can be seen, the clusters are quite distinct.

- Moreover, since we focus on the pixel clustering performance of the different pansharpening algorithms, the physical meaning or material type of each cluster is not a concern of this study.

- A pixel is assigned to the cluster whose center is nearest, where distance is the Euclidean distance between the two pixel vectors. We chose the Euclidean distance over the spectral angle because some of the cluster means in Figure 10 and Figure 11 have similar spectral shapes. Since the spectral angle ignores differences in magnitude, using it would produce many incorrect assignments.

- We believe that a pansharpening algorithm that preserves spatial and spectral integrity, as measured by RMSE, CC, ERGAS, and SAM, and that also achieves high clustering accuracy should be regarded as a high-performing algorithm.
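The clustering protocol described above can be sketched as follows, assuming each image cube is flattened to an (N, bands) array of radiance spectra. Plain Lloyd-style k-means with random initialization is an assumption, since the text does not name a k-means variant or initialization scheme; the names `kmeans`, `assign_euclidean`, and `assign_angle` are hypothetical. `assign_angle` is included only to show the failure mode mentioned above: two cluster means with the same spectral shape but different magnitudes lie at the same angle, so an angle-based rule cannot tell them apart.

```python
import numpy as np


def assign_euclidean(pixels: np.ndarray, centers: np.ndarray) -> np.ndarray:
    """Label each pixel with the index of its nearest center (Euclidean distance)."""
    d2 = ((pixels[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def assign_angle(pixels: np.ndarray, centers: np.ndarray) -> np.ndarray:
    """Label by smallest spectral angle (largest cosine); ignores magnitude."""
    p = pixels / np.linalg.norm(pixels, axis=1, keepdims=True)
    c = centers / np.linalg.norm(centers, axis=1, keepdims=True)
    return (p @ c.T).argmax(axis=1)


def kmeans(pixels: np.ndarray, k: int = 8, iters: int = 50, seed: int = 0):
    """Plain Lloyd iterations on an (N, bands) array of spectra."""
    pixels = np.asarray(pixels, dtype=float)
    rng = np.random.default_rng(seed)
    centers = pixels[rng.choice(len(pixels), size=k, replace=False)].copy()
    for _ in range(iters):
        labels = assign_euclidean(pixels, centers)
        for j in range(k):
            members = pixels[labels == j]
            if len(members) > 0:  # keep the old center if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers, assign_euclidean(pixels, centers)


# Usage: flatten a (rows, cols, bands) cube into (rows*cols, bands) first.
rng = np.random.default_rng(0)
cube = rng.random((20, 20, 6))
centers, labels = kmeans(cube.reshape(-1, cube.shape[-1]), k=8)
label_map = labels.reshape(cube.shape[:2])

# Two means with the same spectral shape but different magnitudes.
means = np.array([[1.0, 2.0, 3.0], [2.0, 4.0, 6.0]])
pixel = np.array([[1.9, 4.1, 5.9]])
euclid_label = assign_euclidean(pixel, means)[0]  # nearest by distance
angle_label = assign_angle(pixel, means)[0]  # both means share one angle, so this is a tie
```

To compare pansharpening algorithms this way, one would cluster each fused image with the same settings and compare its label map against the one obtained from the reference image.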

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Lee, C.M.; Cable, M.L.; Hook, S.J.; Green, R.O.; Ustin, S.L.; Mandl, D.J.; Middleton, E.M. An introduction to the NASA hyperspectral infrared imager (HyspIRI) mission and preparatory activities. Remote Sens. Environ. 2015, 167, 6–19.

2. Kwan, C.; Yin, J.; Zhou, J.; Chen, H.; Ayhan, B. Fast Parallel Processing Tools for Future HyspIRI Data Processing. In Proceedings of the NASA HyspIRI Science Symposium, Greenbelt, MD, USA, 29–30 May 2013.

3. Zhou, J.; Chen, H.; Ayhan, B.; Kwan, C. A High Performance Algorithm to Improve the Spatial Resolution of HyspIRI Images. In Proceedings of the NASA HyspIRI Science and Applications Workshop, Washington, DC, USA, 16 October 2012.

4. Loncan, L.; de Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M.; et al. Hyperspectral pansharpening: A review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

5. Schowengerdt, R.A. Remote Sensing: Models and Methods for Image Processing; Academic Press: Cambridge, MA, USA, 1997.

6. Kwan, C.; Dao, M.; Chou, B.; Kwan, L.M.; Ayhan, B. Mastcam Image Enhancement Using Estimated Point Spread Functions. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 19–21 October 2017.

7. Chan, S.H.; Wang, X.; Elgendy, O.A. Plug-and-play ADMM for image restoration: Fixed point convergence and applications. IEEE Trans. Comput. Imaging 2017, 3, 84–98.

8. Zhou, J.; Kwan, C.; Budavari, B. Hyperspectral image super-resolution: A hybrid color mapping approach. J. Appl. Remote Sens. 2016, 10, 035024.

9. Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled nonnegative matrix factorization unmixing for hyperspectral and multispectral data fusion. IEEE Trans. Geosci. Remote Sens. 2012, 50, 528–537.

10. Hardie, R.C.; Eismann, M.T.; Wilson, G.L. MAP estimation for hyperspectral image resolution enhancement using an auxiliary sensor. IEEE Trans. Image Process. 2004, 13, 1174–1184.

11. Wei, Q.; Bioucas-Dias, J.; Dobigeon, N.; Tourneret, J.Y. Hyperspectral and multispectral image fusion based on a sparse representation. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3658–3668.

12. Chavez, P.S., Jr.; Sides, S.C.; Anderson, J.A. Comparison of three different methods to merge multiresolution and multispectral data: Landsat TM and SPOT panchromatic. Photogramm. Eng. Remote Sens. 1991, 57, 295–303.

13. Liao, W.; Huang, X.; Coillie, F.V.; Gautama, S.; Pizurica, A.; Philips, W.; Liu, H.; Zhu, T.; Shimoni, M.; Moser, G.; et al. Processing of multiresolution thermal hyperspectral and digital color data: Outcome of the 2014 IEEE GRSS data fusion contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2984–2996.

14. Laben, C.; Brower, B. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6,011,875, 4 January 2000.

15. Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS+Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

16. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-tailored multiscale fusion of high-resolution MS and Pan imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.

17. Vivone, G.; Restaino, R.; Dalla Mura, M.; Licciardi, G.; Chanussot, J. Contrast and error-based fusion schemes for multispectral image pansharpening. IEEE Geosci. Remote Sens. Lett. 2014, 11, 930–934.

18. Simoes, M.; Bioucas-Dias, J.; Almeida, L.B.; Chanussot, J. A convex formulation for hyperspectral image superresolution via subspace-based regularization. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3373–3388.

19. Simoes, M.; Bioucas-Dias, J.; Almeida, L.B.; Chanussot, J. Hyperspectral image superresolution: An edge-preserving convex formulation. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 4166–4170.

20. Liu, J.G. Smoothing filter based intensity modulation: A spectral preserve image fusion technique for improving spatial details. Int. J. Remote Sens. 2000, 21, 3461–3472.

21. Yan, Q.; Xu, Y.; Yang, X.; Truong, T.Q. Single image superresolution based on gradient profile sharpness. IEEE Trans. Image Process. 2015, 24, 3187–3202.

22. Keys, R. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160.

23. Desai, D.B.; Sen, S.; Zhelyeznyakov, M.V.; Alenazi, W.; de Peralta, L.G. Super-resolution imaging of photonic crystals using the dual-space microscopy technique. Appl. Opt. 2016, 55, 3929–3934.

24. Timofte, R.; de Smet, V.; Van Gool, L. A+: Adjusted anchored neighborhood regression for fast super-resolution. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014; pp. 111–126.

25. Chang, H.; Yeung, D.; Xiong, Y. Super-resolution through neighbor embedding. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004.

26. Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 184–199.

27. Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 391–407.

28. Romano, Y.; Isidoro, J.; Milanfar, P. RAISR: Rapid and accurate image super resolution. IEEE Trans. Comput. Imaging 2017, 3, 110–125.

29. Kwan, C.; Choi, J.H.; Chan, S.; Zhou, J.; Budavari, B. Resolution Enhancement for Hyperspectral Images: A Super-Resolution and Fusion Approach. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, New Orleans, LA, USA, 5–9 March 2017; pp. 6180–6184.

30. Kwan, C.; Budavari, B.; Bovik, A.C.; Marchisio, G. Blind Quality Assessment of Fused WorldView-3 Images by Using the Combinations of Pansharpening and Hypersharpening Paradigms. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1835–1839.

31. Kwan, C.; Budavari, B.; Gao, F.; Zhu, X. A Hybrid Color Mapping Approach to Fusing MODIS and Landsat Images for Forward Prediction. Remote Sens. 2018, 10, 520.

32. Kwan, C.; Zhu, X.; Gao, F.; Chou, B.; Perez, D.; Li, J.; Shen, Y.; Koperski, K.; Marchisio, G. Assessment of Spatiotemporal Fusion Algorithms for Worldview and Planet Images. Sensors 2018, 18, 1051.

33. Kwan, C.; Budavari, B.; Dao, M.; Ayhan, B.; Bell, J.F. Pansharpening of Mastcam images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 5117–5120.

34. Dao, M.; Kwan, C.; Ayhan, B.; Bell, J.F. Enhancing Mastcam Images for Mars Rover Mission. In Proceedings of the 14th International Symposium on Neural Networks, Hokkaido, Japan, 21–26 June 2017; pp. 197–206.

35. Kwan, C.; Ayhan, B.; Budavari, B. Fusion of THEMIS and TES for Accurate Mars Surface Characterization. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3381–3384.

36. Kwan, C.; Haberle, C.; Ayhan, B.; Chou, B.; Echavarren, A.; Castaneda, G.; Budavari, B.; Dickenshied, S. On the Generation of High-Spatial and High-Spectral Resolution Images Using THEMIS and TES for Mars Exploration. In Proceedings of the SPIE Defense + Security Conference, Orlando, FL, USA, 10–13 April 2018.

37. Kwan, C.; Chou, B.; Kwan, L.M.; Budavari, B. Debayering RGBW Color Filter Arrays: A Pansharpening Approach. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 19–21 October 2017.

38. Horn, B.K.P.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

39. Barron, J.L.; Fleet, D.J.; Beauchemin, S.S. Performance of optical flow techniques. Int. J. Comput. Vis. 1994, 12, 43–77.

40. Kwan, C.; Budavari, B.; Dao, M.; Zhou, J. New Sparsity Based Pansharpening Algorithm for Hyperspectral Images. In Proceedings of the IEEE Ubiquitous Computing, Electronics & Mobile Communication Conference, New York, NY, USA, 19–21 October 2017.

41. Sreehari, S.; Venkatakrishnan, S.V.; Wohlberg, B.; Drummy, L.F.; Simmons, J.P.; Bouman, C.A. Plug-and-play priors for bright field electron tomography and sparse interpolation. IEEE Trans. Comput. Imaging 2016, 2, 408–423.

42. Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

43. Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

44. Zhou, J.; Kwan, C.; Ayhan, B.; Eismann, M. A Novel Cluster Kernel RX Algorithm for Anomaly and Change Detection Using Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6497–6504.

45. Hook, S.J.; Rast, M. Mineralogic mapping using airborne visible infrared imaging spectrometer (AVIRIS), shortwave infrared (SWIR) data acquired over Cuprite, Nevada. In Proceedings of the Second Airborne Visible Infrared Imaging Spectrometer (AVIRIS) Workshop, Pasadena, CA, USA, 4–5 June 1990; pp. 199–207.

46. Fish, D.A.; Walker, J.G.; Brinicombe, A.M.; Pike, E.R. Blind deconvolution by means of the Richardson-Lucy algorithm. J. Opt. Soc. Am. A 1995, 12, 58–65.

| Methods | RMSE | CC | SAM | ERGAS |
|---|---|---|---|---|
| PAP-ADMM [7] | 66.2481 | 0.9531 | 0.7848 | 1.9783 |
| HCM [8] | 44.3475 | 0.9492 | 0.9906 | 2.0302 |
| Proposed | 30.1907 | 0.9672 | 0.9008 | 1.7205 |

| Group | Methods | AF Time | AF RMSE | AF CC | AF SAM | AF ERGAS | AVIRIS Time | AVIRIS RMSE | AVIRIS CC | AVIRIS SAM | AVIRIS ERGAS |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | CNMF [9] | 12.5225 | 0.5992 | 0.9922 | 1.4351 | 1.7229 | 23.7472 | 32.2868 | 0.9456 | 0.9590 | 2.1225 |
|  | Bayes Naïve [10] | 0.5757 | 0.4357 | 0.9881 | 1.2141 | 1.6588 | 0.8607 | 67.2879 | 0.9474 | 0.8137 | 2.1078 |
|  | Bayes Sparse [11] | 208.8200 | 0.4133 | 0.9900 | 1.2395 | 1.5529 | 235.4995 | 51.7010 | 0.9619 | 0.7635 | 1.8657 |
| 2 | SFIM [20] | 0.9862 | 0.7176 | 0.9846 | 1.5014 | 2.2252 | 1.5615 | 63.7443 | 0.9469 | 0.9317 | 2.0790 |
|  | MTF GLP [16] | 1.3806 | 0.8220 | 0.9829 | 1.6173 | 2.4702 | 2.2464 | 57.5260 | 0.9524 | 0.9254 | 2.0103 |
|  | MTF GLP HPM [17] | 1.3982 | 0.8096 | 0.9833 | 1.5540 | 2.4387 | 2.2250 | 57.5618 | 0.9524 | 0.9201 | 2.0119 |
|  | GS [14] | 1.0496 | 2.1787 | 0.8578 | 2.4462 | 7.0827 | 1.8252 | 54.9411 | 0.9554 | 0.9420 | 1.9609 |
|  | GSA [15] | 1.2079 | 0.7485 | 0.9875 | 1.5212 | 2.1898 | 1.9784 | 32.4501 | 0.9695 | 0.8608 | 1.6660 |
|  | PCA [12] | 2.3697 | 2.3819 | 0.8382 | 2.6398 | 7.7194 | 2.9788 | 48.9916 | 0.9603 | 0.9246 | 1.8706 |
|  | GFPCA [13] | 1.1724 | 0.6478 | 0.9862 | 1.5370 | 2.0573 | 2.1686 | 61.9038 | 0.9391 | 1.1720 | 2.2480 |
|  | Hysure [18,19] | 117.0571 | 0.8683 | 0.9810 | 1.7741 | 2.6102 | 62.4685 | 38.8667 | 0.9590 | 1.0240 | 1.8667 |
| 2* | SFIM [20] | 0.9743 | 0.6324 | 0.9901 | 1.3449 | 1.8881 | 1.5327 | 37.0572 | 0.9737 | 0.7205 | 1.5931 |
|  | MTF GLP [16] | 1.3482 | 0.7420 | 0.9882 | 1.4738 | 2.1628 | 2.1313 | 26.4199 | 0.9772 | 0.6975 | 1.5132 |
|  | MTF GLP HPM [17] | 1.4132 | 0.7255 | 0.9887 | 1.4020 | 2.1171 | 2.1364 | 26.5246 | 0.9772 | 0.6969 | 1.5159 |
|  | GS [14] | 1.0689 | 2.1660 | 0.8590 | 2.3500 | 7.0568 | 1.6384 | 54.1610 | 0.9624 | 0.8324 | 1.8748 |
|  | GSA [15] | 1.2013 | 0.6572 | 0.9896 | 1.3541 | 1.9617 | 2.0012 | 42.8342 | 0.9698 | 0.7686 | 1.6734 |
|  | PCA [12] | 2.3945 | 2.3755 | 0.8387 | 2.5490 | 7.7105 | 2.9703 | 48.0821 | 0.9680 | 0.8109 | 1.7678 |
|  | GFPCA [13] | 1.1923 | 0.6754 | 0.9837 | 1.5688 | 2.1893 | 1.9354 | 73.6587 | 0.9362 | 1.2344 | 2.4518 |
|  | Hysure [18,19] | 119.4377 | 0.8101 | 0.9832 | 1.6748 | 2.4467 | 66.0869 | 38.8677 | 0.9544 | 1.0355 | 1.9516 |
| 3 | PAP-ADMM [7] | 21,440.000 | 0.4308 | 0.9889 | 1.1622 | 1.6149 | 3368.0000 | 66.2481 | 0.9531 | 0.7848 | 1.9783 |
|  | Super Resolution [21] | 279.1789 | 0.5232 | 0.9839 | 1.3215 | 1.9584 | 1329.5920 | 86.7154 | 0.9263 | 0.9970 | 2.4110 |
|  | Bicubic [22] | 0.0412 | 0.5852 | 0.9807 | 1.3554 | 2.1560 | 0.1038 | 92.2143 | 0.9118 | 1.0369 | 2.5728 |
|  | FPM [23] | 23.7484 | 0.5851 | 0.9804 | 1.3551 | 2.1555 | 52.63799 | 92.2141 | 0.9117 | 1.0369 | 2.5728 |
| 4 | SRCNN [26] | 503.1755 | 1.5649 | 0.9663 | 2.8086 | 4.7609 | 4897.0460 | 303.9198 | 0.8291 | 1.5628 | 7.4368 |
|  | FSRCNN [27] | 470.0645 | 0.6637 | 0.9814 | 1.4091 | 2.3852 | 836.1380 | 109.2260 | 0.9052 | 1.0936 | 2.8898 |
|  | NE+LLE [25] | 27,848.000 | 0.6130 | 0.9779 | 1.4018 | 2.2859 | 44,722.000 | 92.3977 | 0.9106 | 1.0423 | 2.5791 |
|  | A+ [24] | 40.5783 | 0.6872 | 0.9733 | 1.4156 | 2.5288 | 65.9070 | 100.9513 | 0.8925 | 1.0473 | 2.7643 |
|  | RAISR [28] | 1287.2375 | 1.2830 | 0.8611 | 3.6061 | 6.0723 | 2341.1421 | 373.7005 | 0.3947 | 1.4193 | 13.0819 |
| Ours | HCM no deblur [8] | 0.5900 | 0.5812 | 0.9908 | 1.4223 | 1.7510 | 1.5000 | 44.3474 | 0.9492 | 0.9906 | 2.0302 |
|  | HCM Lucy [46] | 1.2000 | 0.6009 | 0.9879 | 1.3950 | 1.9308 | 1.5000 | 37.2436 | 0.9518 | 0.9683 | 1.9720 |
|  | Our method | 0.5900 | 0.4151 | 0.9956 | 1.1442 | 1.2514 | 1.5000 | 30.1907 | 0.9672 | 0.9008 | 1.7205 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kwan, C.; Choi, J.H.; Chan, S.H.; Zhou, J.; Budavari, B. A Super-Resolution and Fusion Approach to Enhancing Hyperspectral Images. Remote Sens. 2018, 10, 1416. https://doi.org/10.3390/rs10091416
