Hyperspectral Target Detection via Adaptive Information-Theoretic Metric Learning with Local Constraints

Abstract

1. Introduction

- The proposed ITML-ALC algorithm can detect targets from only a limited number of target samples. It does not need the specific assumptions that traditional target detection methods rely on.

- ITML-ALC requires only one parameter to be tuned, and the detection results are relatively stable across different values of this parameter.

- ITML-ALC preserves locality information and improves detection performance by considering two things: the threshold, and the change in distances before and after metric learning. Existing metric learning-based methods instead use a fixed threshold to make decisions.

2. Methods

2.1. Related Work

2.2. Combining ITML and Adaptive Local Constraints

2.3. Final Sketch of ITML-ALC Algorithm

| Algorithm 1: Procedure of ITML-ALC |
|---|
| Input: a set of pairwise training data points and the trade-off parameter |
| Output: the metric matrix |
| Step (1): Initialization: input the Mahalanobis matrix and the initialized slack variable , , , . Set: , . |
| Step (2): Repeat {main loop} (a) (b) , (c) (d) (e) (f) |
| Until convergence is attained |
| Return: the metric matrix |
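Algorithm 1 builds on the information-theoretic metric learning (ITML) procedure of Davis et al. [31]. As a reference point only, the Python/NumPy sketch below implements the baseline ITML loop of cyclic Bregman projections with fixed distance bounds. It does not implement the adaptive local constraints that distinguish ITML-ALC.

The following are illustrative assumptions, not taken from the paper:

- The function `itml`, its arguments, and the bounds `u` (similar pairs) and `l` (dissimilar pairs).
- The role of `gamma`, which is ITML's slack trade-off parameter. We assume, without confirmation from the text, that it plays a role similar to the trade-off parameter of Algorithm 1.

The step factor `gamma / (gamma + 1)` makes each update an exact projection onto the slack-relaxed constraint.

```python
import numpy as np


def itml(X, pairs, u, l, gamma=1.0, A0=None, max_iter=100, tol=1e-6):
    """Baseline ITML via cyclic Bregman projections (no local constraints).

    X      : (n, d) array of samples, e.g. pixel spectra.
    pairs  : list of (i, j, delta); delta = +1 for a similar pair
             (goal: squared distance <= u), -1 for a dissimilar pair
             (goal: squared distance >= l).
    gamma  : finite, positive slack trade-off; larger = stricter bounds.
    """
    d = X.shape[1]
    A = np.eye(d) if A0 is None else np.array(A0, dtype=float)
    lam = np.zeros(len(pairs))                          # dual variables
    xi = np.array([u if delta == 1 else l for _, _, delta in pairs],
                  dtype=float)                          # slack-adjusted bounds
    step = gamma / (gamma + 1.0)
    for _ in range(max_iter):
        A_prev = A.copy()
        for c, (i, j, delta) in enumerate(pairs):
            v = X[i] - X[j]
            p = v @ A @ v                               # current squared distance
            if p < 1e-12:                               # identical points: skip
                continue
            alpha = min(lam[c], delta * step * (1.0 / p - 1.0 / xi[c]))
            beta = delta * alpha / (1.0 - delta * alpha * p)
            xi[c] = gamma * xi[c] / (gamma + delta * alpha * xi[c])
            lam[c] -= alpha
            Av = A @ v
            A = A + beta * np.outer(Av, Av)             # rank-one LogDet update, stays symmetric
        if np.linalg.norm(A - A_prev) <= tol * np.linalg.norm(A_prev):
            break
    return A


# Toy example: one similar pair that is too far apart and one
# dissimilar pair that is too close, along orthogonal axes.
X = np.array([[0.0, 0.0], [2.0, 0.0], [0.0, 0.0], [0.0, 0.5]])
A = itml(X, [(0, 1, +1), (2, 3, -1)], u=1.0, l=2.0, gamma=1.0)
v_sim, v_dis = X[0] - X[1], X[2] - X[3]
print(round(float(v_sim @ A @ v_sim), 4))  # 1.6    (was 4.0)
print(round(float(v_dis @ A @ v_dis), 4))  # 0.4444 (was 0.25)
```

In the toy example the similar pair's squared distance shrinks from 4 to 1.6, and the dissimilar pair's grows from 0.25 to 4/9. With `gamma = 1` each distance moves only part of the way toward its bound; in this sketch a larger `gamma` enforces the bounds more strictly.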

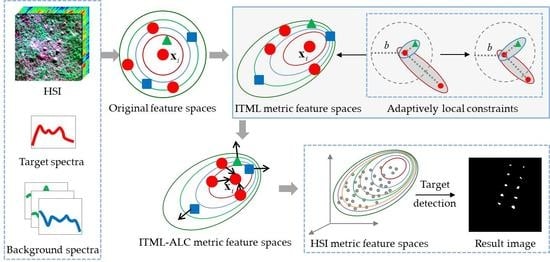

2.4. Workflow of ITML-ALC Algorithm

3. Experimental Analysis and Results

3.1. Dataset Description

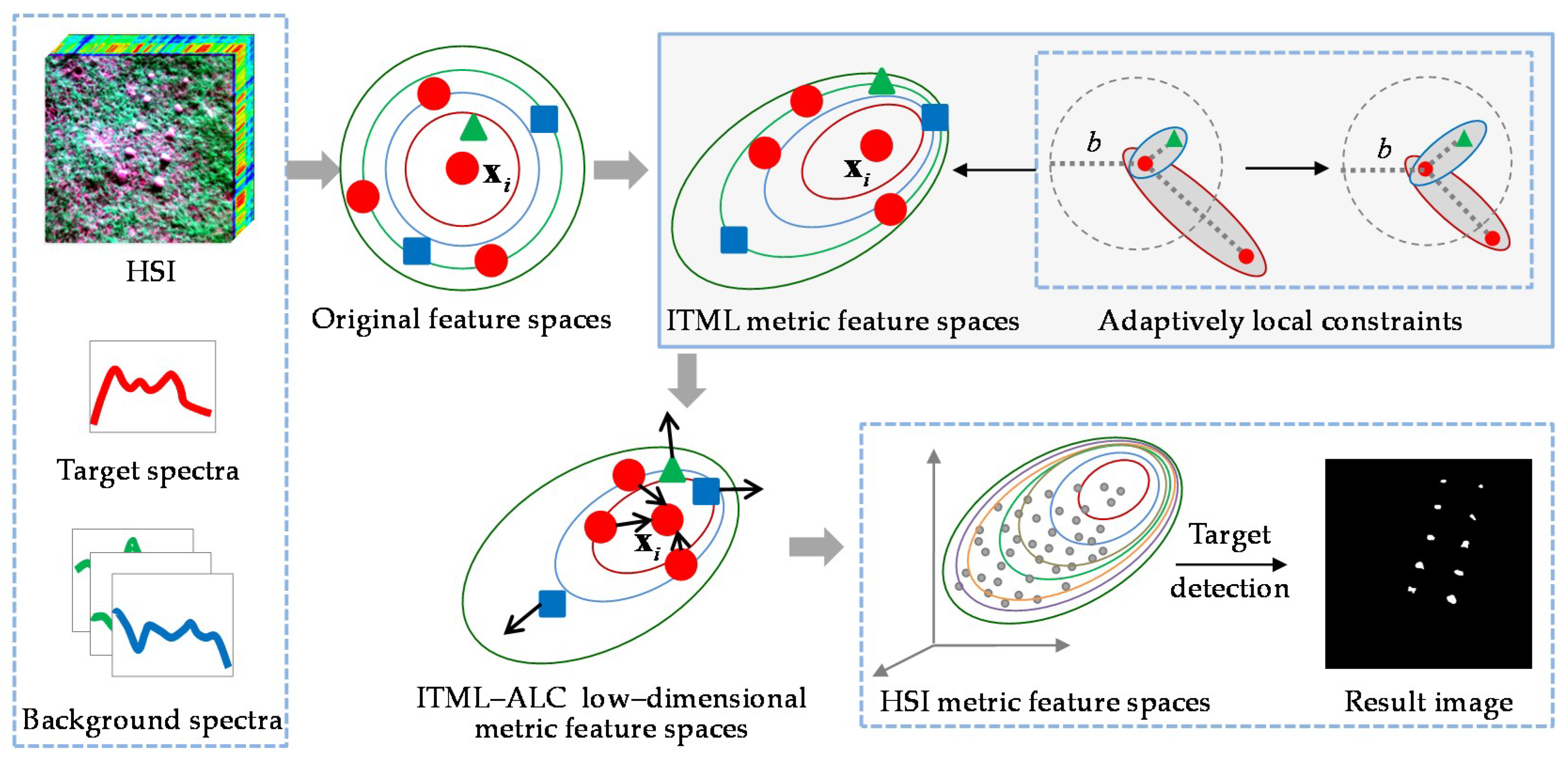

- (1)

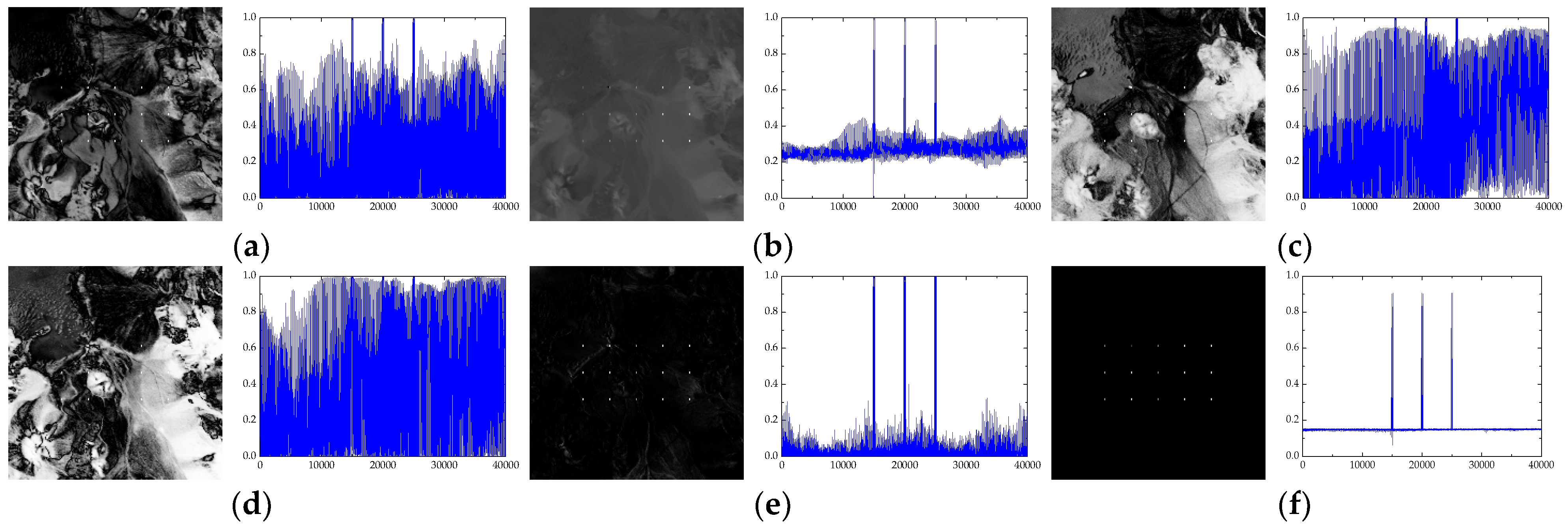

- AVIRIS LCVF dataset: This image was acquired by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor, operated by National Aeronautics and Space Administration (NASA), USA, covering the Lunar Crater Volcanic Field (LCVF) in Northern Nye County, NV, USA. This dataset is a synthetic dataset and is available from the website of NASA website. Many studies used this dataset for HSI processing [38,39]. The spatial resolution of the LCVF image is 20 m per pixel, and the spectral resolution of the image is 5 nm, with 224 spectral channels in wavelengths ranging from 370 to 2510 nm. An area of pixels is used for the experiments, as shown in Figure 2a, including red oxidized basaltic cinders, rhyolite, playa (dry lakebed), shade, and vegetation. We implant the alunite spectrum, which is obtained from the U.S. Geological Survey (USGS) digital spectral library, into the image for simulating target detection in the considered scene. Figure 2b shows corresponding locations of the implanted target panels. The added target panels have the same size, i.e., two pixels for each target panel, and the detailed coordinates of all 30 target pixels are given in Table 1. In this table, all the implanted target pixels are mixed pixels, and each spectrum of the HSI is mixed with the pure prior target spectrum and the original background spectra by the following equation: , where is the implanted fraction, which varies from 10% to 50%, as indicated in Table 1. The adopted pure target spectrum and some representative background samples spectra (denoted as A to H) are shown in Figure 2c, and the locations of the background samples given in Figure 2c are highlighted in Figure 2d.
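The implantation described above can be sketched in Python/NumPy. This is a minimal illustration, not the authors' code, and it rests on these assumptions:

- The cube is stored as (lines, samples, bands).
- The Table 1 indices can be used directly as 0-based array indices; the text does not say which convention applies.
- The cube and spectrum are random placeholders, because the actual subimage size and data are not reproduced here.
- The names `implant_targets`, `SAMPLE_FRACTIONS`, and `LINE_PAIRS` are hypothetical.

```python
import numpy as np

# Table 1 (as read here): one implanted fraction per sample index, and three
# two-pixel panels per sample at the listed line pairs.
SAMPLE_FRACTIONS = {50: 0.10, 75: 0.20, 100: 0.30, 125: 0.40, 150: 0.50}
LINE_PAIRS = [(75, 76), (100, 101), (125, 126)]


def implant_targets(cube, target, sample_fractions=SAMPLE_FRACTIONS,
                    line_pairs=LINE_PAIRS):
    """Mix `target` into `cube` (lines x samples x bands) at the Table 1
    pixels using z = f * t + (1 - f) * b. Returns (new_cube, target_mask)."""
    out = cube.astype(float, copy=True)
    mask = np.zeros(cube.shape[:2], dtype=bool)
    for sample, f in sample_fractions.items():
        for pair in line_pairs:
            for line in pair:
                b = cube[line, sample, :]
                out[line, sample, :] = f * target + (1.0 - f) * b
                mask[line, sample] = True
    return out, mask


# Hypothetical placeholders: a random 160 x 160 x 224 cube and a random
# spectrum stand in for the LCVF subimage and the USGS alunite spectrum.
rng = np.random.default_rng(0)
cube = rng.random((160, 160, 224))
alunite = rng.random(224)
implanted, truth = implant_targets(cube, alunite)
print(int(truth.sum()))  # 30 target pixels
```

The returned boolean mask doubles as the ground truth for evaluating a detector on the implanted scene.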

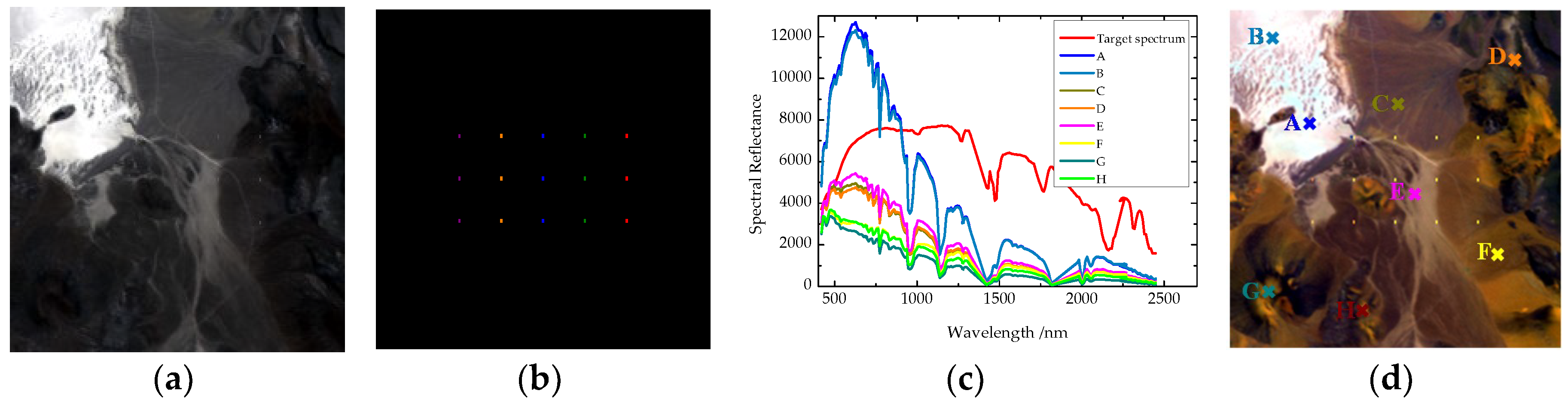

- (2) AVIRIS San Diego airport dataset: This image, which covers an airport in the San Diego region, CA, USA, was acquired by the AVIRIS sensor and is shown in Figure 3a [40]. Its spatial resolution is 3.5 m per pixel, and it has 224 spectral channels covering wavelengths from 370 to 2510 nm. Some bands are removed because they correspond to water-absorption regions, have a low signal-to-noise ratio (SNR), or are bad (bands 1–6, 33–35, 97, 107–113, 153–166, and 221–224). The remaining 189 bands are used in the experiments. An area of pixels is used for the experiments. It includes roofs, bare soil, grass, road, airstrip, and shadow, and therefore contains more complicated background land-cover classes. The targets of interest are three airplanes, comprising 58 target pixels, which are marked by the white target mask in Figure 3b. Figure 3c shows the mean target spectrum and the spectra of some representative background samples. We use the spectra at the centers of the airplanes as the a priori target spectra and randomly select eight background spectra as the background samples.
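As a quick arithmetic check of the band list, the removed ranges cover 6 + 3 + 1 + 7 + 14 + 4 = 35 bands, leaving 224 - 35 = 189. The Python snippet below builds the corresponding 0-based band indices. It assumes the listed band numbers are 1-based, and the helper `kept_band_indices` is hypothetical. The result could index the spectral axis of a NumPy cube, e.g. `cube[:, :, kept]`.

```python
# Water-absorption, low-SNR, and bad bands listed in the text (1-based, inclusive).
REMOVED_RANGES = [(1, 6), (33, 35), (97, 97), (107, 113), (153, 166), (221, 224)]
N_BANDS = 224


def kept_band_indices(n_bands=N_BANDS, removed=REMOVED_RANGES):
    """Return 0-based indices of the bands that remain after removal."""
    removed_set = {b for lo, hi in removed for b in range(lo, hi + 1)}
    return [b - 1 for b in range(1, n_bands + 1) if b not in removed_set]


kept = kept_band_indices()
print(len(kept))  # 189
```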

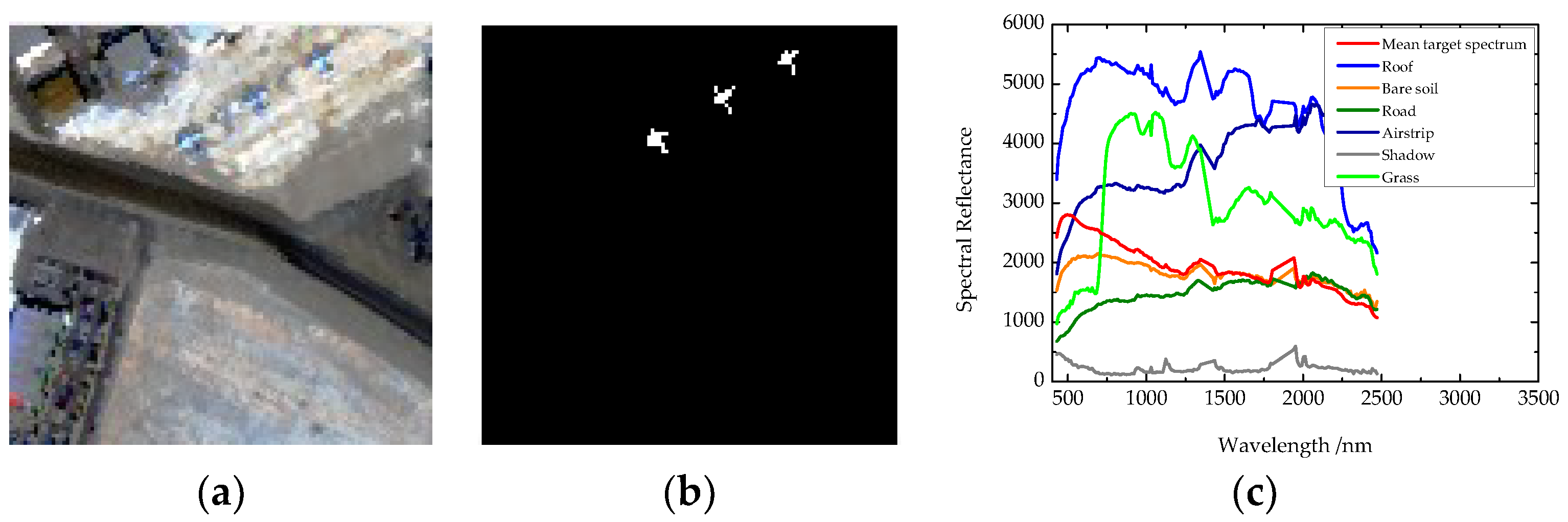

- (3) HYDICE urban dataset: This image was acquired by the Hyperspectral Digital Imagery Collection Experiment (HYDICE) airborne sensor, sponsored by the U.S. Navy's Space and Naval Warfare Systems Command. It covers a suburban residential area [41,42], as illustrated in Figure 4a. The scene consists mainly of grass fields with some forest. The remainder contains a parking lot with some vehicles, a residential area, and a roadway with vehicles on it. The spatial resolution of the image is approximately 3 m, and its spectral resolution is about 10 nm. The scene covers an area of pixels, with 210 spectral bands covering wavelengths from 400 to 2500 nm. The targets of interest are the vehicles in the parking lot and on the road, which comprise 17 pixels, as shown in Figure 4b. The mean target spectrum and the spectra of some representative background samples are shown in Figure 4c. We randomly select one target spectrum as the a priori target spectrum and seven background spectra as the background samples.

3.2. Parameter Analysis

3.3. Detection Results and Validation

4. Discussion

- (1)

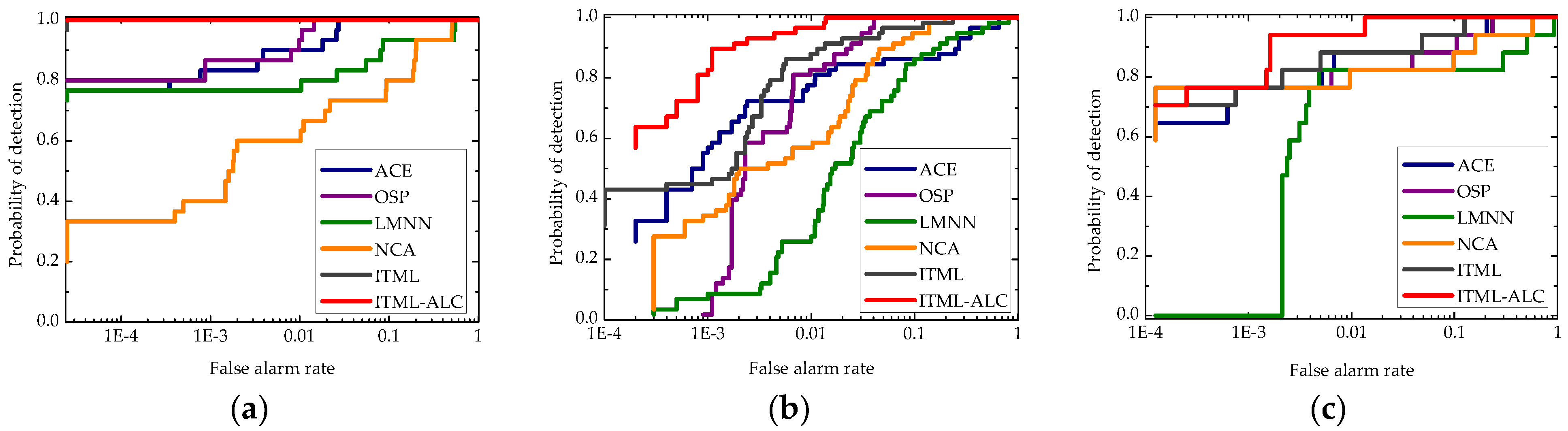

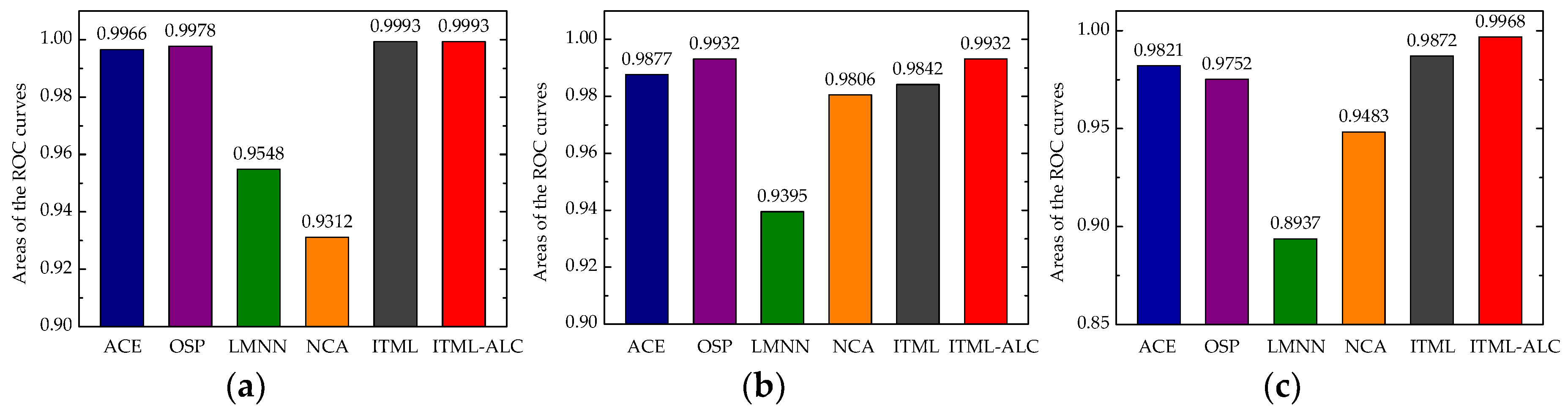

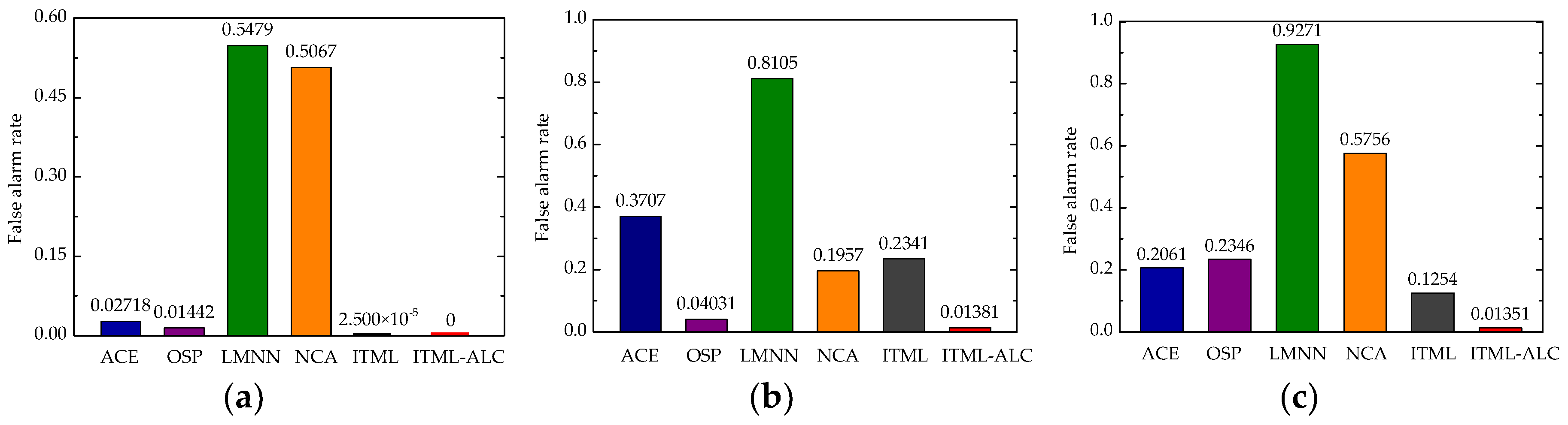

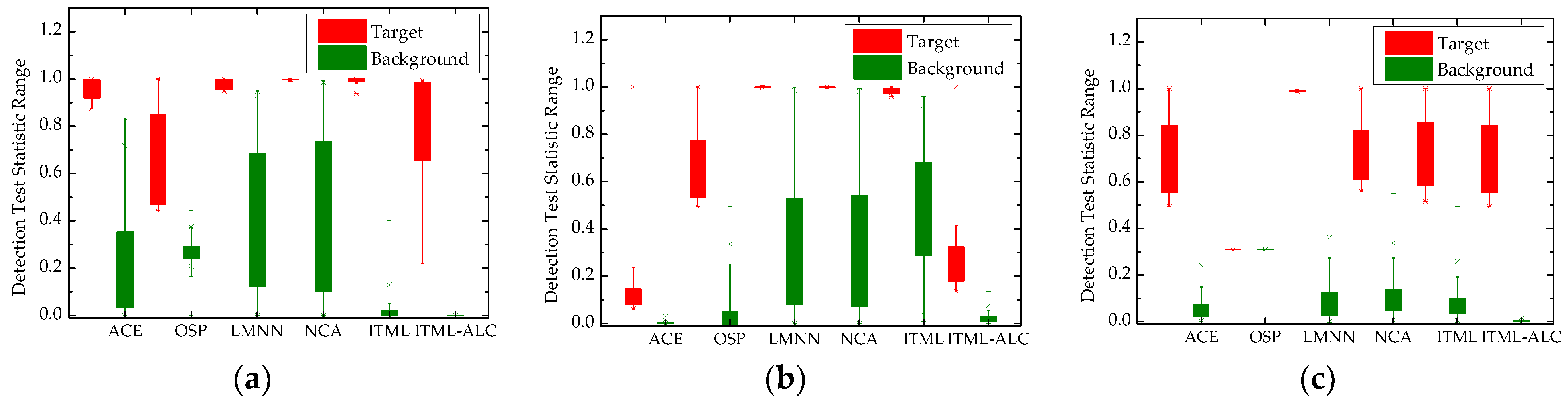

- As metric learning-based methods, LMNN and NCA methods do not perform well (shown in Figure 7), and the cause may be that LMNN and NCA methods both have problems with handling high-dimensional data and are sensitive to the selection of initial points, leading to inability to achieve the optimal value if the parameters are not selected appropriately. To date, only a few researchers use distance metric learning for target detection. For example, reference [15] adopts LMNN and NCA as the comparison algorithms to prove the effectiveness of proposed supervised metric learning (SML) algorithm for the AVIRIS LCVF dataset. Though the implanted target locations of reference [15] are a little different compared to this paper, LMNN and NCA have poor similarity performance in both papers. When the value of FAR is equal to 10 × 10−4, the detection probabilities of LMNN in reference [15] and this paper are both about 80%. Similarly, in the two articles, the detection probability of NCA reaches 100% when the FAR is about 1, respectively. Moreover, although SML algorithm can recognize target pixels easily, the background pixels cannot be suppressed to an even lower value, compared with the ITML-ALC algorithm. This could be because that SML only use a similarity propagation constraint to simultaneously link target pixels and background ones, while ITML-ALC adopts adaptively local constraints to separate similar and dissimilar point-pairs.
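The comparisons above read the detection probability (Pd) off ROC curves at a fixed false alarm rate (FAR). A minimal Python/NumPy sketch of that reading follows. It assumes that higher detector scores indicate targets and that a boolean ground-truth mask is available. The function `pd_at_far` is hypothetical, is not the evaluation code used in the paper, and handles ties and ROC interpolation in the simplest possible way.

```python
import numpy as np


def pd_at_far(scores, truth, far):
    """Detection probability at a given false alarm rate.

    scores : detector output, higher = more target-like (any shape).
    truth  : boolean mask of the same shape, True for target pixels.
    far    : desired false alarm rate in [0, 1].
    """
    s = np.asarray(scores, dtype=float).ravel()
    t = np.asarray(truth, dtype=bool).ravel()
    bg = np.sort(s[~t])[::-1]                 # background scores, descending
    n_fa = int(np.floor(far * bg.size))       # number of false alarms allowed
    # Only background scores strictly above the threshold count as false
    # alarms, so at most n_fa background pixels exceed it.
    thr = bg[n_fa] if n_fa < bg.size else -np.inf
    return float(np.mean(s[t] > thr))


scores = np.array([0.9, 0.8, 0.3, 0.1, 0.2, 0.4, 0.5])
truth = np.array([True, True, True, False, False, False, False])
print(pd_at_far(scores, truth, 0.0))  # 2 of 3 targets detected
print(pd_at_far(scores, truth, 0.5))  # 1.0
```

For example, a FAR of 10 × 10⁻⁴ (that is, 10⁻³) allows roughly one false alarm per 1000 background pixels.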

- (2) For the HYDICE urban dataset, the FAR in this paper drops to a given level when the detection probability of ACE is 80%. In [45], the FAR drops to that level when the detection probability of ACE is 70%. Because the two studies use different prior information, their ACE results differ slightly. Niu et al. [45] propose an adaptive weighted learning method (AWLM) that uses a self-completed background dictionary (SCBD) to extract an accurate target spectrum for hyperspectral target detection. At a FAR of 10 × 10⁻³, their method (named AWLM_SCBD+ACE) reaches a detection probability of nearly 90%, and ITML-ALC in this paper also reaches about 90%. However, ITML-ALC has only the trade-off parameter to tune. AWLM_SCBD+ACE requires two additional parameters for its adaptive weights, which are used to suppress the influence of irrelevant pixels.
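For reference, ACE [9] scores a pixel by the squared cosine between the whitened, mean-removed pixel and target spectra:

$D(\mathbf{x}) = (\mathbf{s}^T\Sigma^{-1}\mathbf{x})^2 / [(\mathbf{s}^T\Sigma^{-1}\mathbf{s})(\mathbf{x}^T\Sigma^{-1}\mathbf{x})]$

The Python/NumPy sketch below estimates the background mean and covariance from all pixels and adds a small diagonal load `eps`. Both choices are assumptions, since the ACE configuration used in this paper and in [45] is not described here. The function name `ace` is hypothetical.

```python
import numpy as np


def ace(X, s, eps=1e-6):
    """Adaptive coherence estimator (ACE) scores in [0, 1].

    X : (n, d) pixel spectra; s : (d,) a priori target spectrum.
    Background mean and covariance are estimated from all pixels
    (an assumption; local or target-free estimates are also common).
    """
    mu = X.mean(axis=0)
    Xc = X - mu                                   # mean-removed pixels
    sc = s - mu                                   # mean-removed target
    cov = np.cov(Xc, rowvar=False) + eps * np.eye(X.shape[1])  # diagonal load
    cov_inv = np.linalg.inv(cov)
    num = (Xc @ cov_inv @ sc) ** 2
    den = (sc @ cov_inv @ sc) * np.einsum("ij,jk,ik->i", Xc, cov_inv, Xc)
    return num / den


rng = np.random.default_rng(1)
X = rng.normal(size=(200, 5))      # placeholder "pixels"
scores = ace(X, X[17])             # use one pixel as the target spectrum
print(round(float(scores[17]), 6))  # 1.0
```

A pixel identical to the target spectrum scores 1, and by the Cauchy-Schwarz inequality no pixel can score higher.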

- (3) In addition, we compare ITML-ALC with MMML and RFML, which were previously proposed in [27] and [28], respectively. Figure 11 shows the corresponding ROC curves for the AVIRIS San Diego airport dataset. ITML-ALC performs similarly to the other methods when the FAR is below 10 × 10⁻³ and outperforms them at higher FARs. This may be because ITML-ALC shrinks the distances between samples in similar pairs, and expands the distances between samples in dissimilar pairs, more effectively than MMML and RFML.

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Manolakis, D.; Shaw, G. Detection Algorithms for Hyperspectral Imaging Applications. IEEE Signal Process. Mag. 2002, 19, 29–43.

- Manolakis, D.; Truslow, E.; Pieper, M.; Cooley, T.; Brueggeman, M. Detection Algorithms in Hyperspectral Imaging Systems: An Overview of Practical Algorithms. IEEE Signal Process. Mag. 2014, 31, 24–33.

- Stefanou, M.S.; Kerekes, J.P. Image-Derived Prediction of Spectral Image Utility for Target Detection Applications. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1827–1833.

- Luo, H.; Liu, C.; Wu, C.; Guo, X. Urban Change Detection Based on Dempster–Shafer Theory for Multitemporal Very High–Resolution Imagery. Remote Sens. 2018, 10, 980.

- Zou, Z.; Shi, Z. Random Access Memories: A New Paradigm for Target Detection in High Resolution Aerial Remote Sensing Images. IEEE Trans. Image Process. 2018, 27, 1100–1111.

- Manolakis, D.; Marden, D.; Shaw, G.A. Hyperspectral Image Processing for Automatic Target Detection Applications. J. Lincoln Lab. 2003, 14, 79–116.

- Lei, J.; Li, Y.; Zhao, D.; Xie, J.; Chang, C.I.; Wu, L.; Li, X.; Zhang, J.; Li, W. A Deep Pipelined Implementation of Hyperspectral Target Detection Algorithm on FPGA using HLS. Remote Sens. 2018, 10, 516.

- Harsanyi, J.C.; Chang, C.I. Hyperspectral Image Classification and Dimensionality Reduction: An Orthogonal Subspace Projection Approach. IEEE Trans. Geosci. Remote Sens. 1994, 32, 779–785.

- Kraut, S.; Scharf, L.L.; McWhorter, L.T. Adaptive Subspace Detectors. IEEE Trans. Signal Process. 2001, 49, 1–16.

- Yang, C.; Tan, Y.; Bruzzone, L.; Lu, L.; Guan, R. Discriminative Feature Metric Learning in the Affinity Propagation Model for Band Selection in Hyperspectral Images. Remote Sens. 2017, 9, 782.

- Zhang, L.; Zhang, Q.; Du, B.; Huang, X.; Tang, Y.Y.; Tao, D. Simultaneous Spectral–Spatial Feature Selection and Extraction for Hyperspectral Images. IEEE Trans. Cybern. 2018, 48, 16–28.

- Kwon, H.; Nasrabadi, N.M. Kernel Matched Subspace Detectors for Hyperspectral Target Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 178–194.

- Kwon, H.; Nasrabadi, N.M. Kernel Spectral Matched Filter for Hyperspectral Imagery. Int. J. Comput. Vis. 2007, 71, 127–141.

- Capobianco, L.; Garzelli, A.; Camps-Valls, G. Target Detection with Semisupervised Kernel Orthogonal Subspace Projection. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3822–3833.

- Zhang, L.; Zhang, L.; Tao, D.; Huang, X.; Du, B. Hyperspectral Remote Sensing Image Subpixel Target Detection Based on Supervised Metric Learning. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4955–4965.

- Gu, Y.; Chanussot, J.; Jia, X.; Benediktsson, J.A. Multiple Kernel Learning for Hyperspectral Image Classification: A Review. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6547–6565.

- Yuan, Y.; Zheng, X.; Lu, X. Spectral–spatial Kernel Regularized for Hyperspectral Image Denoising. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3815–3832.

- Gu, Y.; Liu, T.; Jia, X.; Benediktsson, J.A.; Chanussot, J. Nonlinear Multiple Kernel Learning with Multi–Structure–Element Extended Morphological Profiles for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3235–3247.

- Luo, F.; Du, B.; Zhang, L.; Zhang, L.; Tao, D. Feature Learning Using Spatial–Spectral Hypergraph Discriminant Analysis for Hyperspectral Image. IEEE Trans. Cybern. 2018.

- Dong, Y.; Du, B.; Zhang, L.; Zhang, L. Exploring Locally Adaptive Dimensionality Reduction for Hyperspectral Image Classification: A Maximum Margin Metric Learning Aspect. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1136–1150.

- Zhang, L.; Zhang, L.; Tao, D.; Huang, X. Sparse Transfer Manifold Embedding for Hyperspectral Target Detection. IEEE Trans. Geosci. Remote Sens. 2014, 52, 1030–1043.

- Zhang, Y.; Du, B.; Zhang, L.; Liu, T. Joint Sparse Representation and Multitask Learning for Hyperspectral Target Detection. IEEE Trans. Geosci. Remote Sens. 2017, 55, 894–906.

- Lu, X.; Zhang, W.; Li, X. A Hybrid Sparsity and Distance–based Discrimination Detector for Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1704–1717.

- Dong, Y.; Du, B.; Zhang, L.; Zhang, L. Dimensionality Reduction and Classification of Hyperspectral Images Using Ensemble Discriminative Local Metric Learning. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2509–2524.

- Du, B.; Zhang, L. A Discriminative Metric Learning Based Anomaly Detection Method. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6844–6857.

- Gu, Y.; Feng, K. Optimized Laplacian SVM with Distance Metric Learning for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1109–1117.

- Dong, Y.; Zhang, L.; Zhang, L.; Du, B. Maximum Margin Metric Learning Based Target Detection for Hyperspectral Images. ISPRS J. Photogramm. Remote Sens. 2015, 108, 138–150.

- Dong, Y.; Du, B.; Zhang, L. Target Detection Based on Random Forest Metric Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1830–1838.

- Goldberger, J.; Roweis, S.; Hinton, G.; Salakhutdinov, R. Neighbourhood Components Analysis. In Proceedings of the 17th International Conference on Neural Information Processing Systems (NIPS), Vancouver, BC, Canada, 13–18 December 2004; pp. 513–520.

- Weinberger, K.Q.; Saul, L.K. Distance Metric Learning for Large Margin Nearest Neighbor Classification. J. Mach. Learn. Res. 2009, 10, 207–244.

- Davis, J.V.; Kulis, B.; Jain, P.; Sra, S.; Dhillon, I.S. Information-Theoretic Metric Learning. In Proceedings of the 24th International Conference on Machine Learning (ICML), Corvallis, OR, USA, 20–24 June 2007; pp. 209–216.

- Yang, X.; Wang, M.; Tao, D. Person Re–Identification with Metric Learning using Privileged Information. IEEE Trans. Image Process. 2018, 27, 791–805.

- Wang, Q.; Zuo, W.; Zhang, L.; Li, P. Shrinkage Expansion Adaptive Metric Learning. In Proceedings of the 13th European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 456–471.

- Luo, Y.; Wen, Y.; Liu, T.; Tao, D. Transferring Knowledge Fragments for Learning Distance Metric from a Heterogeneous Domain. IEEE Trans. Pattern Anal. Mach. Intell. 2018.

- Han, J.; Zhang, D.; Cheng, G.; Guo, L.; Ren, J. Object Detection in Optical Remote Sensing Images Based on Weakly Supervised Learning and High-Level Feature Learning. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3325–3337.

- Zheng, X.; Yuan, Y.; Lu, X. Dimensionality Reduction by Spatial–Spectral Preservation in Selected Bands. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5185–5197.

- Li, Z.; Chang, S.; Liang, F.; Huang, T.S.; Cao, L.; Smith, J.R. Learning Locally-Adaptive Decision Functions for Person Verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 3610–3617.

- Su, H.; Zhao, B.; Du, Q.; Du, P.; Xue, Z. Multifeature Dictionary Learning for Collaborative Representation Classification of Hyperspectral Imagery. IEEE Trans. Geosci. Remote Sens. 2018, 99, 1–18.

- Chang, C.I.; Du, Q. Estimation of Number of Spectrally Distinct Signal Sources in Hyperspectral Imagery. IEEE Trans. Geosci. Remote Sens. 2004, 42, 608–619.

- Du, B.; Zhang, L. Target Detection Based on a Dynamic Subspace. Pattern Recogn. 2014, 47, 344–358.

- Messinger, D.; Ziemann, A.; Schlamm, A.; Basener, B. Spectral Image Complexity Estimated through Local Convex Hull Volume. Available online: https://ieeexplore.ieee.org/abstract/document/5594869/ (accessed on 3 September 2018).

- Ma, L.; Crawford, M.; Tian, J. Anomaly Detection for Hyperspectral Images Based on Robust Locally Linear Embedding. J. Infrared Millim. Terahertz Waves 2010, 31, 753–762.

- Lobo, J.M.; Jiménez-Valverde, A.; Real, R. AUC: A Misleading Measure of the Performance of Predictive Distribution Models. Global Ecol. Biogeogr. 2008, 17, 145–151.

- Bioucas-Dias, J.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral Remote Sensing Data Analysis and Future Challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

- Niu, Y.; Wang, B. Extracting Target Spectrum for Hyperspectral Target Detection: An Adaptive Weighted Learning Method Using a Self-Completed Background Dictionary. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1604–1617.

Table 1. Coordinates and implanted fractions of the target pixels in the AVIRIS LCVF dataset.

| Sample Index | Line Index | Fraction |
|---|---|---|
| 50 | (75, 76) (100, 101) (125, 126) | 10% |
| 75 | (75, 76) (100, 101) (125, 126) | 20% |
| 100 | (75, 76) (100, 101) (125, 126) | 30% |
| 125 | (75, 76) (100, 101) (125, 126) | 40% |
| 150 | (75, 76) (100, 101) (125, 126) | 50% |

| Experimental Dataset | LCVF Dataset | San Diego Airport Dataset | Urban Dataset |
|---|---|---|---|
| Sensor | AVIRIS | AVIRIS | HYDICE |
| Spectral bands | 224 | 224 | 210 |
| Spatial resolution | 20 m | 3.5 m | 3 m |
| Wavelength range | 370–2510 nm | 370–2510 nm | 400–2500 nm |
| Image size | | | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dong, Y.; Du, B.; Zhang, L.; Hu, X. Hyperspectral Target Detection via Adaptive Information-Theoretic Metric Learning with Local Constraints. Remote Sens. 2018, 10, 1415. https://doi.org/10.3390/rs10091415
