Figure 1.

Study site: Buffalo, NY, USA.

Figure 2.

Changes and observation differences between the multi-temporal high-resolution image and light detection and ranging (LiDAR) data are highlighted by red circles or polygons. All LiDAR data are displayed as pseudo-color raster images (red and blue bands: normalized Digital Surface Model (nDSM); green band: intensity). Panels: (a) high-resolution image after changes; (b) LiDAR data before changes; (c) tall building in the high-resolution image with mis-registration; (d) tall building in the LiDAR data with mis-registration; (e) high-resolution image without missing data; (f) LiDAR data with missing data; (g) high-resolution image with shadows; and (h) LiDAR data without shadows.

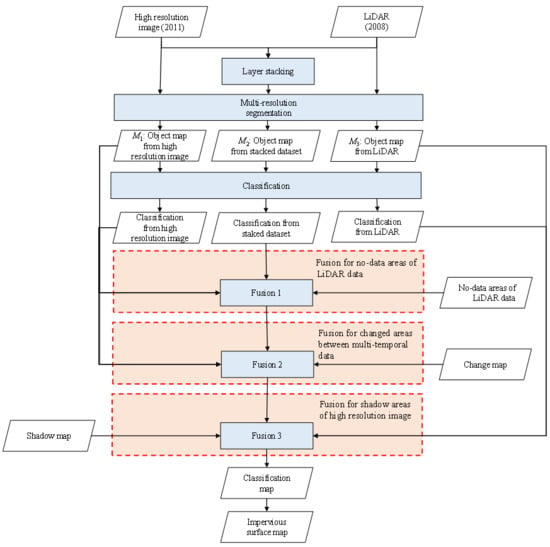

Figure 3.

Flowchart of the proposed method. The multi-sensor data acquired at different times were processed by change detection to obtain the change map. The shadow map and the no-data areas of the LiDAR data were obtained separately. Finally, the multi-source data were interpreted by the proposed object-based post-classification fusion with the shadow map, change map, and no-data areas, generating an accurate impervious surface map as the result.

Figure 4.

Flowchart of change detection with multi-temporal LiDAR data and the high-resolution image. The multi-sensor data were first stacked and segmented by the multi-resolution segmentation (MRS) algorithm. The object-based data were then processed by the multivariate alteration detection (MAD) algorithm and OTSU thresholding to obtain the binary change map.
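
The final thresholding step in this flowchart can be illustrated with a minimal sketch. It assumes the change intensity has already been computed per object (the MRS segmentation and MAD transform are not shown), and it uses a plain histogram-based OTSU search in pure Python. The function names `otsu_threshold` and `binary_change_map`, the bin count, and the sample intensities are hypothetical and not taken from the paper.

```python
def otsu_threshold(values, bins=256):
    """Return the threshold that maximizes the between-class variance."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo  # constant input: no meaningful split exists
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        # Clip the maximum value into the last bin.
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    sum_all = sum(c * h for c, h in zip(centers, hist))

    best_score, best_t = -1.0, lo
    w0, sum0 = 0, 0.0
    for i in range(bins - 1):
        w0 += hist[i]                  # number of values at or below bin i
        sum0 += centers[i] * hist[i]
        w1 = total - w0                # number of values above bin i
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        score = w0 * w1 * (mu0 - mu1) ** 2  # proportional to between-class variance
        if score > best_score:
            best_score, best_t = score, lo + (i + 1) * width  # upper edge of bin i
    return best_t


def binary_change_map(intensity):
    """Label each object 1 (changed) or 0 (unchanged) by OTSU thresholding."""
    t = otsu_threshold(intensity)
    return [1 if v > t else 0 for v in intensity]


# Hypothetical per-object change intensities (illustrative values only).
intensity = [0.1, 0.2, 0.15, 0.12, 5.0, 5.2, 4.9, 0.18]
change_map = binary_change_map(intensity)
```

In this toy input the three objects with high change intensity end up on the changed side of the threshold and the remaining five on the unchanged side.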

Figure 5.

Diagram of the object-based post-classification fusion. If the object lies entirely inside the changed area, its class type is determined by the independent classification result. Otherwise, if the object intersects the changed area, its class type is determined mainly by the joint classification result in the unchanged part of the object.
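
The decision rule in this diagram can be sketched as follows. This is a simplified illustration rather than the authors' implementation: it assumes each object is given as per-pixel label lists plus a per-pixel changed flag, and it resolves each case by majority vote. The names `majority` and `fuse_object` are hypothetical, and the fallback for objects that do not touch the changed area (use the joint classification) is an assumption, since the caption does not cover that case.

```python
from collections import Counter


def majority(labels):
    """Most frequent label in a non-empty list."""
    return Counter(labels).most_common(1)[0][0]


def fuse_object(independent, joint, changed):
    """Pick one class label for an object.

    independent: per-pixel labels from the independent classification
    joint:       per-pixel labels from the joint classification
    changed:     per-pixel booleans from the binary change map
    """
    if all(changed):
        # Object entirely inside the changed area: trust the independent result.
        return majority(independent)
    if any(changed):
        # Object intersects the changed area: vote only over joint labels
        # that fall in the unchanged part of the object.
        return majority([j for j, c in zip(joint, changed) if not c])
    # Assumption: an object outside the changed area keeps the joint result.
    return majority(joint)


# Toy objects with hypothetical labels.
fully_changed = fuse_object(["building"] * 3, ["soil"] * 3, [True, True, True])
partly_changed = fuse_object(["soil"] * 3, ["soil", "road", "road"], [True, False, False])
unchanged = fuse_object(["soil", "soil"], ["road", "road"], [False, False])
```

Majority voting is only one way to read "determined mainly"; an area-weighted or confidence-weighted vote would fit the same diagram.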

Figure 6.

Flowchart of object-based post-classification fusion of multi-temporal LiDAR data and the high-resolution image. The joint classification and independent classification results are obtained first. Then, three steps of object-based post-classification fusion are performed to obtain the accurate classification map. The impervious surface map is obtained by merging the classes.

Figure 7.

The spatial correspondence for the no-data area between multi-temporal LiDAR data and the high-resolution image: (a) high-resolution image; (b) LiDAR data; and (c) mask for the no-data area. The red rectangle indicates the schematic object from the high-resolution image.

Figure 8.

The spatial correspondence for the changed areas between multi-temporal LiDAR data and the high-resolution image: (a) high-resolution image; (b) LiDAR data; and (c) mask for the changed area. The red rectangle indicates the schematic object from the high-resolution image.

Figure 9.

The spatial correspondence for the mis-registration areas between multi-temporal LiDAR data and the high-resolution image: (a) high-resolution image; (b) LiDAR data; and (c) mask for no-data area. The red rectangle indicates the schematic object from the high-resolution image.

Figure 10.

The spatial correspondence for the shadow areas between multi-temporal LiDAR data and the high-resolution image: (a) high-resolution image; (b) LiDAR data; and (c) mask for the shadow areas. The red rectangle indicates the schematic object from the LiDAR data.

Figure 11.

Change intensity images obtained by (a) supervised MAD, (b) unsupervised MAD, and (c) stack PCA. Higher values in the image indicate a higher probability of change. The four red rectangles and yellow labels indicate the zoomed areas shown in Figure 12.

Figure 12.

The change intensity image of supervised MAD (greyscale image) and the corresponding areas in the high-resolution image (true color image) and LiDAR data (pseudo-color image). The cases shown are (a) real landscape change, (b) mis-registration, (c) shadows, and (d) another real landscape change. Higher values of the change intensity indicate a higher probability of change.

Figure 13.

Binary change maps obtained by OTSU thresholding of (a) supervised MAD, (b) unsupervised MAD, and (c) stack PCA, and (d) the binary change map obtained by post-classification. Changed areas are white and unchanged areas are black.

Figure 14.

Reference samples for change detection. Changed/unchanged samples are labelled in yellow/green, respectively.

Figure 15.

Reference map for the high-resolution image taken in 2011. The legend shows the color assigned to each class.

Figure 16.

(a) Independent classification map of the high-resolution image, (b) independent classification map of the LiDAR data, (c) joint classification map using the LiDAR data and high-resolution image, and (d) classification map following object-based post-classification fusion (the proposed method).

Figure 17.

Classification accuracies with different fusion approaches. Different colors indicate the accuracy gained after each step of object-based post-classification fusion.

Figure 18.

Fusion for no-data areas of LiDAR data: (a) high-resolution image, (b) LiDAR data, (c) joint classification map using LiDAR data and high-resolution image before object-based post-classification fusion, and (d) classification map after object-based post-classification fusion.

Figure 19.

Fusion for changed areas between multi-temporal data: (a) high-resolution image, (b) LiDAR data, (c) joint classification map using LiDAR data and high-resolution image before object-based post-classification fusion, and (d) classification map after object-based post-classification fusion.

Figure 20.

Fusion for shadow areas of high-resolution image: (a) high-resolution image, (b) LiDAR data, (c) joint classification map using LiDAR data and high-resolution image before object-based post-classification fusion, and (d) classification map after object-based post-classification fusion.

Figure 21.

Impervious surface distribution map in 2011.

Table 1.

Max Kappa and current Kappa for supervised MAD and comparative methods.

| Method | Current Kappa (K-Means) | Current Kappa (OTSU) | Max Kappa |
|---|---|---|---|
| sp_MAD | 0.5187 | 0.6419 | 0.7440 |
| un_MAD | 0.1901 | 0.2328 | 0.7550 |
| stack_PCA | 0.2003 | 0.2524 | 0.6881 |
| post-class | | | 0.1409 |

Table 2.

Quantitative assessment of the classification maps for the proposed method and the comparison methods. The reference is shown in Figure 15. The values in the table are the overall accuracy (OA) and Kappa coefficient.

| | High-Resolution Image | LiDAR Data | Joint Classification | Proposed Method |
|---|---|---|---|---|
| OA (%) | 77.30 | 77.80 | 87.49 | 91.89 |
| Kappa | 0.6999 | 0.7128 | 0.8372 | 0.8942 |

Table 3.

Confusion matrix of the high-resolution image classification map; the reference is shown in Figure 15. The values in the table are numbers of pixels.

| | Building | Vegetation | R&P | Soil | Pavement | Shadow | Total |
|---|---|---|---|---|---|---|---|
| Building | 39,401 | 18 | 10,862 | 6653 | 1758 | 3815 | 62,507 |
| Vegetation | 122 | 46,289 | 0 | 321 | 82 | 109 | 46,923 |
| R&P | 13,432 | 17 | 37,133 | 3977 | 3277 | 501 | 58,337 |
| Soil | 6220 | 29 | 529 | 15,300 | 513 | 292 | 22,883 |
| Pavement | 3340 | 54 | 586 | 874 | 11,957 | 629 | 17,440 |
| Total | 62,515 | 46,407 | 49,110 | 27,125 | 17,587 | 5346 | |

Table 4.

Confusion matrix of the LiDAR data classification map; the reference is shown in Figure 15. The values in the table are numbers of pixels.

| | Building | Vegetation | R&P | Soil | Pavement | Total |
|---|---|---|---|---|---|---|
| Building | 47,471 | 1106 | 11,364 | 619 | 1947 | 62,507 |
| Vegetation | 381 | 39,748 | 6 | 2296 | 4492 | 46,923 |
| R&P | 527 | 2728 | 48,608 | 2835 | 3639 | 58,337 |
| Soil | 376 | 493 | 2876 | 16,747 | 2391 | 22,883 |
| Pavement | 470 | 303 | 1487 | 4594 | 10,586 | 17,440 |
| Total | 49,225 | 44,378 | 64,341 | 27,091 | 23,055 | |

Table 5.

Confusion matrix of the joint classification map; the reference is shown in Figure 15. The values in the table are numbers of pixels.

| | Building | Vegetation | R&P | Soil | Pavement | Shadow | Total |
|---|---|---|---|---|---|---|---|
| Building | 46,926 | 21 | 9792 | 902 | 887 | 3979 | 62,507 |
| Vegetation | 302 | 45,605 | 16 | 94 | 439 | 467 | 46,923 |
| R&P | 601 | 2 | 48,523 | 6073 | 1756 | 1382 | 58,337 |
| Soil | 419 | 24 | 618 | 20,751 | 364 | 707 | 22,883 |
| Pavement | 504 | 131 | 1120 | 1045 | 13,815 | 825 | 17,440 |
| Total | 48,752 | 45,783 | 60,069 | 28,865 | 17,261 | 7360 | |

Table 6.

Confusion matrix for the proposed method; the reference is shown in Figure 15. The values in the table are numbers of pixels.

| | Building | Vegetation | R&P | Soil | Pavement | Total |
|---|---|---|---|---|---|---|
| Building | 58,002 | 246 | 2426 | 899 | 934 | 62,507 |
| Vegetation | 34 | 46,051 | 36 | 121 | 681 | 46,923 |
| R&P | 2997 | 6 | 50,182 | 3259 | 1893 | 58,337 |
| Soil | 438 | 24 | 678 | 21,267 | 476 | 22,883 |
| Pavement | 406 | 201 | 1011 | 1133 | 14,689 | 17,440 |
| Total | 61,877 | 46,528 | 54,333 | 26,679 | 18,673 | |

Table 7.

False alarm rate (FAR) and omission rate (OR) of each class for the proposed method and the comparison methods. Bold numbers indicate the lowest rates of false alarms and omissions for each class.

| Class | High-Resolution Image FAR (%) | High-Resolution Image OR (%) | LiDAR Data FAR (%) | LiDAR Data OR (%) | Joint Classification FAR (%) | Joint Classification OR (%) | Proposed Method FAR (%) | Proposed Method OR (%) |
|---|---|---|---|---|---|---|---|---|
| Building | 31.13 | 26.68 | **3.36** | 24.23 | 3.75 | 19.82 | 5.00 | **5.48** |
| Vegetation | 0.50 | **0.63** | 10.97 | 23.63 | **0.39** | 1.83 | 1.10 | 1.96 |
| Soil | 39.39 | 44.22 | 30.53 | 23.24 | 28.11 | **6.43** | **5.01** | 13.87 |
| Pavement | **17.34** | 35.17 | 56.40 | 29.83 | 19.96 | 16.85 | 23.39 | **7.11** |
| Road & Parking | 26.48 | 24.39 | 25.32 | 16.17 | **19.22** | **14.80** | 21.91 | 16.09 |

Table 8.

Confusion matrix and accuracy of the impervious surface distribution map.

| | Impervious | Pervious | Total (Reference) |
|---|---|---|---|
| Impervious | 131,480 | 2349 | 133,829 |
| Pervious | 6804 | 67,457 | 74,261 |
| Total (Test) | 138,284 | 69,806 | 208,090 |
| Overall Accuracy | 95.6014% | | |
| Kappa | 0.9029 | | |
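
The overall accuracy and Kappa in Table 8 follow from the four cell counts using the standard definitions (observed agreement, and Cohen's Kappa from the row and column totals). The sketch below recomputes them in pure Python; the function name `overall_accuracy_and_kappa` is hypothetical, and only the four counts are taken from the table.

```python
def overall_accuracy_and_kappa(matrix):
    """Overall accuracy and Cohen's Kappa for a square confusion matrix."""
    total = sum(sum(row) for row in matrix)
    diagonal = sum(matrix[i][i] for i in range(len(matrix)))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    p_observed = diagonal / total
    # Agreement expected by chance, from the marginal totals.
    p_expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (p_observed - p_expected) / (1 - p_expected)
    return p_observed, kappa


# Cell counts from Table 8 (impervious / pervious).
table8 = [
    [131480, 2349],
    [6804, 67457],
]
oa, kappa = overall_accuracy_and_kappa(table8)
print(f"OA = {oa:.4%}, Kappa = {kappa:.4f}")
```

With these counts the function gives an overall accuracy of about 95.60% and a Kappa of about 0.903, consistent with the last two rows of the table.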