2.1. Study Areas

We chose two study areas to evaluate the ability of the ORS program’s methods to map and monitor intra-seasonal and multi-year forest decline across different ecological settings (Table 1 and Figure 1).

The Rio Grande National Forest study area captures a slow-onset forest disturbance caused by mountain pine beetle (Dendroctonus ponderosae) and spruce bark beetle (Dendroctonus rufipennis). The Forest has been experiencing long-term gradual decline and mortality, with a total of 617,000 acres infested by spruce beetles since 1996 [16]. Including this study area gives us an opportunity to test whether the change algorithms can discern new areas of forest disturbance from areas affected in previous years.

The Southern New England study area (Rhode Island, Massachusetts, and Connecticut) experienced extensive gypsy moth (Lymantria dispar dispar) defoliation in 2016 and 2017. This region typifies many of the challenges associated with detecting ephemeral forest disturbances using remote sensing data, including temporal variation in phenological conditions and persistent cloud cover.

Within each study area, we analyzed only pixels with an NLCD 2011 Tree Canopy Cover [17] value greater than 30 percent.

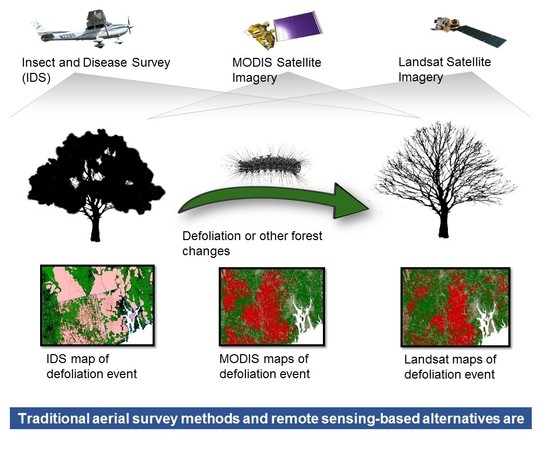

2.4. ORS Data

ORS methods were built using satellite image data that had been collected for at least 5 years and would likely remain regularly available into the future. This includes imagery from the Landsat 5 Thematic Mapper (TM, 1984–2011), Landsat 7 Enhanced Thematic Mapper Plus (ETM+, 1999–present), and Landsat 8 Operational Land Imager (OLI, 2013–present). Each Landsat satellite has a revisit interval of 16 days. Because Landsat 5 and 7 overlapped during 1999–2011, and Landsat 7 and 8 have overlapped from 2013 to present, these periods have an effective revisit interval of about 8 days. All Landsat-based analyses were conducted at 30 m spatial resolution.

ORS methods were also applied to MODerate-resolution Imaging Spectroradiometer (MODIS) imagery collected from the Terra (1999–present) and Aqua (2002–present) satellites, which is available multiple times a day for most locations on Earth. MODIS has spectral bands comparable to Landsat's but a much coarser spatial resolution. All MODIS-based analyses were conducted at 250 m spatial resolution.

This project used Landsat Ecosystem Disturbance Adaptive Processing System (LEDAPS) surface reflectance values for Landsat 5 and 7 [19], Landsat 8 Surface Reflectance Code (LaSRC) values for Landsat 8 [20], and MODIS Terra and Aqua 8-day surface reflectance composites. All data were accessed through Google Earth Engine, which acquires MODIS data from the NASA Land Processes Distributed Active Archive Center (LP DAAC), USGS/Earth Resources Observation and Science (EROS) Center, Sioux Falls, South Dakota, and Landsat data from the EROS Center, Sioux Falls, South Dakota. The LP DAAC MODIS collections used are listed in Table 2.

2.5. Data Preparation

All data preparation and analysis were performed with Google Earth Engine (GEE). GEE provides access to most freely available Earth observation data and provides an application programming interface (API) to analyze and visualize these data [21].

All Landsat images were first masked for clouds and cloud shadows on a per-pixel basis, using the Google cloudScore algorithm for clouds and the Temporal Dark Outlier Mask (TDOM) for cloud shadows. Because neither method is currently documented in the peer-reviewed literature, both are briefly described below; they are fully described and evaluated in a forthcoming paper [22]. MODIS 8-day composites are intended to be cloud-free, so no cloud or cloud shadow masking was applied to them.

The Google cloudScore algorithm exploits the spectral and thermal properties of clouds to identify and remove them from image data: it finds pixels that are bright and cold but do not share the spectral properties of snow. To simplify the process, cloudScore uses a min/max normalization function to rescale the expected range of each reflectance or temperature value to between 0 and 1 (Equation (1)). The algorithm applies this normalization to five cloud spectral properties, and the final cloudScore is the minimum of the normalized values (Equation (2)). Specifically, it defines the cloud score as:

$$ N(x) = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{1} $$

$$ \mathrm{cloudScore} = \min\big(N(x_1), N(x_2), N(x_3), N(x_4), N(x_5)\big) \tag{2} $$

where $x_1, \dots, x_5$ are the five cloud spectral properties and $x_{\min}$ and $x_{\max}$ bound the expected range of each property.

Any pixel with a cloudScore value greater than 0.2 was identified as cloud. This threshold was chosen through extensive qualitative evaluation across the CONUS as the best balance between detecting clouds and avoiding commission errors on cold, bright surfaces. Pixels inside the resulting mask were removed from all subsequent steps of the analysis.
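To make Equations (1) and (2) concrete, the Python sketch below scores a single pixel. It is a minimal illustration, not the GEE cloudScore code: the function names are hypothetical, clamping the normalized values to [0, 1] is our assumption, and the two (value, lo, hi) properties in the example are made up rather than the five properties and ranges cloudScore actually uses. Only the 0.2 threshold comes from the text.

```python
from __future__ import annotations

CLOUD_THRESHOLD = 0.2  # cloudScore threshold reported in the text


def rescale(value: float, lo: float, hi: float) -> float:
    """Min/max normalize `value` so `lo` maps to 0 and `hi` maps to 1.

    Clamping to [0, 1] is an assumption of this sketch. Passing lo > hi
    inverts the scale, e.g. so that colder temperatures score higher.
    """
    scaled = (value - lo) / (hi - lo)
    return max(0.0, min(1.0, scaled))


def cloud_score(properties: list[tuple[float, float, float]]) -> float:
    """Equation (2): minimum normalized score over (value, lo, hi) triples."""
    return min(rescale(value, lo, hi) for value, lo, hi in properties)


def is_cloud(properties: list[tuple[float, float, float]]) -> bool:
    """Flag the pixel as cloud when its score exceeds the threshold."""
    return cloud_score(properties) > CLOUD_THRESHOLD


# Illustrative only: two made-up properties (a reflectance and a brightness
# temperature in kelvin), not the five used by cloudScore.
bright_and_cold = [(0.25, 0.1, 0.3), (285.0, 300.0, 290.0)]
dim_and_warm = [(0.11, 0.1, 0.3), (305.0, 300.0, 290.0)]
print(cloud_score(bright_and_cold), is_cloud(bright_and_cold))
print(cloud_score(dim_and_warm), is_cloud(dim_and_warm))
```

Taking the minimum means a pixel is scored as cloud only when every property looks cloud-like, which is how a bright but warm surface, or a cold snow surface, can avoid being masked.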

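After clouds were masked, cloud shadows were identified and masked. Because GEE provides access to the entire Landsat archive, methods that query long time series of data are practical, and within such a series cloud shadows generally appear as anomalously dark observations. The Temporal Dark Outlier Mask (TDOM) method identifies pixels that are dark in both relative and absolute terms. It is effective because a given pixel is only occasionally obscured by cloud shadow, so shadowed observations stand out from that pixel's typical values. Specifically:

$$ z(M_b) = \frac{M_b - \mu_b}{\sigma_b} \tag{5} $$

$$ D(M) = M_{\mathrm{NIR}} + M_{\mathrm{SWIR1}} $$

$$ \mathrm{shadow}(M) = \big[z(M_{\mathrm{NIR}}) < T_z\big] \wedge \big[z(M_{\mathrm{SWIR1}}) < T_z\big] \wedge \big[D(M) < T_D\big] \tag{8} $$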

where $\mu_b$ and $\sigma_b$ are the mean and standard deviation, respectively, of a given band $b$ across the time series, $M$ is the multispectral Landsat image, $T_z$ is the threshold for the shadow z-score (−1 for this study), and $T_D$ is the threshold for the darkness metric $D(M)$ (0.35 for this study).

The TDOM method first computes the mean ($\mu_b$) and standard deviation ($\sigma_b$) of the near-infrared (NIR) and shortwave-infrared (SWIR1) bands across a collection of images. For each image, the algorithm then computes the z-score of the NIR and SWIR1 bands ($z(M_b)$) (Equation (5)) (Figure 2). Each image also has a darkness metric computed as the sum of the NIR and SWIR1 bands ($D(M)$). Cloud shadows are then identified where a pixel has a z-score less than −1 for both the NIR and SWIR1 bands and a darkness value less than 0.35 (Equation (8)). These thresholds were chosen after extensive qualitative evaluation of TDOM outputs from across the CONUS.
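The TDOM test can be sketched per pixel in Python as follows, assuming the NIR and SWIR1 surface reflectance time series for one pixel are already available as lists (in GEE these statistics would instead be computed over an image collection). The use of the population standard deviation, the function names, and the reflectance values in the example are assumptions for illustration; the −1 and 0.35 thresholds are those reported above.

```python
from statistics import mean, pstdev

Z_SHADOW = -1.0  # T_z: shadow z-score threshold used in the study
DARKNESS = 0.35  # T_D: darkness threshold used in the study


def zscores(series: list) -> list:
    """z-score of each observation relative to the whole series (Equation (5))."""
    mu, sd = mean(series), pstdev(series)
    if sd == 0:
        return [0.0] * len(series)  # a constant series has no outliers
    return [(v - mu) / sd for v in series]


def tdom_shadow_flags(nir_series: list, swir1_series: list) -> list:
    """Flag each observation of one pixel as cloud shadow (True) or not (Equation (8))."""
    z_nir = zscores(nir_series)
    z_swir1 = zscores(swir1_series)
    return [
        zn < Z_SHADOW and zs < Z_SHADOW and (nir + swir1) < DARKNESS
        for zn, zs, nir, swir1 in zip(z_nir, z_swir1, nir_series, swir1_series)
    ]


# Made-up series: the last observation is unusually dark in both bands.
nir = [0.30, 0.31, 0.29, 0.30, 0.12]
swir1 = [0.20, 0.21, 0.19, 0.20, 0.08]
flags = tdom_shadow_flags(nir, swir1)
print(flags)  # [False, False, False, False, True]
```

Because the mean and standard deviation include the shadowed observation itself, a single dark value in a series of n observations can lie at most √(n−1) standard deviations from the mean; long series such as the Landsat archive therefore make the outlier test more sensitive.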

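This study used the normalized difference vegetation index (NDVI) (Equation (6)) [23] and the normalized burn ratio (NBR) (Equation (7)) [24] as indices of forest greenness to detect forest changes:

$$ \mathrm{NDVI} = \frac{\mathrm{NIR} - \mathrm{red}}{\mathrm{NIR} + \mathrm{red}} \tag{6} $$

$$ \mathrm{NBR} = \frac{\mathrm{NIR} - \mathrm{SWIR2}}{\mathrm{NIR} + \mathrm{SWIR2}} \tag{7} $$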

where NIR is the near-infrared band of a multispectral image, red is the red band, and SWIR2 is the second shortwave-infrared band. Many other common indices are available when running ORS algorithms but were omitted from this study. These two indices were used because the bands needed to compute them are available for both MODIS and Landsat, they are very similar to the indices used in the RTFD program, and they have proven effective throughout the remote sensing change detection literature [6,7,11,23,24].
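Equations (6) and (7) are both normalized differences, so one helper covers them. The Python sketch below works on scalar reflectances for clarity; in GEE the same per-pixel computation is typically done with `ee.Image.normalizedDifference`. The helper names and the reflectance values in the example are illustrative assumptions, not data from this study.

```python
def normalized_difference(a: float, b: float) -> float:
    """(a - b) / (a + b); raises ZeroDivisionError when a + b == 0."""
    return (a - b) / (a + b)


def ndvi(nir: float, red: float) -> float:
    """Equation (6)."""
    return normalized_difference(nir, red)


def nbr(nir: float, swir2: float) -> float:
    """Equation (7)."""
    return normalized_difference(nir, swir2)


# Made-up reflectances: defoliation lowers NIR and raises red and SWIR2.
healthy = (ndvi(0.40, 0.05), nbr(0.40, 0.15))
defoliated = (ndvi(0.25, 0.08), nbr(0.25, 0.20))
print(healthy, defoliated)
```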

2.6. Change Detection Algorithms

Three algorithms were developed and tested in GEE using MODIS and Landsat data. Employing GEE for this study enabled us to efficiently test various change detection approaches using Landsat and MODIS image data archives, without downloading and storing the data locally.

ORS mapping needs fell into two categories: ephemeral change related to defoliation events and long-term change related to tree mortality from insects and disease. The three algorithms used in this study were selected to address the needs posed by these forest change types. We refer to these algorithms as basic z-score (Figure 3), harmonic z-score (Figure 4), and linear trend (Figure 5).

The basic z-score method builds on ideas from RTFD, serving as a natural starting point for ORS mapping methods. The harmonic z-score method combines ideas from harmonic regression-based methods, such as EWMACD [12] and BFAST Monitor [11], with those from the basic z-score method to leverage data from throughout the growing season. The linear trend method builds on ideas from Image Trends from Regression Analysis (ITRA) [25] and the RTFD trend method [3]. It uses linear regression to fit a line across a series of years.

Both the basic z-score and harmonic z-score methods work by identifying pixels that differ from a baseline period; the primary difference is which data are used to compute the z-score. Both methods start by acquiring imagery for a baseline period (generally 3–5 years) and for the analysis years. The basic z-score method uses the mean and standard deviation of the baseline period within a targeted date range for a specified band or index, while the harmonic z-score method follows the EWMACD and BFAST Monitor methods by first fitting a harmonic regression model to all available cloud- and cloud shadow-free Landsat or MODIS observations from the baseline period.

The harmonic regression model is intended to mitigate the effect of seasonality on the spectral response, so that the remaining variation reflects change unrelated to phenology. The harmonic model is defined as:

$$ b = \beta_0 + \beta_1 \cos(2\pi t) + \beta_2 \sin(2\pi t) $$

where $b$ is the band or index of the image, $\beta_i$ are the coefficients being fit by the model, and $t$ is the date expressed in decimal years (the year plus the elapsed proportion of that year).

The harmonic regression model is fitted on a pixel-wise basis to the baseline data and then applied to both the baseline and the analysis data. Next, the residual error is computed for both the baseline and analysis data. Up to this point, the harmonic z-score, EWMACD, and BFAST Monitor methods are largely the same; they differ primarily in how change is classified. EWMACD applies exponentially weighted moving average (EWMA) charting to the residuals and BFAST Monitor uses a moving sum (MOSUM) of the residuals [11,12], whereas the harmonic z-score method uses the mean and standard deviation of the baseline residuals within the targeted date period to compute the z-score of the residuals within the targeted analysis period. The date range and specific years used to define the baseline can be tailored to optimize the discernment of specific disturbances within the analysis years.
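The harmonic z-score steps (fit on the baseline, compute residuals for baseline and analysis data, then z-score the in-window analysis residuals against the in-window baseline residuals) can be sketched for one pixel in Python. This is a minimal illustration under stated assumptions: it uses a single annual harmonic (an intercept plus one cosine and one sine term), solves ordinary least squares through the normal equations, expresses the target date range as fractions of a year, and runs on synthetic data with a deterministic stand-in for noise. All function names are hypothetical.

```python
import math
from statistics import mean, stdev


def design_row(t: float) -> list:
    """Regressors for b = beta0 + beta1*cos(2*pi*t) + beta2*sin(2*pi*t), t in decimal years."""
    return [1.0, math.cos(2 * math.pi * t), math.sin(2 * math.pi * t)]


def solve3(a: list, y: list) -> list:
    """Solve the 3x3 system a @ x = y by Gaussian elimination with partial pivoting."""
    m = [row[:] + [v] for row, v in zip(a, y)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def fit_harmonic(times: list, values: list) -> list:
    """Ordinary least squares coefficients [beta0, beta1, beta2] via the normal equations."""
    rows = [design_row(t) for t in times]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * v for r, v in zip(rows, values)) for i in range(3)]
    return solve3(xtx, xty)


def predict(beta: list, t: float) -> float:
    return sum(b * x for b, x in zip(beta, design_row(t)))


def in_window(t: float, window: tuple) -> bool:
    """True if the fractional-year part of t lies within window = (start, end)."""
    start, end = window
    return start <= t - math.floor(t) <= end


def harmonic_z_scores(base_t, base_v, ana_t, ana_v, window):
    """z-scores of in-window analysis residuals against in-window baseline residuals."""
    beta = fit_harmonic(base_t, base_v)  # fit on all baseline observations
    base_res = [v - predict(beta, t) for t, v in zip(base_t, base_v) if in_window(t, window)]
    ana_res = [v - predict(beta, t) for t, v in zip(ana_t, ana_v) if in_window(t, window)]
    mu, sd = mean(base_res), stdev(base_res)
    return [(r - mu) / sd for r in ana_res]


# Synthetic example: five baseline years with a seasonal cycle plus +/-0.01 "noise".
def seasonal(t: float) -> float:
    return 0.5 + 0.2 * math.cos(2 * math.pi * t)


base_t, base_v = [], []
for year in range(2011, 2016):
    for k in range(23):  # roughly 16-day spacing
        t = year + k / 23
        base_t.append(t)
        base_v.append(seasonal(t) + (0.01 if (year + k) % 2 == 0 else -0.01))

window = (0.40, 0.55)  # hypothetical target date range, as fractions of a year
ana_t = [2016 + k / 23 for k in range(23)]
stable_z = mean(harmonic_z_scores(base_t, base_v, ana_t, [seasonal(t) for t in ana_t], window))
disturbed_v = [seasonal(t) - (0.1 if in_window(t, window) else 0.0) for t in ana_t]
disturbed_z = mean(harmonic_z_scores(base_t, base_v, ana_t, disturbed_v, window))
print(round(stable_z, 2), round(disturbed_z, 2), disturbed_z < -0.8)
```

Fitting on all baseline observations but scoring only the in-window residuals mirrors the split described above: the whole season constrains the phenology model, while the baseline statistics stay specific to the targeted date period.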

Because an analysis period may contain more than one observation, both the basic and harmonic z-score methods require a rule for summarizing the z-scores into a single value. For this study, the mean was used. Change is then identified by thresholding the summary z-score: summary values less than −0.8 were identified as change. This threshold was chosen based on analyst expertise obtained from iterative qualitative comparison of z-score results with post-disturbance image data.
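A per-pixel Python sketch of the basic z-score method, including the mean summary and the −0.8 threshold described above, follows. The inputs are assumed to be already cloud- and shadow-masked index values (for example, NDVI) from the targeted date range; the function name, the use of the sample standard deviation, and the example values are assumptions for illustration.

```python
from statistics import mean, stdev

Z_THRESHOLD = -0.8  # change threshold reported in the text


def basic_z_change(baseline_values, analysis_values, threshold=Z_THRESHOLD):
    """Return (summary z-score, changed) for one pixel and one band or index.

    baseline_values: clear observations in the target date range of the baseline years.
    analysis_values: clear observations in the same date range of the analysis year.
    """
    mu = mean(baseline_values)
    sd = stdev(baseline_values)  # sample standard deviation (an assumption)
    z_scores = [(v - mu) / sd for v in analysis_values]
    summary = mean(z_scores)  # the study summarized with the mean
    return summary, summary < threshold


# Made-up NDVI values for one pixel.
baseline = [0.80, 0.82, 0.78, 0.81, 0.79]
summary_d, flag_d = basic_z_change(baseline, [0.70, 0.72])  # defoliated year
summary_s, flag_s = basic_z_change(baseline, [0.80, 0.81])  # undisturbed year
print(round(summary_d, 2), flag_d, round(summary_s, 2), flag_s)
```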

The linear trend method uses the median of the cloud- and cloud shadow-free observations available within a specified target date period. This is done for a specified number of years prior to the analysis year, referred to as an epoch. For each epoch, an ordinary least squares linear regression model is fit on a pixel-wise basis as follows:

$$ y_b = a_0 + a_1 t $$

where $b$ is the band or index of the image, $a_0$ is the intercept, $a_1$ is the slope, $t$ is the date, and $y$ is the predicted value.

Change is identified where the slope ($a_1$) is less than a specified threshold. The threshold used for this study was −0.03, chosen based on analyst expertise in the same way as the threshold for the z-score methods. This method differs from ITRA [25] in that it does not use a t-test to classify change.
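The linear trend classification can be sketched per pixel in Python. Assumptions not stated in the text: the epoch is taken as the `epoch_length` years ending with (and including) the analysis year, `t` is the integer year so the slope is in index units per year, the function names are hypothetical, and the example NDVI values are invented.

```python
from statistics import median

SLOPE_THRESHOLD = -0.03  # threshold reported in the text


def ols_slope(xs: list, ys: list) -> float:
    """Slope a1 of the ordinary least squares line y = a0 + a1 * t."""
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    return sxy / sxx


def linear_trend_change(obs_by_year: dict, analysis_year: int, epoch_length: int,
                        threshold: float = SLOPE_THRESHOLD):
    """Return (slope, changed) for one pixel.

    obs_by_year maps a year to the clear observations inside the target date range.
    """
    years = [y for y in range(analysis_year - epoch_length + 1, analysis_year + 1)
             if obs_by_year.get(y)]
    if len(years) < 2:
        raise ValueError("need at least two years with clear observations")
    medians = [median(obs_by_year[y]) for y in years]  # one composite value per year
    slope = ols_slope(years, medians)
    return slope, slope < threshold


declining = {2011: [0.80, 0.82, 0.81], 2012: [0.76, 0.75, 0.77], 2013: [0.70, 0.72, 0.69]}
stable = {2011: [0.80], 2012: [0.80], 2013: [0.79]}
decline_slope, decline_flag = linear_trend_change(declining, 2013, 3)
stable_slope, stable_flag = linear_trend_change(stable, 2013, 3)
print(round(decline_slope, 3), decline_flag, round(stable_slope, 3), stable_flag)
```

Using the per-year median rather than the mean makes each year's composite value less sensitive to any residual cloud or shadow contamination that escaped masking.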

ORS methods differ from existing RTFD methods in several key ways. First, RTFD products are created in a near-real-time environment that GEE cannot currently provide, so the composites RTFD uses differ slightly from those used in ORS. Second, all baseline statistics are computed on a pixel-wise basis for ORS methods, while they are computed on a zone-wise basis for RTFD methods, where the zones are defined by the combination of USGS mapping zone, forest type, and MODIS look angle strata [3]. The most pronounced difference, however, is that all model input parameters for RTFD are fixed, while ORS parameters can be tailored to a specific forest disturbance event by an expert user.

We tested all three methods in both study areas. Analysts chose targeted date ranges based on expert knowledge of when each event was most visible. For the Southern New England study area, ORS analyses were conducted between 25 May and 9 July for both 2016 and 2017. Baseline years spanned 2011–2015 for the basic and harmonic z-score methods, while a three-year epoch was used for the linear trend method. For the Rio Grande National Forest study area, ORS analyses were conducted from 9 July to 15 October for 2013 and 2014. Baseline years spanned 2007–2011 for the basic and harmonic z-score methods, while a five-year epoch was used for the linear trend method.

2.7. Accuracy Assessment Methods

We performed an independent accuracy assessment to understand how well ORS products performed relative to existing FHP disturbance mapping programs. We followed best practices for sample design, response design, and analysis as outlined by Olofsson et al. (2014) [26] and Pontius and Millones (2011) [27]. We drew a simple random sample across all 30 m × 30 m pixels within each study area’s tree mask. Because all change detection for this study was performed retrospectively, timely field reference data could not be collected. Instead, we collected independent reference data using TimeSync [13], a tool that enables consistent manual inspection of the Landsat time series alongside high-resolution imagery in Google Earth. Single Landsat pixel-size (30 m × 30 m) plots were analyzed throughout the baseline and analysis periods. The response design was created for the US Forest Service Landscape Change Monitoring System (LCMS) [28] and USGS Land Change Monitoring, Assessment, and Projection (LCMAP) [29] projects to provide consistent depictions of land cover, land use, and change processes. Rigorous analyst training and calibration were used to mitigate the subjectivity inherent in interpreting data in this manner. We analyzed 230 plots across the Rio Grande National Forest and 416 plots across the Southern New England study area. We then cross-walked all responses for each year to change and no change. For consistency, the same 30 m tree mask used to draw the reference sample was applied to all ORS, IDS, and RTFD outputs. All MODIS-based ORS and RTFD outputs were resampled from 250 m to 30 m spatial resolution using nearest neighbor resampling. The reference data were then compared to each ORS output, along with the IDS and RTFD products, for each analysis year. Accuracy metrics follow suggestions by Pontius and Millones (2011) [27]. They include metrics related to allocation disagreement (overall accuracy and class-wise omission and commission error rates) and quantity disagreement (reference and predicted prevalence). A 90% confidence interval, bounded by the 5th and 95th percentiles, was calculated from the overall accuracy of 500 bootstrap samples for each assessed output. While these methods depict the accuracy of the assessed change detection methods within the tree mask, they omit all areas outside the mask from the analyses.
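The accuracy metrics and the bootstrap interval can be sketched in Python for a simple random sample of plots, with change coded as 1 and no change as 0. This is an illustration with made-up labels, not the assessment code used in this study; the function names, the fixed random seed, and the use of `statistics.quantiles` to locate the 5th and 95th percentiles are choices of this sketch.

```python
import random
from statistics import quantiles

CHANGE, NO_CHANGE = 1, 0


def overall_accuracy(reference: list, predicted: list) -> float:
    return sum(r == p for r, p in zip(reference, predicted)) / len(reference)


def omission_commission(reference: list, predicted: list, cls: int = CHANGE):
    """Class-wise omission and commission error rates (NaN if the class is absent)."""
    in_ref = [p for r, p in zip(reference, predicted) if r == cls]
    in_pred = [r for r, p in zip(reference, predicted) if p == cls]
    omission = 1 - sum(p == cls for p in in_ref) / len(in_ref) if in_ref else float("nan")
    commission = 1 - sum(r == cls for r in in_pred) / len(in_pred) if in_pred else float("nan")
    return omission, commission


def prevalence(labels: list, cls: int = CHANGE) -> float:
    """Proportion of labels in the class (reference or predicted prevalence)."""
    return sum(label == cls for label in labels) / len(labels)


def bootstrap_oa_interval(reference: list, predicted: list, n_boot: int = 500, seed: int = 42):
    """5th and 95th percentiles of overall accuracy across bootstrap resamples."""
    rng = random.Random(seed)
    n = len(reference)
    scores = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample plots with replacement
        scores.append(overall_accuracy([reference[i] for i in idx],
                                       [predicted[i] for i in idx]))
    cuts = quantiles(scores, n=20)  # 19 cut points: 5th, 10th, ..., 95th percentiles
    return cuts[0], cuts[-1]


# Made-up labels for 100 plots: 40 reference change plots, 36 predicted change plots.
reference = [CHANGE] * 40 + [NO_CHANGE] * 60
predicted = [CHANGE] * 30 + [NO_CHANGE] * 10 + [NO_CHANGE] * 54 + [CHANGE] * 6
oa = overall_accuracy(reference, predicted)          # 0.84
om, com = omission_commission(reference, predicted)  # 0.25 and 6/36
ci = bootstrap_oa_interval(reference, predicted)
print(oa, om, round(com, 3), prevalence(reference), prevalence(predicted), ci)
```

Comparing reference and predicted prevalence (0.40 versus 0.36 here) exposes quantity disagreement, which overall accuracy alone can hide.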