4.1. Accuracy of Individual Tree Detection Based on Canopy Maxima and Watershed Segmentation Approaches

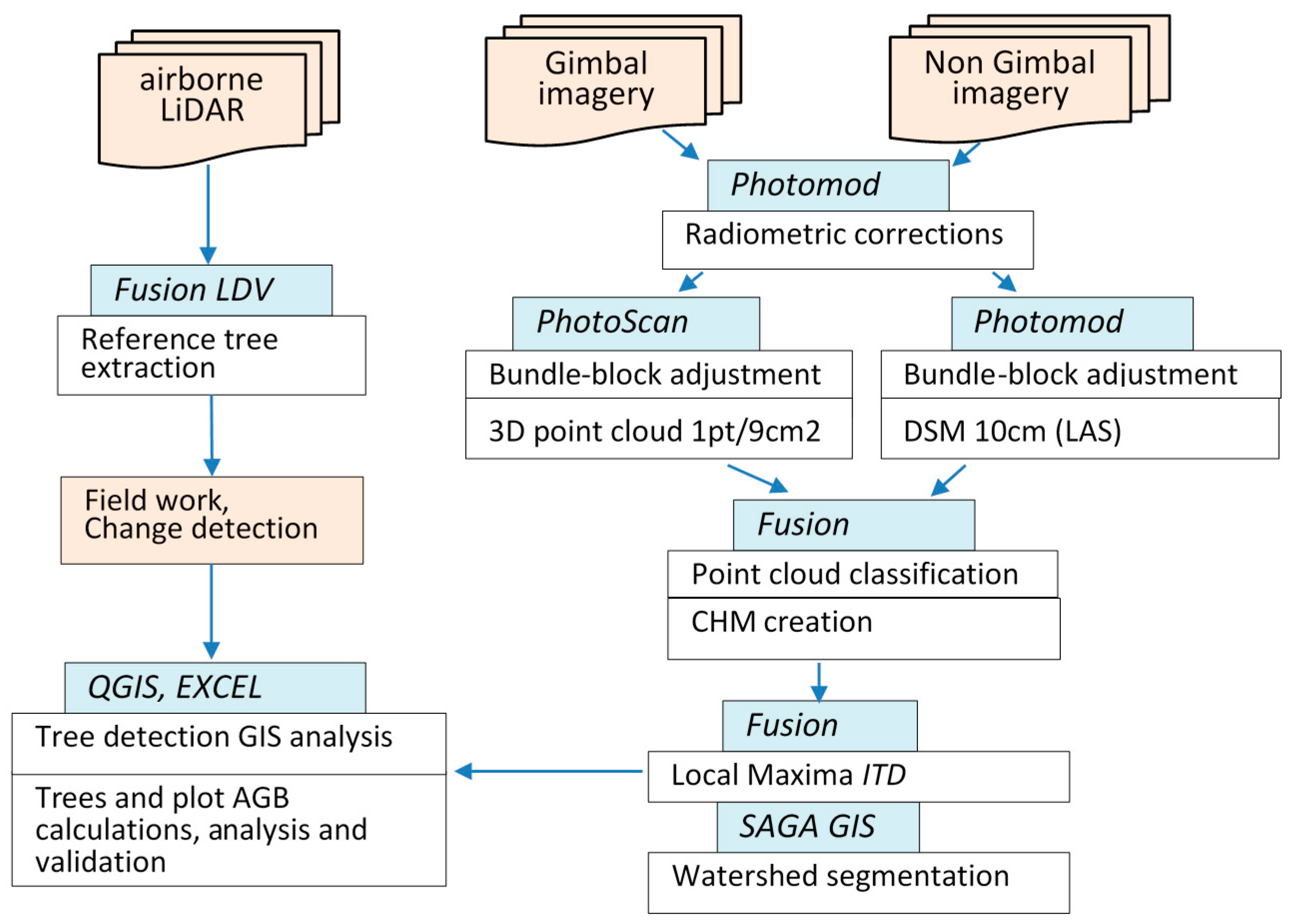

The accuracy and completeness of CHMs generated from dense 3D point clouds directly affect individual tree detection performance. CHM generation depends on the SfM matching algorithm and on how accurately 3D scene geometry is reconstructed from 2D images. In our study, tree detection accuracy was primarily related to: (1) the accuracy of the bundle-block adjustment; (2) the vegetation structure; (3) the spatial resolution of the raster CHMs; (4) an appropriately sized circular search window, derived from the height–crown diameter relationship, for identifying individual canopies with the local maxima routine; and (5) the effectiveness of the chosen SfM matching algorithm.

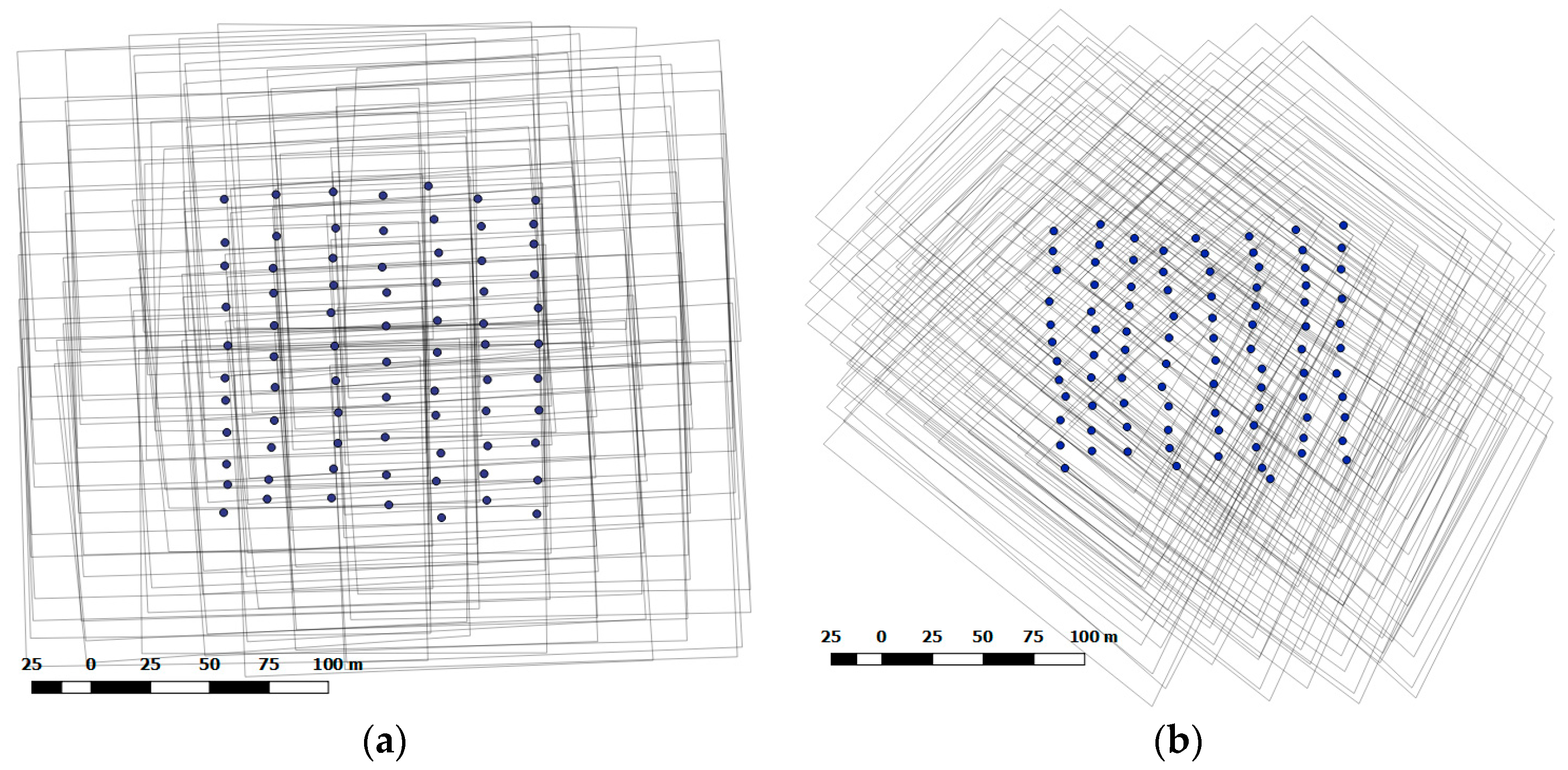

Important factors for bundle-block adjustment accuracy include the number of GCPs used for image georeferencing, their distribution, and the camera self-calibration. Agüera-Vega [31] and Goldstein [32] showed that optimal results for UAS image bundle-block adjustment and SfM can be achieved with 10–15 signalised GCPs. In our case, the GCP measurements were performed after image acquisition, so we were restricted to measuring well-identified man-made objects (poles, concrete slab corners) and natural objects (tree stumps). Because such GCPs were scarce across the study area, additional height-only (Z) GCPs were added to keep the accuracy of the block homogeneous. As the vertical accuracy of the bundle-block adjustment strongly influences tree height measurements, we suggest measuring additional non-signalised, or even signalised, height (Z) GCPs across the study area in a regular spatial pattern. In this study, the Photomod package achieved higher vertical accuracy in the bundle-block adjustment, which we attribute to its stereo mode for manual GCP measurement, a feature not available in PhotoScan.
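The vertical accuracy of a bundle-block adjustment is usually summarised from the residuals at height (Z) GCPs or independent check points. The sketch below assumes that the adjusted and surveyed heights are already available as two lists in metres; the function names are hypothetical helpers, not part of the Photomod or PhotoScan interfaces.

```python
import math


def vertical_residuals(model_z, surveyed_z):
    """Height residuals (model minus survey) at each GCP or check point, in metres."""
    if len(model_z) != len(surveyed_z) or not model_z:
        raise ValueError("expected two non-empty lists of equal length")
    return [m - s for m, s in zip(model_z, surveyed_z)]


def vertical_rmse(model_z, surveyed_z):
    """Root-mean-square vertical error; reflects both bias and scatter."""
    res = vertical_residuals(model_z, surveyed_z)
    return math.sqrt(sum(d * d for d in res) / len(res))


def vertical_mean_error(model_z, surveyed_z):
    """Signed mean error; a value far from zero indicates a systematic height offset."""
    res = vertical_residuals(model_z, surveyed_z)
    return sum(res) / len(res)


# Hypothetical check-point heights (m), for illustration only.
model = [101.32, 100.87, 102.10, 101.55]
survey = [101.20, 100.95, 102.00, 101.40]
print(round(vertical_rmse(model, survey), 3), round(vertical_mean_error(model, survey), 3))
```

Reporting both values separates a systematic offset, which would shift every extracted tree height, from random scatter.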

The accurate representation of the terrain is crucial for characterising the 3D structure of vegetation, which is necessary for CHM calculations [

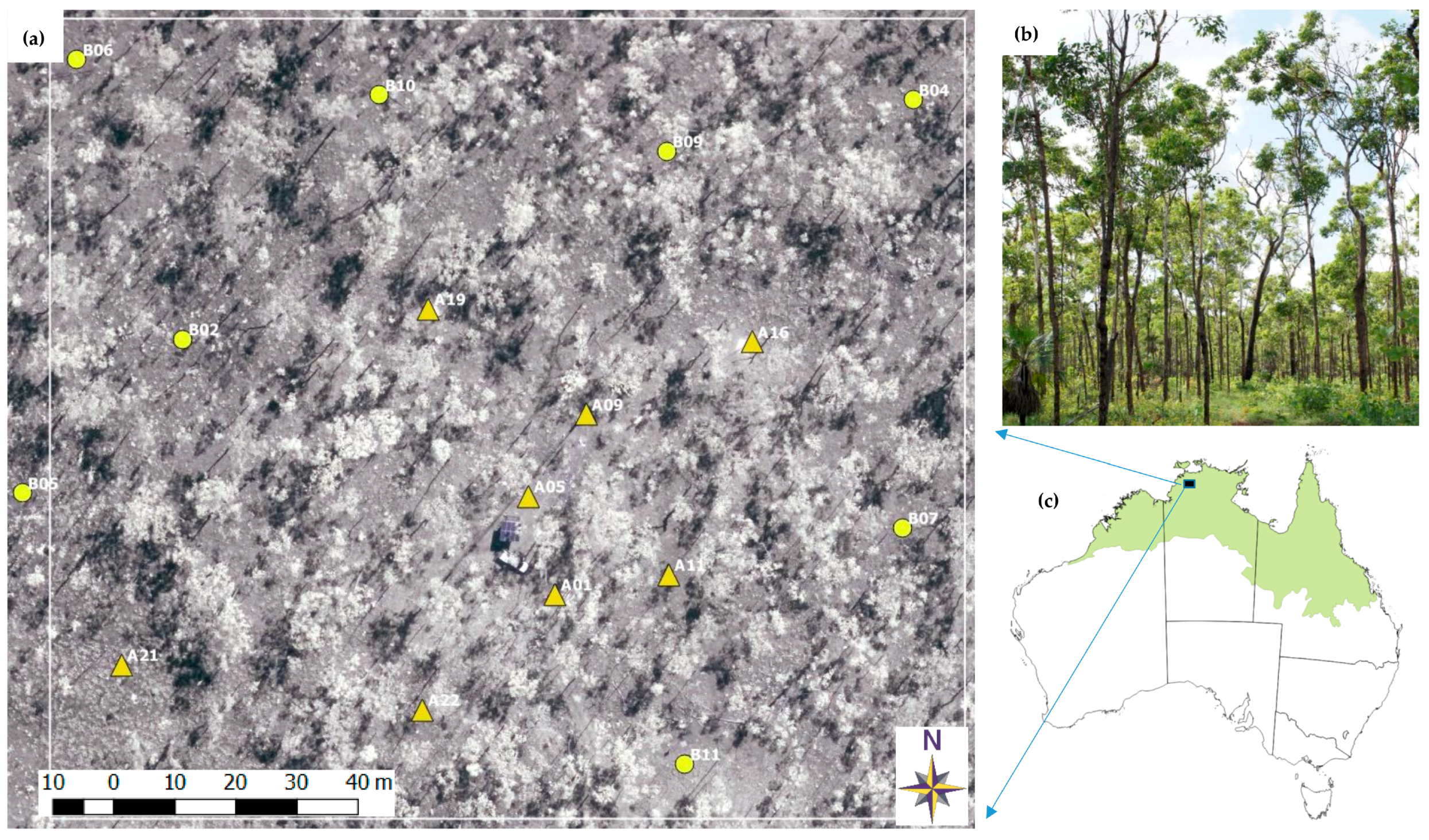

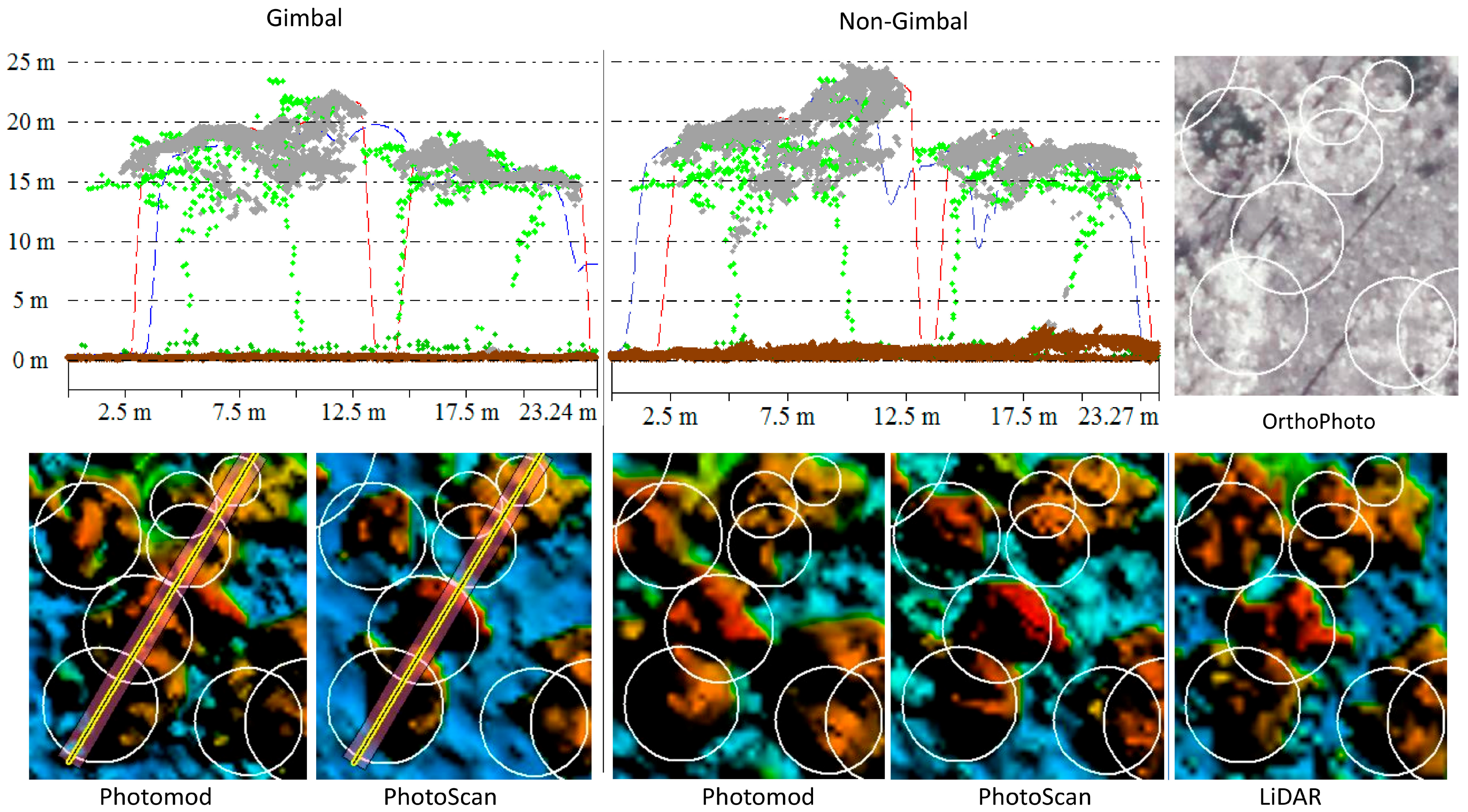

15]. The current study found that the

Eucalyptus spp. savanna vegetation structure is sufficiently transparent for accurate terrain reconstruction by SfM matching techniques. Based on our results, ~50% of all 3D point cloud extracted points related to the ground surface, which negates the need to use an external digital terrain model for CHM generation (

Figure 6). On the other hand, crown transparency had a direct impact on tree detection rates using SfM matching. These findings suggest that the optimal image data acquisition time is between the end of the wet and start of the dry seasons, when canopy cover of Australian tropical savanna is at maximum [

33]. Overall, SfM-based ground surfaces provided an accurate and applicable representation of the terrain across the study plot (

Table 2). The largest differences in the non-gimbal SfM-based models likely originated from the poor reconstructed image geometry during image block relative orientation (tie point matching) and 3D point cloud SfM calculations (high noise;

Figure 6).
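Because the terrain was taken from the ground points of the SfM cloud itself, the CHM reduces to the cell-wise difference between the surface and terrain rasters. A minimal sketch, assuming both rasters have already been interpolated onto the same grid and are held as nested lists to keep the example dependency-free; clamping negative values to zero is a common convention, not necessarily the processing used in this study.

```python
def canopy_height_model(dsm, dtm, floor=0.0):
    """Cell-wise CHM = DSM - DTM for two co-registered grids (lists of rows, metres).

    Negative differences, which usually indicate noise where the surface dips below
    the terrain, are clamped to `floor`.
    """
    if len(dsm) != len(dtm) or any(len(a) != len(b) for a, b in zip(dsm, dtm)):
        raise ValueError("DSM and DTM must have the same grid shape")
    return [
        [max(surface - terrain, floor) for surface, terrain in zip(row_s, row_t)]
        for row_s, row_t in zip(dsm, dtm)
    ]


# Hypothetical 2 x 2 tiles (m): one tree crown cell and three near-ground cells.
dsm = [[102.0, 115.5], [101.2, 100.9]]
dtm = [[101.5, 101.0], [101.3, 100.9]]
chm = canopy_height_model(dsm, dtm)
print(chm)  # [[0.5, 14.5], [0.0, 0.0]]
```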

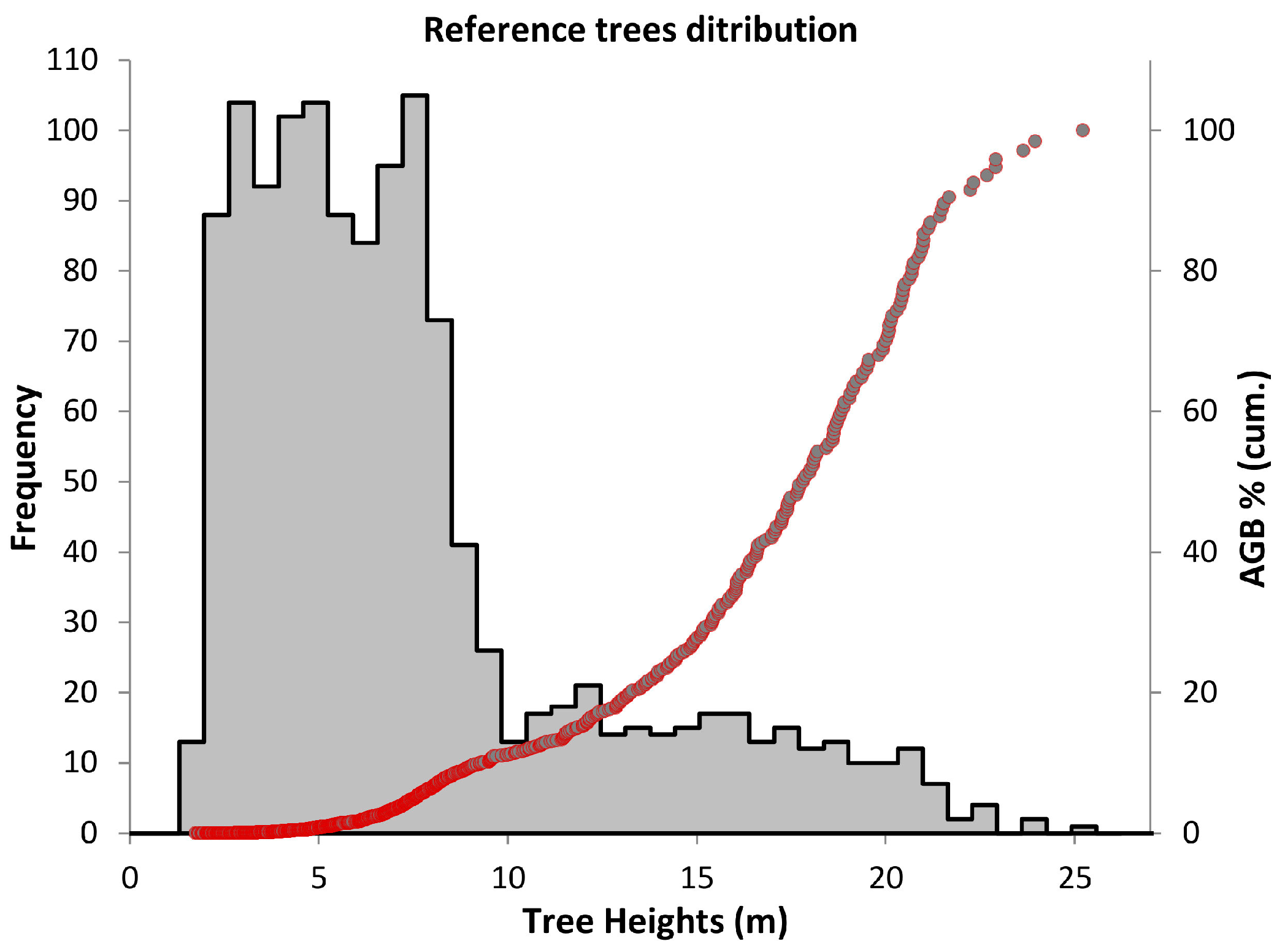

The spatial resolution of the CHM strongly affects both the detectability of small trees (<10 m; omission error) and the local maxima detection of tall tree crowns (commission error). We found that small, understorey, and intermediate trees could not be reliably identified with the local maxima approach at any resolution: the detection rate was 35% at 0.3–0.4 m CHM resolution and fell to 25% at 1 m CHM resolution. Many LiDAR studies [34,35,36,37] likewise report low detection rates for small trees (<40%, depending on the ITD approach), attributing them to poor representation in point clouds due to obscuration by the overstorey. In our study, however, the omission error for trees <10 m had only a minor influence on the final biomass estimates, since small trees together account for only 13% of total AGB.
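The trade-off above is usually quantified per height class: the detection rate is the share of field-measured trees matched to a detected tree (the omission error is its complement), and the AGB share of a class shows how much an omission in that class matters for the biomass total. A minimal sketch, assuming tree-to-detection matching has already been done; all data and names are hypothetical.

```python
def detection_rate(heights, detected, min_h=None, max_h=None):
    """Fraction of reference trees with min_h <= H < max_h that were matched to a detection."""
    in_class = [
        hit for h, hit in zip(heights, detected)
        if (min_h is None or h >= min_h) and (max_h is None or h < max_h)
    ]
    if not in_class:
        raise ValueError("no reference trees in this height class")
    return sum(in_class) / len(in_class)


def agb_share(heights, agb, max_h):
    """Share of total reference AGB held by trees shorter than max_h."""
    return sum(b for h, b in zip(heights, agb) if h < max_h) / sum(agb)


# Hypothetical field plot: heights (m), detection flags and per-tree AGB (kg).
heights = [6.0, 8.5, 12.0, 15.0, 22.0]
detected = [False, True, True, True, False]
agb = [10.0, 20.0, 100.0, 150.0, 220.0]

small_rate = detection_rate(heights, detected, max_h=10.0)  # 0.5, so omission error 0.5
large_rate = detection_rate(heights, detected, min_h=10.0)
small_share = agb_share(heights, agb, max_h=10.0)            # 0.06
print(small_rate, round(large_rate, 3), small_share)
```

A low detection rate in a class that holds little of the biomass, as with the <10 m trees here, has a correspondingly small effect on plot AGB.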

False tree peaks (*H* > 10 m) posed a further challenge. About 40% of the commission error was related to multiple local maxima within a single tree crown, while the remainder represented falsely detected trees. Using the 0.3 m CHM roughly doubled (~100% increase) the number of extra local maxima within tree crowns, which in turn led to greater commission errors and substantial AGB overestimation. Detection of dominant and co-dominant trees remained stable at 0.5 and 1 m CHM resolution, providing a reliable detection rate (65–70%) for tropical *Eucalyptus* spp. savanna (Table 3).
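One common way to suppress multiple maxima within a crown is to scale the circular search window with the height of each candidate cell through a height–crown diameter relationship. The sketch below assumes a linear relationship with placeholder coefficients `a` and `b`, which would have to be fitted to field data for each project; it works on a nested-list CHM and is not the implementation used in this study.

```python
import math


def crown_radius_m(height_m, a=1.0, b=0.25):
    """Hypothetical linear height-crown diameter model: diameter = a + b * H; returns radius (m)."""
    return (a + b * height_m) / 2.0


def local_maxima(chm, cell_size, min_height=2.0, radius_fn=crown_radius_m):
    """Return (row, col, height) for cells not exceeded by any other cell inside a
    circular window whose radius depends on the cell's own height.

    Equal heights on a flat crown top are all kept, so plateaus can still yield
    more than one maximum.
    """
    rows, cols = len(chm), len(chm[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            h = chm[r][c]
            if h < min_height:
                continue  # ignore ground and low vegetation
            radius_cells = radius_fn(h) / cell_size
            reach = int(math.floor(radius_cells))
            is_peak = True
            for dr in range(-reach, reach + 1):
                for dc in range(-reach, reach + 1):
                    if dr * dr + dc * dc > radius_cells ** 2:
                        continue  # outside the circular window
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols and chm[rr][cc] > h:
                        is_peak = False
                        break
                if not is_peak:
                    break
            if is_peak:
                peaks.append((r, c, h))
    return peaks


# Hypothetical 1 m CHM with one tall crown and one small isolated tree.
chm = [
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 8.0, 9.0, 0.0, 0.0, 0.0],
    [0.0, 9.0, 14.0, 13.5, 0.0, 0.0],
    [0.0, 0.0, 12.0, 0.0, 0.0, 3.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
]
print(local_maxima(chm, cell_size=1.0))  # [(2, 2, 14.0), (3, 5, 3.0)]
```

Passing a very small constant window (for example `radius_fn=lambda h: 0.5`) makes every canopy cell in this grid a "maximum", which illustrates how too small a window relative to crown size inflates commission errors.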

Although all models (Table 4 and Table 5) showed similar tree detection rates, models based on the PhotoScan 3D point cloud performed slightly better, especially with watershed segmentation. This difference could be attributed to the different matching algorithms used in the two software packages. While all SfM-based models showed generally adequate tree detection rates, LiDAR-based detection was better by 17% for all trees and by 9% for dominant and co-dominant trees. Comparison of the LiDAR and SfM point cloud vertical profiles (Figure 6) shows that SfM did not capture the foliage distribution of the midstorey and understorey canopy layers. At the same time, the SfM point cloud had a greater point density than the LiDAR data, a density that depends only on image resolution and the matching algorithm used. The discrepancy in detection rates between the LiDAR and SfM data could likely be partly reduced by using a camera with a larger sensor and by acquiring oblique imagery, which is an important consideration for future research.
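Vertical profiles like those compared above can be approximated by binning normalised point heights (point elevation minus terrain) and expressing each bin as a share of all points; a missing midstorey then shows up as near-empty middle bins. A minimal sketch with hypothetical point heights; the bin size is an arbitrary choice.

```python
def vertical_profile(heights, bin_size=1.0):
    """Fraction of points in each height bin [k*bin_size, (k+1)*bin_size).

    `heights` are assumed to be normalised above ground; negative values are
    treated as noise and ignored.
    """
    valid = [h for h in heights if h >= 0.0]
    if not valid:
        return []
    n_bins = int(max(valid) // bin_size) + 1
    counts = [0] * n_bins
    for h in valid:
        counts[int(h // bin_size)] += 1
    return [n / len(valid) for n in counts]


# Hypothetical normalised heights (m): LiDAR returns reach the midstorey, SfM points do not.
lidar = [0.1, 0.3, 2.5, 4.0, 6.5, 11.0, 12.5, 13.2]
sfm = [0.1, 0.2, 0.4, 11.8, 12.6, 13.1, 13.4, 13.9]
print(vertical_profile(lidar, 5.0))  # [0.5, 0.125, 0.375]
print(vertical_profile(sfm, 5.0))    # [0.375, 0.0, 0.625]
```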

Tree detection accuracy was also strongly affected by the size of the local maxima search window relative to tree crown size, so filter dimensions require careful selection [26,38]. When using the canopy maxima approach, individual tree detection accuracy can therefore be improved by establishing the height–crown diameter relationship before each project. Watershed segmentation may serve as a primary tree detection approach, since it does not require the height–crown diameter relationship. To minimise commission errors, however, watershed segmentation requires a threshold value that determines when adjacent segments are joined. Another advantage of watershed segmentation over the local maxima approach is that it provides additional tree attributes, such as crown delineation and canopy area. However, the watershed segmentation approach cannot correctly extract tree segment areas, owing to the considerable variation in crown diameter and the crown transparency of dominant and co-dominant Eucalypt trees. Thus, the crown area segments extracted by watershed segmentation cannot improve the AGB estimation of Eucalypt trees in Australian tropical savannas.
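Watershed segmentation can be sketched as flooding the inverted CHM from seed cells (for example, local maxima): cells are visited from highest to lowest, and each unlabelled neighbour inherits the label of the crown that reaches it first. This minimal priority-flood version assumes the seeds are given, uses 4-connectivity and ignores cells below a minimum height; it does not implement the segment-joining threshold mentioned above and is not the algorithm of any particular software package.

```python
import heapq


def seeded_watershed(chm, seeds, min_height=2.0):
    """Label crown segments by flooding the inverted CHM from seed cells.

    seeds: list of (row, col) cells; seeds[k] receives label k + 1.
    Cells lower than min_height stay 0 (not canopy).
    """
    rows, cols = len(chm), len(chm[0])
    labels = [[0] * cols for _ in range(rows)]
    queue = []
    for label, (r, c) in enumerate(seeds, start=1):
        labels[r][c] = label
        heapq.heappush(queue, (-chm[r][c], r, c))  # negate height: highest cell pops first
    while queue:
        _, r, c = heapq.heappop(queue)
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            rr, cc = r + dr, c + dc
            if (0 <= rr < rows and 0 <= cc < cols and labels[rr][cc] == 0
                    and chm[rr][cc] >= min_height):
                labels[rr][cc] = labels[r][c]
                heapq.heappush(queue, (-chm[rr][cc], rr, cc))
    return labels


def segment_areas(labels, cell_size):
    """Crown area (m^2) per segment label."""
    areas = {}
    for row in labels:
        for label in row:
            if label:
                areas[label] = areas.get(label, 0.0) + cell_size * cell_size
    return areas


# Hypothetical 1 x 7 CHM transect (m) with two crowns seeded at their tops.
transect = [[3.0, 8.0, 12.0, 6.0, 10.0, 4.0, 1.0]]
labels = seeded_watershed(transect, seeds=[(0, 2), (0, 4)])
print(labels)                      # [[1, 1, 1, 1, 2, 2, 0]]
print(segment_areas(labels, 0.5))  # {1: 1.0, 2: 0.5}
```

In this toy transect the valley cell (6 m) is assigned to the taller crown simply because that crown's front reaches it first; with transparent, irregular Eucalypt crowns such boundaries may not match the actual crown edges.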

4.2. The Effect of Camera Calibration Precision on the Accuracy of Tree Height and Biomass Estimation

In this study, the accuracy of the CHM had a direct effect on final AGB estimation, given indirect allometry based only on tree height. Under/overestimation of tree heights (

Table 6) led to corresponding variation in plot AGB estimation, from −11% to +15% for trees (

H > 10 m), depending on the model (except in the case of the non-gimbal

PhotoScan model). The

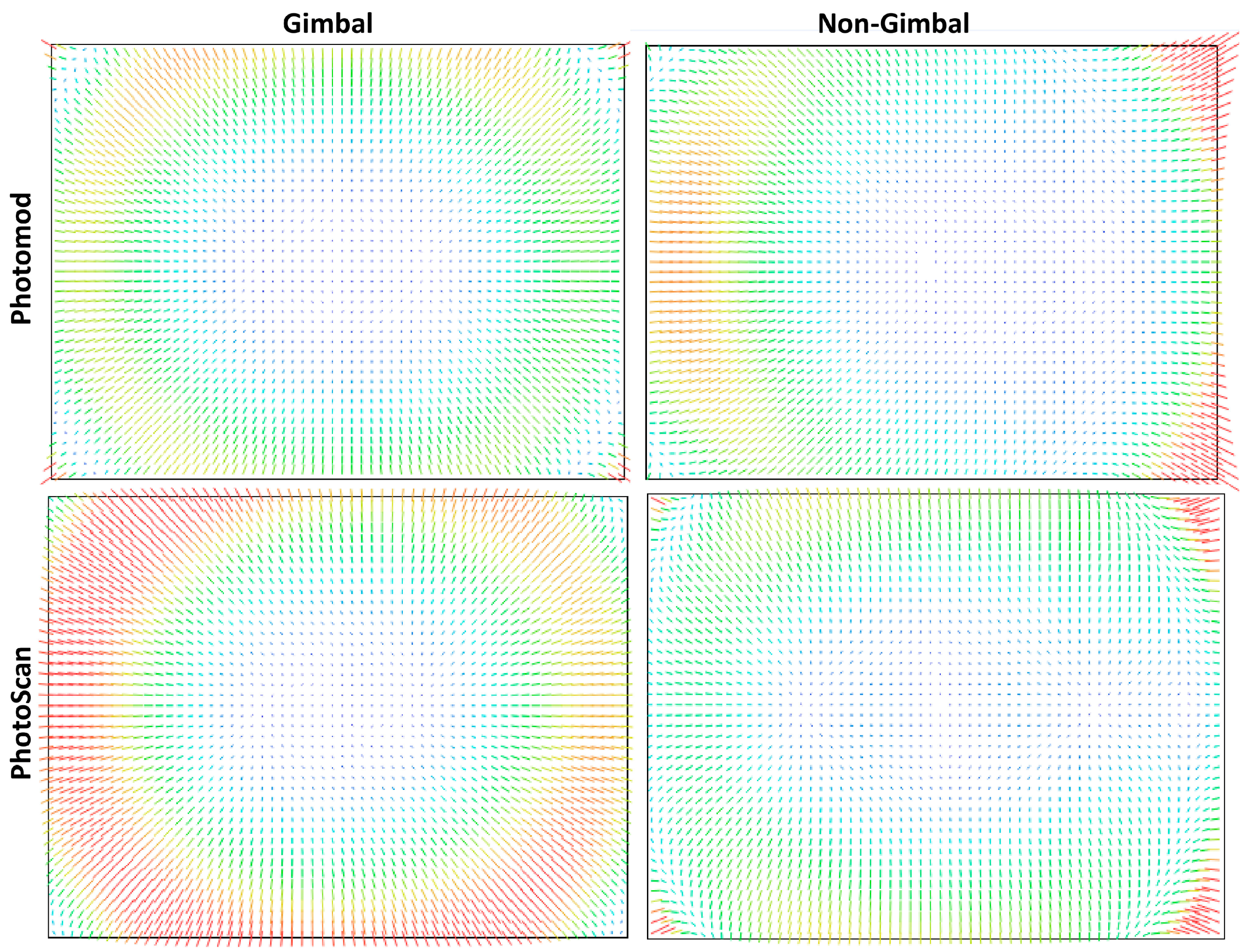

Photomod gimbal-based CHMs tended to underestimate tree height (~−25 cm mean), while the

PhotoScan models overestimated (~+10 cm mean). The tree height underestimation in the

Photomod models can be partly explained by smoothing filters and the interpolation process applied during the DSM creation from the 3D point cloud. As well, the results from

Photomod and

PhotoScan are likely to be related to volatility and errors in the camera’s self-calibration process during the independent block-bundle adjustments (

Figure 7), which therefore affected the vertical accuracy of the extracted digital surface model [

39].
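Because AGB here is a function of height alone, a systematic height error propagates directly into biomass. For an allometry of the power-law form AGB = a·H^b (an assumed form; the coefficients below are placeholders, not the allometry used in this study), a uniform height bias ΔH changes a single tree's AGB by roughly b·ΔH/H in relative terms, and the coefficient a cancels out. The sketch applies the two mean biases reported above to hypothetical plot heights.

```python
def agb_power_law(height_m, a=0.05, b=2.5):
    """Hypothetical height-only allometry AGB = a * H**b; coefficients are placeholders."""
    return a * height_m ** b


def relative_agb_error(heights, height_bias_m, a=0.05, b=2.5):
    """Relative change in plot AGB when every tree height is shifted by height_bias_m."""
    reference = sum(agb_power_law(h, a, b) for h in heights)
    biased = sum(agb_power_law(h + height_bias_m, a, b) for h in heights)
    return biased / reference - 1.0


# Hypothetical plot heights (m) combined with the mean CHM biases reported in the text.
plot_heights = [11.0, 14.5, 18.0, 23.0]
for label, bias in (("Photomod gimbal", -0.25), ("PhotoScan", 0.10)):
    print(label, round(100 * relative_agb_error(plot_heights, bias), 1), "%")
```

These numbers isolate the height-bias component only; the plot-level variation reported above also includes tree detection omission and commission errors.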

The differences in the self-calibration results may be explained, firstly, by all of the GCPs being located on flat terrain (<0.5 m height range). This configuration is disadvantageous for accuracy and for the correlation between camera parameters, making it a suboptimal basis for a metrically correct, scene-independent calibration [40]. Secondly, following James and Robson [39], the self-calibrating bundle adjustment of non-metric cameras may not derive lens radial distortion accurately, so a systematic vertical error can remain even with a sufficient number of GCPs. We anticipate that a camera with a larger sensor and detector pixel size could improve the accuracy of tree height estimation, owing to its more stable internal sensor geometry and better radiometry.
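To see why an inaccurate radial distortion estimate leaves a systematic rather than random error, consider the widely used Brown polynomial model, in which image points are displaced radially by a factor that grows with distance from the principal point. The sketch assumes normalised image coordinates and illustrative coefficient values; PhotoScan and Photomod parameterise their camera models in their own ways, so this is a generic illustration rather than either package's model.

```python
def radial_distort(x, y, k1, k2=0.0, k3=0.0):
    """Brown radial distortion of normalised image coordinates (principal point at 0, 0)."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    return x * factor, y * factor


def radial_shift(x, y, k1_true, k1_estimated):
    """Image-space displacement left behind when k1 is estimated with an error."""
    xt, yt = radial_distort(x, y, k1_true)
    xe, ye = radial_distort(x, y, k1_estimated)
    return ((xt - xe) ** 2 + (yt - ye) ** 2) ** 0.5


# Illustrative values: the residual grows with image radius instead of averaging out.
for radius in (0.0, 0.25, 0.5, 0.75):
    print(radius, radial_shift(radius, 0.0, k1_true=-0.020, k1_estimated=-0.018))
```

Because the residual is a smooth function of image radius that repeats in every overlapping image, it does not cancel in the adjustment, which is consistent with the systematic vertical error described in [39].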

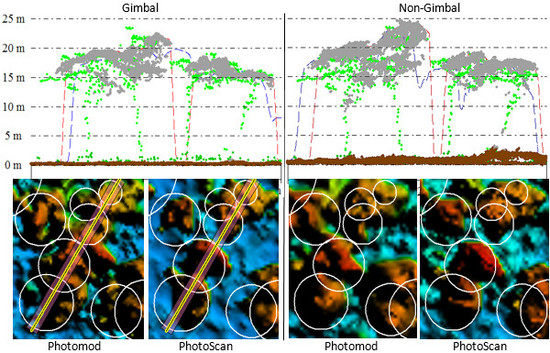

The Photomod-based model from the non-gimbal flight gave slightly better results than that from the gimbal flight, particularly for the detection of several tall trees (Figure 8). These results are likely related to the noticeable variation in camera orientation angles and to the camera not pointing at nadir during the non-gimbal flight. Besides self-calibration issues, omission and commission errors in tree detection do not compensate for each other, which leads to systematic under- or overestimation of AGB in each model.

4.3. Aspects and Limitations of Data Acquisition by GoPro HERO4 Camera

The main limitation of the GoPro camera is its very small sensor (1.55 µm detector pixel size), which, combined with a small lens aperture, results in low sensitivity to light (low signal-to-noise ratio and low dynamic range). In addition, the camera offers only automatic shooting and continuous data acquisition modes (a 1 s interval in our case). As a result, to achieve a sufficiently short exposure time (<1/1000 s), the camera must be operated in sunny conditions with a sun angle >50°. Hence, we do not recommend using GoPro imagery without basic radiometric pre-processing (contrast, sharpness, etc.).
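The exposure-time requirement follows from motion blur: during one exposure the platform moves speed × exposure metres over the ground, and this smear should stay well below one ground sampling distance (GSD). The 1.55 µm pixel size is taken from the text; the flying height, focal length and ground speed below are hypothetical values chosen only to illustrate the arithmetic.

```python
def ground_sampling_distance(pixel_size_m, flying_height_m, focal_length_m):
    """Nadir GSD (m per pixel) from the pinhole camera relation."""
    return pixel_size_m * flying_height_m / focal_length_m


def motion_blur_px(ground_speed_m_s, exposure_s, gsd_m):
    """Ground distance travelled during one exposure, expressed in pixels."""
    return ground_speed_m_s * exposure_s / gsd_m


PIXEL_SIZE = 1.55e-6   # GoPro HERO4 detector pixel size (from the text), m
FLYING_HEIGHT = 80.0   # hypothetical, m above ground
FOCAL_LENGTH = 3.0e-3  # hypothetical, m
GROUND_SPEED = 10.0    # hypothetical, m/s

gsd = ground_sampling_distance(PIXEL_SIZE, FLYING_HEIGHT, FOCAL_LENGTH)
for exposure in (1 / 1000, 1 / 250):
    blur = motion_blur_px(GROUND_SPEED, exposure, gsd)
    print(f"1/{round(1 / exposure)} s -> {blur:.2f} px blur")
```

With these assumed values the GSD is about 4.1 cm, and the blur is roughly a quarter of a pixel at 1/1000 s but close to a full pixel at 1/250 s.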

Direct georeferencing based only on the on-board GoPro GPS data is unsuitable for accurate forestry applications because of the low accuracy of this consumer-grade GPS (5–20 m absolute error in our case). GCPs must therefore be measured for indirect image georeferencing and camera self-calibration. This study and our wider experience in UAS data processing show that on-board GPS precision is not a major factor in successful UAS image processing. More important is the ability to deliver radiometrically corrected, undistorted images with high overlap and stable camera orientation angles, in agreement with Bosak [41]. This can be achieved by stabilising the camera platform with a gimbal during data acquisition. Although non-gimbal acquisition reduces the cost and weight of the equipment and sometimes gave better tree detection results (Figure 8), we recommend using a gimbal for accurate UAS mapping. A gimbal can help prevent unexpected problems in image block relative orientation (tie point matching) and 3D point cloud matching (high noise, which affects point classification accuracy; Table 2 and Figure 6). In most cases, these problems cannot be solved by an inexperienced user; they require considerable photogrammetric skill and experience, as well as comprehensive software tools (e.g., stereo mode and in-depth pair-wise error analysis). Taking all these factors together, we conclude that a GoPro camera with a gimbal can be used for AGB estimation of dominant and co-dominant trees in Australian tropical savannas, with a plot-level accuracy of ±15% (excluding small and understorey trees). A further limitation of this study is that the individual tree detection results presented here apply only to local areas with similar *Eucalyptus* spp. vegetation.
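With a fixed 1 s capture interval, the image overlap emphasised above is controlled by flying height and ground speed rather than by a trigger distance. A minimal sketch of the standard forward-overlap relation (overlap = 1 − base / footprint); the sensor dimension, focal length, flying height and speeds are hypothetical placeholders, not the study's flight parameters.

```python
def forward_overlap(flying_height_m, focal_length_m, sensor_along_track_m,
                    ground_speed_m_s, interval_s=1.0):
    """Fraction of the along-track ground footprint shared by consecutive nadir images."""
    footprint_m = sensor_along_track_m * flying_height_m / focal_length_m
    base_m = ground_speed_m_s * interval_s  # distance flown between exposures
    return max(0.0, 1.0 - base_m / footprint_m)


# Hypothetical values: 4.5 mm along-track sensor side, 3 mm focal length, 80 m height.
for speed in (5.0, 10.0, 15.0):
    print(speed, round(forward_overlap(80.0, 3.0e-3, 4.5e-3, speed), 3))
```

The relation assumes nadir pointing; without a gimbal, camera tilt changes the footprint from image to image, so the actual overlap varies along the strip.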