Enhancing Land Cover Mapping through Integration of Pixel-Based and Object-Based Classifications from Remotely Sensed Imagery

Abstract

1. Introduction

2. Methods

2.1. Generating the Class Proportions of Pixels and Objects

2.2. Estimating the Class Spatial Dependence from Object-Scale Properties for Each Pixel

2.3. Fusing the Class Proportions of Pixels and the Spatial Dependence of Pixels

2.4. Determining the Optimal Class Label of Each Pixel within an Object

3. Experiments

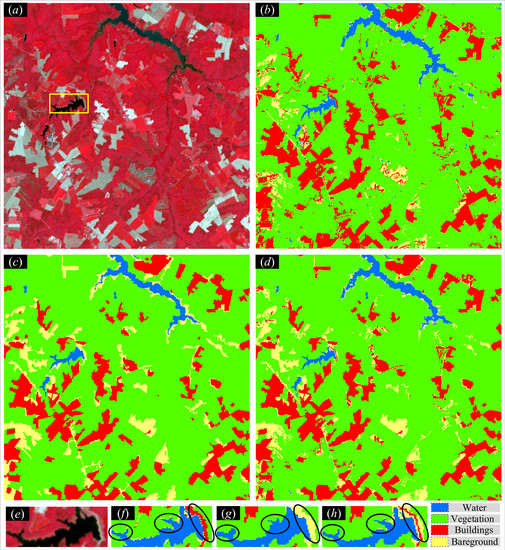

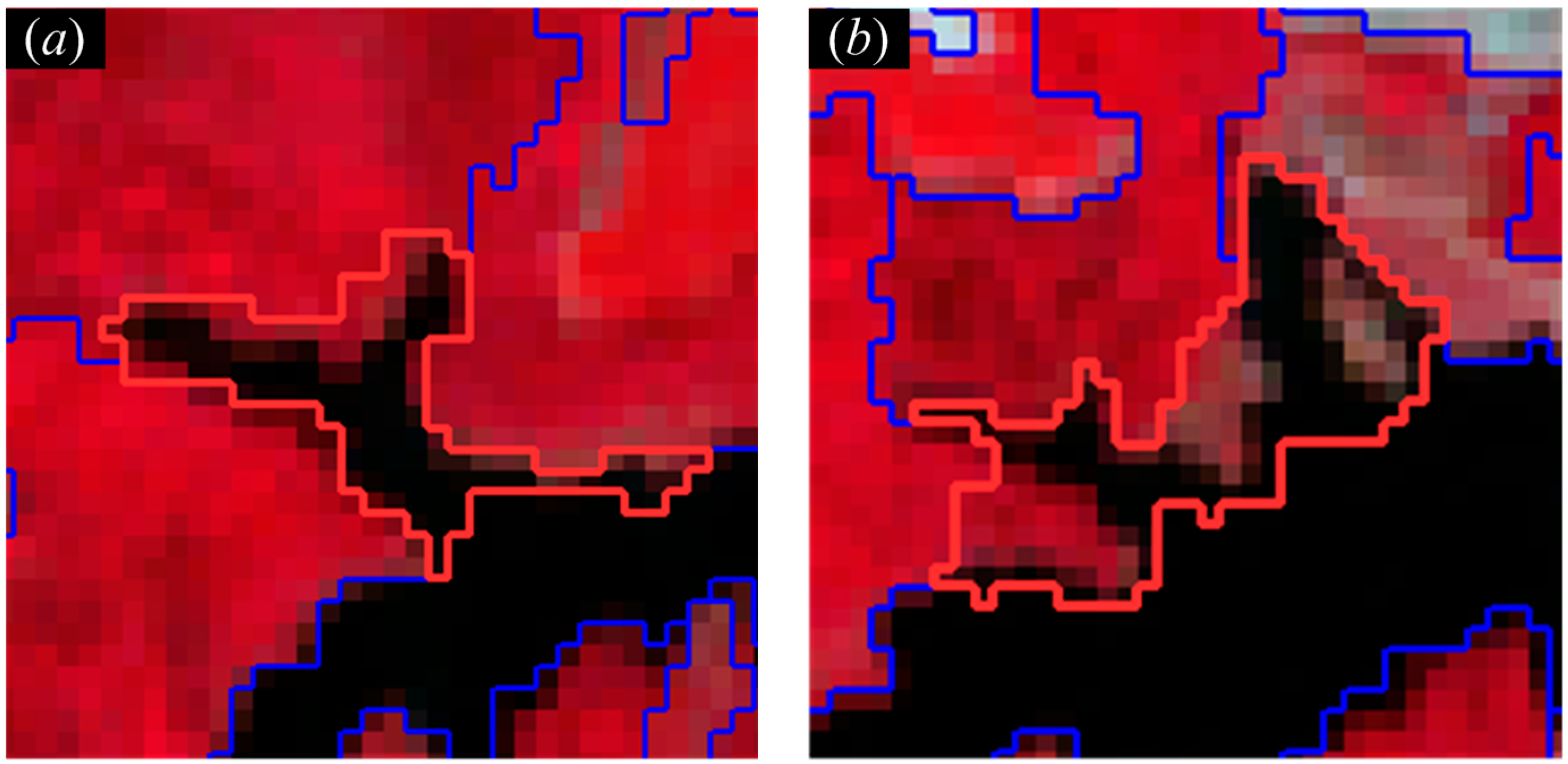

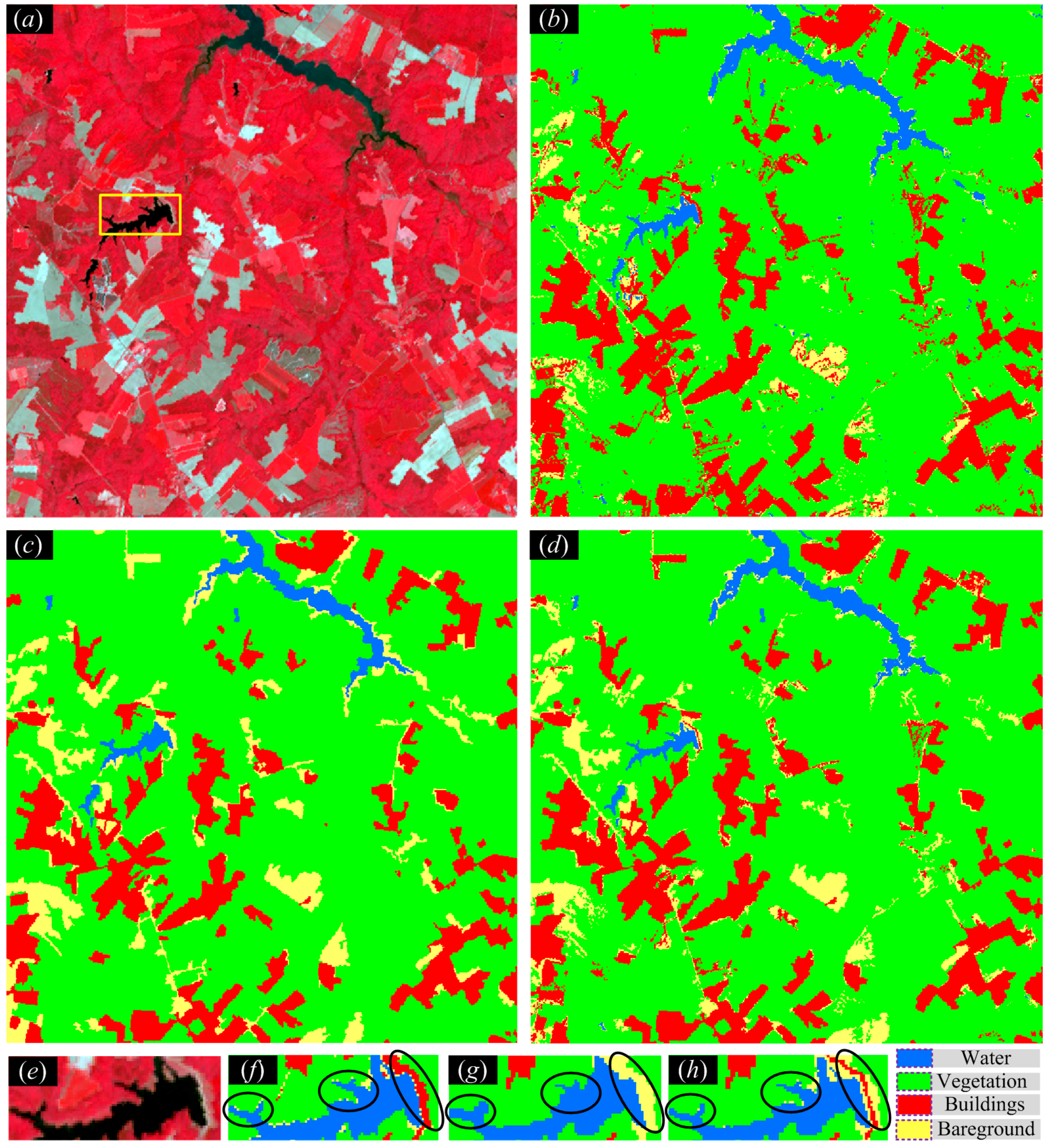

3.1. Experiment on ASTER Imagery

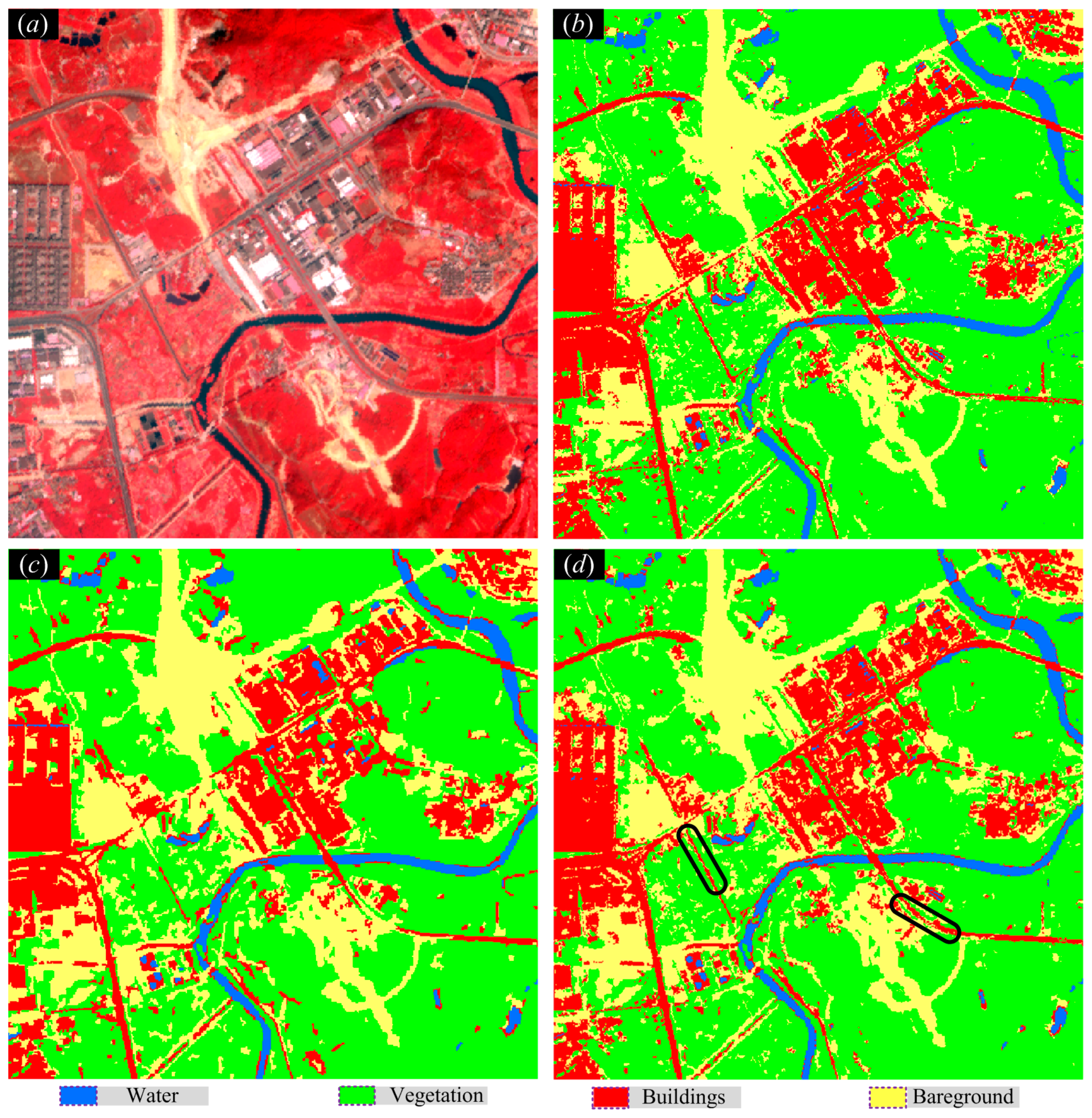

3.2. Experiment on ZY-3 Imagery

4. Discussion

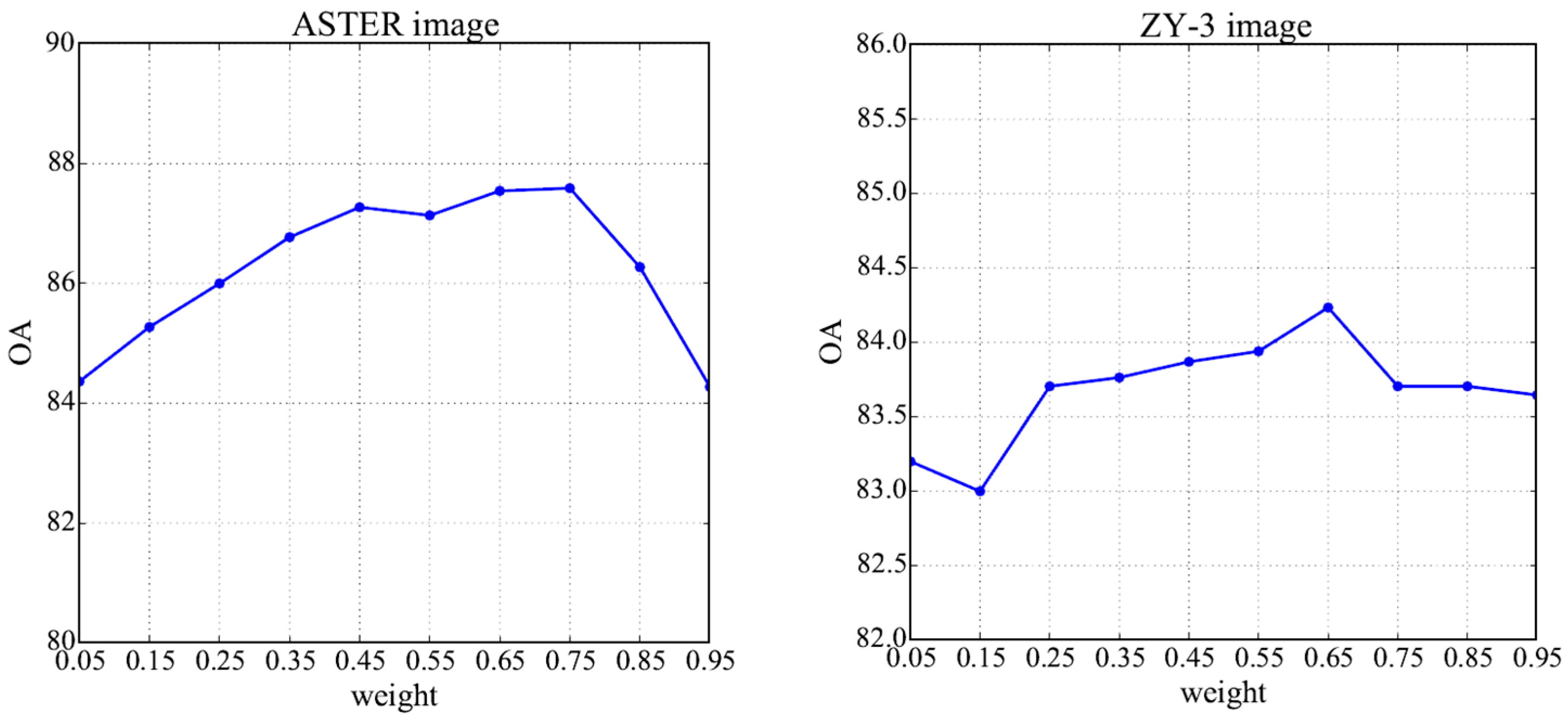

4.1. Impact of Fusion Weight on IPOC Performance

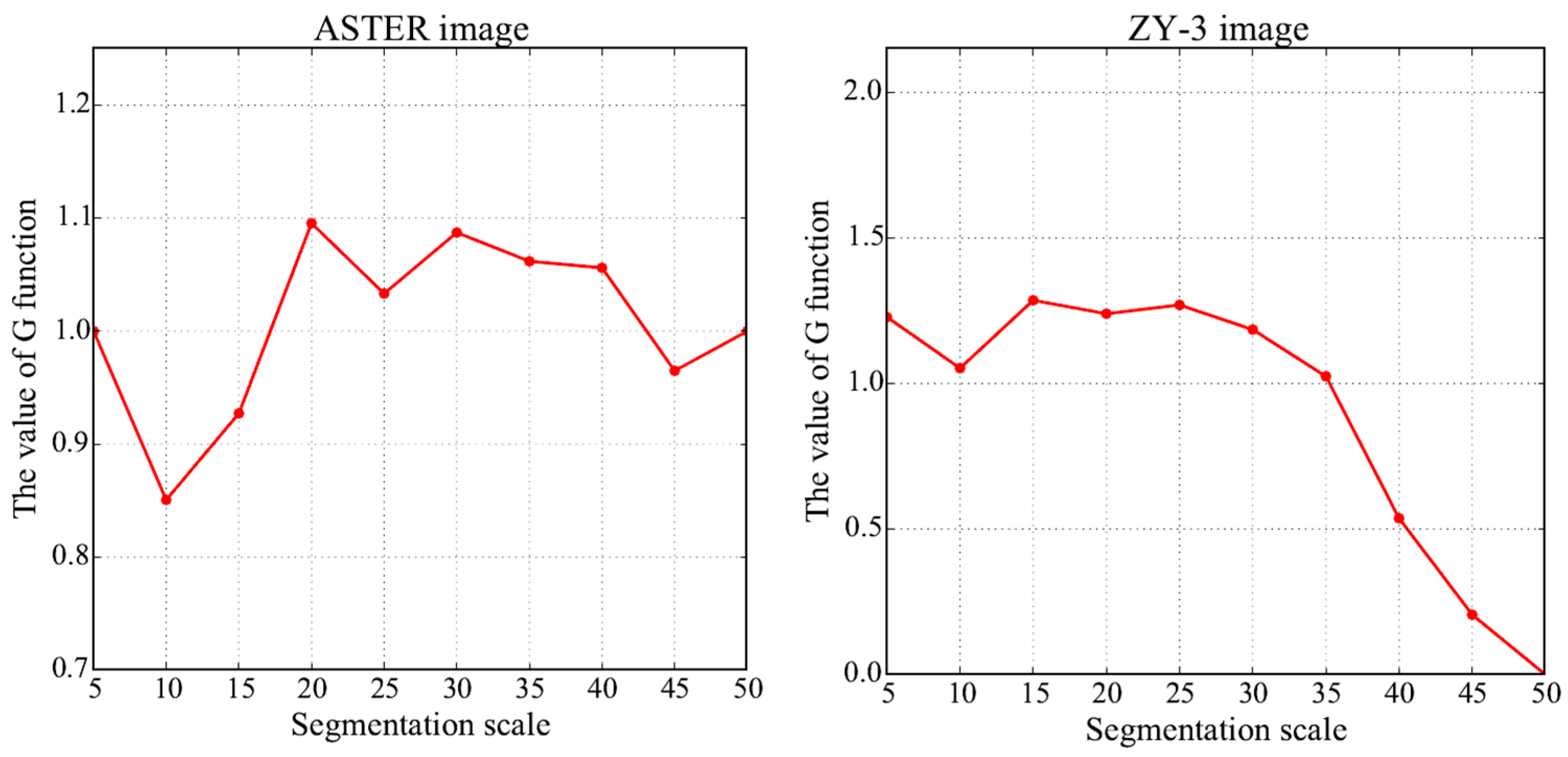

4.2. Analysis of Image Segmentation Scales

4.3. Comparison between IPOC and the Other Method

4.4. Uncertainty Analysis of Validation Data

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Method | Metric | Water | Vegetation | Buildings | Bare Ground |
|---|---|---|---|---|---|
| PHC | PA (%) | 77.25 | 92.07 | 79.04 | 55.11 |
| PHC | UA (%) | 75.26 | 86.77 | 66.67 | 78.57 |
| PHC | Overall | OA (%) = 79.95 | KA = 0.6918 | | |
| OHC | PA (%) | 68.78 | 94.17 | 79.52 | 66.53 |
| OHC | UA (%) | 80.25 | 83.85 | 90.66 | 75.11 |
| OHC | Overall | OA (%) = 82.95 | KA = 0.7327 | | |
| IPOC | PA (%) | 77.78 | 95.72 | 84.58 | 75.95 |
| IPOC | UA (%) | 97.35 | 86.14 | 90.93 | 85.36 |
| IPOC | Overall | OA (%) = 87.59 | KA = 0.8058 | | |

| Method | Metric | Water | Vegetation | Buildings | Bare Ground |
|---|---|---|---|---|---|
| PHC | PA (%) | 80.68 | 83.78 | 75.54 | 79.42 |
| PHC | UA (%) | 92.21 | 88.30 | 78.00 | 67.64 |
| PHC | Overall | OA (%) = 80.65 | KA = 0.7074 | | |
| OHC | PA (%) | 81.82 | 84.27 | 73.61 | 81.00 |
| OHC | UA (%) | 88.89 | 89.51 | 83.52 | 63.56 |
| OHC | Overall | OA (%) = 80.82 | KA = 0.7108 | | |
| IPOC | PA (%) | 84.09 | 87.07 | 79.90 | 82.85 |
| IPOC | UA (%) | 100.00 | 88.81 | 87.77 | 70.40 |
| IPOC | Overall | OA (%) = 84.24 | KA = 0.7602 | | |
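
The tables above report PA, UA, OA, and KA for each method. Assuming these abbreviations carry their conventional meanings (producer's accuracy, user's accuracy, overall accuracy, and the kappa coefficient), the following minimal sketch shows how such values are typically derived from a confusion matrix. The function name `accuracy_metrics` and the two-class matrix are hypothetical illustrations, not data or code from the article.

```python
def accuracy_metrics(confusion):
    """Compute per-class and overall accuracy measures.

    Assumed layout: confusion[i][j] counts validation samples whose
    reference class is i and whose mapped class is j. Every class is
    assumed to have at least one reference and one mapped sample.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    row_totals = [sum(row) for row in confusion]  # reference totals
    col_totals = [sum(confusion[i][j] for i in range(n)) for j in range(n)]  # mapped totals
    diagonal = [confusion[k][k] for k in range(n)]  # correctly labelled samples

    producers = [diagonal[k] / row_totals[k] for k in range(n)]  # PA per class
    users = [diagonal[k] / col_totals[k] for k in range(n)]      # UA per class
    overall = sum(diagonal) / total                              # OA
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (overall - expected) / (1 - expected)                # KA
    return producers, users, overall, kappa


# Hypothetical two-class confusion matrix (illustration only).
example = [
    [40, 10],
    [5, 45],
]
producers, users, overall, kappa = accuracy_metrics(example)
print(producers, users, overall, kappa)
```

PA and UA differ only in the denominator (reference totals versus mapped totals), which is why a class can score high on one and low on the other in the tables.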

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, Y.; Zhou, Y.; Ge, Y.; An, R.; Chen, Y. Enhancing Land Cover Mapping through Integration of Pixel-Based and Object-Based Classifications from Remotely Sensed Imagery. Remote Sens. 2018, 10, 77. https://doi.org/10.3390/rs10010077