Energy Performance Indicators in the Swedish Building Procurement Process

Abstract

1. Introduction

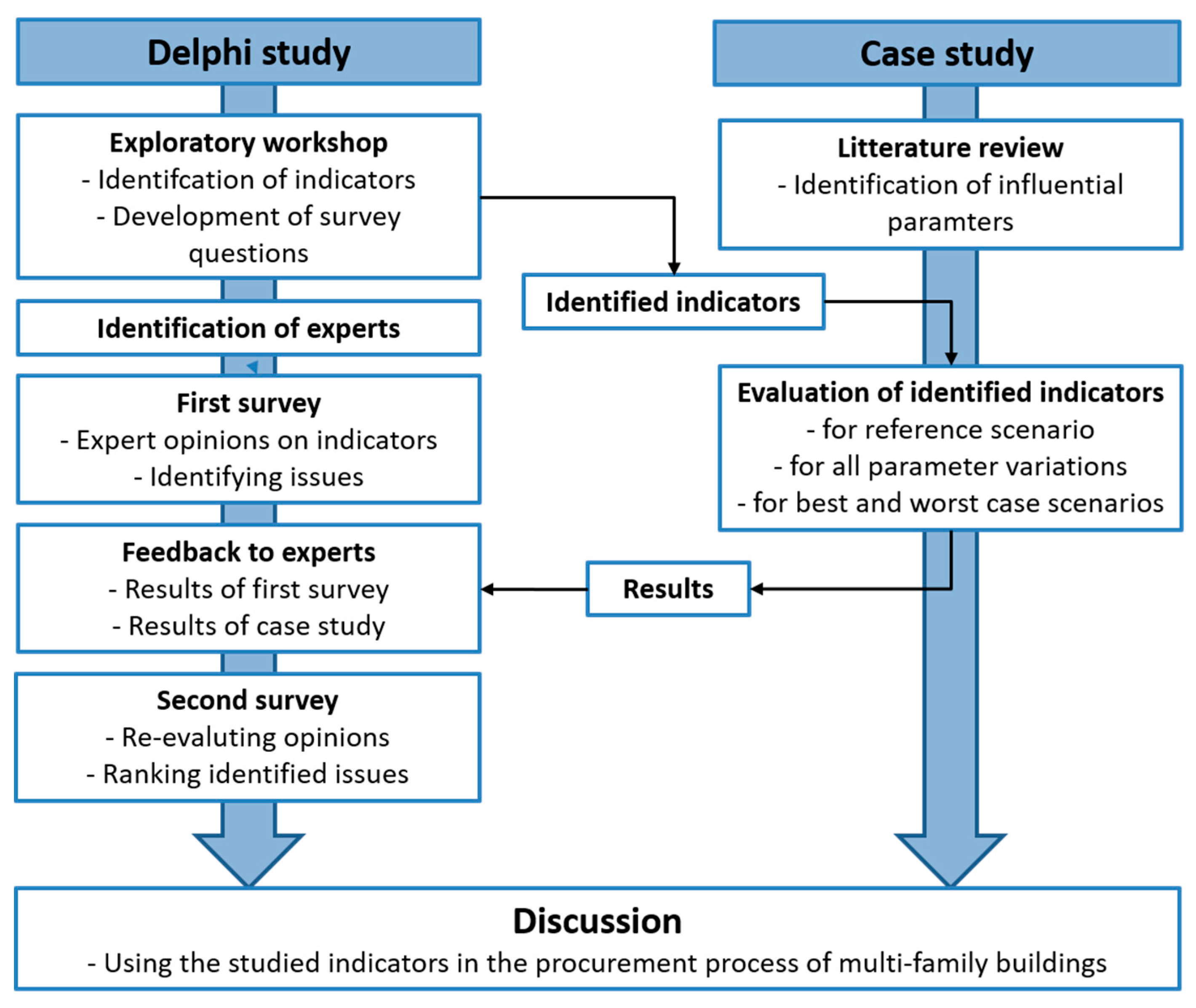

2. Method

2.1. Delphi Methodology

Application of the Delphi Methodology in this Study

- I1. the envelope air leakage at 50 Pa (L/(s·m2));

- I2. U-values for different building parts (W/m2K);

- I3. the average U-value of the building envelope (W/m2K);

- I4. the specific heat loss through heat transfer, ventilation, and air leakage at the winter outdoor design temperature as defined by the Swedish Centre for Zero-energy [45] (henceforth SHLWDT) (W/m2K);

- I5. the heat loss coefficient including heat loss through ventilation, air leakage, and heat transfer towards the outdoor air, but not towards the ground (henceforth L) (kWh/°C);

- I6. the specific net energy need for space heating, domestic hot water, and facility appliances per heated floor area (not including any energy production conversion losses or heat losses within the house premise) (kWh/m2); and

- I7. the specific purchased energy for space heating, domestic hot water, and facility appliances supplied to the building’s technical installations for building services and energy system, per heated floor area (not including “free” energy such as solar or geothermal) [22] (kWh/m2).
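As an illustration of how an envelope indicator such as I3 can be evaluated, the sketch below assumes the usual area-weighted definition of the average U-value, with a lumped thermal-bridge term added to the transmission losses. The function name and the component areas are hypothetical; only the U-values and the 72.4 W/K thermal-bridge term are taken from the reference scenario of the case study.

```python
from typing import Iterable, Tuple


def average_u_value(parts: Iterable[Tuple[float, float]],
                    thermal_bridges_w_per_k: float = 0.0) -> float:
    """Area-weighted average U-value of an envelope, in W/m2K.

    parts: (U-value in W/m2K, area in m2) for each envelope part.
    thermal_bridges_w_per_k: lumped thermal-bridge heat loss in W/K.
    """
    parts = list(parts)
    envelope_area = sum(area for _, area in parts)
    if envelope_area <= 0:
        raise ValueError("envelope area must be positive")
    transmission = sum(u * area for u, area in parts)  # W/K
    return (transmission + thermal_bridges_w_per_k) / envelope_area


# U-values and the thermal-bridge term come from the reference scenario.
# The areas are HYPOTHETICAL placeholders, not values from the paper.
example_parts = [
    (0.127, 800.0),   # external walls
    (0.0810, 400.0),  # roof
    (0.238, 400.0),   # foundation
    (1.20, 247.0),    # windows
]
print(round(average_u_value(example_parts, 72.4), 3))
```

Because the areas are placeholders, the printed value is not expected to reproduce the average U-value reported for the reference building.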

2.2. Case Study Methodology

2.2.1 Reference Scenario

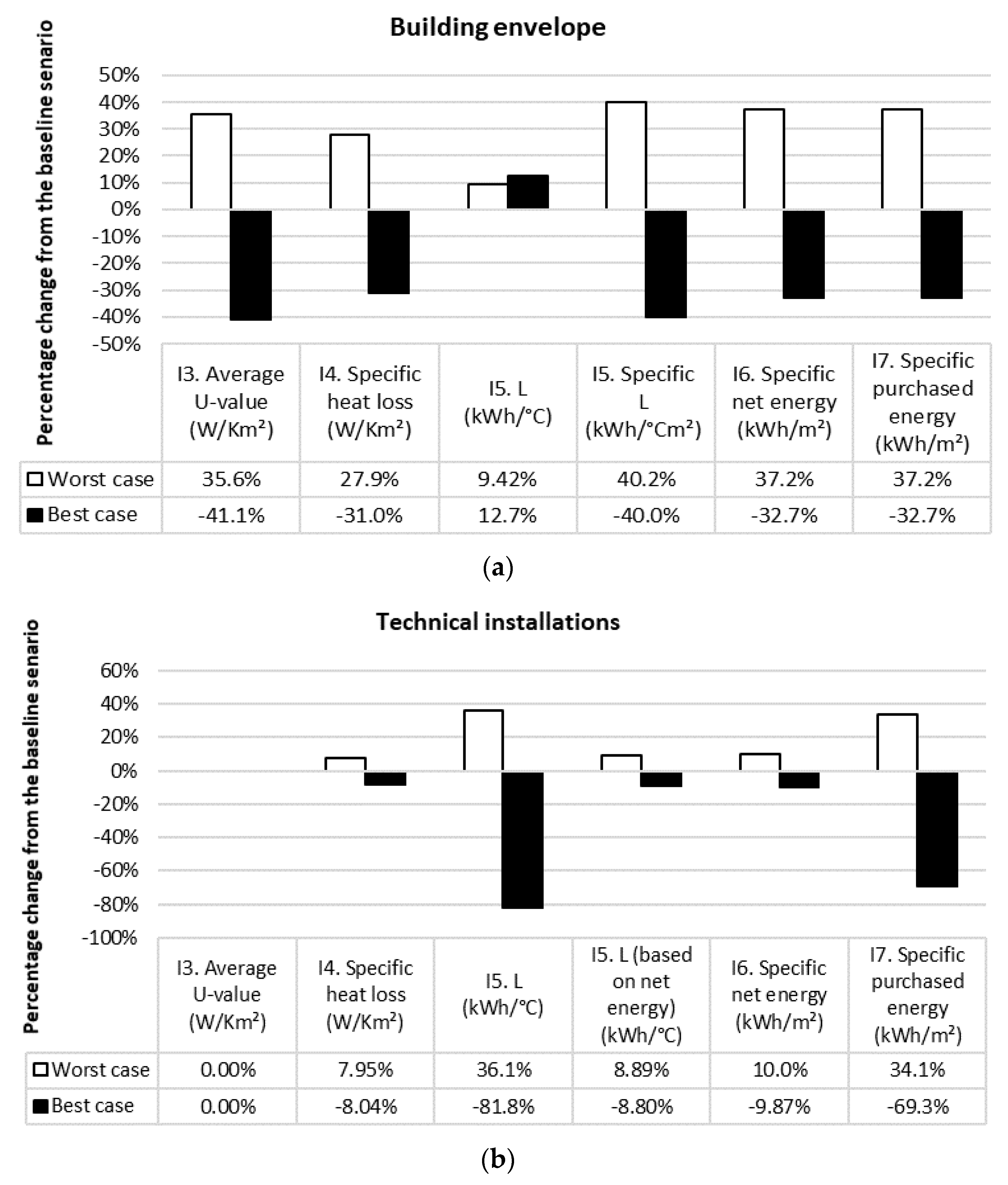

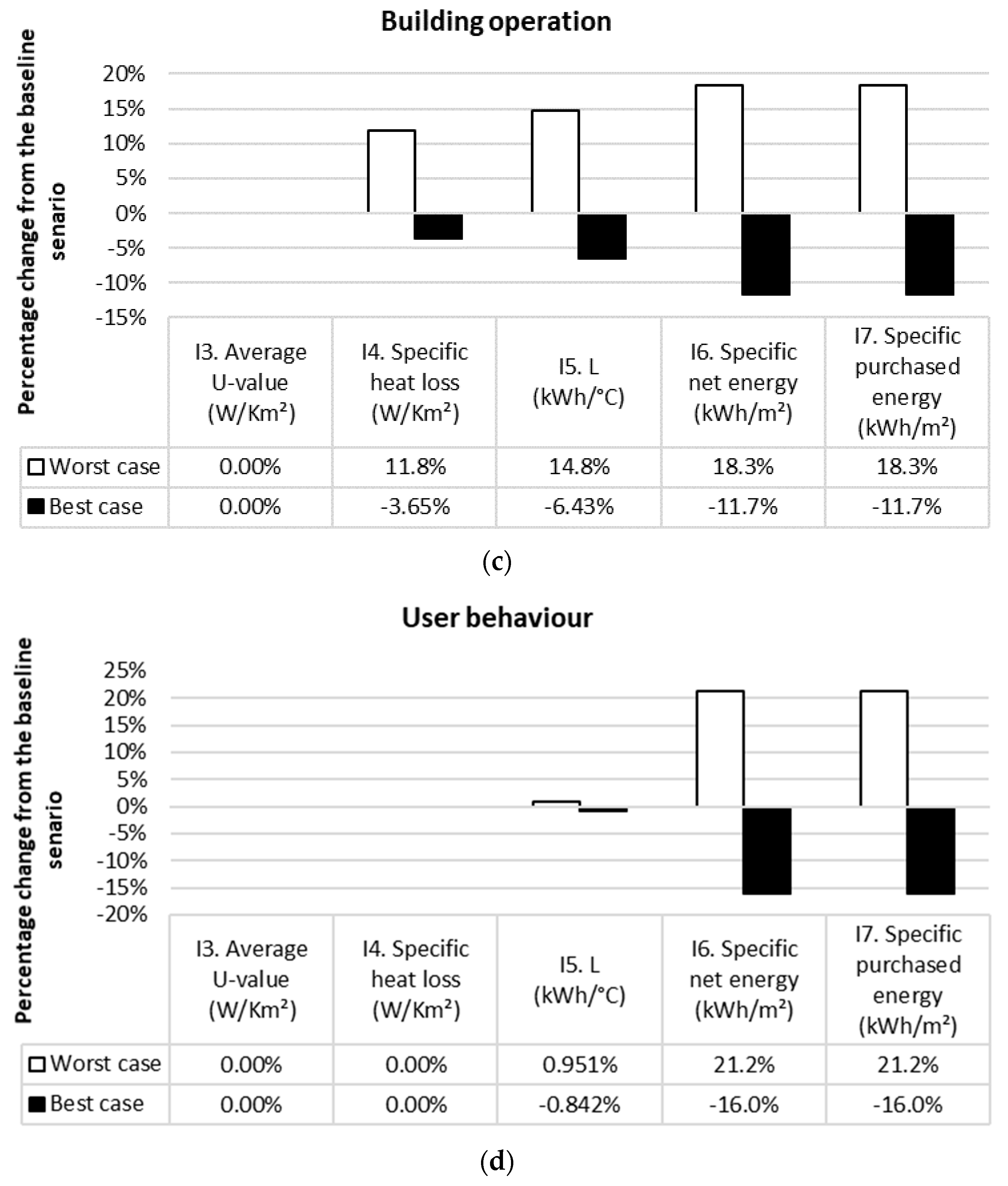

2.2.2 Parameter Variations

3. Results

3.1. Results from the Delphi Study

“(the cost) matters less, within reasonable limits”

“(the timeframe) matters less, but it may be difficult to enforce requirements (on building energy performance) if the verification process takes too long.”

“(You should) agree upon a calculation (procedure) in the procurement process and measure air tightness, heat exchanger function, and room air temperatures.”

“(You should verify building energy performance) in the design phase to show the theoretical level; (then) follow up in the finished building (at the final inspection) to ensure this level.”

“(You may use) a mix of both (evaluations in the design phase and operational phase) as long as you do not measure things we cannot influence (e.g., user behavior).”

“The building envelope may be verified in the final inspection, but the (technical) installations should be adapted to the function of the building and its residents.”

“… (the specific purchased energy) says nothing about the performance of the building envelope, you can e.g., compensate for a bad envelope by putting solar panels on the roof.”

“Although you have to measure the energy use since there is an interest (from the client) to know the cost for heating the building, the energy performance (of the building) is of course something else.”

“… (the specific purchased energy) is difficult to verify. It is difficult to determine if any deviations depend on the building or on the users.”

“The stricter the requirements get the higher percentage of the energy consumption will be influenced by user behavior and operation. This leads to a need (for the construction companies) to keep very good track of the energy consumption in order to avoid disputes.”

“The building envelope should stand for 100 years, the installations are exchanged more often. It should therefore be more important to measure parameters connected to the building envelope.”

“…the contractor has more control over the installations than over the operation and user behavior.”

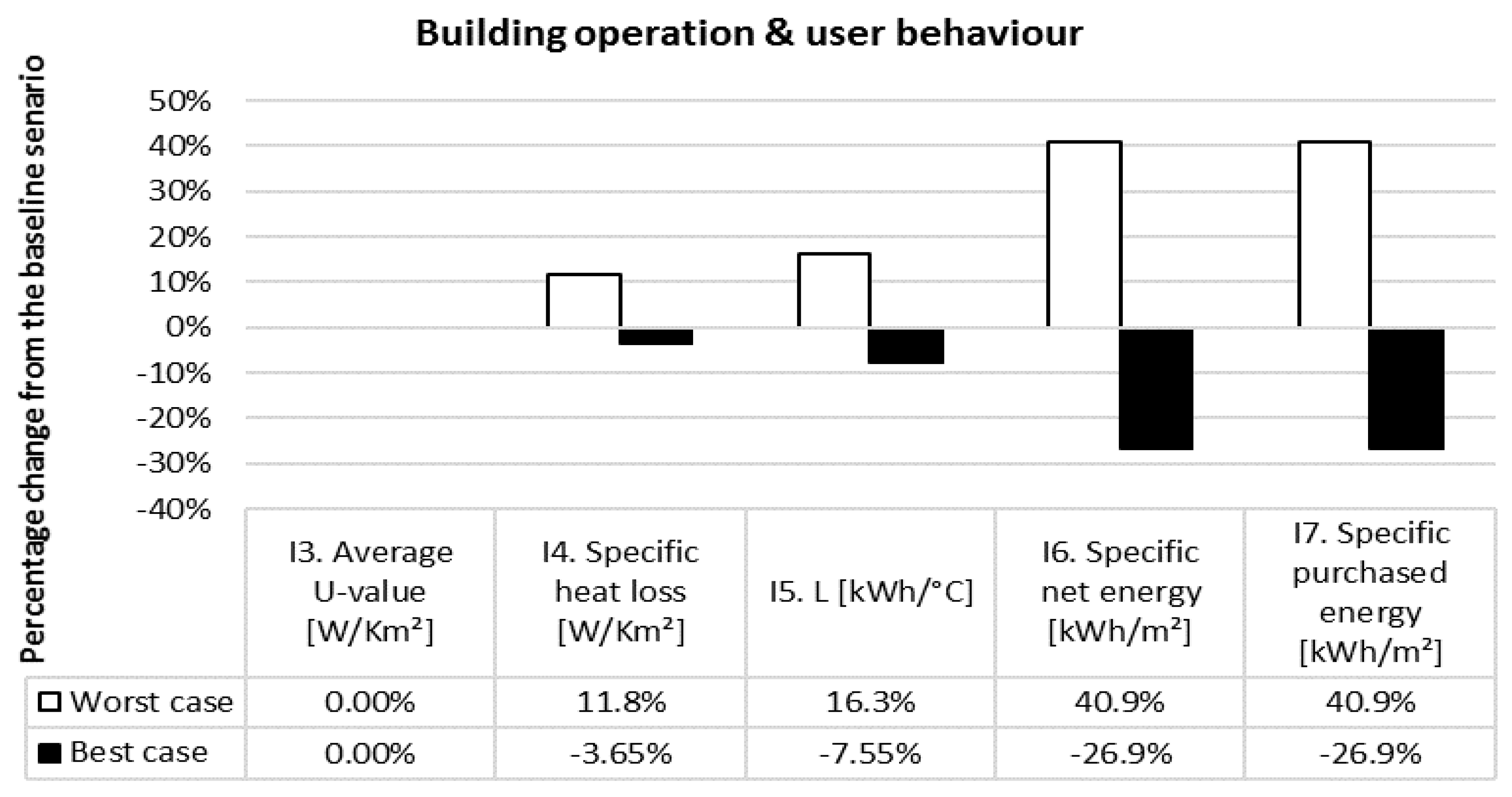

3.2. Results from the Case Study

4. Discussion

Future Research

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Pérez-Lombard, L.; Ortiz, J.; Pout, C. A review on buildings energy consumption information. Energy Build. 2008, 40, 394–398.

2. Wang, N.; Chang, Y.-C.; Dauber, V. Carbon print studies for the energy conservation regulations of the UK and China. Energy Build. 2010, 42, 695–698.

3. Annunziata, E.; Frey, M.; Rizzi, F. Towards nearly zero-energy buildings: The state-of-art of national regulations in Europe. Energy 2013, 57, 125–133.

4. Lee, W.L.; Chen, H. Benchmarking Hong Kong and China energy codes for residential buildings. Energy Build. 2008, 40, 1628–1636.

5. Melo, A.; Sorgato, M.; Lamberts, R. Building energy performance assessment: Comparison between ASHRAE standard 90.1 and Brazilian regulation. Energy Build. 2014, 70, 372–383.

6. Iwaro, J.; Mwasha, A. A review of building energy regulation and policy for energy conservation in developing countries. Energy Policy 2010, 38, 7744–7755.

7. Garcia Casals, X. Analysis of building energy regulation and certification in Europe: Their role, limitations and differences. Energy Build. 2006, 38, 381–392.

8. Application of the Local Criteria/Standards and Their Differences for Very Low-Energy and Low Energy Houses in the Participating Countries. Available online: http://www.enerhaus.ch/files/Dateien/NorthPass_D2_Application_of_local_criteria.pdf (accessed on 18 October 2017).

9. Thuller, K. Low-Energy Buildings in Europe—Standards, Criteria and Consequences; Lund University: Lund, Sweden, 2010.

10. Allard, I.; Olofsson, T.; Hassan, O. Methods for energy analysis of residential buildings in Nordic countries. Renew. Sustainable Energy Rev. 2013, 22, 306–318.

11. Asdrubali, F.; Bonaut, M.; Battisti, M.; Venegas, M. Comparative study of energy regulations for buildings in Italy and Spain. Energy Build. 2008, 40, 1805–1815.

12. Fayaz, R.; Kari, B.M. Comparison of energy conservation building codes of Iran, Turkey, Germany, China, ISO 9164 and EN 832. Appl. Energy 2009, 86, 1949–1955.

13. Kunz, J.; Maile, T.; Bazjanac, V. Summary of the Energy Analysis of the First Year of the Stanford Jerry Yang & Akiko Yamazaki Environment & Energy (Y2E2) Building; Stanford University: Stanford, CA, USA, 2009.

14. Wall, M. Energy-efficient terrace houses in Sweden—Simulations and measurements. Energy Build. 2006, 38, 627–634.

15. Piette, M.A.; Kinney, S.K.; Haves, P. Analysis of an information monitoring and diagnostic system to improve building operations. Energy Build. 2001, 33, 783–791.

16. Scofield, J.H. Early Performance of a Green Academic Building. ASHRAE Trans. Symp. 2002, 108, 1214.

17. Maile, T.; Bazjanac, V.; Fischer, M. A method to compare simulated and measured data to assess building energy performance. Build. Environ. 2012, 56, 241–251.

18. Hens, H.; Parijs, W.; Deurinck, M. Energy consumption for heating and rebound effects. Energy Build. 2010, 42, 105–110.

19. Yoshino, H.; Hong, T.; Nord, N. IEA EBC annex 53: Total energy use in buildings—Analysis and evaluation methods. Energy Build. 2017, 152, 124–136.

20. Burman, E.; Mumovic, D.; Kimpian, J. Towards measurement and verification of energy performance under the framework of the European directive for energy performance of buildings. Energy 2014, 77, 153–163.

21. Yousefi, F.; Gholipour, Y.; Yan, W. A study of the impact of occupant behaviors on energy performance of building envelopes using occupants’ data. Energy Build. 2017, 148, 182–198.

22. The Swedish National Board of Housing, Building, and Planning. BBR 22—Boverkets Föreskrifter om Ändring i Verkets Byggregler (2011:6)—Föreskrifter och Allmänna Råd; The Swedish National Board of Housing, Building, and Planning: Karlskrona, Sweden, 2015.

23. The Swedish Program for Standardizing and Verifying Energy Performance in Buildings (SVEBY). Brukarindata bostäder; The Swedish Program for Standardizing and Verifying Energy Performance in Buildings (SVEBY): Stockholm, Sweden, 2012.

24. Jensen, L. Analys av Osäkerhet i Beräkning av Energianvändning i hus och Utveckling av Säkerhetsfaktorer; Lunds Universitet: Lund, Sweden, 2010.

25. The Swedish National Board of Housing, Building, and Planning. Konsekvensutredning BEN 1—Boverkets Föreskrifter och Allmänna Råd (2016:12) om Fastställande av Byggnadens Energianvändning vid Normalt Brukande och ett Normalår. Available online: https://www.boverket.se/contentassets/f3bf0ac62dc148438a007c987aeaea21/konsekvensutredning-ben-1.pdf (accessed on 13 October 2017).

26. Byggindustrin. Förenkla Byggreglernas Energikrav. Available online: http://byggindustrin.se/artikel/debatt/forenkla-byggreglernas-energikrav-25138 (accessed on 17 October 2017).

27. Svenska Dagbladet. Boverket Tänker fel om Bostäder och Energi. Available online: https://www.svd.se/boverket-tanker-fel-om-bostader-och-energi (accessed on 17 October 2017).

28. Okoli, C.; Pawlowski, S.D. The Delphi method as a research tool: An example, design considerations and applications. Inf. Manag. 2004, 42, 15–29.

29. Kauko, K.; Palmroos, P. The Delphi method in forecasting financial markets—An experimental study. Int. J. Forecast. 2014, 30, 313–327.

30. Förster, B.; von der Gracht, H. Assessing Delphi panel composition for strategic foresight—A comparison of panels based on company-internal and external participants. Technol. Forecast. Soc. Chang. 2014, 84, 214–229.

31. Linstone, H.; Turoff, M. The Delphi Method: Techniques and Applications; Addison-Wesley: Boston, MA, USA, 1975.

32. Barnes, S.J.; Mattsson, J. Understanding current and future issues in collaborative consumption: A four-stage Delphi study. Technol. Forecast. Soc. Chang. 2016, 104, 200–211.

33. Osborne, J.; Collins, S.; Ratcliffe, M.; Millar, R.; Duschl, R. What ideas-about-science should be taught in school science? A Delphi study of the Expert community. J. Res. Sci. Teach. 2013, 40, 692–720.

34. Frewer, L.; Fisher, A.; Wentholt, M.; Marvin, H.; Ooms, B. The use of Delphi methodology in agrifood policy development: Some lessons learned. Technol. Forecast. Soc. Chang. 2011, 78, 1514–1525.

35. Norman, D.; Olaf, H. An experimental application of the Delphi method to the use of experts. Manag. Sci. 1963, 9, 458–467.

36. Day, J.; Bobeva, M. A Generic Toolkit for the Successful Management of Delphi Studies. Electron. J. Bus. Res. Methods 2005, 3, 103–116.

37. Hansson, F.; Sinead, K. Enhancing rigour in the Delphi technique research. Technol. Forecast. Soc. Chang. 2011, 78, 1695–1704.

38. Van Dijk, J. Delphi Method, Developing an Instrument to Control Technological Change for Employees. Qual. Quant. 1989, 23, 189–203.

39. Linstone, H.; Turoff, M. Delphi: A brief look backward and forward. Technol. Forecast. Soc. Chang. 2011, 78, 1712–1719.

40. Johnson, J. A Ten Year Forecast in the Electronics Industry. Ind. Market. Manag. 1976, 5, 45–55.

41. Hsu, C.-C.; Sandford, B.A. The Delphi Technique: Making Sense of Consensus. Pract. Assess. Res. Eval. 2007, 12, 1–8.

42. Landeta, J. Current validity of the Delphi method in social sciences. Technol. Forecast. Soc. Chang. 2006, 73, 467–482.

43. Keeney, S. The Delphi technique. In The Research Process in Nursing; Blackwell Publishing: London, UK, 2009; pp. 227–236.

44. Schmidt, R. Managing Delphi surveys using nonparametric statistical techniques. Decis. Sci. 1997, 28, 3.

45. Sveriges Centrum för Nollenergihus. Kravspecifikation för Nollenergihus, Passivhus och Minienergihus—Bostäder (FEBY 12); Sveriges Centrum för Nollenergihus: Stockholm, Sweden, 2012.

46. Capozzoli, A.; Mechri, H.E.; Corrado, V. Impacts of architectural design choices on building energy performance applications of uncertainty and sensitivity techniques. In Proceedings of the Eleventh International IBPSA Conference, Glasgow, UK, 27–30 July 2009.

47. Zhao, M.; Kunzel, H.M.; Antretter, F. Parameters influencing the energy performance of residential buildings in different Chinese climate zones. Energy Build. 2015, 96, 64–75.

48. Ioannou, A.; Itard, L. Energy performance and comfort in residential buildings: Sensitivity for building parameters and occupancy. Energy Build. 2015, 92, 216–233.

49. El Fouih, Y.; Stabat, P.; Rivière, P.; Hoang, P.; Archambault, V. Adequacy of air-to-air heat recovery ventilation system applied in low energy buildings. Energy Build. 2012, 54, 29–39.

50. Merzkirch, A.; Maas, S.; Scholzen, F.; Waldmann, D. Energy efficiency of centralized and decentralized ventilation units in residential buildings—Specific fan power, heat recovery efficiency, shortcuts and volume flow unbalances. Energy Build. 2016, 116, 376–383.

51. Berg, F.; Flyen, A.-C.; Lund Godbolt, Å.; Brorström, T. User-driven energy efficiency in historic buildings: A review. J. Cult. Heritage 2017.

52. Torcellini, P.; Pless, S.; Deru, M.; Crawley, D. Zero Energy Buildings: A Critical Look at the Definition. In ACEEE Summer Study; National Renewable Energy Laboratory and Department of Energy: Pacific Grove, CA, USA, 2006.

53. EQUA Simulation AB. IDA Indoor Climate and Energy. Available online: http://www.equa.se/en/ida-ice (accessed on 14 June 2015).

54. EQUA Simulation AB. Validation of IDA Indoor Climate and Energy 4.0 with Respect to CEN Standard EN 15255-2007 and 15265-2007; EQUA Simulation AB: Solna, Sweden, 2010.

55. EQUA Simulation AB. Validation of IDA Indoor Climate and Energy 4.0 Build 4 with Respect to ANSI/ASHRAE Standard 140-2004; EQUA Simulation AB: Solna, Sweden, 2010.

56. Bergsten, B. Energiberäkningsprogram för Byggnader—En Jämförelse Utifrån Funktions- och Användaraspekter; Effektiv: Borås, Sweden, 2001.

57. Sahlin, P.; Bring, A. IDA Solver—A tool for building and energy systems simulation. In Proceedings of the Building Simulation Conference, Nice, France, 20–22 August 1991.

58. International Organization for Standardization (ISO). ISO 13789:2007 Thermal Performance of Buildings; International Organization for Standardization (ISO): Geneva, Switzerland, 2007.

59. Sjögren, J.-U.; Andersson, S.; Olofsson, T. An approach to evaluate the energy performance of buildings based on incomplete monthly data. Energy Build. 2007, 39, 945–953.

60. Sjögren, J.-U.; Andersson, S.; Olofsson, T. Sensitivity of the total heat loss coefficient determined by the energy signature approach to different time periods and gained energy. Energy Build. 2009, 41, 801–808.

61. Belussi, L.; Danca, L. Method for the prediction of malfunctions of buildings through real energy consumption analysis: Holistic and multidisciplinary approach of Energy Signature. Energy Build. 2012, 55, 715–720.

62. Ghiaus, C. Experimental estimation of building energy performance by robust regression. Energy Build. 2006, 38, 582–587.

63. Danov, S.; Carbonell, J.; Cipriano, J.; Marti-Herrero, J. Approaches to evaluate building energy performance from daily consumption data considering dynamic and solar gain effects. Energy Build. 2013, 57, 110–118.

64. Westergren, K.-E.; Högberg, H.; Norlén, U. Monitoring energy consumption in single-family houses. Energy Build. 1999, 29, 247–257.

65. Wei, G.; Liu, M.; Claridge, E. Signatures of heating and cooling energy consumption for typical AHUs. In Proceedings of the Eleventh Symposium on Improving Building Systems in Hot and Humid Climates, Fort Worth, TX, USA, 1–2 June 1998.

66. Hammarsten, S. A critical appraisal of energy-signature models. Appl. Energy 1987, 26, 97–110.

67. Lidelöw, S.; Munck, K.F. Byggentreprenörens Energisignatur; NCC Construction Sverige och Luleå Tekniska Universitet: Luleå and Malmö, Sweden, 2015.

68. Vesterberg, J.; Andersson, S.; Olofsson, T. A single-variate building energy signature approach for periods with substantial solar gain. Energy Build. 2016, 122, 185–191.

69. Meteonorm. METEOTEST. Available online: http://meteonorm.com/ (accessed on 15 February 2017).

70. EQUA Simulation AB. User Manual—IDA Indoor Climate and Energy—Version 4.5; EQUA Simulation AB: Solna, Sweden, 2013.

71. Schild, P.; Mysen, M. Technical Note AIVC 65—Recommendations on Specific Fan Power and Fan System Efficiency; Air Infiltration and Ventilation Centre: Sint-Stevens-Woluwe, Belgium, 2009.

72. LIP kansliet. Tekniktävling—Teknikupphandling av Energiberäkningsmodell för Energieffektivt Sunda Flerbostadshus (MEBY); LIP kansliet: Stockholm, Sweden, 2002.

73. Energimyndigheten. Available online: http://www.energimyndigheten.se/tester/tester-a-o/pelletspannor/ (accessed on 15 February 2017).

74. Che, D.; Liu, Y.; Gao, C. Evaluation of retrofitting a conventional natural gas fired boiler into a condensing boiler. Energy Convers. Manag. 2004, 45, 3251–3266.

| External Conditions | Building Envelope | Technical Installations | Building Operation | User Behavior |
|---|---|---|---|---|
| P1. Climate [24] | P2. Form factor (envelope area/volume) [46] | P6. Heating system a | P9. Indoor temperature [48] | P12. Energy use for household appliances [21] |
| | P3. Window to floor area ratio [46] | P7. Ventilation heat recovery efficiency [49] | P10. Ventilation rate [21,48] | P13. Energy use for domestic hot water [51] |
| | P4. Average U-value [47,48] | P8. Specific fan power [49] | P11. Supply to exhaust air rate ratio [50] | P14. Number of occupants [21] |
| | P5. Envelope air leakage at 50 Pa [47] | | | P15. Airing (by opening windows) [51] |

| Parameter | Value | Source/Comment |

|---|---|---|

| External conditions | ||

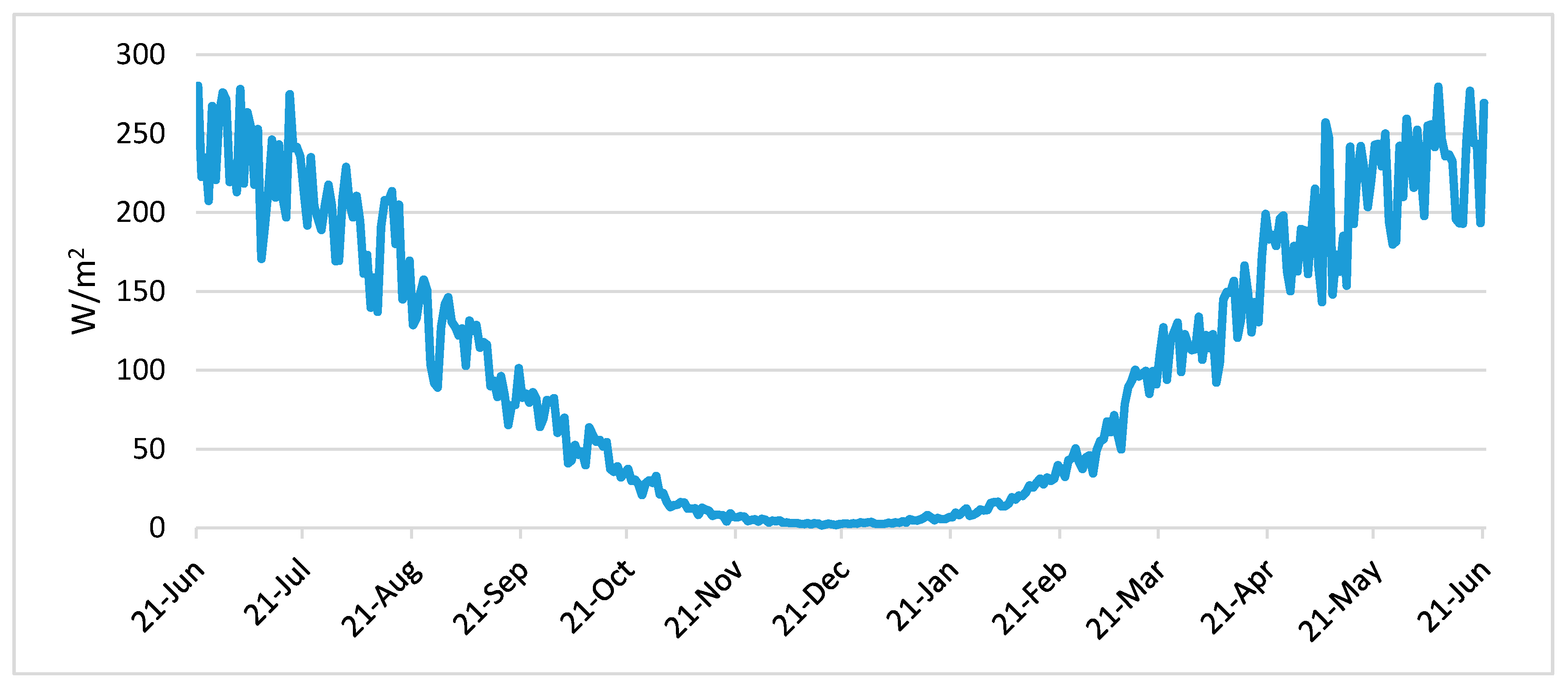

| Climate data | Umeå1961–1990 | Average values 1961–1990 [69]. Yearly average temperature 4.00 °C. |

| Wind profile | Suburban | [70] |

| Building envelope | ||

| Envelope area | 1847 m2 | From blueprints. |

| Heated floor area | 1495 m2 | Heated above 10 °C. From blueprints. |

| Volume | 3952 m3 | From blueprints. |

| Form factor | 0.47 | Envelope area to volume ratio. |

| Window to floor area ratio | 16.3% | From blueprints. |

| Average U-value | 0.307 W/m2K | External walls 0.127 W/m2K. Roof 0.0810 W/m2K. Foundation 0.238 W/m2K. Windows 1.20 W/m2K. From blueprints. Thermal bridges assumed to 72.4 W/K, representing typical values [70]. |

| Envelope air leakage at 50 Pa | 0.6 L/smenv2 | Highest allowed for buildings with less than 100 m2 floor area, with a window area smaller than 20% of the heated floor area and with no space cooling according to BBR [22]. |

| Technical installations | ||

| Heating system | District heating | For space heating and domestic hot water. From tender documents. Assumed to have 100% efficiency, since the heat not transferred to the buildings heating system returns to the district heating system. Heat losses from the internal heating system assumed to 4% of the delivered space heating energy, 50% contributing to space heating, representing typical values [70]. |

| Ventilation system | A supply- and exhaust system with heat recovery. A rotary heat exchanger with 80% temperature efficiency. | From tender documents. Supply air duct heat loss assumed to be 1.16 W/m2 at a 7 °C temperature difference between duct and zone, 50% contributing to space heating, representing typical values [70]. |

| Specific fan power | 2 kW/(m3/s) | Requirement for heat recovery ventilation systems (HRV) in BBR [22] Ventilation fan efficiency assumed to 60%, representing typical values [71]. |

| Elevator | Gearless traction elevator | From tender documents. Using 50 kWh per apartment and year [72]. |

| Lighting in common areas | 11 fluorescent lamps, each emitting 25 W 16 h/day | Assumption. |

| Building operation | ||

| Indoor temperature | 22 °C | [22] Used as supply air temperature set-point for the ventilation heat exchanger, to allow for maximum heat recovery. |

| Ventilation rate | 0.35 L/sm2 | [22] |

| Supply to exhaust air rate ratio | 1 | Supply air rate = exhaust air rate. Assumption. |

| User behavior | ||

| Energy for household appliances | 3.42 W/m2 | 70% becomes internal heat gains [23]. |

| Energy for domestic hot water | 25 kWh/m2 year | 20% becomes internal heat gains [23]. |

| Number of occupants | 40 | Emitting 80 W each 14 h/day [23]. |

| Airing | 0.5 L/smenv2 | By opening windows [23]. |
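Some of the reference-scenario values can be cross-checked with simple arithmetic. The sketch below is a minimal illustration and not part of the original simulation: it recomputes the form factor from the tabulated envelope area and volume, and derives the window area and the yearly lighting energy. The helper names are hypothetical, and treating the 25 W per lamp as electrical power drawn over a 365-day year is an assumption.

```python
# Values taken from the reference-scenario table.
ENVELOPE_AREA_M2 = 1847.0
HEATED_FLOOR_AREA_M2 = 1495.0
VOLUME_M3 = 3952.0
WINDOW_TO_FLOOR_RATIO = 0.163


def form_factor(envelope_area_m2: float, volume_m3: float) -> float:
    """Envelope area to volume ratio."""
    return envelope_area_m2 / volume_m3


def window_area_m2(ratio: float, heated_floor_area_m2: float) -> float:
    """Window area implied by the window to floor area ratio."""
    return ratio * heated_floor_area_m2


def annual_energy_kwh(power_w: float, hours_per_day: float,
                      days_per_year: int = 365) -> float:
    """Yearly energy of a constant load running a fixed number of hours per day."""
    return power_w * hours_per_day * days_per_year / 1000.0


print(round(form_factor(ENVELOPE_AREA_M2, VOLUME_M3), 2))
print(round(window_area_m2(WINDOW_TO_FLOOR_RATIO, HEATED_FLOOR_AREA_M2), 1))
# Assumption: 11 lamps of 25 W each, 16 h/day, every day of the year.
print(annual_energy_kwh(11 * 25.0, 16.0))
```

The recomputed form factor rounds to the 0.47 given in the table.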

| Parameters | Best-Case Scenario | Worst-Case Scenario |
|---|---|---|
| Building envelope | | |
| P2. Form factor (no. of floors) | 0.39 (8 floors, 47 apartments, 82 occupants, 2807 m2 heated floor area, and an average U-value of 0.35 W/m2K) | 0.52 (3 floors, 17 apartments, 30 occupants, 1167 m2 heated floor area, and an average U-value of 0.29 W/m2K) |
| P3. Window to floor area ratio (%) | 10 (the recommended minimum in BBR [22]) | 20 |
| P4. Average U-value (W/m2K) | 0.20 | 0.40 (maximum allowed in BBR [22]) |
| P5. Envelope air leakage at 50 Pa a (L/(s·m2 envelope area)) | 0.3 (required in the Swedish passive house criteria [44]) | 0.9 |
| Technical installations | | |
| P6. Heating system b | HP (with a COP factor of 5 for heating and 3 for domestic hot water) | PB (with an efficiency of 80%, the average of 11 pellet boilers used in Sweden [73]) |
| P7. Ventilation heat recovery efficiency a (%) | 90 | 70 (recommended minimum in BBR [22]) |
| P8. Specific fan power a (kW/(m3/s)) | 1.5 | 2.5 |
| Building operation | | |
| P9. Indoor temperature a (°C) | 21 | 23 |
| P10. Ventilation rate a (L/(s·m2)) | 0.25 (representing a lower ventilation need, e.g., due to demand control) | 0.45 (representing a higher ventilation need, e.g., due to air contaminants) |
| P11. Supply to exhaust air rate ratio a,c | - | 1.05 (supply air rate 105% of exhaust air rate) |
| User behavior | | |
| P12. Energy use for household appliances a,d (W/m2) | 4.4 | 2.4 |
| P13. Energy use for domestic hot water a (kWh/(m2·year)) | 20 | 30 |
| P14. Number of occupants a,d | 60 | 20 |
| P15. Airing a (L/(s·m2 envelope area)) | 0.25 | 0.75 |
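The heating-system variation (P6) changes the purchased energy without changing the net energy need. The sketch below is a rough illustration of that relationship, not the simulation model used in the study. It assumes that purchased energy equals the net need divided by a single conversion factor (a COP for the heat pump, an efficiency for the pellet boiler) and ignores distribution losses. The function name is hypothetical; the 25 kWh/m2 domestic hot water need and the conversion factors are taken from the scenario tables.

```python
def purchased_energy(net_need_kwh_per_m2: float, conversion_factor: float) -> float:
    """Purchased energy for a given net need.

    conversion_factor is the COP (heat pump) or the efficiency (boiler);
    1.0 corresponds to the district heating assumption of 100% efficiency.
    """
    if conversion_factor <= 0:
        raise ValueError("conversion factor must be positive")
    return net_need_kwh_per_m2 / conversion_factor


# Net need for domestic hot water in the reference scenario (kWh/m2 per year).
DHW_NET_NEED = 25.0

# Conversion factors for domestic hot water from the scenario tables.
systems = {
    "district heating (reference)": 1.0,
    "heat pump, COP 3 for hot water": 3.0,
    "pellet boiler, 80% efficiency": 0.8,
}

for name, factor in systems.items():
    print(f"{name}: {purchased_energy(DHW_NET_NEED, factor):.2f} kWh/m2")
```

Under these simplifying assumptions the same net need translates into very different purchased energy, which is the effect respondents point to when they say that purchased energy says little about the building envelope.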

| Category of Issues | Issues | Number of Respondents: 1st Rank within Each Category | Number of Respondents: 1st Rank Overall |
|---|---|---|---|
| Requirements on specific purchased energy | Tough requirements on purchased energy and possible fines are risky, since it is not possible to control all factors that influence a building’s energy performance. | 8 | 5 |
| | To ensure compliance with the energy efficiency requirements, a substantial safety margin is required due to the various factors that may cause uncertainty. | 4 | 1 |
| Uncertainties and responsibility | Purchased energy is significantly affected by the users’ behavior. | 4 | 6 |
| | Fixing the liability/responsibility is ambiguous in situations when the energy requirement is not met. | 3 | 4 |
| | Operating times, ventilation, and indoor temperatures as well as envelope air leakage and airing are factors that have a major impact on the purchased energy. | 3 | 5 |
| Verification method | With improper follow-up, competition in procurement can be distorted when accounting for the promised performance, and the one who is a stickler for the rules may find it difficult to win projects against unscrupulous competitors. | 8 | 6 |
| | It is problematic to do the follow-up during the first years when the building is not dried out properly and control of the installations has not been optimized. | 5 | 3 |
| Parameters influencing the purchased energy | The heating source and heating system efficiency affect the amount of purchased energy (heat pumps and solar panels are favored). | 6 | 5 |
| | Hot water usage is increasing with more people/m2, despite better installations. | 4 | 3 |

| Parameters | Reference | Variations | I3. Average U-Value (W/m2K) | I4. SHLWDT (W/m2K) | I5. L (kWh/°C) | I6. Specific Net Energy (kWh/m2) | I7. Specific Purchased Energy (kWh/m2) |
|---|---|---|---|---|---|---|---|
| External conditions | | | | | | | |
| P1. Climate data | Umeå 1961–1990 | Umeå 2000–2009 | ±0% | ±0% | −0.94% | −1.6% | −1.6% |
| Building envelope | | | | | | | |
| P2. Form factor (no. of floors) | 0.47 | 0.39 | +13% | −16% | +84% | −4.9% | −4.85% |
| | | 0.52 | −6.6% | +1.3% | −21% | +3.1% | +3.1% |
| P3. Window to floor area ratio (%) | 16.3 | 10 | −19% | −9.2% | −16% | −10% | −10% |
| | | 20 | +11% | +8.0% | +9.9% | +5.7% | +5.7% |
| P4. Average U-value (W/m2K) | 0.31 | 0.2 | −34% | −25% | −25% | −22% | −22% |
| | | 0.4 | +32% | +22% | +25% | +24% | +24% |
| P5. Envelope air leakage at 50 Pa (L/(s·m2 envelope area)) | 0.6 | 0.3 | ±0% | −5.9% | −0.75% | −1.8% | −1.8% |
| | | 0.9 | ±0% | +6.0% | +0.71% | +1.8% | +1.8% |
| Technical installations | | | | | | | |
| P6. Heating system | DH | HP | ±0% | ±0% | −80% | ±0% | −67% |
| | | DH + S | ±0% | ±0% | +0.64% | ±0% | −15% |
| | | NGB | ±0% | ±0% | +9.9% | ±0% | +6.5% |
| | | PB | ±0% | ±0% | +25% | ±0% | +22% |
| P7. Ventilation heat recovery efficiency (%) | 80 | 90 | ±0% | −8.0% | −8.8% | −7.4% | −7.4% |
| | | 70 | ±0% | +8.0% | +9.1% | +7.6% | +7.6% |
| P8. Specific fan power (kW/(m3/s)) | 2 | 1.5 | ±0% | ±0% | −0.12% | −2.4% | −2.4% |
| | | 2.5 | ±0% | ±0% | +0.084% | +2.4% | +2.4% |
| Building operation | | | | | | | |
| P9. Indoor temperature (°C) | 22 | 21 | ±0% | −2.4% | ±0% | −4.7% | −4.7% |
| | | 23 | ±0% | +2.4% | ±0% | +4.9% | +4.9% |
| P10. Ventilation rate (L/(s·m2)) | 0.35 | 0.25 | ±0% | −4.6% | −6.4% | −7.3% | −7.3% |
| | | 0.45 | ±0% | +4.6% | +6.5% | +7.3% | +7.23% |
| P11. Supply to exhaust air rate ratio | 1 | 0.95 | ±0% | −5.3% | −0.42% | −0.49% | −0.49% |
| | | 1.05 | ±0% | +1.9% | +5.2% | +3.4% | +3.4% |
| User behavior | | | | | | | |
| P12. Energy for household appliances (W/m2) | 3.42 | 4.4 | ±0% | ±0% | ±0% | −5.7% | −5.7% |
| | | 2.4 | ±0% | ±0% | ±0% | +6.0% | +6.0% |
| P13. Energy for domestic hot water (kWh/m2) | 25 | 20 | ±0% | ±0% | ±0% | −8.1% | −8.1% |
| | | 30 | ±0% | ±0% | ±0% | +7.7% | +7.7% |
| P14. Number of occupants | 40 | 60 | ±0% | ±0% | −0.19% | −1.1% | −1.1% |
| | | 20 | ±0% | ±0% | +0.30% | +5.4% | +5.4% |
| P15. Airing (L/(s·m2)) | 0.5 | 0.25 | ±0% | ±0% | −0.60% | −1.5% | −1.5% |
| | | 0.75 | ±0% | ±0% | +0.60% | +1.5% | +1.5% |
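One way to read the parameter-variation results is to ask, for each indicator, how large the biggest change is within a group of parameters. The sketch below is a minimal post-processing example, not the authors' procedure. It copies the percentage changes for the building operation (P9 to P11) and user behavior (P12 to P15) rows from the table above and reports the largest absolute change per indicator. The data layout and function name are hypothetical.

```python
INDICATORS = ("I3", "I4", "I5", "I6", "I7")

# Percentage changes copied from the parameter-variation table.
# Each parameter maps to its variation rows, ordered as (I3, I4, I5, I6, I7).
VARIATIONS = {
    "P9": [(0, -2.4, 0, -4.7, -4.7), (0, 2.4, 0, 4.9, 4.9)],
    "P10": [(0, -4.6, -6.4, -7.3, -7.3), (0, 4.6, 6.5, 7.3, 7.23)],
    "P11": [(0, -5.3, -0.42, -0.49, -0.49), (0, 1.9, 5.2, 3.4, 3.4)],
    "P12": [(0, 0, 0, -5.7, -5.7), (0, 0, 0, 6.0, 6.0)],
    "P13": [(0, 0, 0, -8.1, -8.1), (0, 0, 0, 7.7, 7.7)],
    "P14": [(0, 0, -0.19, -1.1, -1.1), (0, 0, 0.30, 5.4, 5.4)],
    "P15": [(0, 0, -0.60, -1.5, -1.5), (0, 0, 0.60, 1.5, 1.5)],
}

GROUPS = {
    "building operation": ["P9", "P10", "P11"],
    "user behavior": ["P12", "P13", "P14", "P15"],
}


def max_abs_change(parameters):
    """Largest absolute percentage change per indicator over the given parameters."""
    return {
        indicator: max(abs(row[i]) for p in parameters for row in VARIATIONS[p])
        for i, indicator in enumerate(INDICATORS)
    }


for group, parameters in GROUPS.items():
    print(group, max_abs_change(parameters))
```

In this reading, I3 does not respond to any of the operation or user behavior variations, while I6 and I7 respond to all of them.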

| Studied Indicators | Preferred by the Building Practitioners | Less Dependent on Building Operation and User Behavior Compared to the Specific Purchased Energy (I7) | Less Dependent on the Technical Installations Compared to the Specific Purchased Energy (I7) |
|---|---|---|---|
| For calculation-based evaluations | | | |
| U-values for different building parts (I2) | X | X a | X b |
| Average U-value (I3) | X | X a,c | X b |
| SHLWDT (I4) | X | X c | X c |
| L (I5) | - | X c | X c,d |
| Specific net energy (I6) | - | - | X c |
| Specific purchased energy (I7) | - | - | - |
| For measurement-based evaluations | | | |
| Envelope air leakage (I1) | X | X a | X b |
| L (I5) | - | X c | X c,d |
| Specific net energy (I6) | - | - | X c |
| Specific purchased energy (I7) | - | - | - |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Allard, I.; Olofsson, T.; Nair, G. Energy Performance Indicators in the Swedish Building Procurement Process. Sustainability 2017, 9, 1877. https://doi.org/10.3390/su9101877
