Responsive and Minimalist App Based on Explainable AI to Assess Palliative Care Needs during Bedside Consultations on Older Patients

Abstract

1. Introduction

2. Materials and Methods

2.1. Ethics

2.2. Data

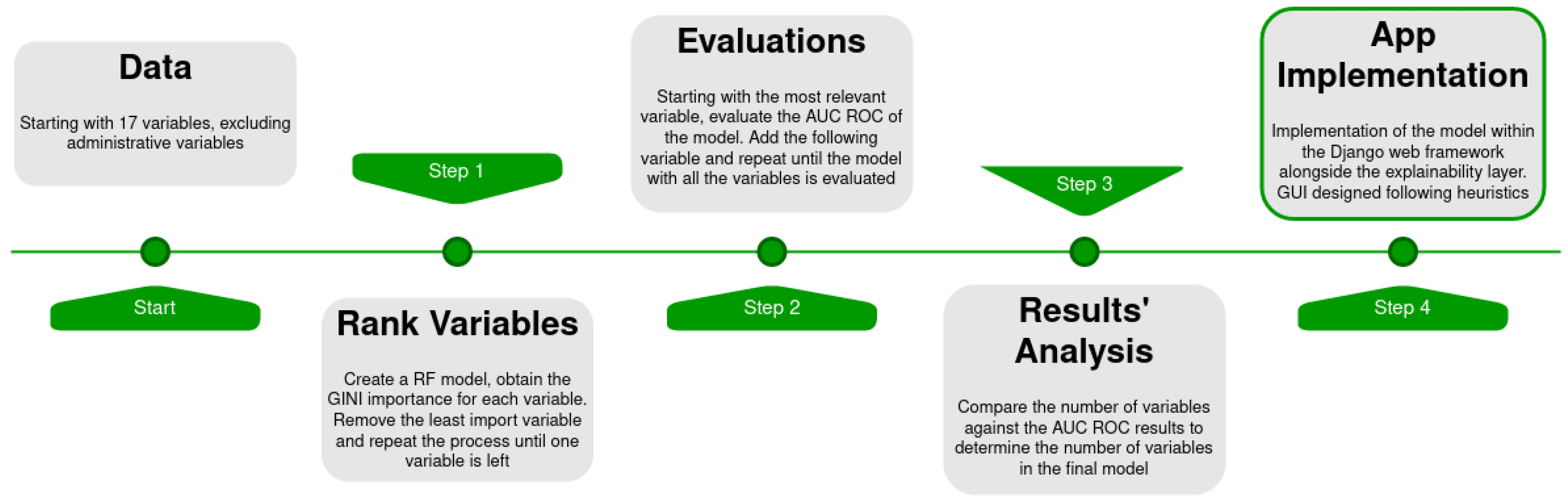

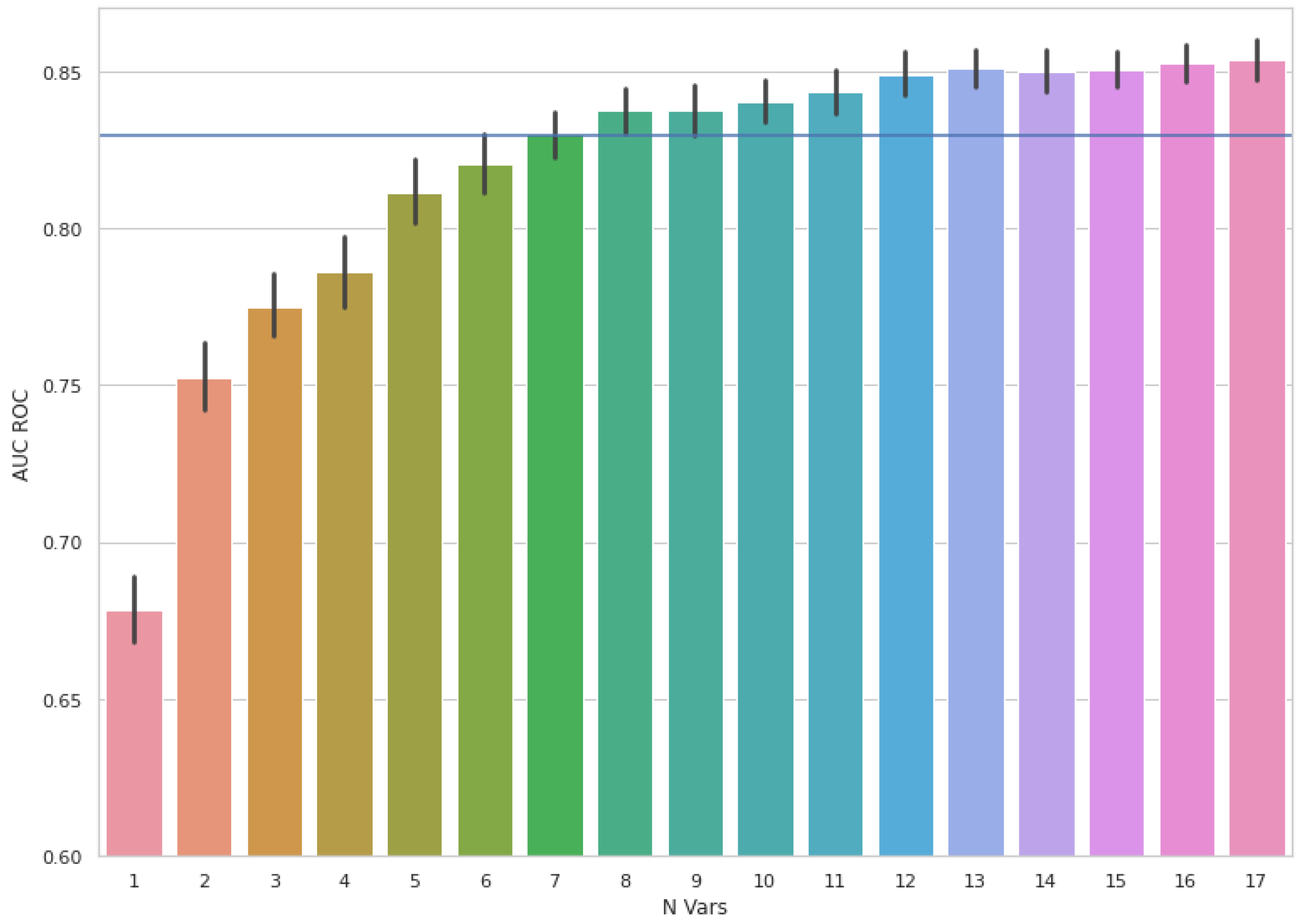

2.3. Feature Selection and Modelling

2.4. Explainability Layer

2.5. App Implementation and Software

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Variable | Rank | Mean ± SD | Missing values |
|---|---|---|---|
| Number of Active Groups (Meds) | 1 | 2.44 ± 3.68 | 0% |
| Charlson Index | 2 | 4.77 ± 3.34 | 0.2% |
| Barthel Index | 3 | 51.91 ± 39.75 | 73.4% |
| Metastatic Tumour | 4 | - | 0% |
| Age | 5 | 79.4 ± 8.36 | 0% |
| Urea (mg/dL) | 6 | 61.19 ± 43.36 | 37.1% |
| RDW SD (fL) | 7 | 49.66 ± 7.36 | 21.7% |
| Leukocyte (10³/μL) | 8 | 9.23 ± 6.85 | 21.6% |
| RDW CV (%) | 9 | 15.26 ± 2.43 | 21.7% |
| Sodium (mEq/L) | 10 | 139.89 ± 4.92 | 21.2% |
| C Reactive Protein (mg/L) | 11 | 55.1 ± 70.54 | 47.3% |
| Creatinine (mg/dL) | 12 | 1.23 ± 1 | 20.7% |
| Haematocrit (%) | 13 | 36.24 ± 5.8 | 21.6% |
| Glucose (mg/dL) | 14 | 122.5 ± 54.98 | 24.0% |
| Number of Previous ER | 15 | 6.04 ± 6.56 | 1.4% |
| Number of Previous Admissions | 16 | 7.62 ± 7.49 | 0% |
| Potassium (mEq/L) | 17 | 4.22 ± 0.61 | 22.5% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Blanes-Selva, V.; Doñate-Martínez, A.; Linklater, G.; Garcés-Ferrer, J.; García-Gómez, J.M. Responsive and Minimalist App Based on Explainable AI to Assess Palliative Care Needs during Bedside Consultations on Older Patients. Sustainability 2021, 13, 9844. https://doi.org/10.3390/su13179844
