Using Decision Tree to Predict Response Rates of Consumer Satisfaction, Attitude, and Loyalty Surveys

Abstract

1. Introduction

2. Materials and Methods

2.1. Sample of Studies

2.2. Coding of Main Variables

2.3. Methods

3. Results

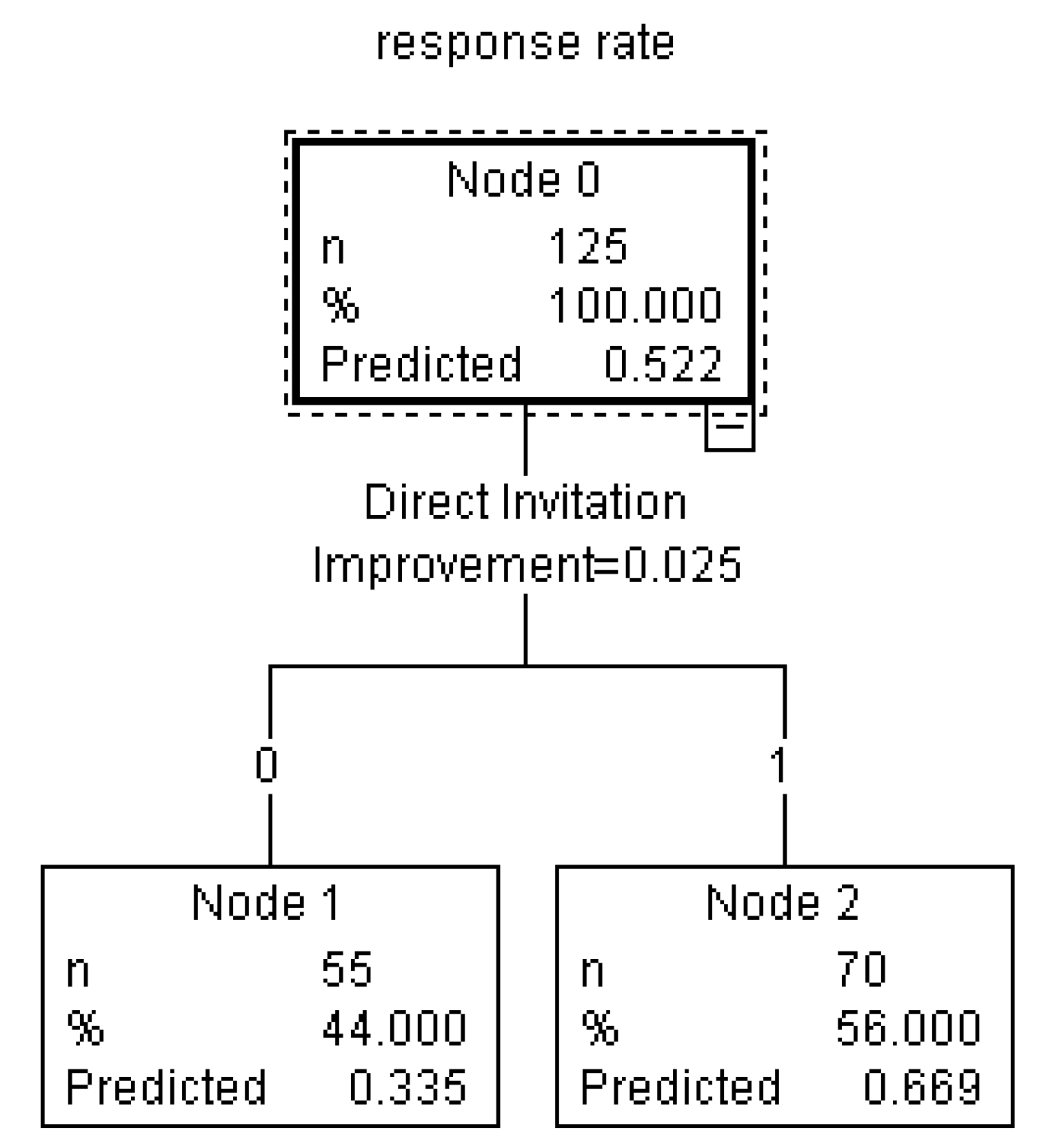

4. Predicting Response Rates

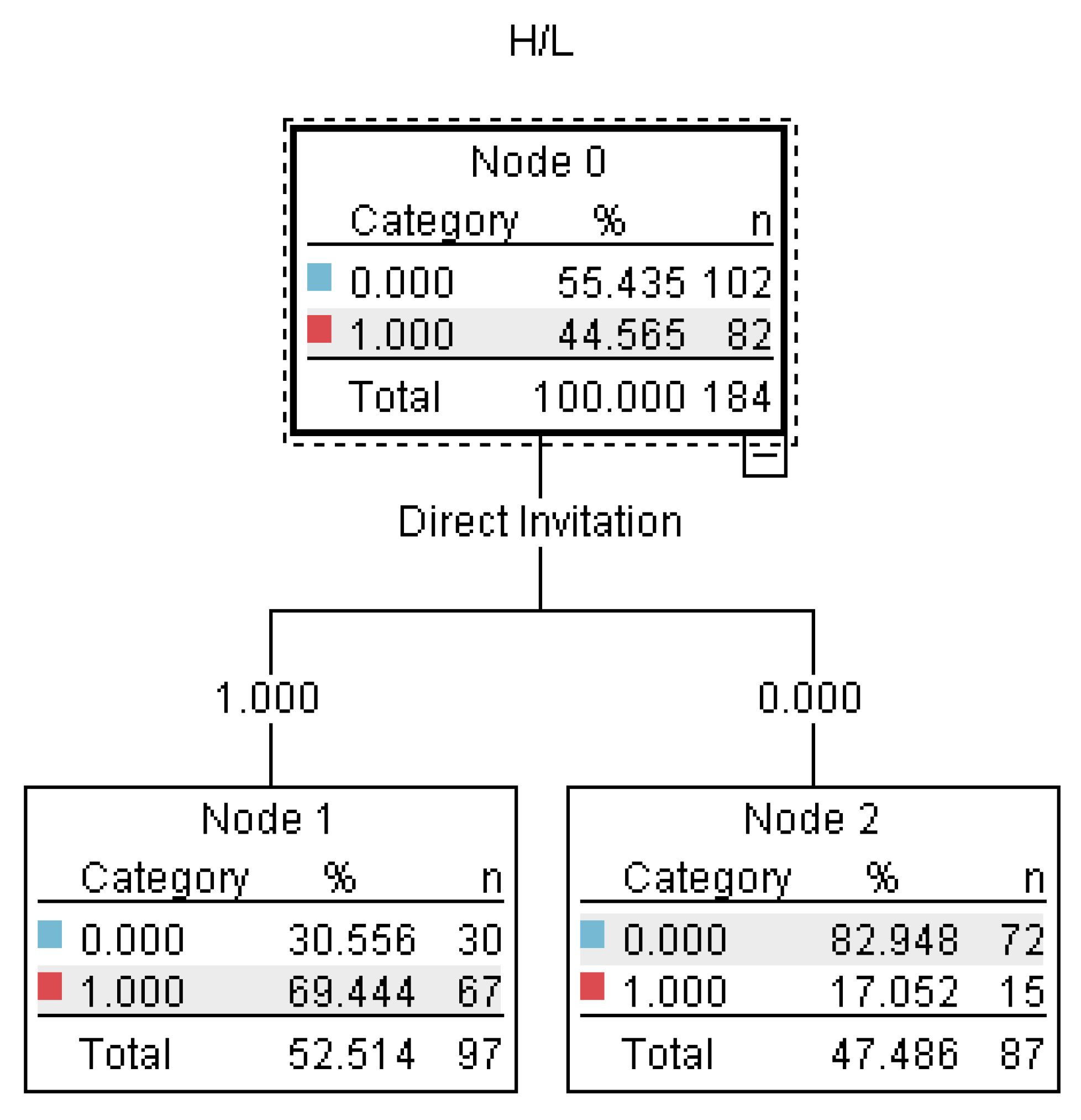

5. Predicting High and Low Response Rates

6. Discussion

Author Contributions

Funding

Conflicts of Interest

| Survey Attributes | Descriptive Statistics |

|---|---|

| Mode of data collection |

|

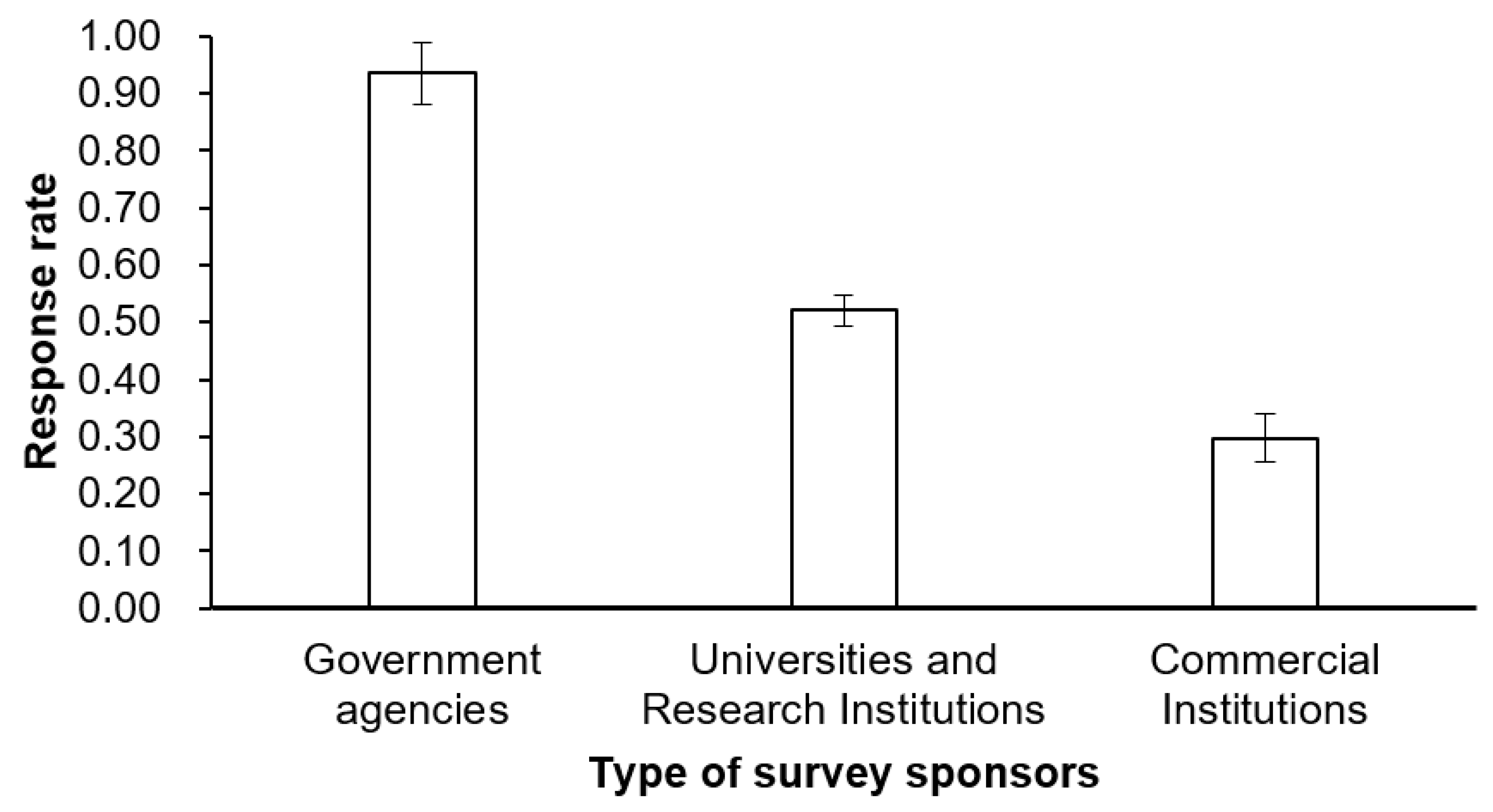

| Type of survey sponsors |

|

| Incentives | Amount of money ($) a |

| Questionnaire length | Number of items (M = 28.9, SD = 15, Range: 6 to 133) |

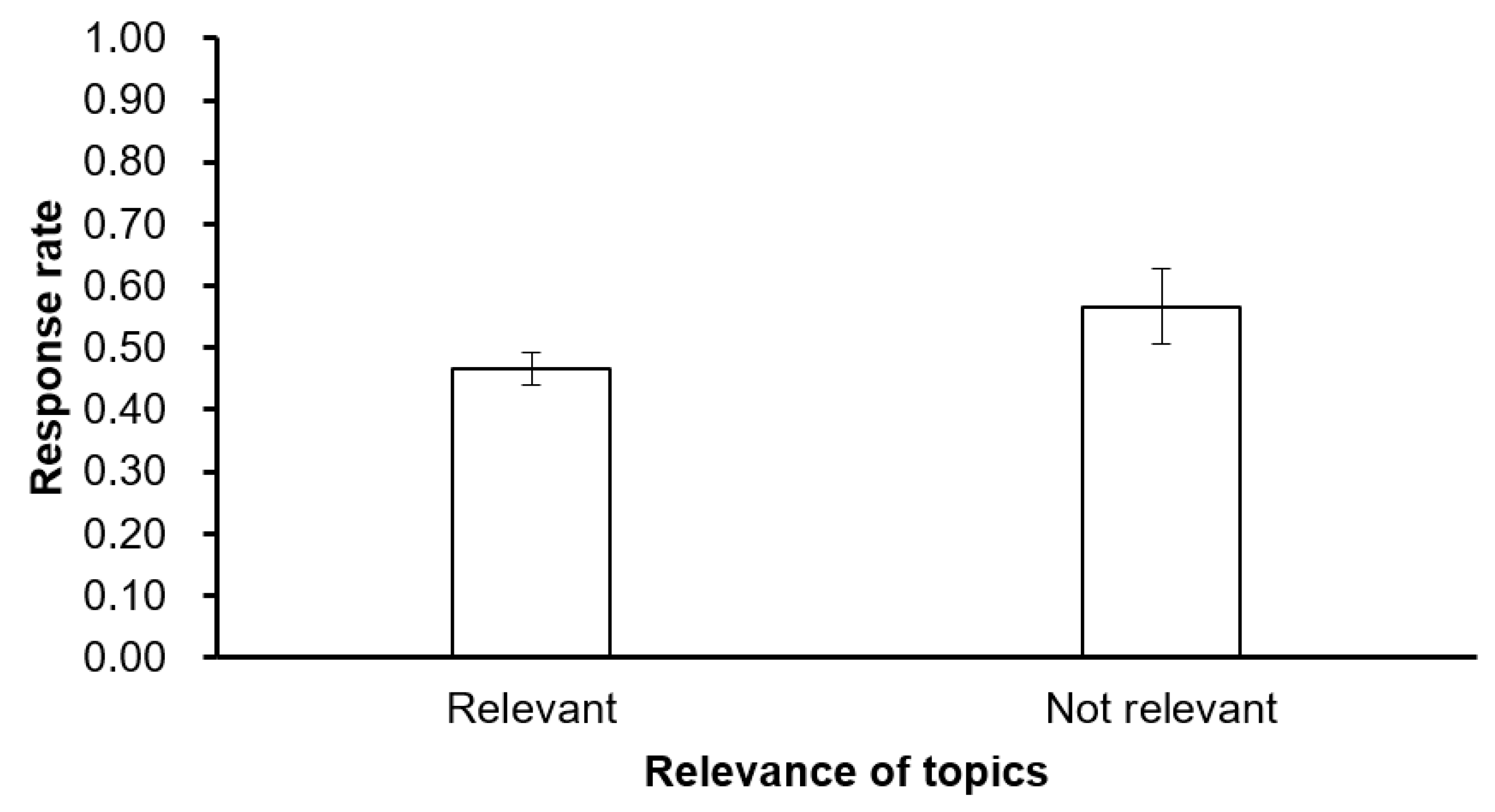

| Relevance of topics |

|

| Sensitivity of topics |

|

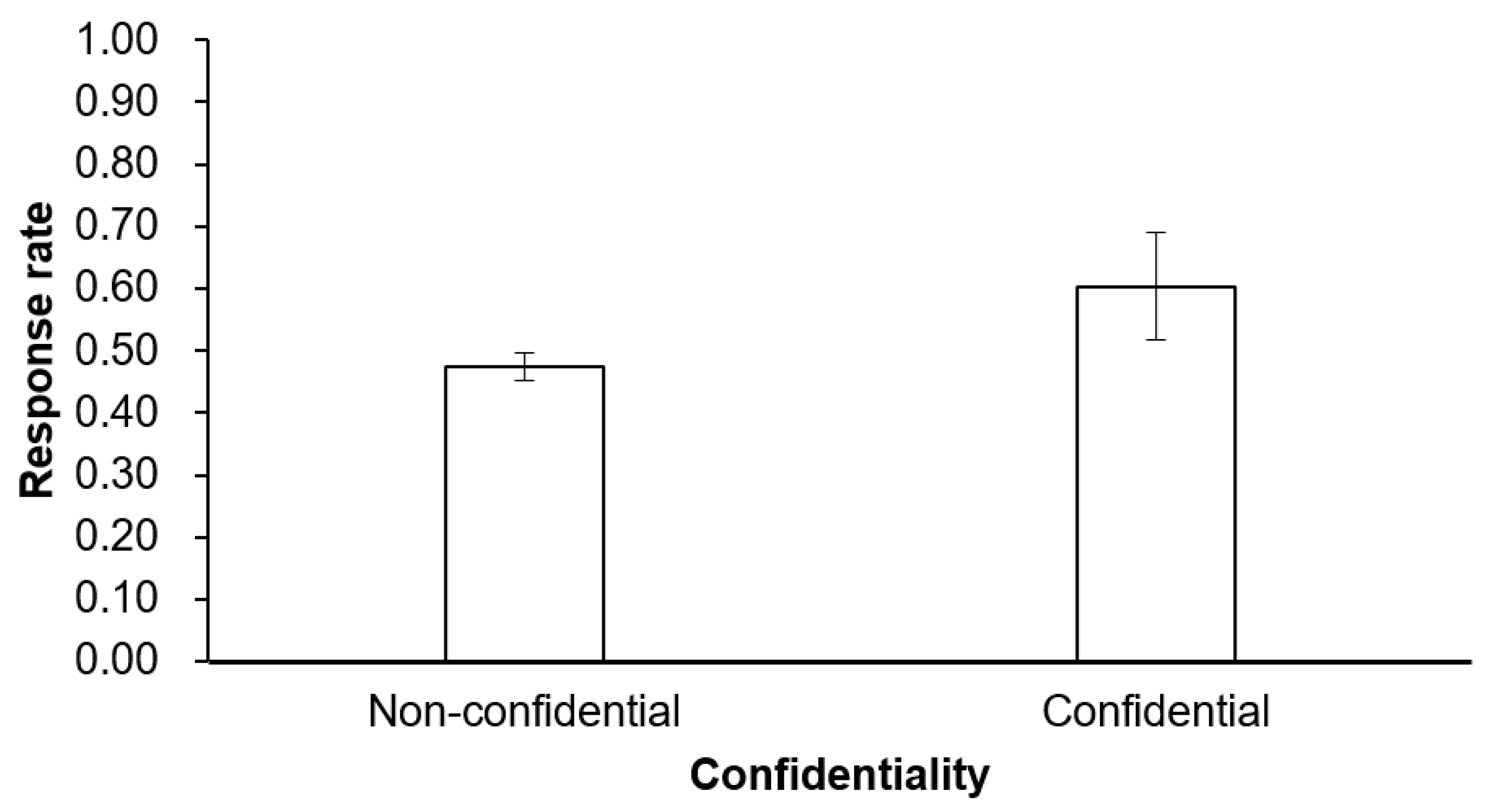

| Confidentiality |

|

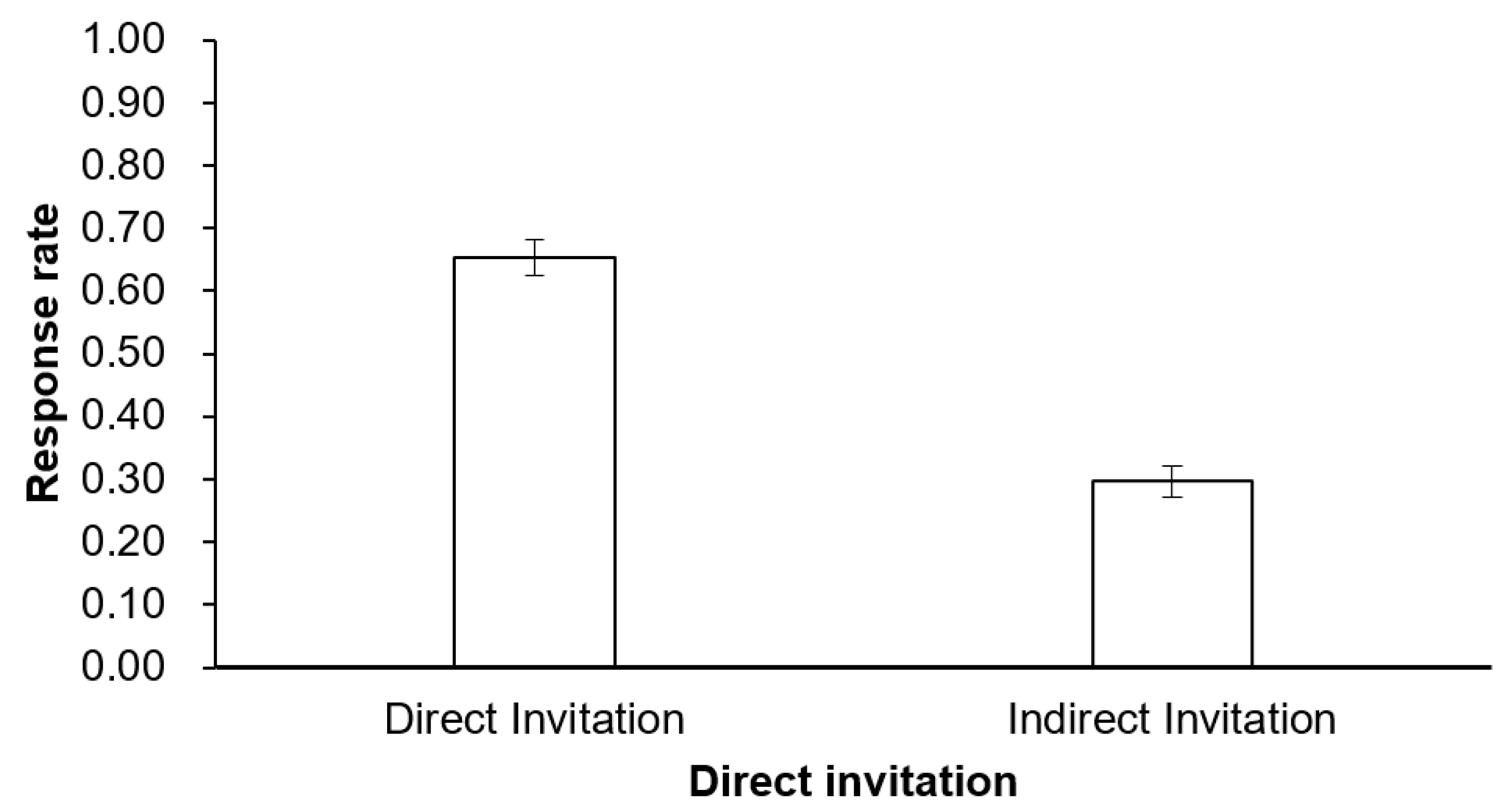

| Direct Invitation |

|

| Country or region’s cultural value orientation | Individualism and collectivism index c (M = 58.63, SD = 30.55, Range: 14 to 91) |

| | Training Set | Test Set |
|---|---|---|
| Decision tree regression | 0.722 | 0.578 |
| Traditional linear regression | 0.615 | 0.423 |

| | Recall Rate a | Precision Rate b |
|---|---|---|
| High response rates | 78.57% | 78.96% |
| Low response rates | 71.87% | 79.31% |
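The footnotes that define the recall and precision rates are not preserved here. As a minimal sketch, assuming the table uses the standard definitions (recall = correctly predicted cases of a class divided by all actual cases of that class; precision = correctly predicted cases of a class divided by all cases predicted as that class), the two rates could be computed as follows. The function name `recall_precision` and the label lists are hypothetical and are not data from the study.

```python
def recall_precision(actual, predicted, positive):
    """Return (recall, precision) for the class label `positive`.

    Assumes the standard definitions; the study's own footnoted
    definitions are not available in this text.
    """
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)  # true positives
    fn = sum(1 for a, p in pairs if a == positive and p != positive)  # missed cases
    fp = sum(1 for a, p in pairs if a != positive and p == positive)  # false alarms
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    return recall, precision


# Hypothetical labels for eight surveys, for illustration only.
actual = ["high", "high", "high", "low", "low", "low", "low", "high"]
predicted = ["high", "high", "low", "low", "low", "high", "low", "high"]

for label in ("high", "low"):
    r, p = recall_precision(actual, predicted, label)
    print(f"{label}: recall = {r:.2%}, precision = {p:.2%}")
```

Under these definitions, each row of the table would correspond to one call with `positive` set to that row's class.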

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Han, J.; Fang, M.; Ye, S.; Chen, C.; Wan, Q.; Qian, X. Using Decision Tree to Predict Response Rates of Consumer Satisfaction, Attitude, and Loyalty Surveys. Sustainability 2019, 11, 2306. https://doi.org/10.3390/su11082306
