Digital Twin in Electrical Machine Control and Predictive Maintenance: State-of-the-Art and Future Prospects

Abstract

1. Introduction

2. Literature Review

2.1. Digital Twin Reviews

What Is a Digital Twin?

- Multiphysics, meaning the cooperation of different physical descriptions of the system, such as aerodynamics, fluid dynamics, electromagnetics, mechanical stresses, etc.;

- Multiscale. The DT simulation should adapt to the required depth in real time. Users can zoom from a complete view of the DT down to a component of a component;

- Probabilistic, based on models derived from state-of-the-art analyses of each building block, to predict future behavior and follow the same description protocol as the real twin;

- Ultrafidelity, offering unlimited precision down to the lowest possible level. In practice, this is a compromise, traded off against computational power and time.

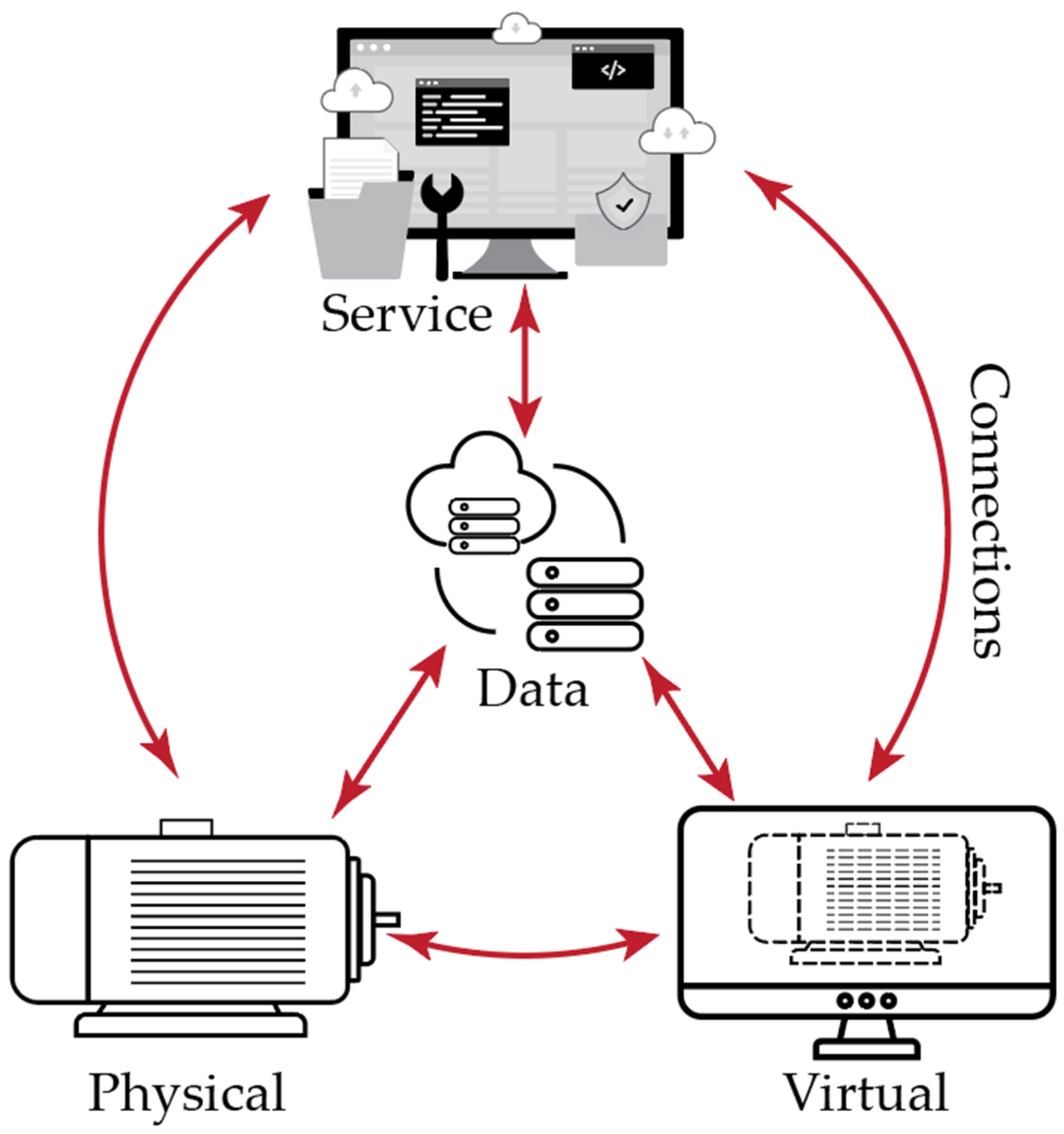

- Physical;

- Virtual;

- Service;

- Data;

- Connections.
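The five dimensions listed above can be held in a simple data structure. The sketch below is illustrative only: it assumes that the Connections dimension links every pair of the other four dimensions, and the class and method names (`FiveDimensionalDT`, `connect`, `missing_connections`) are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import combinations

# The four entity dimensions; "connections" is the fifth dimension linking them.
ENTITY_DIMENSIONS = ("physical", "virtual", "service", "data")


@dataclass
class FiveDimensionalDT:
    physical: dict = field(default_factory=dict)   # e.g. machine, sensors, actuators
    virtual: dict = field(default_factory=dict)    # e.g. models of the machine
    service: dict = field(default_factory=dict)    # e.g. monitoring, diagnostics
    data: dict = field(default_factory=dict)       # e.g. measured and simulated data
    connections: set = field(default_factory=set)  # frozensets of two dimension names

    def connect(self, a: str, b: str) -> None:
        """Register a bidirectional link between two entity dimensions."""
        if a == b or a not in ENTITY_DIMENSIONS or b not in ENTITY_DIMENSIONS:
            raise ValueError(f"invalid connection {a!r} <-> {b!r}")
        self.connections.add(frozenset((a, b)))

    def missing_connections(self) -> set:
        """Pairs of entity dimensions that are not yet linked."""
        required = {frozenset(pair) for pair in combinations(ENTITY_DIMENSIONS, 2)}
        return required - self.connections
```

Under this assumption, four entity dimensions give six pairwise connections, and `missing_connections()` reports which links a partially built DT still lacks.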

- Design phase;

- Manufacturing phase;

- Service phase;

- Retire phase.

- Historical data;

- Real-time sensors;

- Models.
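One common way to combine the three data sources listed above is inverse-variance weighting, a static special case of a Kalman update; it is shown here as an assumed technique, not as the method of any surveyed work. In this sketch, historical data estimate the sensor noise variance, the model supplies a prediction with its own assumed variance, and the real-time reading is fused with it. All function names are hypothetical.

```python
import statistics


def sensor_variance_from_history(historical_errors):
    """Estimate sensor noise variance from historical measurement errors."""
    return statistics.variance(historical_errors)


def fuse(model_estimate, model_var, sensor_reading, sensor_var):
    """Inverse-variance weighted fusion of a model prediction and a sensor reading.

    Returns the fused estimate and its variance, which is never larger
    than the smaller of the two input variances.
    """
    w_model = 1.0 / model_var
    w_sensor = 1.0 / sensor_var
    fused = (w_model * model_estimate + w_sensor * sensor_reading) / (w_model + w_sensor)
    fused_var = 1.0 / (w_model + w_sensor)
    return fused, fused_var
```

With equal variances the fused value is the plain average; a noisier source automatically receives less weight.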

2.2. Surveyed Literature

- Part analysis and reverse engineering;

- Development;

- Virtual Commissioning and Validation;

- Physical Reconditioning.

- Interdisciplinary collaboration between mature software tools. Prominent tools in the literature include MATLAB/Simulink, ANSYS and ADAMS.

- High Level Architecture (HLA), as established by IEEE and recommended by NATO through STANAG 4603.

- Unified multi-domain modeling languages, such as Modelica.

- Training personnel in a simulated environment.

- Providing a visual aid to serve as a manual and a library during downtime.

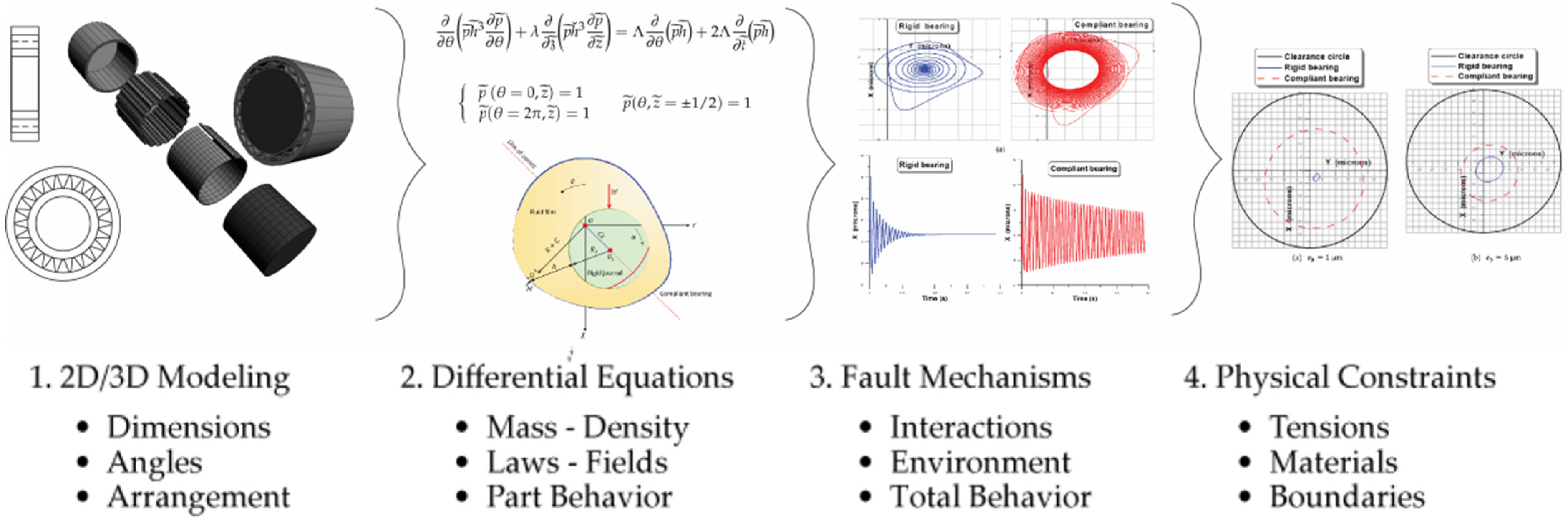

- Geometric model, constructing its spatial representation.

- Physical model, adding physical attributes to the representation.

- Behavior model, integrating the model's interaction with its environment.

- Constraint model, defining the boundaries of all operations.
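The four layers can be illustrated on a single rotor. This is a minimal sketch under assumed simplifications: the rotor is treated as a solid cylinder, the behavior model is one explicit integration step of the rotational equation of motion, and the constraint model is a single speed limit. Class and function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Geometry:
    """Geometric model: spatial representation of a cylindrical rotor."""
    radius_m: float
    length_m: float


@dataclass
class Physics:
    """Physical model: attributes attached to the geometry."""
    density_kg_m3: float

    def mass(self, g: Geometry) -> float:
        return self.density_kg_m3 * math.pi * g.radius_m ** 2 * g.length_m

    def inertia(self, g: Geometry) -> float:
        # Moment of inertia of a solid cylinder about its axis: J = m r^2 / 2
        return 0.5 * self.mass(g) * g.radius_m ** 2


def behavior_step(omega: float, torque: float, inertia: float, dt: float) -> float:
    """Behavior model: speed after one explicit step of J * d(omega)/dt = torque."""
    return omega + torque / inertia * dt


def within_constraints(omega: float, omega_max: float) -> bool:
    """Constraint model: operating boundary on rotor speed (rad/s)."""
    return abs(omega) <= omega_max
```

Each layer consumes the output of the previous one, so a more detailed geometry or physics model can be swapped in without changing the behavior and constraint layers.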

- The manufacturer of the system constructs a DT in their software of choice as they deem appropriate. The DT is validated and then the physical system is constructed.

- Data are amassed and learning algorithms are trained during this process. The resulting physical system is evaluated, and deep transfer learning (DTL) is used as the connecting utility between the real and virtual twin. The DT then serves as a complete real-time evaluation and simulation system.

- Data extraction and processing;

- Maintenance knowledge modeling; and

- Advisory capabilities.
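The three maintenance functions listed above can be chained into a small pipeline. The sketch assumes a vibration record as input and a hand-written rule base as the maintenance knowledge model; the thresholds and recommended actions are hypothetical placeholders, not values from the surveyed works.

```python
import math


def extract_features(vibration):
    """Data extraction and processing: RMS and peak of a vibration record."""
    rms = math.sqrt(sum(x * x for x in vibration) / len(vibration))
    return {"rms": rms, "peak": max(abs(x) for x in vibration)}


# Maintenance knowledge model: hypothetical rules, checked from most to least severe.
RULES = [
    (lambda f: f["rms"] > 7.1, "stop machine and inspect bearings"),
    (lambda f: f["rms"] > 2.8, "schedule inspection at next planned downtime"),
]


def advise(features, rules=RULES):
    """Advisory capability: return the action of the first matching rule."""
    for condition, action in rules:
        if condition(features):
            return action
    return "no action required"
```

Keeping the rules as data rather than code makes the knowledge model easy to update as maintenance experience accumulates.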

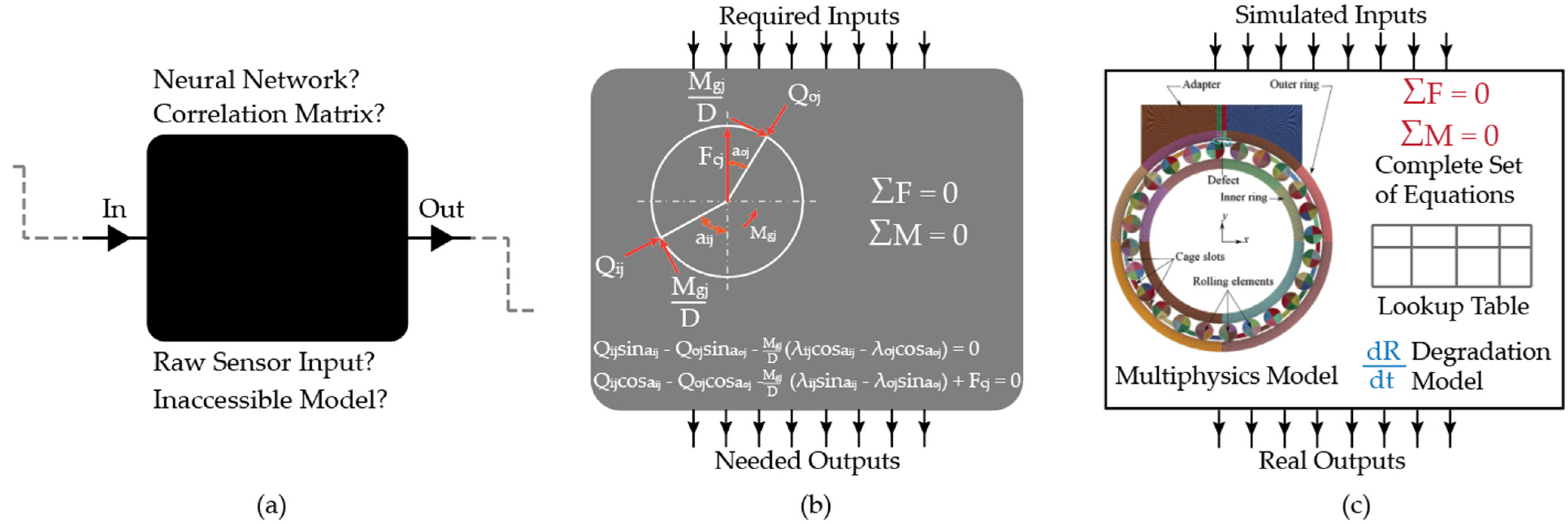

- Black Boxes. These models only include inputs and outputs, without knowledge of the inner workings of the component. They can be used as placeholders during development.

- Grey Boxes. These models use theoretical knowledge to complete the component’s effects and results. They can be used when data are missing or a sensor is deliberately omitted, and no previous iteration of the component model exists.

- White Boxes. The exact functionality and inner workings of the model are known. These are the current final iteration of the component model: they include update protocols, have undergone validation, and closely resemble the real component.
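The three model types can be contrasted on a simple component: winding resistance as a function of temperature. In this sketch the white box uses the known linear law with the copper temperature coefficient, the grey box keeps that structure but estimates the coefficient from data by least squares, and the black box fits a generic polynomial with no physics. The reference resistance and temperature are hypothetical values.

```python
import numpy as np

R0, T0 = 0.50, 20.0   # hypothetical winding resistance (ohm) at reference temperature (deg C)
ALPHA_CU = 0.00393    # temperature coefficient of resistance of copper, 1/K


def white_box(T):
    """Known structure and known parameters: R(T) = R0 * (1 + alpha * (T - T0))."""
    return R0 * (1.0 + ALPHA_CU * (np.asarray(T) - T0))


def fit_grey_box(T, R):
    """Known structure, unknown alpha: least-squares fit of R - R0 = alpha * R0 * (T - T0)."""
    x = R0 * (np.asarray(T) - T0)
    y = np.asarray(R) - R0
    alpha = float(np.dot(x, y) / np.dot(x, x))
    return (lambda t: R0 * (1.0 + alpha * (np.asarray(t) - T0))), alpha


def fit_black_box(T, R, degree=2):
    """No structure: generic polynomial fitted to input/output data only."""
    return np.poly1d(np.polyfit(T, R, degree))
```

The black box reproduces the data well inside the measured range but carries no physical meaning outside it, whereas the grey box also returns an interpretable parameter.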

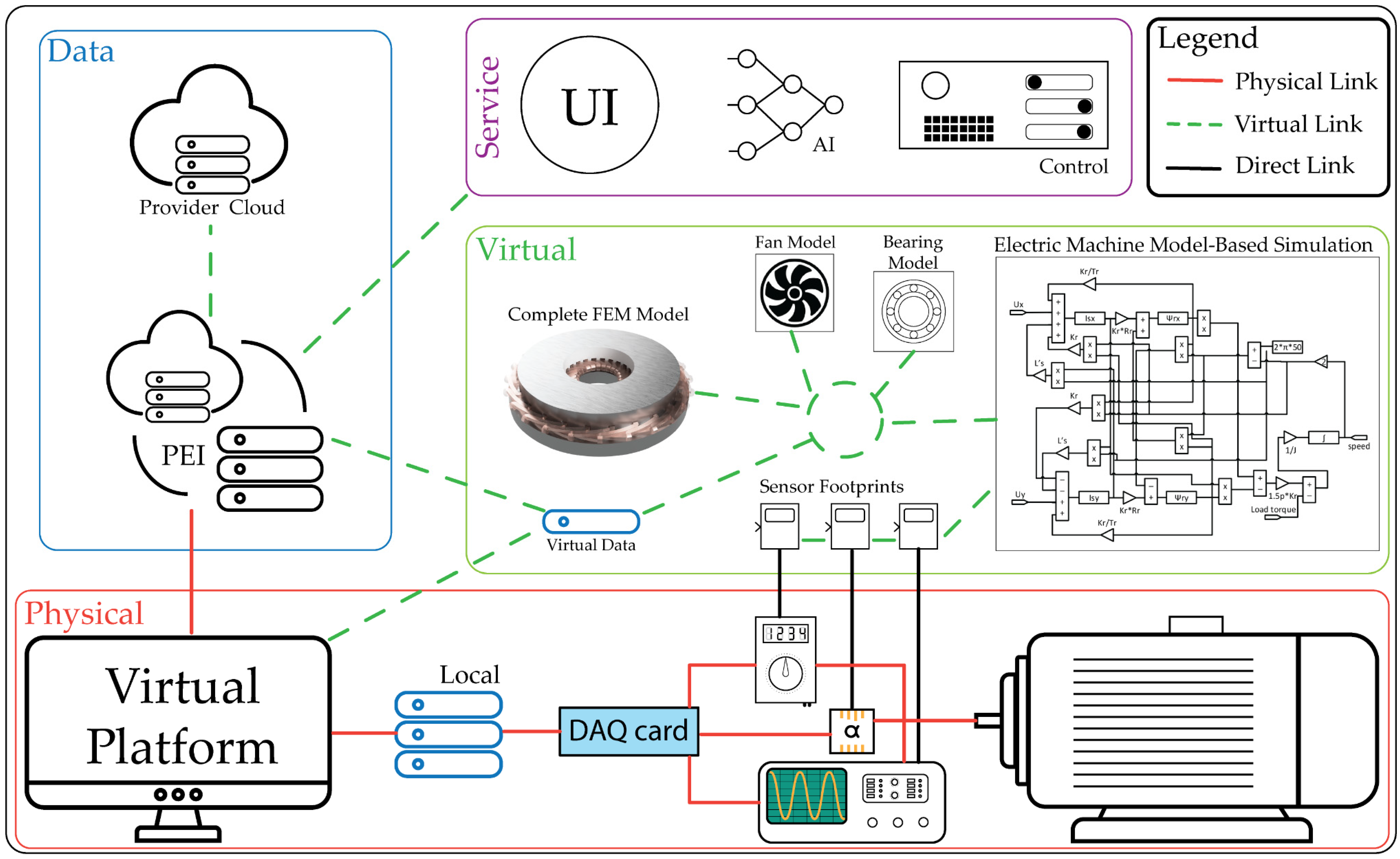

- Advanced physical modeling of the machine, covering its kinematic and dynamic characteristics and placing virtual sensors only where and if they are required.

- Synchronous tuning of the simulation, so that the models resemble their real counterparts as closely as possible.

- Simulation using real input data and comparison with real output data.

- Combination of the real and virtual twin for comparison. This is the final stage of DT usage.
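The last two stages above (driving the simulation with real inputs and comparing it with real outputs) can be sketched with a deliberately simple virtual twin. The sketch assumes a first-order model, tau * dy/dt + y = gain * u, solved by forward Euler, and flags a discrepancy when the RMS residual exceeds a chosen threshold; the model, parameter values and threshold are all assumptions for illustration.

```python
import numpy as np


def simulate_first_order(u, gain, tau, dt, y0=0.0):
    """Virtual twin: forward-Euler solution of tau * dy/dt + y = gain * u."""
    y = np.empty(len(u))
    y[0] = y0
    for k in range(1, len(u)):
        y[k] = y[k - 1] + dt / tau * (gain * u[k - 1] - y[k - 1])
    return y


def compare_with_real(u, y_real, gain, tau, dt, threshold):
    """Drive the virtual twin with the real input and compare outputs.

    Returns the RMS residual and whether it exceeds the threshold.
    """
    y_virtual = simulate_first_order(u, gain, tau, dt, y0=y_real[0])
    rms = float(np.sqrt(np.mean((np.asarray(y_real) - y_virtual) ** 2)))
    return rms, rms > threshold
```

A persistent residual indicates either a fault in the real twin or a virtual twin that needs retuning, which is why the tuning stage precedes the comparison.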

- Level 1 is the physical model of the generator and its electromagnetic parameters, following the existing literature.

- Level 2 enhances the previous level with additional analytical models, resulting in a better estimation of the machine's behavior. This point has also been explored in the literature.

- Levels 3 and 4 realize the flux calculation, an extensively discussed and complicated procedure. Levels 2–4 exploit the main benefit of the DT methodology: enhancing the conventional model with interdisciplinary approaches.

- Level 5, the final level, connects this output to the “back” of the machine to create the closed-loop model.
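As an illustration of a Level 1 model, the sketch below evaluates the classic per-phase T-equivalent circuit of a three-phase induction machine in steady state. This is a textbook model assumed here for illustration, not necessarily the formulation used in the cited work; all parameter values in the usage are hypothetical. Negative slip corresponds to generator operation.

```python
import math


def induction_machine_steady_state(v_phase, f_hz, poles, r1, x1, r2, x2, xm, slip):
    """Per-phase T-equivalent circuit of a three-phase induction machine.

    Returns (stator current magnitude in A, electromagnetic torque in N*m).
    Positive slip means motoring, negative slip means generating.
    """
    if slip == 0:
        raise ValueError("slip must be non-zero (R2/s is undefined at s = 0)")
    z_rotor = complex(r2 / slip, x2)             # referred rotor branch
    z_mag = complex(0.0, xm)                     # magnetizing branch
    z_par = z_mag * z_rotor / (z_mag + z_rotor)  # parallel combination
    i1 = v_phase / (complex(r1, x1) + z_par)     # stator current phasor
    e_gap = i1 * z_par                           # air-gap voltage
    i2 = e_gap / z_rotor                         # referred rotor current
    p_airgap = 3 * abs(i2) ** 2 * r2 / slip      # air-gap power, all three phases
    omega_sync = 4 * math.pi * f_hz / poles      # synchronous mechanical speed, rad/s
    return abs(i1), p_airgap / omega_sync
```

For example, `induction_machine_steady_state(230.0, 50.0, 4, 0.5, 1.0, 0.4, 1.0, 30.0, -0.03)` returns a negative torque, i.e. the machine absorbs mechanical power and generates.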

- Owing to FEM modeling, it accounts for space harmonics and magnetic imbalances.

- It can support any number of electrical circuits, following the modularity requirement of the DT.

- It can compute the distribution of power losses inside the machine, which is paramount to achieving a true DT and a major research challenge.

3. Discussion

3.1. Is This Classification Useful for Literature?

Proposed Solution

3.2. Proposed Complete Definition of Digital Twin Framework

3.2.1. Life Cycle

3.2.2. Five-Dimensional Digital Twin Framework

3.2.3. Creating the DTF Iteratively

3.2.4. Software

3.3. Contribution of the DTF in Industry

- Serve as a data integrator for a CPS in one PEI package;

- Translate and optimize the data into usable form for IoT technologies;

- Preserve post-processing information for future endeavors.

- Combining the pre-existing models and analyses;

- Integrating Big Data and AI technologies in the DTF.

3.4. Proposed Definition

- Organic (adjective, formal): consisting of different, interconnected parts; happening in a natural way [59]. This single word encapsulates two of the most important aspects of the proposed DTF but can be unintuitive to some; we nevertheless deem the definition better for it.

- Can represent… in real time: the DTF can be used with ultrafidelity to delve deeper into a single mechanism, forgoing the requirement of real-time computational capability. However, to be classified as a DT, the framework must be able to exploit the “multiscale” quality, sacrificing fidelity for real-time simulation. Otherwise, it is a DM.
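The multiscale requirement can be expressed as a selection rule: among the available model levels, run the most detailed one whose computation time per step fits within the real-time step period. In this sketch the fidelity scale, the per-step costs and the names `ModelLevel` and `select_real_time_level` are hypothetical; a result of `None` means no level can run in real time.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ModelLevel:
    name: str
    fidelity: int        # hypothetical ordinal scale: higher means more detailed
    step_cost_s: float   # wall-clock time needed to compute one simulation step


def select_real_time_level(levels: Sequence[ModelLevel],
                           step_period_s: float) -> Optional[ModelLevel]:
    """Return the highest-fidelity level that fits the real-time budget, or None."""
    feasible = [m for m in levels if m.step_cost_s <= step_period_s]
    if not feasible:
        return None
    return max(feasible, key=lambda m: m.fidelity)
```

Offline, with no time budget, the same framework can run its most detailed level, which corresponds to the ultrafidelity use described above.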

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Gritli, Y.; Bellini, A.; Rossi, C.; Casadei, D.; Filippetti, F.; Capolino, G.A. Condition monitoring of mechanical faults in induction machines from electrical signatures: Review of different techniques. In Proceedings of the 2017 IEEE 11th International Symposium on Diagnostics for Electrical Machines, Power Electronics and Drives (SDEMPED), Tinos, Greece, 29 August–1 September 2017; pp. 77–84.

2. Capolino, G.A.; Romary, R.; Hénao, H.; Pusca, R. State of the art on stray flux analysis in faulted electrical machines. In Proceedings of the 2019 IEEE Workshop on Electrical Machines Design, Control and Diagnosis WEMDCD, Athens, Greece, 22–23 April 2019; pp. 181–187.

3. Riera-Guasp, M.; Antonino-Daviu, J.A.; Capolino, G.A. Advances in electrical machine, power electronic, and drive condition monitoring and fault detection: State of the art. IEEE Trans. Ind. Electron. 2015, 62, 1746–1759.

4. Antonino-Daviu, J. Electrical monitoring under transient conditions: A new paradigm in electric motors predictive maintenance. Appl. Sci. 2020, 10, 6137.

5. Henao, H.; Capolino, G.A.; Fernandez-Cabanas, M.; Filippetti, F.; Bruzzese, C.; Strangas, E.; Pusca, R.; Estima, J.; Riera-Guasp, M.; Hedayati-Kia, S. Trends in fault diagnosis for electrical machines: A review of diagnostic techniques. IEEE Ind. Electron. Mag. 2014, 8, 31–42.

6. Lopez-Perez, D.; Antonino-Daviu, J. Application of Infrared Thermography to Failure Detection in Industrial Induction Motors: Case Stories. IEEE Trans. Ind. Appl. 2017, 53, 1901–1908.

7. Grieves, M. Digital twin: Manufacturing excellence through virtual factory replication. White Pap. 2014, 1, 1–7.

8. Tao, F.; Zhang, H.; Liu, A.; Nee, A.Y.C. Digital Twin in Industry: State-of-the-Art. IEEE Trans. Ind. Inform. 2019, 15, 2405–2415.

9. Kande, M.; Isaksson, A.J.; Thottappillil, R.; Taylor, N. Rotating electrical machine condition monitoring automation-A review. Machines 2017, 5, 24.

10. Orosz, T.; Rassõlkin, A.; Kallaste, A.; Arsénio, P.; Pánek, D.; Kaska, J.; Karban, P. Robust design optimization and emerging technologies for electrical machines: Challenges and open problems. Appl. Sci. 2020, 10, 11–13.

11. Lee, S.B.; Stone, G.C.; Antonino-Daviu, J.; Gyftakis, K.N.; Strangas, E.G.; Maussion, P.; Platero, C.A. Condition Monitoring of Industrial Electric Machines: State of the Art and Future Challenges. IEEE Ind. Electron. Mag. 2020, 14, 158–167.

12. Fuller, A.; Fan, Z.; Day, C.; Barlow, C. Digital Twin: Enabling Technologies, Challenges and Open Research. IEEE Access 2020, 8, 108952–108971.

13. Lim, K.Y.H.; Zheng, P.; Chen, C.H. A state-of-the-art survey of Digital Twin: Techniques, engineering product lifecycle management and business innovation perspectives. J. Intell. Manuf. 2020, 31, 1313–1337.

14. Qi, Q.; Tao, F.; Hu, T.; Anwer, N.; Liu, A.; Wei, Y.; Wang, L.; Nee, A.Y.C. Enabling technologies and tools for digital twin. J. Manuf. Syst. 2021, 58, 3–21.

15. Liu, M.; Fang, S.; Dong, H.; Xu, C. Review of digital twin about concepts, technologies, and industrial applications. J. Manuf. Syst. 2021, 58, 346–361.

16. Kritzinger, W.; Karner, M.; Traar, G.; Henjes, J.; Sihn, W. Digital Twin in manufacturing: A categorical literature review and classification. IFAC-PapersOnLine 2018, 51, 1016–1022.

17. Glaessgen, E.H.; Stargel, D.S. The digital twin paradigm for future NASA and U.S. Air force vehicles. In Proceedings of the 53rd AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics and Materials Conference: Special Session on the Digital Twin, Honolulu, HI, USA, 23–26 April 2012.

18. Gabor, T.; Belzner, L.; Kiermeier, M.; Beck, M.T.; Neitz, A. A simulation-based architecture for smart cyber-physical systems. In Proceedings of the 2016 IEEE International Conference on Autonomic Computing (ICAC); IEEE: Piscataway, NJ, USA, 2016; pp. 374–379.

19. Chen, Y. Integrated and intelligent manufacturing: Perspectives and enablers. Engineering 2017, 3, 588–595.

20. Zhuang, C.; Liu, J.; Xiong, H. Digital twin-based smart production management and control framework for the complex product assembly shop-floor. Int. J. Adv. Manuf. Technol. 2018, 96, 1149–1163.

21. Liu, Z.; Meyendorf, N.; Mrad, N. The role of data fusion in predictive maintenance using digital twin. In AIP Conference Proceedings; AIP Publishing LLC: Melville, NY, USA, 2018; Volume 1949, p. 20023.

22. Zheng, Y.; Yang, S.; Cheng, H. An application framework of digital twin and its case study. J. Ambient Intell. Humaniz. Comput. 2019, 10, 1141–1153.

23. Xu, Y.; Sun, Y.; Liu, X.; Zheng, Y. A Digital-Twin-Assisted Fault Diagnosis Using Deep Transfer Learning. IEEE Access 2019, 7, 19990–19999.

24. Madni, A.M.; Madni, C.C.; Lucero, S.D. Leveraging digital twin technology in model-based systems engineering. Systems 2019, 7, 7.

25. Kannan, K.; Arunachalam, N. A digital twin for grinding wheel: An information sharing platform for sustainable grinding process. J. Manuf. Sci. Eng. 2019, 141, 021015.

26. Kiritsis, D.; Bufardi, A.; Xirouchakis, P. Research issues on product lifecycle management and information tracking using smart embedded systems. Adv. Eng. Inform. 2003, 17, 189–202.

27. Söderberg, R.; Wärmefjord, K.; Carlson, J.S.; Lindkvist, L. Toward a Digital Twin for real-time geometry assurance in individualized production. CIRP Ann. Manuf. Technol. 2017, 66, 137–140.

28. Toso, F.; Favato, A.; Torchio, R.; Carbonieri, M.; De Soricellis, M.; Alotto, P.; Bolognani, S. Digital Twin Software for Electrical Machines. Master’s Thesis, Universita’ Degli Studi di Padova, Padova, Italy, 2020.

29. Magargle, R.; Johnson, L.; Mandloi, P.; Davoudabadi, P.; Kesarkar, O.; Krishnaswamy, S.; Batteh, J.; Pitchaikani, A. A Simulation-Based Digital Twin for Model-Driven Health Monitoring and Predictive Maintenance of an Automotive Braking System. In Proceedings of the 12th International Modeling Conference, Prague, Czech Republic, 15–17 May 2017; Volume 132, pp. 35–46.

30. Ayani, M.; Ganebäck, M.; Ng, A.H.C. Digital Twin: Applying emulation for machine reconditioning. Procedia CIRP 2018, 72, 243–248.

31. Sivalingam, K.; Sepulveda, M.; Spring, M.; Davies, P. A Review and Methodology Development for Remaining Useful Life Prediction of Offshore Fixed and Floating Wind turbine Power Converter with Digital Twin Technology Perspective. In Proceedings of the 2018 2nd International Conference on Green Energy and Applications (ICGEA), Singapore, 24–26 March 2018; pp. 197–204.

32. Rosen, R.; Boschert, S.; Sohr, A. Next Generation Digital Twin. ATP Mag. 2018, 60, 86–96.

33. Luo, W.; Hu, T.; Zhu, W.; Tao, F. Digital twin modeling method for CNC machine tool. In Proceedings of the 2018 IEEE 15th International Conference on Networking, Sensing and Control (ICNSC), Zhuhai, China, 27–29 March 2018; pp. 1–4.

34. Luo, W.; Hu, T.; Zhang, C.; Wei, Y. Digital twin for CNC machine tool: Modeling and using strategy. J. Ambient. Intell. Humaniz. Comput. 2019, 10, 1129–1140.

35. Luo, W.; Hu, T.; Ye, Y.; Zhang, C.; Wei, Y. A hybrid predictive maintenance approach for CNC machine tool driven by Digital Twin. Robot. Comput. Integr. Manuf. 2020, 65, 101974.

36. Wei, Y.; Hu, T.; Zhou, T.; Ye, Y.; Luo, W. Consistency retention method for CNC machine tool digital twin model. J. Manuf. Syst. 2021, 58, 313–322.

37. Vathoopan, M.; Johny, M.; Zoitl, A.; Knoll, A. Modular Fault Ascription and Corrective Maintenance Using a Digital Twin. IFAC-PapersOnLine 2018, 51, 1041–1046.

38. Liu, Z.; Chen, W.; Zhang, C.; Yang, C.; Chu, H. Data Super-Network Fault Prediction Model and Maintenance Strategy for Mechanical Product Based on Digital Twin. IEEE Access 2019, 7, 177284–177296.

39. Swana, E.F.; Doorsamy, W. Investigation of Combined Electrical Modalities for Fault Diagnosis on a Wound-Rotor Induction Generator. IEEE Access 2019, 7, 32333–32342.

40. Bou-Saïd, B.; Lahmar, M.; Mouassa, A.; Bouchehit, B. Dynamic performances of foil bearing supporting a jeffcot flexible rotor system using FEM. Lubricants 2020, 8, 14.

41. Venkatesan, S.; Manickavasagam, K.; Tengenkai, N.; Vijayalakshmi, N. Health monitoring and prognosis of electric vehicle motor using intelligent-digital twin. IET Electr. Power Appl. 2019, 13, 1328–1335.

42. Wang, J.; Ye, L.; Gao, R.X.; Li, C.; Zhang, L. Digital Twin for rotating machinery fault diagnosis in smart manufacturing. Int. J. Prod. Res. 2019, 57, 3920–3934.

43. Cattaneo, L.; Macchi, M. A Digital Twin Proof of Concept to Support Machine Prognostics with Low Availability of Run-To-Failure Data. IFAC-PapersOnLine 2019, 52, 37–42.

44. Aivaliotis, P.; Georgoulias, K.; Chryssolouris, G. The use of Digital Twin for predictive maintenance in manufacturing. Int. J. Comput. Integr. Manuf. 2019, 32, 1067–1080.

45. Cao, H.; Niu, L.; Xi, S.; Chen, X. Mechanical model development of rolling bearing-rotor systems: A review. Mech. Syst. Signal Process. 2018, 102, 37–58.

46. Singh, S.; Köpke, U.G.; Howard, C.Q.; Petersen, D. Analyses of contact forces and vibration response for a defective rolling element bearing using an explicit dynamics finite element model. J. Sound Vib. 2014, 333, 5356–5377.

47. Ebrahimi, A. Challenges of developing a digital twin model of renewable energy generators. In Proceedings of the 2019 IEEE 28th International Symposium on Industrial Electronics (ISIE), Vancouver, BC, Canada, 12–14 June 2019; pp. 1059–1066.

48. Tong, X.; Liu, Q.; Pi, S.; Xiao, Y. Real-time machining data application and service based on IMT digital twin. J. Intell. Manuf. 2020, 31, 1113–1132.

49. Bouzid, S.; Viarouge, P.; Cros, J. Real-time digital twin of a wound rotor induction machine based on finite element method. Energies 2020, 13, 5413.

50. Mukherjee, V.; Martinovski, T.; Szucs, A.; Westerlund, J.; Belahcen, A. Improved analytical model of induction machine for digital twin application. In Proceedings of the 2020 International Conference on Electrical Machines (ICEM), Gothenburg, Sweden, 23–26 August 2020; pp. 183–189.

51. Rassõlkin, A.; Orosz, T.; Demidova, G.L.; Kuts, V.; Rjabtšikov, V.; Vaimann, T.; Kallaste, A. Implementation of digital twins for electrical energy conversion systems in selected case studies. Proc. Est. Acad. Sci. 2021, 70, 19–39.

52. Qi, Q.; Tao, F. Digital Twin and Big Data towards Smart Manufacturing and Industry 4.0: 360 Degree Comparison. IEEE Access 2018, 6, 3585–3593.

53. Stark, R.; Fresemann, C.; Lindow, K. Development and operation of Digital Twins for technical systems and services. CIRP Ann. 2019, 68, 129–132.

54. Saifulin, R.; Pajchrowski, T.; Breido, I. A Buffer Power Source Based on a Supercapacitor for Starting an Induction Motor under Load. Energies 2021, 14, 4769.

55. Baranov, G.; Zolotarev, A.; Ostrovskii, V.; Karimov, T.; Voznesensky, A. Analytical model for the design of axial flux induction motors with maximum torque density. World Electr. Veh. J. 2021, 12, 24.

56. Stefenon, S.F.; Freire, R.Z.; dos Santos Coelho, L.; Meyer, L.H.; Grebogi, R.B.; Buratto, W.G.; Nied, A. Electrical insulator fault forecasting based on a wavelet neuro-fuzzy system. Energies 2020, 13, 484.

57. Jiang, H.; Qin, S.; Fu, J.; Zhang, J.; Ding, G. How to model and implement connections between physical and virtual models for digital twin application. J. Manuf. Syst. 2021, 58, 36–51.

58. Elmouatamid, A.; Ouladsine, R.; Bakhouya, M.; El Kamoun, N.; Khaidar, M.; Zine-Dine, K. Review of control and energy management approaches in micro-grid systems. Energies 2021, 14, 168.

59. Hornby, A.S.; Cowie, A.P. Oxford Advanced Learner’s Dictionary; Oxford University Press: Oxford, UK, 1995; p. 1428.

60. Bevilacqua, M.; Bottani, E.; Ciarapica, F.E.; Costantino, F.; Di Donato, L.; Ferraro, A.; Mazzuto, G.; Monteriù, A.; Nardini, G.; Ortenzi, M.; et al. Digital twin reference model development to prevent operators’ risk in process plants. Sustainability 2020, 12, 1088.

61. Ala-Laurinaho, R. Sensor Data Transmission from a Physical Twin to a Digital Twin. Master’s Thesis, Aalto University, Espoo, Finland, 2019; p. 105.

62. Angrish, A.; Starly, B.; Lee, Y.S.; Cohen, P.H. A flexible data schema and system architecture for the virtualization of manufacturing machines (VMM). J. Manuf. Syst. 2017, 45, 236–247.

63. He, Y.; Guo, J.; Zheng, X. From Surveillance to Digital Twin: Challenges and Recent Advances of Signal Processing for Industrial Internet of Things. IEEE Signal Process. Mag. 2018, 35, 120–129.

64. Zhu, Z.; Liu, C.; Xu, X. Visualisation of the digital twin data in manufacturing by using augmented reality. Procedia CIRP 2019, 81, 898–903.

65. Aivaliotis, P.; Georgoulias, K.; Alexopoulos, K. Using digital twin for maintenance applications in manufacturing: State of the Art and Gap analysis. In Proceedings of the 2019 IEEE International Conference on Engineering, Technology and Innovation (ICE/ITMC), Valbonne Sophia-Antipolis, France, 17–19 June 2019.

| Construct | Physical to Virtual Data Flow | Virtual to Physical Data Flow |
|---|---|---|
| Digital Model | manual | manual |
| Digital Shadow | automatic | manual |
| Digital Twin | automatic | automatic |
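The classification in the table above depends only on which data flows are automatic, so it reduces to a small decision function. This is a sketch of the table's logic; the function name is hypothetical, and the combination of automatic virtual-to-physical flow with manual physical-to-virtual flow, which the table does not cover, is rejected rather than guessed.

```python
def classify_construct(p2v_automatic: bool, v2p_automatic: bool) -> str:
    """Classify a construct from its physical-to-virtual and virtual-to-physical data flows."""
    if p2v_automatic and v2p_automatic:
        return "Digital Twin"      # bidirectional automatic data flow
    if p2v_automatic:
        return "Digital Shadow"    # automatic physical-to-virtual flow only
    if not v2p_automatic:
        return "Digital Model"     # no automatic data flow
    raise ValueError("automatic virtual-to-physical flow without automatic "
                     "physical-to-virtual flow is not covered by the table")
```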

| Ref. | Classification | Comment |
|---|---|---|
| [28] | DS | Thorough use of the proposed DS capabilities. Role in control is acknowledged in the definition, but the proposed model serves only as a virtual sensor. Use of data in automatic control is implied but not enforced. To be a DT, any modification of virtual control values should be reflected in the real twin. |
| [29] | DM | Excellent demonstration of multiphysics, multiscale simulation with software cooperation. Real twin control methods are simulated but not accessible from the virtual twin. Measurements are taken once before constructing the model and no real-time connections are mentioned, rendering the work a DM. The model is used to predict RUL and wear and tear, in addition to AI training. The PM target is achieved. |
| [30] | DT | Inclusive realization of DT capabilities. Digital verification. Physical controllers are fully cooperative with the DT software, displaying the DT’s role in control. Operators controlled the DT via a physical console. Results of the application case include upgrades to the machine through a retrofitting project, enabled by its emulation. |
| [31] | DS | Authors duly describe the methodology as a DT “Framework”. Focus on one part of the system, emphasizing the choice of fidelity. Operational data flow from the SCADA system into physics-based models with no control through software, hence DS. Virtual sensors are used to optimize real sensor locations. DT paradigms are clearly recalled. |
| [32] | DT | Coins the term “nexDT”. Proposes a DT paradigm, without application. Agrees with all aspects of the proposed DT definition; broader designation, but encapsulates all aspects. |
| [33,34,35,36] | DT | Proposed DT model of a CNC Machine Tool (CNCMT). The CNCMT is an excellent candidate for first-era DTs, as it can facilitate the creation of projects’ DTs. The cited work explores all aspects of the proposed DT methodology and provides real-time interconnection between cyber and physical space. Extensive application case study and report. |
| [37] | DM | Authors provide a proof of concept using a Modelica-based model along with inputs and outputs, acknowledging its minimality. The work fits a DM, as fault and sensor models exist only in simulation. No reception of data from real sensors or back-transfer of control is mentioned. Goal is a UI/testbench for the operator. |
| [38] | DT | Work follows the five-dimensional DT model and all life-cycle phases. Focus is on data handling for PM; the DT is ancillary. Excellent proposition of control usage in PM: the DT chooses and validates maintenance work before guiding a repair robot. Exemplary for PM DT. |
| [41] | DS | PMSM, IGBT and battery models followed by an NN are encased in the DT. Receives real sensory input but has no role in control, as the user decides the following steps after observation. Directly useful without bloat. The “i-DT” term is mentioned for the NN-enhanced model. |
| [23] | DT | Work follows the paradigm of including all life-cycle phases and relevant rules and subsystems. Control is not directly realized, but the physical entity operates according to the simulation without intervention, which is deemed an automatic data transfer process. |
| [42] | DS | Work suggests automatic optimization of the physical system based on analysis of the virtual twin, but the application is only employed in diagnostics. Sensory input from the physical system. The model is continuously updated. |
| [43] | DS | Drilling machine DT proof of concept. Sensory input and comparison to the real system; status is updated but used only in RUL prediction. No back adjustments. |
| [44] | DS | The advisory nature of the proposed DT is highlighted. Sensory input from the real twin. Great paradigm of DT usage in PM, as transfer of data back to the real twin is of limited usefulness. All other aspects excellently covered. |
| [47] | DS | Author presents the most complete combination of physical test bench and digital representation out of all reviewed works. The digital twin is self-optimizing and receives extensive input but does not automatically change the real twin, receiving the designation of DS. All other DT paradigms are included in the work. |
| [48] | DT | Another approach to an intelligent CNCMT. Sensors update the digital model, complete with intelligent algorithms. After validation, simulated data are returned to the real twin to optimize and complete the work. |
| [49] | DS | Full life-cycle model of an EM. Sensory input with model updating. PM operations achieved but no back transfer of information. Core of the work is comparison and establishment of a better base model. |
| [50] | DM | Model explicitly proposed for later use in a DT. As a standalone work it is a DM of an EM, as it has no sensory input yet. |
| [51] | DT | Authors acknowledge bidirectional, seamless data transfer and a role in control, designating their approach as a DT. While multiple applications are discussed, the consensus fits the proposed DT paradigm. |

| Classification | Works | Applications | Overview |
|---|---|---|---|
| Digital Model | [29,37,50] | Manufacturing, Design, Training | Non-living model, snapshot from DTF. Modeling of subsystems, steady-state operations, low computational cost applications. Conventional modeling currently employed. |
| Digital Shadow | [28,31,41,42,43,44,47,49] | Condition Monitoring, Predictive Maintenance, DAQ, Optimization, UI | Living footprint of physical system. Complete visualization, tracking, DAQ, UI. Optimized and complete methodology combination in state-of-the-art CM and PM. |
| Digital Twin | [23,30,32,33,34,35,36,38,48,51] | All of the above plus Control, Industrial IoT, Retrofit, Reconfiguration, Operation | Living simulation of physical system. Control integration and changes reflection. Boundaries and constraints. Complete lifecycle depiction and cloud storage. Industry 4.0 paradigm. |

| Requirement of | Digital Model | Digital Shadow | Digital Twin |
|---|---|---|---|
| Automatic Data Flow | no | Physical to Virtual | Bidirectional |
| Time-Varying | no | yes | yes |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Falekas, G.; Karlis, A. Digital Twin in Electrical Machine Control and Predictive Maintenance: State-of-the-Art and Future Prospects. Energies 2021, 14, 5933. https://doi.org/10.3390/en14185933
