Deep Learning with Stacked Denoising Auto-Encoder for Short-Term Electric Load Forecasting

Abstract

1. Introduction

2. Electric Load Forecasting System

2.1. Single Parameter Electric Load Forecasting Model

2.2. Transdimensional Electric Load Forecasting Model

3. SDAE Neural Network

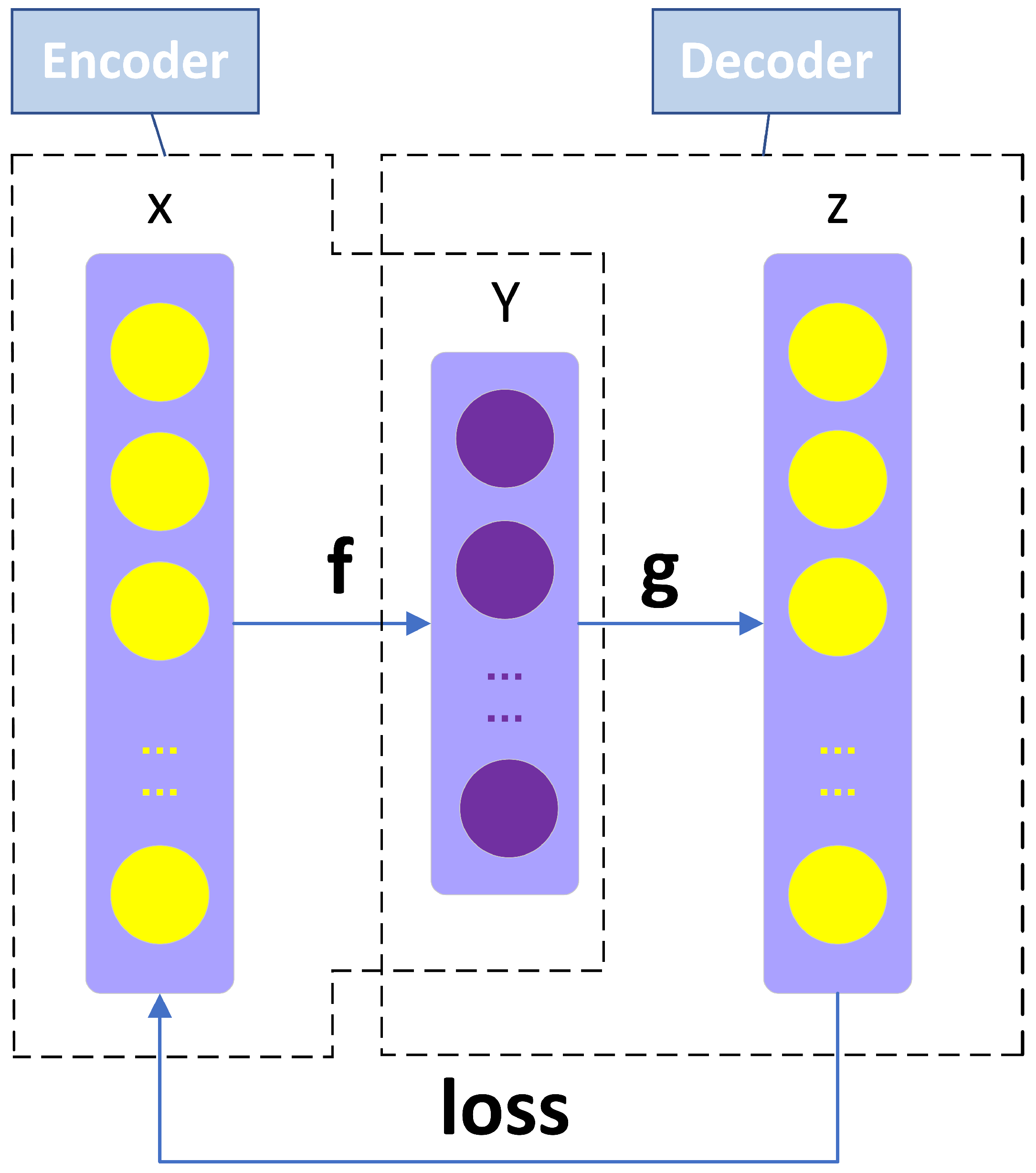

3.1. Auto-Encoder

3.2. Denoising Auto-Encoder (DAE) and Stacked Denoising Auto-Encoder (SDAE)

3.3. SDAE Model

- Raw data are standardized in data preprocessing.

- Greedy layer-wise pre-training is applied to the parameters of the entire network [31]. Pre-training with the SDAE yields the initial weights and biases of the whole network and narrows the range over which the weights must be adjusted during fine-tuning [32], which helps avoid over-fitting and vanishing gradients in our research.

- Early stopping is also used to prevent over-fitting. This strategy is widely used in traditional machine learning; it is simple to apply and in many cases works better than explicit regularization.

- The performance of the network depends strongly on the number of hidden layers and the number of neurons in each layer. Therefore, we use the fit_generator function in the code that builds the network.

- Parameters W and b are updated by using the gradient descent method.

- In each layer: hidden units = 400, dropout = 10%, epochs = 20, batch size = 20, encoder activation function = sigmoid, decoder activation function = linear, loss function = MSE.
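The items above can be illustrated with a minimal NumPy sketch of greedy layer-wise pre-training. It is not the authors' code: the source only names fit_generator, so the framework is replaced here by plain NumPy. The 10% rate is interpreted as masking noise on the layer input, and the learning rate, patience, 20% validation hold-out, small layer sizes, and synthetic data are all illustrative assumptions.

```python
import numpy as np


def standardize(x):
    """Z-score each column; constant columns are left at zero."""
    std = x.std(axis=0)
    return (x - x.mean(axis=0)) / np.where(std == 0, 1.0, std)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_dae(x, n_hidden, rng, corruption=0.10, lr=0.05,
              epochs=20, batch_size=20, patience=3):
    """Train one denoising auto-encoder layer; return encoder (W, b) and val losses."""
    n_val = max(1, len(x) // 5)              # assumed 20% hold-out for early stopping
    x_train, x_val = x[:-n_val], x[-n_val:]
    n_in = x.shape[1]
    W, b = rng.normal(0.0, 0.1, (n_in, n_hidden)), np.zeros(n_hidden)
    W_dec, b_dec = rng.normal(0.0, 0.1, (n_hidden, n_in)), np.zeros(n_in)
    best_loss, best_W, best_b, wait, history = np.inf, W.copy(), b.copy(), 0, []
    for _ in range(epochs):
        order = rng.permutation(len(x_train))
        for start in range(0, len(order), batch_size):
            xb = x_train[order[start:start + batch_size]]
            x_noisy = xb * (rng.random(xb.shape) >= corruption)  # masking noise
            h = sigmoid(x_noisy @ W + b)                  # sigmoid encoder
            err = h @ W_dec + b_dec - xb                  # linear decoder vs clean input
            g_out = 2.0 * err / err.size                  # gradient of MSE w.r.t. output
            g_h = (g_out @ W_dec.T) * h * (1.0 - h)       # back-propagate through sigmoid
            W_dec -= lr * (h.T @ g_out)                   # gradient descent on W and b
            b_dec -= lr * g_out.sum(axis=0)
            W -= lr * (x_noisy.T @ g_h)
            b -= lr * g_h.sum(axis=0)
        val_loss = float(np.mean((sigmoid(x_val @ W + b) @ W_dec + b_dec - x_val) ** 2))
        history.append(val_loss)
        if val_loss < best_loss:                          # early-stopping bookkeeping
            best_loss, best_W, best_b, wait = val_loss, W.copy(), b.copy(), 0
        else:
            wait += 1
            if wait >= patience:
                break
    return best_W, best_b, history


def pretrain_sdae(x, layer_sizes, seed=0):
    """Greedy layer-wise pre-training: each layer is trained on the previous encoding."""
    rng = np.random.default_rng(seed)
    params, h = [], x
    for n_hidden in layer_sizes:
        W, b, _ = train_dae(h, n_hidden, rng)
        params.append((W, b))
        h = sigmoid(h @ W + b)      # clean encoding becomes the next layer's input
    return params


# Synthetic stand-in for a four-column training matrix (not the paper's data).
demo = standardize(np.random.default_rng(1).normal(50.0, 10.0, (200, 4)))
sdae_init = pretrain_sdae(demo, layer_sizes=[8, 4])   # the section uses 400 units per layer
```

In this sketch the returned (W, b) pairs would serve as the initial weights and biases of the stacked network before supervised fine-tuning.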

3.4. Experiment Process

- Step 1: Collect the electric load data to construct a training dataset matrix, where the data dimensions include historical electric load data, apparent (feels-like) temperature data, and relative humidity data.

- Step 2: Calculate the daily, weekly, and monthly averages of the historical electric load data to form three single-sequence datasets.

- Step 3: Input the three datasets into their respective SDAE network models, and set each correlation coefficient to obtain the daily average data weight matrix Wd and the corresponding bias term Bd, the weekly average data weight matrix Ww and the corresponding bias term Bw, and the monthly average data weight matrix Wm and the corresponding bias term Bm.

- Step 4: For each model, run forward propagation and backpropagation to optimize the parameters of the electric load model. Then select the averaged load series with the smallest forecasting error as the fourth factor of the whole dataset, forming a new training dataset that is input into the SDAE network model.

- Step 5: Minimize the cost function through repeated tuning to obtain a well-trained SDAE network model.

- Step 6: Import the previously prepared test data, input the original data into the well-trained SDAE model, and obtain the test result by iteration.

- Step 7: Compare the predicted values with the actual values and calculate the average error.
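Steps 2 and 4 can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the authors' implementation: the helper names are hypothetical, the averages are taken over non-overlapping blocks of samples, and the per-series forecasting errors that Step 3 would produce are simply passed in as a dictionary. The example values are the MSE figures reported for DA, WA and MA in this paper's tables, read here as the daily, weekly and monthly averages.

```python
from statistics import mean


def block_averages(load, block):
    """Average consecutive, non-overlapping blocks of `block` samples (Step 2)."""
    return [mean(load[i:i + block]) for i in range(0, len(load) - block + 1, block)]


def select_fourth_factor(errors):
    """Return the name of the averaged series with the smallest forecasting error (Step 4)."""
    return min(errors, key=errors.get)


def add_factor(rows, factor, block):
    """Append to each sample the average of the block it belongs to.

    Samples in a trailing, incomplete block have no average and are dropped.
    """
    return [row + [factor[i // block]]
            for i, row in enumerate(rows) if i // block < len(factor)]


# MSE per averaged series, taken from the DA / WA / MA columns reported in the paper.
reported_mse = {"DA": 316.93, "WA": 19066.71, "MA": 25043.12}
fourth_factor = select_fourth_factor(reported_mse)
```

With those reported errors the selection returns the daily-average series, which would then be appended as a fourth column next to load, temperature and humidity.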

4. Case Study

4.1. Data Descriptions

4.2. Forecasting Performance Metrics
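The result tables in this paper report MAPE, MSE, MAE and RMSE. The following is a minimal sketch of the usual textbook definitions of these four metrics, given as an assumption because the paper's own formulas are not reproduced in this section; the function names are illustrative.

```python
import math


def _check(actual, predicted):
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and of equal length")


def mse(actual, predicted):
    """Mean squared error."""
    _check(actual, predicted)
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def mae(actual, predicted):
    """Mean absolute error."""
    _check(actual, predicted)
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error, the square root of the MSE."""
    return math.sqrt(mse(actual, predicted))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent; actual values must be non-zero."""
    _check(actual, predicted)
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)
```

For example, with actual loads of 100, 200 and 400 MW and forecasts of 110, 190 and 400 MW, the absolute errors are 10, 10 and 0 MW, so the MAE is 20/3 MW and the MAPE is (10% + 5% + 0%)/3 = 5%.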

4.3. Load Forecasting

4.3.1. The Selection of the Fourth Factor

4.3.2. Forecast Results and Comparative Analysis

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Lai, C.S.; Tao, Y.; Xu, F.; Ng, W.W.; Jia, Y.; Yuan, H.; Huang, C.; Lai, L.L.; Xu, Z.; Locatelli, G. A robust correlation analysis framework for imbalanced and dichotomous data with uncertainty. Inform. Sci. 2019, 470, 58–77.

- Hernandez, L.; Baladron, C.; Aguiar, J.M.; Carro, B.; Sanchez-Esguevillas, A.J.; Lloret, J.; Massana, J. A Survey on Electric Power Demand Forecasting: Future Trends in Smart Grids, Microgrids and Smart Buildings. IEEE Commun. Surv. Tutor. 2014, 16, 1460–1495.

- Xiu-lan, S.; De-feng, H.; Li, Y. Bilinear models-based short-term load rolling forecasting of smart grid. In Proceedings of the 31st Chinese Control Conference, Hefei, China, 25–27 July 2012; pp. 6826–6829.

- Amral, N.; Ozveren, C.S.; King, D. Short term load forecasting using Multiple Linear Regression. In Proceedings of the 2007 42nd International Universities Power Engineering Conference, Brighton, UK, 4–6 September 2007; pp. 1192–1198.

- Lee, C.M.; Ko, C.N. Short-term load forecasting using lifting scheme and ARIMA models. Expert Syst. Appl. 2011, 5, 5902–5911.

- Taylor, J.W. Short-Term Load Forecasting With Exponentially Weighted Methods. IEEE Trans. Power Syst. 2012, 2, 458–464.

- Huang, N.; Lu, G.; Xu, D. A Permutation Importance–Based Feature Selection Method for Short-Term Electricity Load Forecasting Using Random Forest. Energies 2016, 9, 767.

- Moon, J.; Kim, Y.; Son, M.; Hwang, E. Hybrid Short-Term Load Forecasting Scheme Using Random Forest and Multilayer Perceptron. Energies 2018, 11, 3283.

- Ceperic, E.; Ceperic, V.; Baric, A. A Strategy for Short-Term Load Forecasting by Support Vector Regression Machines. IEEE Trans. Power Syst. 2013, 11, 4356–4364.

- Merkel, G.; Povinelli, R.; Brown, R. Short-Term Load Forecasting of Natural Gas with Deep Neural Network Regression. Energies 2018, 11, 2018.

- Hossen, T.; Plathottam, S.J.; Angamuthu, R.K.; Ranganathan, P.; Salehfar, H. Short-term load forecasting using deep neural networks (DNN). In Proceedings of the 2017 North American Power Symposium (NAPS), Morgantown, WV, USA, 17–19 September 2017; pp. 1–6.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Volume 1.

- Chen, X.; Liu, X.; Wang, Y.; Gales, M.J.F.; Woodland, P.C. Efficient Training and Evaluation of Recurrent Neural Network Language Models for Automatic Speech Recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 11, 2146–2157.

- Li, X.; Wu, X. Constructing long short-term memory based deep recurrent neural networks for large vocabulary speech recognition. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brisbane, QLD, Australia, 2015; pp. 4520–4524.

- Mayer, H.; Gomez, F.; Wierstra, D.; Nagy, I.; Knoll, A.; Schmidhuber, J. A System for Robotic Heart Surgery that Learns to Tie Knots Using Recurrent Neural Networks. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 543–548.

- Sundermeyer, M.; Ney, H.; Schlüter, R. From Feedforward to Recurrent LSTM Neural Networks for Language Modeling. IEEE/ACM Trans. Audio Speech Lang. Process. 2015, 3, 517–529.

- Senjyu, T.; Mandal, P.; Uezato, K.; Funabashi, T. Next day load curve forecasting using recurrent neural network structure. IEE Proc. Gen. Trans. Distrib. 2004, 5, 388–394.

- Shi, H.; Xu, M.; Li, R. Deep Learning for Household Load Forecasting—A Novel Pooling Deep RNN. IEEE Trans. Smart Grid 2018, 9, 5271–5280.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Kong, W.; Dong, Z.Y.; Jia, Y.; Hill, D.J.; Xu, Y.; Zhang, Y. Short-Term Residential Load Forecasting Based on LSTM Recurrent Neural Network. IEEE Trans. Smart Grid 2019, 1, 841–851.

- Tian, C.; Ma, J.; Zhang, C.; Zhan, P. A Deep Neural Network Model for Short-Term Load Forecast Based on Long Short-Term Memory Network and Convolutional Neural Network. Energies 2018, 12, 3493.

- Kim, M.; Choi, W.; Jeon, Y.; Liu, L. A Hybrid Neural Network Model for Power Demand Forecasting. Energies 2019, 12, 931.

- Gan, D.; Wang, Y.; Zhang, N.; Zhu, W. Enhancing short-term probabilistic residential load forecasting with quantile long–short-term memory. J. Eng. 2017, 2017, 2622–2627.

- Bouktif, S.; Fiaz, A.; Ouni, A.; Serhani, M.A. Single and Multi–Sequence Deep Learning Models for Short and Medium Term Electric Load Forecasting. Energies 2019, 12, 149.

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation. arXiv 2014, arXiv:1406.1078.

- Wang, Y.; Liao, W.; Chang, Y. Gated Recurrent Unit Network–Based Short-Term Photovoltaic Forecasting. Energies 2018, 11, 2163.

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A. Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 7, 504–507.

- Ye, X.; Wang, L.; Xing, H.; Huang, L. Denoising hybrid noises in image with stacked autoencoder. In Proceedings of the IEEE International Conference on Information and Automation, Lijiang, China, 8–10 August 2015; pp. 2720–2724.

- Gu, F.; Khoshelham, K.; Valaee, S.; Shang, J.; Zhang, R. Locomotion Activity Recognition Using Stacked Denoising Autoencoders. IEEE Internet Things J. 2018, 6, 2085–2093.

- Du, B.; Xiong, W.; Wu, J.; Zhang, L.; Zhang, L.; Tao, D. Stacked Convolutional Denoising Auto-Encoders for Feature Representation. IEEE Trans. Cybernet. 2017, 4, 1017–1027.

- Vincent, P.; Larochelle, H.; Bengio, Y.; Manzagol, P.A. Extracting and Composing Robust Features with Denoising Autoencoders. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; Volume 10, pp. 1096–1103.

- Rostami, T. Adaptive color mapping for NAO robot using neural network. Adv. Comput. Sci. Int. J. 2015, 3.

- Kuo, J.Y.; Pan, C.W.; Lei, B. Using Stacked Denoising Autoencoder for the Student Dropout Prediction. In Proceedings of the 2017 IEEE International Symposium on Multimedia (ISM), Taichung, Taiwan, 11–13 December 2017; pp. 483–488.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Liang, J.; Liu, R. Stacked Denoising Autoencoder and Dropout Together to Prevent Overfitting in Deep Neural Network. In Proceedings of the 2015 8th International Congress on Image and Signal Processing (CISP), Shenyang, China, 14–16 October 2015; Volume 10, pp. 697–701.

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; pp. 249–256.

- Wang, L.; Zhang, Z.; Chen, J. Short-Term Electricity Price Forecasting With Stacked Denoising Autoencoders. IEEE Trans. Power Syst. 2017, 7, 2673–2681.

- Historical Weather Data of Fuyang. Available online: https://tianqi.911cha.com (accessed on 1 March 2019).

| Metric | DA | WA | MA |
|---|---|---|---|
| MSE/MW | 316.93 | 19,066.71 | 25,043.12 |
| MAE/MW | 14.56 | 119.54 | 158.25 |
| RMSE/MW | 10.41 | 79.80 | 158.25 |

| Day of week | Average MAPE | Max MAPE | Average MSE/MW | Max MSE/MW |
|---|---|---|---|---|
| Monday | 2.87% | 13.05% | 1470.09 | 20,208.65 |
| Tuesday | 2.84% | 14.89% | 1474.40 | 22,878.74 |
| Wednesday | 2.94% | 13.51% | 1509.40 | 24,338.06 |
| Thursday | 2.90% | 14.00% | 1547.41 | 22,898.14 |
| Friday | 2.88% | 13.57% | 1506.83 | 20,218.29 |
| Saturday | 2.85% | 15.89% | 1580.70 | 28,186.05 |
| Sunday | 2.82% | 13.66% | 1592.79 | 26,784.63 |

| Model | MAPE | MSE/MW | MAE/MW | RMSE/MW |
|---|---|---|---|---|
| SDAE | 2.88% | 1524.44 | 27.99 | 27.22 |
| BP | 3.66% | 4030.83 | 35.19 | 56.51 |
| AE | 6.16% | 12,082.66 | 56.61 | 94.24 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, P.; Zheng, P.; Chen, Z. Deep Learning with Stacked Denoising Auto-Encoder for Short-Term Electric Load Forecasting. Energies 2019, 12, 2445. https://doi.org/10.3390/en12122445