1. Introduction

The world energy demand is increasing day by day. As pointed out in [1], it is estimated that the world energy consumption will increase from 549 quadrillion British thermal units (Btu) in 2012 to 629 quadrillion Btu in 2020, reaching 815 quadrillion Btu by 2040, an overall increase of 48% with respect to 2012. More than half of the increase will correspond to Asian countries that do not belong to the Organization for Economic Co-operation and Development (OECD), including China and India.

Several factors are contributing to such growing energy demand, e.g., the rapid growth of the human population and the increasing energy required by buildings and technology applications. Therefore, efficient energy management systems and predictive models for forecasting energy consumption are becoming important tools in decision-making for effective energy saving and development in particular areas, in order to decrease both the associated costs and the environmental impact of this consumption. Governments are also taking action on these matters. For example, the European Commission is constantly developing measures to increase the EU’s energy-efficiency targets and to make them legally binding. Under the current energy plan, EU countries will have to adopt a set of minimum energy efficiency requirements in order to achieve an increment of at least

in the energy efficiency [2]. Moreover, all EU countries have agreed to reach an increment of at least

by 2020, to be reviewed by 2020 with the potential to raise the target to

by 2030.

Electric energy consumption forecasting algorithms can provide several benefits in this sense. For example, in [3,4] forecasting is used to assess what fraction of the generated power should be stored locally for later use and what fraction can instead be fed to the loads or injected into the network. Generally, forecasting can be divided into three categories depending on the prediction horizon, i.e., the time scale of the predictions: short-term load forecasting, characterised by prediction horizons ranging from one hour up to a week; medium-term load forecasting, with horizons from one month up to a year; and long-term load forecasting, with horizons of more than one year [5].

Short-term load forecasting is an important problem. With reliable and precise predictions of the short-term load, schedules can be generated to determine the allocation of generation resources. These schedules take into account operational limitations and environmental and equipment usage constraints. Knowing the short-term energy demand also helps to ensure power system security, since accurate load predictions can be used to determine the optimal operational state of power systems. Moreover, the predictions help in preparing the power systems for the predicted future load. Precise predictions also have an economic impact and may improve the reliability of power systems. Reliability is affected by abrupt variations of the energy demand. A shortage of power supply can be experienced if the demand is underestimated, while resources may be wasted producing energy if the demand is overestimated. These observations explain why short-term load forecasting has gained popularity. The work presented in this paper falls within short-term load forecasting.

Basically, there are two main approaches to forecasting energy consumption: conventional methods, such as [6,7], and, more recently, methods based on machine learning. Conventional methods can achieve satisfactory results when solving linear problems. They include statistical analysis, time series models such as the autoregressive integrated moving average (ARIMA), smoothing techniques such as exponential smoothing, and regression-based approaches. Machine learning strategies, in contrast to traditional methods, are also suitable for non-linear cases. Among machine learning approaches, Artificial Neural Networks (ANN) and Support Vector Machines (SVM) have been successfully (and increasingly) exploited to forecast power consumption data, e.g., [8,9,10]. Although machine learning techniques provide effective solutions for time series forecasting, they can get stuck in local optima. For instance, ANN and SVM may get trapped in a local optimum if their configuration parameters are not properly set.

In order to overcome such limitations, in this paper we propose an approach based on ensemble learning [11,12,13]; more specifically, we propose a two-layer ensemble scheme. Ensemble learning is a machine learning paradigm where multiple learners are trained to solve the same problem. Ordinary machine learning approaches try to learn one hypothesis from the training data. Ensemble methods, in contrast, construct a set of hypotheses and combine them. This approach usually yields better results than the use of a single strategy for three reasons:

- it provides better generalization, i.e., adaptation to unseen cases;
- it has a better capability of escaping from local optima;
- it has superior search capabilities.

The proposed scheme is based on two layers. On the bottom layer, three learning algorithms are used, and their predictions are combined by another learning algorithm at the top layer.

In order to assess the performance of our proposal, we use a dataset of electricity consumption in Spain recorded over a period of more than nine years. We use a fixed prediction horizon of four hours, while we vary the historical window size, i.e., the amount of historical data used to make the predictions. Experimental results show that an ensemble scheme can achieve better results than single methods, obtaining more precise predictions than other state-of-the-art methods. Therefore, we can summarize the contributions of this work as follows:

The rest of the paper is organised as follows. In Section 2, we provide a brief overview of the state of the art on time series prediction, with a special focus on the prediction of energy consumption. Section 3 describes the data used in this paper and the proposed strategy; in particular, Section 3.3 describes the ensemble learning scheme used. Results are discussed in Section 4. Finally, in Section 5, we draw the main conclusions and discuss possible future work.

2. Time Series Forecasting

This section provides a basic background on time series. We refer the reader to [14] for a more extensive introduction to time series analysis. Moreover, in Section 2.1 we present an overview of relevant works on time series forecasting.

A time series is a sequence of time-ordered observations measured at equal intervals of time. Consider a time series consisting of $T$ real-valued samples $x_1, \dots, x_T$, where $x_i$ ($1 \le i \le T$) represents the recorded value at time $i$. The problem of time series forecasting is to predict the values $x_{w+1}, \dots, x_{w+h}$, given the previous $w$ samples $x_1, \dots, x_w$. The objective is to minimize the error between each predicted value $\hat{x}_{w+j}$ and the actual value $x_{w+j}$ ($1 \le j \le h$). Here, $w$ is the historical window, i.e., how many values we consider in order to produce the predictions. $h$ is the prediction horizon, which represents how far into the future one aims to predict.

Traditionally, time series are decomposed into three components [14]:

Trend—This term refers to the general tendency exhibited by the time series. A time series can present different types of trends, such as linear, logarithmic, exponential, power, polynomial, etc.

Seasonality—This is a pattern of changes that represents periodic fluctuations of constant length. These variations originate from effects that are stable in time, magnitude and direction.

Residual—This component represents the remaining, mostly unexplainable, parts of the time series. It also describes random and irregular influences that, in case of being high enough, can mask the trend and seasonality.
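The following is a minimal sketch of this decomposition. It assumes an additive model (observed value = trend + seasonality + residual), a known odd period, and NumPy. The trend is estimated with a centred moving average over one period. The seasonal component is the mean detrended value at each phase of the cycle. The function name `additive_decompose` is hypothetical. This is a textbook-style illustration, not the procedure described in [14].

```python
import numpy as np

def additive_decompose(x, period):
    """Split x into trend + seasonal + residual, assuming an additive model and an odd period."""
    x = np.asarray(x, dtype=float)
    if period % 2 == 0:
        raise ValueError("this sketch assumes an odd period so the moving average is centred")
    half = period // 2
    # Trend: centred moving average over one full period (NaN where the window does not fit).
    trend = np.full_like(x, np.nan)
    trend[half:len(x) - half] = np.convolve(x, np.ones(period) / period, mode="valid")
    # Seasonality: mean detrended value at each phase of the cycle, shifted to sum to zero.
    detrended = x - trend
    phase_means = np.array([np.nanmean(detrended[p::period]) for p in range(period)])
    phase_means -= phase_means.mean()
    seasonal = np.resize(phase_means, len(x))  # repeat the cycle over the whole series
    # Residual: whatever trend and seasonality do not explain.
    residual = x - trend - seasonal
    return trend, seasonal, residual
```

For the 10 min data used later in this paper, a daily cycle would span 144 samples, which is even. Even periods are usually handled with a two-step (2 × m) moving average, which this sketch omits.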

Further components can be included to represent, e.g., long-run cycles or holiday effects. However, real-world time series remain challenging to forecast due to the significant irregular components they contain.

Another important aspect is to determine whether a time series is stationary, i.e., to verify whether its mean and variance are constant over time. If a time series is not stationary, transformations (e.g., differencing) must be applied before some forecasting methods can be used.
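One common transformation is differencing, the "I" in ARIMA. The sketch below assumes NumPy and uses hypothetical helper names. It applies first-order differencing and shows how to invert it. It also compares the means of the two halves of a series as a crude indicator of a changing mean. This is an illustration only; formal tests such as those mentioned in Section 3.1 are preferable in practice.

```python
import numpy as np

def difference(x, d=1):
    """Apply d rounds of first-order differencing: y_t = x_t - x_{t-1}."""
    y = np.asarray(x, dtype=float)
    for _ in range(d):
        y = np.diff(y)
    return y

def undifference(y, initial):
    """Invert one round of differencing, given the first value of the original series."""
    return np.concatenate(([initial], initial + np.cumsum(y)))

def half_means(x):
    """Crude check: means of the first and second half (close for a mean-stationary series)."""
    x = np.asarray(x, dtype=float)
    mid = len(x) // 2
    return x[:mid].mean(), x[mid:].mean()
```

For a series with a linear trend, the half means differ widely. After one round of differencing, they become almost equal.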

According to the number of variables involved, time series analysis can be divided into univariate and multivariate analysis [15]. In the univariate case, a time series consists of a single variable observed sequentially. In contrast, in multivariate time series the values of more than one variable are recorded at each time stamp, and the interactions among such variables should be taken into account.

Different techniques can be applied to the problem of time series forecasting. Such approaches can be roughly divided into two categories, linear and non-linear methods [16]. Linear methods try to model the time series using a linear function. The random component of a time series may prevent one from making precise predictions. However, the strong correlation among the data allows one to assume that the next observation can be determined by a linear combination of the preceding observations, up to additive noise.
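The linear assumption above corresponds to an autoregressive model of order $p$, $x_t = c + \sum_{k=1}^{p} \phi_k x_{t-k} + \varepsilon_t$. The following is a minimal sketch. It assumes NumPy and uses ordinary least squares for the fit; this is not the estimation procedure of any particular package, and the function names are hypothetical. Multi-step forecasts are produced recursively by feeding each prediction back as an input.

```python
import numpy as np

def fit_ar(x, p):
    """Least-squares estimate of c and phi_1..phi_p in x_t = c + sum_k phi_k x_{t-k} + noise."""
    x = np.asarray(x, dtype=float)
    # The row for time t holds [1, x_{t-1}, ..., x_{t-p}].
    A = np.array([np.concatenate(([1.0], x[t - p:t][::-1])) for t in range(p, len(x))])
    coef, *_ = np.linalg.lstsq(A, x[p:], rcond=None)
    return coef[0], coef[1:]

def forecast_ar(x, c, phi, h):
    """Recursive h-step forecast: each prediction is appended to the history and reused."""
    hist = list(np.asarray(x, dtype=float))
    out = []
    for _ in range(h):
        nxt = c + sum(phi[k] * hist[-1 - k] for k in range(len(phi)))
        out.append(nxt)
        hist.append(nxt)
    return np.array(out)
```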

Non-linear methods are widely used in the machine learning domain. These methods try to extract a possibly non-linear model that describes the observed data, and then use that model to forecast future values of the time series. Machine learning techniques have gained popularity in the forecasting field for a simple reason. Conventional methods can achieve satisfactory results in linear problems, while machine learning methods are also suitable for non-linear modelling [15].

2.1. Related Work

The number of studies addressing electricity consumption forecasting is increasing for several reasons. These include gaining knowledge about the demand drivers [17] and understanding the different energy consumption patterns in order to adopt new policies according to demand response scenarios [18]. Another reason is measuring the socio-economic and environmental impact of energy production for a more sustainable economy [19].

Among conventional approaches, the Auto-Regressive Moving Average (ARMA) model is a very common technique that arises as a combination of the Auto-Regressive (AR) and the Moving Average (MA) models. In [6], Nowicka-Zagrajek and Weron applied the ARMA model to the California power market. In another work, Chujai et al. [20] compared the Auto-Regressive Integrated Moving Average (ARIMA) with ARMA on household electric power consumption. The results showed that the ARIMA model performed better than ARMA at forecasting longer periods of time, while ARMA was better at shorter periods. ARIMA methods were applied in [21] by Mohanad et al. to predict short-term electricity demand in the Queensland (Australia) market. ARMA is usually applied to stationary stochastic processes [6], while ARIMA is applied to non-stationary cases [22].

Regression-based methods are also popular in energy consumption studies. Schrock and Claridge [23] proposed a simple regression model on the ambient temperature to investigate a supermarket’s electricity use. In later studies, however, multiple regression analysis is preferred, due to its capability of handling more complex models. Lam et al. [24] used such an approach to analyse office buildings in different climates in China. In another work, Braun et al. [25] performed multiple regression analysis on gas and electricity usage in order to study how climate change affects energy consumption in buildings. In a more recent work, Mottahedia et al. [26] investigated the suitability of multiple linear regression to model the effect of building shape on total energy consumption in two different climate regions.

As stated in the previous section, a significant part of recent studies in the literature focuses on time series forecasting using machine learning techniques. Among these techniques, Artificial Neural Networks (ANN) have been extensively applied. In an early work, Nizami and Al-Garni [27] developed a two-layer feed-forward ANN to analyse the relation between electric energy consumption and weather-related variables. In another work, Kelo and Dudul [28] proposed a wavelet Elman neural network to forecast the short-term electrical load under the influence of ambient air temperature. In [29], Chitsaz et al. combined wavelets and ANN for short-term electricity load forecasting in micro-grids. In a more recent work, Zheng et al. [30] developed a hybrid algorithm for short-term load forecasting. It combines similar days selection, empirical mode decomposition, and long short-term memory neural networks to construct a prediction model. Other recent examples of using ANN for energy consumption prediction are [31,32,33].

Despite the popularity of ANN, other techniques have lately been gaining attention. For instance, Talavera-Llames et al. [34] adapted a Nearest Neighbours-based strategy to address the energy consumption forecasting problem in a Big Data environment. Torres et al. [35] developed a novel strategy based on Deep Learning to predict time series and tested it on electricity consumption data recorded in Spain from 2007 to 2016. Zheng et al. [36] also present a Deep Learning approach to forecast short-term electric load time series. Galicia et al. [37] compared Random Forest with Decision Trees, Linear Regression and gradient-boosted trees on Spanish electricity load data with a ten-minute frequency. Furthermore, Castelli et al. applied Evolutionary Algorithms to the short-term forecasting of energy demand in [38,39]. Burger and Moura [40] tackled the forecasting of electricity demand by applying an ensemble learning approach that uses Ordinary Least Squares and k-Nearest Neighbors. In [41], Papadopoulos and Karakatsanis explore the ensemble learning approach and compare four different methods:

- seasonal autoregressive integrated moving average (SARIMA);
- SARIMA with exogenous variables (SARIMAX);
- random forests (RF);
- gradient boosting regression trees (GBRT).

Finally, Li et al. [42] proposed a novel ensemble method for load forecasting based on wavelet transform, extreme learning machine (ELM) and partial least squares regression.

For a more exhaustive review of the state of the art in time series forecasting, we refer the reader to, for example, Martínez-Álvarez et al. [16], who provide an extensive review of machine learning methods. Daut et al. [43] and Deb et al. [15] review conventional and artificial intelligence methods.

3. Materials and Methods

In this section we will provide details about the data and the methods used in this paper.

3.1. Data

The dataset used in this work records the overall electricity consumption in Spain (expressed in MW) over a period of 9 years and 6 months, with a 10 min interval between consecutive measurements. Thus, what is measured is the electricity consumption taken as a whole, not relative to a specific sector. In total, the dataset is composed of 497,832 measurements, ranging from 1 January 2007 at midnight to 21 June 2016 at 11:40 p.m. The dataset is available on request.

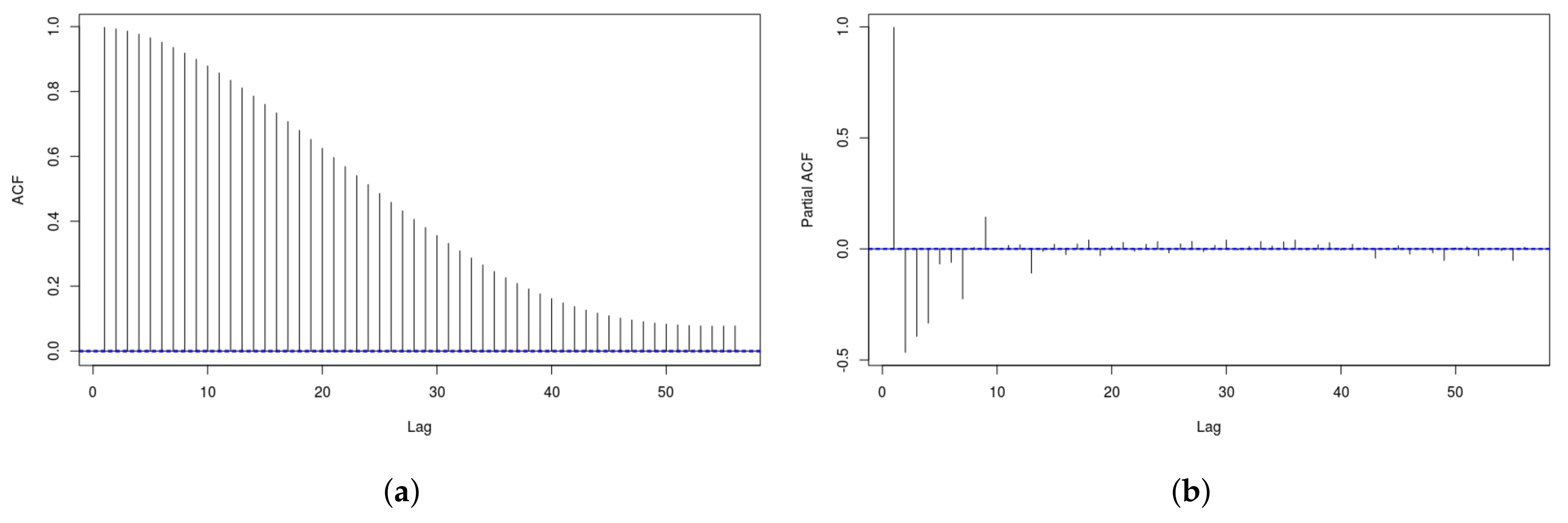

Figure 1 shows both the AutoCorrelation Function (ACF) and the Partial AutoCorrelation Function (PACF) for the dataset considered in this paper. Both graphs have a few significant lags, but these die out quickly, so we can conclude that the series is stationary. To support this conclusion, we ran several tests, namely the Ljung–Box, the Augmented Dickey–Fuller (ADF) and the Kwiatkowski–Phillips–Schmidt–Shin (KPSS) tests. All the tests returned a very low p-value, confirming the stationarity of the series.
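The sample ACF shown in Figure 1 can be computed directly. The sketch below assumes NumPy and uses hypothetical function names. It implements the standard estimator $\hat\rho_k = \sum_{t=1}^{T-k}(x_t-\bar x)(x_{t+k}-\bar x) \,/\, \sum_{t=1}^{T}(x_t-\bar x)^2$. Lags are flagged as significant using the usual approximate 95% band $\pm 1.96/\sqrt{T}$. It does not compute the PACF or reproduce the formal tests mentioned above.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample autocorrelation rho_k for k = 0..max_lag."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    denom = np.dot(d, d)
    return np.array([np.dot(d[:len(d) - k], d[k:]) / denom for k in range(max_lag + 1)])

def significant_lags(x, max_lag):
    """Lags k >= 1 whose |rho_k| exceeds the approximate 95% band 1.96 / sqrt(T)."""
    rho = sample_acf(x, max_lag)
    band = 1.96 / np.sqrt(len(x))
    return [k for k in range(1, max_lag + 1) if abs(rho[k]) > band]
```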

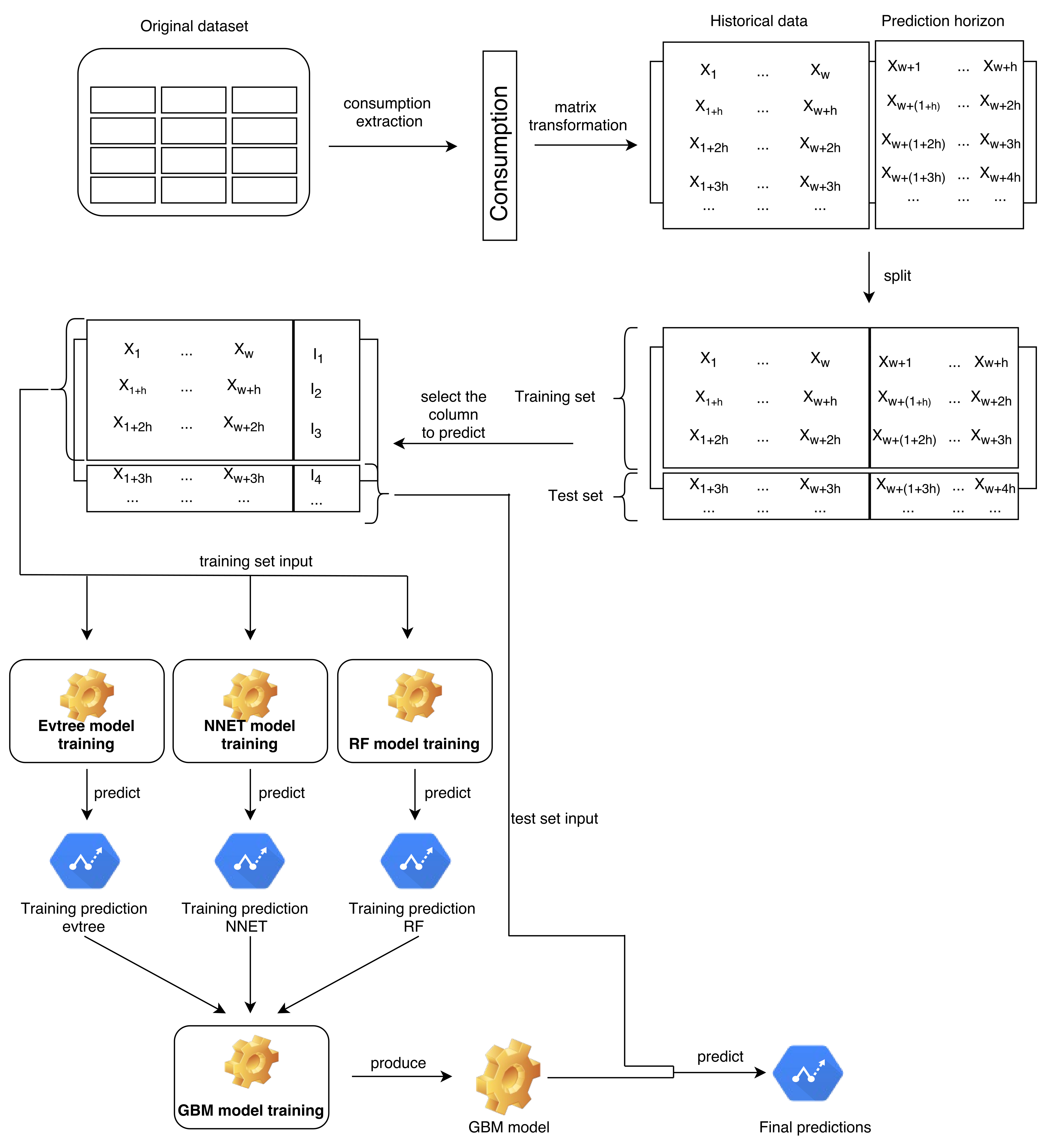

The original dataset has been pre-processed as in [35]. First, the attribute corresponding to consumption has been extracted to obtain a consumption vector. Then, the consumption vector has been restructured into a matrix depending on a historical window, $w$, and a prediction horizon, $h$. The historical window, or data history ($w$), represents the number of previous entries taken into consideration to train a model. That model is then used to predict the subsequent $h$ values. This process is detailed in Figure 2.
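The following is a minimal sketch of this restructuring. It assumes NumPy and a window that advances by `step` samples between consecutive rows. The step actually used in [35] is not stated here and may differ. In each row, the first $w$ columns are the inputs and the last $h$ columns are the targets. The function name `build_windows` is hypothetical.

```python
import numpy as np

def build_windows(consumption, w, h, step=1):
    """Reshape a 1-D consumption vector into rows of [w past values | h future values].

    step (the shift between consecutive rows) is an assumption of this sketch.
    """
    x = np.asarray(consumption, dtype=float)
    starts = range(0, len(x) - w - h + 1, step)
    matrix = np.array([x[s:s + w + h] for s in starts])
    X, Y = matrix[:, :w], matrix[:, w:]  # inputs, and the last h columns as targets
    return X, Y
```

With the settings of this study ($h = 24$ and $w$ between 24 and 168), each row would have between 48 and 192 columns.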

In this study, the prediction horizon ($h$) has been set to 24, corresponding to a period of 4 h. Moreover, different values of the data history have been used. In particular, $w$ has been set to the values 24, 48, 72, 96, 120, 144 and 168, corresponding to 4, 8, 12, 16, 20, 24 and 28 h, respectively. The resulting datasets have been divided into

for the training set and

for the test set.

Table 1 provides the details of each dataset. Notice that for all the obtained datasets, the last 24 columns represent the values to be predicted, and thus are not used as input attributes.

3.2. Ensemble Learning

In the last few years, ensemble models have gained relevance due to the good performance obtained in several tasks, such as classification or regression problems [44]. These methods combine different learning models in order to improve on the results obtained by each individual model.

The earliest works on ensemble learning were carried out in the 1990s, e.g., [45,46,47], where it was proven that multiple weak learning algorithms could be converted into a strong learning algorithm. In a nutshell, ensemble learning [48,49] is a procedure where multiple learner modules are applied to a dataset to extract multiple predictions, which are then combined into one composite prediction.

Usually, two phases are employed. In the first phase, a set of base learners is obtained from the training data. In the second phase, these learners are combined to produce a unified prediction model. Thus, multiple forecasts based on the different base learners are constructed and combined into an enhanced composite model that is superior to the individual base models. This integration of good individual models into one improved composite model generally leads to higher accuracy.

According to [48], there are three main reasons why ensemble learning is successful in machine learning. The first reason is statistical. Models can be seen as searching a hypothesis space H to identify the best hypothesis. However, datasets are usually limited, so we can find many different hypotheses in H that fit the data reasonably well. We cannot establish a priori which one will generalize better, i.e., will perform best on unseen data, which makes it difficult to choose among the hypotheses. Ensemble methods can help to avoid this issue by using several models to obtain a good approximation of the unknown true hypothesis.

The second reason is computational. Many models work by performing some form of local search to minimize error functions. These searches can get stuck in local optima. An ensemble constructed by starting the local search from many different points may provide a better approximation to the true unknown function.

The third argument is representational. In many situations, the unknown function we are looking for may not be included in H. However, a combination of several hypotheses drawn from H can enlarge the space of representable functions, which could then also include the unknown true function.

The most used and well-known of the basic ensemble methods are bagging, boosting and stacking.

Bagging builds a number of models, each on a bootstrap sample of the training data. The results obtained by these models are weighted equally, and a voting mechanism is used to settle on the majority result. In the case of regression, the average of the predictions is usually the final output.

Boosting is similar to bagging, but with one conceptual modification. The models are trained sequentially, each focusing on the examples its predecessors handled poorly. Instead of assigning equal weights to the models, boosting assigns them different weights and derives its final result by weighted voting. In the case of regression, a weighted average is usually the final output.

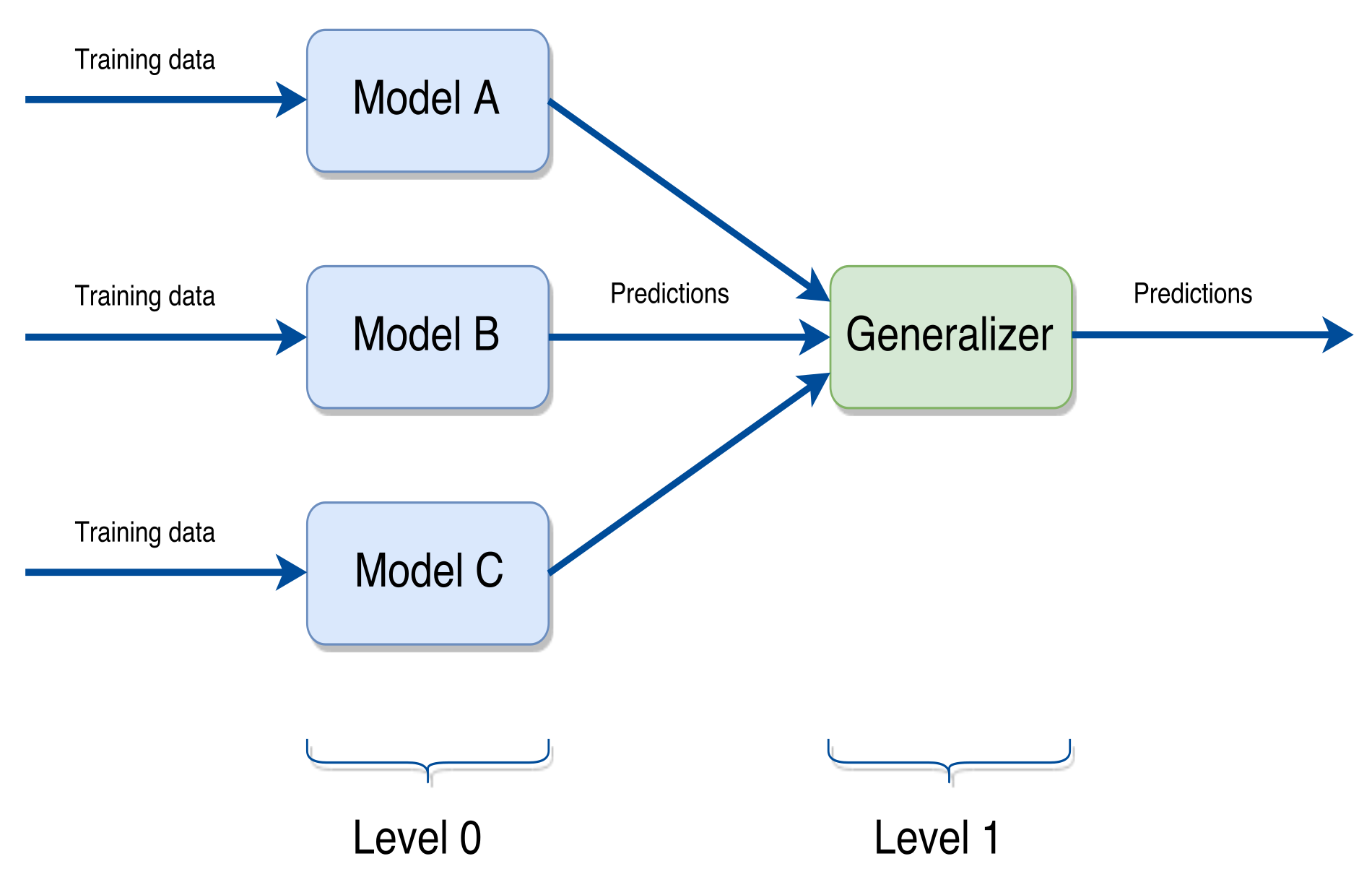

Stacking builds its models using different learning algorithms. A combiner algorithm is then trained to make the final predictions from the predictions generated by the base algorithms. This combiner can be any learning algorithm, including an ensemble technique.
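The following is a minimal sketch of the bagging idea for regression. Each model is trained on a bootstrap sample, and the predictions are averaged with equal weights. It assumes NumPy. The base learner is a hypothetical least-squares line fit chosen for brevity; it is not one of the learners used in this paper.

```python
import numpy as np

def fit_line(x, y):
    """Hypothetical base learner: least-squares line y = a*x + b."""
    a, b = np.polyfit(x, y, 1)
    return lambda q: a * np.asarray(q) + b

def bagging(x, y, n_models=25, seed=0):
    """Train n_models base learners on bootstrap samples; predict with their plain average."""
    rng = np.random.default_rng(seed)
    n = len(x)
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, n, size=n)  # draw n points with replacement
        models.append(fit_line(x[idx], y[idx]))
    return lambda q: np.mean([m(q) for m in models], axis=0)
```

Boosting would instead fit the models sequentially and combine them with unequal weights. Stacking would replace the plain average with a trained combiner.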

In this paper we have used a stacking approach, since we believe it to be the most suitable for the regression problem considered in this work.

Figure 3 shows a general scheme of such an approach. In the following section we specify which learning algorithms have been used in the scheme we propose. More formally, a stacking ensemble scheme can be defined as follows. Consider a set of $N$ different learning algorithms $L_1, \dots, L_N$ and the pair $\langle \mathbf{x}, \mathbf{y} \rangle$. Here $\mathbf{x} = (x_1, \dots, x_w)$ represents the $w$ recorded values and $\mathbf{y} = (y_1, \dots, y_h)$ the $h$ values to predict. Let $M_i$ be the model induced by the learning algorithm $L_i$ on $\langle \mathbf{x}, \mathbf{y} \rangle$ to predict $\mathbf{y}$. Let $G$ be the generalizer function responsible for combining the models to predict these values. $G$ can be a generic function, such as the average, or a model induced by a learning algorithm. Then, the estimated value $\hat{\mathbf{y}}$ is given by the expression:

$$\hat{\mathbf{y}} = G\left(M_1(\mathbf{x}), M_2(\mathbf{x}), \dots, M_N(\mathbf{x})\right)$$
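The stacking scheme defined above can be sketched as a toy under stated assumptions. It uses NumPy only. Two hypothetical base learners (least-squares linear regression and k-nearest-neighbour regression) stand in for the EVTree, ANN and RF models of this paper. A least-squares linear combiner stands in for the generalizer; the paper uses GBM. The combiner is trained on base-model predictions for a held-out part of the training data. Otherwise, it could learn to trust whichever base model overfits the training set most.

```python
import numpy as np

def linear_learner(X, y):
    """Hypothetical base learner 1: ordinary least squares with intercept."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return lambda Q: np.column_stack([np.ones(len(Q)), Q]) @ coef

def knn_learner(X, y, k=5):
    """Hypothetical base learner 2: mean target of the k nearest training points (Euclidean)."""
    def predict(Q):
        dist = np.linalg.norm(Q[:, None, :] - X[None, :, :], axis=2)
        nearest = np.argsort(dist, axis=1)[:, :k]
        return y[nearest].mean(axis=1)
    return predict

def fit_stacking(X, y, learners, holdout=0.3):
    """Train base models on one split, then the generalizer on their held-out predictions."""
    n_fit = int(len(X) * (1 - holdout))
    base = [learn(X[:n_fit], y[:n_fit]) for learn in learners]
    Z = np.column_stack([m(X[n_fit:]) for m in base])  # meta-features: one column per base model
    G = linear_learner(Z, y[n_fit:])                    # generalizer trained on meta-features
    # In this sketch the base models are not refitted on the full training set.
    return lambda Q: G(np.column_stack([m(Q) for m in base]))
```

In the paper's setting, `learners` would hold the three bottom-layer methods, and the generalizer would be a GBM.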

Ensemble methods have been successfully applied to pattern classification, regression and time series forecasting problems [50,51]. For example, Adhikari [52] proposed a linear combination method for time series forecasting that determines the combining weights through a novel neural network structure. Bagnall et al. [53] proposed a method using an ensemble of classifiers on different data transformations in order to improve the accuracy of time series classification. The authors demonstrated that the simple combination of all classifiers in one ensemble obtained better performance than any of its components. Jin and Dong [51] proposed a deep neural network-based ensemble method for the classification of biomedical data. It integrates filtering views, local views, distorted views, explicit and implicit training, subview prediction, and simple averaging. In particular, they used the Chinese Cardiovascular Disease cardiograms database. Chatterjee et al. [54] developed an ensemble support vector machine algorithm for reliability forecasting of a mining machine. This method is based on least squares support vector machines (LS-SVM) with hyperparameters optimized by a Genetic Algorithm (GA). Its output is obtained by combining the predictions of multiple SVMs on the time series data. Additionally, the advantages of ensemble methods for regression have been demonstrated in the literature from different viewpoints, such as strength-correlation or bias-variance [55].

Ensemble learning-based methods have also been applied in the context of energy time series forecasting. For example, Zang et al. [56] proposed an ensemble method based on the extreme learning machine (ELM), which was successfully applied to the Australian National Electricity Market data. Another example was presented by Tan et al. [57], who proposed a price forecasting method based on the wavelet transform combined with ARIMA and GARCH models. The method was applied to the Spanish and PJM electricity markets. Fan et al. [58] proposed an ensemble machine learning model based on Bayesian Clustering by Dynamics (BCD) and SVM. The model was trained and tested on historical load data from New York City in order to forecast the hourly electricity consumption. Tasnim et al. [59] proposed a cluster-based ensemble framework to predict wind power by using an ensemble of regression models on natural clusters within wind data. The method was tested on a large number of wind datasets from locations spread across Australia.

Ensembles of ANNs have recently been applied in the literature to energy consumption or price forecasting. For instance, the authors in [60] presented a building-level neural network-based ensemble model for day-ahead electricity load forecasting. It was shown to outperform the previously established best-performing model by up to 50% on load data from operational commercial and industrial sites. Jovanovic et al. [61] used three artificial neural networks to predict the heating energy consumption of a university campus. The authors tested the neural networks with different parameter combinations, which achieved better results when used in an ensemble scheme.

3.3. Methods

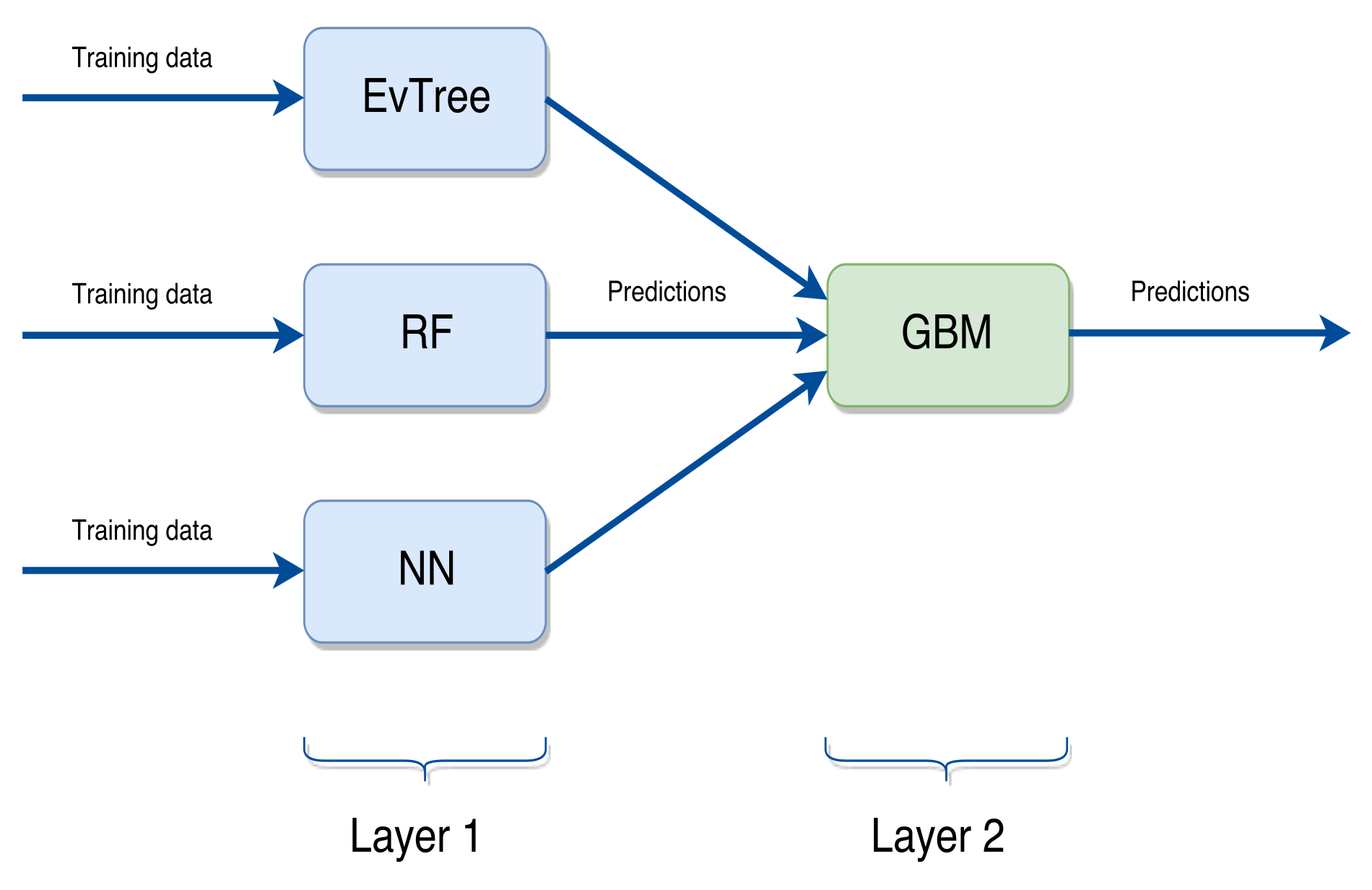

As already stated in Section 3.2, in our proposal we used a stacking ensemble scheme. In particular, we employed a scheme formed by three base learning methods and a top method. The base learning methods are regression trees based on Evolutionary Algorithms, Artificial Neural Networks and Random Forests. At the top level, we have used Generalized Boosted Regression Models in order to combine the predictions produced by the bottom level. The employed scheme is shown graphically in Figure 4.

In the following, we provide some basic notions regarding the methods used in the ensemble scheme.

Evolutionary Algorithms (EAs) for Regression Trees. EAs [62] are population-based strategies that use techniques inspired by evolutionary biology, such as inheritance, mutation, selection and crossover. Each individual $i$ of the population represents a candidate solution to a given problem. It is assigned a fitness value, which measures the quality of the solution represented by $i$.

Typically, EAs start from an initial population of randomly initialised individuals. Each individual is evaluated to determine its fitness value. A selection mechanism then selects a number of individuals. Usually the selection is based on fitness, so that fitter individuals have a higher probability of being selected. Selected individuals generate offspring, i.e., new solutions, through the application of crossover and mutation operators. This process is repeated over a number of generations or until a good enough solution is found. The idea is that better and better solutions will be found at each generation. Moreover, stochastic operators such as mutation help EAs to escape from local optima.

For the problem tackled in this paper, each individual encodes a regression tree. A regression tree is a decision tree similar to a classification tree [63]. Both classification and regression trees aim at modelling a response variable $Y$ by a vector of $P$ predictor variables $X = (X_1, \dots, X_P)$. The difference is that for classification trees $Y$ is qualitative, while for regression trees $Y$ is quantitative. In both cases, the $X_i$ can be continuous and/or categorical variables.

Regression trees are commonly used in regression-type problems, where we attempt to predict the values of a continuous variable from one or more continuous and/or categorical predictor variables. An advantage of regression trees is that their results can be easy to interpret. Greedy strategies have also been used to obtain regression trees, for example [64,65]. The main challenge for such strategies is that the search space is typically huge, rendering exhaustive searches computationally infeasible. Thanks to their search capabilities, EAs have been shown to overcome this limitation.

In this paper, we have used the R evtree package (from now on EVTree) [66], with the following parameters:

minbucket: 8 (minimum number of observations in each terminal node)

minsplit: 100 (minimum number of observations in each internal node)

maxdepth: 15 (maximum tree depth)

ntrees: 300 (number of trees in the population)

niterations: 1000 (maximum number of generations)

alpha: 0.25 (complexity part of the cost function)

operatorprob: with this parameter, we can specify, in list or vector form, the probabilities for the following variation operators:

- pmutatemajor: 0.2 (major split rule mutation: selects a random internal node $r$ and changes its split rule, defined by the corresponding split variable $v_r$ and split point $s_r$ [66])
- pmutateminor: 0.2 (minor split rule mutation: similar to the major split rule mutation operator, but it does not alter $v_r$ and only changes the split point $s_r$ by a minor degree, which is defined by four cases described in [66])
- pcrossover: 0.8 (crossover probability)
- psplit: 0.2 (split: selects a random terminal node and assigns a valid, randomly generated split rule to it; as a consequence, the selected terminal node becomes an internal node $r$ and two new terminal nodes are generated)
- pprune: 0.4 (prune: chooses a random internal node $r$, where $r > 1$, which has two terminal nodes as successors, and prunes it into a terminal node [66])
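The following toy sketch makes the evolutionary loop concrete. It uses NumPy only, all names are hypothetical, and it is not the evtree implementation. It evolves the simplest possible regression tree, a single split.

- Each individual encodes a split variable and a split point, and fitness is the mean squared error.
- Selection is by binary tournament.
- Crossover averages the split points of two parents that split on the same variable.
- Two mutations loosely mirror the major and minor split rule mutations listed above.

The split and prune operators and the evtree cost function (with its complexity term alpha) are omitted.

```python
import numpy as np

def leaf_means(X, y, j, s):
    """Leaf predictions of a one-split tree: mean target on each side of X[:, j] <= s."""
    left = X[:, j] <= s
    if left.all() or not left.any():  # degenerate split: a single leaf
        return y.mean(), y.mean()
    return y[left].mean(), y[~left].mean()

def fitness(ind, X, y):
    """Mean squared error of the tree encoded by ind = (j, s); lower is better."""
    j, s = ind
    lv, rv = leaf_means(X, y, j, s)
    return float(np.mean((y - np.where(X[:, j] <= s, lv, rv)) ** 2))

def random_rule(X, rng):
    j = int(rng.integers(X.shape[1]))
    return (j, float(rng.choice(X[:, j])))  # split point drawn from observed values

def evolve_split(X, y, pop_size=30, generations=50, p_cross=0.8,
                 p_major=0.2, p_minor=0.2, seed=0):
    rng = np.random.default_rng(seed)
    pop = [random_rule(X, rng) for _ in range(pop_size)]
    for _ in range(generations):
        fit = [fitness(ind, X, y) for ind in pop]

        def tournament():
            a, b = rng.integers(pop_size, size=2)
            return pop[a] if fit[a] <= fit[b] else pop[b]

        offspring = [pop[int(np.argmin(fit))]]  # elitism: the best rule survives unchanged
        while len(offspring) < pop_size:
            p1, p2 = tournament(), tournament()
            child = p1
            if rng.random() < p_cross and p1[0] == p2[0]:
                child = (p1[0], (p1[1] + p2[1]) / 2)  # crossover on the split point
            if rng.random() < p_major:
                child = random_rule(X, rng)  # "major": new split variable and point
            elif rng.random() < p_minor:
                j, s = child  # "minor": nudge only the split point
                child = (j, s + rng.normal(0, 0.05 * X[:, j].std()))
            offspring.append(child)
        pop = offspring
    fit = [fitness(ind, X, y) for ind in pop]
    return pop[int(np.argmin(fit))]
```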

Artificial Neural Networks (ANNs). ANNs [67] are computational models inspired by the structure and functions of biological neural networks. The basic unit of computation is the neuron, also called node, which receives input from other nodes or from an external source and computes an output. To compute this output, the node applies a function $f$, called the activation function, whose purpose is to introduce non-linearity into the output. In the simplest case of a threshold activation, an output is produced only if the inputs are above a certain threshold.

Basically, an ANN creates a relationship between input and output values and is composed of interconnected nodes grouped in several layers. Among such layers we can distinguish the outer ones, called input and output layers, from the “internal” ones, called hidden layers. In contrast to biological neural networks, ANNs usually consider only one type of node, in order to simplify model calculation and analysis.

The strength of the connection between nodes is determined by weights, which are modified during the learning process. Therefore, learning consists of adapting the connections so that the network captures the structure of the data that model the environment, and characterizes its relations.

There are different types of ANN, depending on their structure. The suitability of a structure depends on several factors, such as the quality and the volume of the input data. The simplest type of ANN is the so-called feedforward neural network. In such networks, nodes from adjacent layers are interconnected and each connection has a weight associated with it. The information moves forward from the input layer to the output layer through the hidden nodes. In the simplest case there is a single node in the output layer, which provides the final result of the network, be it a class label or a numeric value.

In this paper we have used the nnet package of R [68], a package for feed-forward neural networks with a single hidden layer and for multinomial log-linear models.

The following parameters were used in this paper:

size: 10 (number of hidden units)

skip: true (add skip-layer connections from input to output)

MaxNWts: 10,000 (maximum number of weights allowed)

maxit: 1000 (maximum number of iterations)
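The sketch below illustrates the kind of model configured above. It has one hidden layer of logistic units (size = 10), a linear output, and optional skip-layer weights from the inputs to the output. It uses NumPy and hypothetical names. It is trained by plain full-batch gradient descent on the squared error. nnet itself uses a quasi-Newton (BFGS) optimizer, so this is not its implementation. The weight count helps explain the MaxNWts setting. Assuming a single output, $w = 168$ inputs with 10 hidden units and skip connections give 1869 weights. To our understanding, nnet's default limit is 1000.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class SkipMLP:
    """One hidden layer of logistic units, linear output, optional input->output skip weights."""

    def __init__(self, n_in, size=10, skip=True, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.uniform(-0.5, 0.5, (n_in, size))  # input -> hidden
        self.b1 = np.zeros(size)
        self.w2 = rng.uniform(-0.5, 0.5, size)          # hidden -> output
        self.b2 = 0.0
        self.ws = np.zeros(n_in) if skip else None      # skip layer: input -> output

    def n_weights(self):
        """Number of trainable parameters, biases included."""
        n = self.W1.size + self.b1.size + self.w2.size + 1
        return n + (self.ws.size if self.ws is not None else 0)

    def forward(self, X):
        H = sigmoid(X @ self.W1 + self.b1)
        out = H @ self.w2 + self.b2
        if self.ws is not None:
            out = out + X @ self.ws
        return out, H

    def predict(self, X):
        return self.forward(X)[0]

    def fit(self, X, y, lr=0.1, maxit=1000):
        """Full-batch gradient descent on 0.5 * mean squared error."""
        n = len(y)
        for _ in range(maxit):
            out, H = self.forward(X)
            err = out - y
            dH = np.outer(err, self.w2) * H * (1.0 - H)  # back-propagate through logistic units
            self.w2 -= lr * H.T @ err / n
            self.b2 -= lr * err.mean()
            self.W1 -= lr * X.T @ dH / n
            self.b1 -= lr * dH.mean(axis=0)
            if self.ws is not None:
                self.ws -= lr * X.T @ err / n
        return self
```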

Random Forests (RF). The term Random Forest was introduced by Breiman and Cutler in [69]. It refers to a set of decision trees that form an ensemble of predictors. Each tree is trained separately on an independent, randomly selected training set. It follows that each tree depends on the values of an input dataset sampled independently, with the same distribution for all trees.

In other words, the trees generated are different, since they are obtained from different training sets, drawn by bootstrap subsampling, and from different random subsets of features to split on at each tree node. Each tree is fully grown, in order to obtain low-bias trees. At the same time, the random subsets of features result in a low correlation between the individual trees, so the algorithm yields an ensemble that can achieve both low bias and low variance [70]. For classification, each tree in the RF casts a unit vote for the most popular class given the input, and the final result of the classifier is determined by a majority vote of the trees. For regression, the final prediction is the average of the predictions of the individual decision trees.
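The following Python sketch illustrates the two sources of randomness described above, bootstrap sampling of the training set and a random subset of features at each node, together with averaging for regression. It is a simplified illustration written for clarity, not the randomForest package: the helper names are hypothetical, splits minimise the squared error, the default of roughly one third of the features per node mirrors the usual regression default, and, as an assumption of this sketch, the remaining features are tried when none of the sampled ones admits a split.

```python
import random
from statistics import mean

def sse(values):
    """Sum of squared errors around the mean."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)

def best_split(X, y, features):
    """Best (cost, feature, threshold, left_idx, right_idx) over the given features, or None."""
    best = None
    for f in features:
        for t in sorted(set(row[f] for row in X))[:-1]:  # both sides stay non-empty
            left = [i for i, row in enumerate(X) if row[f] <= t]
            right = [i for i, row in enumerate(X) if row[f] > t]
            cost = sse([y[i] for i in left]) + sse([y[i] for i in right])
            if best is None or cost < best[0]:
                best = (cost, f, t, left, right)
    return best

def build_tree(X, y, mtry, rng):
    """Fully grown regression tree; each node searches a random subset of mtry features."""
    if len(y) <= 1 or len(set(y)) == 1:
        return mean(y)
    order = rng.sample(range(len(X[0])), len(X[0]))  # random feature order
    split = best_split(X, y, order[:mtry]) or best_split(X, y, order[mtry:])
    if split is None:  # identical inputs: no split is possible
        return mean(y)
    _, f, t, left, right = split
    grow = lambda idx: build_tree([X[i] for i in idx], [y[i] for i in idx], mtry, rng)
    return (f, t, grow(left), grow(right))

def predict_tree(node, x):
    while isinstance(node, tuple):  # internal nodes are (feature, threshold, left, right)
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node

def random_forest(X, y, n_trees=50, mtry=None, seed=0):
    rng = random.Random(seed)
    mtry = mtry or max(1, len(X[0]) // 3)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], mtry, rng))
    return forest

def predict_forest(forest, x):
    """Regression: average of the individual tree predictions."""
    return mean(predict_tree(tree, x) for tree in forest)

X = [[float(i), float((i * 7) % 5)] for i in range(40)]  # second feature is noise
y = [2.0 * i for i in range(40)]
forest = random_forest(X, y, n_trees=30, seed=1)
print(predict_forest(forest, [20.0, 0.0]))
```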

The method is less computationally expensive than other tree-based classifiers that adopt bagging strategies, since each tree is generated by taking into account only a portion of the input features [71].

In this paper, we have used the implementation from the randomForest package of R [72], which provides an R interface to the original implementation by Breiman and Cutler. For this study, the algorithm is used with the following parameters:

Generalized Boosted Regression Models (GBM) [73,74]. This method iteratively trains a set of decision trees. At each iteration, the current ensemble of trees is used to predict the value of each training example, the prediction errors are estimated, and the next tree is fitted so that the previous mistakes are corrected. Gradient boosting involves three elements:

A loss function to be optimised. Such a function is problem dependent: for instance, the squared error can be used for regression and the logarithmic loss for classification.

A weak learner to make predictions. Regression trees are used for this purpose, and they are built with a greedy strategy, in which a scoring function is used to choose each split point added to the tree. Further constraints are commonly imposed on the trees, for example by limiting the depth of the tree, the number of splits or the number of nodes.

An additive model that adds trees in order to minimise the loss function. Trees are added sequentially, and the trees already contained in the model built so far are not changed. In order to minimise the loss during this phase, a gradient descent procedure is used. The procedure stops when a maximum number of trees has been added to the model or once the model no longer improves.
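The three elements above can be summarised with the standard formulation of gradient boosting. This is a generic sketch, not a description of the internals of the gbm package, and it omits the per-leaf line search of the full algorithm: F_m is the model after m trees, h_m is the regression tree fitted at iteration m to the negative gradients r_{im}, and ν is the shrinkage factor discussed below (ν = 1 means no shrinkage). For the squared error loss L(y, F) = ½(y − F)², the negative gradient is simply the residual y_i − F_{m−1}(x_i), and F_0 is the mean of the targets.

```latex
\begin{aligned}
F_0(x) &= \arg\min_{c} \sum_{i=1}^{n} L(y_i, c), \\
r_{im} &= -\left.\frac{\partial L(y_i, F)}{\partial F}\right|_{F = F_{m-1}(x_i)},
  \qquad i = 1, \dots, n, \\
F_m(x) &= F_{m-1}(x) + \nu \, h_m(x), \qquad m = 1, \dots, M.
\end{aligned}
```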

Overfitting is common in gradient boosting, and regularisation methods are usually applied in order to reduce it. These methods basically penalise various parts of the algorithm. Typically, constraints are imposed on the construction of the decision trees, for example by limiting the depth of the trees, the number of nodes or leaves, or the number of observations per split.

Another mechanism is shrinkage, which weights the contribution of each tree to the sequential sum of the predictions of the trees. This is done with the aim of slowing down the learning rate of the algorithm. As a consequence, training takes longer, since more trees must be added to the model. In this way, a trade-off between the learning rate and the number of trees can be reached.
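A toy Python version of this procedure, using single-split regression trees (stumps) as weak learners and the squared error loss, shows how shrinkage trades the learning rate against the number of trees. All names are hypothetical and the code only handles a single numeric input; it is a sketch of the technique, not of the gbm package.

```python
from statistics import mean

def fit_stump(x, r):
    """Weak learner: the single split on x that minimises the squared error on r."""
    best = None
    for t in sorted(set(x))[:-1]:  # assumes x has at least two distinct values
        left = [ri for xi, ri in zip(x, r) if xi <= t]
        right = [ri for xi, ri in zip(x, r) if xi > t]
        lm, rm = mean(left), mean(right)
        cost = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
        if best is None or cost < best[0]:
            best = (cost, t, lm, rm)
    _, t, lm, rm = best
    return lambda v: lm if v <= t else rm

def gradient_boost(x, y, n_trees=100, shrinkage=0.1):
    """Squared-error gradient boosting; earlier trees are never modified."""
    f0 = mean(y)  # F_0: the constant that minimises the squared error
    trees, pred = [], [f0] * len(y)
    for _ in range(n_trees):
        residuals = [yi - pi for yi, pi in zip(y, pred)]  # negative gradient of 0.5*(y - F)^2
        h = fit_stump(x, residuals)
        trees.append(h)
        pred = [pi + shrinkage * h(xi) for pi, xi in zip(pred, x)]
    return lambda v: f0 + shrinkage * sum(h(v) for h in trees)

def mse(model, x, y):
    return mean((model(xi) - yi) ** 2 for xi, yi in zip(x, y))

x = list(range(20))
y = [0.0 if v < 10 else 5.0 for v in x]  # a step-shaped target
for n_trees, nu in [(1, 1.0), (10, 0.1), (100, 0.1)]:
    print(n_trees, nu, mse(gradient_boost(x, y, n_trees, nu), x, y))
```

With shrinkage 1.0 a single stump already fits this step exactly, whereas with shrinkage 0.1 each tree removes only a tenth of the remaining residual, so many more trees are needed to reach a comparable fit.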

In this paper we have used the GBM package of R [75] with the following parameters:

distribution: Gaussian (function of the distribution to use)

n.trees: 3000 (total number of trees, i.e., the number of gradient boosting iterations)

interaction.depth: 40 (maximum depth of variable interactions)

shrinkage: 0.9 (learning rate)

n.minobsinnode: 3 (minimum number of observations in the trees' terminal nodes)

All the parameters used in this paper were set after running preliminary experiments on the data.

We have selected the strategies forming our ensemble scheme based on their popularity and the good results they achieved in similar problems. Moreover, we have selected algorithms that base their predictions on decision trees, and complemented the possible weaknesses of such methods by using Artificial Neural Networks. In particular, both RF and GBM are based on an ensemble of decision trees, but the set of trees is obtained in a different way: RF builds each tree independently, whereas GBM builds them sequentially. EVTree, being based on evolutionary algorithms (EAs), provides the ability to escape local optima, so we believe that these methods complement each other. Moreover, in order to overcome possible representation limitations of decision trees, we have used NNs, which handle non-linear learning very well and are tolerant to noise. GBM training generally takes longer than RF training, since its trees are built sequentially, and the trees it produces are prone to overfitting. For these reasons, we have used GBM in the top layer, where the predictions are based on only three columns, i.e., the outputs of the three base learners.

The final ensemble scheme we propose is depicted in Figure 5. The training set is used to obtain the predictions of the base level, consisting of RF, NN and EVTree. The predictions so obtained are then used by the top layer (GBM) to produce the final predictions for each problem.
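The two-level scheme can be sketched generically in Python. To keep the example short and self-contained, we swap in deliberately simple, hypothetical learners: three base predictors (the training mean, the last reading of the window and the window mean) stand in for RF, NN and EVTree, and an inverse-error weighting of the three prediction columns stands in for GBM. Only the structure, in which the base predictions become the input columns of the top learner, reflects the scheme described above.

```python
from statistics import mean

# Hypothetical base learners: each takes the training data and returns a predictor.
def fit_train_mean(X, y):
    m = mean(y)
    return lambda x: m

def fit_last_value(X, y):
    return lambda x: x[-1]  # persistence: repeat the most recent reading

def fit_window_mean(X, y):
    return lambda x: mean(x)

def fit_inverse_mse(Z, y, eps=1e-12):
    """Hypothetical top learner: weight each column by the inverse of its training MSE."""
    errors = [mean((z[j] - t) ** 2 for z, t in zip(Z, y)) for j in range(len(Z[0]))]
    raw = [1.0 / (e + eps) for e in errors]
    weights = [r / sum(raw) for r in raw]
    return lambda z: sum(w * zj for w, zj in zip(weights, z))

def fit_stacking(base_fitters, top_fitter, X, y):
    bases = [fit(X, y) for fit in base_fitters]   # bottom layer
    Z = [[base(x) for base in bases] for x in X]  # one column per base learner
    top = top_fitter(Z, y)                        # top layer trained on base predictions
    return lambda x: top([base(x) for base in bases])

def mse(model, X, y):
    return mean((model(x) - t) ** 2 for x, t in zip(X, y))

series = [float(t) for t in range(30)]
X = [series[i - 3:i] for i in range(3, len(series))]  # windows of 3 readings
y = [series[i] for i in range(3, len(series))]        # next reading
base_fitters = [fit_train_mean, fit_last_value, fit_window_mean]
model = fit_stacking(base_fitters, fit_inverse_mse, X, y)
print(model([27.0, 28.0, 29.0]))
```

For simplicity, the level-1 data is built from in-sample predictions of the base learners on the training set; many stacking implementations use out-of-fold predictions instead, to reduce the risk of the top learner overfitting to optimistic base predictions.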

Then, following the notation introduced in Section 3.2, the three base learners are EVTree, ANN and RF, and the bottom-layer models are the ones induced by EVTree, ANN and RF, respectively, while the top-layer model is the one produced by GBM. Thus, the final predictions are produced by GBM, which builds its model using the predictions generated by the three bottom layer methods.

4. Results

In this section we provide the results obtained on the dataset described in Section 3.1 and draw the main conclusions. In order to assess the performance of both the ensemble scheme and the base methods, we used five measures commonly used in regression: the mean relative error (MRE), the mean absolute error (MAE), the symmetric mean absolute percentage error (SMAPE), the coefficient of determination (R²) and the root mean squared error (RMSE), which are defined as [16]:

In the above equations, ŷ is the predicted value, y the real value and ȳ the mean of the observed data.
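Since the equations themselves are not reproduced here, the following Python sketch uses commonly encountered definitions of the five measures. These definitions are an assumption: variants exist (for instance, MRE and SMAPE are often scaled by 100 to express percentages, and SMAPE is sometimes defined with a different denominator), so the exact formulas of [16] may differ.

```python
import math

def regression_metrics(y_true, y_pred):
    """MRE, MAE, SMAPE, R2 and RMSE under common definitions (assumed, see text).
    Assumes non-zero real values (MRE), |y| + |y_hat| > 0 (SMAPE)
    and a non-constant y_true (R2)."""
    n = len(y_true)
    pairs = list(zip(y_true, y_pred))
    y_bar = sum(y_true) / n  # mean of the observed data
    ss_res = sum((y - p) ** 2 for y, p in pairs)
    ss_tot = sum((y - y_bar) ** 2 for y in y_true)
    return {
        "MRE": sum(abs(y - p) / abs(y) for y, p in pairs) / n,
        "MAE": sum(abs(y - p) for y, p in pairs) / n,
        "SMAPE": sum(abs(p - y) / ((abs(y) + abs(p)) / 2) for y, p in pairs) / n,
        "R2": 1 - ss_res / ss_tot,
        "RMSE": math.sqrt(ss_res / n),
    }

print(regression_metrics([2.0, 4.0], [1.0, 5.0]))
```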

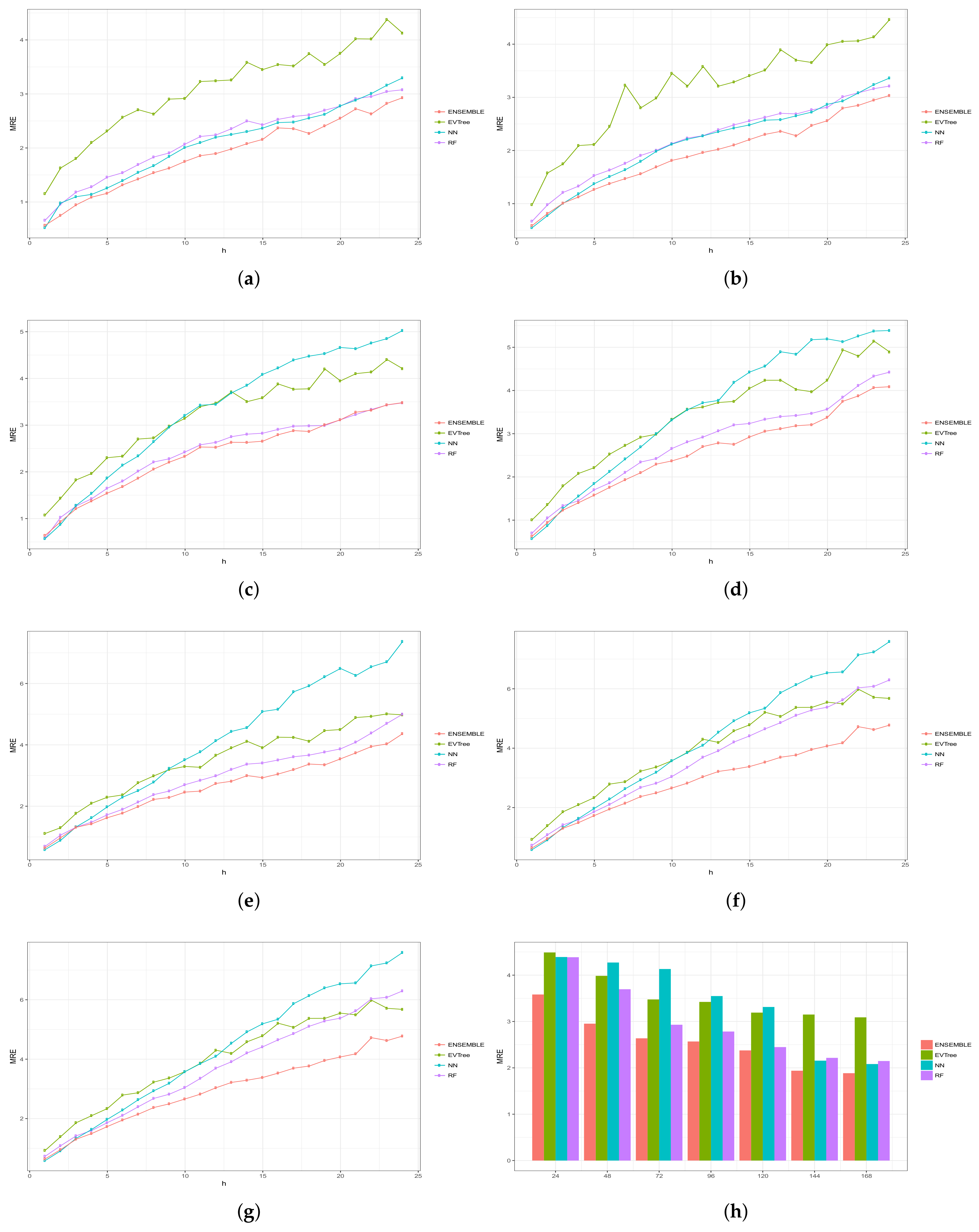

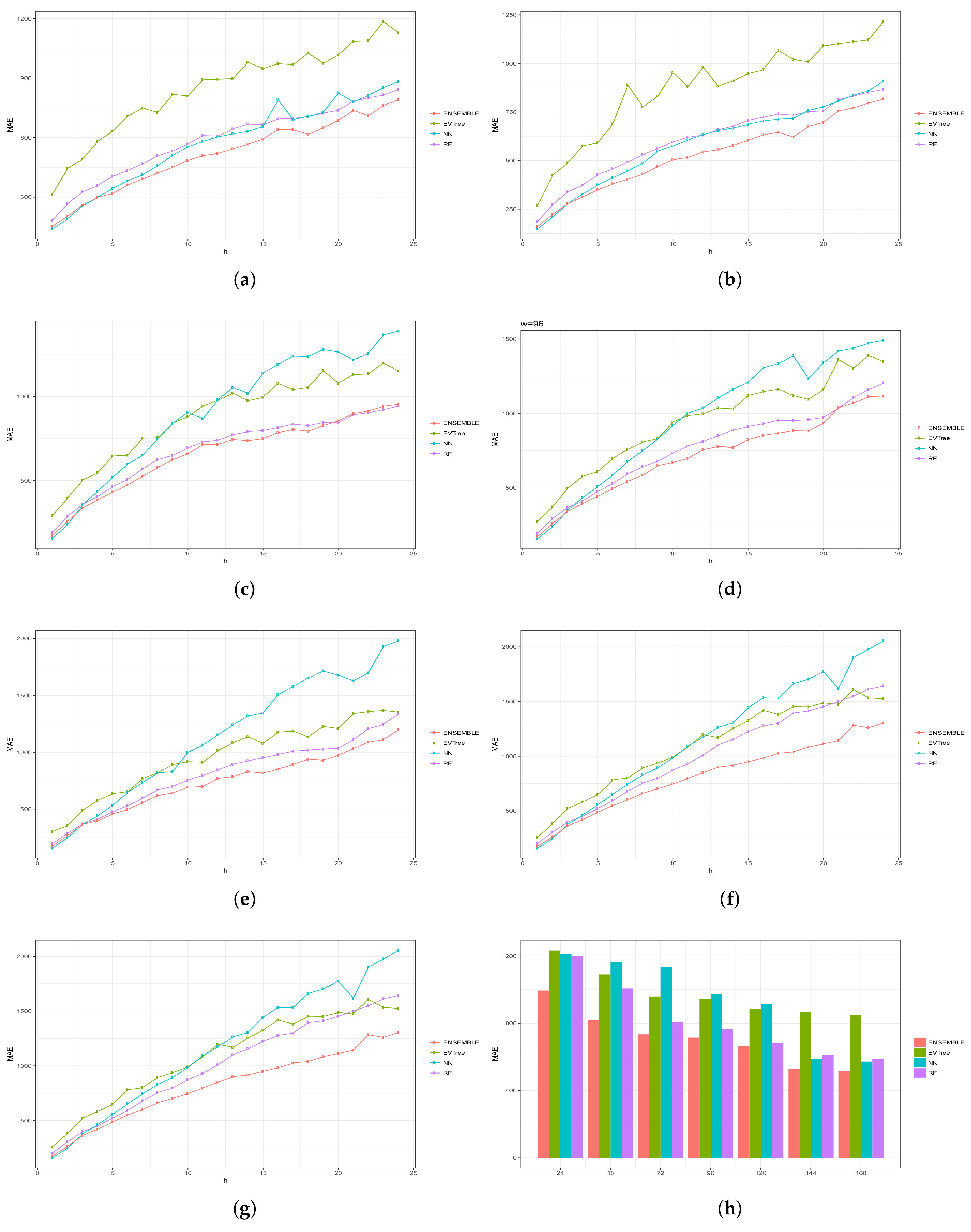

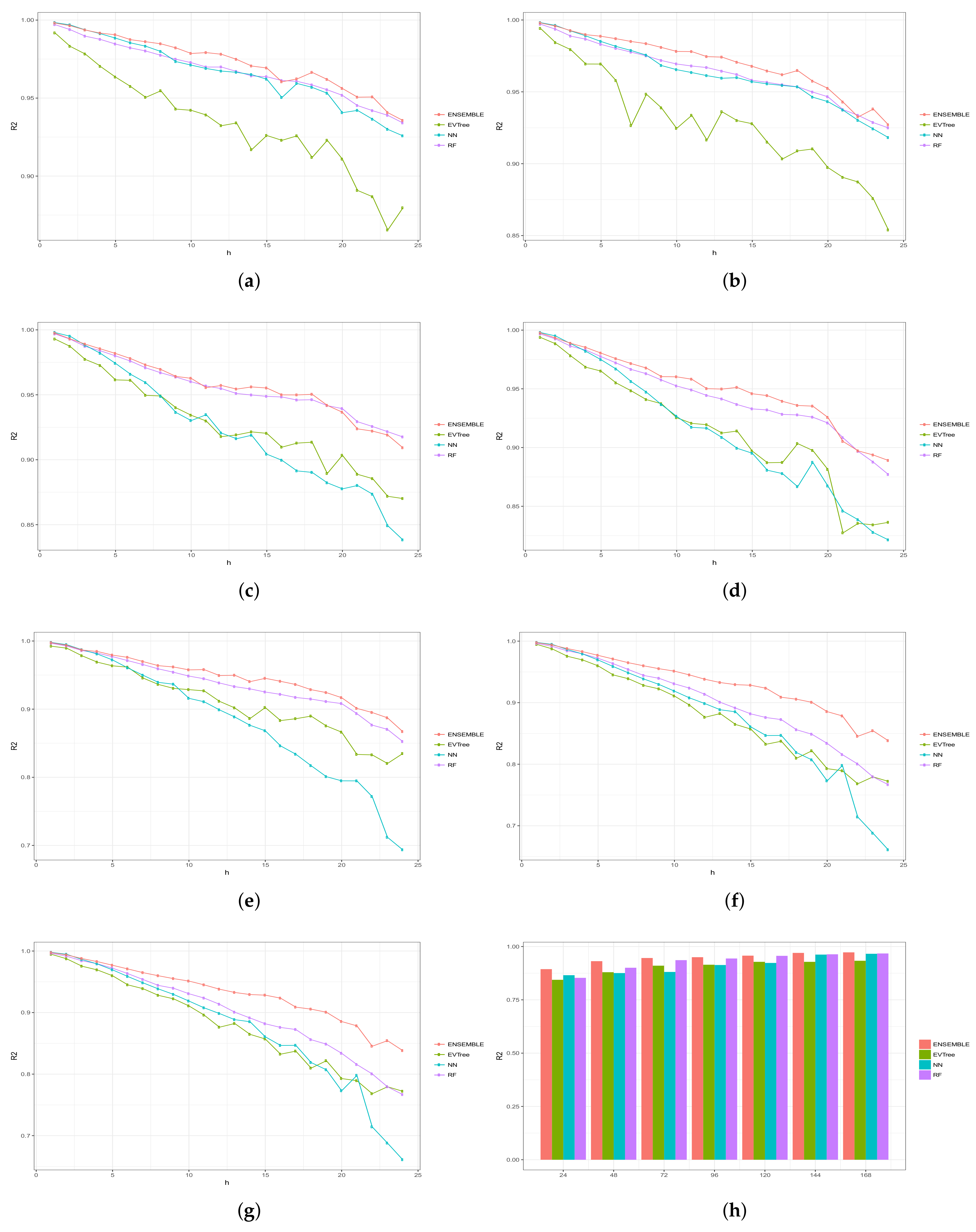

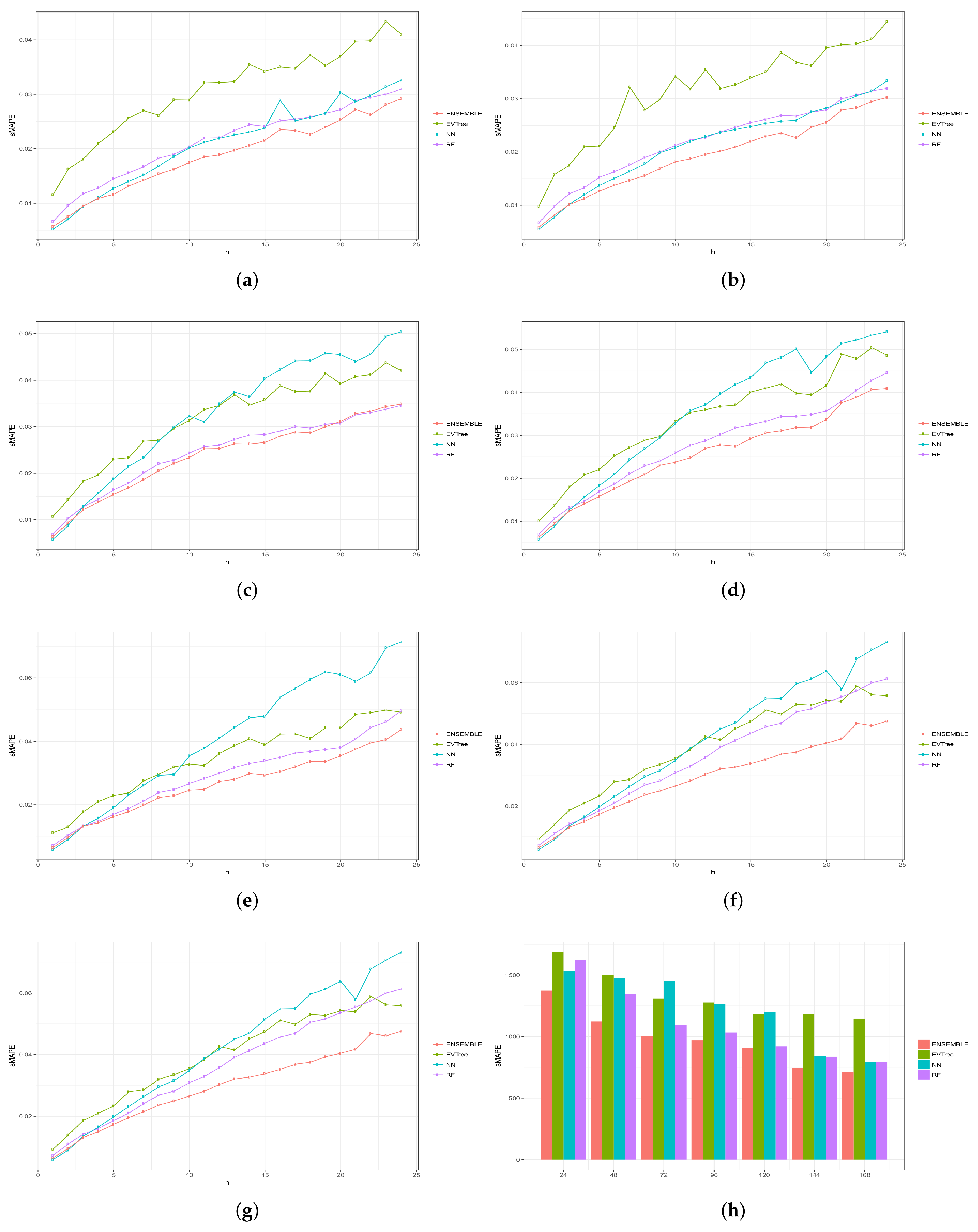

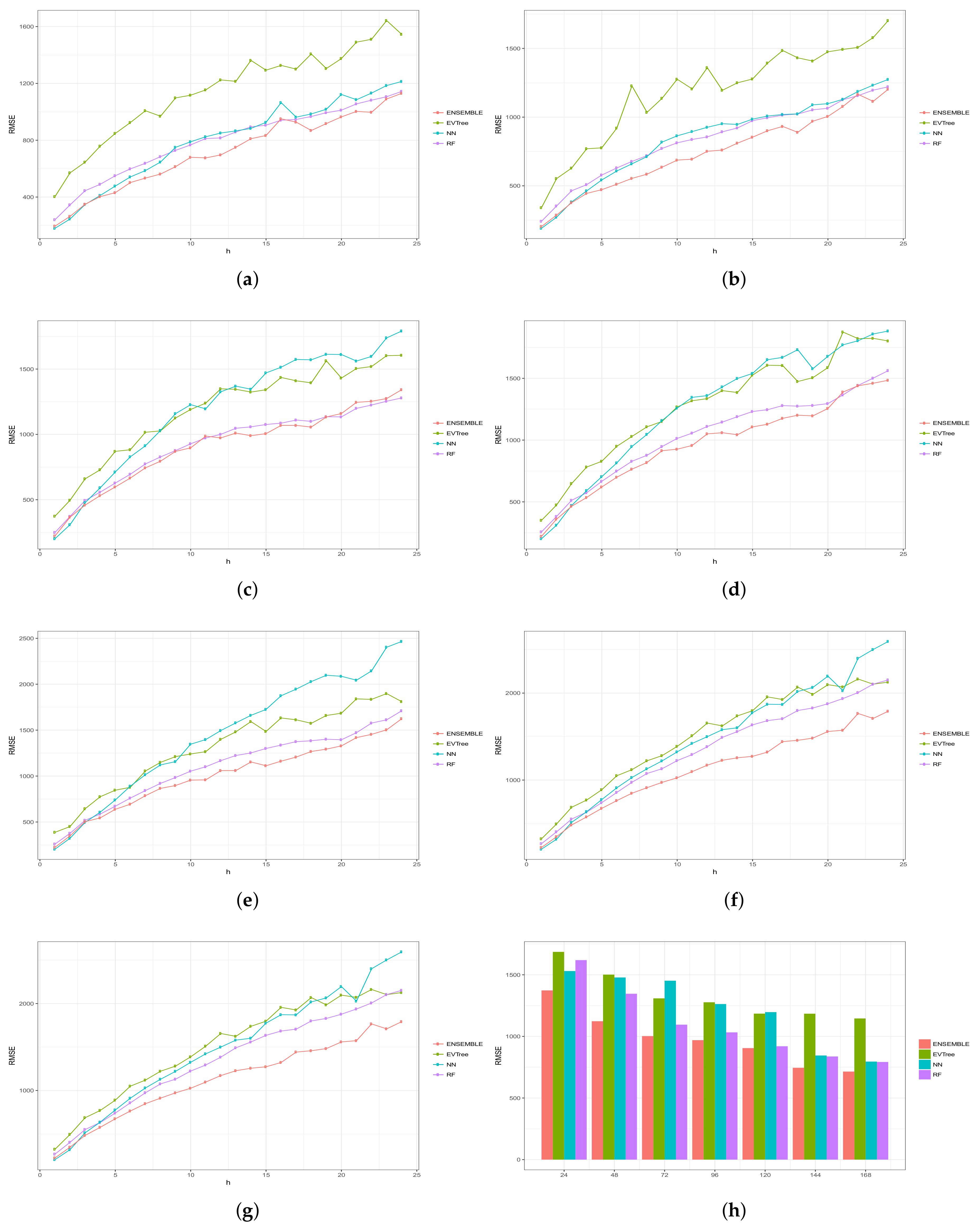

Figures 6, 7, 8, 9 and 10 show the results obtained on all the problems (h) for each historical window (w) by both the algorithms employed in the bottom layer (EVTree, NN and RF) and the top layer of the ensemble scheme (GBM). The average results obtained are also shown in the bar graphs. The detailed results obtained by the ensemble scheme and by the base methods can be found in Appendix A, in particular in Tables A1, A2, A3, A4 and A5, where results are grouped by the size of the historical window used, as indicated by the first row.

The first and main conclusion that we can draw from these graphs is that the best results were obtained when all the predictions of the baseline methods were combined by GBM at the top level of the ensemble scheme. In particular, when using a historical window of 168 measurements, the average MRE obtained was , while the R² was , the MAE was , the RMSE was and the average sMAPE was .

In order to assess the statistical significance of the differences between the results, we applied a paired two-tailed t-test with a confidence level of . According to this test, all the differences are significant, apart from those in MRE, MAE, RMSE and sMAPE between EVTree and NN when historical windows of and 48 were used. Moreover, when w was set to 24, the results obtained by the bottom layer methods were not significantly different as far as MRE, MAE and sMAPE are concerned. When we consider MAE, RMSE and sMAPE, the results obtained by RF and NN are considered equal for a historical window of 168. The same holds when R² is considered; moreover, in the case of R², the results obtained by these two methods are not considered significantly different for a historical window of 120 either. Considering R² again, the results obtained by EVTree and NN are considered equal for historical windows of size 120 and 72. The results produced by the top layer were always significantly better, except for R² with a historical window of 120, where the results obtained by RF are not significantly different. Finally, the results obtained by RF and NN are not considered different for historical window values of 144 and 120 as far as RMSE is concerned.
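The paired two-tailed t-test mentioned above can be sketched in a few lines of Python. The sketch computes the t statistic of the pairwise differences between two methods and compares its absolute value with a critical value supplied by the caller. How the pairs were formed in the experiments (for example, per-instance errors of two methods on the same test points) and the confidence level used are not recoverable from this text; the critical value 3.182 below is the two-tailed 0.05 value for 3 degrees of freedom and serves only as an illustration. In practice, a library routine such as scipy.stats.ttest_rel returns the p-value directly.

```python
import math
from statistics import mean, stdev

def paired_t_statistic(a, b):
    """t statistic and degrees of freedom of a paired test on samples a and b.
    Assumes len(a) == len(b) >= 2 and that the differences are not all equal."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    t = mean(d) / (stdev(d) / math.sqrt(n))  # stdev uses the n - 1 denominator
    return t, n - 1

def significantly_different(a, b, t_critical):
    """Two-tailed decision: reject equality when |t| exceeds the critical value."""
    t, _ = paired_t_statistic(a, b)
    return abs(t) > t_critical

# Illustrative errors of two hypothetical methods on the same four instances.
errors_a = [1.0, 2.0, 3.0, 4.0]
errors_b = [0.0, 0.0, 0.0, 0.0]
print(paired_t_statistic(errors_a, errors_b))
print(significantly_different(errors_a, errors_b, 3.182))
```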

Results are summarized in Table 2, where a ranking of the methods according to the MRE obtained is shown.

In general, we can also notice that the performance of NN degrades as the historical window is reduced. In fact, for a historical window of 168, NN obtains the best results among the three bottom layer methods, while for smaller historical windows, starting from 120 measurements, the predictions obtained by this method are always worse than those obtained by RF, and are comparable to or worse than the predictions produced by EVTree. Similar considerations apply to the other measures.

We can also notice that the predictions become less accurate as p increases, meaning that it is easier to predict the demand in the very near future than in the medium to far future. In this respect, we can also observe that NN performs very well on the first two problems. In fact, for values of p equal to 1 or 2, in many cases the predictions obtained by NN are superior to those obtained by the top layer. However, as the value of p increases, the results obtained by the top layer are much better than those achieved by the three bottom layer methods. In other words, the ensemble makes the real difference as the problems become harder.

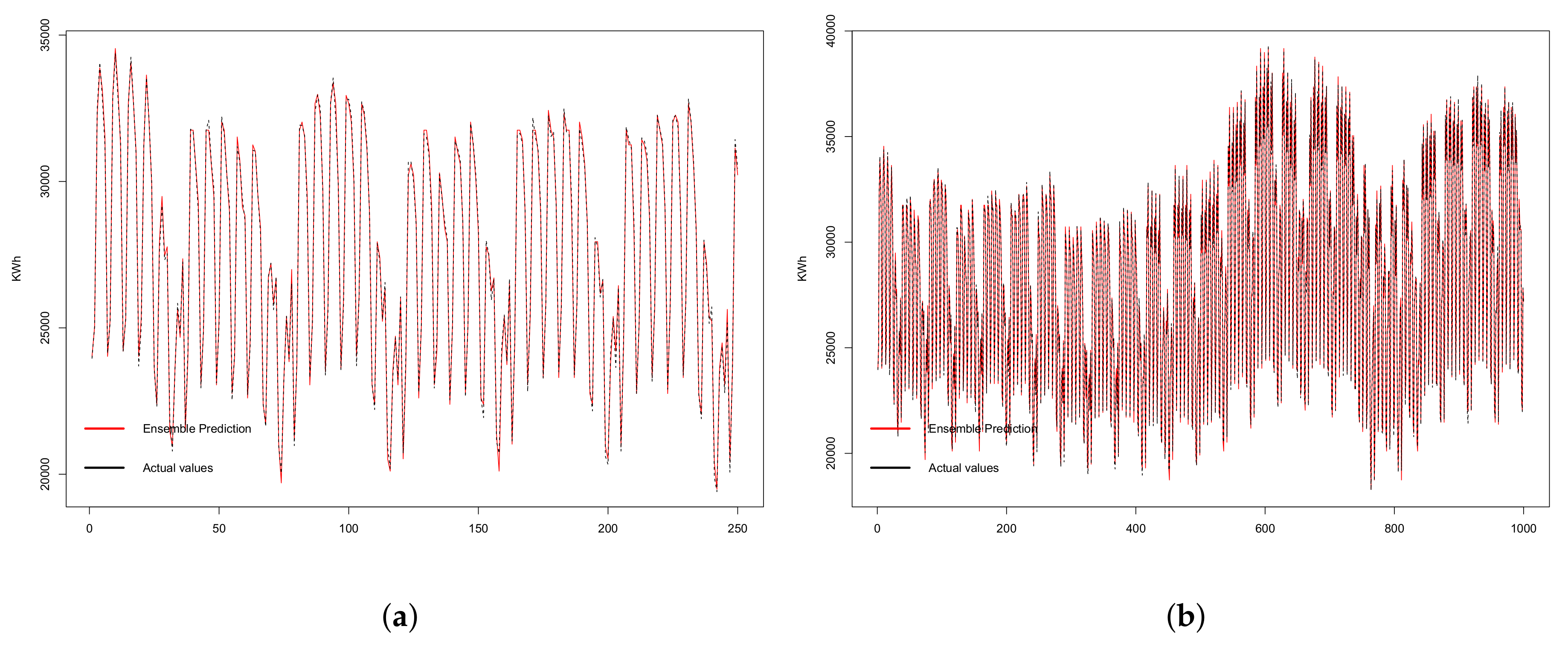

Finally, in Figure 11, we present a comparison of the real and predicted values for a subset of the time series when a historical window of 168 was used. For readability reasons, we have selected two subsets of 250 and 1000 readings, shown in Figure 11a,b, respectively. We have included the figure with 250 readings in order to provide a more detailed view of the predictions. We can notice that the predictions are very accurate and closely follow the original time series.

In order to globally assess the performance of our proposal, we have compared the results achieved by our ensemble scheme with the results obtained by the single components used in our proposal, i.e., Random Forest (RF), Neural Networks (NN) and Evolutionary Decision Trees (EV), and with the results obtained by four other state-of-the-art methods: linear regression (LR), ARMA and ARIMA, Deep Learning (DL) and a decision tree algorithm (DT). In particular, we have taken into account linear regression as a reference time series forecasting strategy [76,77]. The well-known stochastic gradient descent method has been used to minimise the mean squared error on the training set in order to obtain the model. We have used a greedy decision tree algorithm [78] that performs a recursive binary partitioning of the feature space in order to build a decision tree. This algorithm uses the information gain in order to build the decision trees, and we have used the default parameters of the rpart package of R [79]. For the conventional methods ARMA and ARIMA, we have used a tool [80] for determining the order of the auto-regressive (AR) terms (p), the degree of differencing (d) and the order of the moving-average (MA) terms (q). The values obtained are , and .

The deep learning-based methodology has been designed using framework of R [81]. This framework implements a feed-forward neural network (also called multi-layer perceptron) that can be run in distributed environments. The network is trained with stochastic gradient descent using the back-propagation algorithm. In order to set the parameters for this algorithm, we have used a grid search approach. As a result, we have used the hyperbolic tangent as the activation function, the number of hidden layers was set to 3 and the number of neurons to 30. The distribution function was set to Poisson and, in order to avoid overfitting, one of the two regularization parameters (lambda) has been set to 0.001. The other parameters were set to their default values, as in [35].
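As an illustration of the linear regression baseline, the following Python sketch builds lagged input vectors from a series and fits a linear model by stochastic gradient descent on the squared error. The window construction, learning rate, number of epochs and function names are hypothetical choices for the example, not the settings used in the experiments.

```python
import math
import random

def make_windows(series, w):
    """Each input row holds the w previous readings; the target is the next reading."""
    X = [series[i - w:i] for i in range(w, len(series))]
    y = [series[i] for i in range(w, len(series))]
    return X, y

def sgd_linear_regression(X, y, lr=0.01, epochs=300, seed=0):
    """Fit y ~ b + w.x by stochastic gradient descent on the squared error."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the samples in a new random order each epoch
        for i in order:
            err = b + sum(wj * xj for wj, xj in zip(w, X[i])) - y[i]
            # the gradient of 0.5 * err**2 with respect to w_j is err * x_j
            w = [wj - lr * err * xj for wj, xj in zip(w, X[i])]
            b -= lr * err
    return w, b

series = [math.sin(0.3 * t) for t in range(200)]
X, y = make_windows(series, 2)
w, b = sgd_linear_regression(X, y)
print(w, b)
```

A pure sinusoid satisfies s_t = 2cos(0.3)·s_{t−1} − s_{t−2} exactly, so on this toy series the fitted weights should approach −1 for the older reading and 2cos(0.3) ≈ 1.911 for the most recent one.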

Table 3 shows the results of this comparison for each value of the historical window considered. We can notice that our proposal outperforms all the other methods, obtaining the best results for all the historical window values considered. This is particularly noticeable for smaller values of w. Another conclusion that we can draw from the table is that LR and NN obtain good, comparable results, especially for higher values of the historical window w. Among the single methods, RF obtains, in general, good results, especially for smaller values of the historical window. It can be noticed that RF achieves better results than the LR and NN strategies in all cases except for w values of 144 and 168, while it outperforms DL and EV in all cases. In general, the classical strategies ARMA and ARIMA do not perform well on this problem. DT does not perform well on this problem either. This is probably due to the greedy strategy used by this algorithm, which may cause it to get stuck in local optima. The same considerations apply to GBM, even if the ensemble nature of this algorithm gives it an advantage over DT, so that the results it obtains are better. In general, we can conclude that the results obtained on this problem by the ensemble scheme are satisfactory, as it achieves more accurate predictions than all the other methods considered for this short-term electricity consumption forecasting problem.

5. Conclusions and Future Works

Accurate short-term forecasting of electric energy demand would provide several benefits, both economic and environmental. For instance, predictions can be taken into account in order to reduce the costs of energy production, thereby also decreasing the impact on the environment. The predictions are made by taking into consideration data regarding the past demand for electricity, i.e., historical data. In short-term forecasting, the aim is to predict the demand in the near future.

In this paper, we have approached the electric energy short-term forecasting problem with a methodology based on ensemble learning. Ensemble learning allows the predictions made by different learning mechanisms to be combined in order to achieve predictions that are usually more accurate. More specifically, we have used a stacking ensemble learning scheme, where two levels of learning methods are used. The predictions made by the first level methods are passed to a top method, which combines them in order to produce the final forecasts. In this paper, we have used three base learning methods, i.e., regression trees based on Evolutionary Computation, Random Forest and Artificial Neural Networks. At the top layer we have used an algorithm based on Gradient Boosting. We have considered different historical windows, i.e., different amounts of historical data used in order to obtain a prediction, and we have focused on predicting the electricity demand of the following four hours. We have compared the results obtained by the ensemble method with the results obtained by the single methods and by linear regression, ARMA, ARIMA, deep learning and a decision tree algorithm. Predictions obtained by the ensemble scheme were always superior to those of the other methods. We have also observed that some methods, like NN, are able to make very precise predictions for the very near future, but that their results degrade the further into the future we aim to predict. Moreover, when the historical windows used are small, results are significantly improved when the ensemble scheme is employed. This is due to the degradation in the performance of single methods that need the support of more historical data in order to achieve acceptable results.

As for future works, we intend to explore other ensemble schemes using different methods, for example methods based on support vector machines, deep learning [82] or the SP Theory of Intelligence [83]. Moreover, we plan to use other datasets, regarding both electric energy consumption and other kinds of time series, in order to check whether our approach can be generalized to other kinds of problems.