A Quantification Index for Power Systems Transient Stability

Abstract

1. Introduction

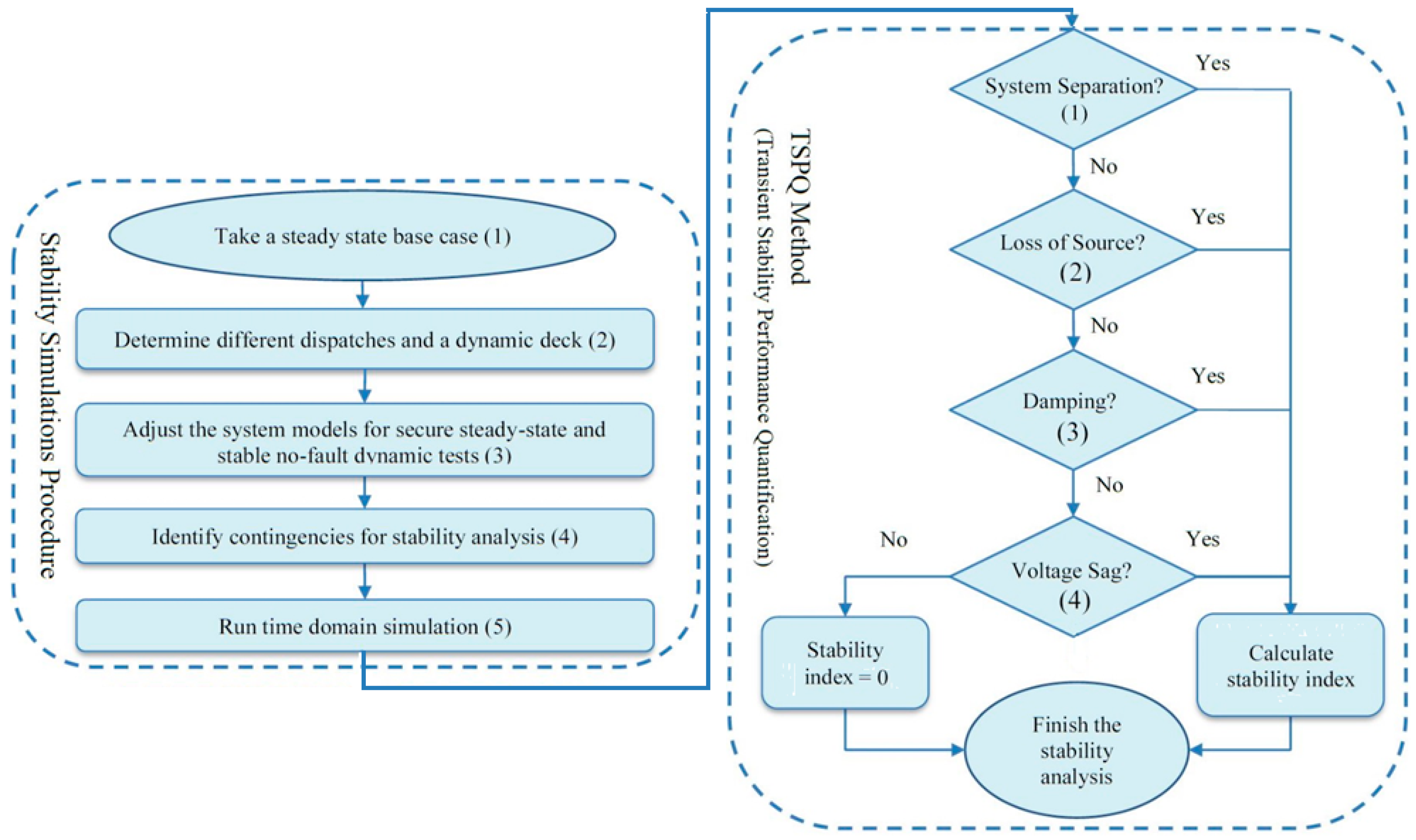

2. Overview of the Proposed Performance Quantification Procedure

3. Detailed Description of TSPQ Method

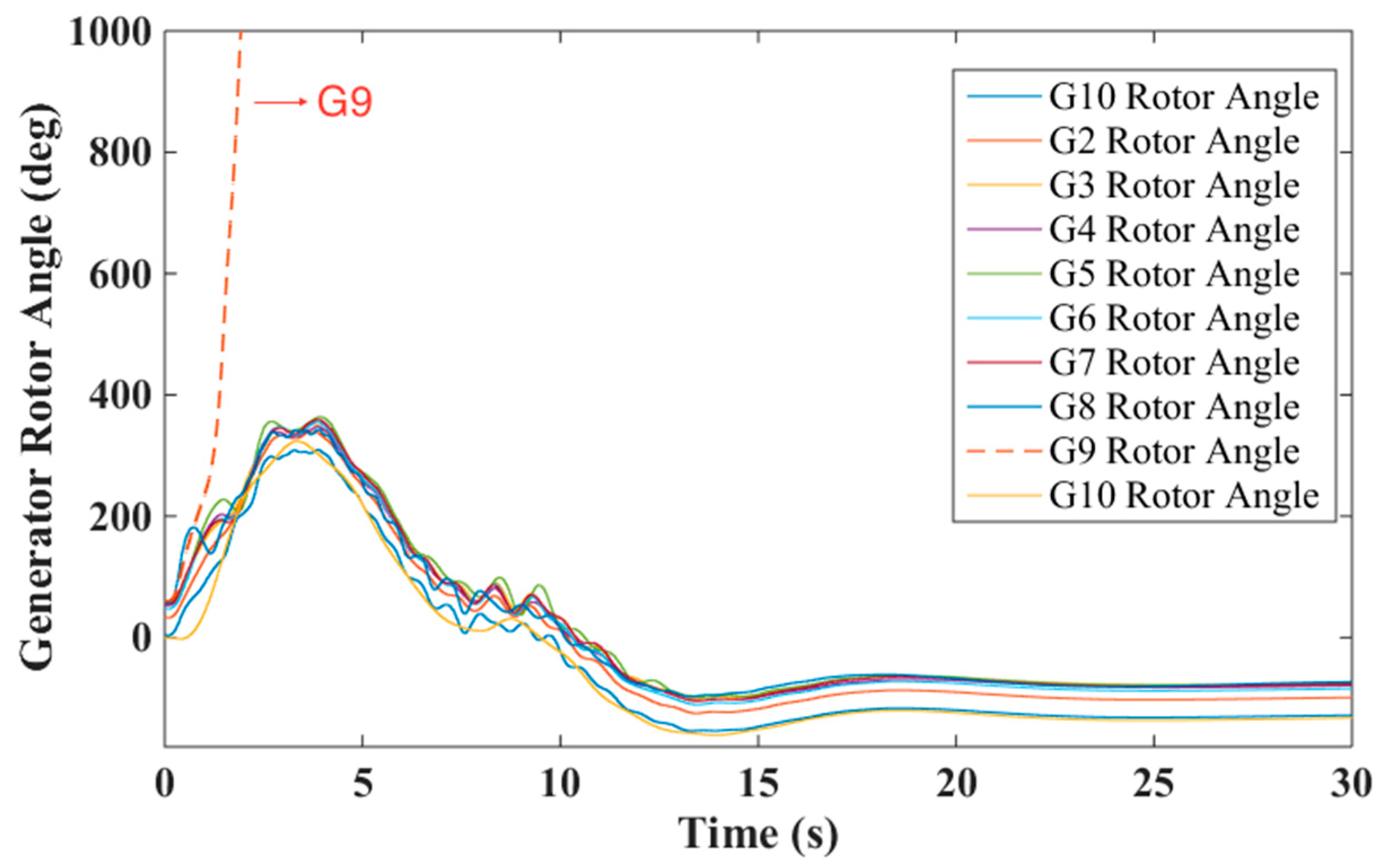

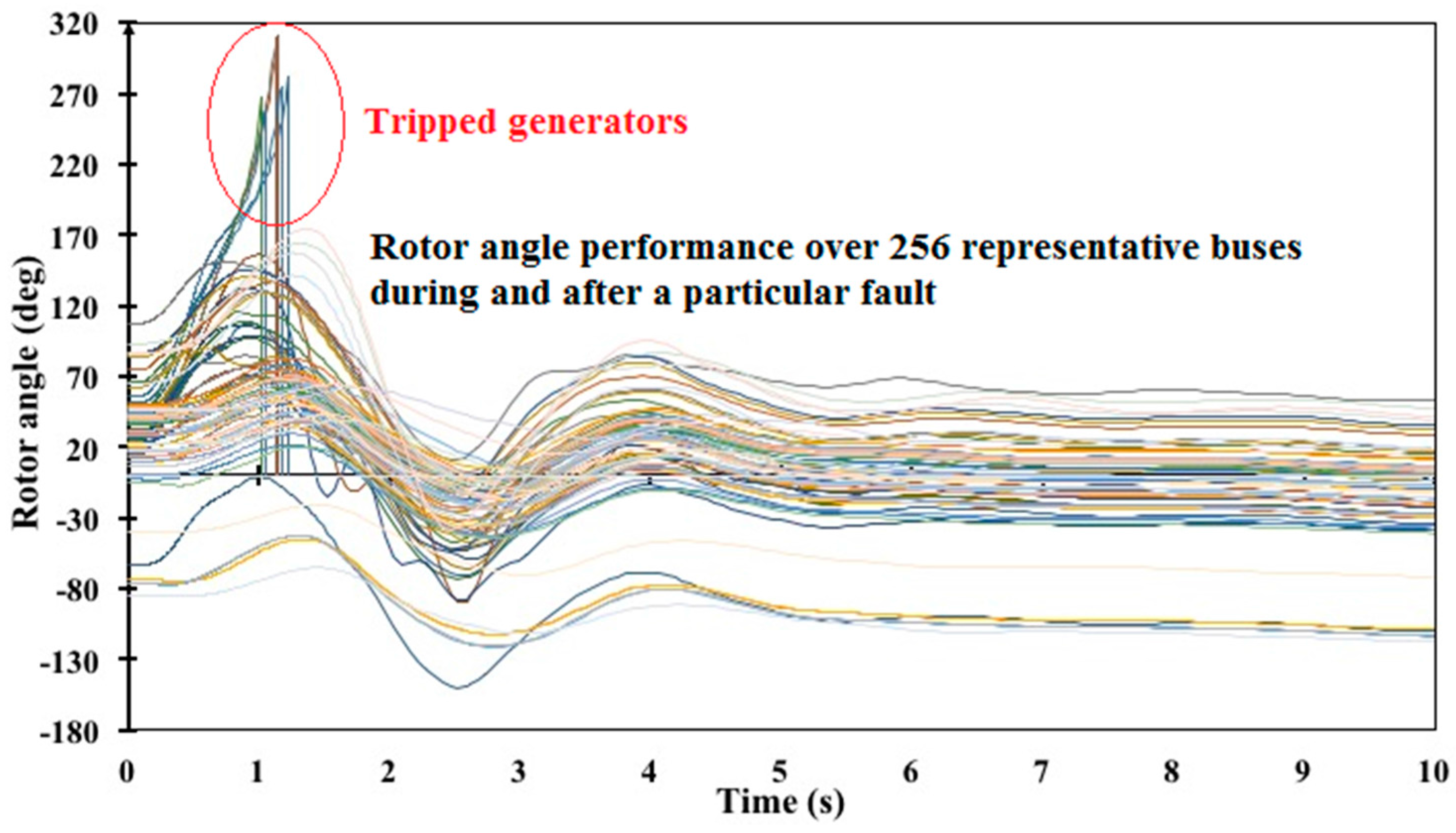

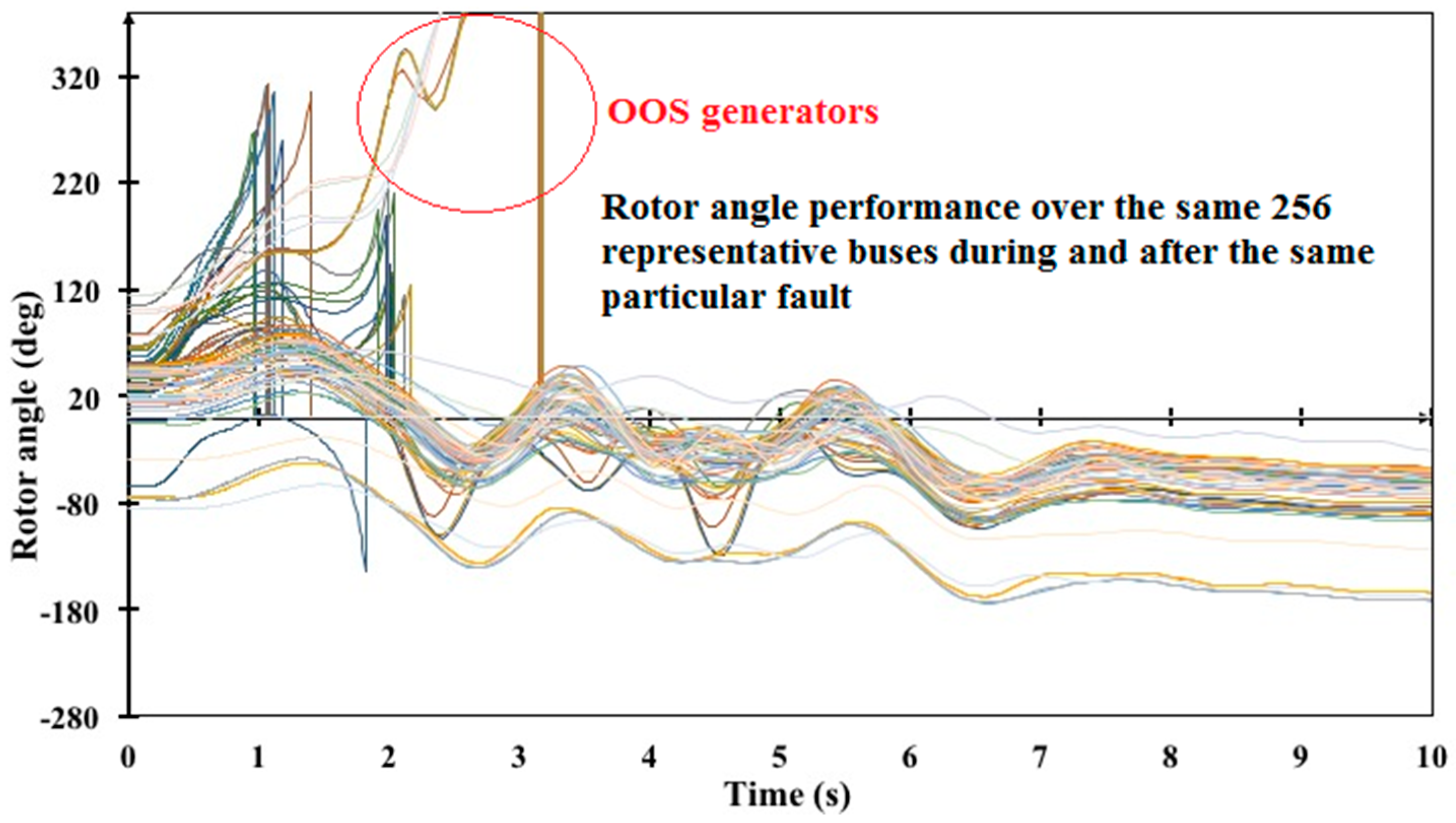

3.1. System Separation Module

3.2. Loss of Source Module

3.3. Damping Module

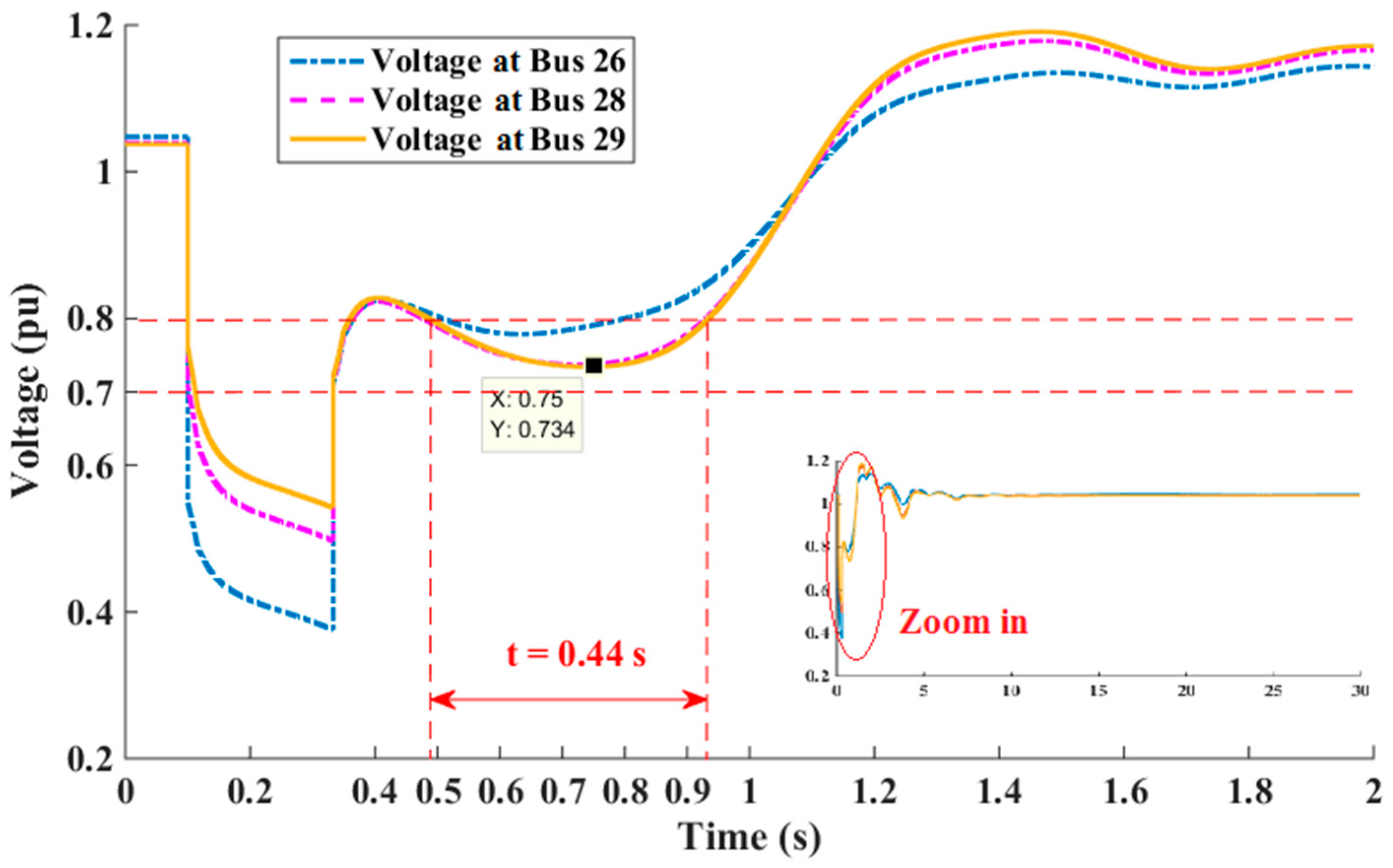

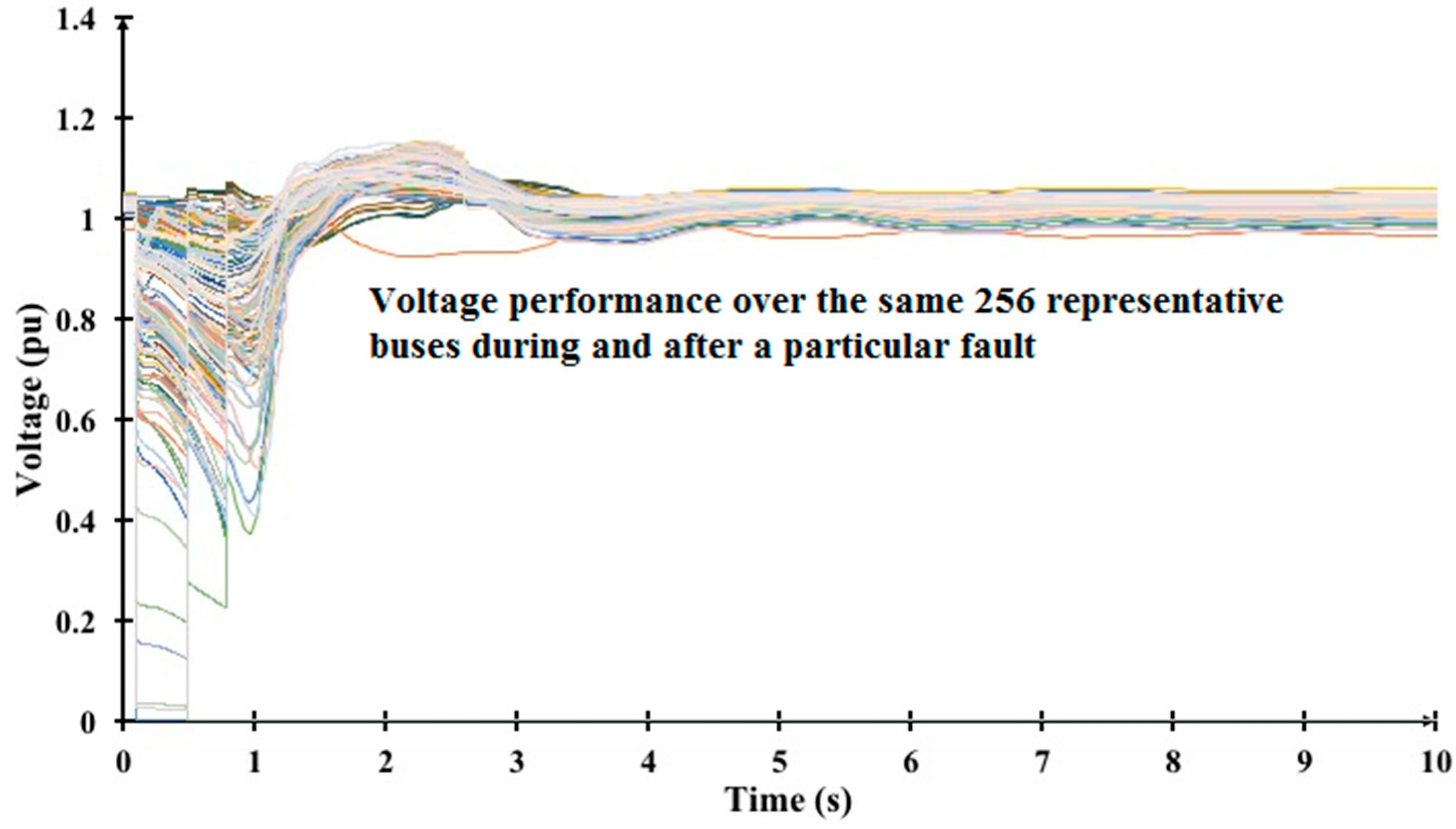

3.4. Voltage Sag Module

3.5. Stability Index R Calculation

4. IEEE 39-Bus Test System Case Study and Verification

4.1. IEEE 39-Bus Test System

4.2. Contingencies and Dispatches

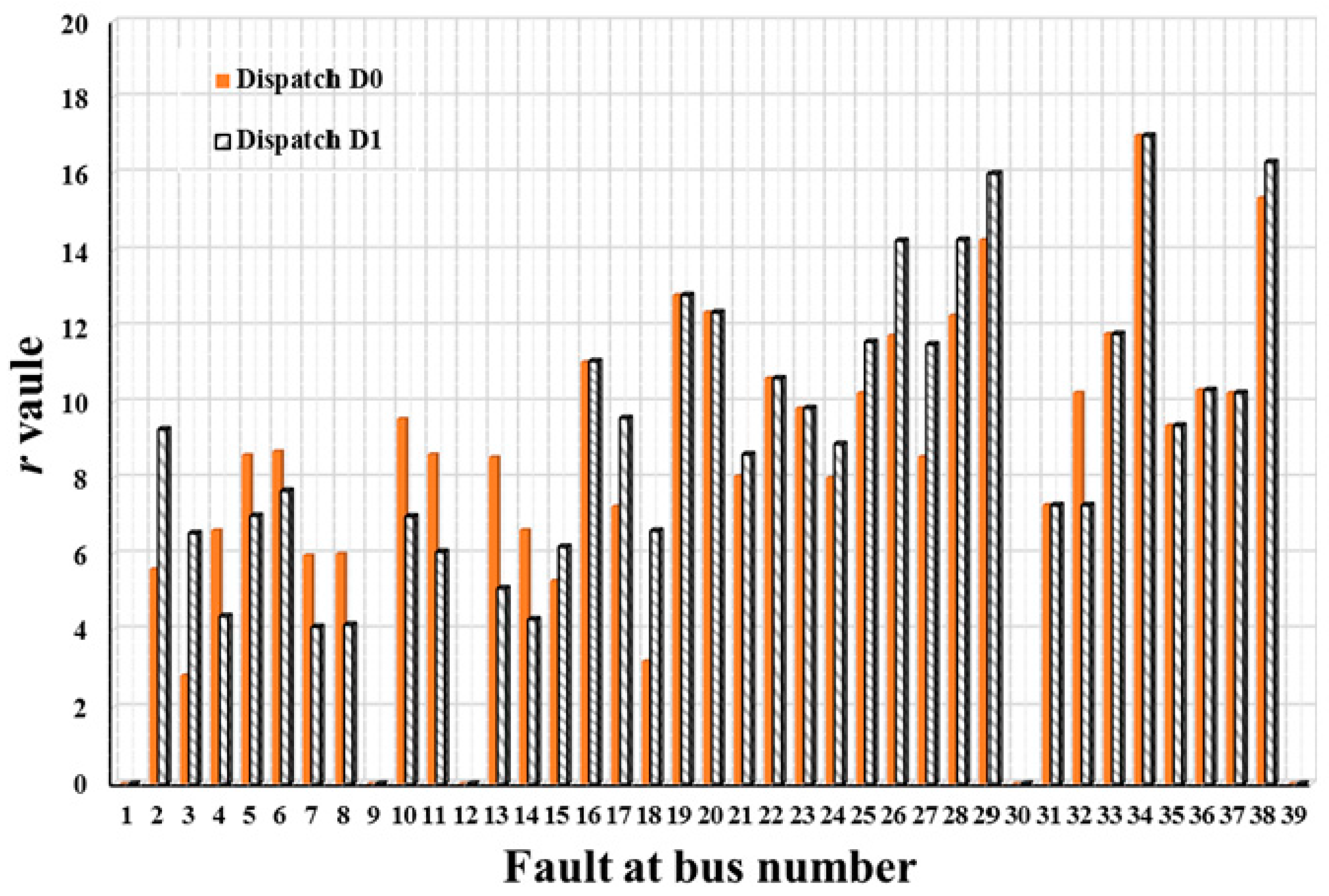

4.3. TSPQ Method Results

4.3.1. Results for Dispatch D0

4.3.2. Comparison between Dispatch D0 and D1

- Group 1 (black buses): 1, 9, 12, 19, 20, 22, 30, 31, 33, 34, 35, 36, 37 and 39.
- Group 2 (green buses): 4, 5, 6, 7, 8, 10, 11, 13, 14 and 32.
- Group 3 (red buses): 2, 3, 15, 16, 17, 18, 21, 23, 24, 25, 26, 27, 28, 29 and 38.
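When comparing dispatches group by group, the grouping can be held in a small lookup structure. The Python sketch below copies the bus lists above and checks that the three groups are disjoint and together cover all 39 buses. The names `BUS_GROUPS`, `is_partition` and `group_of` are illustrative only and do not come from the paper.

```python
from typing import Dict, Optional, Set

# Bus groups copied from the list above; colour labels follow the text.
BUS_GROUPS: Dict[str, Set[int]] = {
    "Group 1 (black)": {1, 9, 12, 19, 20, 22, 30, 31, 33, 34, 35, 36, 37, 39},
    "Group 2 (green)": {4, 5, 6, 7, 8, 10, 11, 13, 14, 32},
    "Group 3 (red)": {2, 3, 15, 16, 17, 18, 21, 23, 24, 25, 26, 27, 28, 29, 38},
}


def is_partition(groups: Dict[str, Set[int]], n_buses: int) -> bool:
    """True if the groups are pairwise disjoint and cover buses 1..n_buses."""
    seen: Set[int] = set()
    for members in groups.values():
        if seen & members:  # a bus appears in more than one group
            return False
        seen |= members
    return seen == set(range(1, n_buses + 1))


def group_of(bus: int, groups: Dict[str, Set[int]]) -> Optional[str]:
    """Return the name of the group containing `bus`, or None if unassigned."""
    for name, members in groups.items():
        if bus in members:
            return name
    return None


print(is_partition(BUS_GROUPS, 39))  # True
print(group_of(16, BUS_GROUPS))      # Group 3 (red)
```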

4.3.3. Normalized Index Presentation

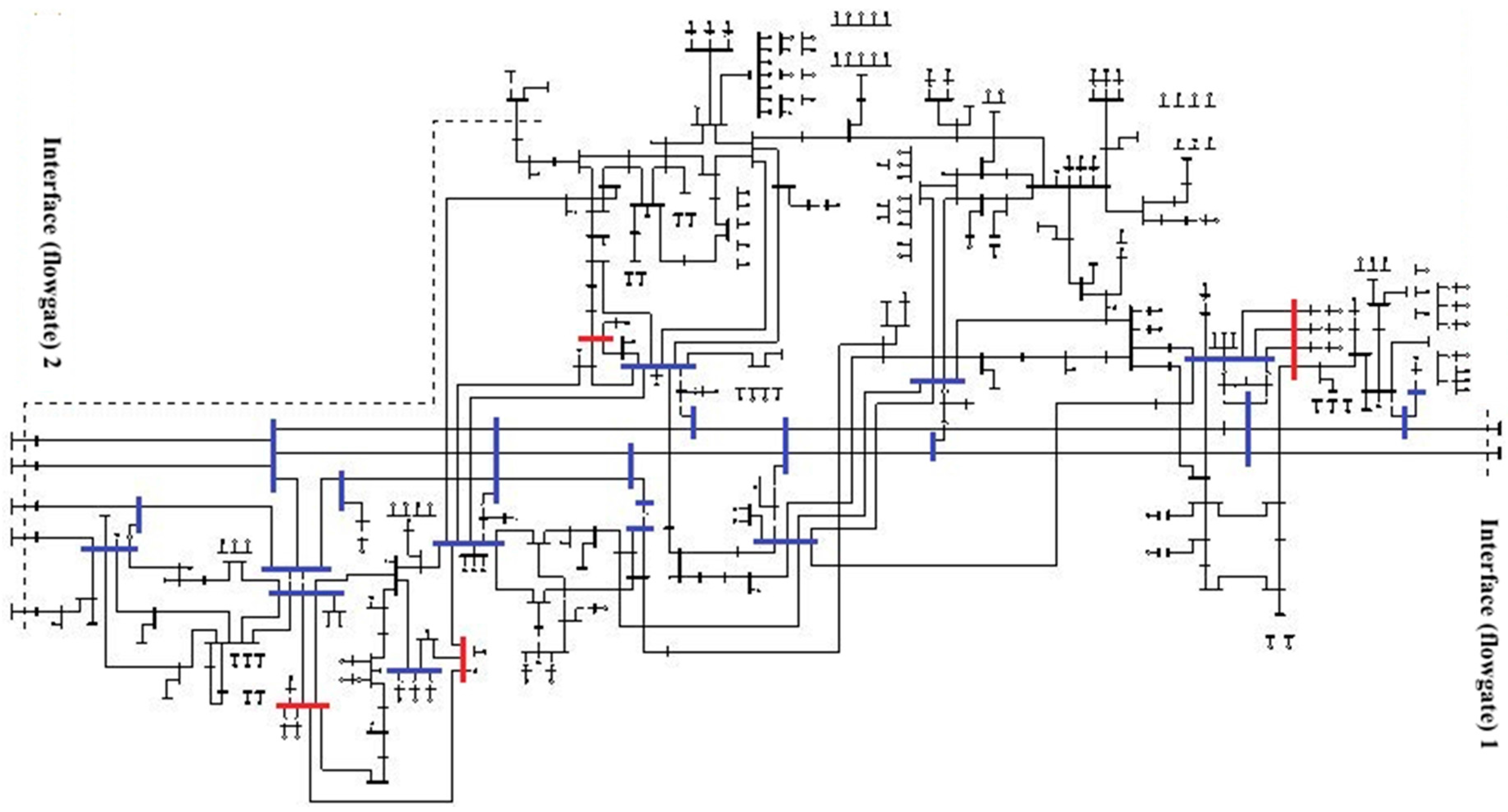

5. Implementation on a Real Power System

- (1) A total of 108 hypothetical three-phase fault contingencies, each with a pessimistic 10 s clearing time, were applied at stations rated 115 kV and above across Maine, as shown in the New England Geographic Transmission Map [22]. Contingencies that caused no criteria violation under [11,14,15,16,17] were removed from further consideration, limiting the data volume to the non-trivial faults.

- (2) The locations where the 10 s three-phase fault caused stability violations were re-tested with three-phase faults cleared at the actual, normal clearing times (4–6 cycles). The resulting simulation files were then analyzed with the TSPQ method, and the findings are reported in this paper.
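The two steps above amount to a filter-then-rerun pipeline. In the Python sketch below, `has_violation` and `run_case` are hypothetical callbacks standing in for a dynamic-simulation run (the paper does not describe a software interface), and the 10 s screening fault is converted to cycles assuming a 60 Hz system.

```python
from typing import Callable, Dict, Iterable, List, TypeVar

T = TypeVar("T")

# 10 s clearing time expressed in cycles, assuming a 60 Hz system.
SCREEN_CLEARING_CYCLES = 10 * 60


def screen_locations(
    locations: Iterable[str],
    has_violation: Callable[[str, float], bool],
) -> List[str]:
    """Step (1): apply a pessimistic 10 s fault at each location and keep
    only those whose response violates at least one stability criterion."""
    return [loc for loc in locations if has_violation(loc, SCREEN_CLEARING_CYCLES)]


def retest_locations(
    flagged: Iterable[str],
    normal_clearing_cycles: Dict[str, float],
    run_case: Callable[[str, float], T],
) -> Dict[str, T]:
    """Step (2): rerun each flagged location at its actual clearing time
    (4-6 cycles in the study) and collect results for TSPQ analysis."""
    return {loc: run_case(loc, normal_clearing_cycles[loc]) for loc in flagged}


# Toy stand-ins for a simulator (hypothetical, for illustration only).
def toy_violation(loc: str, cycles: float) -> bool:
    return loc in {"StationA", "StationC"} and cycles >= 600


def toy_run(loc: str, cycles: float) -> str:
    return f"{loc}@{cycles:g}cy"


flagged = screen_locations(["StationA", "StationB", "StationC"], toy_violation)
results = retest_locations(flagged, {"StationA": 5, "StationC": 4}, toy_run)
print(flagged)  # ['StationA', 'StationC']
print(results)  # {'StationA': 'StationA@5cy', 'StationC': 'StationC@4cy'}
```

Keeping the screening and re-test stages as separate functions mirrors the two numbered steps and lets the expensive normal-clearing runs be limited to the locations that survive screening.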

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Northeast Power Coordinating Council, Inc. Classification of Bulk Power System Elements, Document A-10; NPCC Inc.: New York, NY, USA, 2009.
2. North American Electric Reliability Corporation. Standard TPL-001-4—Transmission System Planning Performance Requirements; NERC: Atlanta, GA, USA, 2016.
3. Zhu, Y.; Brown, D. Enhancing stability simulation for NERC Reliability Standard TPL-001-4 compliance. In Proceedings of the EPEC, London, ON, Canada, 26–28 October 2015.
4. Fouad, A.A.; Kruempel, K.C.; Vittal, V.; Ghafurian, A.; Nodehi, K.; Mitsche, J.V. Transient Stability Program Output Analysis. IEEE Trans. Power Syst. 1986, 1, 2–8.
5. Maria, G.A.; Tang, C.; Kim, J. Hybrid Transient Stability Analysis [Power System]. IEEE Trans. Power Syst. 1990, 5, 384–393.
6. Tang, C.K.; Graham, C.E.; El-Kady, M.; Alden, R.T.H. Transient Stability Index from Conventional Time Domain Simulation. IEEE Trans. Power Syst. 1994, 9, 1524–1530.
7. Ma, F.; Luo, X.; Litvinov, E. Cloud Computing for Power System Simulations at ISO New England—Experiences and Challenges. IEEE Trans. Smart Grid 2016, 7, 2596–2603.
8. Smith, S.; Woodward, C.; Min, L.; Jing, C.; Rosso, A.D. On-line transient stability analysis using high performance computing. In Proceedings of the 2014 IEEE PES Innovative Smart Grid Technologies Conference (ISGT), Washington, DC, USA, 19–22 February 2014.
9. Meng, K.; Dong, Z.Y.; Wong, K.P. Enhancing the computing efficiency of power system dynamic analysis with PSS_E. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, San Antonio, TX, USA, 11–14 October 2009.
10. Chen, S.; Onwuachumba, A.; Musavi, M.; Lerley, P. Transient Stability Performance Quantification Method for Power System Applications. In Proceedings of the Power Systems Conference (PSC), Clemson, SC, USA, 8–11 March 2016.
11. ISO New England Inc. Transmission Planning Technical Guide Appendix F; ISO New England: Holyoke, MA, USA, 2013.
12. Fouad, A.A.; Vittal, V. Power System Response to a Large Disturbance: Energy Associated with System Separation. IEEE Trans. Power Appar. Syst. 1983, 102, 3534–3540.
13. IEEE/CIGRE Joint Task Force on Stability Terms and Definitions. Definition and Classification of Power System Stability. IEEE Trans. Power Syst. 2004, 19, 1387–1401.
14. Siemens Power Technologies International. Stability Study Report for Q371 Wind Project Interconnecting to Line L-163 near Jackman 115 kV Substation in New Hampshire Prepared for ISO-NE, 30 November 2012. Available online: https://www.nhsec.nh.gov/projects/2015-02/application/documents/10-02-15-sec-2015-02-appendix-6-final-redacted-non-ceii-r110-11-final-stability-study-rpt-fo.pdf (accessed on 5 July 2017).
15. ISO New England Stability Task Force. Damping Criterion Basic Document; ISO New England: Holyoke, MA, USA, 2009.
16. Reliability Standards for the New England Power Pool; NEPOOL Planning Procedure No. 3; ISO-NE: Holyoke, MA, USA, 2004.
17. ISO New England Inc. Transmission Planning Technical Guide Appendix E, Dynamic Stability Simulation Voltage Sag Guideline; System Planning; ISO New England Inc.: Holyoke, MA, USA, 2013.
18. ISO New England Inc. ISO New England Planning Procedure No. 5–6, Interconnection Planning Procedure for Generation and Elective Transmission Upgrades; ISO New England: Holyoke, MA, USA, 2016.
19. ISO New England Inc. Transmission Planning Technical Guide, 15 January 2016. Available online: https://www.iso-ne.com/static-assets/documents/2016/01/planning_technical_guide_1_15_16.pdf (accessed on 5 July 2017).
20. Pai, M.A. Energy Function Analysis for Power System Stability; Springer: New York, NY, USA, 1989.
21. Athay, T.; Podmore, R.; Virmani, S. A Practical Method for the Direct Analysis of Transient Stability. IEEE Trans. Power Appar. Syst. 1979, 98, 573–584.
22. ISO New England Inc. New England Geographic Transmission Map through 2024, 2014. Available online: https://www.iso-ne.com/static-assets/documents/2015/05/2015_celt_appendix_f.pdf (accessed on 4 February 2017).

| Generator | D0 (MW) | D1 (MW) | D2 (MW) | D3 (MW) | D4 (MW) | D5 (MW) |
|---|---|---|---|---|---|---|
| G1 | 998 | 999.5 | 994 | 994.3 | 996.8 | 997.2 |
| G2 | 573 | 573 | 573 | 573 | 673 | 673 |
| G3 | 650 | 550 | 450 | 350 | 750 | 812.5 |
| G4 | 632 | 632 | 632 | 632 | 732 | 790 |
| G5 | 508 | 508 | 508 | 508 | 608 | 600 |
| G6 | 650 | 650 | 650 | 650 | 750 | 812.5 |
| G7 | 560 | 560 | 560 | 560 | 660 | 845 |
| G8 | 540 | 540 | 640 | 640 | 640 | 1000 |
| G9 | 830 | 933 | 933 | 933 | 930 | 1000 |
| G10 | 250 | 250 | 250 | 350 | 350 | 500 |
| Total MW in each dispatch | 6191 | 6195.5 | 6190 | 6190.3 | 7089.8 | 8030.2 |
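The totals row can be reproduced by summing each dispatch column. A minimal Python check, with the table values transcribed by hand; the names `DISPATCHES`, `REPORTED_TOTALS` and `total_mw` are illustrative, not from the paper.

```python
# Generator outputs (MW) for G1..G10 in each dispatch, copied from the table.
DISPATCHES = {
    "D0": [998, 573, 650, 632, 508, 650, 560, 540, 830, 250],
    "D1": [999.5, 573, 550, 632, 508, 650, 560, 540, 933, 250],
    "D2": [994, 573, 450, 632, 508, 650, 560, 640, 933, 250],
    "D3": [994.3, 573, 350, 632, 508, 650, 560, 640, 933, 350],
    "D4": [996.8, 673, 750, 732, 608, 750, 660, 640, 930, 350],
    "D5": [997.2, 673, 812.5, 790, 600, 812.5, 845, 1000, 1000, 500],
}

# Totals as reported in the last row of the table.
REPORTED_TOTALS = {
    "D0": 6191, "D1": 6195.5, "D2": 6190,
    "D3": 6190.3, "D4": 7089.8, "D5": 8030.2,
}


def total_mw(outputs):
    """Sum generator outputs, rounded to 0.1 MW to absorb float error."""
    return round(sum(outputs), 1)


totals = {name: total_mw(mw) for name, mw in DISPATCHES.items()}
mismatches = {
    name: (totals[name], REPORTED_TOTALS[name])
    for name in totals
    if abs(totals[name] - REPORTED_TOTALS[name]) > 1e-9
}
print(mismatches)  # {}
```

All six column sums agree with the reported totals.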

| Fault Location | Clearing Time (Cycles) | SS (f1) | LOS (f2) | Damping (f3) | Vsag (f4) |
|---|---|---|---|---|---|
| Bus2 | 1 | 0 | 0 | 0 | 0 |
| Bus2 | 2 | 0 | 0 | 0 | 0 |
| Bus2 | 3 | 0 | 0 | 0 | 0 |
| Bus2 | 4 | 0 | 0 | 0 | 0 |
| Bus2 | 5 | 0 | 0 | 0 | 0 |
| Bus2 | 6 | 0 | 0 | 0 | 0 |
| Bus2 | 7 | 0 | 0 | 0 | 0 |
| Bus2 | 8 | 0 | 0 | 0 | 0 |
| Bus2 | 9 | 0 | 0 | 0 | 0 |
| Bus2 | 10 | 0 | 0 | 0 | 0 |
| Bus2 | 11 | 0 | 0 | 0 | 0 |
| Bus2 | 12 | 0 | 0 | 0 | 0 |
| Bus2 | 13 | 0 | 0 | 0 | 0 |
| Bus2 | 14 | 0 | 0 | 0 | 0.266 |
| Bus2 | 15 | 0 | 0 | 0 | 0.344 |
| Bus2 | 16 | 1 | N/A | N/A | N/A |
| Bus2 | 17 | 1 | N/A | N/A | N/A |
| Bus2 | 18 | 1 | N/A | N/A | N/A |
| Bus2 | 19 | 1 | N/A | N/A | N/A |
| Bus2 | 20 | 1 | N/A | N/A | N/A |
| rBus2 = 5.61 | | | | | |
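The tabulated bus index is consistent with a plain sum of the module outputs over the clearing-time sweep: for Bus2, five separation cases contribute 1 each and the two voltage-sag entries add 0.266 + 0.344, giving 5 + 0.61 = 5.61 (the D0/D1 comparison table checks out the same way). The Python sketch below assumes exactly that rule, with N/A entries contributing nothing. The rule is inferred from the numbers, not quoted from the paper, and Section 3.5 may define the index with weighting not visible in this excerpt; `bus_index` is a hypothetical name.

```python
from typing import Optional, Sequence, Tuple

# One row per clearing time: (f1 SS, f2 LOS, f3 damping, f4 Vsag).
# None stands for an N/A entry, i.e. a module that was not evaluated
# because system separation (f1 = 1) was already detected.
Row = Tuple[float, Optional[float], Optional[float], Optional[float]]


def bus_index(rows: Sequence[Row]) -> float:
    """Sum every module output over the clearing-time sweep; N/A counts as 0.

    Assumption: this plain sum is inferred from the tabulated values and
    may differ from the exact formula of Section 3.5.
    """
    return sum(f for row in rows for f in row if f is not None)


# Fault at Bus2, clearing times 1..20 cycles, transcribed from the table.
bus2_rows = (
    [(0.0, 0.0, 0.0, 0.0)] * 13                          # 1-13 cycles: no violation
    + [(0.0, 0.0, 0.0, 0.266), (0.0, 0.0, 0.0, 0.344)]  # 14-15 cycles: voltage sag
    + [(1.0, None, None, None)] * 5                      # 16-20 cycles: separation
)

print(round(bus_index(bus2_rows), 3))  # 5.61
```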

| Fault Location | Clearing Time (Cycles) | D0 SS (f1) | D0 LOS (f2) | D0 Damping (f3) | D0 Vsag (f4) | D1 SS (f1) | D1 LOS (f2) | D1 Damping (f3) | D1 Vsag (f4) |
|---|---|---|---|---|---|---|---|---|---|
| Bus16 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Bus16 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Bus16 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Bus16 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Bus16 | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Bus16 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Bus16 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Bus16 | 8 | 0 | 0 | 0 | 0.226 | 0 | 0 | 0 | 0.231 |
| Bus16 | 9 | 0 | 0 | 0 | 0.281 | 0 | 0 | 0 | 0.290 |
| Bus16 | 10 | 0 | 0 | 0 | 0.375 | 0 | 0 | 0 | 0.402 |
| Bus16 | 11 | 1 | N/A | N/A | N/A | 1 | N/A | N/A | N/A |
| Bus16 | 12 | 1 | N/A | N/A | N/A | 1 | N/A | N/A | N/A |
| Bus16 | 13 | 1 | N/A | N/A | N/A | 1 | N/A | N/A | N/A |
| Bus16 | 14 | 1 | N/A | N/A | N/A | 1 | N/A | N/A | N/A |
| Bus16 | 15 | 1 | N/A | N/A | N/A | 1 | N/A | N/A | N/A |
| Bus16 | 16 | 1 | N/A | N/A | N/A | 1 | N/A | N/A | N/A |
| Bus16 | 17 | 1 | N/A | N/A | N/A | 1 | N/A | N/A | N/A |
| Bus16 | 18 | 1 | N/A | N/A | N/A | 1 | N/A | N/A | N/A |
| Bus16 | 19 | 1 | N/A | N/A | N/A | 1 | N/A | N/A | N/A |
| Bus16 | 20 | 1 | N/A | N/A | N/A | 1 | N/A | N/A | N/A |
| | | rBus16 = 10.882 | | | | rBus16 = 10.923 | | | |

| Index | D0 | D1 | D2 | D3 | D4 | D5 |
|---|---|---|---|---|---|---|
| R | 315.404 | 319.937 | 308.417 | 295.182 | 477.585 | 780 |
| Rn | 40.44% | 41.02% | 39.54% | 37.84% | 61.23% | 100% |

| Index | SLL1 | SLL2 | SLL3 | SP1 |
|---|---|---|---|---|
| R | 22 | 26 | 21 | 21 |
| Rn | 44.90% | 53.06% | 42.86% | 42.86% |
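Both normalized rows are reproduced by dividing R by a fixed reference value and expressing the result as a percentage: 780 for the IEEE 39-bus dispatches (the same value as R for D5) and 49 for the real-system cases. These reference values are inferred from the tabulated percentages and are not stated in this excerpt. A minimal Python sketch, where `normalized_index` is an illustrative name:

```python
def normalized_index(r: float, r_ref: float) -> float:
    """Express R as a percentage of a reference value, rounded to 0.01 %.

    Assumption: r_ref is the reference implied by the tables
    (780 for the IEEE 39-bus study, 49 for the real system).
    """
    return round(100.0 * r / r_ref, 2)


IEEE39_R = {"D0": 315.404, "D1": 319.937, "D2": 308.417,
            "D3": 295.182, "D4": 477.585, "D5": 780.0}
REAL_R = {"SLL1": 22, "SLL2": 26, "SLL3": 21, "SP1": 21}

ieee39_rn = {k: normalized_index(v, 780.0) for k, v in IEEE39_R.items()}
real_rn = {k: normalized_index(v, 49.0) for k, v in REAL_R.items()}
print(ieee39_rn)  # {'D0': 40.44, 'D1': 41.02, 'D2': 39.54, 'D3': 37.84, 'D4': 61.23, 'D5': 100.0}
print(real_rn)    # {'SLL1': 44.9, 'SLL2': 53.06, 'SLL3': 42.86, 'SP1': 42.86}
```

Normalizing against a fixed reference keeps Rn comparable across dispatches of the same system, but values from different systems are only comparable if their references are defined the same way.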

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, S.; Onwuachumba, A.; Musavi, M.; Lerley, P. A Quantification Index for Power Systems Transient Stability. Energies 2017, 10, 984. https://doi.org/10.3390/en10070984
