1. Introduction

Electric load prediction is one of the major tasks of power management departments, which carry out electric power dispatching, usage and planning. Improving the accuracy of load forecasting helps in planning power management and in arranging power grid operations. It also helps to reduce power generation costs and to establish power construction plans. Therefore, electric load forecasting has become an important part of the modernization of power system management.

In recent years, a large number of time series forecasting techniques have been used in electric load forecasting. Among traditional predictive methods, the linear regression model and the grey model have been widely used. Goia et al. proposed a linear regression model using heating demand data to forecast the short-term peak load in a district-heating system [1]. Zhou et al. presented a trigonometric grey prediction approach by combining the traditional GM (1, 1) with the trigonometric residual modification technique for forecasting electricity demand [2]. Akay et al. proposed GPRM to predict Turkey’s total and industrial electricity consumption [3]. Meanwhile, artificial neural networks and support vector machines have a variety of applications in electric load forecasting. Chang et al. proposed the so-called EEuNN framework by adopting a weighted factor to calculate the importance of each factor among the different rules to predict monthly electricity demand in Taiwan [4]. Kavaklioglu used SVR to model and predict Turkey’s electricity consumption [5]. Wang et al. presented a combined ε-SVR model considering seasonal proportions based on development trends from history data to forecast the short-term electricity demand [6]. Other predictive techniques have also been proposed to deal with the electric load forecasting problem. Kucukali et al. attempted to forecast Turkey’s short-term gross annual electric demand by applying fuzzy logic methodology based on the economic, political and electricity market conditions of the country [7]. Dash et al. presented the development of a hybrid neural network to model a fuzzy expert system for time series forecasting of electric loads [8]. Taylor showed that for predictions up to a day ahead the triple seasonal methods outperform the double seasonal methods in predicting electricity demand [9].

However, no matter which technique is used to predict electric load, it is difficult to achieve high precision with only a single model because of the various shortcomings of each model [10]. Therefore, hybrid models are built with different combinations of data mining technology to improve the prediction accuracy in load forecasting research. Wu et al. proposed a Particle Swarm Optimization-Support Vector Machine (PSO-SVM) model based on cluster analysis techniques and data accumulation pretreatment in short-term electric load forecasting [11]. Chen et al. proposed a new electric load forecasting model by hybridizing FTS and GHSA with LSSVM [12]. Huang presented an SVR-based load forecasting model which hybridized the chaotic mapping function and QPSO with SVM [13]. Zhang et al. established a novel model for electric load forecasting by combining SSA, SVM and CS [14]. Hence, it is apparent that hybrid models combining different data mining techniques can improve the prediction precision in load forecasting research.

In this paper we also propose a hybrid model to predict electric loads. In non-parametric model research, KNN regression has been widely used in time series prediction. KNN regression is one of the historical approximation methods in machine learning. The main idea of the algorithm is to obtain the output by calculating the degree of similarity between the independent variables [15]. Poloczek proposed the KNN regression as a geo-imputation preprocessing step for pattern-label-based short-term wind prediction of spatio-temporal wind data sets [16]. Ban proposed a new multivariate regression approach for financial time series based on knowledge shared from referential nearest neighbors [17]. Hu proposed a conjunction model named EMD-KNN which was based on an EMD and KNN regressive model for forecasting annual average rainfall [18]. Li established a novel hybrid BAMO with KNN to predict apoptosis protein sequences using statistical factors and dipeptide composition [19]. Wang selected k-nearest neighbors to correct the commonly used precipitation data on the Qinghai-Tibetan plateau by establishing the relationship between daily precipitation and environmental as well as other meteorological factors [20].

Meanwhile, in the neural network research field, Huang established an innovative algorithm called extreme learning machine (ELM) which was based on a traditional single-hidden-layer feed-forward neural network [21]. Generally speaking, ELM not only reduces the training time of neural networks but also has a great predictive performance. ELM is also faster than traditional machine learning algorithms. Ma built an adaptive prediction model based on ELM to predict traffic flows [22]. Masri targeted predicting the functional properties of soil samples by establishing a hybrid model based on a Savitzky-Golay filter for preprocessing and ELM for obtaining the non-linear relationship [23]. Liao used ELM and economic theory to study stock price forecasting and the results showed that ELM had the highest prediction accuracy [24]. Zhang researched the short-term prediction of wind by a proposed model based on wavelet decomposition and ELM [25]. Shamshirband predicted the horizontal global solar radiation by using an extreme learning machine method, and the comparative results clearly showed that ELM can provide reliable predictions with better precision compared to the traditional techniques [26].

In the field of signal processing, compared to traditional methods such as EMD and EEMD, the wavelet transform (WT) has a great ability to obtain the characteristics of data from the time and frequency domains [27]. Hence we can eliminate the noise in signals and extract the main information using WT. Wang targeted forecasting future stock prices by utilizing wavelet analysis to denoise the time series and applying a neural network to obtain the nonlinear relationships [28]. Wang proposed a novel approach for short-term load forecasting by applying wavelet denoising in a combined model that is a hybrid of SARIMA and a neural network [29]. Qin predicted chaotic time series based on wavelet denoising, phase space reconstruction and LSSVM, and the proposed model had better performance than the traditional models [30]. Abbaszadeh proposed a new hybrid model based on a wavelet denoising technique to denoise hydrological time series and applied ANN to acquire the best prediction of hydrological data [31].

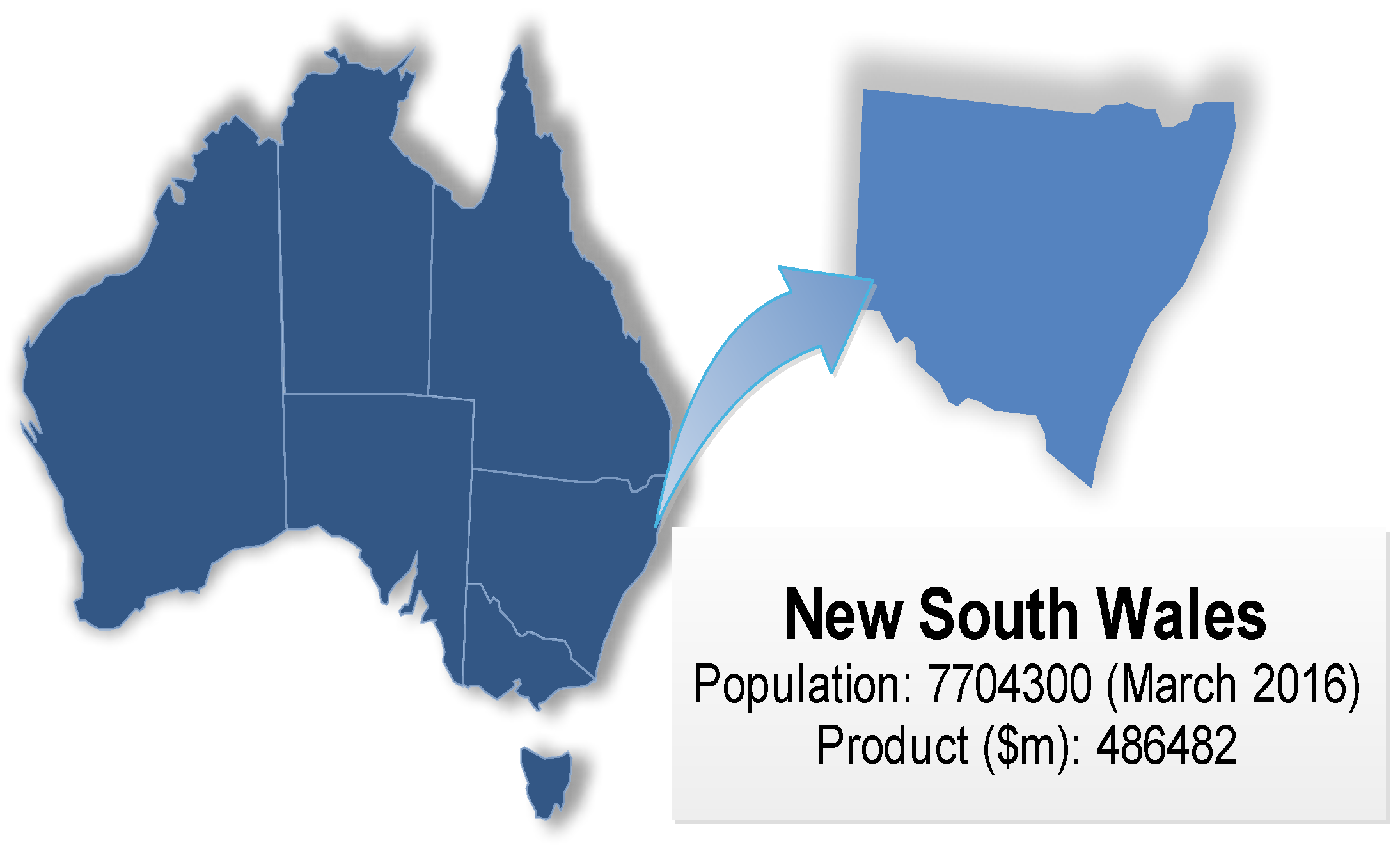

New South Wales (abbreviated as NSW) in Australia is an active economic area in the Asia-Pacific region with a large population. Its economic development and people’s lives are inextricably tied to electricity, so the electricity department is concerned with ensuring sufficient power production. Accurate electric load forecasting is particularly important here, as surplus electricity production leads to environmental pollution and resource wastage. Effective electric load forecasting can thus be a useful indicator for decision-makers, and early warning of an increase in electricity demand helps to ensure supply-demand balance. Based on these facts, we propose a new hybrid model based on the wavelet denoising technique, k-nearest neighbor regression and extreme learning machine to forecast the short-term electricity load in New South Wales.

The rest of this paper consists of three parts: (1) the first part introduces the wavelet denoising technique, k-nearest neighbor regression (KNN), the extreme learning machine (ELM) and the construction of the proposed hybrid model; (2) the second part presents the data set of the experiment, the evaluation criteria of the models, the predicted values of the electric load and the analysis of the comparative models; (3) the third part summarizes the proposed ELM-WA-KNN hybrid model (EWKM) and the predictive performance it achieves for electric loads.

2. Materials and Methods

The proposed hybrid model presented in this paper for short-term electric load forecasting mainly consists of three basic data mining technologies: wavelet denoising technique, k-nearest neighbor regression (KNN) and extreme learning machine (ELM). Detailed descriptions are given below.

2.1. Wavelet Denoising Technique

The wavelet transform proposed by Mallat has become one of the strongest mathematical tools for providing a time-frequency representation of an analyzed signal in the time series domain. Detailed coefficients are produced by high-pass filters and approximation series are produced by low-pass filters [32]. With the development of wavelet analysis theory, the dyadic wavelet transform (DWT) of a discrete time series $f(t)$ takes the standard form shown in Equation (1):

$$W(m, n) = 2^{-m/2} \sum_{t=0}^{N-1} f(t)\, \psi\!\left(\frac{t - n\,2^{m}}{2^{m}}\right) \qquad (1)$$

where $m$ and $n$ are the scale and location of the discrete wavelet, respectively, $N$, the length of the series, is an integer power of 2, and $\psi$ stands for the wavelet function. Through this transform, the DWT can eliminate the white noise of the time series and extract its useful information on different scales.
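The denoising idea can be illustrated with a minimal sketch. It assumes a one-level Haar wavelet and removes the noise by zeroing all detail coefficients; the source does not state which wavelet or thresholding rule was used, and the function names `haar_dwt`, `haar_idwt` and `denoise` are hypothetical.

```python
import math


def haar_dwt(signal):
    """One-level Haar DWT: return (approximation, detail) coefficient lists."""
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    s = math.sqrt(2.0)
    # Low-pass filter output (approximation) and high-pass output (detail).
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Invert the one-level Haar DWT."""
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) / s)
        out.append((a - d) / s)
    return out


def denoise(signal):
    """Keep only the low-frequency part by zeroing the detail coefficients."""
    approx, detail = haar_dwt(signal)
    return haar_idwt(approx, [0.0] * len(detail))
```

With this choice of wavelet, each pair of neighbouring samples is replaced by its mean. A practical implementation would more likely use a wavelet library and a softer thresholding rule.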

2.2. Extreme Learning Machine (ELM)

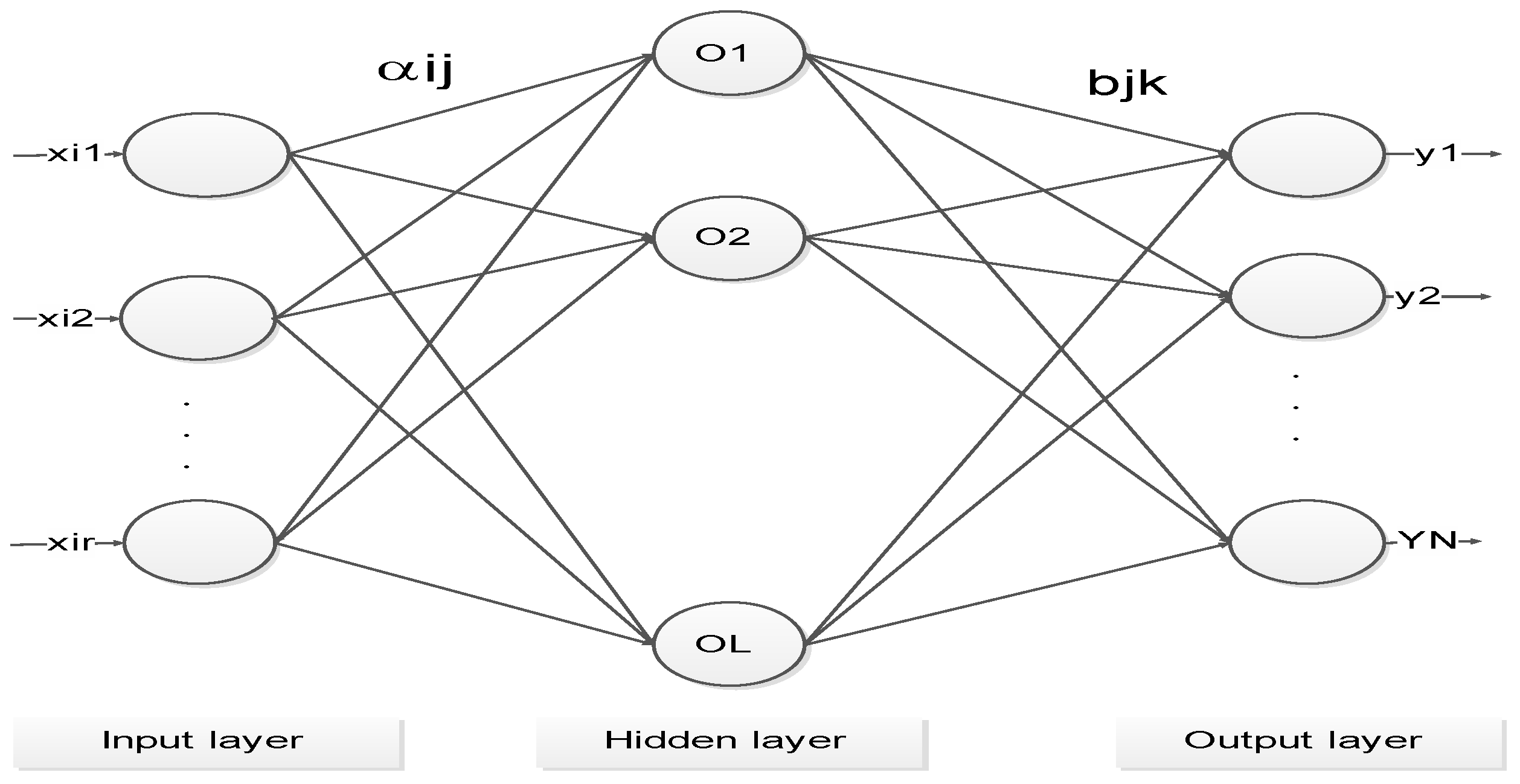

Extreme learning machine (ELM) is a type of single-hidden-layer feed-forward neural network (SLFN). Unlike a conventional SLFN, ELM does not iteratively adjust the hidden-layer parameters: the thresholds of the hidden layer and the weights between the input layer and hidden layer are assigned randomly and kept fixed, and only the output weights are solved for. The model of the ELM is shown in Figure 1.

Given a data set $\{(x_j, t_j)\}_{j=1}^{N}$, the output of the ELM with $L$ hidden nodes is:

$$f_L(x_j) = \sum_{i=1}^{L} \beta_i\, g(w_i, b_i, x_j)$$

where $j = 1, \ldots, N$; $b_i$ stands for the learning parameters of the hidden layer and $w_i$ represents the weights between the input layer and hidden layer. Meanwhile, $\beta_i$ is the weight between the hidden layer and output layer and $g$ is the activation function of the hidden layer. Specifically, the radial basis function is selected as the activation function of the hidden nodes in the experiment. So we can obtain the formula of the ELM in matrix form as follows:

$$H\beta = T$$

where the weight matrix between the hidden layer and output layer is $\beta$ and $T$ stands for the ELM output. It is apparent that $H$, with entries $H_{ji} = g(w_i, b_i, x_j)$, represents the result of the hidden layer. Here, two important ELM theorems by Liang must be mentioned [33]:

Theorem 1. If an SLFN with $N$ additive hidden nodes and with an activation function $g(x)$ which is infinitely differentiable in any interval of $\mathbb{R}$ is given, then, for $N$ arbitrary distinct input vectors and hidden-node parameters randomly produced based upon any continuous probability distribution, the output matrix $H$ of the hidden layer is invertible and $\|H\beta - T\| = 0$ with probability one.

Theorem 2. If any small positive value $\varepsilon > 0$ and an activation function $g(x)$: $\mathbb{R} \to \mathbb{R}$ which is infinitely differentiable in any interval are given, then there exists $L \le N$ such that, for $N$ arbitrary distinct input vectors and hidden-node parameters randomly produced based upon any continuous probability distribution, $\|H\beta - T\| < \varepsilon$ with probability one.

Based on Theorems 1 and 2, we can solve the equation by the least squares method and the result is:

$$\hat{\beta} = H^{\dagger} T$$

where $H^{\dagger}$ is the Moore-Penrose pseudo-inverse of the hidden-layer output matrix $H$.
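The training procedure described in this section can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the authors' implementation: the class name `ELM`, the Gaussian form `exp(-b * ||x - w||^2)` of the radial basis node, the uniform sampling ranges and the default `n_hidden=20` are all assumptions. Inputs are assumed to be scaled to a small range so that the Gaussian nodes do not vanish.

```python
import numpy as np


class ELM:
    """Single-hidden-layer network with random RBF hidden nodes (a sketch)."""

    def __init__(self, n_hidden=20, seed=0):
        self.n_hidden = n_hidden
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X):
        # Hidden-layer output matrix H, shape (n_samples, n_hidden).
        # Assumed Gaussian RBF node i: exp(-b_i * ||x - w_i||^2).
        sq_dist = ((X[:, None, :] - self.w[None, :, :]) ** 2).sum(axis=2)
        return np.exp(-self.b * sq_dist)

    def fit(self, X, T):
        X = np.asarray(X, dtype=float)
        T = np.asarray(T, dtype=float)
        # Centres w_i and widths b_i are drawn once at random and never tuned.
        self.w = self.rng.uniform(X.min(), X.max(), size=(self.n_hidden, X.shape[1]))
        self.b = self.rng.uniform(0.1, 1.0, size=self.n_hidden)
        H = self._hidden(X)
        # Output weights: least-squares solution via the Moore-Penrose pseudo-inverse.
        self.beta = np.linalg.pinv(H) @ T
        return self

    def predict(self, X):
        return self._hidden(np.asarray(X, dtype=float)) @ self.beta
```

Because only `beta` is solved for, training reduces to one pseudo-inverse computation, which is the source of the speed advantage the text attributes to ELM.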

2.3. k-Nearest Neighbor Regression (KNN)

KNN is a non-parametric technique which derives from pattern recognition studies [34]. With the development of the study of nonlinear dynamics, many researchers have frequently utilized KNN to predict time series because the algorithm captures the nonlinear properties of a time series well. The main idea of KNN is that the similarity (neighborhood) between the independent variables of the predictor and the independent variables in the historical observations is calculated to acquire the best estimators for the predictor [15].

KNN applies a metric on the predictors to seek the set of k past nearest neighbors in the historical data set for the current condition. To deal with the regression problem, Lall and Sharma proposed the kernel function, and the result of the KNN regression is obtained as follows [35]:

$$\hat{y}_i = \sum_{j=1}^{k} K(j)\, y_j$$

where $\hat{y}_i$ represents the value of the prediction, $K(j)$ is the kernel weight and $y_j$ stands for the magnitude of nearest neighbor $j$ in the above formula. Note that $j$ is the order of the nearest neighbors based on the distance from the current condition $i$ ($j = 1$ to $k$). The similarity between the predictor and a historical observation depends on the distance given by the following formula:

$$d_{ij} = \sqrt{\sum_{l=1}^{m} \left(x_{il} - x_{jl}\right)^{2}}$$

where $x_{il}$ is the $l$th independent variable of $x_i$; $x_{jl}$ stands for the $l$th independent variable of $x_j$ and $m$ represents the number of independent variables in the formula.
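A small sketch may help make the neighbor search and the weighted average concrete. It assumes the Euclidean distance and the rank-based weights K(j) = (1/j) / Σ(1/r) commonly associated with Lall and Sharma's kernel; the source does not show the form of the kernel, so both are assumptions, and the function name `knn_regress` is hypothetical.

```python
import math


def knn_regress(query, hist_X, hist_y, k=3):
    """Predict the target of `query` from its k nearest historical neighbours."""
    if not 1 <= k <= len(hist_X):
        raise ValueError("k must be between 1 and the number of historical points")
    # Euclidean distance from the query to every historical predictor vector.
    dists = [math.dist(query, x) for x in hist_X]
    # Indices of the k closest points, nearest first (rank j = 1..k).
    nearest = sorted(range(len(hist_X)), key=dists.__getitem__)[:k]
    # Assumed rank-based kernel weights: K(j) = (1/j) / sum_{r=1..k} (1/r).
    norm = sum(1.0 / r for r in range(1, k + 1))
    weights = [(1.0 / j) / norm for j in range(1, k + 1)]
    # Linear combination of the neighbours' targets.
    return sum(w * hist_y[i] for w, i in zip(weights, nearest))
```

The prediction here is a fixed linear combination of the neighbors' targets, which is the kind of linear kernel the paper later replaces with an ELM.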

Establishing the kernel function of KNN is therefore the main concern in predicting the time series. Many researchers have tried different methods to build better-fitting kernel functions for regression. However, almost all of these kernel functions are built on a linear relationship and fail to capture nonlinear properties. In this paper, the kernel function is therefore obtained by ELM, a popular data mining technique, in order to capture the nonlinear properties.

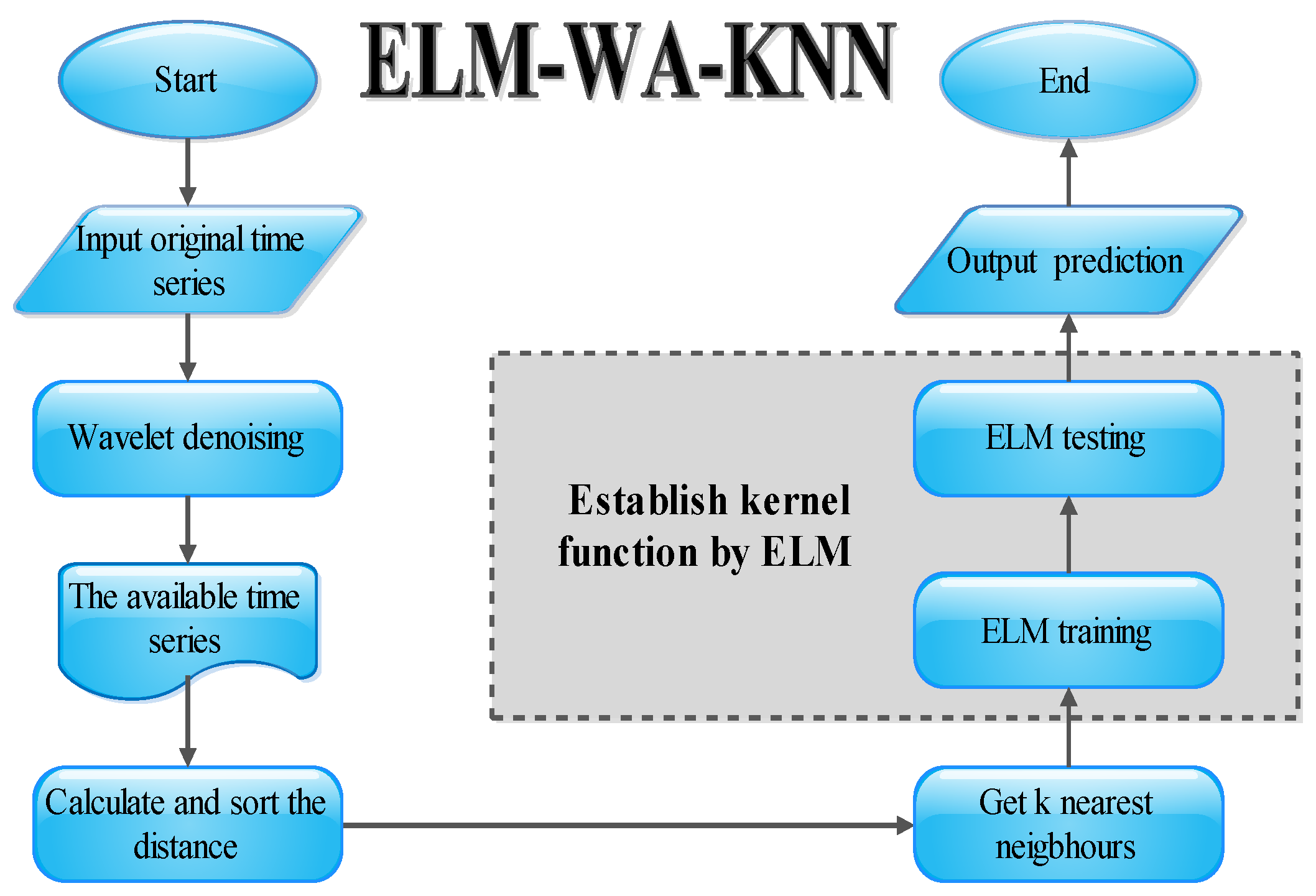

2.4. The Proposed Hybrid Model

In this section, the hybrid model of ELM-WA-KNN is proposed as follows:

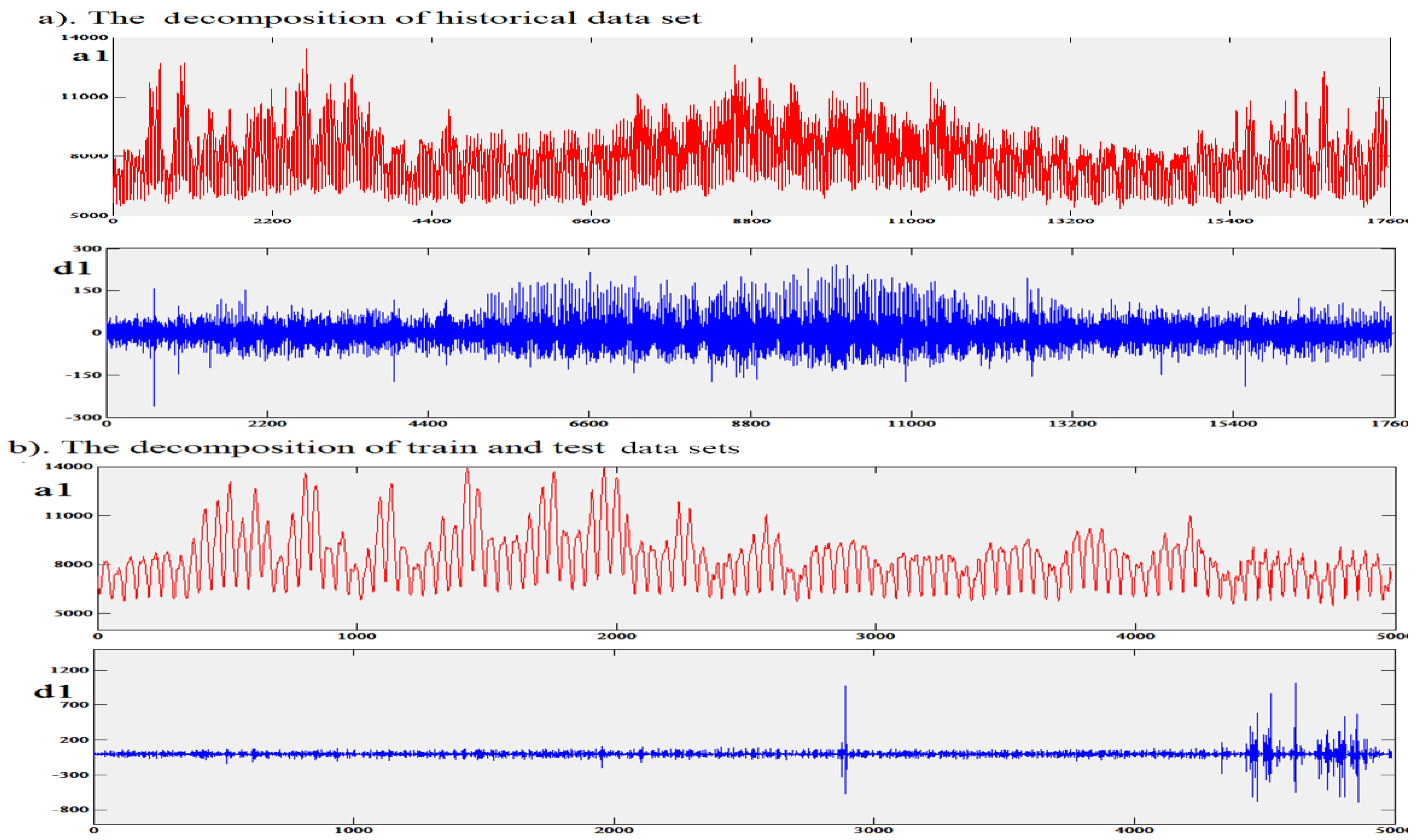

Suppose the historical data set is $D_{H}$, the training data set is $D_{Tr}$ and the testing data set is $D_{Te}$.

Firstly, the electric load time series $s(t)$, including the historical data set, the training data set and the testing data set, is decomposed by DWT. The one low-frequency signal $a(t)$ and the one high-frequency signal $d(t)$ are regarded as the available time series and white noise, respectively. This can be expressed as follows:

$$s(t) = a(t) + d(t)$$

Secondly, we select the last six electric load data as the input variables and the following one as the output variable of the hybrid model. The formula of the system is as follows:

$$a(t) = F\big(a(t-6), a(t-5), \ldots, a(t-1)\big)$$

Thirdly, the distance between a training (or testing) target and each of the historical targets is computed. Suppose $y$ stands for any one of the training (or testing) targets and $(x_{1}, \ldots, x_{6})$ are the corresponding characteristic indicators of the training (or testing) data. In addition, $y^{h}_{i}$ is the $i$th historical data target and $(x^{h}_{i1}, \ldots, x^{h}_{i6})$ are the corresponding characteristic indicators of the historical data. In this paper, the distance is calculated by the Euclidean distance:

$$d_{i} = \sqrt{\sum_{l=1}^{6} \left(x_{l} - x^{h}_{il}\right)^{2}}$$

Furthermore, each historical target $y^{h}_{i}$ and the distance corresponding to it can be expressed as the pair:

$$\left(y^{h}_{i},\, d_{i}\right), \quad i = 1, \ldots, n$$

where $n$ is the number of historical targets. Next, the distances are listed in ascending order and the first k historical targets are obtained as $y^{h}_{(1)}, \ldots, y^{h}_{(k)}$.

Then, the kernel function can be obtained by ELM:

$$\hat{y} = f\big(y^{h}_{(1)}, \ldots, y^{h}_{(k)}\big)$$

where the kernel function $f$ can be trained by ELM. Traditionally, a simple linear relationship is employed to build the kernel function, but such a method cannot deal with a nonlinear relationship. Hence, ELM is selected to establish the kernel function because of its great accuracy and high speed. Finally, the prediction value of the EWKM hybrid model can be obtained, and the basic structure of the proposed model is shown in Figure 2.
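The steps of this section can be sketched end to end as follows. This is one possible reading of the procedure, not the authors' code: all function names are hypothetical, the series are assumed to be already wavelet-denoised and scaled to a small range, and the ELM kernel is assumed to be trained by mapping the k nearest historical targets of each training sample to that sample's target.

```python
import numpy as np


def make_windows(series, lag=6):
    """Turn a 1-D series into (last `lag` values -> next value) pairs."""
    series = np.asarray(series, dtype=float)
    X = np.stack([series[i:i + lag] for i in range(len(series) - lag)])
    return X, series[lag:]


def nearest_targets(X_query, X_hist, y_hist, k):
    """For each query row, the k historical targets in ascending distance order."""
    # Pairwise Euclidean distances, shape (n_query, n_hist).
    d = np.sqrt(((X_query[:, None, :] - X_hist[None, :, :]) ** 2).sum(axis=2))
    order = np.argsort(d, axis=1)[:, :k]
    return y_hist[order]


def elm_fit(Z, t, n_hidden=15, seed=0):
    """Random Gaussian RBF hidden layer; output weights by pseudo-inverse."""
    rng = np.random.default_rng(seed)
    w = rng.uniform(Z.min(), Z.max(), size=(n_hidden, Z.shape[1]))
    b = rng.uniform(0.1, 1.0, size=n_hidden)
    H = np.exp(-b * ((Z[:, None, :] - w[None, :, :]) ** 2).sum(axis=2))
    return w, b, np.linalg.pinv(H) @ t


def elm_predict(params, Z):
    w, b, beta = params
    H = np.exp(-b * ((Z[:, None, :] - w[None, :, :]) ** 2).sum(axis=2))
    return H @ beta


def ewkm_forecast(hist, train, test, k=3, lag=6):
    """Forecast the test targets from the k nearest historical targets."""
    Xh, yh = make_windows(hist, lag)
    Xtr, ytr = make_windows(train, lag)
    Xte, _ = make_windows(test, lag)
    # The ELM plays the role of the kernel: k neighbour targets -> prediction.
    params = elm_fit(nearest_targets(Xtr, Xh, yh, k), ytr)
    return elm_predict(params, nearest_targets(Xte, Xh, yh, k))
```

For a test series of length n, `ewkm_forecast` returns n - lag one-step-ahead forecasts, one for each window of six consecutive values.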

4. Conclusions and Future Work

In electricity demand forecasting, noise signals caused by various unstable factors often corrupt electric load series. The contribution of this paper is a hybrid model based on wavelet denoising. Previous studies usually established models on the original data, whereas this paper models the low-frequency signal, which reduces the errors caused by noise signals. Moreover, the kernel function is traditionally constructed as a linear relationship, whereas this paper introduces ELM into the construction of the kernel function in order to optimize the KNN algorithm. The analysis of the experimental results leads to the conclusion that every part of the new hybrid model is necessary to predict future electric loads.

However, this paper only takes electric load data as the research subject, without taking other related variables into consideration. To address this limitation, future research should include other factors which may influence electric demand, such as population and GDP, which leaves considerable room for improvement.