A Method to Facilitate Uncertainty Analysis in LCAs of Buildings

Abstract

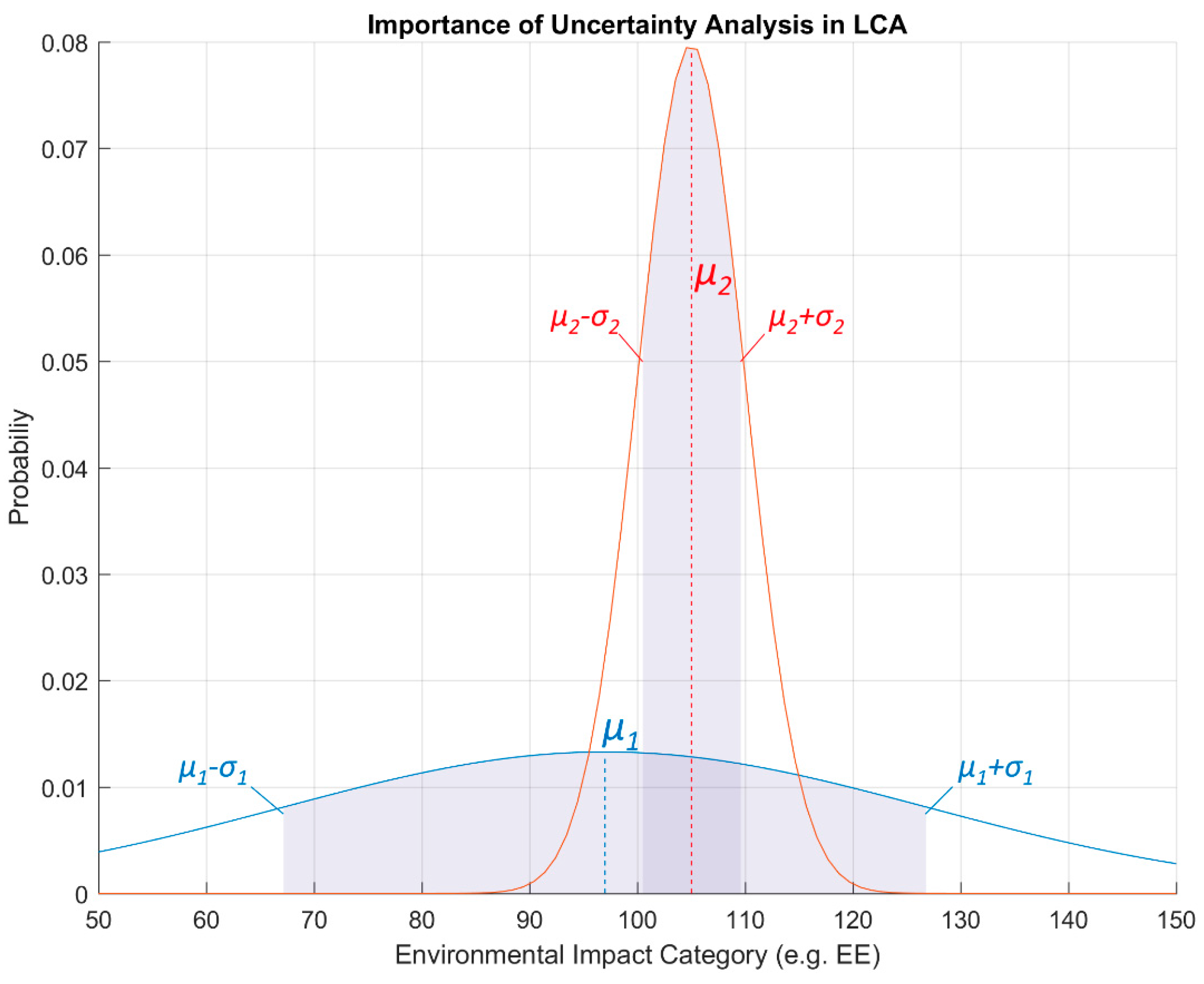

1. Introduction and Theoretical Background

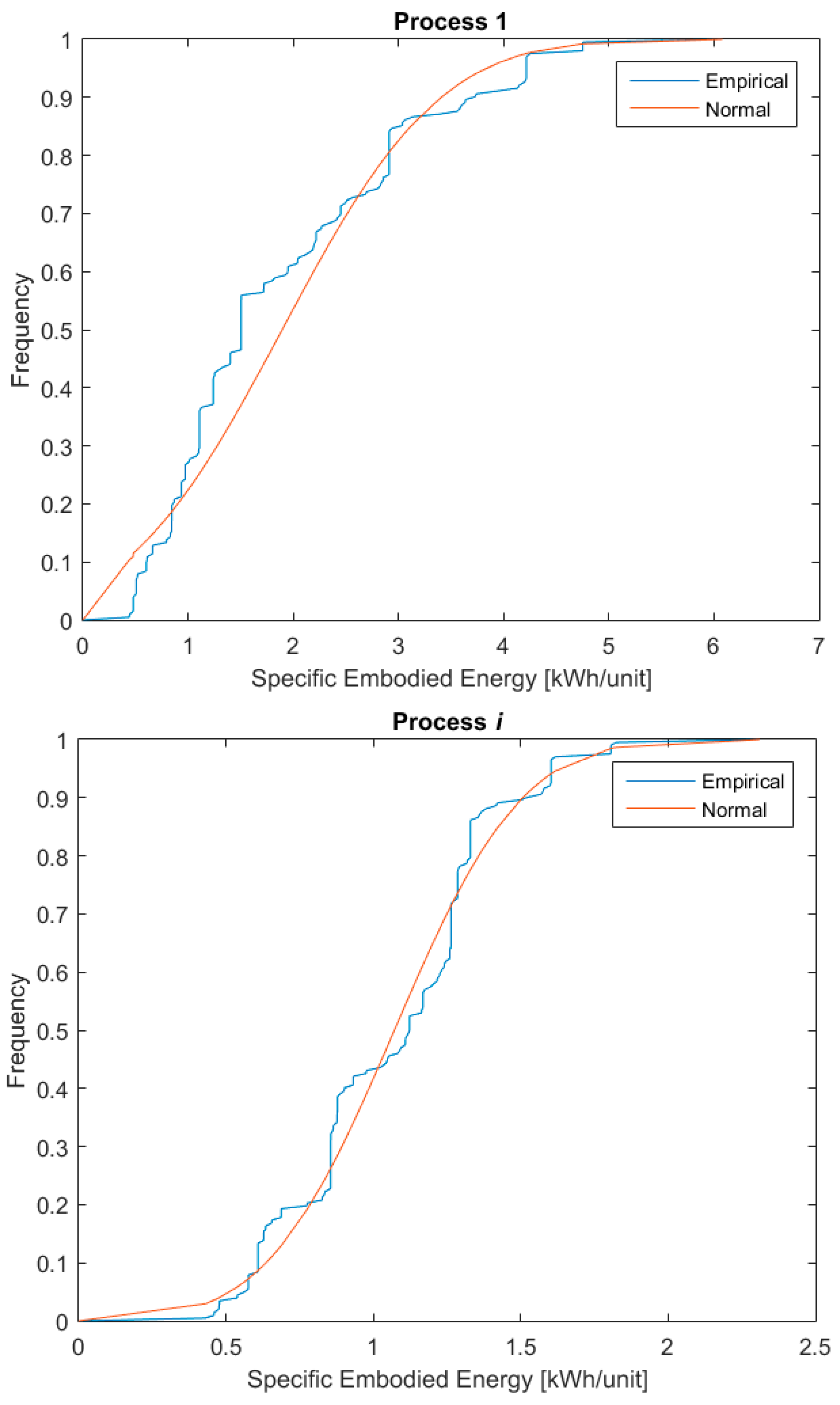

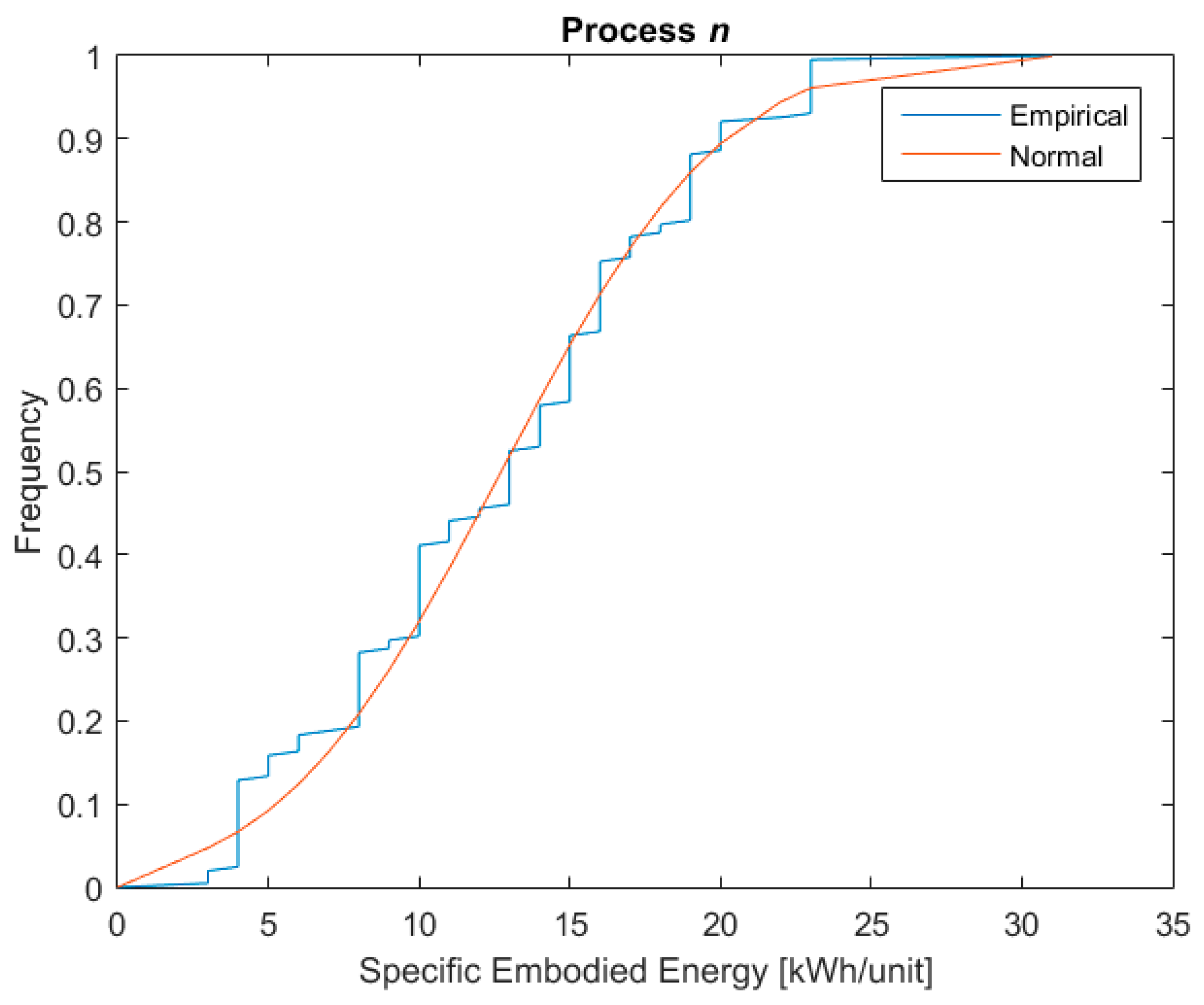

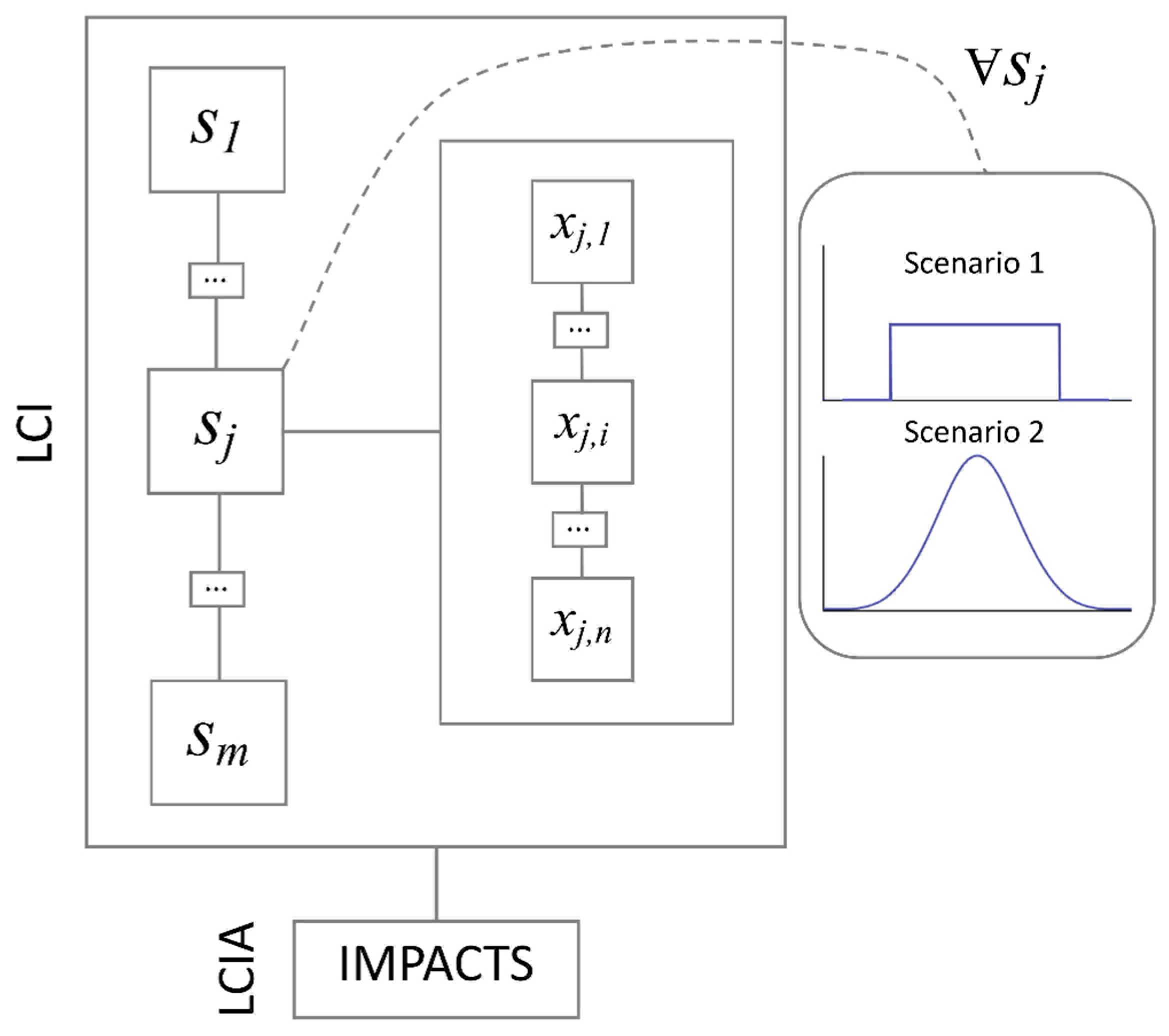

2. Theory and Methods

The Algorithm Developed for This Research

- The LCI consists of as few as two entries (e.g., only two life cycle processes); an example could be a very simple construction product or material such as unfired clay;

- The LCI consists of as many entries as there are processes for which primary data were collected.
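
To illustrate what varying the number of LCI entries means in practice, the sketch below propagates uncertainty through a hypothetical inventory by Monte Carlo sampling. It assumes that each entry's contribution is independent and normally distributed, with made-up means and standard deviations in arbitrary units; the function name `simulate_lci_total` and both example inventories are hypothetical and are not taken from the study.

```python
import random
import statistics


def simulate_lci_total(entries, n_iter, seed=None):
    """Propagate uncertainty through a hypothetical LCI by Monte Carlo sampling.

    entries: list of (mean, sd) pairs, one per inventory entry; each entry is
             assumed independent and normally distributed (an assumption).
    Returns n_iter sampled totals (the sum over all entries).
    """
    rng = random.Random(seed)
    return [sum(rng.gauss(mu, sd) for mu, sd in entries) for _ in range(n_iter)]


# Hypothetical inventories: two entries (e.g. a very simple material such as
# unfired clay) versus fifty entries (a product with many primary-data processes).
small_lci = [(10.0, 1.0), (5.0, 0.5)]
large_lci = [(1.0 + 0.1 * i, 0.1) for i in range(50)]

for label, lci in [("2 entries", small_lci), ("50 entries", large_lci)]:
    totals = simulate_lci_total(lci, 10_000, seed=42)
    print(label, round(statistics.mean(totals), 2), round(statistics.stdev(totals), 2))
```

Because the entries are independent normals that are simply summed, the sampled standard deviation can be checked against the analytical value, the square root of the sum of the squared entry standard deviations.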

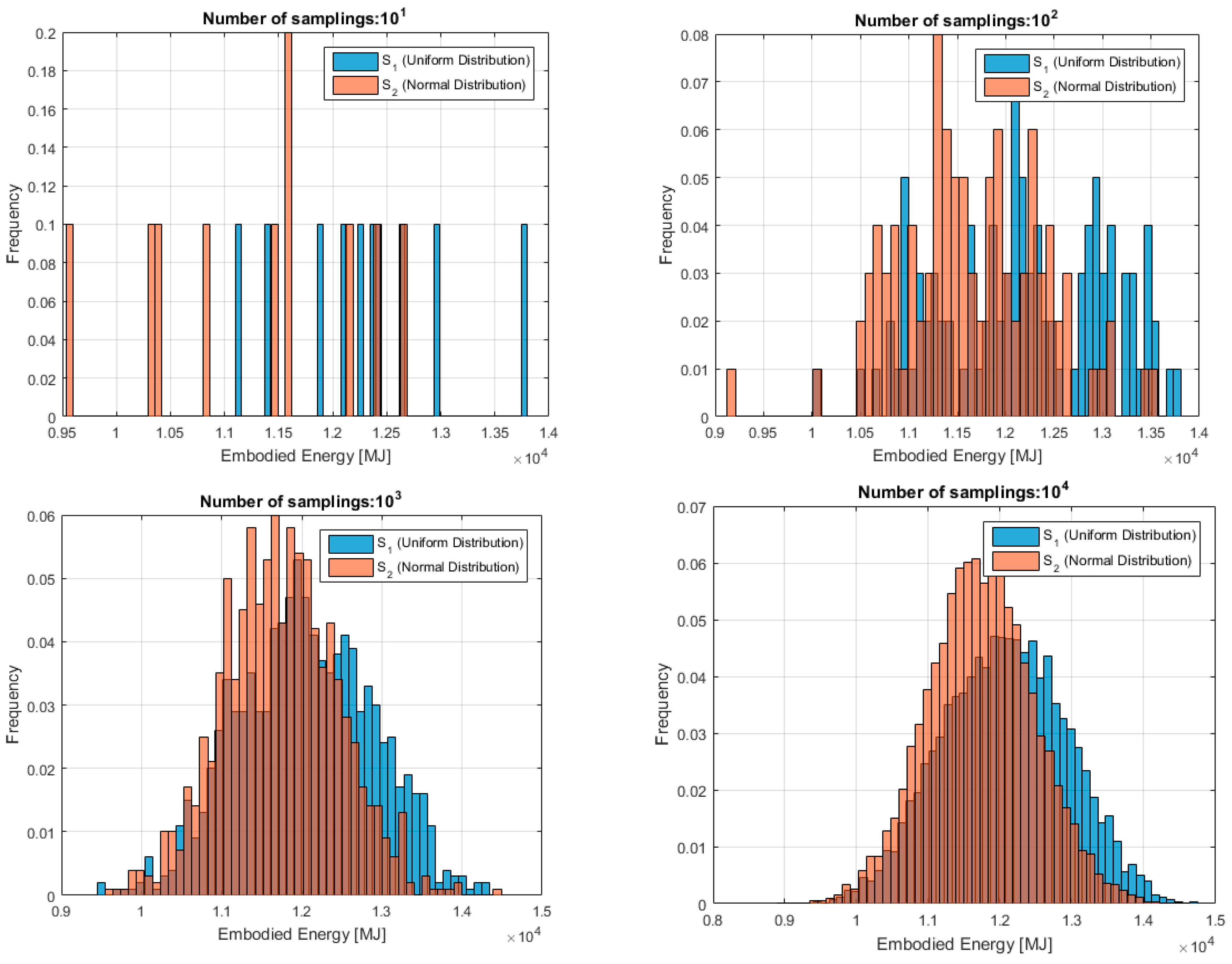

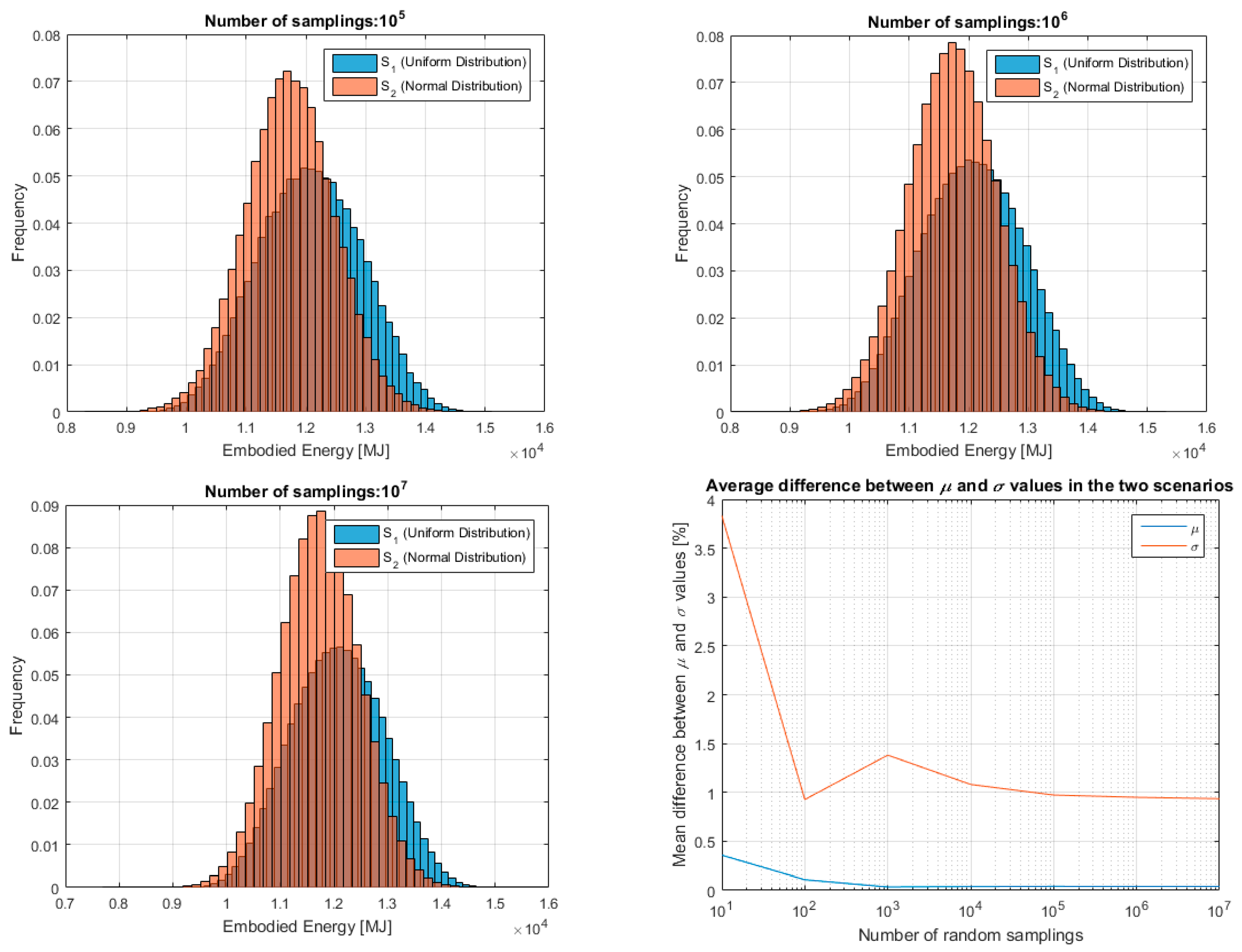

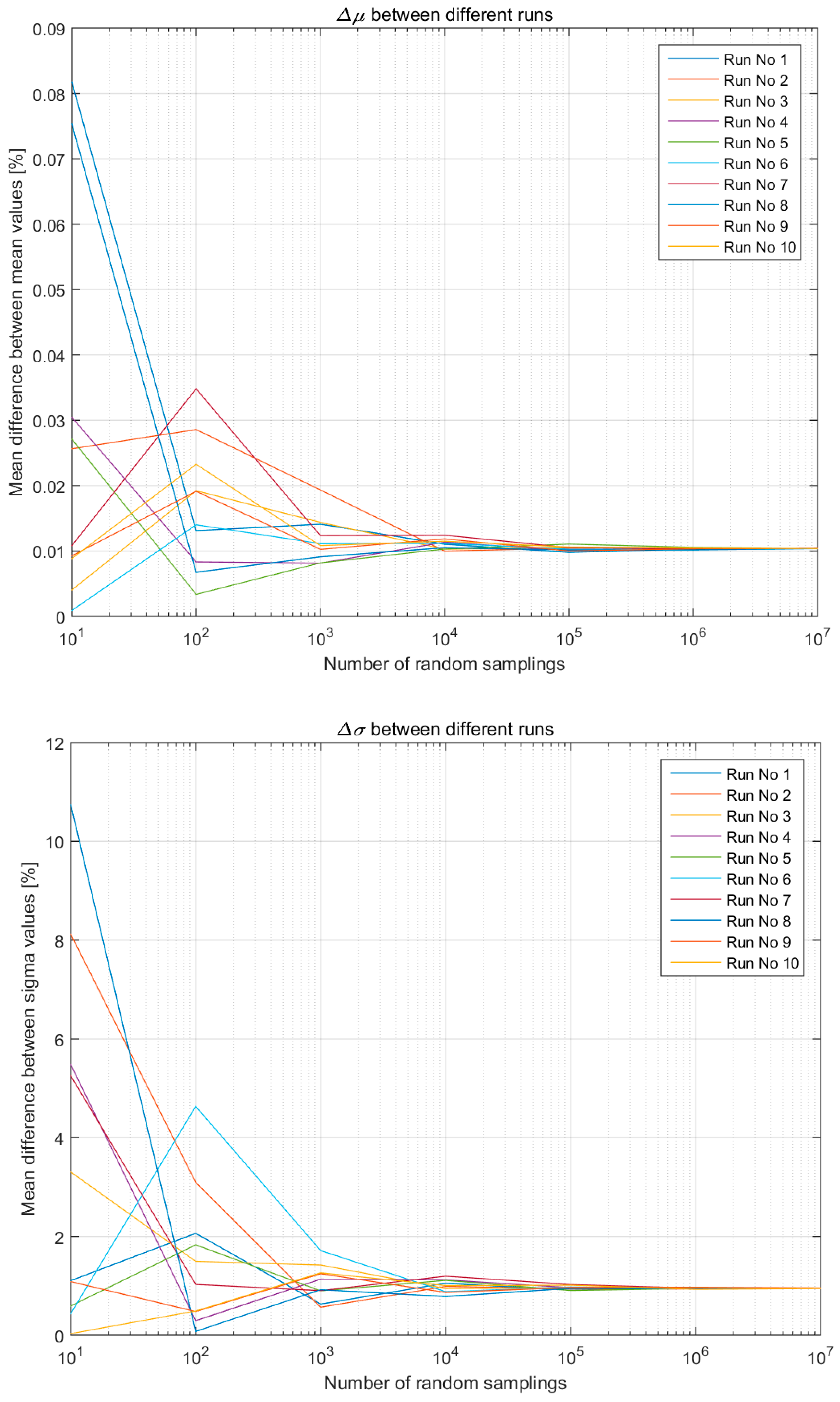

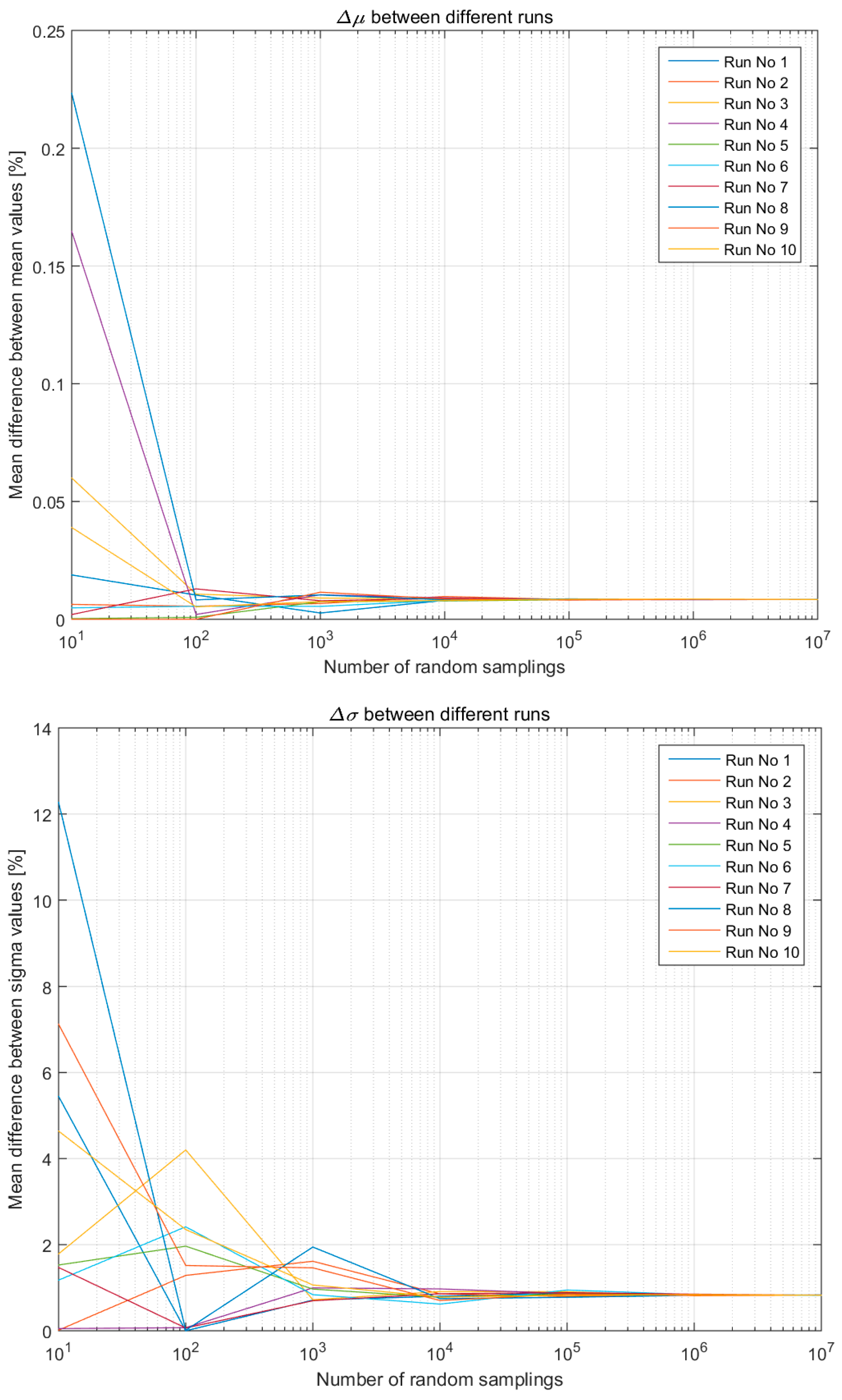

3. Results and Discussion

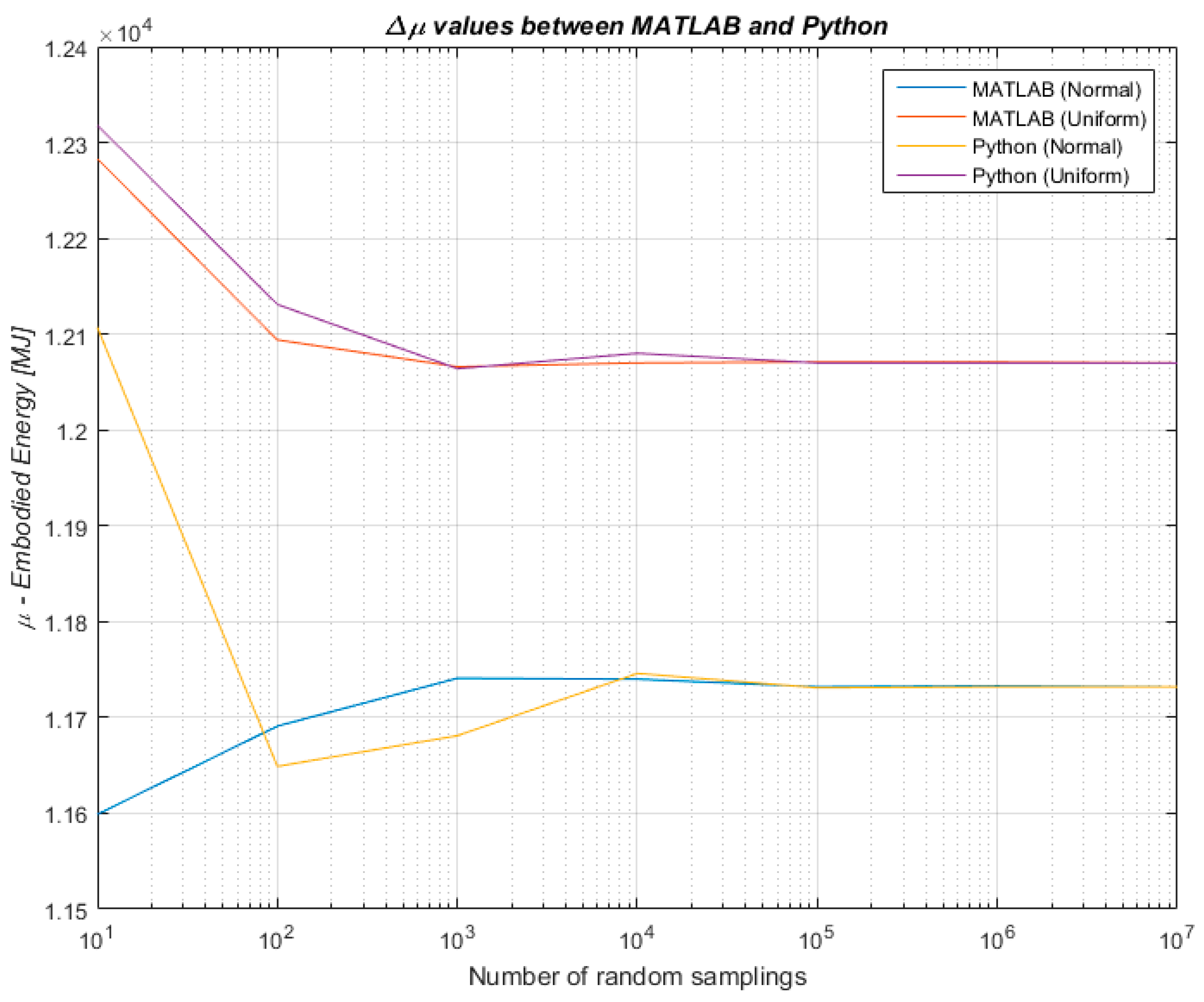

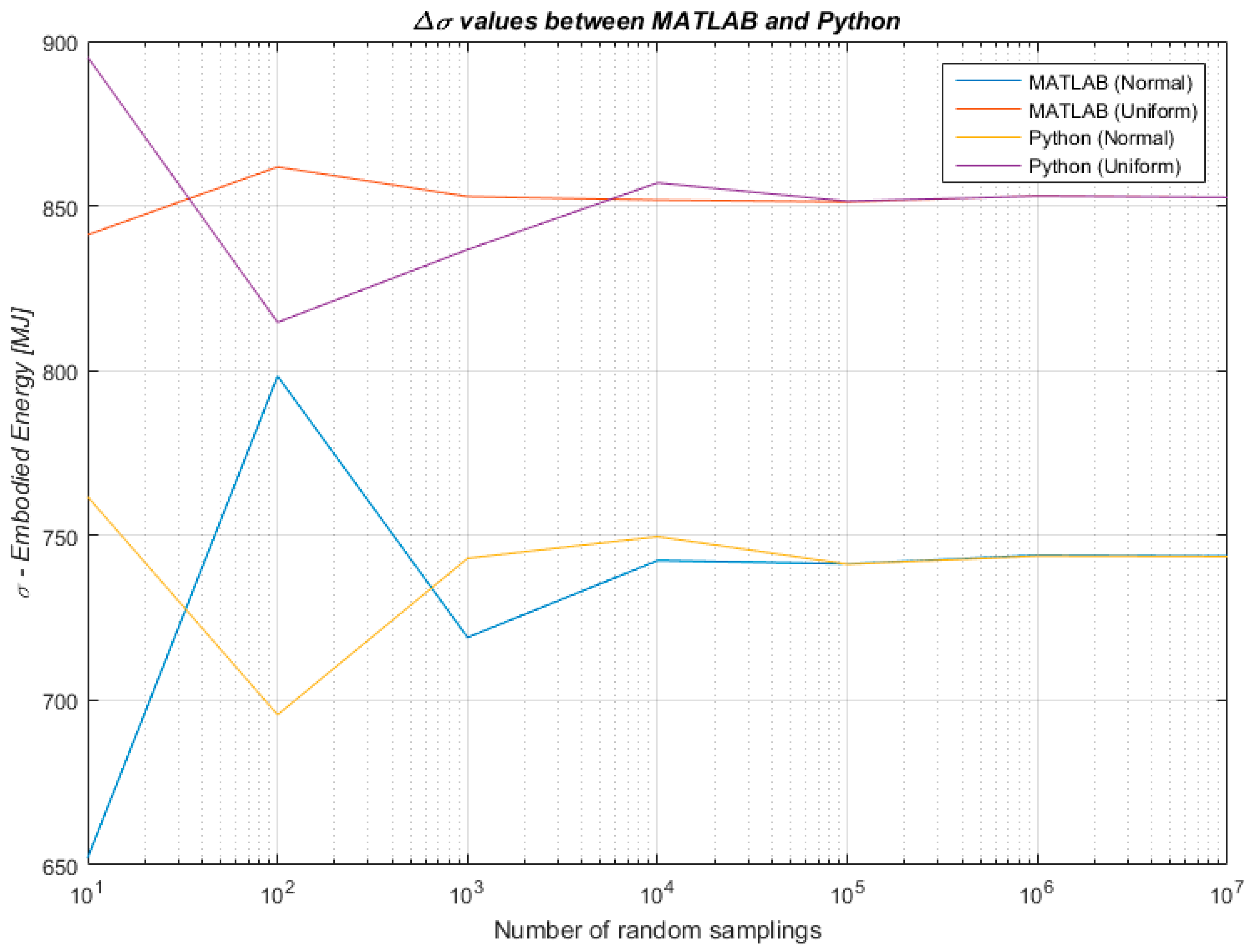

- 0.01% for the mean (μ); and

- 1% for the standard deviation (σ).
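
One way to read these two thresholds is as a stopping rule for Monte Carlo sampling: keep adding iterations until successive estimates of the mean μ and standard deviation σ change by less than 0.01% and 1%, respectively. The sketch below assumes that interpretation; the batch size of 1,000 iterations, the iteration cap, the Welford running-statistics update, the two-entry example inventory and the name `run_until_converged` are all illustrative choices, not the authors' implementation.

```python
import math
import random


def run_until_converged(sample, batch=1000, tol_mu=1e-4, tol_sigma=1e-2,
                        max_iter=1_000_000, seed=None):
    """Add Monte Carlo iterations in batches until, between successive batches,
    the relative change in the running mean is <= tol_mu (0.01%) and in the
    running standard deviation is <= tol_sigma (1%).

    sample: function taking a random.Random and returning one simulated result.
    Returns (iterations used, mean, standard deviation). Assumes batch >= 2.
    """
    rng = random.Random(seed)
    n, mean, m2 = 0, 0.0, 0.0  # Welford running statistics

    def add_batch():
        nonlocal n, mean, m2
        for _ in range(batch):
            x = sample(rng)
            n += 1
            delta = x - mean
            mean += delta / n
            m2 += delta * (x - mean)

    add_batch()
    mu_prev, sd_prev = mean, math.sqrt(m2 / (n - 1))
    while n < max_iter:
        add_batch()
        mu, sd = mean, math.sqrt(m2 / (n - 1))
        if (abs(mu - mu_prev) <= tol_mu * abs(mu_prev)
                and abs(sd - sd_prev) <= tol_sigma * sd_prev):
            return n, mu, sd
        mu_prev, sd_prev = mu, sd
    # Cap reached without meeting both thresholds.
    return n, mu_prev, sd_prev


# Hypothetical two-entry LCI: N(10, 1) + N(5, 0.5), arbitrary units.
def two_entry_lci(rng):
    return rng.gauss(10.0, 1.0) + rng.gauss(5.0, 0.5)


iterations, mu, sigma = run_until_converged(two_entry_lci, seed=42)
print(iterations, round(mu, 3), round(sigma, 3))
```

Running statistics are updated incrementally so that checking convergence after each batch does not require recomputing μ and σ over the full sample.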

4. Conclusions and Future Work

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Neri, E.; Cespi, D.; Setti, L.; Gombi, E.; Bernardi, E.; Vassura, I.; Passarini, F. Biomass Residues to Renewable Energy: A Life Cycle Perspective Applied at a Local Scale. Energies 2016, 9, 922.

- Bonamente, E.; Cotana, F. Carbon and Energy Footprints of Prefabricated Industrial Buildings: A Systematic Life Cycle Assessment Analysis. Energies 2015, 8, 12685–12701.

- Thiel, C.; Campion, N.; Landis, A.; Jones, A.; Schaefer, L.; Bilec, M. A Materials Life Cycle Assessment of a Net-Zero Energy Building. Energies 2013, 6, 1125–1141.

- Zabalza, I.; Scarpellini, S.; Aranda, A.; Llera, E.; Jáñez, A. Use of LCA as a Tool for Building Ecodesign. A Case Study of a Low Energy Building in Spain. Energies 2013, 6, 3901–3921.

- Magrassi, F.; Del Borghi, A.; Gallo, M.; Strazza, C.; Robba, M. Optimal Planning of Sustainable Buildings: Integration of Life Cycle Assessment and Optimization in a Decision Support System (DSS). Energies 2016, 9, 490.

- United States Environmental Protection Agency (US EPA). Exposure Factors Handbook; Report EPA/600/8-89/043; United States Environmental Protection Agency: Washington, DC, USA, 1989.

- Lloyd, S.M.; Ries, R. Characterizing, Propagating, and Analyzing Uncertainty in Life-Cycle Assessment: A Survey of Quantitative Approaches. J. Ind. Ecol. 2007, 11, 161–179.

- Pomponi, F.; Moncaster, A.M. Embodied carbon mitigation and reduction in the built environment—What does the evidence say? J. Environ. Manag. 2016, 181, 687–700.

- Zhang, Y.-R.; Wu, W.-J.; Wang, Y.-F. Bridge life cycle assessment with data uncertainty. Int. J. Life Cycle Assess. 2016, 21, 569–576.

- Huijbregts, M. Uncertainty and variability in environmental life-cycle assessment. Int. J. Life Cycle Assess. 2002, 7, 173.

- Di Maria, F.; Micale, C.; Contini, S. A novel approach for uncertainty propagation applied to two different bio-waste management options. Int. J. Life Cycle Assess. 2016, 21, 1529–1537.

- Björklund, A.E. Survey of approaches to improve reliability in LCA. Int. J. Life Cycle Assess. 2002, 7, 64–72.

- Cellura, M.; Longo, S.; Mistretta, M. Sensitivity analysis to quantify uncertainty in Life Cycle Assessment: The case study of an Italian tile. Renew. Sustain. Energy Rev. 2011, 15, 4697–4705.

- Huijbregts, M.A.J.; Norris, G.; Bretz, R.; Ciroth, A.; Maurice, B.; Bahr, B.; Weidema, B.; Beaufort, A.S.H. Framework for modelling data uncertainty in life cycle inventories. Int. J. Life Cycle Assess. 2001, 6, 127–132.

- Roeder, M.; Whittaker, C.; Thornley, P. How certain are greenhouse gas reductions from bioenergy? Life cycle assessment and uncertainty analysis of wood pellet-to-electricity supply chains from forest residues. Biomass Bioenergy 2015, 79, 50–63.

- Heijungs, R.; Suh, S. The Computational Structure of Life Cycle Assessment; Springer Science & Business Media: New York, NY, USA, 2002; Volume 11.

- André, J.C.S.; Lopes, D.R. On the use of possibility theory in uncertainty analysis of life cycle inventory. Int. J. Life Cycle Assess. 2011, 17, 350–361.

- Benetto, E.; Dujet, C.; Rousseaux, P. Integrating fuzzy multicriteria analysis and uncertainty evaluation in life cycle assessment. Environ. Model. Softw. 2008, 23, 1461–1467.

- Heijungs, R.; Tan, R.R. Rigorous proof of fuzzy error propagation with matrix-based LCI. Int. J. Life Cycle Assess. 2010, 15, 1014–1019.

- Egilmez, G.; Gumus, S.; Kucukvar, M.; Tatari, O. A fuzzy data envelopment analysis framework for dealing with uncertainty impacts of input–output life cycle assessment models on eco-efficiency assessment. J. Clean. Prod. 2016, 129, 622–636.

- Hoxha, E.; Habert, G.; Chevalier, J.; Bazzana, M.; Le Roy, R. Method to analyse the contribution of material's sensitivity in buildings’ environmental impact. J. Clean. Prod. 2014, 66, 54–64.

- Weidema, B.P.; Wesnæs, M.S. Data quality management for life cycle inventories—An example of using data quality indicators. J. Clean. Prod. 1996, 4, 167–174.

- Wang, E.; Shen, Z. A hybrid Data Quality Indicator and statistical method for improving uncertainty analysis in LCA of complex system—Application to the whole-building embodied energy analysis. J. Clean. Prod. 2013, 43, 166–173.

- Sonnemann, G.W.; Schuhmacher, M.; Castells, F. Uncertainty assessment by a Monte Carlo simulation in a life cycle inventory of electricity produced by a waste incinerator. J. Clean. Prod. 2003, 11, 279–292.

- Von Bahr, B.; Steen, B. Reducing epistemological uncertainty in life cycle inventory. J. Clean. Prod. 2004, 12, 369–388.

- Lasvaux, S.; Schiopu, N.; Habert, G.; Chevalier, J.; Peuportier, B. Influence of simplification of life cycle inventories on the accuracy of impact assessment: Application to construction products. J. Clean. Prod. 2014, 79, 142–151.

- Benetto, E.; Dujet, C.; Rousseaux, P. Possibility Theory: A New Approach to Uncertainty Analysis? (3 pp). Int. J. Life Cycle Assess. 2005, 11, 114–116.

- Coulon, R.; Camobreco, V.; Teulon, H.; Besnainou, J. Data quality and uncertainty in LCI. Int. J. Life Cycle Assess. 1997, 2, 178–182.

- Lo, S.-C.; Ma, H.-W.; Lo, S.-L. Quantifying and reducing uncertainty in life cycle assessment using the Bayesian Monte Carlo method. Sci. Total Environ. 2005, 340, 23–33.

- Bojacá, C.R.; Schrevens, E. Parameter uncertainty in LCA: Stochastic sampling under correlation. Int. J. Life Cycle Assess. 2010, 15, 238–246.

- Canter, K.G.; Kennedy, D.J.; Montgomery, D.C.; Keats, J.B.; Carlyle, W.M. Screening stochastic life cycle assessment inventory models. Int. J. Life Cycle Assess. 2002, 7, 18–26.

- Ciroth, A.; Fleischer, G.; Steinbach, J. Uncertainty calculation in life cycle assessments. Int. J. Life Cycle Assess. 2004, 9, 216–226.

- Geisler, G.; Hellweg, S.; Hungerbuhler, K. Uncertainty analysis in life cycle assessment (LCA): Case study on plant-protection products and implications for decision making. Int. J. Life Cycle Assess. 2005, 10, 184–192.

- Huijbregts, M.A.J. Application of uncertainty and variability in LCA. Int. J. Life Cycle Assess. 1998, 3, 273–280.

- Huijbregts, M.A.J. Part II: Dealing with parameter uncertainty and uncertainty due to choices in life cycle assessment. Int. J. Life Cycle Assess. 1998, 3, 343–351.

- Miller, S.A.; Moysey, S.; Sharp, B.; Alfaro, J. A Stochastic Approach to Model Dynamic Systems in Life Cycle Assessment. J. Ind. Ecol. 2013, 17, 352–362.

- Niero, M.; Pizzol, M.; Bruun, H.G.; Thomsen, M. Comparative life cycle assessment of wastewater treatment in Denmark including sensitivity and uncertainty analysis. J. Clean. Prod. 2014, 68, 25–35.

- Sills, D.L.; Paramita, V.; Franke, M.J.; Johnson, M.C.; Akabas, T.M.; Greene, C.H.; Tester, J.W. Quantitative Uncertainty Analysis of Life Cycle Assessment for Algal Biofuel Production. Environ. Sci. Technol. 2013, 47, 687–694.

- Su, X.; Luo, Z.; Li, Y.; Huang, C. Life cycle inventory comparison of different building insulation materials and uncertainty analysis. J. Clean. Prod. 2016, 112, 275–281.

- Hong, J.; Shen, G.Q.; Peng, Y.; Feng, Y.; Mao, C. Uncertainty analysis for measuring greenhouse gas emissions in the building construction phase: A case study in China. J. Clean. Prod. 2016, 129, 183–195.

- Chou, J.-S.; Yeh, K.-C. Life cycle carbon dioxide emissions simulation and environmental cost analysis for building construction. J. Clean. Prod. 2015, 101, 137–147.

- Heijungs, R. Identification of key issues for further investigation in improving the reliability of life-cycle assessments. J. Clean. Prod. 1996, 4, 159–166.

- Chevalier, J.-L.; Téno, J.-F.L. Life cycle analysis with ill-defined data and its application to building products. Int. J. Life Cycle Assess. 1996, 1, 90–96.

- Peereboom, E.C.; Kleijn, R.; Lemkowitz, S.; Lundie, S. Influence of Inventory Data Sets on Life-Cycle Assessment Results: A Case Study on PVC. J. Ind. Ecol. 1998, 2, 109–130.

- Reap, J.; Roman, F.; Duncan, S.; Bras, B. A survey of unresolved problems in life cycle assessment. Int. J. Life Cycle Assess. 2008, 13, 374–388.

- Hong, J.; Shaked, S.; Rosenbaum, R.K.; Jolliet, O. Analytical uncertainty propagation in life cycle inventory and impact assessment: Application to an automobile front panel. Int. J. Life Cycle Assess. 2010, 15, 499–510.

- Cambridge University Built Environment Sustainability (CUBES). Focus group on ‘Risk and Uncertainty in Embodied Carbon Assessment’ (Facilitator: Francesco Pomponi). Proceedings of the Cambridge University Built Environment Sustainability (CUBES) Embodied Carbon Symposium 2016, Cambridge, UK, 19 April 2016.

- Peters, G.P. Efficient algorithms for Life Cycle Assessment, Input-Output Analysis, and Monte-Carlo Analysis. Int. J. Life Cycle Assess. 2006, 12, 373–380.

- Heijungs, R.; Lenzen, M. Error propagation methods for LCA—A comparison. Int. J. Life Cycle Assess. 2014, 19, 1445–1461.

- Marvinney, E.; Kendall, A.; Brodt, S. Life Cycle-based Assessment of Energy Use and Greenhouse Gas Emissions in Almond Production, Part II: Uncertainty Analysis through Sensitivity Analysis and Scenario Testing. J. Ind. Ecol. 2015, 19, 1019–1029.

- Ventura, A.; Senga Kiessé, T.; Cazacliu, B.; Idir, R.; Werf, H.M. Sensitivity Analysis of Environmental Process Modeling in a Life Cycle Context: A Case Study of Hemp Crop Production. J. Ind. Ecol. 2015, 19, 978–993.

- Gregory, J.; Noshadravan, A.; Olivetti, E.; Kirchain, R. A Methodology for Robust Comparative Life Cycle Assessments Incorporating Uncertainty. Environ. Sci. Technol. 2016, 50, 6397–6405.

- Pomponi, F. Operational Performance and Life Cycle Assessment of Double Skin Façades for Office Refurbishments in the UK. Ph.D. Thesis, University of Brighton, Brighton, UK, 2015.

- Sprinthall, R.C. Basic Statistical Analysis, 9th ed.; Pearson Education: Upper Saddle River, NJ, USA, 2011.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Pomponi, F.; D’Amico, B.; Moncaster, A.M. A Method to Facilitate Uncertainty Analysis in LCAs of Buildings. Energies 2017, 10, 524. https://doi.org/10.3390/en10040524
