Development of an Automatic Testing Platform for Aviator’s Night Vision Goggle Honeycomb Defect Inspection

Abstract

1. Introduction

- A novel search algorithm that achieves fast and accurate focusing is proposed. Its main advantage is demonstrated through a comparison study.

- Several sharpness estimation methods for NVGs are also evaluated and compared.

- A honeycomb defect detection process is proposed that automatically reports the number of defects and their corresponding locations, so that the inspection can be carried out efficiently and objectively.

2. Proposed Approach

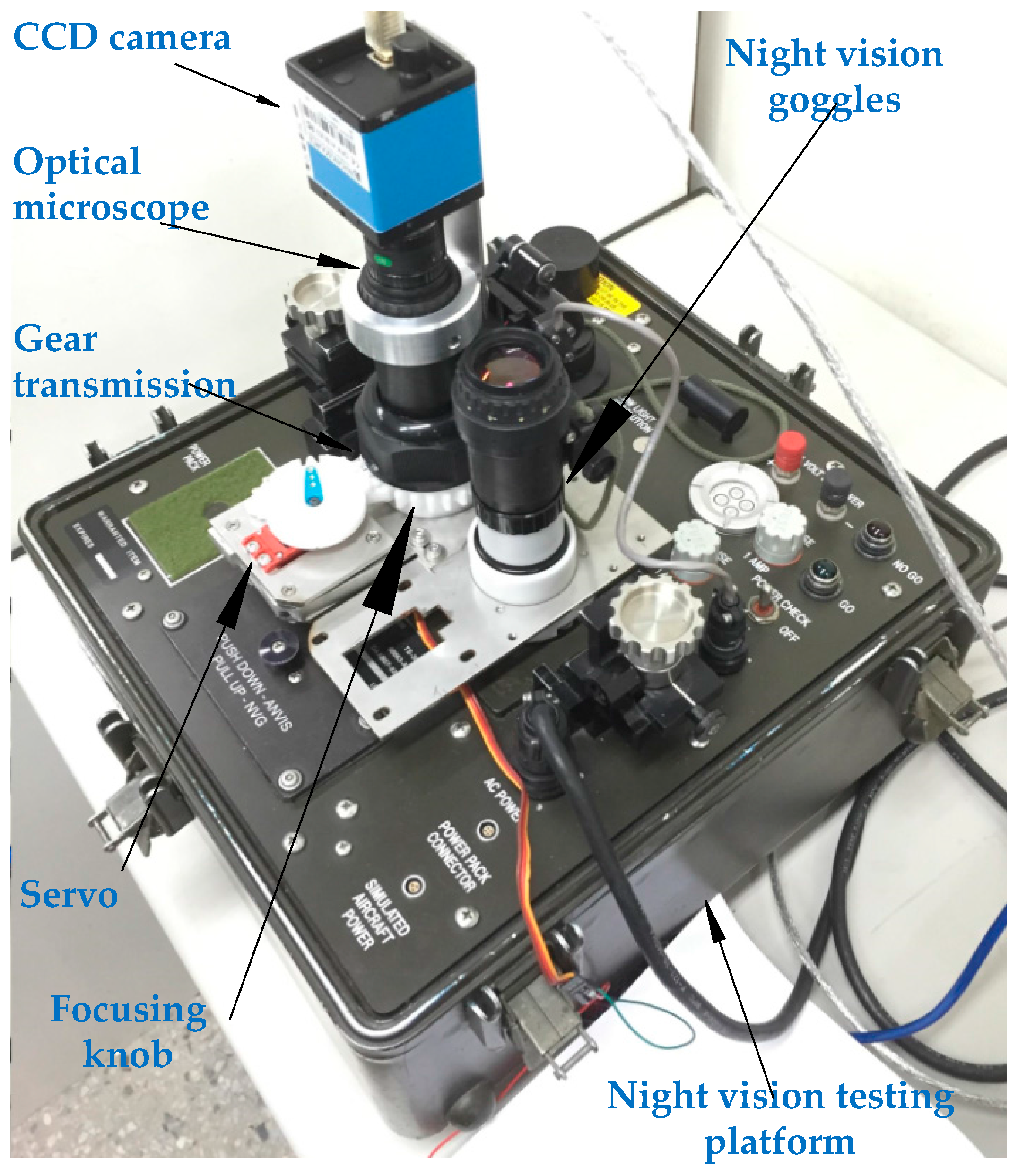

2.1. Experimental Setup

2.2. Process for Passive Auto-Focusing

2.3. Search Approaches

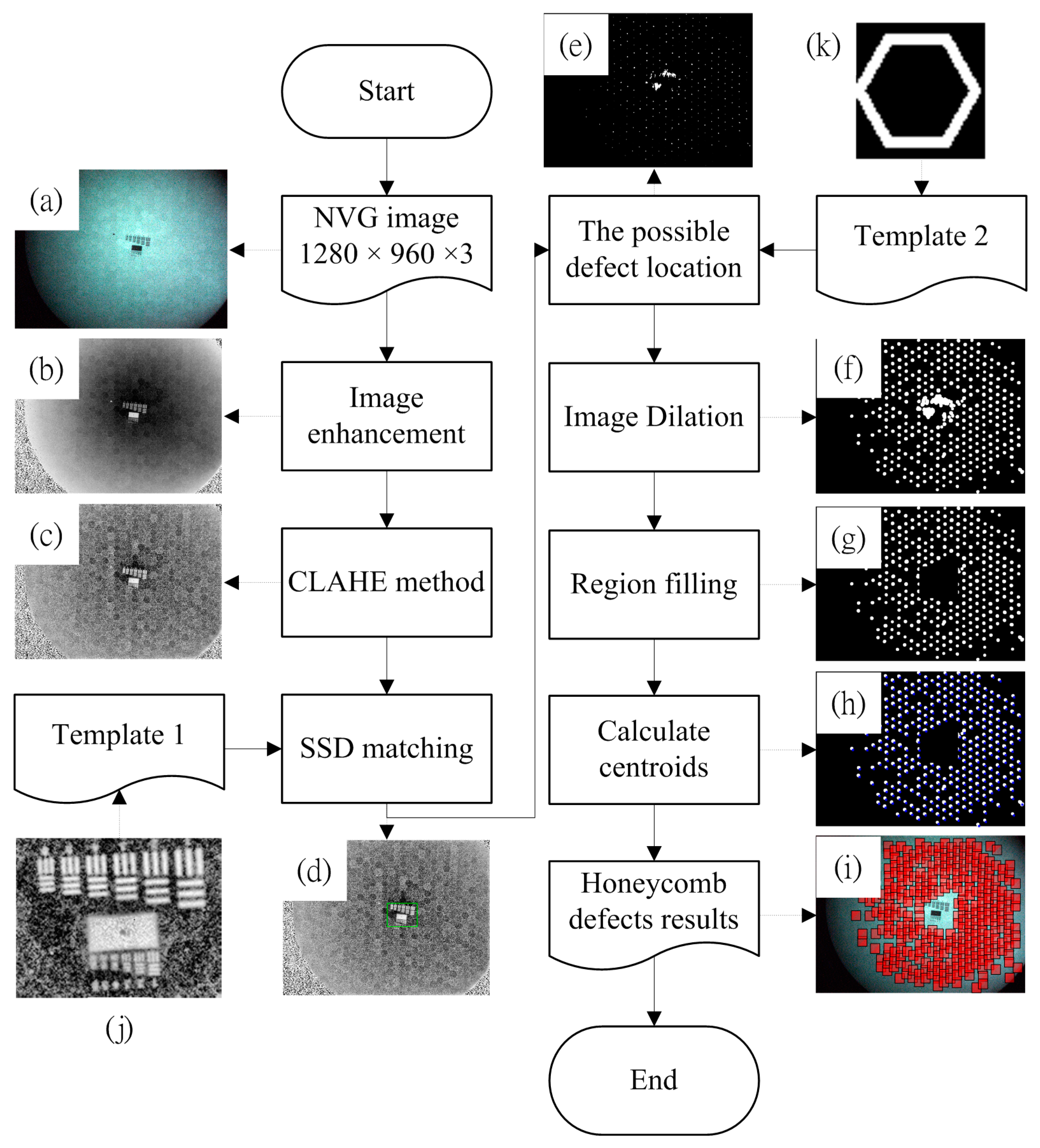

2.4. Honeycomb Defect Detection Procedure

- A color image of dimensions 960 × 1280 × 3 pixels is obtained via a CCD camera, as shown in Figure 5a.

- Since the green color array component from the RGB color system has the most obvious honeycomb defects and the blue color array component has the least, the green array is subtracted from the blue array after extracting them from the color image to reduce processing dimensions. This allows a reduction in the non-honeycomb defect region components and accentuates the honeycomb defect region information. The obtained gray level image is shown in Figure 5b.

- The sum of squared differences (SSD) method [27] is used to detect the position of the mark, which is applied in the focusing process, using the template image shown in Figure 5j. The mark area is highlighted by the rectangular region shown in Figure 5d. The matching measure SSD is defined as follows:

  $$\mathrm{SSD}(x, y) = \sum_{i=1}^{m} \sum_{j=1}^{n} \left[ I(x+i,\, y+j) - T(i, j) \right]^{2}$$

  where $I(x+i, y+j)$ is the search region (the so-called primary image) at position $(x+i, y+j)$ and $T(i, j)$ is the template image at position $(i, j)$, which is of size $m \times n$.

- To find the locations of the honeycomb defects, the binary template of size 61 × 58 pixels (“template 2”, Figure 5k) is shifted across the image obtained in step 3. At each position, the sub-image under the template is binarized using its average intensity as the threshold. If the number of pixels whose values match the binary template exceeds 55% of the total number of pixels, the sub-image is set to white; otherwise, it is set to black. This step therefore produces a binary image in which the white points mark locations that resemble honeycomb defects, as shown in Figure 5e. The procedure for computing this possible defect location image is summarized in Table 3.

- White color expansion is performed on the binarized image resulting from step 5 to group neighboring honeycomb defects, as shown in Figure 5f.

- The mark region identified in step 4 is removed before further processing. The resulting image is shown in Figure 5g.

- For honeycomb defect positioning, the centroid of each white dot indicates the upper-left corner of a honeycomb defect. The result is shown in Figure 5h.
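
The mark localization and template-shift steps above can be sketched in a few lines of pure Python. This is a minimal illustration on tiny nested lists, not the authors' implementation: the function names `ssd_match` and `shift_match`, the strict `>` comparison used when binarizing against the window mean, and the choice to write each result at the window's upper-left corner are all assumptions made for this example. Only the SSD measure, the mean-intensity threshold, and the 55% agreement ratio come from the text.

```python
def ssd_match(image, template):
    """Return ((row, col), score) of the window with the smallest SSD."""
    m, n = len(template), len(template[0])
    best_pos, best_score = None, None
    for x in range(len(image) - m + 1):
        for y in range(len(image[0]) - n + 1):
            # Sum of squared differences between the window and the template.
            score = sum(
                (image[x + i][y + j] - template[i][j]) ** 2
                for i in range(m)
                for j in range(n)
            )
            if best_score is None or score < best_score:
                best_pos, best_score = (x, y), score
    return best_pos, best_score


def shift_match(image, binary_template, ratio=0.55):
    """Slide a binary template over a gray-level image.

    Each window is binarized at its own mean intensity (assumed: pixel > mean
    maps to 1). The output pixel at the window's upper-left corner is 1 (white)
    when more than `ratio` of the pixels agree with the template, else 0.
    """
    m, n = len(binary_template), len(binary_template[0])
    rows, cols = len(image) - m + 1, len(image[0]) - n + 1
    out = [[0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            window = [image[x + i][y:y + n] for i in range(m)]
            mean = sum(map(sum, window)) / (m * n)
            agree = sum(
                (1 if window[i][j] > mean else 0) == binary_template[i][j]
                for i in range(m)
                for j in range(n)
            )
            out[x][y] = 1 if agree > ratio * m * n else 0
    return out


if __name__ == "__main__":
    # Toy 4 x 5 gray-level image with a diagonal pattern at rows 1-2, cols 1-2.
    image = [
        [10, 10, 10, 10, 10],
        [10, 90, 20, 10, 10],
        [10, 20, 90, 10, 10],
        [10, 10, 10, 10, 10],
    ]
    print(ssd_match(image, [[90, 20], [20, 90]]))  # ((1, 1), 0)
    for row in shift_match(image, [[1, 0], [0, 1]]):
        print(row)
```

On real 960 × 1280 images, nested Python loops like these would be slow; an array library or the fast SSD formulation of [27] would be the natural replacement. The sketch only makes the per-window logic explicit.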

3. Results and Discussion

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Harrington, L.K.; McIntire, J.P.; Hopper, D.G. Assessing the binocular advantage in aided vision. Aviat. Space Environ. Med. 2014, 85, 930–939.

2. Biberman, L.M.; Alluisi, E.A. Pilot Errors Involving Head-Up Displays (HUDS), Helmet-Mounted Displays (HMDS), and Night Vision Goggles (NVGS); DTIC Document; DTIC: Fort Belvoir, VA, USA, 1992.

3. Sabatini, R.; Richardson, M.; Cantiello, M.; Toscano, M.; Fiorini, P.; Zammit-Mangion, D.; Jia, H. Night vision imaging systems development, integration and verification in military fighter aircraft. J. Aeronaut. Aerosp. Eng. 2013, 2, 1–12.

4. SK, V.; Joshi, V. NVG research, training and operations in field: Bridging the quintessential gap. Ind. J. Aerosp. Med. 2012, 56, 1.

5. Antonio, J.C.; Joralmon, D.Q.; Fiedler, G.M.; Berkley, W.E. Aviator’s Night Vision Imaging System Preflight Adjustment/Assessment Procedures; DTIC Document; DTIC: Fort Belvoir, VA, USA, 1994.

6. Lapin, L. How to Get Anything on Anybody; Intelligence Here, Ltd.: Shasta, CA, USA, 2003.

7. United States Department of the Army. Operator’s Manual: Aviator’s Night Vision Imaging System AN/AVS-6(V)1, (NSN 5855-01-138-4749) and AN/AVS-6(V)2, (NSN 5855-01-138-4748); Department of the Army: Washington, DC, USA, 1989.

8. Macuda, T.; Allison, R.S.; Thomas, P.; Truong, L.; Tang, D.; Craig, G.; Jennings, S. Comparison of three night vision intensification tube technologies on resolution acuity: Results from grating and Hoffman ANV-126 tasks. In Proceedings of the Helmet- and Head-Mounted Displays X: Technologies and Applications, Orlando, FL, USA, 28 March 2005; pp. 32–39.

9. Pinkus, A.; Task, H.L. Night Vision Goggles Objectives Lens Focusing Methodology; DTIC Document; DTIC: Fort Belvoir, VA, USA, 2000.

10. Craig, G.L.; Erdos, R.; Jennings, S.; Brulotte, M.; Ramphal, G.; Sabatini, R.; Dumoulin, J.; Petipas, D.; Leger, A.; Krijn, R.; et al. Flight Testing of Night Vision Systems in Rotorcraft; North Atlantic Treaty Organisation: Washington, DC, USA, 2007.

11. Zeng, D.; Benilov, A.; Bunin, B.; Martini, R. Long-wavelength IR imaging system to scuba diver detection in three dimensions. IEEE Sens. J. 2010, 10, 760–764.

12. Bernal, O.D.; Zabit, U.; Bosch, T.M. Robust method of stabilization of optical feedback regime by using adaptive optics for a self-mixing micro-interferometer laser displacement sensor. IEEE J. Sel. Top. Quantum Electron. 2015, 21, 336–343.

13. Chen, C.-Y.; Hwang, R.-C.; Chen, Y.-J. A passive auto-focus camera control system. Appl. Soft Comput. 2010, 10, 296–303.

14. Gamadia, M.; Kehtarnavaz, N. A filter-switching auto-focus framework for consumer camera imaging systems. IEEE Trans. Consum. Electron. 2012, 58, 228–236.

15. Yousefi, S.; Rahman, M.; Kehtarnavaz, N. A new auto-focus sharpness function for digital and smart-phone cameras. IEEE Trans. Consum. Electron. 2011, 57, 1003–1009.

16. Florea, C.; Florea, L. Parametric logarithmic type image processing for contrast based auto-focus in extreme lighting conditions. Int. J. Appl. Math. Comput. Sci. 2013, 23.

17. Gamadia, M.; Kehtarnavaz, N.; Roberts-Hoffman, K. Low-light auto-focus enhancement for digital and cell-phone camera image pipelines. IEEE Trans. Consum. Electron. 2007, 53, 249–257.

18. Chrzanowski, K. Review of night vision metrology. Opto-Electron. Rev. 2015, 23, 149–164.

19. Zhang, X.; Liu, Z.; Jiang, M.; Chang, M. Fast and accurate auto-focusing algorithm based on the combination of depth from focus and improved depth from defocus. Opt. Express 2014, 22, 31237–31247.

20. Pertuz, S.; Puig, D.; Garcia, M.A. Analysis of focus measure operators for shape-from-focus. Pattern Recognit. 2013, 46, 1415–1432.

21. Estrada, A. Feasibility of Using the AN/PVS-14 Monocular Night Vision Device for Pilotage; DTIC Document; DTIC: Fort Belvoir, VA, USA, 2000.

22. Tsai, D.C.; Chen, H.H. Reciprocal focus profile. IEEE Trans. Image Process. 2012, 21, 459–468.

23. Xu, X.; Zhang, X.; Fu, H.; Chen, L.; Zhang, H.; Fu, X. Robust passive autofocus system for mobile phone camera applications. Comput. Electr. Eng. 2014, 40, 1353–1362.

24. Kang-Sun, C.; Sung-Jea, K. New Autofocusing Technique Using the Frequency Selective Weighted Median Filter for Video Cameras. In Proceedings of the 1999 ICCE International Conference on Consumer Electronics, Los Angeles, CA, USA, 22–24 June 1999; pp. 160–161.

25. Kehtarnavaz, N.; Oh, H.J. Development and real-time implementation of a rule-based auto-focus algorithm. Real-Time Imaging 2003, 9, 197–203.

26. Zuiderveld, K. Contrast Limited Adaptive Histogram Equalization. In Graphics Gems IV; Paul, S.H., Ed.; Academic Press Professional, Inc.: Cambridge, MA, USA, 1994; pp. 474–485.

27. Essannouni, F.; Thami, R.O.H.; Aboutajdine, D.; Salam, A. Simple noncircular correlation method for exhaustive sum square difference matching. Opt. Eng. 2007, 46, 107004.

| Sharpness Estimation Method | In-Focus Result | Average Elapsed Time (s) | Correlation Coefficient | Entropy |
|---|---|---|---|---|
| Criterion | =Y | <0.01 | >0.2 | <0.4 |
| Absolute Central Moment | N | 0.0009 | −0.2280 | 0.5203 |
| Brenner’s focus measure | Y | 0.0012 | −0.1168 | 0.5942 |
| Image contrast | Y | 9.0607 | 0.0420 | 1.1801 |
| Image curvature measure | Y | 0.0049 | 0.0980 | 2.9132 |
| DCT energy ratio | Y | 5.2191 | 0.1596 | 0.5203 |
| DCT reduced energy ratio | Y | 5.0998 | 0.1667 | 2.2880 |
| Gaussian derivative | Y | 0.0026 | 0.2570 | 0.7414 |
| Gray-level variance | Y | 0.0008 | 0.3519 | 0.5203 |
| Gray-level local variance | Y | 0.0030 | 0.1500 | 0.7414 |
| Normalized gray-level variance | Y | 0.0007 | 0.3330 | 0.2980 |
| Gradient energy | Y | 0.0010 | 0.0162 | 4.6710 |
| Thresholded absolute gradient | Y | 0.0013 | −0.0181 | 3.1345 |
| Squared gradient | Y | 0.0006 | 0.0100 | 4.7005 |
| Helmli and Scherer’s mean | Y | 0.0024 | 0.0800 | 4.0896 |
| Histogram entropy | N | 0.0006 | 0.3667 | 2.6489 |
| Histogram range | N | 0.0004 | 0.3840 | 3.4788 |
| Energy of Laplacian | Y | 0.0013 | −0.1166 | 1.8104 |
| Modified Laplacian | Y | 0.0016 | −0.1074 | 0.7414 |
| Variance of Laplacian | Y | 0.0014 | −0.0882 | 0.4463 |
| Diagonal Laplacian | Y | 0.0026 | −0.0896 | 0.2980 |
| Steerable filters-based measure | Y | 0.0067 | 0.3288 | 0.4463 |
| Spatial frequency measure | Y | 0.0013 | 0.0164 | 5.5735 |
| Tenengrad | Y | 0.0019 | 0.0901 | 4.8222 |
| Tenengrad variance | Y | 0.0020 | 0.0706 | 0.2980 |
| Vollath’s autocorrelation | Y | 0.0007 | 0.1072 | 0.5203 |
| Sum of wavelet coefficients | N | 0.0097 | −0.2256 | 0.5942 |
| Variance of wavelet coefficients | Y | 0.0087 | −0.15375 | 1.1801 |
| Ratio of wavelet coefficients | Y | 0.0210 | −0.0285 | 2.9132 |
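
One way to read the "Criterion" row is as four thresholds that a sharpness estimation method must satisfy at the same time. The short sketch below applies that reading to a subset of the rows above, namely every row whose correlation coefficient exceeds 0.2 or whose entropy is below 0.4. Treating the criterion as a strict conjunction, and the helper name `meets_criterion`, are assumptions of this example rather than something the text states.

```python
# Rows copied from the table above:
# (method, in-focus result, average elapsed time in s, correlation, entropy)
ROWS = [
    ("Gaussian derivative", "Y", 0.0026, 0.2570, 0.7414),
    ("Gray-level variance", "Y", 0.0008, 0.3519, 0.5203),
    ("Normalized gray-level variance", "Y", 0.0007, 0.3330, 0.2980),
    ("Histogram entropy", "N", 0.0006, 0.3667, 2.6489),
    ("Histogram range", "N", 0.0004, 0.3840, 3.4788),
    ("Diagonal Laplacian", "Y", 0.0026, -0.0896, 0.2980),
    ("Steerable filters-based measure", "Y", 0.0067, 0.3288, 0.4463),
    ("Tenengrad variance", "Y", 0.0020, 0.0706, 0.2980),
]


def meets_criterion(in_focus, elapsed_s, correlation, entropy):
    """Assumed reading of the Criterion row: all four conditions must hold."""
    return (
        in_focus == "Y"
        and elapsed_s < 0.01
        and correlation > 0.2
        and entropy < 0.4
    )


selected = [name for name, *metrics in ROWS if meets_criterion(*metrics)]
print(selected)  # ['Normalized gray-level variance']
```

Under this reading, normalized gray-level variance is the only method in the table that meets all four thresholds; every other row fails at least one of them.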

| Search Method | Iterations | Accuracy (L1: ±0, L2: ±1, L3: ±2, Failure) | Improvement Percentage (%) |
|---|---|---|---|
| Global search | 110 | L1 | N/A |
| Hill-climbing search | 2 | Failure | Failure |
| Binary search | 8 | L2 | 92.73 |
| Rule-based search (12-3-2-1) | 39 | L1 | 64.55 |
| Rule-based search (12-4-3-2) | 24 | L2 | 78.18 |
| Rule-based search (12-5-4-3) | 19 | L2 | 82.73 |
| Rule-based search (12-6-5-4) | 16 | L3 | 85.45 |
| Gradient-based variable step search (0.25, 60, 1) | 9 | L2 | 91.82 |
| Gradient-based variable step search (0.26, 60, 1) | 11 | L2 | 90 |
| Gradient-based variable step search (0.27, 60, 1) | 8 | L2 | 92.73 |
| Gradient-based variable step search (0.28, 60, 1) | 14 | L1 | 87.27 |
| Gradient-based variable step search (0.29, 60, 1) | 8 | L2 | 92.73 |
| Gradient-based variable step search (0.30, 60, 1) | 9 | L2 | 91.82 |
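
The improvement percentages in the table are consistent with measuring the reduction in iterations relative to the 110 iterations of the global search. The formula below is inferred from the tabulated numbers and is not stated explicitly in the text, so it should be read as an assumption; the function name `improvement_percentage` is likewise hypothetical.

```python
GLOBAL_SEARCH_ITERATIONS = 110  # baseline taken from the "Global search" row


def improvement_percentage(iterations, baseline=GLOBAL_SEARCH_ITERATIONS):
    """Percentage reduction in iterations relative to the global search."""
    return round(100.0 * (baseline - iterations) / baseline, 2)


# Iteration counts copied from the table above.
for name, iterations in [
    ("Binary search", 8),
    ("Rule-based search (12-3-2-1)", 39),
    ("Gradient-based variable step search (0.28, 60, 1)", 14),
]:
    print(name, improvement_percentage(iterations))
```

For example, the binary search needs 8 iterations, giving 100 × (110 − 8) / 110 ≈ 92.73%, which matches the tabulated value.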

Inputs: Figure 5c,k. Outputs: the possible defect location image.

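| Sample Condition (Inspected by Experts) | Sample Item | Number of Honeycomb Defects Detected by the Algorithm |
|---|---|---|
| Honeycomb defect | 1 | 1 |
| Honeycomb defect | 2 | 5 |
| Honeycomb defect | 3 | 2 |
| Honeycomb defect | 4 | 46 |
| Honeycomb defect | 5 | 2 |
| Honeycomb defect | 6 | 5 |
| Honeycomb defect | 7 | 27 |
| Honeycomb defect | 8 | 57 |
| Honeycomb defect | 9 | 14 |
| Honeycomb defect | 10 | 245 |
| Honeycomb defect | 11 | 7 |
| Honeycomb defect | 12 | 4 |
| Honeycomb defect | 13 | 8 |
| Honeycomb defect | 14 | 27 |
| Honeycomb defect | 15 | 11 |
| Non-honeycomb defect | 1 | 0 |
| Non-honeycomb defect | 2 | 0 |
| Non-honeycomb defect | 3 | 0 |
| Non-honeycomb defect | 4 | 0 |
| Non-honeycomb defect | 5 | 0 |
| Non-honeycomb defect | 6 | 0 |
| Non-honeycomb defect | 7 | 0 |
| Non-honeycomb defect | 8 | 0 |
| Non-honeycomb defect | 9 | 0 |
| Non-honeycomb defect | 10 | 0 |
| Non-honeycomb defect | 11 | 0 |
| Non-honeycomb defect | 12 | 0 |
| Non-honeycomb defect | 13 | 0 |
| Non-honeycomb defect | 14 | 0 |
| Non-honeycomb defect | 15 | 0 |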

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jian, B.-L.; Peng, C.-C. Development of an Automatic Testing Platform for Aviator’s Night Vision Goggle Honeycomb Defect Inspection. Sensors 2017, 17, 1403. https://doi.org/10.3390/s17061403
