Registration of Vehicle-Borne Point Clouds and Panoramic Images Based on Sensor Constellations

Abstract

1. Introduction

1.1. Background

1.2. Previous Studies

1.3. Present Work

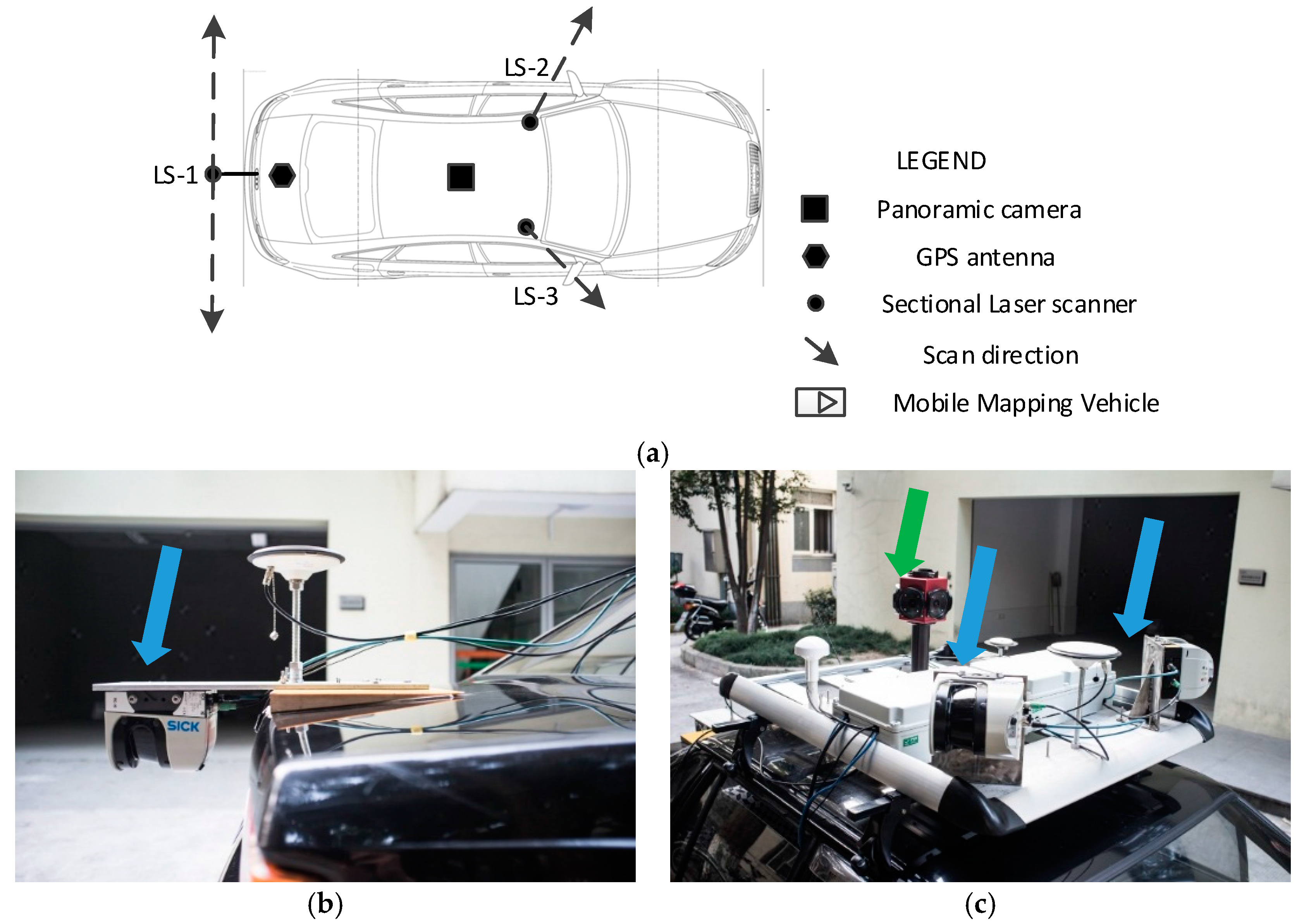

2. Sensor Constellation of MMS

3. Registration of Mobile Point Clouds and Panoramic Images Based on Sensor Constellations

3.1. Flowchart of Proposed Method

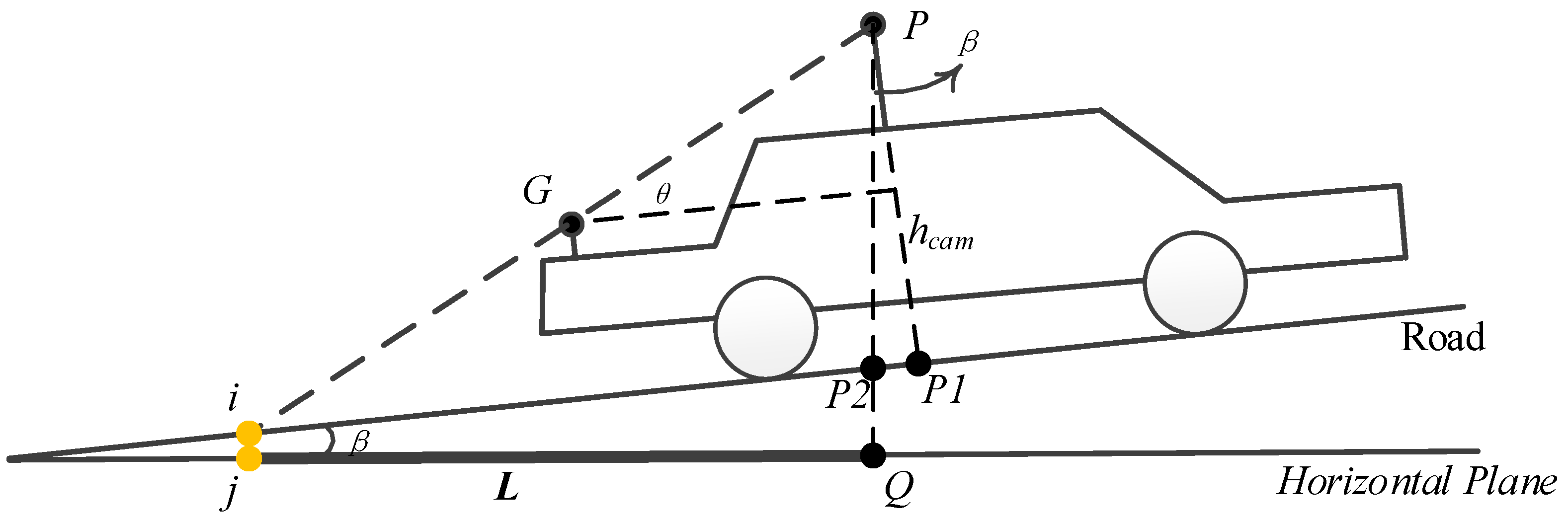

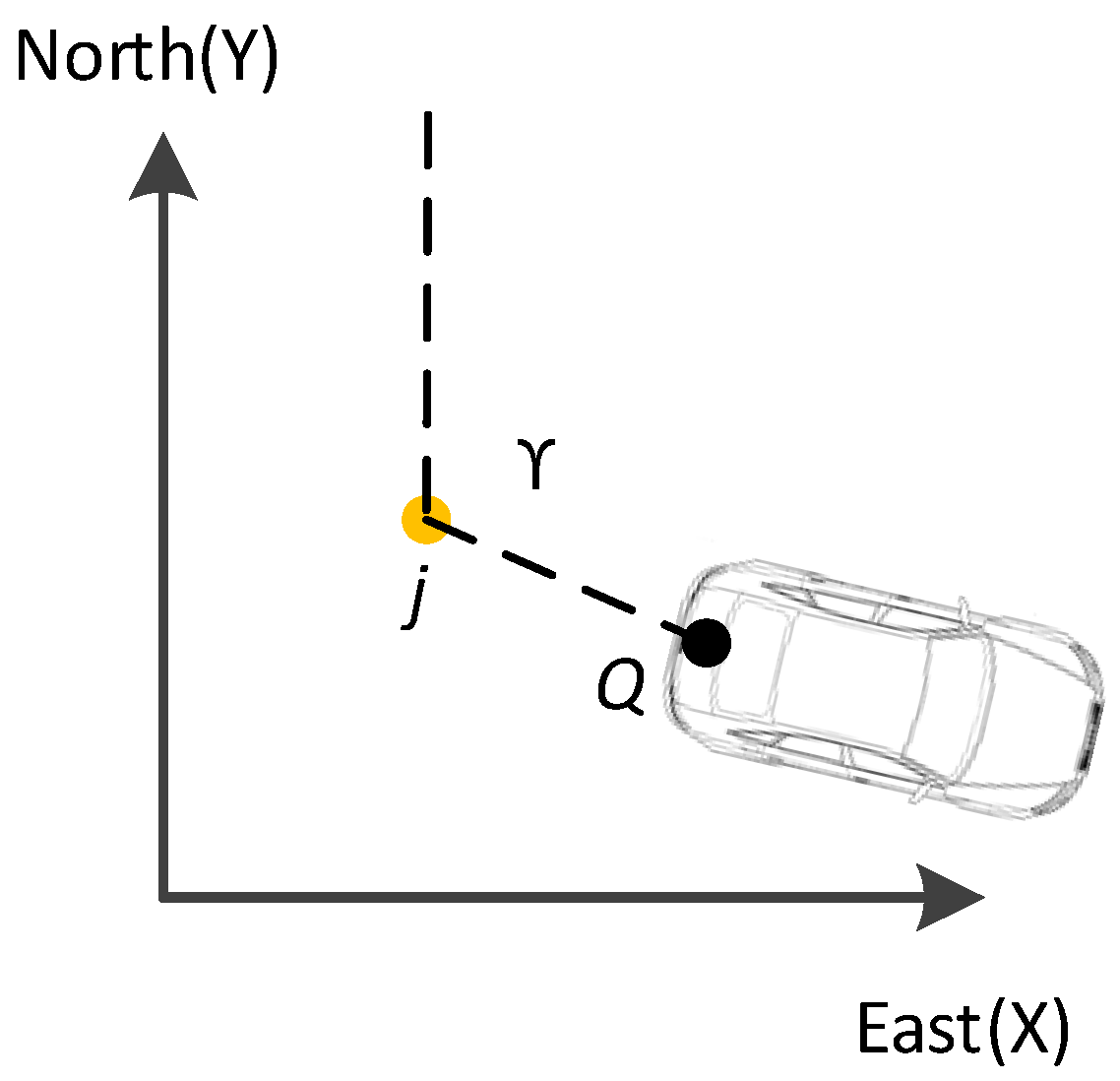

3.2. Segmentation Feature Point Extraction Based on Sensor Constellation

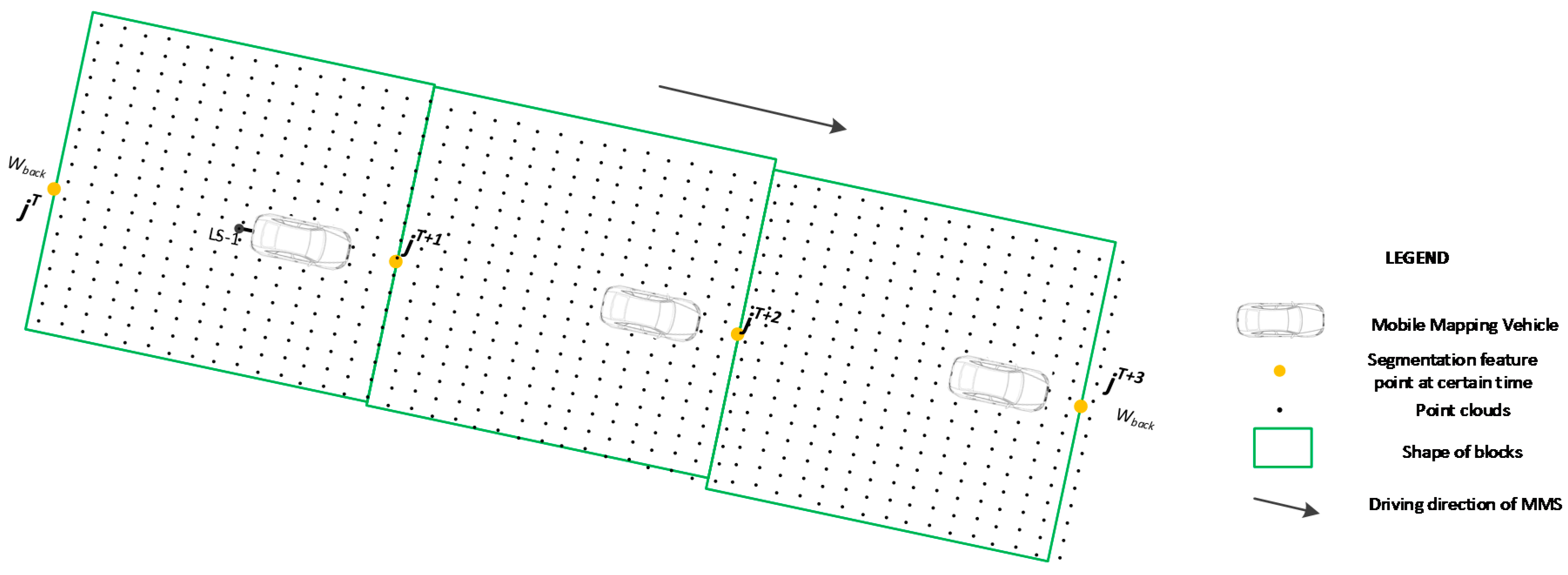

3.3. Division of Original Points into Blocks

3.3.1. Division of the Point Clouds Captured by LS-1

3.3.2. Division of the Point Clouds Captured by LS-2 and LS-3

3.4. Registration of a Block’s Point Clouds and Panoramic Images

4. Case Studies

4.1. Case Area

4.2. Registration Results

4.2.1. Efficiency Evaluation for Point Clouds from Different Laser Scanners

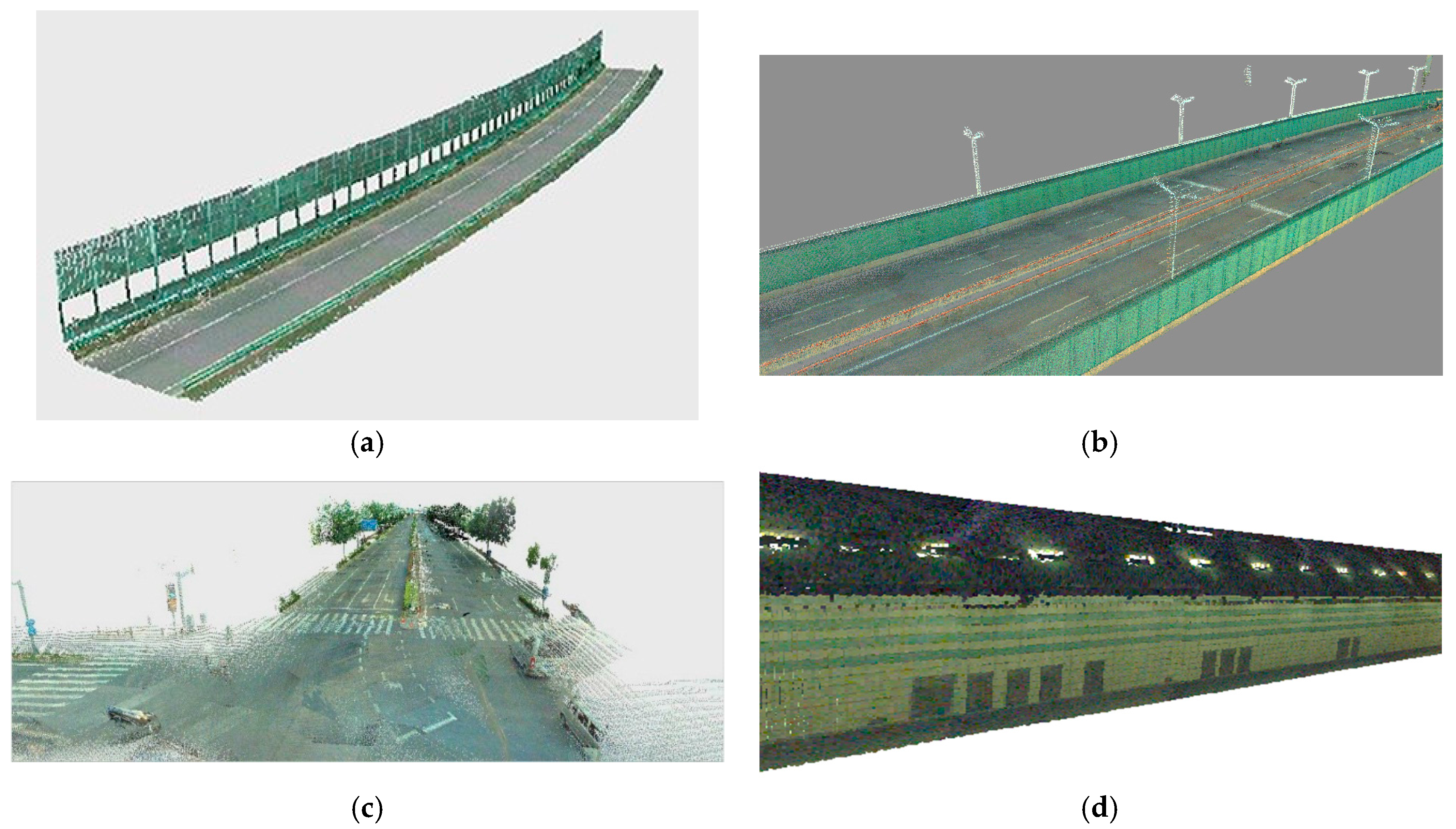

4.2.2. Visualization of Registration Results

4.3. Accuracy Evaluation

4.3.1. Evaluation Method

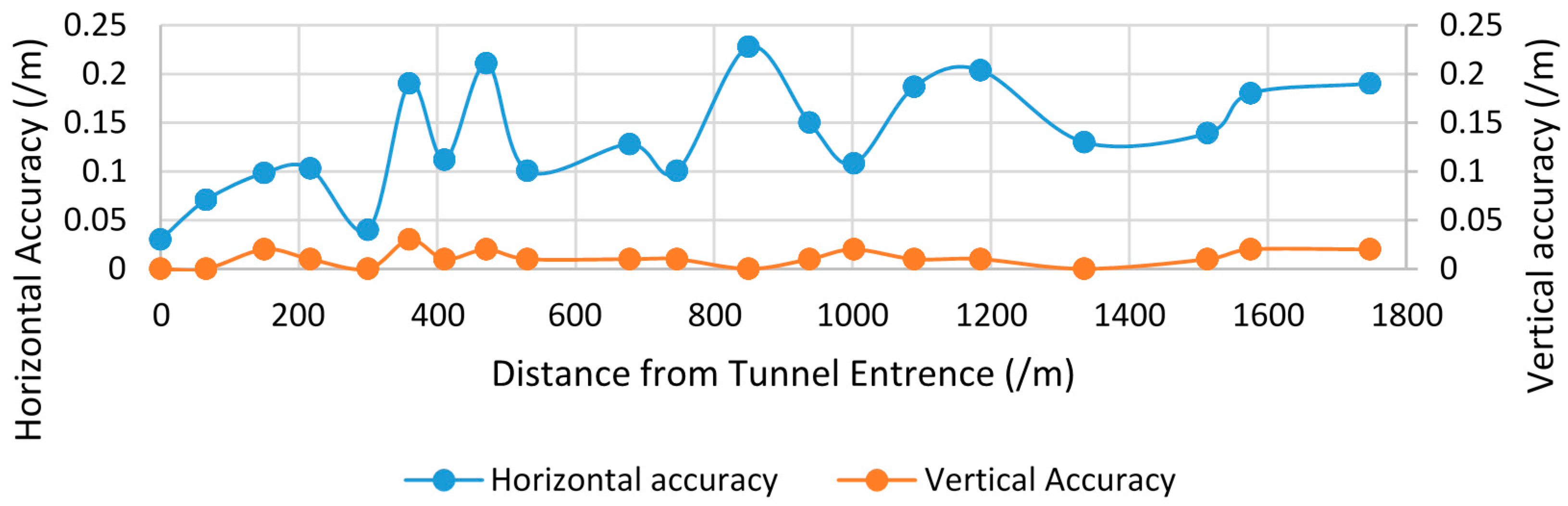

4.3.2. Evaluation Result

4.4. Discussion of the Main Factors Influencing Registration Accuracy

4.4.1. Time Synchronization for Different Sensors

4.4.2. Vehicle Speed

4.4.3. Positioning Error

5. Discussion and Conclusions

Acknowledgements

Author Contributions

Conflicts of Interest

Appendix A

| ID | X (before) | Y (before) | Z (before) | X (after) | Y (after) | Z (after) | Horizontal difference | Vertical difference | 3D difference |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 557,266.54 | 3,464,931.96 | 5.95 | 557,266.54 | 3,464,931.96 | 5.95 | 0.00 | 0.00 | 0.00 |
| 2 | 558,161.27 | 3,465,074.76 | 5.89 | 558,161.30 | 3,465,074.78 | 5.89 | 0.04 | 0.00 | 0.04 |
| 3 | 558,049.90 | 3,465,035.68 | 5.88 | 558,049.81 | 3,465,035.66 | 5.91 | 0.09 | 0.03 | 0.10 |
| 4 | 557,132.70 | 3,466,020.56 | 5.73 | 557,132.70 | 3,466,020.46 | 5.74 | 0.10 | 0.01 | 0.10 |
| 5 | 557,113.87 | 3,466,025.27 | 5.75 | 557,113.87 | 3,466,025.37 | 5.75 | 0.10 | 0.00 | 0.10 |
| 6 | 555,810.99 | 3,465,065.84 | 5.82 | 555,811.08 | 3,465,065.73 | 5.82 | 0.14 | 0.00 | 0.14 |
| 7 | 556,456.29 | 3,465,032.10 | 7.29 | 556,456.19 | 3,465,032.16 | 7.30 | 0.12 | 0.01 | 0.12 |
| 8 | 556,885.17 | 3,465,062.65 | 10.97 | 556,885.06 | 3,465,062.53 | 10.96 | 0.16 | 0.01 | 0.16 |
| 9 | 556,547.91 | 3,465,037.44 | 6.99 | 556,547.81 | 3,465,037.50 | 7.00 | 0.12 | 0.01 | 0.12 |
| 10 | 557,127.50 | 3,466,267.06 | 5.86 | 557,127.36 | 3,466,266.96 | 5.84 | 0.17 | 0.02 | 0.17 |
| 11 | 557,108.58 | 3,465,007.55 | 5.47 | 557,108.63 | 3,465,007.78 | 5.46 | 0.24 | 0.01 | 0.24 |
| 12 | 558,050.04 | 3,465,035.63 | 5.99 | 558,049.75 | 3,465,035.67 | 5.98 | 0.29 | 0.01 | 0.29 |
| 13 | 557,130.75 | 3,464,592.06 | 5.92 | 557,130.82 | 3,464,592.06 | 5.92 | 0.07 | 0.00 | 0.07 |
| 14 | 557,131.73 | 3,465,560.56 | 7.81 | 557,131.71 | 3,465,560.38 | 7.84 | 0.18 | 0.03 | 0.18 |
| 15 | 557,118.36 | 3,465,905.69 | 5.88 | 557,118.45 | 3,465,905.49 | 5.88 | 0.22 | 0.00 | 0.22 |
| 16 | 557,144.34 | 3,464,662.67 | 5.66 | 557,144.32 | 3,464,662.33 | 5.66 | 0.34 | 0.00 | 0.34 |
| 17 | 556,322.90 | 3,465,038.68 | 7.58 | 556,322.63 | 3,465,038.75 | 7.59 | 0.28 | 0.01 | 0.28 |
| 18 | 558,458.61 | 3,465,031.80 | 5.81 | 558,458.40 | 3,465,031.94 | 5.80 | 0.25 | 0.01 | 0.25 |
| 19 | 557,388.51 | 3,465,059.40 | 9.48 | 557,388.86 | 3,465,059.36 | 9.47 | 0.35 | 0.01 | 0.35 |
| 20 | 555,258.91 | 3,465,052.20 | 7.46 | 555,259.19 | 3,465,052.06 | 7.46 | 0.31 | 0.00 | 0.31 |

| ID | X (before) | Y (before) | Z (before) | X (after) | Y (after) | Z (after) | Horizontal difference | Vertical difference | 3D difference |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 529,034.53 | 3,426,244.34 | 7.09 | 529,034.53 | 3,426,244.34 | 7.09 | 0.00 | 0.00 | 0.00 |
| 2 | 529,278.04 | 3,424,996.51 | 13.86 | 529,278.04 | 3,424,996.51 | 13.86 | 0.00 | 0.00 | 0.00 |
| 3 | 529,296.45 | 3,423,696.15 | 6.23 | 529,296.45 | 3,423,696.15 | 6.23 | 0.00 | 0.00 | 0.00 |
| 4 | 529,601.83 | 3,422,438.62 | 6.41 | 529,601.90 | 3,422,438.66 | 6.42 | 0.08 | 0.01 | 0.08 |
| 5 | 530,364.20 | 3,421,574.78 | 6.68 | 530,364.23 | 3,421,574.81 | 6.69 | 0.04 | 0.01 | 0.04 |
| 6 | 531,210.65 | 3,420,911.27 | 7.35 | 531,210.69 | 3,420,911.32 | 7.34 | 0.06 | 0.01 | 0.06 |
| 7 | 531,896.87 | 3,420,146.05 | 5.94 | 531,896.92 | 3,420,146.07 | 5.94 | 0.05 | 0.00 | 0.05 |
| 8 | 532,229.98 | 3,419,233.14 | 5.86 | 532,230.08 | 3,419,233.16 | 5.86 | 0.10 | 0.00 | 0.10 |
| 9 | 532,474.61 | 3,418,398.48 | 7.56 | 532,474.69 | 3,418,398.40 | 7.55 | 0.11 | 0.01 | 0.11 |
| 10 | 532,079.20 | 3,417,664.18 | 6.25 | 532,079.23 | 3,417,664.06 | 6.25 | 0.12 | 0.00 | 0.12 |
| 11 | 532,531.10 | 3,417,673.95 | 7.63 | 532,530.99 | 3,417,673.98 | 7.64 | 0.11 | 0.01 | 0.11 |
| 12 | 532,504.34 | 3,418,387.89 | 7.60 | 532,504.34 | 3,418,387.89 | 7.60 | 0.00 | 0.00 | 0.00 |
| 13 | 532,251.38 | 3,419,256.93 | 6.05 | 532,251.36 | 3,419,256.79 | 6.06 | 0.14 | 0.01 | 0.14 |
| 14 | 531,904.05 | 3,420,172.37 | 6.27 | 531,903.94 | 3,420,172.31 | 6.27 | 0.13 | 0.00 | 0.13 |
| 15 | 531,224.95 | 3,420,924.95 | 7.56 | 531,224.96 | 3,420,924.79 | 7.57 | 0.16 | 0.01 | 0.16 |
| 16 | 530,387.18 | 3,421,581.64 | 6.96 | 530,387.20 | 3,421,581.51 | 6.97 | 0.13 | 0.01 | 0.13 |
| 17 | 529,614.89 | 3,422,455.49 | 6.17 | 529,614.77 | 3,422,455.43 | 6.18 | 0.13 | 0.01 | 0.13 |
| 18 | 529,316.68 | 3,423,691.46 | 6.09 | 529,316.59 | 3,423,691.46 | 6.09 | 0.09 | 0.00 | 0.09 |
| 19 | 529,301.14 | 3,424,989.35 | 13.86 | 529,301.14 | 3,424,989.35 | 13.86 | 0.00 | 0.00 | 0.00 |
| 20 | 529,073.28 | 3,426,233.00 | 7.77 | 529,073.23 | 3,426,233.09 | 7.77 | 0.10 | 0.00 | 0.10 |

| ID | X (before) | Y (before) | Z (before) | X (after) | Y (after) | Z (after) | Horizontal difference | Vertical difference | 3D difference |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 554,018.04 | 3,464,962.70 | −5.59 | 554,018.04 | 3,464,962.67 | −5.59 | 0.03 | 0.00 | 0.03 |
| 2 | 553,953.54 | 3,464,949.68 | −8.90 | 553,953.55 | 3,464,949.61 | −8.90 | 0.07 | 0.00 | 0.07 |
| 3 | 553,871.04 | 3,464,933.35 | −13.28 | 553,870.95 | 3,464,933.31 | −13.26 | 0.10 | 0.02 | 0.10 |
| 4 | 553,805.49 | 3,464,922.17 | −16.53 | 553,805.40 | 3,464,922.12 | −16.52 | 0.10 | 0.01 | 0.10 |
| 5 | 553,722.65 | 3,464,911.07 | −20.63 | 553,722.65 | 3,464,911.11 | −20.63 | 0.04 | 0.00 | 0.04 |
| 6 | 553,663.13 | 3,464,905.16 | −23.48 | 553,663.32 | 3,464,905.15 | −23.45 | 0.19 | 0.03 | 0.19 |
| 7 | 553,612.16 | 3,464,901.45 | −25.88 | 553,612.26 | 3,464,901.40 | −25.87 | 0.11 | 0.01 | 0.11 |
| 8 | 553,551.50 | 3,464,898.54 | −28.75 | 553,551.71 | 3,464,898.52 | −28.73 | 0.21 | 0.02 | 0.21 |
| 9 | 553,491.84 | 3,464,897.35 | −31.51 | 553,491.74 | 3,464,897.36 | −31.50 | 0.10 | 0.01 | 0.10 |
| 10 | 553,342.61 | 3,464,901.97 | −38.04 | 553,342.71 | 3,464,901.89 | −38.03 | 0.13 | 0.01 | 0.13 |
| 11 | 553,273.86 | 3,464,907.56 | −40.30 | 553,273.76 | 3,464,907.57 | −40.31 | 0.10 | 0.01 | 0.10 |
| 12 | 553,169.33 | 3,464,920.51 | −40.67 | 553,169.19 | 3,464,920.33 | −40.67 | 0.23 | 0.00 | 0.23 |
| 13 | 553,080.47 | 3,464,935.61 | −38.84 | 553,080.35 | 3,464,935.52 | −38.85 | 0.15 | 0.01 | 0.15 |
| 14 | 553,016.10 | 3,464,948.96 | −37.44 | 553,016.16 | 3,464,948.87 | −37.42 | 0.11 | 0.02 | 0.11 |
| 15 | 552,928.97 | 3,464,970.59 | −35.29 | 552,928.79 | 3,464,970.54 | −35.28 | 0.19 | 0.01 | 0.19 |
| 16 | 552,833.60 | 3,464,998.49 | −31.51 | 552,833.80 | 3,464,998.53 | −31.50 | 0.20 | 0.01 | 0.20 |
| 17 | 552,686.29 | 3,465,051.83 | −24.51 | 552,686.17 | 3,465,051.78 | −24.51 | 0.13 | 0.00 | 0.13 |
| 18 | 552,514.30 | 3,465,128.75 | −15.75 | 552,514.17 | 3,465,128.70 | −15.76 | 0.14 | 0.01 | 0.14 |
| 19 | 552,454.70 | 3,465,156.64 | −12.71 | 552,454.52 | 3,465,156.65 | −12.73 | 0.18 | 0.02 | 0.18 |
| 20 | 552,290.84 | 3,465,229.46 | −4.36 | 552,290.65 | 3,465,229.47 | −4.38 | 0.19 | 0.02 | 0.19 |

| ID | X (before) | Y (before) | Z (before) | X (after) | Y (after) | Z (after) | Horizontal difference | Vertical difference | 3D difference |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 551,179.32 | 3,451,668.88 | 5.57 | 551,179.28 | 3,451,668.87 | 5.57 | 0.04 | 0.00 | 0.04 |
| 2 | 551,244.46 | 3,451,317.43 | 5.56 | 551,244.43 | 3,451,317.42 | 5.57 | 0.03 | 0.01 | 0.03 |
| 3 | 551,341.51 | 3,451,259.73 | 6.26 | 551,341.46 | 3,451,259.65 | 6.26 | 0.09 | 0.00 | 0.09 |
| 4 | 551,487.13 | 3,450,511.96 | 7.77 | 551,487.12 | 3,450,512.06 | 7.79 | 0.10 | 0.02 | 0.10 |
| 5 | 551,361.83 | 3,450,949.48 | 7.66 | 551,361.84 | 3,450,949.40 | 7.68 | 0.08 | 0.02 | 0.08 |
| 6 | 551,013.69 | 3,451,205.67 | 5.76 | 551,013.70 | 3,451,205.64 | 5.75 | 0.03 | 0.01 | 0.03 |
| 7 | 551,253.57 | 3,451,315.07 | 5.58 | 551,253.54 | 3,451,315.06 | 5.59 | 0.03 | 0.01 | 0.03 |
| 8 | 551,165.41 | 3,451,722.61 | 5.81 | 551,165.39 | 3,451,722.44 | 5.83 | 0.17 | 0.02 | 0.17 |
| 9 | 551,458.43 | 3,450,603.59 | 10.52 | 551,458.47 | 3,450,603.60 | 10.52 | 0.04 | 0.00 | 0.04 |
| 10 | 551,532.58 | 3,450,377.08 | 6.07 | 551,532.62 | 3,450,377.11 | 6.06 | 0.05 | 0.01 | 0.05 |
| 11 | 551,308.99 | 3,451,118.60 | 5.32 | 551,308.93 | 3,451,118.58 | 5.32 | 0.06 | 0.00 | 0.06 |
| 12 | 551,197.67 | 3,451,603.26 | 5.49 | 551,197.76 | 3,451,603.20 | 5.47 | 0.11 | 0.02 | 0.11 |
| 13 | 551,114.85 | 3,451,986.20 | 5.57 | 551,114.90 | 3,451,986.13 | 5.58 | 0.09 | 0.01 | 0.09 |
| 14 | 551,043.35 | 3,452,331.86 | 5.55 | 551,043.40 | 3,452,331.59 | 5.55 | 0.27 | 0.00 | 0.27 |
| 15 | 550,908.49 | 3,452,987.78 | 7.50 | 550,908.52 | 3,452,987.57 | 7.50 | 0.21 | 0.00 | 0.21 |
| 16 | 550,873.00 | 3,453,263.91 | 12.26 | 550,872.98 | 3,453,264.28 | 12.27 | 0.37 | 0.01 | 0.37 |
| 17 | 551,009.54 | 3,452,439.48 | 5.86 | 551,009.49 | 3,452,439.87 | 5.89 | 0.39 | 0.03 | 0.39 |
| 18 | 551,132.00 | 3,451,866.43 | 5.82 | 551,132.06 | 3,451,866.35 | 5.85 | 0.10 | 0.03 | 0.10 |
| 19 | 551,101.99 | 3,452,047.10 | 5.51 | 551,102.07 | 3,452,046.83 | 5.52 | 0.28 | 0.01 | 0.28 |
| 20 | 550,984.51 | 3,452,623.22 | 6.30 | 550,984.55 | 3,452,623.00 | 6.30 | 0.22 | 0.00 | 0.22 |
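The last three columns of the Appendix A tables carry no surviving labels. Their values appear consistent with the horizontal (XY), vertical (Z), and three-dimensional distances between each check point's coordinates before and after registration. The following Python sketch works under that assumption; the function name `registration_differences` is hypothetical and is not taken from the paper. It reproduces the third row of the first table.

```python
import math


def registration_differences(before, after):
    """Return (horizontal, vertical, spatial) distances between two (X, Y, Z) points.

    Assumption: the unlabeled table columns are the XY-plane distance,
    the absolute Z difference, and the full 3D distance.
    """
    dx = after[0] - before[0]
    dy = after[1] - before[1]
    dz = after[2] - before[2]
    horizontal = math.hypot(dx, dy)                  # distance in the XY plane
    vertical = abs(dz)                               # elevation difference
    spatial = math.sqrt(dx * dx + dy * dy + dz * dz)  # 3D distance
    return horizontal, vertical, spatial


# Check point 3 of the first table in Appendix A.
before = (558049.90, 3465035.68, 5.88)
after = (558049.81, 3465035.66, 5.91)

h, v, s = registration_differences(before, after)
print(f"{h:.2f} {v:.2f} {s:.2f}")  # 0.09 0.03 0.10
```

The rounded output agrees with the tabulated values 0.09, 0.03, and 0.10 for that row. This supports the interpretation but does not confirm the column labels used in the original publication.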

| Laser Scanner | Lens of Panoramic Camera |
|---|---|
| LS-1 | Lens-0 |
| LS-2 | Lens-1, Lens-2, Lens-5 |
| LS-3 | Lens-3, Lens-4, Lens-5 |

| Case Type | Overpass | Freeway | Tunnel | Surface Roads |
|---|---|---|---|---|
| Environment complexity | Complex | Simple | Simple | Complex |
| GPS signal | Good | Good | None | Average |
| Length (km) | 30.8 | 27.5 | 2.0 | 11.6 |
| Average speed (km/h) | 30 | 40 | 30 | 22 |
| Time span (min) | 61.50 | 41.27 | 3.34 | 31.75 |

| Type | Laser Scanner | Total Points | Matched Points | Match Rate (%) | Computation Time (s) |
|---|---|---|---|---|---|
| Overpass | LS-1 | 120,210,560 | 120,099,095 | 99.91 | 3163 |
| Overpass | LS-2 | 46,040,486 | 45,750,431 | 99.37 | 1972 |
| Overpass | LS-3 | 48,009,700 | 47,625,623 | 99.20 | 2060 |
| Freeway | LS-1 | 72,612,193 | 72,601,383 | 99.98 | 1910 |
| Freeway | LS-2 | 26,218,503 | 26,207,957 | 99.95 | 1092 |
| Freeway | LS-3 | 28,495,605 | 28,486,135 | 99.96 | 1187 |
| Surface roads | LS-1 | 61,756,177 | 61,695,284 | 99.90 | 1625 |
| Surface roads | LS-2 | 23,249,154 | 23,238,371 | 99.95 | 968 |
| Surface roads | LS-3 | 26,393,008 | 26,381,647 | 99.95 | 1099 |
| Tunnel | LS-1 | 8,582,302 | 8,511,419 | 99.79 | 199 |
| Tunnel | LS-2 | 4,797,171 | 4,787,142 | 99.17 | 225 |
| Tunnel | LS-3 | 6,002,796 | 5,971,450 | 99.47 | 250 |
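The match rate in the table above appears to be the share of a scanner's points that were matched to a panoramic image, expressed as a percentage. A minimal Python sketch under that assumption follows; the helper `match_rate` is a hypothetical name, and the figures are those of the Overpass LS-1 row.

```python
def match_rate(total_points, matched_points):
    """Percentage of points that were matched (assumed definition)."""
    if total_points <= 0:
        raise ValueError("total_points must be positive")
    return 100.0 * matched_points / total_points


# Overpass, LS-1: total and matched point counts from the table.
rate = match_rate(120_210_560, 120_099_095)
print(f"{rate:.2f}")  # 99.91
```

The result agrees with the tabulated 99.91% for that row. Not every row need reproduce exactly under this definition, so the sketch is a reading aid rather than a statement of how the authors computed the column.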

| Laser Scanner | Total Points | Matched Points | Total Computation Time (s) | Average Computation Efficiency |
|---|---|---|---|---|
| LS-1 | 263,161,232 | 262,907,161 | 6897 | 38,155 |
| LS-2 | 101,305,314 | 99,983,901 | 4257 | 23,870 |
| LS-3 | 108,901,109 | 108,464,855 | 4596 | 24,006 |

| Index | Case Area | Horizontal Min. | Horizontal Max. | Horizontal Avg. | Vertical Min. | Vertical Max. | Vertical Avg. | 3D Min. | 3D Max. | 3D Avg. |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Overpass | 0.000 | 0.340 | 0.178 | 0.000 | 0.030 | 0.009 | 0.000 | 0.352 | 0.179 |
| 2 | Intersection | 0.031 | 0.393 | 0.139 | 0.000 | 0.020 | 0.011 | 0.033 | 0.394 | 0.140 |
| 3 | Tunnel | 0.030 | 0.228 | 0.135 | 0.000 | 0.020 | 0.011 | 0.030 | 0.228 | 0.135 |
| 4 | Freeway | 0.000 | 0.160 | 0.079 | 0.000 | 0.010 | 0.020 | 0.000 | 0.161 | 0.112 |

| Sensor | Time System |
|---|---|
| GPS | GPS time |
| IMU | GPS time |
| Panoramic camera | GPS time |
| Laser scanner | Windows time |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yao, L.; Wu, H.; Li, Y.; Meng, B.; Qian, J.; Liu, C.; Fan, H. Registration of Vehicle-Borne Point Clouds and Panoramic Images Based on Sensor Constellations. Sensors 2017, 17, 837. https://doi.org/10.3390/s17040837