1. Introduction

As one of the main structures of machine vision sensors, the binocular stereo vision sensor (BSVS) acquires 3D scene geometry from a single pair of images and has many applications in industrial product inspection, robot navigation, virtual reality, etc. [1,2,3]. Calibration of the structural parameters is a central issue in BSVS. Current calibration methods can be roughly classified into three categories: methods based on 3D targets, 2D targets and 1D targets. 3D target-based methods [4,5] obtain the structural parameters by placing the target only once in the sensor field of view. Their disadvantages are that large 3D targets are exceedingly difficult to machine, and that it is usually impossible to capture a calibration image in which all feature points are equally sharp. 2D target-based methods [2,6] require the planar target to be placed freely at least twice in different positions and orientations, and the calibration features from the different placements are unified into a common sensor coordinate frame through the camera coordinate frame. The calibration operation is therefore more convenient than with 3D targets. However, there are two primary weaknesses. First, repeated unification of the calibration features increases the transformation errors. Second, when the two cameras form a large viewing angle, or when a multi-camera system requires calibration, it is difficult to keep all features equally sharp in the calibration images of all cameras simultaneously. In 1D target-based methods [7], which are much more convenient than 2D target-based methods, the target is freely placed no fewer than four times in different positions and orientations. The image points of the calibration feature points are used to determine the rotation matrix R and the translation vector T, and the scale factor of T is obtained from the known distance constraint. Unfortunately, 1D target-based methods share the weaknesses of 2D target-based methods. Moreover, in practice, a 1D target must be placed many times to obtain enough feature points.

The sphere is widely used in machine vision calibration owing to its spatial uniformity and symmetry [8,9,10,11,12,13,14,15,16,17]. Agrawal et al. [11] and Zhang et al. [16] both used spheres to calibrate the intrinsic camera parameters through the relationship between the projected ellipse of the sphere and the dual image of the absolute conic (DIAC). They also mentioned that the structural parameters between two or more cameras could be obtained by 3D point cloud registration. However, this approach requires many feature points to guarantee high accuracy. Wong et al. [17] proposed two methods to recover the fundamental matrix, from which the structural parameters can be deduced when the intrinsic parameters of the two cameras are known. The first method uses sphere centres, intersection points and visual points of tangency to compute the fundamental matrix. The second determines the fundamental matrix from the homography matrix and the epipoles, which are computed via plane-induced homography. However, the second method requires an extra planar target to transfer the projected ellipse from the first view to the second view.

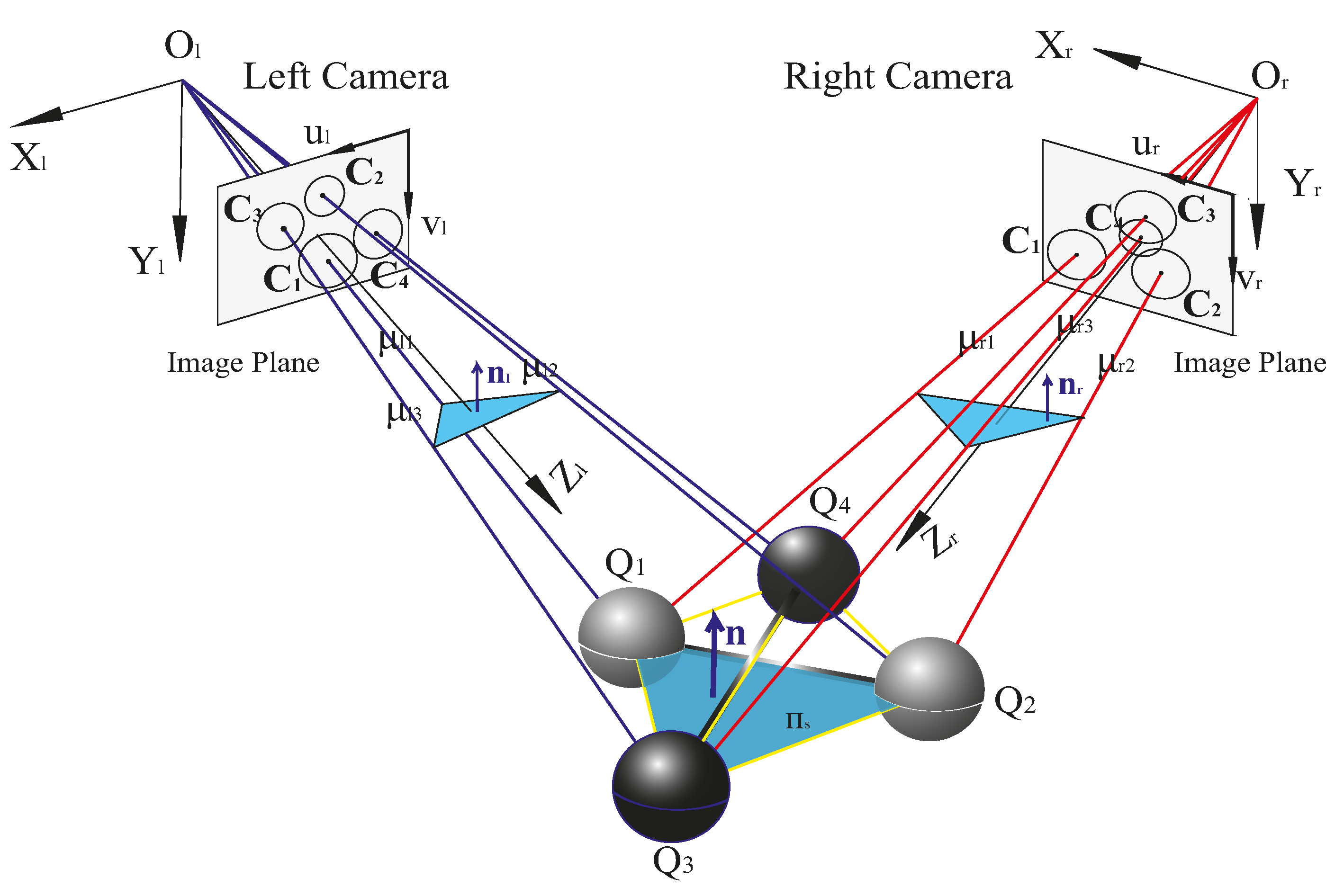

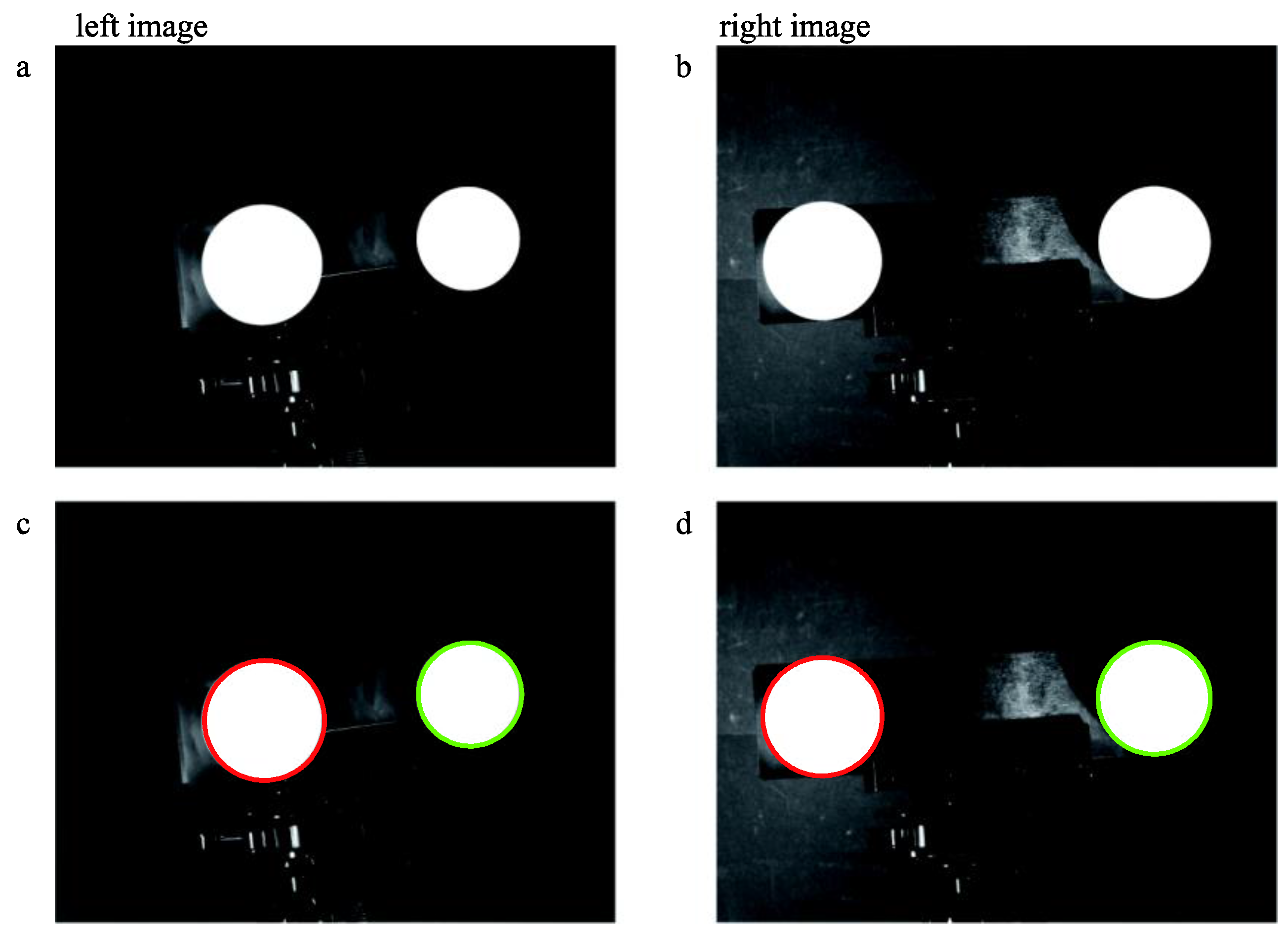

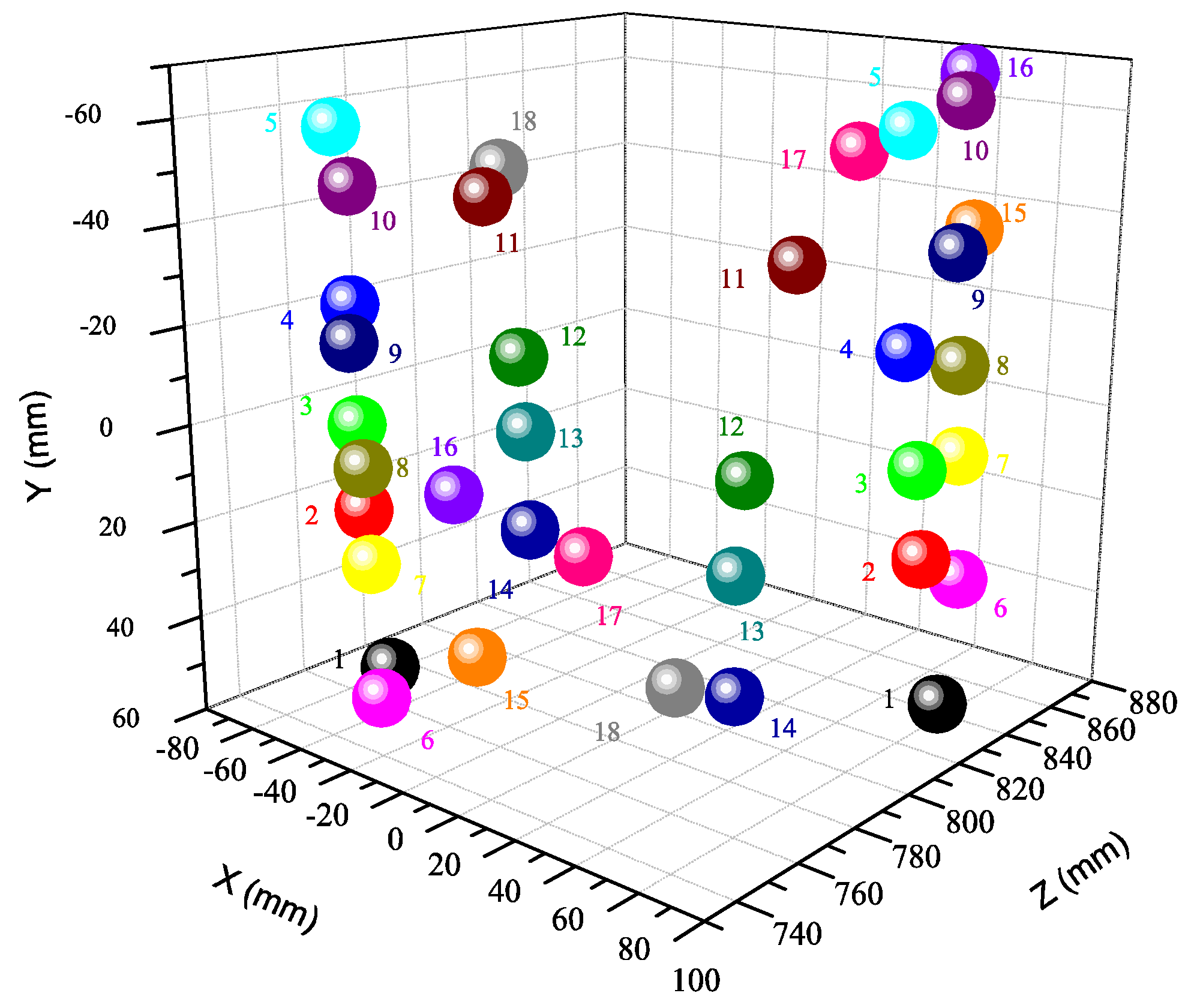

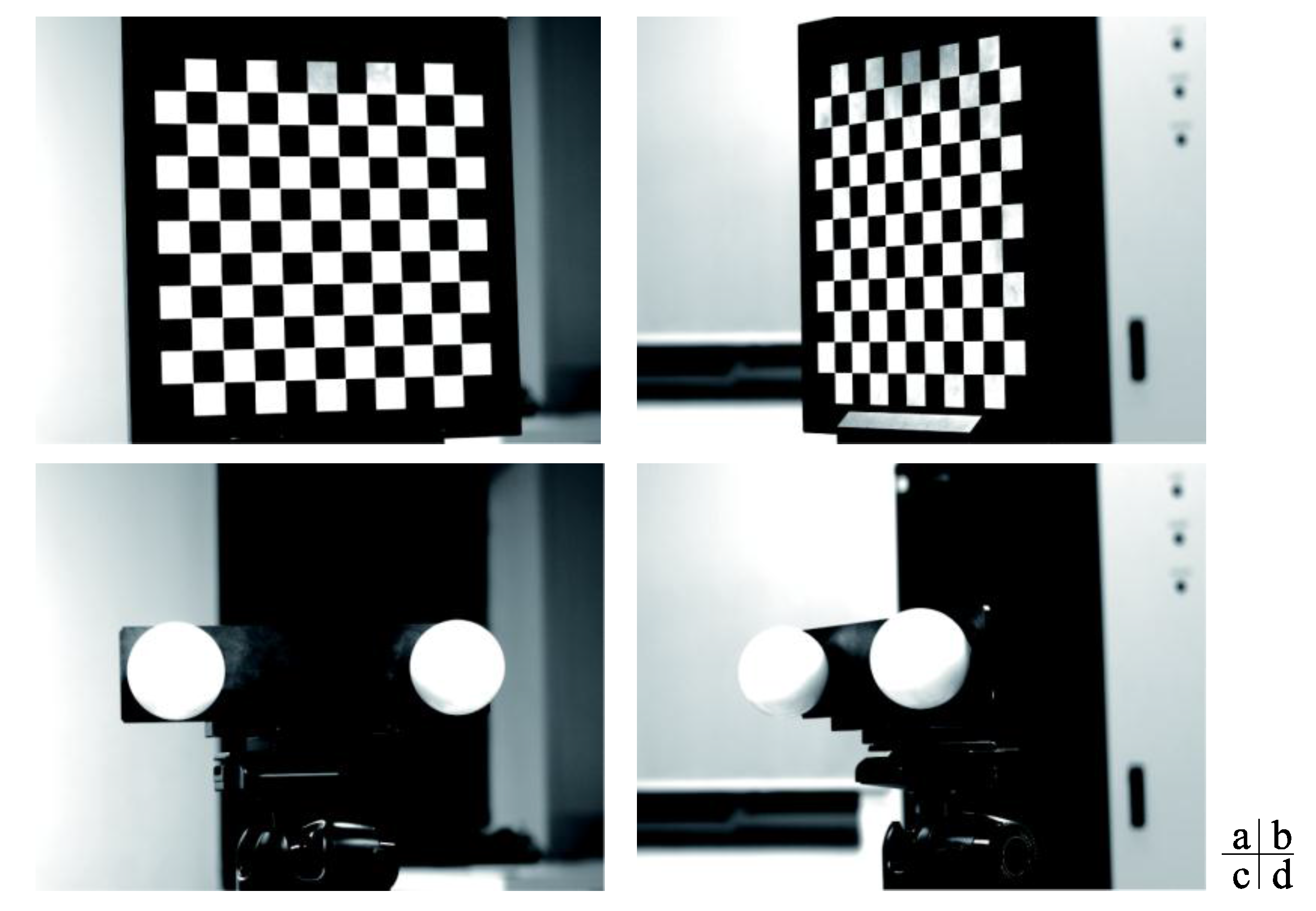

In this paper, we propose a method using a double-sphere target to calibrate the structural parameters of BSVS. The target consists of two identical spheres of unknown radius fixed by a rigid bar of known length. During calibration, the double-sphere target is placed freely at least twice in different positions and orientations. From the projected ellipses of the spheres, the image points of the sphere centres and a so-called depth-scale factor for each sphere can be calculated. Any three non-collinear sphere centres determine a spatial plane πs; if at least three non-parallel planes are available with their normal vectors known in both camera coordinate frames, the rotation matrix R can be solved. However, πs cannot be obtained directly. We obtain its normal vector from an intermediate plane parallel to πs, which is recovered from the depth-scale factors and the image points of the sphere centres. From the epipolar geometry, a linear relation between the translation vector T (up to a scale factor) and the image points is derived and solved by SVD. The scale factor is then determined from the known distance constraint. Finally, R and T are jointly refined by the Levenberg-Marquardt algorithm. Due to the complete symmetry of the sphere, wherever the sphere is placed in the sensor field of view, all cameras capture images of the sphere of the same high quality, which is essential for consistent calibration, even if the angle between the principal rays of the two cameras is large. Moreover, in multi-camera system calibration, it is often difficult to make the target features simultaneously visible in all views because of the variety of camera positions and orientations. In general, the cameras are divided into several smaller groups, each group is calibrated separately, and finally all cameras are registered into a common reference coordinate frame [17]. With the double-sphere target, however, the relationship between all cameras sharing a common field of view can be obtained in a single calibration. The unfavourable two-camera configuration mentioned above (a large angle between the principal rays) also occurs frequently in multi-camera calibration. Therefore, the double-sphere target reduces the number of calibrations required and makes the calibration operation easy and efficient.
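The two linear steps outlined above can be illustrated with a minimal NumPy sketch. It assumes the convention X_r = R X_l + T between the two camera frames, normalized image points of the form (x, y, 1), and consistently oriented unit normals. All function names are hypothetical, and the SVD-based orthogonal Procrustes (Kabsch) solution used for R is one common choice, not necessarily the solver used in this paper. The Levenberg-Marquardt refinement is omitted.

```python
import numpy as np

def rotation_from_normals(n_left, n_right):
    """Least-squares R such that n_right[i] ~= R @ n_left[i].

    Uses the SVD-based orthogonal Procrustes (Kabsch) solution; needs at
    least three non-parallel, consistently oriented normals (one per row).
    """
    H = np.asarray(n_left, float).T @ np.asarray(n_right, float)
    U, _, Vt = np.linalg.svd(H)
    # Force det(R) = +1 so the result is a rotation, not a reflection.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    return Vt.T @ D @ U.T

def translation_direction(R, x_left, x_right):
    """Unit vector t (sign still ambiguous) with t . ((R x_l) x x_r) = 0."""
    rows = np.cross(np.asarray(x_left, float) @ R.T, np.asarray(x_right, float))
    _, _, Vt = np.linalg.svd(rows)
    return Vt[-1]  # right singular vector of the smallest singular value

def left_depth(R, t, xl, xr):
    """Depth z_l of one point from z_r * x_r - z_l * (R @ x_l) = t."""
    M = np.column_stack([-(R @ xl), xr])
    z, *_ = np.linalg.lstsq(M, t, rcond=None)
    return z[0]

def scaled_translation(R, t, xl_pair, xr_pair, bar_length):
    """Fix sign and scale of t from two sphere centres a known distance apart."""
    z0 = left_depth(R, t, xl_pair[0], xr_pair[0])
    z1 = left_depth(R, t, xl_pair[1], xr_pair[1])
    if z0 < 0:  # the centres must lie in front of the camera
        t, z0, z1 = -t, -z0, -z1
    d = np.linalg.norm(z0 * xl_pair[0] - z1 * xl_pair[1])
    return t * (bar_length / d)
```

Here `xl_pair` and `xr_pair` hold the normalized image points of the two sphere centres of one placement, and `bar_length` is the known distance between the centres. Because the epipolar relation is homogeneous in T, the sign is fixed by requiring positive depth and the magnitude by the known distance.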

The remaining sections of this paper are organized as follows: Section 2 briefly describes a few basic properties of the projected ellipse of the sphere. Section 3 elaborates the principles of the proposed calibration method based on the double-sphere target. Section 4 provides a detailed analysis of the impact on the image point of the sphere centre when the projected ellipse is extracted with a positional deviation. Section 5 presents computer simulations and real data experiments to verify the proposed method. The conclusions are given in Section 6.

4. Error Analysis

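The general equation of the ellipse is $ax^2 + bxy + cy^2 + dx + ey + f = 0$, and the coordinates of the ellipse centre $(x_c, y_c)$ are given as follows:

$$x_c = \frac{be - 2cd}{4ac - b^2}, \qquad y_c = \frac{bd - 2ae}{4ac - b^2} \tag{22}$$

The matrix form of the ellipse is written as $\tilde{\mathbf{x}}^T \mathbf{C} \tilde{\mathbf{x}} = 0$, with $\tilde{\mathbf{x}} = (x, y, 1)^T$ and $\mathbf{C} = \begin{bmatrix} a & b/2 & d/2 \\ b/2 & c & e/2 \\ d/2 & e/2 & f \end{bmatrix}$. The dual $\mathbf{C}^*$ of $\mathbf{C}$ is given by:

$$\mathbf{C}^* = \lambda \mathbf{C}^{-1} \tag{23}$$

where $\lambda$ is an unknown scale factor. Combining Equations (22) and (23), we can then obtain the following relationship between the ellipse centre $(x_c, y_c)$ and the elements of matrix $\mathbf{C}^*$:

$$x_c = \frac{C^*_{13}}{C^*_{33}}, \qquad y_c = \frac{C^*_{23}}{C^*_{33}} \tag{24}$$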
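As a numerical check of the relationship between the ellipse centre and the dual conic, the sketch below assumes the ellipse is written as $ax^2 + bxy + cy^2 + dx + ey + f = 0$; this coefficient convention and the function names are illustrative assumptions. It compares the closed-form centre with the ratios of the entries in the third column of the adjugate of the conic matrix, which equals the dual conic up to scale.

```python
def centre_from_coefficients(a, b, c, d, e, f):
    """Centre of a*x^2 + b*x*y + c*y^2 + d*x + e*y + f = 0 (closed form)."""
    den = 4.0 * a * c - b * b
    return (b * e - 2.0 * c * d) / den, (b * d - 2.0 * a * e) / den

def centre_from_dual(a, b, c, d, e, f):
    """Centre as (C*_13 / C*_33, C*_23 / C*_33), with C* the adjugate of C."""
    # Symmetric conic matrix C = [[a, b/2, d/2], [b/2, c, e/2], [d/2, e/2, f]].
    c01, c02, c12 = b / 2.0, d / 2.0, e / 2.0
    # Third column of adj(C); the unknown scale factor cancels in the ratios.
    s13 = c01 * c12 - c02 * c
    s23 = c01 * c02 - a * c12
    s33 = a * c - c01 * c01
    return s13 / s33, s23 / s33

# Ellipse (x - 3)^2 / 4 + (y + 1)^2 = 1, expanded and multiplied by 4.
coeffs = (1.0, 0.0, 4.0, -6.0, 8.0, 9.0)
print(centre_from_coefficients(*coeffs))  # (3.0, -1.0)
print(centre_from_dual(*coeffs))          # (3.0, -1.0)
```

The constant term f does not influence the centre, which is why neither function uses it beyond accepting it for a uniform signature.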

Many factors affect the extraction of the ellipse contour points, and noise can introduce a positional deviation in the extracted points. We now discuss how this deviation influences the computation of the image point of the sphere centre.

Suppose that the shape of the ellipse remains constant and the ellipse does not rotate; then we use the ellipse centre to represent the position of the ellipse. Let denote the sphere, denote the projected ellipse of sphere , and denote the image point of the sphere centre.

To simplify the discussion, consider the condition in which the sphere centre is in the first quadrant of the camera coordinate frame. Because the sphere centre can be located in the first quadrant of the camera coordinate frame by rotating the camera, this discussion can be generalized.
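To make the distinction between the ellipse centre and the image point of the sphere centre concrete, the sketch below assumes a normalized camera (intrinsic matrix equal to the identity). It uses the standard tangent-cone result that a sphere with centre $\mathbf{X}_0 = (X, Y, Z)^T$ and radius $r$ projects to a conic whose dual is proportional to $\mathbf{I} - \mathbf{X}_0 \mathbf{X}_0^T / r^2$. The function names are hypothetical, and the formula is stated here from the geometry rather than copied from Equation (5).

```python
def sphere_dual_conic(X, Y, Z, r):
    """Dual conic (up to scale) of a sphere's image for a normalized camera."""
    c = (X, Y, Z)
    return [[(1.0 if i == j else 0.0) - c[i] * c[j] / r**2 for j in range(3)]
            for i in range(3)]

def ellipse_centre(dual):
    """Centre of the projected ellipse from the third column of the dual conic."""
    return dual[0][2] / dual[2][2], dual[1][2] / dual[2][2]

# Sphere centre in the first quadrant and in front of the camera.
X, Y, Z = 0.2, 0.1, 1.0
for r in (0.05, 0.10, 0.20):
    xc, yc = ellipse_centre(sphere_dual_conic(X, Y, Z, r))
    u, v = X / Z, Y / Z  # image point of the sphere centre
    # The two differ by the factor 1 / (1 - r^2 / Z^2), which grows with r / Z.
    print(f"r/Z = {r / Z:.2f}: centre image = ({u:.4f}, {v:.4f}), "
          f"ellipse centre = ({xc:.4f}, {yc:.4f})")
```

Under these assumptions the ellipse centre is the sphere-centre image scaled by $1 / (1 - r^2/Z^2)$, so the two coincide only in the limit of a small or distant sphere.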

First of all, let us discuss the element

of

(Note:

). Expanding Equation (5) by the replacements

,

,

gives:

The sphere is always in front of the camera, and the sphere centre is located in the first quadrant of the camera coordinate frame, so we have

,

,

,

and

. Based on these equations, we can obtain:

Next, we discuss the factors that have influences on calculating the image point of the sphere centre. Denoting

by

, we can obtain the following equation from Equation (5):

Substituting

,

into Equation (27), we get:

By Equation (28), we can obtain:

From Equation (29), computing the partial derivative of

with respect to

gives:

where

.

Let:

and we can deduce that

satisfies

when

is valid (see

Appendix C for more details).

Suppose that

is the positional deviation of the fitted ellipse, and

is the computation error of image point

. Equation (30) is then written as:

When and are valid, satisfies , which shows that computation error caused by is reduced.

Because the extracted ellipse contour points have a positional deviation, the fitted ellipse has a similar deviation. The following discussion shows how the computation error of the image point of the sphere centre can be reduced under this condition.

Firstly, consider the relationship between

and

. From Equation (25), we can obtain:

When is valid, we can deduce . Hence, is a monotonically increasing parameter with respect to ().

Secondly, from Equation (28), we have:

When

, we can obtain:

(see

Appendix D for more details).

Suppose

is the positional deviation of the fitted ellipse, and

is the computation error caused by

. Equation (34) can then be written as:

Based on Equations (26), (35) and (36), we can deduce that has a positive relationship with .

Similarly, we can obtain:

If , are valid, we can deduce that is a monotonically increasing parameter with respect to , and also has a positive relationship with .

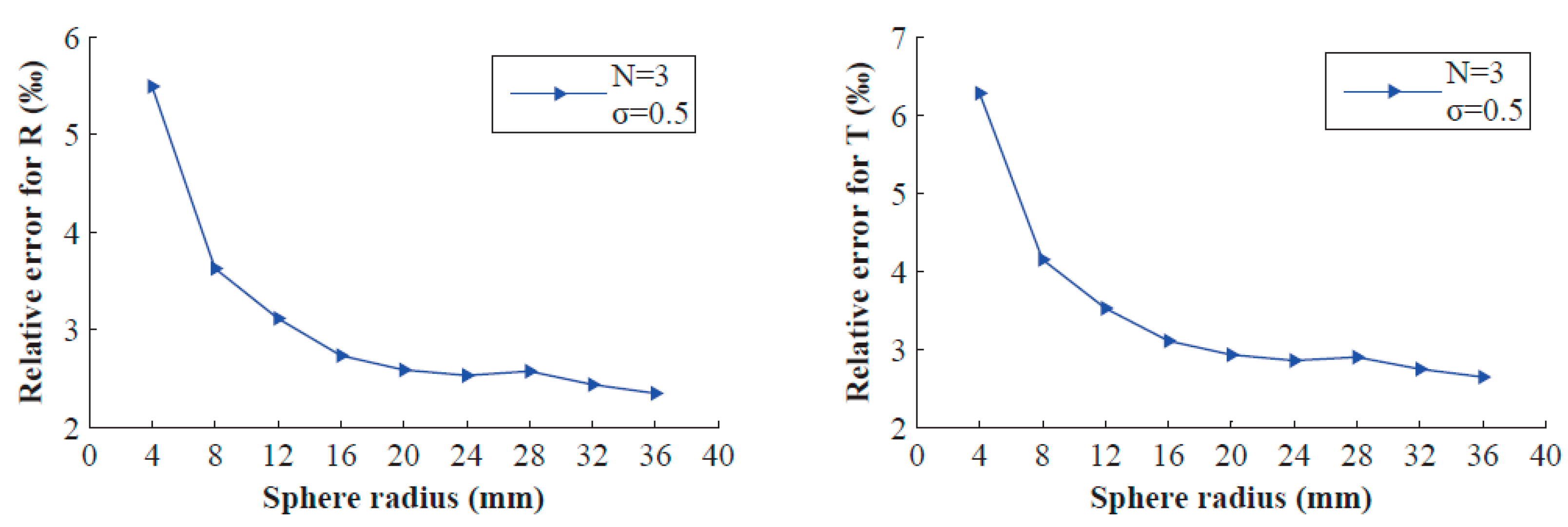

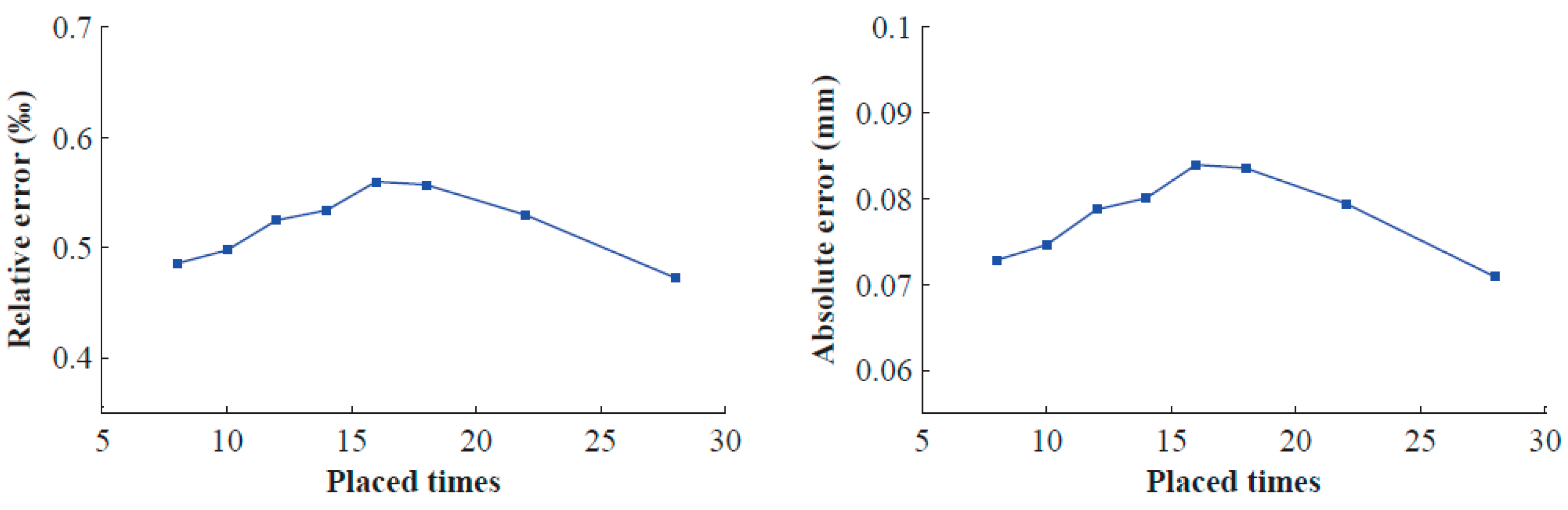

Finally, based on the condition described above, we can obtain the following conclusion that the computation errors , both have positive relationships with . The smaller the value of μ, the smaller the computation error , . By reducing (the depth of the sphere centre) or increasing (the radius of the sphere), is smaller, so , will be smaller. In this way, we can improve the computational accuracy of the image point (,) of the sphere centre.

6. Conclusions

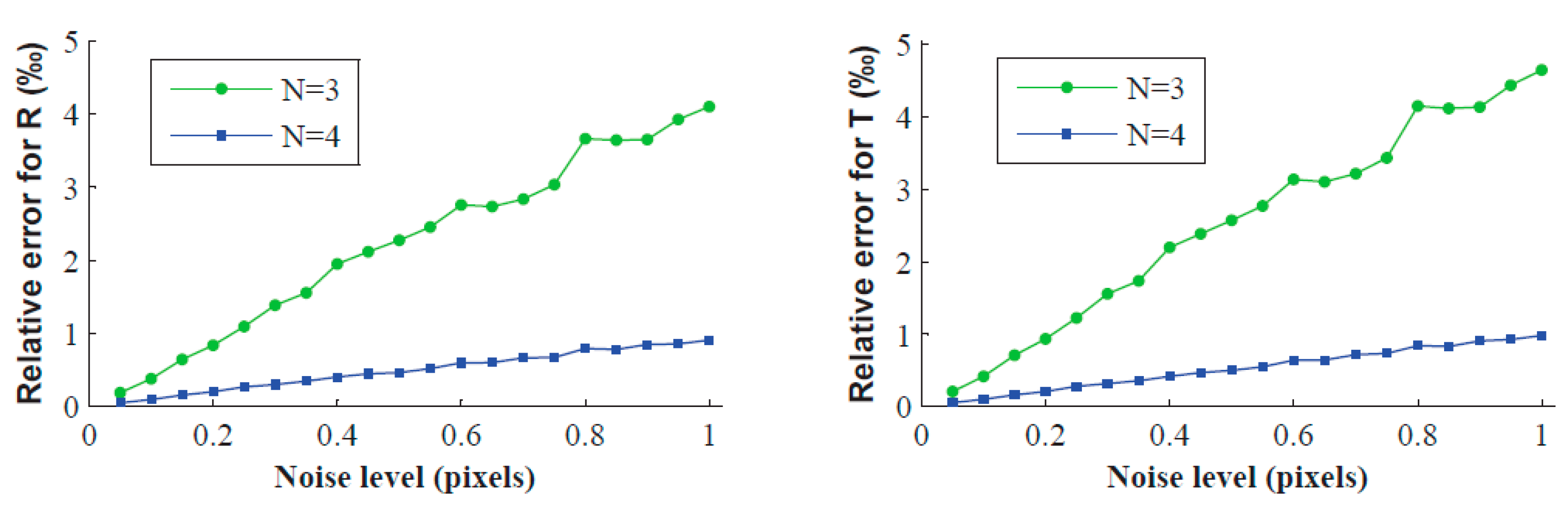

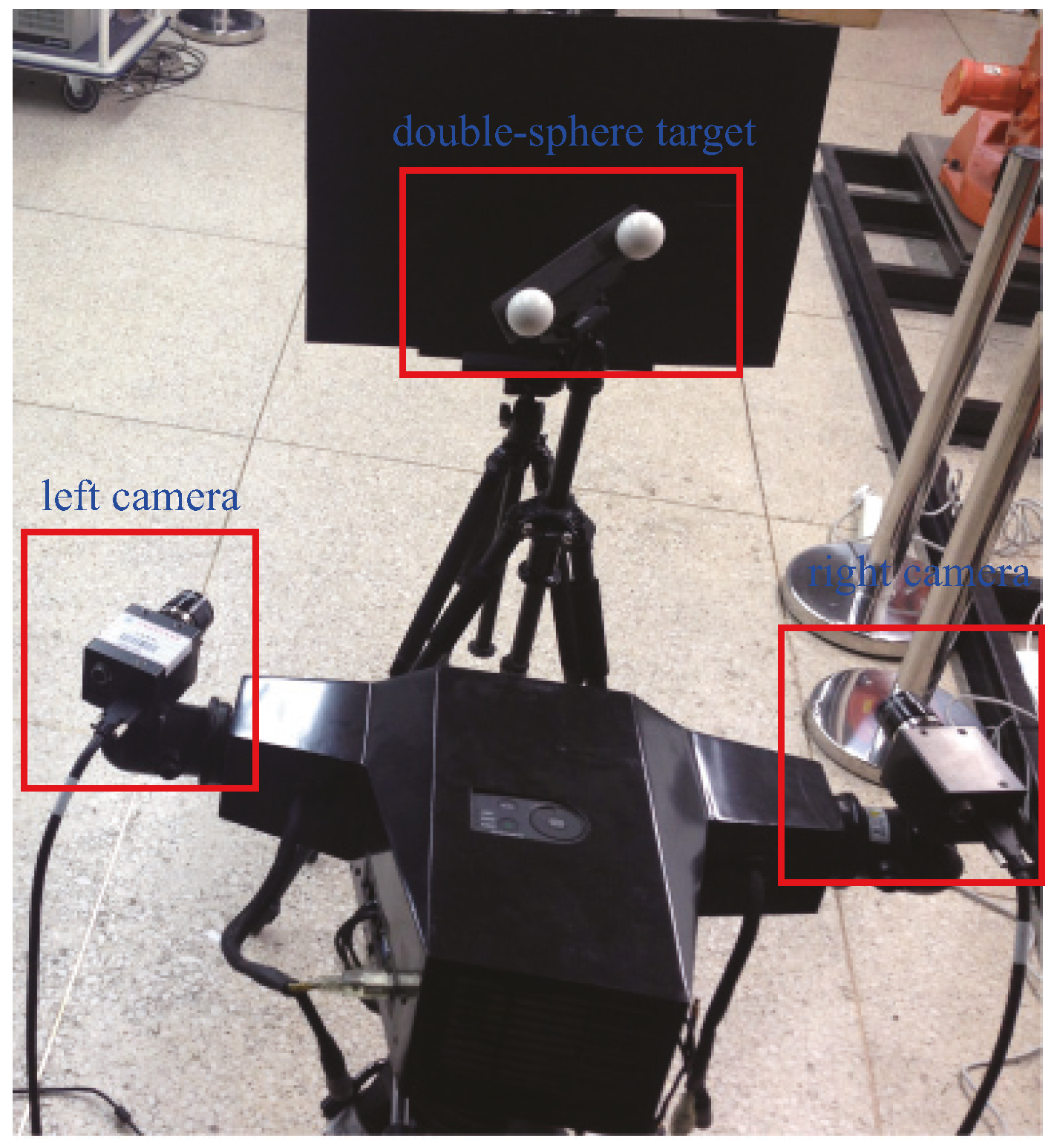

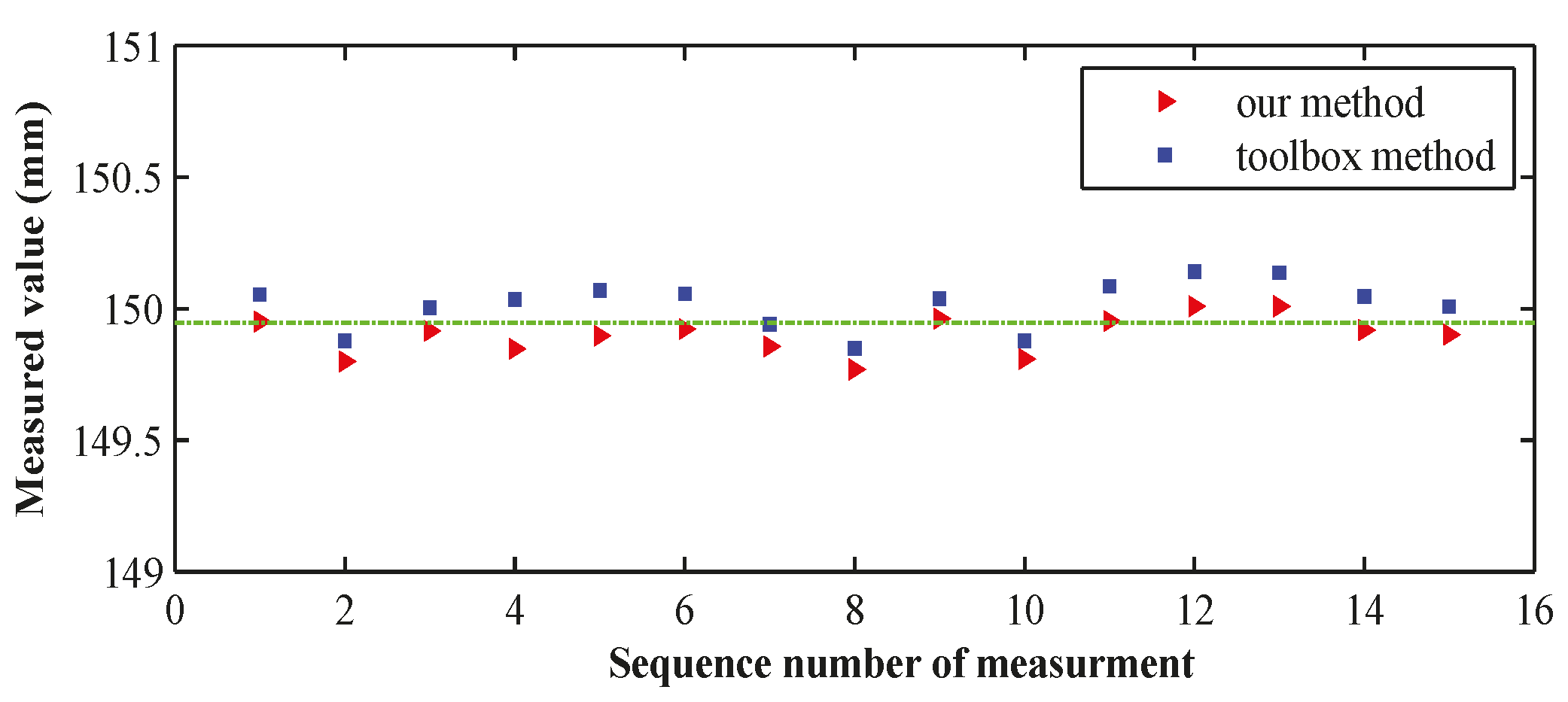

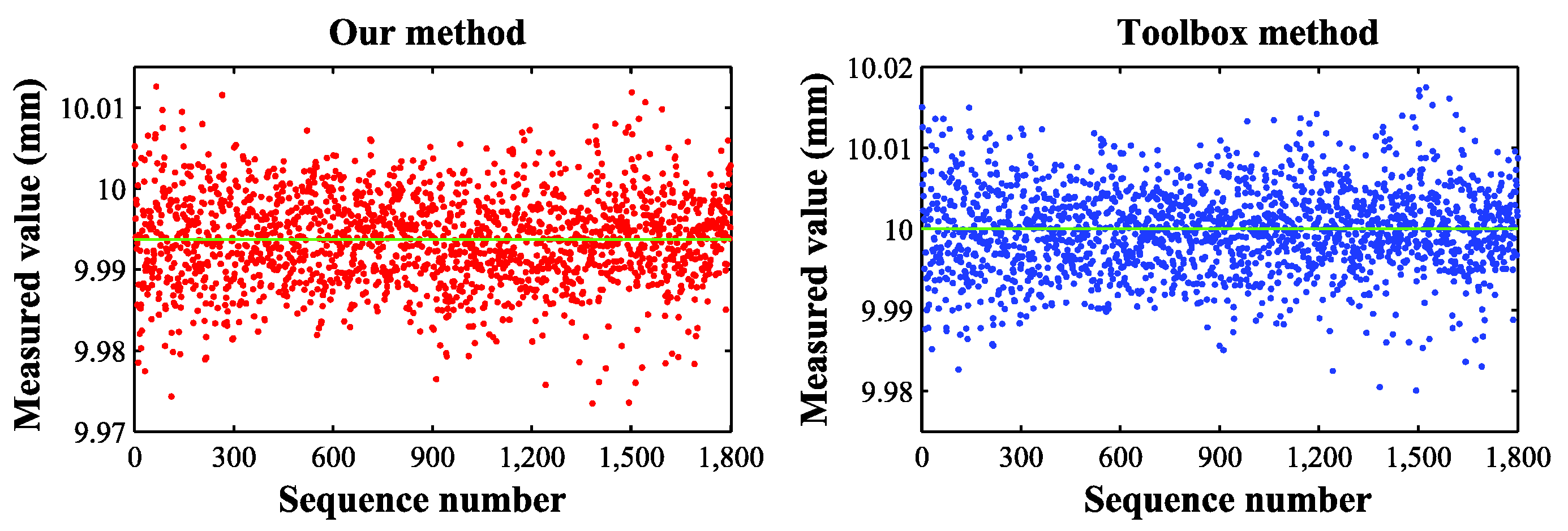

In this paper, we describe a method to calibrate the structural parameters of BSVS. The method requires a double-sphere target placed a few times in different positions and orientations. We utilize the normal vectors of spatial planes to compute the rotation matrix and use a linear algorithm to solve the translation vector. The simulations demonstrate how the noise level, the number of placements and the depth-scale factor influence the calibration accuracy. Real data experiments show that when measuring an object with a length of approximately 150 mm, the accuracy is 0.084 mm, and when measuring 10 mm, the accuracy is 0.008 mm.

If the sphere centres are all coplanar, our method will fail. Therefore, the double-sphere target should be placed in different positions and orientations to avoid this degeneracy. Because the calibration feature of the sphere is its contour, the two spheres must not completely occlude each other. As mentioned above, the two spheres should have the same radius. However, if the two radii are unequal, our method can still work. If the ratio of the two radii is known, it should be taken into account when recovering the intermediate parallel planes; the other computation procedures remain unchanged. If the ratio is unknown, three arbitrary projected ellipses of the same sphere should be selected to recover the intermediate parallel plane, and the target must be placed at least four times. Such a target provides fewer constraints than a target with a known ratio of sphere radii when solving the rotation matrix. To calibrate a BSVS with a small common field of view while guaranteeing high accuracy, we can couple two spheres with large radii to form a double-sphere target. In multi-camera calibration, the double-sphere target avoids the simultaneous visibility problem and performs well.
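The coplanar configuration can be screened for before solving. The sketch below is a hypothetical helper, not part of the described method: it assumes the sphere centres have been recovered (possibly only up to a common scale) in one camera frame and tests coplanarity through the singular values of the centred coordinates. The relative tolerance is an illustrative choice.

```python
import numpy as np

def centres_are_coplanar(centres, rel_tol=1e-3):
    """Return True if the 3D points (one per row) lie close to a single plane."""
    P = np.asarray(centres, float)
    s = np.linalg.svd(P - P.mean(axis=0), compute_uv=False)
    # The smallest singular value measures the spread normal to the best-fit plane.
    return bool(s[-1] <= rel_tol * s[0])
```

If the check returns True for the centres collected so far, an additional placement with a different orientation would be needed before the rotation can be solved.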