Estimating Shape and Micro-Motion Parameter of Rotationally Symmetric Space Objects from the Infrared Signature

Abstract

1. Introduction

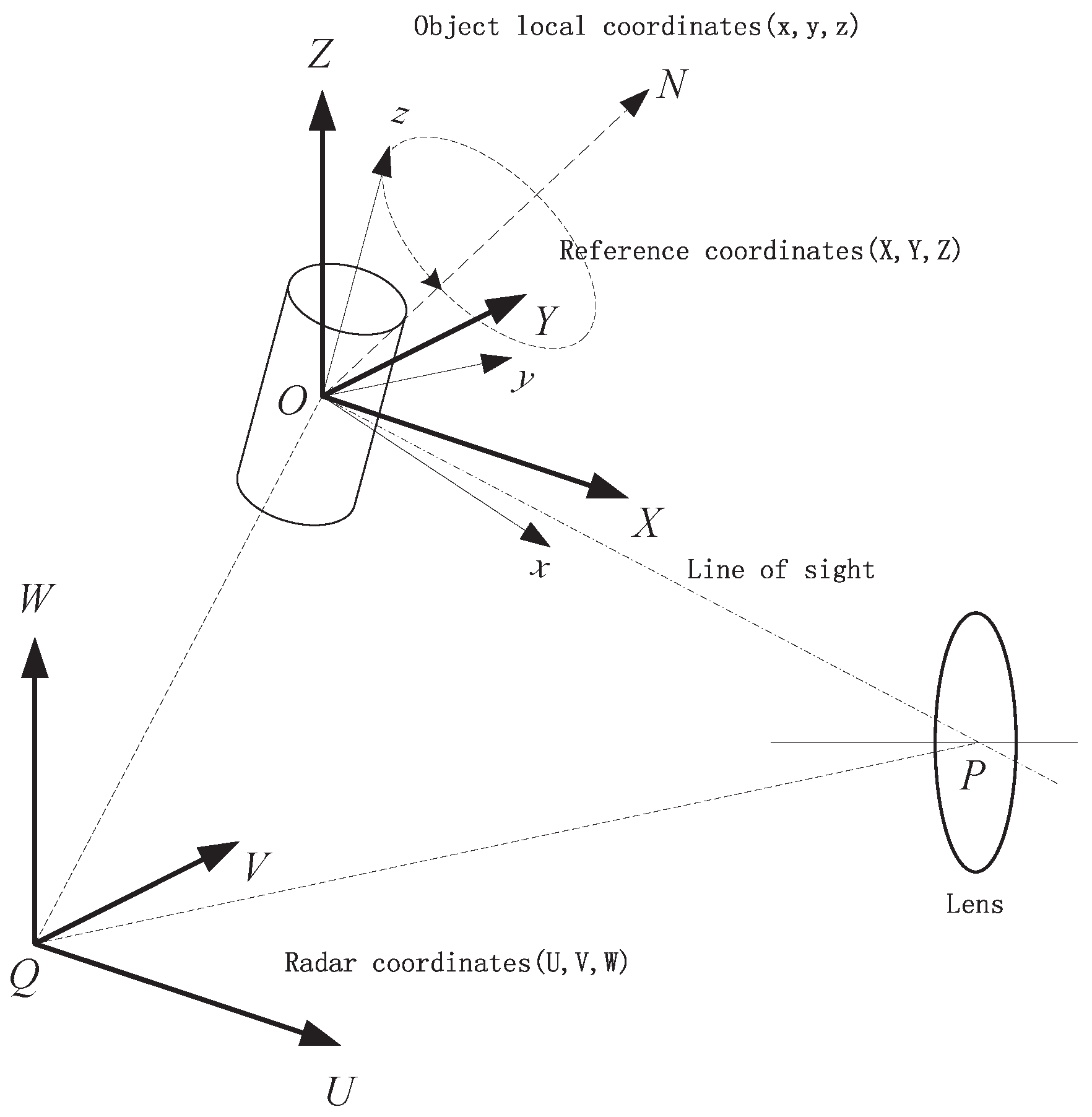

2. Signal Model

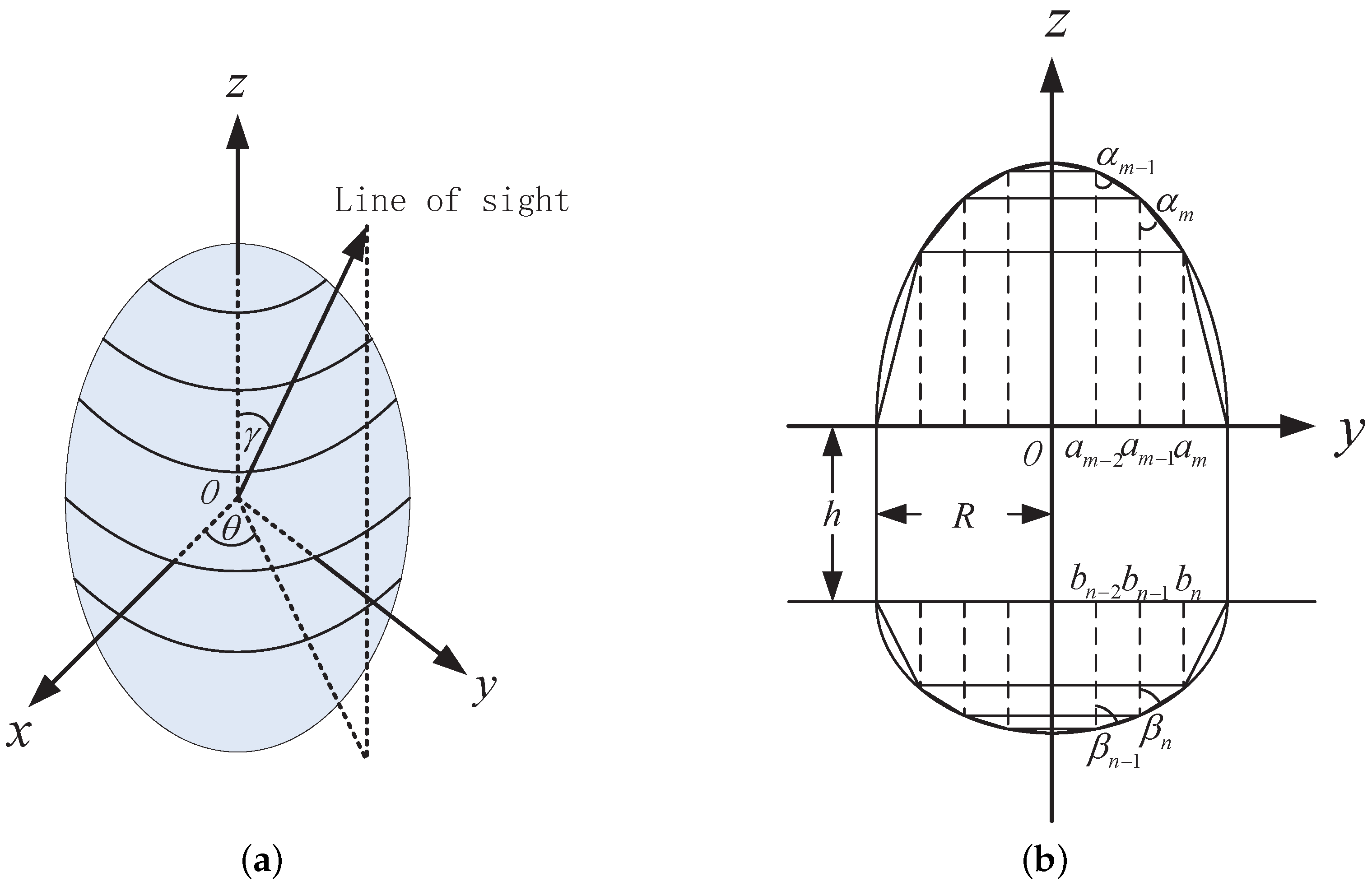

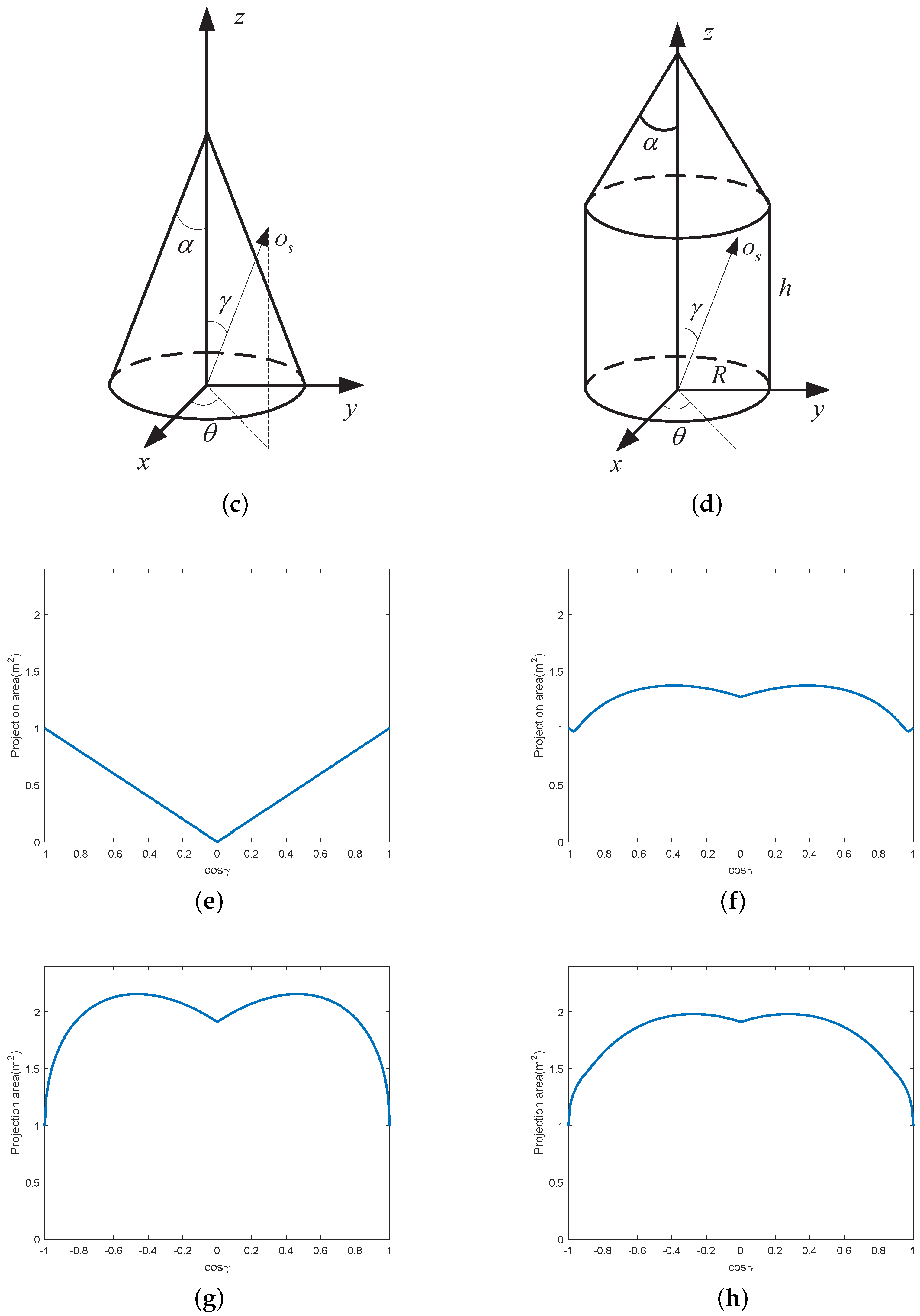

2.1. The Projection of a Rotationally Symmetric Object

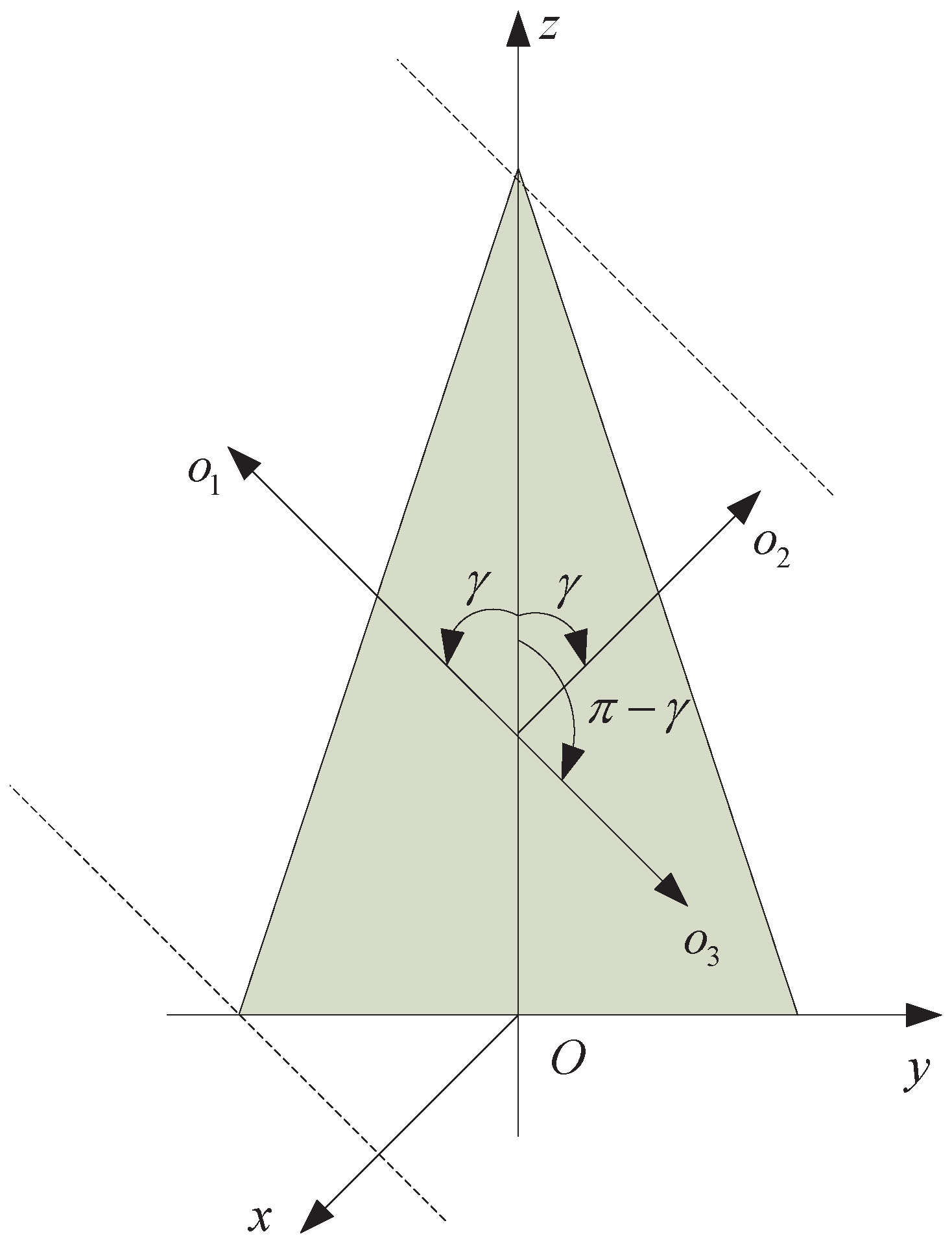

2.2. The Variation of Observing Angle

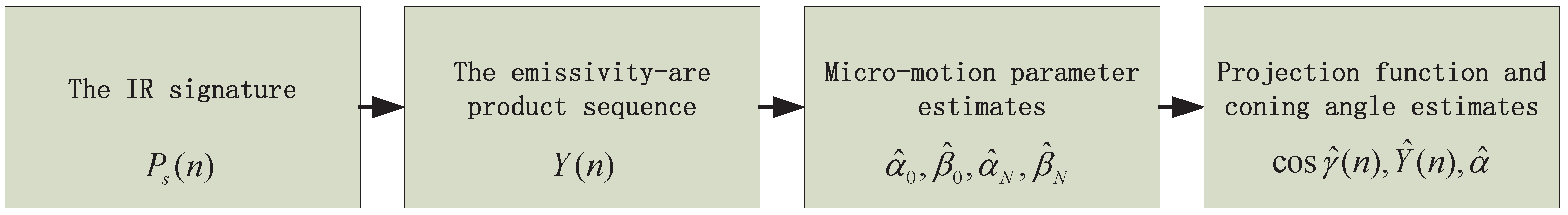

3. Algorithm

4. Experiments

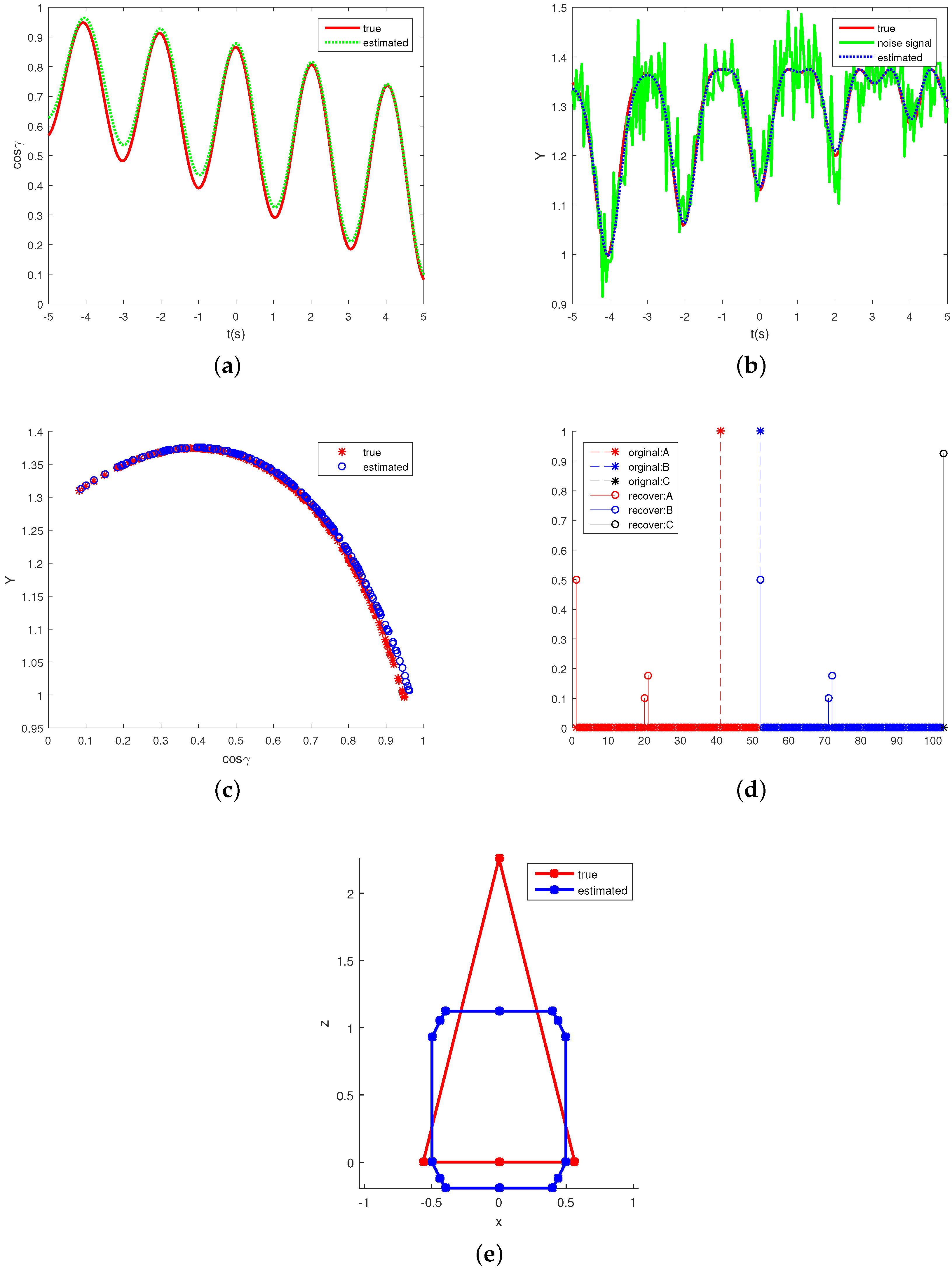

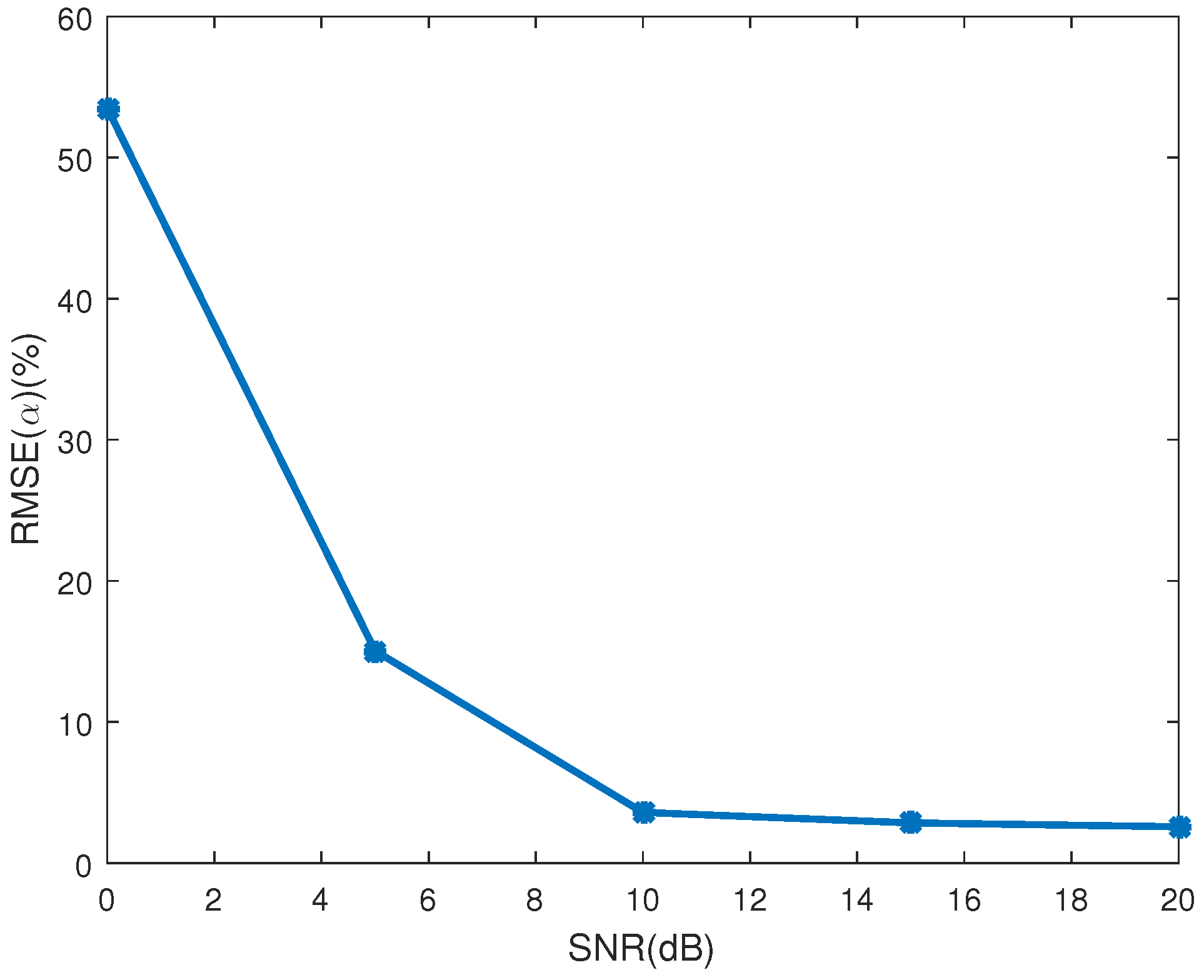

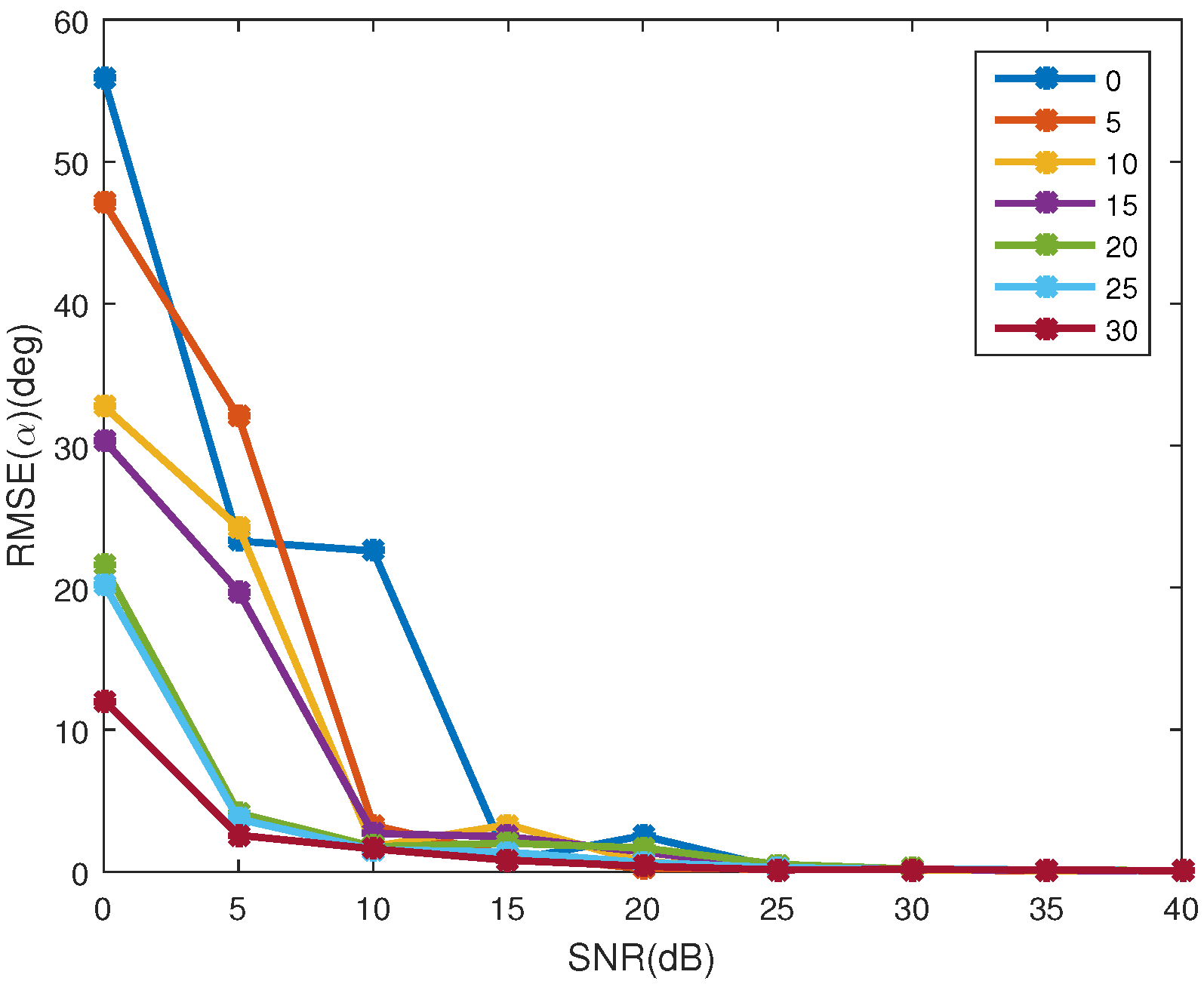

4.1. Influence of Noise

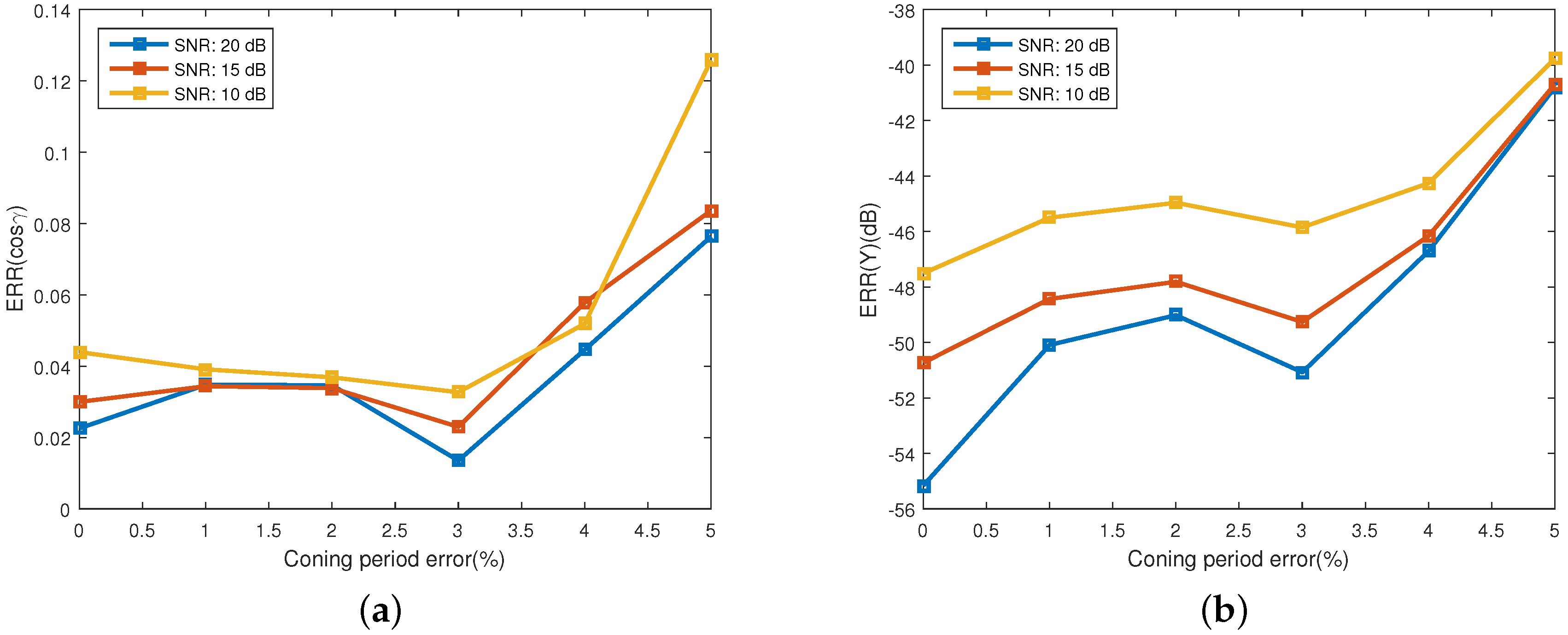

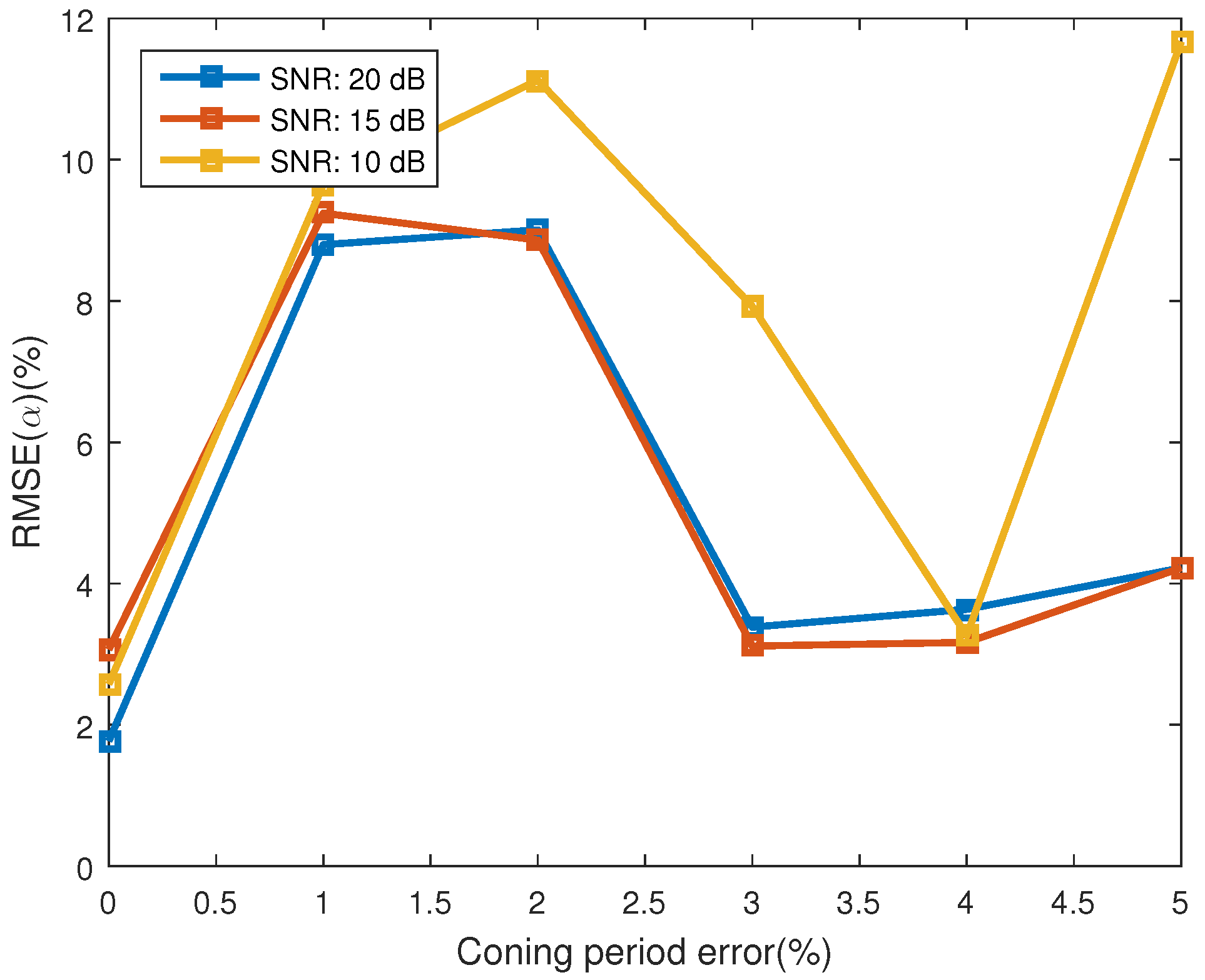

4.2. Influence of the Estimating Error for Coning Period

4.3. Influence of Coning Angle

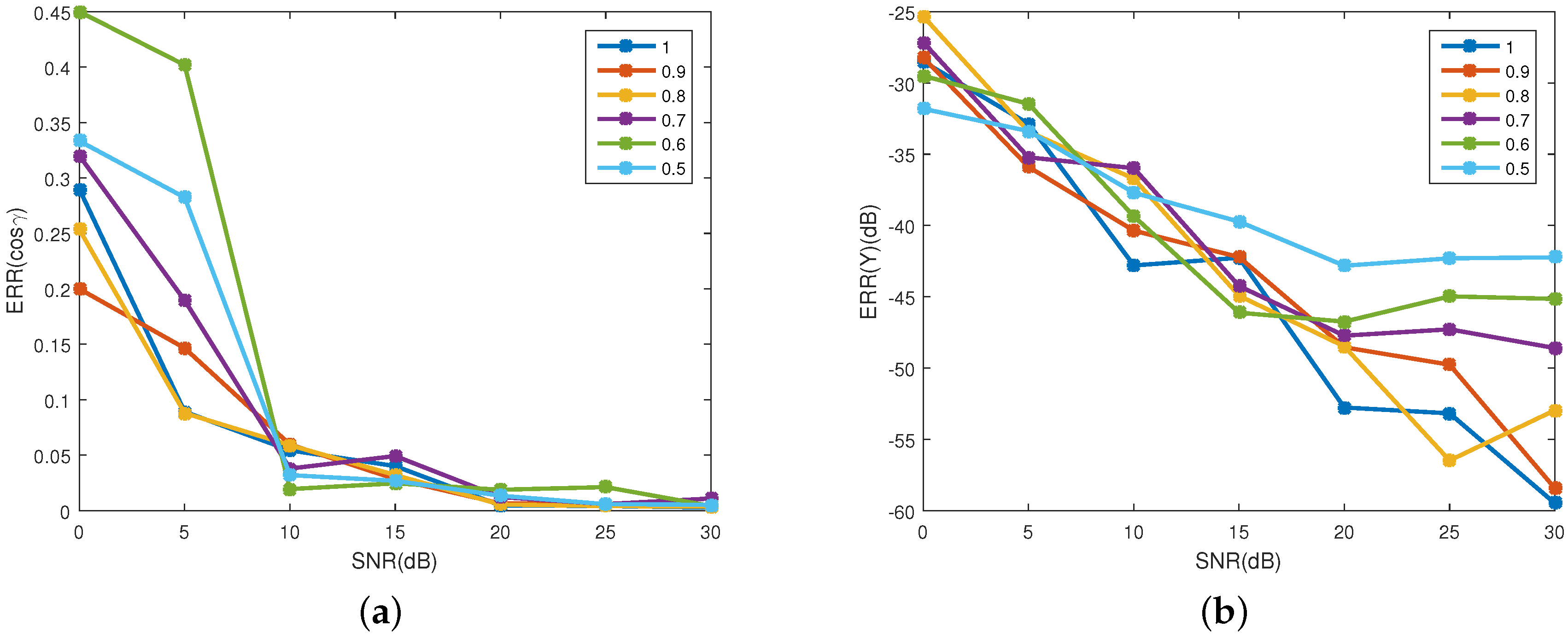

4.4. Influence of Reflected Energy

4.5. Influence of Imaging

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Resolution (pixel) | 128 × 128 | Pixel size (μm) | 30 × 30 |
| Focal length (mm) | 100 | Optical aperture (cm) | 10 |
| Wavelength range (μm) | 8–12 | Diffusion coefficient (pixel) | 0.5 |
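The table gives the sensor's raw parameters but not the imaging geometry they imply. As a rough sanity check, and assuming an ideal pinhole/thin-lens model (our assumption, not a relation stated in the article), the sketch below derives the instantaneous field of view (IFOV) per pixel, the full field of view across the 128-pixel detector, and the f-number. The helper name `sensor_geometry` is hypothetical.

```python
import math


def sensor_geometry(n_pixels: int, pixel_um: float, focal_mm: float, aperture_cm: float):
    """Hypothetical helper: return (IFOV in mrad, full FOV in degrees, f-number).

    Assumes an ideal pinhole/thin-lens camera; this model is an illustration,
    not taken from the article.
    """
    pixel_m = pixel_um * 1e-6
    focal_m = focal_mm * 1e-3
    aperture_m = aperture_cm * 1e-2

    # Small-angle approximation: one pixel subtends pixel size / focal length.
    ifov_rad = pixel_m / focal_m

    # Full field of view across the detector width (exact arctangent form).
    fov_rad = 2.0 * math.atan(n_pixels * pixel_m / (2.0 * focal_m))

    # f-number = focal length / aperture diameter.
    f_number = focal_m / aperture_m

    return ifov_rad * 1e3, math.degrees(fov_rad), f_number


# Values from the table: 128 x 128 pixels, 30 um pixels, 100 mm focal length, 10 cm aperture.
ifov_mrad, fov_deg, f_num = sensor_geometry(128, 30.0, 100.0, 10.0)
print(f"IFOV = {ifov_mrad:.2f} mrad, FOV = {fov_deg:.2f} deg, F/{f_num:.1f}")
```

Under these assumptions the sensor resolves about 0.3 mrad per pixel over a field of roughly 2.2°, at F/1. The full field uses the exact arctangent rather than the small-angle product 128 × 0.3 mrad = 38.4 mrad. At such a narrow field the two agree to well within 0.01°.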

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, Y.; Lu, H.; Zhao, F.; Zhang, Z. Estimating Shape and Micro-Motion Parameter of Rotationally Symmetric Space Objects from the Infrared Signature. Sensors 2016, 16, 1722. https://doi.org/10.3390/s16101722