1. Introduction

Remote sensing image scene classification, which involves dividing images into different categories without semantic overlap [1], has recently drawn great attention due to the increasing availability of high-resolution remote sensing data. However, it is difficult to achieve satisfactory results in scene classification directly based on low-level features, such as the spectral, textural, and geometrical attributes, because of the so-called “semantic gap” between low-level features and high-level semantic concepts, which is caused by the object category diversity and the distribution complexity in the scene.

To overcome the semantic gap, many different scene classification techniques have been proposed. The bag-of-visual-words (BoVW) model, which is derived from document classification in text analysis, is one of the most popular methods in scene classification. To compensate for the loss of spatial information in BoVW, many BoVW extensions have been proposed [2,3,4,5,6,7,8]. Spatial pyramid co-occurrence [2] was proposed to compute the co-occurrences of visual words with respect to “spatial predicates” over a hierarchical spatial partitioning of an image, to capture both the absolute and relative spatial arrangement of the words. The topic model [9,10,11,12,13,14,15,16], as used for document modeling, text classification, and collaborative filtering, is another popular model for scene classification. P-LDA and F-LDA [9] were proposed based on the latent Dirichlet allocation (LDA) model for scene classification. Because of the weak representative power of a single feature, multifeature fusion has also been adopted in recent years. The semantic allocation level (SAL) multifeature fusion strategy based on the probabilistic topic model (PTM) (SAL-PTM) [10] was proposed to combine three complementary features, i.e., the spectral, texture, and scale-invariant feature transform (SIFT) features. However, in the traditional methods, feature representation plays a big role and involves low-level feature selection and middle-level feature design. As a lot of prior information is required, this limits the application of these methods in other datasets or other fields.

To automatically learn features from the data directly, without low-level feature selection and middle-level feature design, a number of methods based on deep learning have recently been proposed. Deep learning, a new branch of machine learning theory, has been widely studied and applied to facial recognition [17,18,19,20], scene recognition [21,22], image super-resolution [23], video analysis [24], and drug discovery [25]. Differing from the traditional feature extraction methods, deep learning, as a feature representation learning method, can directly learn features from raw data without prior information. In the remote sensing field, deep learning has also been studied for hyperspectral pixel classification [26,27,28,29], object detection [30,31,32], and scene classification [33,34,35,36,37,38,39,40,41]. The auto-encoder [37] was the first deep learning method introduced to remote sensing image scene classification, combining a sample selection strategy based on saliency with the auto-encoder to extract features from the raw data, achieving a better classification accuracy than the traditional remote sensing image scene classification methods such as BoVW and LDA. The highly efficient “enforcing lifetime and population sparsity” (EPLS) algorithm, another sparse strategy, was introduced into the auto-encoder to ensure two types of feature sparsity: population and lifetime sparsity [38]. Zou et al. [39] translated the feature selection problem into a feature reconstruction problem based on a deep belief network (DBN) and proposed a deep learning-based method, where the features learned by the DBN are eliminated once their reconstruction errors exceed the threshold. Although the auto-encoder-based algorithms can acquire excellent classification accuracies when compared with the traditional methods, they do need pre-training, and can be regarded as convolutional neural networks (CNNs) in practice. Zhang et al. [40] combined multiple CNN models based on boosting theory, achieving a better accuracy than the auto-encoder methods.

However, most previous methods based on deep learning models such as the CNN were trained on images with a single scale, either by cropping fixed-scale patches from the image or by resizing the image to a fixed scale, and did not consider the object scale variation in the image caused by, for example, changes in the altitude or angle of the sensor. The features extracted by these methods are not robust to the object scale, leading to unsatisfactory remote sensing image scene classification results. An example of object scale variation is given in Figure 1, where the scales of the storage tank and the airplane change considerably, which may be caused by a change in the altitude or angle of the sensor.

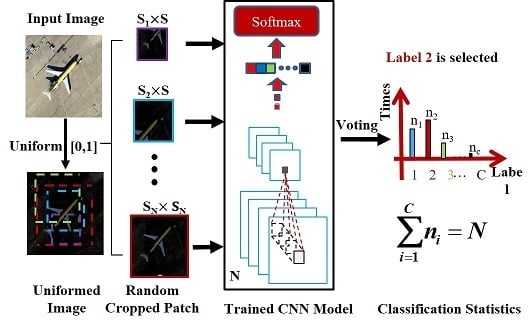

In order to solve the object scale variation problem, scene classification based on a deep random-scale stretched convolutional neural network (SRSCNN) is proposed in this paper. In SRSCNN, patches with a random scale are cropped from the image, and patch stretching is applied, which simulates the object scale variation to ensure that the CNN learns robust features from the remote sensing images.

The major contributions of this paper are as follows:

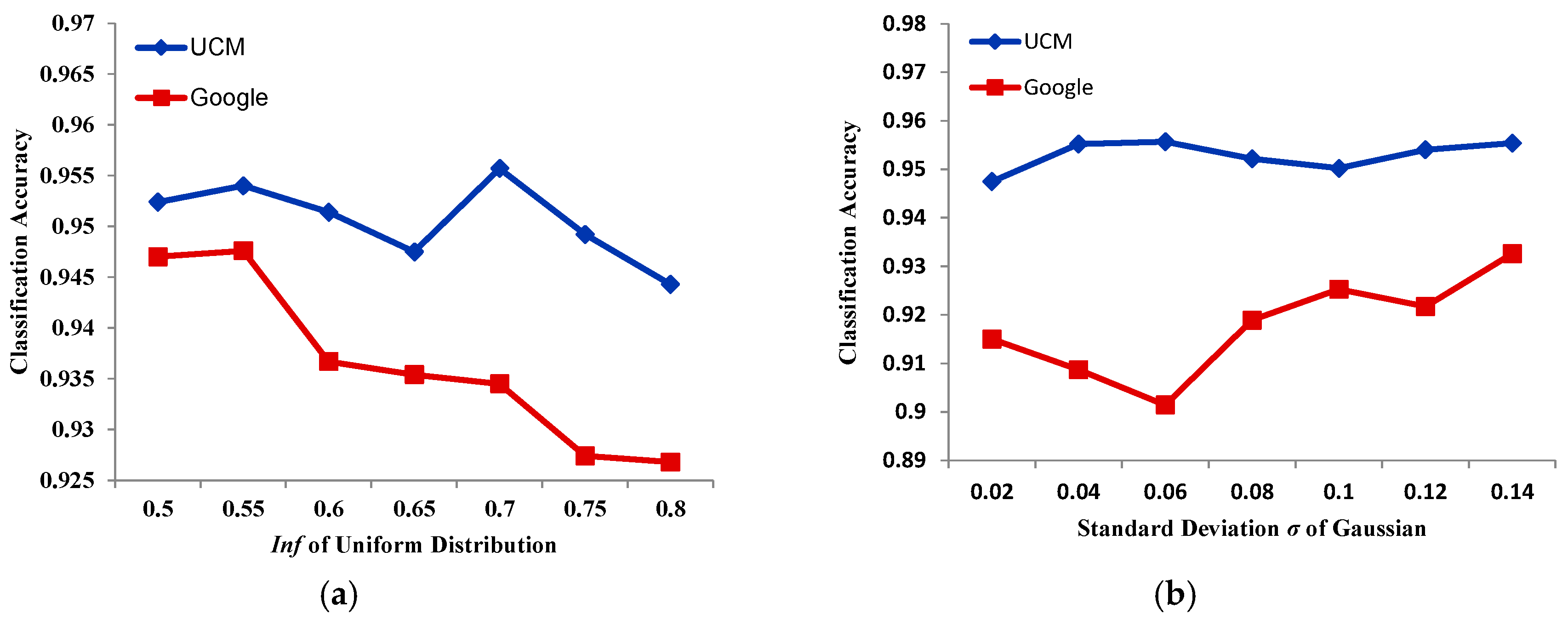

(1) The random spatial scale stretching strategy. In SRSCNN, random spatial scale stretching is proposed to solve the problem of the dramatic scale variation of objects in the scene. Some objects such as airplanes and storage tanks are very important in remote sensing image scene classification. However, the object scale variation can lead to weak feature representation for some scenes, which in turn leads to wrong classification. In order to solve this problem, the assumption that the object scale variation satisfies a uniform or a Gaussian distribution is adopted in this paper, and the strategy of cropping patches with a random scale following a certain distribution is applied to simulate the object variation in the scene, forcing the model to extract features that are robust to scale variation.

(2) The robust scene classification strategy. To fuse the information from different-scale patches with different locations in the image, thereby further improving the performance, SRSCNN applies multiple views of one image and conducts fusion of the multiple views. The image is thus classified multiple times and its label decided by voting. According to VGGNet [42] and GoogLeNet [43], using a large set of crops of multiple scales can improve classification accuracy. However, unlike GoogLeNet, where only four scales are applied in the test phase, more continuous scales are adopted in the proposed method. In this paper, patches with a random position and scale are cropped and then stretched to a fixed scale to be classified by the trained CNN model. Finally, the whole image’s label is decided by selecting the label that occurs the most.

The proposed SRSCNN was evaluated and compared with conventional remote sensing image scene classification methods and methods based on deep learning. Three datasets were used in the testing: the 21-class UC Merced (UCM) dataset, the 12-class Google dataset of SIRI-WHU, and the 8-class Wuhan IKONOS dataset. The experimental results show that the proposed method obtains a better classification accuracy than the other methods on these datasets.

The remainder of this paper is organized as follows. Section 2 gives a brief introduction to CNNs. Section 3 presents the proposed scene classification method based on a deep random-scale stretched convolutional neural network, namely SRSCNN. In Section 4, the experimental results are provided. The discussion is given in Section 5. Finally, the conclusion is given in Section 6.

2. Convolutional Neural Networks

CNNs are a popular type of deep learning model consisting of convolutional layers, pooling layers, fully connected layers, and a softmax layer, as shown in Figure 2, which is described in Section 3. CNNs are appropriate for large-scale image processing because of the sparse interactions and weight sharing resulting from the convolution computation. In CNNs, sparse interaction means that the units in a higher layer are connected only with units within a limited extent of the lower layer, i.e., the receptive field. Weight sharing means that the units in the same layer share the same connection weights, i.e., the convolution filters, decreasing the number of parameters in the CNN. The convolution computation makes it possible for the response of the units of the deepest layer of the network to be dependent only upon the shape of the stimulus pattern, and they are not affected by the position where the pattern is presented. Given a convolutional layer, its output can be obtained as follows:

$$X^{t} = W^{t} * X^{t-1}$$

where $X^{t}$ denotes the output of layer $t$, $X^{t-1}$ is the input of layer $t$, and $W^{t}$ denotes the convolution filters of layer $t$.
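The sparse interactions and weight sharing described above can be illustrated with a minimal sketch in plain Python. The function name `conv2d_valid` and the toy image and kernel are hypothetical and chosen only for illustration; like most CNN implementations, the sketch computes a cross-correlation (the kernel is not flipped), uses a single channel, and applies no padding or stride.

```python
def conv2d_valid(image, kernel):
    """Slide one shared kernel over a 2-D image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Sparse interaction: this output unit only sees a kh x kw
            # receptive field of the input.
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    # Weight sharing: the same kernel values are reused
                    # at every output position (r, c).
                    acc += kernel[i][j] * image[r + i][c + j]
            row.append(acc)
        output.append(row)
    return output


# Toy 4 x 4 input and a 2 x 2 kernel (illustrative values only).
image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
kernel = [
    [1, 0],
    [0, 1],
]
feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

The 2 × 2 kernel has only four parameters regardless of the image size, which is the parameter saving that weight sharing provides.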

In recent years, more complex structures and deeper networks have been developed. Lin et al. [44] proposed a “network in network” structure to enforce the abstract ability of the convolutional layer. Based on the method proposed by Lin et al. [44], Szegedy et al. [43] designed a more complex network structure by applying an inception module. In AlexNet [45], there are eight layers, in addition to the pooling and contrast normalization layers; however, in VGGNet and GoogLeNet, the depths are 19 and 22, respectively. More recently, much deeper networks have been proposed [46,47].

Due to the excellent performance of CNNs in image classification, a number of methods based on CNNs for remote sensing scene classification have been proposed, significantly improving classification accuracy. However, these methods do not consider the object scale variation problem in the remote sensing data, which means that the extracted features lack robustness to the object scale change, leading to misclassification of the scene image. The objects contained in the scene image play an important role in scene classification [48,49]. However, the same objects in remote sensing images can have different scales, as shown in Figure 3, which makes them difficult to capture. It is easy to classify a scene image containing objects with a common scale; however, those images containing objects with a changed scale can easily be wrongly classified. Taking the two images of storage tanks and airplanes in Figure 3 as examples, the right images are easy to classify, but it is almost impossible to recognize the left images as storage tanks/airplanes because of the different scales of the images.

3. Scene Classification for HSR Imagery Based on a Deep Random-Scale Stretched Convolutional Neural Network

To solve this object scale problem, the main idea in this paper is to generate multiple-scale samples to train the CNN model, forcing the trained CNN model to correctly classify the same image with different scales. To help make things easier, the scale variation is modeled as follows in this paper:

where

is the true scale;

denotes the scale variation factor, obeying a normal or uniform distribution, i.e.,

or

; and

represents the changed scale. Once

and

are known,

can be obtained by

, and given an image

I with scale

, image

is obtained by stretching

into

and then feeding it into the CNN to extract the features. According to this assumption, the goal of making the features extracted by the CNN robust to scale has been transformed into making the features robust to

, i.e., the trained CNN can correctly recognize image

with various values of

. To achieve this, for each image, multiple samples

are sampled, and multiple corresponding stretched images

are obtained to force the CNN to be robust to α in the training phase. Meanwhile, in the CNN, in order to increase the number of image samples, the patches cropped from the image are fed into the CNN, instead of the whole image. Assuming that the scale of the patches is

, the patches should be cropped from the stretched image with scale

. Finally, there are three steps to generating the patches that are fed into the CNN, as follows:

(1) Sample from or ;

(2) Stretch an image of to , where ;

(3) Crop a patch with scale from the stretched image obtained in step 2.

However, in practice, due to the linearity of Equation (2), the above steps can be translated into the following steps to save memory:

(1) Sample from or ;

(2) Compute the cropping scale and crop a patch with scale from image . In this step, the upper-left corner of the cropped patch in image is determined by randomly selecting an element from where is the samples and lines of the whole image .

(3) Stretch the cropped patch obtained in step 2 into .
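The memory-saving variant of the three steps (sample α, crop at a rescaled size, stretch to the fixed patch size) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `bilinear_resize` and `random_scale_stretched_patch`, the distribution parameters `low`, `high`, `mu`, and `sigma`, and their default values are all hypothetical; the image is a single-channel nested list for brevity; and the crop side is assumed to be the patch size divided by α (the opposite convention would work the same way).

```python
import random


def bilinear_resize(img, out_h, out_w):
    """Resize a 2-D list of floats with bilinear interpolation.

    Corner pixels of the input map to corner pixels of the output
    (one of several common conventions).
    """
    in_h, in_w = len(img), len(img[0])
    out = []
    for r in range(out_h):
        y = r * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0
        row = []
        for c in range(out_w):
            x = c * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0
            top = img[y0][x0] * (1 - wx) + img[y0][x1] * wx
            bottom = img[y1][x0] * (1 - wx) + img[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


def random_scale_stretched_patch(img, patch_size, rng, dist="uniform",
                                 low=0.8, high=1.2, mu=1.0, sigma=0.2):
    """Return (patch, alpha): a patch_size x patch_size stretched crop."""
    height, width = len(img), len(img[0])
    # Step 1: sample the scale variation factor alpha.
    if dist == "uniform":
        alpha = rng.uniform(low, high)
    else:
        alpha = rng.gauss(mu, sigma)
    alpha = max(alpha, 0.1)  # guard: a Gaussian draw may be <= 0
    # Step 2: compute the cropping size (assumed patch_size / alpha) and
    # pick a random upper-left corner so that the crop fits in the image.
    crop = int(round(patch_size / alpha))
    crop = max(2, min(crop, height, width))
    top = rng.randint(0, height - crop)
    left = rng.randint(0, width - crop)
    patch = [row[left:left + crop] for row in img[top:top + crop]]
    # Step 3: stretch the crop to the fixed patch size.
    return bilinear_resize(patch, patch_size, patch_size), alpha


rng = random.Random(0)
image = [[float(r * 16 + c) for c in range(16)] for r in range(16)]
patch, alpha = random_scale_stretched_patch(image, patch_size=8, rng=rng)
print(len(patch), len(patch[0]), round(alpha, 3))
```

Cropping first and stretching second means that only a patch-sized array is ever interpolated, rather than the whole image, which is where the memory saving comes from.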

For every image in the dataset, we repeat the above three steps and finally obtain multiple-scale samples from the images, as shown in Figure 4. In this paper, the two kinds of distribution for α are tested separately, and the results are discussed in Section 5. The parameter

is set to 1.2 by experience, and bilinear interpolation is adopted in the random-scale stretching as a trade-off between computational efficiency and classification accuracy. In the proposed method, many patches are cropped from every image. However, the number of patches is not set explicitly; instead, it is determined by the number of training iterations. Assuming that the number of training samples is m, the number of training iterations is set as N, where N is 70 K in this paper, and in each iteration, n patches are cropped from n images (n is set as 60 in this paper) and fed into the CNN model. Therefore, the number of patches required for training is N × n, which means that the number of patches from every training image is N × n/m. In testing, the number of cropped patches is set by experience.
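As a worked example of this count, the short calculation below plugs in the values stated in the paper (70 K iterations, 60 patches per iteration) and, as an illustrative assumption, takes m to be the 80% training split of the 21-class UCM dataset with 100 images per class. The variable names are hypothetical.

```python
iterations = 70_000                  # N: number of training iterations
patches_per_iteration = 60           # n: patches cropped per iteration
train_images = 21 * 100 * 80 // 100  # m: 80% of the 2100 UCM images

total_patches = iterations * patches_per_iteration
patches_per_image = total_patches / train_images

print(train_images, total_patches, patches_per_image)
```

Under these assumptions, each training image contributes on the order of a few thousand random-scale patches over the whole training run.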

On the one hand, this can be regarded as a data augmentation strategy for remote sensing image scene classification, which could be combined with other data augmentation methods such as occlusion, rotation, mirroring, etc. On the other hand, the random-scale stretching is a process which adds structure noise to the input. Differing from the denoising auto-encoders, which add value noise to the input to force the model to learn robust features, SRSCNN focuses on structure noise, i.e., the scale change of the objects. Similar to the denoising auto-encoders, the input of the model is corrupted with random noise following a definite statistical distribution, i.e., a uniform or normal distribution.

3.1. SRSCNN

The proposed SRSCNN method integrates random-scale stretching and a CNN, and can be divided into three parts: (1) data pre-processing; (2) random-scale stretching; and (3) CNN model training. In this paper, the image is represented as a tensor with dimensions of H × W × 3, where H and W represent the height and width of the image, respectively.

Data pre-processing: To accelerate the learning convergence of the CNN, normalization is required. This is usually followed by the z-score to centralize the data distribution. However, to keep things simple, in this paper, pixels are normalized to [0, 1] by dividing them by the maximum pixel value:

$$I^{n} = \frac{I}{\max(I)}$$

where $I^{n}$ is the normalized image, and $\max(I)$ is the maximum pixel value in the image.

Random-scale stretching: In the proposed method, random-scale stretching is integrated with random rotation, which are common data augmentation methods in CNN training. Given a normalized image

, the image output

of the random-scale stretching is obtained as follows:

where

stands for the random-scale stretching, and

denotes the random rotation of

degrees, where

is 0, 90, 180, or 270.
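The pre-processing and rotation steps can be sketched in a few lines. The helper names `normalize` and `rotate90` are hypothetical, the image is a single-channel nested list rather than an H × W × 3 tensor, and the rotation is taken to be counter-clockwise, which the text does not specify.

```python
import random


def normalize(img):
    """Scale pixel values to [0, 1] by dividing by the maximum value."""
    peak = max(max(row) for row in img)
    if peak == 0:
        return [[0.0 for _ in row] for row in img]
    return [[v / peak for v in row] for row in img]


def rotate90(img, k):
    """Rotate a 2-D list counter-clockwise by k * 90 degrees."""
    for _ in range(k % 4):
        # Transpose, then reverse the row order.
        img = [list(row) for row in zip(*img)][::-1]
    return img


rng = random.Random(0)
raw = [[0, 128], [255, 64]]
theta = rng.choice([0, 90, 180, 270])   # random rotation angle in degrees
augmented = rotate90(normalize(raw), theta // 90)
print(theta, augmented)
```

Restricting the angle to multiples of 90 degrees keeps the rotation lossless, since no interpolation is needed.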

CNN model training: After the random-scale stretching, the image is fed into the CNN model, which consists of convolutional layers, pooling layers, a fully connected layer, and a softmax layer.

Convolutional layers: Differing from the traditional features utilized in scene classification, where spectral features such as the spectral mean and standard deviation, and spatial features such as SIFT, are selected and extracted artificially, the convolutional layers can automatically extract features from the data. There are multiple convolution filters in each layer, and every convolution filter corresponds to a feature detector. For convolutional layer

t (

t ≥ 1), given the input

, the output

of convolutional layer

t can be obtained by Equations (6) and (7):

where

represents the

kth convolutional filter corresponding to the

ith output feature map,

denotes the

kth feature map of

,

is the bias corresponding to the

ith output feature map,

is the convolution computation, and

f is the activation function,

is

ith output feature map of

. As Equation (6) shows, the convolution is a linear mapping, and is always followed by a non-linear activation function f such as sigmoid, tanh, SoftPlus, ReLU, etc. In this paper, ReLU is adopted as the activation f due to its superiority in convergence and its sparsity compared with the other activation functions [50,51,52]. ReLU is a piecewise function:

$$f(x) = \begin{cases} x, & x > 0 \\ 0, & x \le 0 \end{cases}$$

Pooling layers: Following the convolutional layers, the pooling layers aim to achieve a more compact representation by aggregating the local features, which is an approach that is more robust to noise and clutter and invariant to image transformation [53]. The popular pooling methods include average pooling and max pooling. Max pooling is adopted in this paper:

where

is the scale of the pooling kernel.
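A minimal sketch of the ReLU activation followed by max pooling is given below. The function names and the toy feature map are hypothetical, and the pooling windows are assumed to be non-overlapping (stride equal to the kernel size), which the text does not state.

```python
def relu(feature_map):
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size):
    """Non-overlapping size x size max pooling.

    Trailing rows or columns that do not fill a whole window are dropped.
    """
    pooled = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


fm = [
    [-1.0, 2.0, 0.5, -3.0],
    [4.0, -2.0, 1.5, 0.0],
    [0.0, 0.0, -1.0, -2.0],
    [3.0, 1.0, -0.5, 2.5],
]
pooled = max_pool(relu(fm), 2)
print(pooled)
```

Each pooled value keeps only the strongest response in its window, so small shifts of a feature inside the window leave the output unchanged.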

Fully connected layer: The fully connected layer can be viewed as a special convolutional layer whose output height and width are 1, i.e., the output is a vector. In addition, in this paper, the fully connected layer is followed by dropout [54], which is a regularizer to prevent co-adaptation since a unit cannot rely on the presence of other particular units because of the random presence of them in the training phase.

Softmax layer: In this paper, softmax is added to classify the features extracted by the CNN. As a generalization of logistic regression for a multi-class problem, softmax outputs a vector in which each element represents the probability of a sample belonging to the corresponding class, as shown in Equation (10):

where

represents the possibility of sample

i’s label being

t. Based on maximum-likelihood theory, loss function of softmax can be obtained as follows:

where

is the number of samples, and the second term is the regularization term, which is always the L2-norm, to avoid overfitting.

is the coefficient to balance these two terms. The chain rule is applied in the back-propagation for computing the gradient to update the parameters, i.e., the weight

and bias

in CNN. Take the layer t as an example. To determine the values of

and bias

in layer

t, the stochastic gradient descent method is performed:

(1) Compute the objective loss function in Equation (11);

(2) Based on the chain rule, compute the partial derivative of

and bias

:

(3) Update the values of

and bias

:

where

is the learning rate. In the training phase, the above three steps are repeated until the required number of iterations (set as 70 K in this paper) is reached. The procedure of SRSCNN is presented in Algorithm 1, where random rotation is integrated with random-scale stretching.
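The softmax output, the regularized loss, and the parameter update can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, the L2 term is written as `lam` times the sum of squared weights (the exact scaling used in the paper is not recoverable here), and the gradient shown is the standard one for the negative log-likelihood with respect to the softmax inputs, not the full back-propagation through the CNN.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def regularized_loss(batch_logits, labels, weights, lam):
    """Mean negative log-likelihood plus an L2 penalty weighted by lam."""
    nll = 0.0
    for logits, label in zip(batch_logits, labels):
        nll -= math.log(softmax(logits)[label])
    l2 = sum(w * w for w in weights)
    return nll / len(labels) + lam * l2


def nll_gradient_wrt_logits(logits, label):
    """Gradient of -log p[label] with respect to the logits: p - onehot."""
    probs = softmax(logits)
    return [p - (1.0 if k == label else 0.0) for k, p in enumerate(probs)]


def sgd_step(params, grads, learning_rate):
    """One stochastic gradient descent update: p <- p - lr * grad."""
    return [p - learning_rate * g for p, g in zip(params, grads)]


probs = softmax([1.0, 2.0, 3.0])
loss = regularized_loss([[0.0, 0.0]], [0], weights=[1.0, 2.0], lam=0.1)
updated = sgd_step([1.0, -2.0], [0.5, -1.0], learning_rate=0.1)
print(probs, loss, updated)
```

In a full training loop, the chain rule propagates the gradient from the softmax inputs back through each layer, and `sgd_step` is applied to every weight and bias once per iteration.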

| Algorithm 1. The SRSCNN procedure |

| Input: |

| - input dataset D = {(I, y) \| I ∈ R^(H×W×3), y ∈ {1, 2, 3, …, C}} |

| - scale of patch R |

| - batch size m |

| - scale variation factor α obeying distribution d |

| - defined CNN structure Net |

| - maximum iteration times L |

| Output: |

| - trained CNN model |

| Algorithm: |

| 1. randomly initialize Net |

| 2. for l = 1 to L do |

| 3. generate a small dataset P with \|P\| = m from D |

| 4. for i = 1 to m do |

| 5. normalize I_i ∈ P to [0, 1] by dividing by the maximum pixel value to obtain the normalized image I_i^n |

| 6. generate a random-scale stretched patch p_i from I_i^n |

| 7. P = P − {I_i}, P = P ∪ {p_i} |

| 8. end for |

| 9. feed P to Net, update parameters W and b in Net |

| 10. end for |

According to recent deep learning theory [42,43], representation depth is beneficial for classification accuracy. However, structures like GoogLeNet and VGGNet cannot be directly used because of the small size of remote sensing datasets compared with the ImageNet dataset, which has millions of images. Therefore, in this paper, a network structure similar to VGGNet is adopted, making a trade-off between the depth and the number of images in the dataset, where every two convolutional layers are stacked. The main structure configuration adopted in this paper is shown in Table 1. In Table 1, the convolutional layer is denoted as “ConvN–K”, where N denotes the convolutional kernel size and K denotes the number of convolutional kernels. The fully connected layer is denoted as “FC–L”, where L denotes the number of units in the fully connected layer. The effect of L on the scene classification accuracy is analyzed in Section 5.

3.2. Remote Sensing Scene Classification

In the proposed method, the different patches from the same image contain different information, so every feature extracted from a patch can be viewed as a perspective of the scene label of the image from which the patch is cropped. To further improve the scene classification performance, a robust scene classification strategy is adopted, i.e., multi-perspective fusion. SRSCNN randomly crops a patch with a random scale from the image and predicts its label using the trained CNN model, under the assumption that the patch’s label is identical to the image’s label. For simplicity, in this paper, the perspectives have identical weights, and voting is finally adopted, which means that SRSCNN classifies a sample multiple times and decides its label by selecting the class for which it is classified the most times. In Figure 5, multiple patches with different scales and different locations are cropped from the image, and these patches are then stretched to a specific scale as the input of the trained model to predict their labels. Finally, the labels belonging to these patches are counted and the label occurring the most times is selected as the label of the whole image (Class 2 is selected in the example in Figure 5). To demonstrate the effectiveness of the multi-perspective fusion, three tests were performed on three datasets, which are described in Section 4.
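The equal-weight voting step can be sketched in a few lines. The function name `fuse_by_voting` and the example votes are hypothetical; in practice, each vote would be the class predicted by the trained CNN for one randomly cropped and stretched patch.

```python
from collections import Counter


def fuse_by_voting(patch_labels):
    """Return the label predicted most often across the patches.

    With equal counts, Counter.most_common keeps the label that was
    encountered first.
    """
    return Counter(patch_labels).most_common(1)[0][0]


# Hypothetical predictions for seven patches cropped from one image.
votes = [2, 2, 1, 2, 3, 2, 1]
image_label = fuse_by_voting(votes)
print(image_label)
```

Because every patch carries the same weight, a few misclassified patches do not change the image label as long as the correct class remains the most frequent one.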

4. Experiments and Results

The UCM dataset, the Google dataset of SIRI-WHU, and the Wuhan IKONOS dataset were used to test the proposed method. The traditional methods of BoVW, LDA, the spatial pyramid co-occurrence kernel++ (SPCK++) [2], the efficient spectral-structural bag-of-features scene classifier (SSBFC) [7], locality-constrained linear coding (LLC) [7], SPM+SIFT [7], SAL-PTM [10], the Dirichlet-derived multiple topic model (DMTM) [14], SIFT+SC [55], the local Fisher kernel-linear (LFK-Linear), the Fisher kernel-linear (FK-Linear), the Fisher kernel incorporating spatial information (FK-S) [56], and methods based on deep learning, i.e., saliency-guided unsupervised feature learning (S-UFL) [37], the gradient boosting random convolutional network (GBRCN) [40], the large patch convolutional neural network (LPCNN) [41], and the multiview deep convolutional neural network (M-DCNN) [36], were compared. In the experiments, 80% of the samples were randomly selected from the dataset as the training samples, and the rest were used as the test samples. The experiments were performed on a personal computer equipped with dual Intel Xeon E5-2650 v2 processors, a single Tesla K20m GPU, and 64 GB of RAM, running CentOS 6.6 with the CUDA 6.5 release. For each experiment, the training was stopped after 70 K iterations, taking about 2.5 h. Each experiment on each dataset was repeated five times, and the average classification accuracy was recorded. To keep things simple, CCNN denotes the common CNN without random-scale stretching and multi-perspective fusion, CNNV denotes the common CNN with multi-perspective fusion but not random-scale stretching, and SRSCNN-NV denotes the CNN with random-scale stretching but not multi-perspective fusion.

4.1. Experiment 1: The UCM Dataset

To demonstrate the effectiveness of the proposed SRSCNN, the 21-class UCM land-use dataset collected from large optical images of the U.S. Geological Survey was used. The UCM dataset covers various regions of the United States, and includes 21 scene categories, with 100 scene images per category. Each scene image consists of 256 × 256 pixels, with a spatial resolution of one foot per pixel. Figure 6 shows representative images of each class, i.e., agriculture, airplane, baseball diamond, beach, buildings, chaparral, dense residential, forest, freeway, golf course, harbor, intersection, medium residential, mobile home park, overpass, parking lot, river, runway, sparse residential, storage tanks, and tennis court.

From Table 2, it can be seen that SRSCNN performs well and achieves the best classification accuracy. The main reason for the comparatively high accuracy achieved by SRSCNN when compared with the non-deep learning methods such as BoVW, LDA, and LLC is that SRSCNN, as a deep learning method, can extract the essential features from the data directly, without prior information, and is a joint learning method which combines feature extraction and scene classification into a whole, leading to the interaction between feature extraction and scene classification during the training phase. Compared with the methods based on deep learning, i.e., S-UFL, GBRCN, LPCNN, and M-DCNN, SRSCNN again achieves a comparatively high classification accuracy. The reason SRSCNN achieves a better accuracy is that the objects play an important role in scene classification; however, the previous methods based on deep learning do not consider the scale variation of the objects, which makes the scene classification more difficult. In contrast, random-scale stretching is adopted in SRSCNN during the training phase to ensure that the extracted features are robust to scale variation. In order to further demonstrate the effectiveness of the random-scale stretching, two experiments were conducted based on CCNN and SRSCNN-NV, respectively. From Table 2, it can be seen that SRSCNN-NV outperforms CCNN by 1.02%, which demonstrates the effectiveness of the random-scale stretching for remote sensing images. Meanwhile, compared with SRSCNN-NV, SRSCNN is 2.99% better, which shows the power of the multi-perspective fusion. According to the above comparison and analysis, it can be seen that the random-scale stretching and multi-perspective fusion enable SRSCNN to obtain the best results in remote sensing image scene classification. The Kappa of SRSCNN for the UCM dataset is 0.95.

From Figure 7a, it can be seen that the 21 classes can be recognized with an accuracy of at least 85%, and most categories are recognized with an accuracy of 100%, i.e., agriculture, airplane, baseball diamond, beach, etc. From Figure 7b, it can be seen that some representative scene images on the left are recognized correctly by SRSCNN and CNNV. It can also be seen that when the scale of the objects varies, CNNV cannot correctly recognize them, but SRSCNN does, due to its ability to extract features that are robust to scale variation. Taking the airplane scenes as an example, the two images on the left are easy to classify, because the scales of the airplanes in these images are normal; however, the airplanes in the right images are very difficult to recognize, because of their different scale, leading to misclassification by CNNV. However, the proposed SRSCNN can still recognize them correctly due to its ability to extract features that are robust to scale change.

4.2. Experiment 2: The Google Dataset of SIRI-WHU

The Google dataset of SIRI-WHU, designed by the Intelligent Data Extraction and Analysis of Remote Sensing (RSIDEA) Group of Wuhan University, contains 12 scene classes, with 200 scene images per category, and each scene image consists of 200 × 200 pixels, with a spatial resolution of 2 m per pixel. Figure 8 shows representative images of each class, i.e., meadow, pond, harbor, industrial, park, river, residential, overpass, agriculture, commercial, water, and idle land.

From Table 3, it can be seen that SRSCNN performs well and achieves the best classification accuracy. The main reason for the comparatively high accuracy achieved by SRSCNN when compared with the traditional scene classification methods such as BoVW, LDA, and LLC is that SRSCNN, as a deep learning method, can extract the essential features from the data directly, without prior information, and combines feature extraction and scene classification into a whole, leading to the interaction between feature learning and scene classification. Compared with the deep learning methods, S-UFL and LPCNN, SRSCNN performs 19.92% and 4.88% better, respectively. The reason SRSCNN achieves a better accuracy is that S-UFL and LPCNN do not consider the scale variation of objects, which makes the scene classification more difficult. In contrast, in order to meet this challenge, random-scale stretching is adopted in SRSCNN during the training phase to ensure that the extracted features are robust to scale variation. Compared with SSBFC, DMTM, and FK-S, with only 50% of the samples randomly selected from the dataset as the training samples, the proposed method still performs the best, which is shown in Table 4. In order to further demonstrate the effectiveness of the random-scale stretching, two experiments were conducted based on CCNN and SRSCNN-NV, respectively. From Table 3, it can be seen that SRSCNN-NV outperforms CCNN by 2.80%, demonstrating the effectiveness of the random-scale stretching for remote sensing images. Meanwhile, compared with SRSCNN-NV, SRSCNN is 3.70% better, showing the power of the multi-perspective fusion. According to the above comparison and analysis, it can be seen that the random-scale stretching and multi-perspective fusion enable SRSCNN to obtain the best results in remote sensing image scene classification. The Kappa of SRSCNN for the Google dataset of SIRI-WHU is 0.9364 when 80% of the samples are selected for training.

From Figure 9a, it can be seen that most of the classes can be recognized with an accuracy of at least 92.5%, except for river, overpass, and water. Meadow, harbor, and idle land are recognized with an accuracy of 100%. From Figure 9b, it can be seen that some representative scene images on the left are recognized correctly by SRSCNN and CNNV, i.e., CNN with voting but not random-scale stretching. It can also be seen that when the object scale varies, CNNV cannot correctly recognize the objects, but SRSCNN correctly recognizes them due to its ability to extract features that are robust to scale variation.

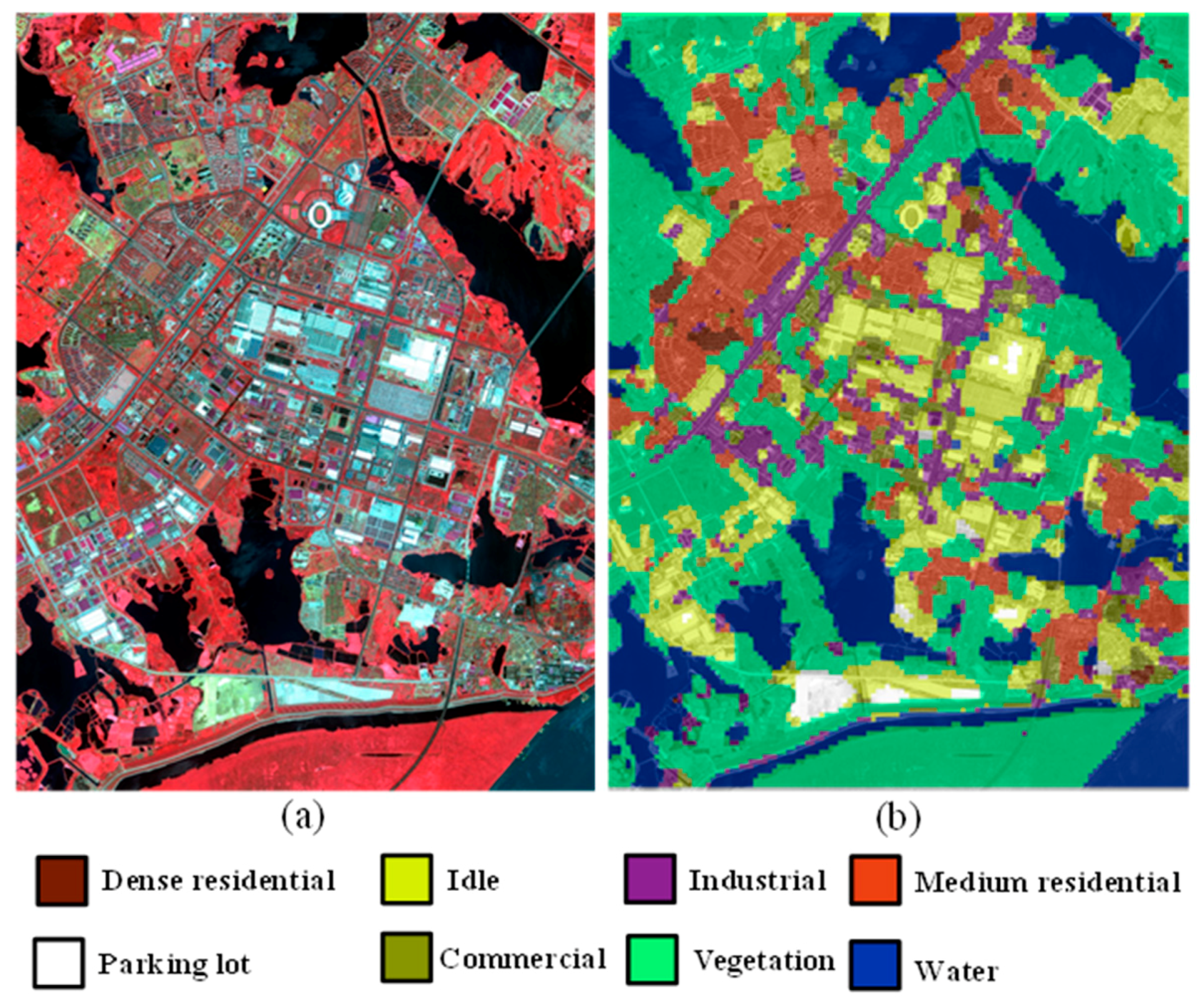

4.3. Experiment 3: The Wuhan IKONOS Dataset

The HSR images in the Wuhan IKONOS dataset were acquired over the city of Wuhan in China by the IKONOS sensor in June 2009. The spatial resolutions of the panchromatic images and the multispectral images are 1 m and 4 m, respectively. In this paper, all the images in the Wuhan IKONOS dataset were obtained by Gram–Schmidt pan-sharpening with ENVI 4.7 software. The Wuhan IKONOS dataset contains eight scene classes, with 30 scene images per category, and each scene image consists of 150 × 150 pixels, with a spatial resolution of 1 m per pixel. These images were cropped from a large image obtained over the city of Wuhan in China by the IKONOS sensor in June 2009, with a size of 6150 × 8250 pixels. Figure 10 shows representative images of each class, i.e., dense residential, idle, industrial, medium residential, parking lot, commercial, vegetation, and water.

From Table 5, it can be seen that SRSCNN performs well and achieves a better classification accuracy than BoVW, LDA, P-LDA, FK-Linear, and LFK-Linear. However, compared with BoVW and P-LDA, the methods based on CNN such as CCNN and SRSCNN-NV perform worse, and the accuracy improvement of SRSCNN is limited. The reason is that a large dataset is usually required to train a CNN model, but there are only about 200 images for training. In Table 5, there is no big difference in accuracy between CCNN and SRSCNN-NV. The main reason for this is that the images in this dataset are cropped from the same large image, so the effect of the scale change problem is small. However, compared with SRSCNN-NV, SRSCNN performs 10.03% better, and still performs the best. The Kappa of SRSCNN for the Wuhan IKONOS dataset is 0.8333.

An annotation experiment on the large IKONOS image was conducted with the trained model. During the annotation of the large image, the large image was split into a set of scene images where the image size and spacing are set to

pixel and

pixels, respectively. The set of scene images is denoted as

. Then the images in

were classified by the trained model. Finally, the annotation result can be obtained by assembling the images in

. In addition, for the overlapping pixels between adjacent images, their class labels were determined by the majority voting rule. The annotation result is shown in

Figure 11.
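The annotation procedure (split the large image into overlapping scene images, classify each one, then resolve overlapping pixels by majority voting) can be sketched as below. The function name `annotate`, the `tile` and `stride` parameters, and the toy classifier are hypothetical stand-ins; the actual image size and spacing used in the experiment are not assumed here.

```python
from collections import Counter


def annotate(height, width, tile, stride, classify):
    """Label every pixel by majority vote over the tiles that cover it.

    classify(top, left, tile) is a stand-in for the trained model and
    returns the scene label of the tile whose upper-left corner is
    (top, left).
    """
    votes = [[Counter() for _ in range(width)] for _ in range(height)]
    for top in range(0, height - tile + 1, stride):
        for left in range(0, width - tile + 1, stride):
            label = classify(top, left, tile)
            # Every pixel inside the tile receives one vote for its label.
            for r in range(top, top + tile):
                for c in range(left, left + tile):
                    votes[r][c][label] += 1
    # Pixels never covered by a tile keep the label None.
    return [[v.most_common(1)[0][0] if v else None for v in row]
            for row in votes]


def toy_classifier(top, left, tile):
    """Hypothetical classifier: tiles on the left are 'A', others 'B'."""
    return "A" if left < 2 else "B"


label_map = annotate(height=4, width=6, tile=4, stride=1,
                     classify=toy_classifier)
print(label_map[0])
```

A smaller stride gives each pixel more votes and smoother class boundaries, at the cost of classifying more tiles.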

6. Conclusions

In this paper, SRSCNN, a novel algorithm based on a deep convolutional neural network, has been proposed and implemented to solve the object scale change problem in remote sensing scene classification. In the proposed algorithm, random-scale stretching is proposed to force the CNN model to learn a feature representation that is robust to object scale variation. During the course of the training, a patch with a random scale and location is cropped and then stretched into a fixed scale to train the CNN model. In the testing stage, multiple patches with a random scale and location are cropped from each image to be the inputs of the trained CNN. Finally, under the assumption that the patches have the same label as the image, multi-perspective fusion is adopted to combine the prediction of every patch to decide the final label of the image.

To test the performance of the SRSCNN, three datasets, mainly covering the urban regions of the United States and China, i.e., the 21-class UCM dataset, the 12-class Google dataset of SIRI-WHU, and the Wuhan IKONOS dataset, are used. The results of the experiments consistently showed that the proposed method can obtain a high classification accuracy. When compared with the traditional methods such as BoVW and LDA, and other methods based on deep learning, such as S-UFL and GBRCN, SRSCNN obtains the best classification accuracy, demonstrating the effectiveness of random-scale stretching for remote sensing image scene classification.

Nevertheless, a large dataset is required when the CNN model is trained for remote sensing scene classification, and the proposed method still faces a challenge when only a small dataset is available for training. Semi-supervised learning, as one of the popular techniques for training with both labeled and unlabeled data, should be considered to meet this challenge. In practical use, scenes with multiple spatial resolutions are often processed together, where the spatial resolution may be 0.3 m for some scene classes such as tennis court, and 30 m for a scene class such as island. Hence, in our future work, we plan to explore multiple spatial resolution scene classification, based on the semi-supervised CNN model.