Abstract

Studies show that feedback greatly improves student learning outcomes, but delivering personalized feedback at scale is a complex task, especially in the diverse and open environment of Massive Open Online Courses (MOOCs). This research presents a novel method for using artificial intelligence technology to enhance the feedback mechanism in MOOCs. The main goal is to leverage AI’s capabilities to automate and refine the MOOC feedback process, with special emphasis on courses that allow students to learn at their own pace. The combination of LangChain, a framework specifically designed for applications that use language models, with the OpenAI API forms the basis of this work. This integration creates dynamic, scalable, and intelligent environments that can provide students with individualized, insightful feedback. A well-organized assessment rubric directs the feedback system, ensuring that the responses are both tailored to each learner’s unique path and aligned with academic standards and objectives. This initiative uses Generative AI to enhance MOOCs, making them more engaging, responsive, and successful for a diverse, international student body. Beyond mere automation, this technology has the potential to fundamentally transform how learning is supported in digital environments and how feedback is delivered. The initial results indicate high learner satisfaction and perceived progress, supporting the effectiveness of personalized feedback powered by AI.

1. Introduction

Since 2022, the landscape of generative artificial intelligence (GenAI) has undergone a significant transformation, underscored by the advancements in machine learning algorithms, particularly in deep learning. These advancements have enabled AI systems, such as OpenAI’s GPT (Generative Pre-trained Transformer) series [1], Google’s BERT (Bidirectional Encoder Representations from Transformers) [2], and DALL·E for image creation [3], to understand and produce human-like text, images, and even code [4]. Such capabilities have not only showcased the potential for these systems to perform complex tasks that were once the exclusive domain of human intelligence but have also opened a vast array of application opportunities within the educational sector. For instance, GPT-4, with its advanced text-generation capabilities, has been leveraged to create dynamic educational content, simulate tutoring sessions, and provide personalized feedback to students. Similarly, BERT’s nuanced understanding of language’s structure and context has been applied to develop more sophisticated educational chatbots and automated question-answering systems. Moreover, DALL·E’s ability to generate images from textual descriptions offers innovative ways to create visual learning materials and aids. These tools exemplify the broad spectrum of generative AI applications in education, promising a future where learning is more accessible, personalized, and engaging for students across the globe [5].

The recent surge in generative AI’s capabilities highlights its transformative potential in reshaping online education, especially within the MOOCs framework. Despite MOOCs’ role in democratizing education, their ability to deliver a deeply engaging and personalized learning experience is often compromised by design limitations [6]. These include a heavy reliance on multiple-choice exams and auto-graded assignments which, while scalable, tend to restrict the depth of learning, diminish the quality of feedback, and impede student engagement.

At the same time, the widespread adoption of MOOCs introduces distinct challenges that highlight the potential benefits of generative AI in education. By nature, MOOCs are designed to accommodate large audiences, promoting self-directed learning through primarily asynchronous content delivery. The efficiency of this format comes at the cost of personalized interactions and comprehensive assessment feedback, both critical components of effective learning experiences.

This discrepancy between the ideal personalized educational experience and the prevailing MOOC delivery mechanisms underscores the critical role generative AI can play. By harnessing AI’s ability to generate customized, engaging content and nuanced feedback, there is a significant opportunity to bridge these gaps. Generative AI not only promises to enhance the MOOC model by infusing it with personalized learning pathways but also aims to elevate the overall student experience on a global scale, making education more accessible, interactive, and fulfilling.

In light of these challenges, this project conducted an exploratory study to assess how satisfied students are with personalized, automated feedback on learning activities within MOOCs. For this purpose, we developed a tool that generates such feedback. The tool uses the LangChain framework to leverage advanced AI capabilities, such as those found in the GPT series [7].

The methodology uses a structured evaluation rubric, defined by teaching staff, to categorize learner responses and generate context-specific feedback. The system capitalizes on the data-aware and agentic properties of LangChain and is designed to enhance the learning experience by providing timely and individualized feedback, which is crucial for student engagement and success in online educational environments. The tool’s effectiveness in delivering relevant feedback is assessed in terms of how well it supports students’ autonomous learning in MOOCs.

The paper is organized as follows. After the introduction, we review the theoretical framework and the state of the art of AI applications in education. The third section discusses the value of feedback on training activities, as provided by teachers and by the ChatGPT tool. The fourth section presents the technological context of the experience of using the ChatGPT API, together with the instrument developed to measure that experience: a survey in which students were asked about several factors. The fifth section examines the results, supported by the findings of that survey. Lastly, the work’s findings and a list of future study directions are provided.

2. Literature Review

MOOCs have transformed the field of online education by providing students with the opportunity to complete their coursework at their preferred speed and convenience. However, MOOCs face a substantial obstacle in terms of student retention, as completion rates are remarkably low. There are multiple reasons for this high dropout rate, including financial constraints, a lack of essential basic knowledge, insufficient participation in discussion forums due to irrelevant conversations, difficulties in understanding the material without accessible support, time restrictions due to other responsibilities and priorities, and procrastination that eventually leads to withdrawal from the course. Additionally, a significant factor contributing to student dropout is the lack of feedback on their activities; students often feel disconnected and unsupported due to the absence of personalized and constructive feedback, which is crucial for their learning and development. This lack of engagement and interaction can lead to a sense of isolation and decreased motivation, prompting students to abandon their courses [8,9,10].

In this context, the importance of effective feedback mechanisms in MOOCs and other online education settings has been progressively recognized. Educational research continuously emphasizes the crucial role of timely and personalized feedback in improving learning outcomes and student involvement, which has the potential to address some of the problems that contribute to high dropout rates. Personalized feedback involves tailoring answers to individual learner characteristics, learning progress, and specific educational needs [11]. However, providing personalized feedback to a large number of students is a challenging task, especially when there are a limited number of instructors available in MOOC contexts. This discrepancy greatly impedes the ability to provide individualized feedback, further aggravated by prevailing evaluation methods in MOOCs, which prioritize memorization and often yield evaluations with limited constructive input [12,13].

2.1. The Importance of Feedback

The importance of feedback in educational environments transcends academic instruction and is fundamental to the learning process. In addition to reinforcing the knowledge that has been acquired, feedback corrects misconceptions and guides students in the direction of attaining their educational objectives. This is emphasized by Hattie and Timperley [14], who state that “Feedback is among the most influential factors on achievement and learning”. This claim is substantiated by their comprehensive meta-analysis, which demonstrates that students’ performance can be significantly improved through the application of effective feedback. This, in turn, can effectively bridge the divide between a student’s present comprehension and intended learning objectives.

In the domain of online education, particularly within MOOCs, the role of feedback assumes even greater importance. Due to the inherent limitations to direct interaction on these platforms, instructor–student feedback is an even more vital form of engagement. According to Nicol and Macfarlane-Dick [15], effective feedback practices enable learners to develop into self-regulated learners who are able to oversee, control, and guide their own learning processes. This viewpoint is consistent with the concept that feedback in MOOCs has the potential to overcome both geographical separation and restricted engagement, serving as a conduit to link students with their academic community and course material. Moreover, the ability of feedback to mitigate the common feeling of isolation experienced in MOOCs is crucial. According to Shute [16], learner-specific feedback can substantially increase motivation and engagement, which are critical success factors in online learning environments. Through the provision of individualized, implementable observations regarding students’ progress, feedback cultivates an educational environment that appreciates the unique trajectory of every learner, stimulates a feeling of inclusion, and motivates proactive engagement.

MOOC learner populations are heterogeneous in nature, comprising individuals with distinct origins, abilities, and goals; thus, an approach to feedback that is both flexible and individualized is required. This methodology not only attends to immediate educational requirements but also facilitates the growth of metacognitive abilities, empowering students to evaluate their own learning progress, recognize areas that require enhancement, and establish attainable objectives. As noted by Boud and Molloy [17], the ultimate purpose of feedback should be to equip students with the skills necessary for lifelong learning through the development of their capacity for self-evaluation.

It has been suggested that peer evaluation could be used to address the scalability issue associated with delivering personalized feedback in MOOCs. In this approach, participants evaluate one another’s work according to criteria established by the course instructors. Although it provides a means of generating feedback in a scalable manner, it also has a number of limitations. To begin with, variability in peer evaluators’ ability to assess work accurately can lead to inconsistent and occasionally unreliable feedback [18]. Students may lack the knowledge or expertise needed to offer constructive criticism, which can leave learners confused and dissatisfied. Furthermore, the quality of peer feedback can be affected by the varied language skills and educational backgrounds of MOOC participants. The very diversity that characterizes MOOCs makes it difficult to guarantee that peer evaluations are constructive and in line with instructional objectives. Moreover, the low motivation and accountability of some students who conduct peer evaluations may further weaken this feedback system. These obstacles emphasize the need for innovative strategies that can deliver dependable, individualized, and scalable feedback in MOOCs. GenAI shows considerable promise for enhancing the feedback mechanisms in online learning environments: by using it to overcome the limitations of peer assessment, educators could ensure that students receive timely, accurate, and constructive feedback tailored to their learning requirements.

2.2. Generative AI for Feedback

Based on the importance of feedback highlighted in the previous section, and recognizing the limitations of peer review in MOOC environments, we turn our attention to the potential of generative AI to transform the feedback process. Advanced machine learning algorithms and large language models (LLMs) such as ChatGPT, Gemini, and Copilot represent a subset of generative AI technologies capable of understanding, interpreting, and generating human-like text [19]. This ability enables them to produce answers that are not only logically coherent but also relevant to the situation at hand, covering a wide range of uses such as the development of intelligent tutoring systems that can guide students through complex problem-solving processes, the creation of adaptive learning materials that adjust to individual learning progress, and the facilitation of simulated conversations for language learning [20,21]. These applications underscore the versatility and potential of generative AI to support diverse aspects of the learning process.

In contrast to conventional automated feedback systems [22], which frequently depend on inflexible rule-based algorithms, generative AI can customize its responses to accommodate the unique requirements and circumstances of individual learners. This adaptability makes generative AI a powerful instrument for reshaping the MOOC feedback process. Providing personalized feedback in MOOCs is hindered by scalability: it is logistically demanding and resource-intensive. Generative AI can narrow this gap by automating the feedback process while preserving a substantial level of customization [23,24]. It can assess student submissions, pinpoint areas that require improvement, and provide constructive criticism in an engaging and informative way. Furthermore, generative AI can provide feedback in a variety of formats, accommodating students’ diverse learning preferences and thus enhancing the educational experience as a whole.

Recent research in the field of educational feedback emphasizes a significant shift towards learner-oriented processes [25]. This evolution reflects a departure from traditional feedback models, such as those proposed by Hattie and Timperley [14], which have been foundational but may not fully address the dynamic needs of today’s diverse learner populations. In response to these developments, our review incorporates a discussion on how current feedback frameworks are adapting to be more learner-centered, facilitating a more personalized learning experience that actively engages students in their educational journeys.

Significant research has also been conducted on the potential of GPT models to provide feedback, notably by Dai et al. [26]. Their study, “Can large language models provide feedback to students? A case study on ChatGPT”, explores the capabilities of ChatGPT in delivering detailed and coherent feedback that not only aligns closely with instructors’ assessments but also enhances the feedback by detailing the process of task completion, thus supporting the development of learning skills. Their findings indicate that ChatGPT can generate feedback that is often more detailed than that provided by human instructors and exhibits a high degree of agreement with the instructors on the subjects of the students’ assignments [27]. These insights have spurred further investigations into the practical applications of GPT models, leading this work to the conceptualization of a tool that utilizes a rubric defined by educators to generate personalized feedback automatically. This tool aims to assess student perceptions and explore the scalability of the solution, considering the economic model of token-based GPT tools.

An inherent strength of generative AI lies in its capacity to acquire knowledge and develop gradually [28]. Through the analysis of substantial quantities of data, such as student responses and interactions, these systems are capable of consistently improving their feedback mechanisms in order to maintain their efficacy and relevance. This particular ability holds significant value within the realm of education, as pedagogical approaches and the requirements of learners are perpetually changing.

3. Implementation of the Pilot Tool

In this experiment, the course titled “Transforming Education with AI: ChatGPT” was conducted from 27 March to 11 May 2023, and was attended by 5482 registered students. The curriculum was organized into four lessons that encompassed topics such as the basics of ChatGPT, its potential impact on education, methods for its integration into teaching and learning practices, generative tools, and the ethical use of AI in educational contexts [29]. The instructional material primarily consisted of AI-generated video content, supplemented by collaborative exercises on platforms like Padlet. Furthermore, student engagement was facilitated through the sharing of views and experiences using Google Slides presentations, aiming to create a community of educators interested in the application of generative AI tools to refine teaching practices.

Expanding upon the course’s fundamental framework, we integrated an automated feedback mechanism with the objective of transforming the MOOC evaluation procedure. The system was carefully designed to combine a sophisticated language model with a structured evaluation rubric, so that it can interpret and analyze learners’ responses. By categorizing these responses according to clearly defined evaluation standards, the system was able to produce contextualized feedback, taking advantage of the data-aware and agentic functionalities of the LangChain framework.

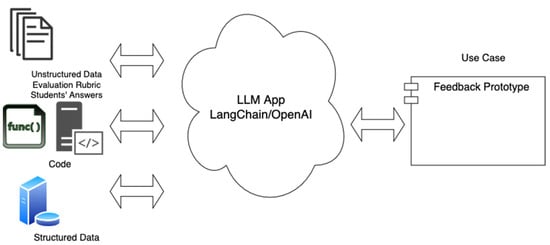

The execution of this feedback system was distinguished by a number of essential elements:

(a) The integration of a structured rubric and prompts: the initial phase entailed the creation of an elaborate assessment rubric accompanied by prompts that unambiguously specified the criteria for evaluation and the diverse tiers of achievement. The purpose of this rubric was to function as the fundamental element of the automated feedback system, providing precise and transparent guidance throughout the evaluation process.

(b) Language model involvement: by employing the LangChain framework, we combined state-of-the-art language models, including GPT-4, to enable students to engage in conversations that were both substantive and contextually appropriate. The role of these models was to analyze, interpret, and produce feedback in accordance with the predetermined criteria outlined in the rubric.

(c) The generation of personalized feedback: by capitalizing on the agentic capabilities of LangChain, the automated system was engineered to generate individualized feedback for every participant. The feedback provided was customized to target particular areas that were identified by the rubric. Its purpose was to be instructive and contextual, directing learners towards significant growth.

(d) Adaptability and scalability: improving the scalability of feedback distribution within the MOOC was a principal aim of this endeavor, facilitating the timely and individualized provision of feedback to a substantial student body. Furthermore, the system was designed with adaptive capabilities, allowing it to be modified in accordance with user input to promote ongoing enhancements.

Figure 1 presents the architectural structure of the developed system.

Figure 1. GenAI feedback tool’s architecture, based on the students’ answers.
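The flow described above (rubric and prompts, language model, personalized feedback) can be illustrated with a minimal sketch. It does not reproduce the tool's actual implementation or any LangChain API: the prompt wording, the `FeedbackRequest` class, and the `echo_llm` stand-in are all hypothetical names introduced here for illustration. The language model is passed in as a plain callable, so a real client could be substituted under the same interface.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical prompt template; the wording used in the actual tool is not given in the text.
PROMPT_TEMPLATE = """You are a teaching assistant for a MOOC.
Assess the student's answer against the rubric and write personalized feedback.

Assignment:
{assignment}

Rubric:
{rubric}

Student answer:
{answer}

Feedback:"""


@dataclass
class FeedbackRequest:
    """The documents that are combined into one prompt (illustrative structure)."""
    assignment: str
    rubric: str
    answer: str


def build_prompt(request: FeedbackRequest) -> str:
    """Combine assignment, rubric, and student answer into a single prompt."""
    return PROMPT_TEMPLATE.format(
        assignment=request.assignment.strip(),
        rubric=request.rubric.strip(),
        answer=request.answer.strip(),
    )


def generate_feedback(request: FeedbackRequest, llm: Callable[[str], str]) -> str:
    """Send the assembled prompt to the model and return its reply."""
    return llm(build_prompt(request)).strip()


def echo_llm(prompt: str) -> str:
    """Stand-in model for offline runs: reports the prompt size instead of calling an API."""
    return f"(stub feedback for a prompt of {len(prompt)} characters)"


if __name__ == "__main__":
    request = FeedbackRequest(
        assignment="Write an essay on the potential and limitations of ChatGPT in education.",
        rubric="Critical thinking; depth of analysis; educational applications; limitations and opportunities.",
        answer="ChatGPT can support tutoring, but its output must be checked for accuracy.",
    )
    print(generate_feedback(request, echo_llm))
```

Keeping the model behind a callable means the same prompt-building code can serve every submission, which is one simple way to obtain the scalability described in element (d).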

Consistent with the overarching objective of our experimental MOOC, titled “Transforming Education with AI: ChatGPT”, the initial exercise was thoughtfully crafted to prompt students to make a critical examination of the attributes and constraints of ChatGPT, in addition to its capacity to fundamentally transform pedagogical and learning procedures. The objective of this activity was to empower students to identify the potential of ChatGPT in the field of education, comprehend its practical uses, and reflect on how it might enhance the teaching–learning experience while also recognizing the difficulties that may arise from incorporating this technology into academic environments.

In order to promote this, learners were required to submit an analytical essay that investigated the aforementioned aspects. The assignment, rubric, and LangChain framework were all carefully planned to facilitate an accurate evaluation of the submissions by the automated feedback system. The evaluation criteria for the rubric encompassed critical thinking, depth of analysis, comprehension of the educational applications of ChatGPT, and the students’ capacity to anticipate the limitations and opportunities, as well as the transformative potential, of AI in education.

In alignment with the primary aim of our experimental MOOC entitled “Transforming Education with AI: ChatGPT”, the initial task was deliberately designed to stimulate students’ critical thinking and self-reflection concerning the qualities and limitations of ChatGPT, as well as its potential to revolutionize teaching and learning methodologies. The aim of this exercise was to provide students with the ability to discern the potential of ChatGPT within the realm of education, grasp its pragmatic applications, and contemplate how it could enrich the pedagogical process. Additionally, students were expected to acknowledge the challenges that could emerge when integrating this technology into academic settings.

To encourage this, students were asked to submit a second analytical essay that examined the aforementioned facets of ChatGPT. The assignment, rubric, and LangChain framework were all meticulously designed to enable the automated feedback system to conduct an accurate evaluation of their submissions. The assessment standards for the rubric included the following: an ability to think critically; conduct in-depth analyses; understand the educational uses of ChatGPT; and foresee the constraints, opportunities, and transformative potential of artificial intelligence in the field of education.
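As an illustration of how such a rubric might be represented in code, the sketch below stores the four criteria named in the text and checks a per-criterion categorization of an essay. The achievement level names (`developing`, `proficient`, `advanced`) and all function names are assumptions made for this example; the paper does not specify the rubric's tiers or its internal format.

```python
# Criteria follow the text; the level names below are hypothetical placeholders.
CRITERIA = (
    "Critical thinking",
    "Depth of analysis",
    "Understanding of the educational applications of ChatGPT",
    "Anticipation of limitations, opportunities, and transformative potential",
)
LEVELS = ("developing", "proficient", "advanced")  # ordered from lowest to highest


def render_rubric(criteria=CRITERIA, levels=LEVELS) -> str:
    """Render the rubric as plain text suitable for inclusion in a prompt."""
    lines = [f"Achievement levels: {', '.join(levels)}"]
    lines += [f"{i}. {name}" for i, name in enumerate(criteria, start=1)]
    return "\n".join(lines)


def validate_categorization(categorization: dict) -> dict:
    """Check that every criterion has been assigned one of the known levels."""
    missing = [c for c in CRITERIA if c not in categorization]
    if missing:
        raise ValueError(f"Missing criteria: {missing}")
    unknown = [lvl for lvl in categorization.values() if lvl not in LEVELS]
    if unknown:
        raise ValueError(f"Unknown levels: {unknown}")
    return {c: categorization[c] for c in CRITERIA}


def weakest_criteria(categorization: dict) -> list:
    """Return the criteria at the lowest assigned level, where feedback should focus."""
    rank = {level: i for i, level in enumerate(LEVELS)}
    checked = validate_categorization(categorization)
    lowest = min(rank[level] for level in checked.values())
    return [c for c, level in checked.items() if rank[level] == lowest]


if __name__ == "__main__":
    print(render_rubric())
    example = {c: "proficient" for c in CRITERIA}
    example["Depth of analysis"] = "developing"
    print(weakest_criteria(example))
```

Validating the categorization before using it guards against a model reply that skips a criterion or invents a level, so that the generated feedback always refers to the rubric the teaching staff defined.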

By integrating the LangChain framework into our MOOC experiment, specifically for the initial task, we were able to make effective use of its data-aware and agentic characteristics. The data-aware functionality of LangChain is particularly advantageous, as it enables language models to be connected to a wide variety of data sources. By utilizing this functionality, our automated feedback system was capable of accessing a broader range of information. As a result, the feedback delivered to students was not only tailored to their individual needs but also firmly rooted in an extensive comprehension of the subject. Through the integration of language models with a wide range of datasets, we implemented a data-centric approach that substantially enhanced the system’s ability to provide feedback that is contextually appropriate.

Furthermore, the agentic nature of LangChain allowed our language model to act as an engaged contributor to the educational process rather than a passive participant. This functionality enabled the system to engage with student submissions in a more nuanced and interactive fashion. By adjusting its responses to the unique content of each essay, it produced feedback that was not only flexible but also closely matched to the personalized educational trajectory of every student.

The application of LangChain in this endeavor required us to strategically customize the AI model to correspond with the academic material of our MOOC. This process was governed by carefully specified rubrics that were in line with our intended learning outcomes. By meticulously preparing, we ensured that the feedback produced by the AI model was both precise and significant, thereby directly aiding in the achievement of the pedagogical objectives of the exercise.

An essential element of our implementation approach was the use of bespoke documents, such as student essays, prompts, and rubrics, as the context from which the AI model generated its responses. With this methodology, the system could not only respond to the particular points raised by students but also situate its feedback within the wider thematic framework of the exercise. Through this integrated strategy, we aimed to enrich the educational experience and make it more engaging and relevant for the participants in our MOOC.

4. Results

In the first implementation of the pilot project, a total of 207 individuals participated in the proposed activity, with the help of the feedback tool. The responses generated during the pilot project were appropriate, and a group of educators reviewed these responses and deemed them satisfactory. Subsequently, participants in the activity were invited to complete the study questionnaire, and a total of 160 responses were recorded and analyzed.

In this experiment, we used the Likert scale as the fundamental tool for evaluating both student responses and their perceptions of the feedback system. The Likert scale is a well-established and versatile method for quantifying subjective data, enabling us to assess the quality of student responses and analyze their satisfaction with the automated feedback provided.

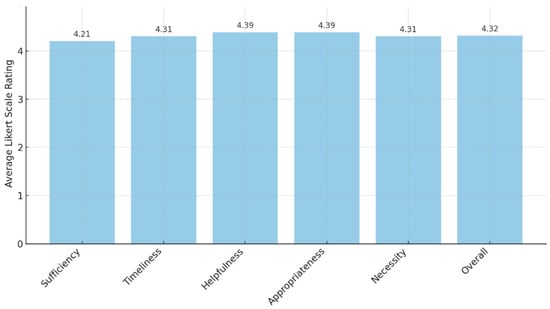

We used the Likert scale to collect data on student perceptions, allowing us to understand their satisfaction with the automated feedback system, the perceived effectiveness of the AI-driven interactions, and areas where improvements were needed. This data-driven approach guides our ongoing efforts to enhance the system and create a more engaging and effective learning environment within MOOCs. When asked to evaluate the (a) sufficiency of the feedback provided by the GenAI tool, the participants expressed a generally positive sentiment, with an average Likert scale rating of 4.21 out of 5. This suggests that respondents generally perceived the feedback to be substantial and to meet their educational needs. The high rating suggests that the AI-generated feedback was deemed comprehensive, reflecting a positive perception of the tool’s ability to provide an adequate amount of information to the learners.

Learners rated the (b) timeliness of the feedback from the GenAI tool with an average score of 4.31 out of 5, indicating a high level of satisfaction regarding the promptness of responses. This positive evaluation suggests that learners appreciated the tool’s ability to provide timely feedback, enhancing their learning experience by ensuring a quick turnaround in addressing queries or assessing their progress. This result emphasizes the efficiency of the AI system in delivering timely responses to support the students’ ongoing learning process.

Learners assigned an average Likert scale rating of 4.39 out of 5 to the (c) helpfulness of the feedback generated by the GenAI tool. This high score reflects a consensus among respondents that the feedback provided by the tool was instrumental to their learning journey. The positive evaluation indicates that students perceived the AI-generated feedback as valuable and beneficial in guiding their understanding, contributing positively to their overall learning outcomes.

Learners consistently rated the (d) appropriateness of the feedback from the AI-based learning tool with an average score of 4.39 out of 5. This suggests that respondents found the feedback to be fitting and relevant to their queries or performance. The high rating indicates that the AI system effectively tailored its responses to the specific needs of the learners, contributing to a positive user experience and reinforcing the appropriateness of the feedback provided.

Learners evaluated the (e) necessity of the feedback for their improvement with an average score of 4.31 out of 5. This positive assessment highlights the perceived importance of the AI-generated feedback in facilitating the enhancement of their skills and knowledge. This result suggests that learners regarded the feedback as a crucial element in their learning process and associated the AI-generated insights with their own continuous improvement.

Overall, with an average Likert scale rating of 4.32 out of 5 (86%), the feedback variable, as perceived by the students, reflects a high level of satisfaction and effectiveness in the AI-based learning tool’s provision of feedback. The positive responses across all variables underscore the tool’s capability to deliver comprehensive, timely, helpful, appropriate, and necessary feedback, contributing significantly to the overall positive learning experience reported by the participants.
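The overall figure can be reproduced from the five reported averages. The short check below assumes that the overall rating is the unweighted mean of the five item means (which holds if every item received the same number of responses) and that the percentage is that mean expressed as a fraction of the scale maximum of 5; the text does not state either computation explicitly.

```python
from statistics import mean

# Average Likert ratings (out of 5) as reported for the five feedback items.
ratings = {
    "sufficiency": 4.21,
    "timeliness": 4.31,
    "helpfulness": 4.39,
    "appropriateness": 4.39,
    "necessity": 4.31,
}

# Assumption: unweighted mean of the item means.
overall = mean(ratings.values())

# Assumption: percentage taken relative to the scale maximum of 5.
percent = overall / 5 * 100

print(f"{overall:.2f} out of 5 ({percent:.0f}%)")  # prints "4.32 out of 5 (86%)"
```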

The Likert scale evaluations of the students’ perceptions of the feedback provided by the AI-based learning tool are presented in Figure 2. High levels of satisfaction are evident in each category (sufficiency, timeliness, helpfulness, appropriateness, necessity, and overall evaluation), which indicates that students perceived the feedback as comprehensive, timely, helpful, appropriate, and necessary.

Figure 2. Student perceptions of GenAI tool feedback.

5. Discussion

The integration of ChatGPT into the learning environment through the LangChain library opens up avenues for diverse modes of interaction, fostering a Socratic conversation with students. The positive feedback received from students regarding the sufficiency, timeliness, helpfulness, appropriateness, and necessity of AI-generated feedback, as measured by their Likert scale ratings, supports the potential of leveraging ChatGPT for educational purposes. This approach not only enhances the learning experience but may also boost engagement, which matters especially in MOOC environments where high dropout rates are a concern, as indicated by studies [8,9,10]. By automating interactions with ChatGPT, educators can harness the capabilities of this language model to create engaging and intellectually stimulating dialogues with students.

One key advantage highlighted in the Likert scale ratings is the perceived sufficiency of the AI-generated feedback. This implies that, through systematic and strategic prompts, educators can ensure that ChatGPT imparts comprehensive information, addressing the specific needs of individual students. Furthermore, the positive scores for timeliness and helpfulness suggest that integrating ChatGPT into the learning environment allows for real-time and valuable interactions. The scalability of such interactions ensures that a large number of students can benefit simultaneously, providing instant feedback to maintain their motivation and engagement throughout the learning process.

The LangChain library serves as a crucial facilitator in this automated interaction, allowing for seamless communication with ChatGPT. This integration not only streamlines the process but also empowers educators to tailor their approach based on the specific learning objectives and challenges faced by their students. Consequently, the positive responses to the feedback variables indicate the potential effectiveness of this approach in creating a dynamic and responsive educational environment. As we explore the possibilities of automating interactions with ChatGPT, it becomes evident that such advancements hold promise for transforming traditional educational practices and fostering a more personalized and engaging learning experience for students.
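To make the idea of rubric-guided, automated interaction more concrete, the following minimal sketch assembles the chat messages that such a system might send to the language model. It is not the implementation used in this study: the rubric entries, the instruction wording, and the `build_feedback_messages` helper are hypothetical. The messages use the role/content format of the OpenAI chat interface; the actual model call (through LangChain or the OpenAI API) is omitted because it requires credentials and network access.

```python
# Hypothetical rubric; the criteria and wording are illustrative,
# not the rubric used in the study.
RUBRIC = {
    "Accuracy": "The answer is factually correct.",
    "Clarity": "The explanation is well organized and easy to follow.",
}


def build_feedback_messages(task, submission, rubric):
    """Build role-tagged chat messages asking for rubric-aligned feedback."""
    # Render each rubric criterion as one bullet line.
    criteria = "\n".join(f"- {name}: {desc}" for name, desc in rubric.items())
    system = (
        "You are a tutor giving formative feedback in a self-paced MOOC. "
        "Assess the submission against each rubric criterion, then ask one "
        "guiding question that helps the learner improve.\n"
        f"Rubric:\n{criteria}"
    )
    user = f"Task: {task}\nStudent submission: {submission}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


messages = build_feedback_messages(
    task="Explain what a large language model is.",
    submission="It is a program that predicts the next word.",
    rubric=RUBRIC,
)

for message in messages:
    print(f"[{message['role']}]\n{message['content']}\n")
```

Keeping the rubric as data, separate from the instruction text, would let educators adjust the criteria for each activity without changing the code that builds the prompt.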

As educational institutions increasingly embrace artificial intelligence (AI) technologies like ChatGPT, the realization of their full potential necessitates a nuanced understanding and strategic consideration of several key aspects. The successful integration of AI-driven educational interactions relies heavily on effective interaction design, personalization strategies, the delicate equilibrium between automation and the human touch, ongoing model improvement, scalability without compromising quality, adaptability to student diversity, the establishment of a feedback loop with educators, ethical considerations, and the ability to sustain student motivation and engagement. These aspects collectively form a comprehensive framework that not only harnesses the capabilities of AI for educational advancement but also ensures its alignment with ethical standards and pedagogical objectives. In this article, we delve into the intricacies of each facet, examining their implications and roles within the broader context of AI-powered educational interactions. Through this exploration, we aim to provide insights that guide the effective implementation of AI technologies, fostering an enriched and ethically sound learning experience for students. The aspects to be considered are described below:

- (a) Effective interaction design is paramount in the integration of ChatGPT into educational settings. The design of well-crafted prompts and interactions plays a crucial role in steering conversations with ChatGPT in a productive direction. By ensuring that the generated feedback aligns with educational goals, effective interaction design lays the foundation for a seamless and purposeful integration of AI-driven interactions in the learning environment.

- (b) Personalization emerges as a critical factor in the success of AI-powered interactions. Tailoring feedback and responses to the individual needs of each student enhances engagement and contributes to a more meaningful and customized learning experience. The ability to cater to diverse student requirements highlights the importance of incorporating personalization mechanisms in the design of educational AI systems.

- (c) Balancing automation and the human touch is a nuanced consideration in the implementation of AI-driven educational interactions. While automation offers efficiency, recognizing the need for a human touch in certain interactions is crucial. Instances that demand accuracy, empathy, or understanding beyond the model’s capabilities may benefit from human intervention. Achieving a balance between the advantages of automation and the nuance of human interaction is key to optimizing the educational benefits of AI.

- (d) Continuous model improvement underscores the iterative nature of AI systems’ development. The regular refinement of prompts and interaction patterns is vital for enhancing the quality of responses and overall user experience. This iterative approach ensures that the AI model remains adaptive to evolving educational needs, effectively addressing the intricacies of student queries and staying at the forefront of educational technology advancements.

- (e) Scalability without compromising quality is a significant advantage of AI-powered interactions. The ability to provide scalable real-time feedback to students, however, should not come at the cost of compromising the quality of the feedback. Maintaining a balance between scalability and quality is imperative to ensuring that a large number of students can benefit without sacrificing the educational value of these interactions.

- (f) Adapting to student diversity highlights the importance of flexibility in AI-driven educational interaction systems. These systems should be adaptable to varying levels of student proficiency and their diverse preferences about how they learn. Such flexibility ensures that the technology remains inclusive and effective across a broad spectrum of students, promoting equitable educational experiences.

- (g) The feedback loop with educators emphasizes the collaborative nature of AI systems’ development. Establishing a feedback loop with educators is crucial for fine-tuning the AI system to align better with educational goals. Educators’ insights and assessments contribute to ongoing improvements, creating a collaborative environment that enhances the effectiveness of AI-powered educational interactions.

- (h) Ethical considerations are paramount in the development and deployment of AI-driven educational interactions. A thoughtful consideration of ethical implications, including issues related to bias and data privacy, is necessary. Addressing these concerns ensures that the technology upholds ethical standards, safeguards the well-being of students, and fosters a trustworthy and responsible use of AI in education.

Overall, these aspects collectively contribute to the effective implementation of AI-driven educational interactions, fostering a positive and enriching learning experience for students while upholding ethical standards and promoting collaboration between educators and technology.
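The balance between automation and the human touch described in aspect (c) can be illustrated with a simple routing rule that hands a student message to a human instructor when it contains a sensitive phrase and otherwise leaves it to the AI tool. This is only a sketch under stated assumptions: the trigger phrases, the `Routing` type, and the `route_message` function are hypothetical and do not describe the system evaluated in this study.

```python
from dataclasses import dataclass

# Hypothetical trigger phrases; a course team would define its own list.
ESCALATION_TERMS = ("grade appeal", "frustrated", "give up", "accommodation")


@dataclass
class Routing:
    handler: str  # "ai" or "human"
    reason: str


def route_message(text: str) -> Routing:
    """Send a message to a human if it contains any escalation term."""
    lowered = text.lower()
    for term in ESCALATION_TERMS:
        if term in lowered:
            return Routing("human", f"matched '{term}'")
    return Routing("ai", "no escalation term matched")


print(route_message("I am frustrated and want to give up."))
print(route_message("How should I structure my answer to question 2?"))
```

A keyword rule of this kind is deliberately conservative and easy for educators to audit; more refined criteria could replace it as the feedback loop with educators described in aspect (g) matures.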

6. Conclusions and Future Work

In the integration of AI-driven technologies like ChatGPT into education, finding the delicate equilibrium between automation and the human touch emerges as a key consideration. While automation ensures efficiency and scalability, it is equally important to recognize the situations in which human intervention is crucial, namely those that call for accuracy, empathy, and personalized guidance. Striking this balance allows for the optimal use of AI capabilities while maintaining the human-centric qualities essential for effective teaching and learning.

The deployment of AI in education necessitates a steadfast commitment to ethical considerations. Ensuring fairness, mitigating biases, and safeguarding data privacy are integral aspects of responsible AI use. Addressing these ethical considerations not only upholds standards of integrity and equity but also establishes a foundation of trust among students, educators, and stakeholders. Ethical implementation is not just a regulatory requirement but a fundamental principle for fostering a positive and responsible AI-infused educational environment.

The iterative refinement of AI models and their interaction patterns, together with their adaptability to evolving educational needs, is critical for their long-term relevance and effectiveness. As technology and educational methodologies evolve, continuous improvement ensures that AI systems remain responsive and aligned with the dynamic landscape of education. By embracing a culture of continuous learning and adaptation, educational institutions can harness the full potential of AI technologies to enhance the learning experience and meet the diverse needs of students and educators alike.

This study is not without limitations. Firstly, it was conducted with a relatively small group of students within a MOOC. Future research could therefore benefit from a larger sample size to enhance the generalizability of the findings, as well as from a comparative study between an experimental and a control group.

Additionally, there is potential to further investigate how the use of generative AI impacts skill acquisition, which could provide deeper insights into the effectiveness of AI-driven educational tools. Lastly, the surveys in this study were administered at the end of the course, so the responses may reflect participants’ overall satisfaction with the course rather than with the AI-generated feedback alone. Future studies might consider implementing a longitudinal or cross-sectional research design to isolate more accurately the effects of generative AI on learning outcomes and satisfaction over time.

As a future line of research, it would be interesting to develop a theoretical model that allows us to analyze the behavior of students in the face of feedback generated by generative AI, which would allow us to study its positive or negative impact on the study variables. Furthermore, other types of confidentiality and security constructs generated by the use of generative AI models could be considered. These findings would contribute to a greater understanding of the implications of the use of generative AI in the learning and training processes of students and teachers.

Author Contributions

Conceptualization, M.M.-C., J.A.M. and R.B.; methodology, M.M.-C. and R.B.; software, H.R.A.-S.; validation, J.A.M.; formal analysis, M.M.-C.; investigation, M.M.-C. and H.R.A.-S.; resources, H.R.A.-S.; data curation, H.R.A.-S.; writing – original draft preparation, M.M.-C., H.R.A.-S., J.A.M., R.B., R.H.-R. and A.M.T.; writing – review and editing, M.M.-C., H.R.A.-S., J.A.M., R.B., R.H.-R. and A.M.T.; visualization, H.R.A.-S.; supervision, R.H.-R.; project administration, R.B.; funding acquisition, H.R.A.-S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://openai.com/index/language-unsupervised (accessed on 12 February 2024).

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Johnson, K. OpenAI Debuts DALL-E for Generating Images from Text. VentureBeat. 5 January 2021. Available online: https://venturebeat.com/business/openai-debuts-dall-e-for-generating-images-from-text/ (accessed on 12 February 2024).

- Rai, L.; Deng, C.; Liu, F. Developing Massive Open Online Course Style Assessments using Generative AI Tools. In Proceedings of the 2023 IEEE 6th International Conference on Electronic Information and Communication Technology (ICEICT), Qingdao, China, 21–24 July 2023; pp. 1292–1294.

- Qadir, J. Engineering Education in the Era of ChatGPT: Promise and Pitfalls of Generative AI for Education. In Proceedings of the 2023 IEEE Global Engineering Education Conference (EDUCON), Kuwait, Kuwait, 1–4 May 2023; pp. 1–9.

- Chan, M.M.; Plata, R.B.; Medina, J.A.; Alario-Hoyos, C.; Rizzardini, R.H.; de la Roca, M. Analysis of behavioral intention to use cloud-based tools in a MOOC: A technology acceptance model approach. J. Univers. Comput. Sci. 2018, 24, 1072–1089.

- Auffarth, B. Generative AI with LangChain: Build Large Language Model (LLM) Apps with Python, ChatGPT and Other LLMs; Packt Publishing: Birmingham, UK, 2023.

- Hew, K.F.; Cheung, W.S. Students’ and instructors’ use of massive open online courses (MOOCs): Motivations and challenges. Educ. Res. Rev. 2014, 12, 45–58.

- Guetl, C.; Rizzardini, R.H.; Chang, V.; Morales, M. Attrition in MOOC: Lessons learned from drop-out students. In Proceedings of the Learning Technology for Education in Cloud. MOOC and Big Data: Third International Workshop, LTEC 2014, Santiago, Chile, 2–5 September 2014; pp. 37–48.

- Conole, G. MOOCs as Disruptive Technologies: Strategies for Enhancing the Learner Experience and Quality of MOOCs. RED, Revista de Educación a Distancia. Número 39. 2013. Available online: http://www.um.es/ead/red/39/conole.pdf (accessed on 15 January 2014).

- Krathwohl, D.R.; Anderson, L.W. A Taxonomy for Learning, Teaching, and Assessing: A revision of Bloom’s Taxonomy of Educational Objectives. Longman. Available online: https://eduq.info/xmlui/handle/11515/18345 (accessed on 14 February 2024).

- Kolowich, S. The Professors Behind the MOOCs Hype. The Chronicle of Higher Education. Available online: https://www.chronicle.com/article/the-professors-behind-the-mooc-hype/ (accessed on 14 February 2024).

- Floratos, N. Impact of Feedback in Students’ Engagement: The Case of MOOCs. Ph.D. Thesis, Universitat Oberta de Catalunya, Barcelona, Spain, 2021. Available online: http://hdl.handle.net/10803/687741 (accessed on 12 February 2024).

- Hattie, J.; Timperley, H. The Power of Feedback. Rev. Educ. Res. 2007, 77, 81–112.

- Nicol, D.J.; Macfarlane-Dick, D. Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Stud. High. Educ. 2006, 31, 199–218.

- Shute, V.J. Focus on Formative Feedback. Rev. Educ. Res. 2008, 78, 153–189.

- Boud, D.; Molloy, E. Feedback in Higher and Professional Education: Understanding It and Doing It Well; Routledge: London, UK, 2013.

- Falchikov, N.; Goldfinch, J. Student peer assessment in higher education: A meta-analysis comparing peer and teacher marks. Rev. Educ. Res. 2000, 70, 287–322.

- Sarker, I. Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions. SN Comput. Sci. 2021, 2, 420.

- Moore, S.; Tong, R.; Singh, A.; Liu, Z.; Hu, X.; Lu, Y. Empowering Education with LLMs - The Next-Gen Interface and Content Generation. In Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners, Doctoral Consortium and Blue Sky. AIED 2023. Communications in Computer and Information Science; Wang, N., Rebolledo-Mendez, G., Dimitrova, V., Matsuda, N., Santos, O.C., Eds.; Springer: Cham, Switzerland, 2023; Volume 1831.

- Tsai, M.; Ong, C.W.; Chen, C. Exploring the Use of Large Language Models (LLMs) in Chemical Engineering Education: Building Core Course Problem Models. Educ. Chem. Eng. 2023, 44, 71–95.

- Swacha, J. Exercise Solution Check Specification Language for Interactive Programming Learning Environments. Open Access Ser. Inform. (OASIcs) 2017, 56, 6:1–6:8.

- Xiao, C.; Xu, S.; Zhang, K.; Wang, Y.; Xia, L. Evaluating Reading Comprehension Exercises Generated by LLMs: A Showcase of ChatGPT in Education Applications. In Proceedings of the Workshop on Innovative Use of NLP for Building Educational Applications, Toronto, ON, Canada, 13 July 2023.

- Gabbay, H.; Cohen, A. Exploring the Connections Between the Use of an Automated Feedback System and Learning Behavior in a MOOC for Programming. In Educating for a New Future: Making Sense of Technology-Enhanced Learning Adoption. EC-TEL 2022; Hilliger, I., Muñoz-Merino, P.J., De Laet, T., Ortega-Arranz, A., Farrell, T., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; Volume 13450.

- Henderson, M.; Ajjawi, R.; Boud, D.; Molloy, E. Identifying feedback that has impact. In The Impact of Feedback in Higher Education: Improving Assessment Outcomes for Learners; Springer International Publishing: Cham, Switzerland, 2019; pp. 15–34.

- Dai, W.; Lin, J.; Jin, H.; Li, T.; Tsai, Y.S.; Gašević, D.; Chen, G. Can large language models provide feedback to students? A case study on ChatGPT. In Proceedings of the 2023 IEEE International Conference on Advanced Learning Technologies (ICALT), Orem, UT, USA, 10–13 July 2023; pp. 323–325.

- Lin, J.; Thomas, D.R.; Han, F.; Gupta, S.; Tan, W.; Nguyen, N.D.; Koedinger, K.R. Using large language models to provide explanatory feedback to human tutors. arXiv 2023, arXiv:2306.15498.

- Dai, W.; Tsai, Y.S.; Lin, J.; Aldino, A.; Jin, F.; Li, T.; Gasevic, D. Assessing the Proficiency of Large Language Models in Automatic Feedback Generation: An Evaluation Study. 2023. Available online: https://osf.io/s7dvy/download (accessed on 14 February 2024).

- Amado-Salvatierra, H.R.; Morales-Chan, M.; Hernandez-Rizzardini, R.; Rosales, M. Exploring Educators’ Perceptions: Artificial Intelligence Integration in Higher Education. In Proceedings of the 2024 IEEE World Engineering Education Conference (EDUNINE), Guatemala City, Guatemala, 10–13 March 2024; pp. 1–5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).