Age Estimation of Faces in Videos Using Head Pose Estimation and Convolutional Neural Networks

Abstract

1. Introduction

2. Related Work

3. Proposed Method

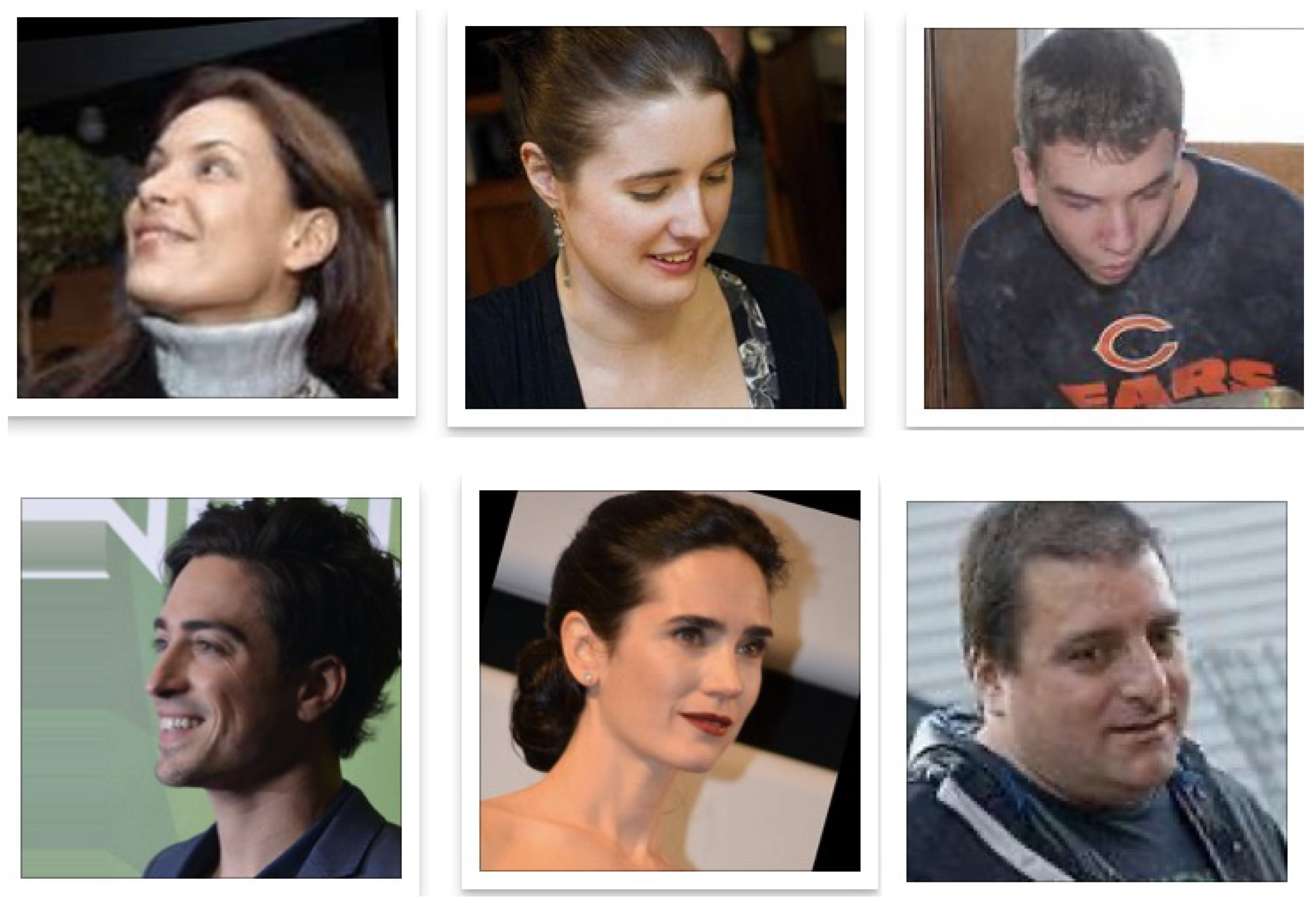

3.1. Datasets

3.2. Face Alignment

3.3. Age Estimation

3.4. Head Pose Estimation

4. Experiments

4.1. Implementation Details

4.2. Results and Comparison

4.2.1. Head Pose Estimation

4.2.2. Testing on Facial Image Datasets

4.2.3. Testing with Different Threshold

4.2.4. Testing on Facial Video Datasets

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DRFs | Deep regression forests |
| CACD | Cross-Age Celebrity Dataset |
| AFAD | Asian Face Age Dataset |
| CNN | Convolutional neural network |
| SIAM | Spatially-indexed attention model |
| FC | Fully-connected |
| MAE | Mean absolute error |
| SGD | Stochastic gradient descent |

References

1. Lanitis, A.; Draganova, C.; Christodoulou, C. Comparing different classifiers for automatic age estimation. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2004, 34, 621–628.
2. Han, H.; Otto, C.; Jain, A.K. Age estimation from face images: Human vs. machine performance. In Proceedings of the ICB, Madrid, Spain, 4–7 June 2013; pp. 1–8.
3. Geng, X.; Zhou, Z.-H.; Zhang, Y.; Li, G.; Dai, H. Learning from facial aging patterns for automatic age estimation. In Proceedings of the ACM International Conference on Multimedia, Santa Barbara, CA, USA, 23–27 October 2006; pp. 307–316.
4. Lanitis, A.; Taylor, C.J.; Cootes, T.F. Toward automatic simulation of aging effects on face images. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 442–455.
5. Song, Z.; Ni, B.; Guo, D.; Sim, T.; Yan, S. Learning universal multi-view age estimator using video context. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 241–248.
6. Shan, C.; Porikli, F.; Xiang, T.; Gong, S. (Eds.) Video Analytics for Business Intelligence. In Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2012.
7. Han, H.; Otto, C.; Liu, X.; Jain, A.K. Demographic estimation from face images: Human vs. machine performance. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1148–1161.
8. Guo, G.; Mu, G.; Fu, Y.; Huang, T. Human age estimation using bio-inspired features. In Proceedings of the IEEE CVPR, Miami, FL, USA, 20–25 June 2009; pp. 112–119.
9. Ramanathan, N.; Chellappa, R.; Biswas, S. Age progression in human faces: A survey. J. Vis. Lang. Comput. 2009, 15, 3349–3361.
10. Chen, S.; Zhang, C.; Dong, M.; Le, J.; Rao, M. Using ranking-CNN for age estimation. In Proceedings of the IEEE ICCV, Venice, Italy, 22–29 October 2017; pp. 5183–5192.
11. Shen, W.; Guo, Y.; Wang, Y.; Zhao, K.; Wang, B.; Yuille, A. Deep Regression Forests for Age Estimation. In Proceedings of the IEEE CVPR, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2304–2313.
12. Niu, Z.; Zhou, M.; Wang, L.; Gao, X.; Hua, G. Ordinal regression with multiple output CNN for age estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4920–4928.
13. Ruiz, N.; Chong, E.; Rehg, J.M. Fine-grained head pose estimation without key-points. In Proceedings of the CVPR Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2074–2083.
14. Zhu, X.; Lei, Z.; Liu, X.; Shi, H.; Li, S.Z. Face alignment across large poses: A 3D solution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 146–155.
15. Chen, B.; Chen, C.; Hsu, W.H. Face recognition and retrieval using cross-age reference coding with cross-age celebrity dataset. IEEE Trans. Multimed. 2015, 17, 804–815.
16. Kwon, Y.; Lobo, N. Age classification from facial images. In Proceedings of the IEEE CVPR, Seattle, WA, USA, 21–23 June 1994; pp. 762–767.
17. Yi, D.; Lei, Z.; Li, S.Z. Age estimation by multi-scale convolutional network. In Proceedings of the IEEE ICCV, Santiago, Chile, 7–13 December 2015; pp. 144–158.
18. Rothe, R.; Timofte, R.; Gool, L.V. Deep expectation of real and apparent age from a single image without facial landmarks. Int. J. Comput. Vis. 2016, 126, 1–14.
19. Wang, F.; Han, H.; Shan, S.; Chen, X. Multi-task learning for joint prediction of heterogeneous face attributes. In Proceedings of the IEEE FG, Washington, DC, USA, 30 May–3 June 2017; pp. 173–179.
20. Han, H.; Jain, A.K.; Wang, F.; Shan, S.; Chen, X. Heterogeneous face attribute estimation: A deep multi-task learning approach. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2597–2609.
21. Ji, Z.; Lang, C.; Li, K.; Xing, J. Deep Age Estimation Model Stabilization from Images to Videos. In Proceedings of the International Conference on Pattern Recognition, Beijing, China, 20–24 August 2018.
22. Pei, W.; Dibeklioğlu, H.; Baltrušaitis, T.; Tax, D. Attended End-to-End Architecture for Age Estimation From Facial Expression Videos. IEEE Trans. Image Process. 2019, 29, 1972–1984.
23. Zhu, X.; Ramanan, D. Face detection, pose estimation, and landmark localization in the wild. In Proceedings of the IEEE CVPR, Providence, RI, USA, 16–21 June 2012; pp. 2879–2886.
24. Belhumeur, P.N.; Jacobs, D.W.; Kriegman, D.; Kumar, N. Localizing parts of faces using a consensus of exemplars. In Proceedings of the IEEE CVPR, Colorado Springs, CO, USA, 20–25 June 2011; pp. 545–552.
25. Zhou, E.; Fan, H.; Cao, Z.; Jiang, Y.; Yin, Q. Extensive facial landmark localization with coarse-to-fine convolutional network cascade. In Proceedings of the IEEE ICCVW, Sydney, NSW, Australia, 2–8 December 2013; pp. 386–391.
26. Sagonas, C.; Tzimiropoulos, G.; Zafeiriou, S.; Pantic, M. 300 faces in-the-wild challenge: The first facial landmark localization challenge. In Proceedings of the IEEE ICCVW, Sydney, NSW, Australia, 2–8 December 2013; pp. 397–403.
27. Messer, K.; Matas, J.; Kittler, J.; Luettin, J.; Maitre, G. XM2VTSDB: The extended M2VTS database. In Proceedings of the Second International Conference on Audio and Video-Based Biometric Person Authentication, Washington, DC, USA, 22–24 March 1999; Volume 964, pp. 965–966.
28. Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.
29. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. CoRR 2014, abs/1409.1556. Available online: https://arxiv.org/abs/1409.1556 (accessed on 30 September 2021).
30. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. (IJCV) 2015, 115, 211–252.
31. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012.
32. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. arXiv 2016, arXiv:1512.03385.
33. Bulat, A.; Tzimiropoulos, G. How far are we from solving the 2D & 3D face alignment problem? (and a dataset of 230,000 3D facial landmarks). In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1021–1030.
34. Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.
35. Singh, T.; Mohadikar, M.; Gite, S.; Patil, S.; Pradhan, B.; Alamri, A. Attention Span Prediction Using Head-Pose Estimation With Deep Neural Networks. IEEE Access 2021, 9, 142632–142643.

| Methods | Yaw | Pitch | Roll | Average |
|---|---|---|---|---|
| Dlib [34] | 23.153 | 13.633 | 10.545 | 15.777 |
| Fan [33] | 6.358 | 12.277 | 8.714 | 9.116 |
| CPAM [35] | 1.479 | 1.804 | 1.869 | 1.697 |
| Multiloss CNN | 6.470 | 6.559 | 5.436 | 6.155 |
| Ground truth landmarks | 5.924 | 11.756 | 8.271 | 8.651 |

| Subset | AFAD | CACD |
|---|---|---|
| Frontal | 3.73 | 4.59 |
| Nonfrontal | 4.97 | 5.65 |

| Threshold (Degree) | AFAD MAE | AFAD Number | CACD MAE | CACD Number |
|---|---|---|---|---|
| 50 | 3.97 | 59,173 | 4.87 | 18,023 |
| 40 | 3.84 | 57,232 | 4.70 | 16,842 |
| 30 | 3.73 | 53,983 | 4.59 | 15,145 |
| 20 | 3.72 | 36,748 | 4.58 | 10,398 |
| 10 | 3.71 | 18,753 | 4.58 | 7,569 |
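The threshold comparison above can be illustrated with a minimal sketch. It assumes, hypothetically, that a face is kept when the absolute value of each of its yaw, pitch, and roll angles is at or below the threshold, and that MAE is then computed over the kept faces only. The selection rule, the function names `is_frontal` and `mae_by_threshold`, and the toy samples are all illustrative assumptions, not the authors' implementation or data.

```python
# Sketch only: the selection rule and all names below are assumptions.

def is_frontal(yaw, pitch, roll, threshold_deg):
    """Assumed rule: keep a face if every pose angle lies within +/- threshold_deg."""
    return all(abs(angle) <= threshold_deg for angle in (yaw, pitch, roll))


def mae_by_threshold(samples, thresholds):
    """Return {threshold: (MAE, number of kept samples)}.

    samples: iterable of (predicted_age, true_age, yaw, pitch, roll) tuples,
    with angles in degrees. MAE is None when no sample passes the threshold.
    """
    results = {}
    for t in thresholds:
        errors = [
            abs(pred - true)
            for pred, true, yaw, pitch, roll in samples
            if is_frontal(yaw, pitch, roll, t)
        ]
        mae = sum(errors) / len(errors) if errors else None
        results[t] = (mae, len(errors))
    return results


# Toy samples (made up for illustration): (predicted age, true age, yaw, pitch, roll)
toy_samples = [
    (27, 25, 5, 2, 0),
    (29, 30, -15, 8, 3),
    (45, 40, 35, -10, 4),
    (30, 22, 60, 5, -2),
]

for threshold, (mae, count) in mae_by_threshold(toy_samples, [50, 30, 10]).items():
    print(threshold, mae, count)
```

Under this assumed rule, a tighter threshold keeps fewer samples, which mirrors the shrinking "Number" columns in the table; whether MAE improves depends on how strongly pose affects the age estimator.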

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Zhang, B.; Bao, Y. Age Estimation of Faces in Videos Using Head Pose Estimation and Convolutional Neural Networks. Sensors 2022, 22, 4171. https://doi.org/10.3390/s22114171
