Comparing UAV-Based Technologies and RGB-D Reconstruction Methods for Plant Height and Biomass Monitoring on Grass Ley

Abstract

1. Introduction

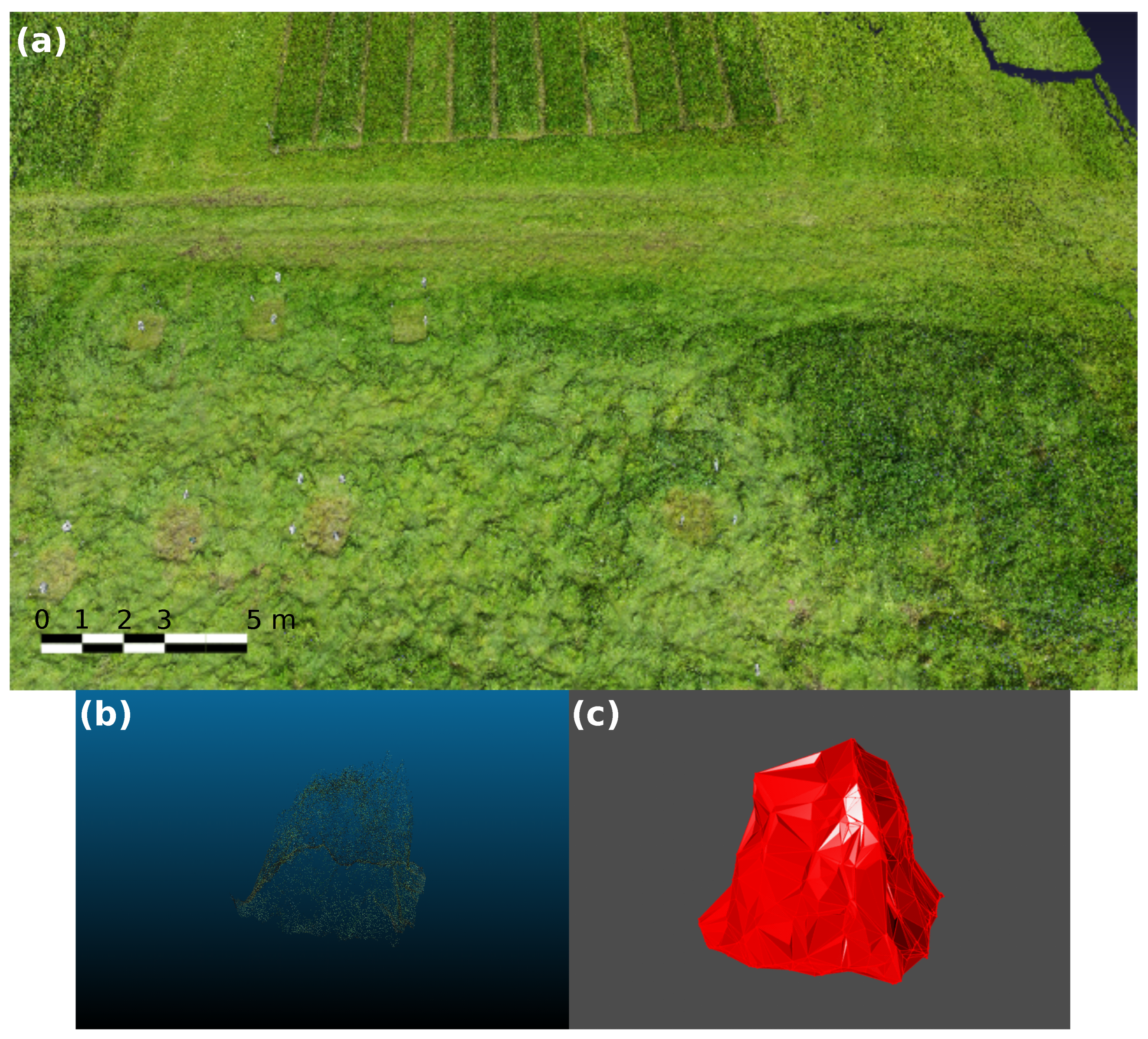

2. Materials and Methods

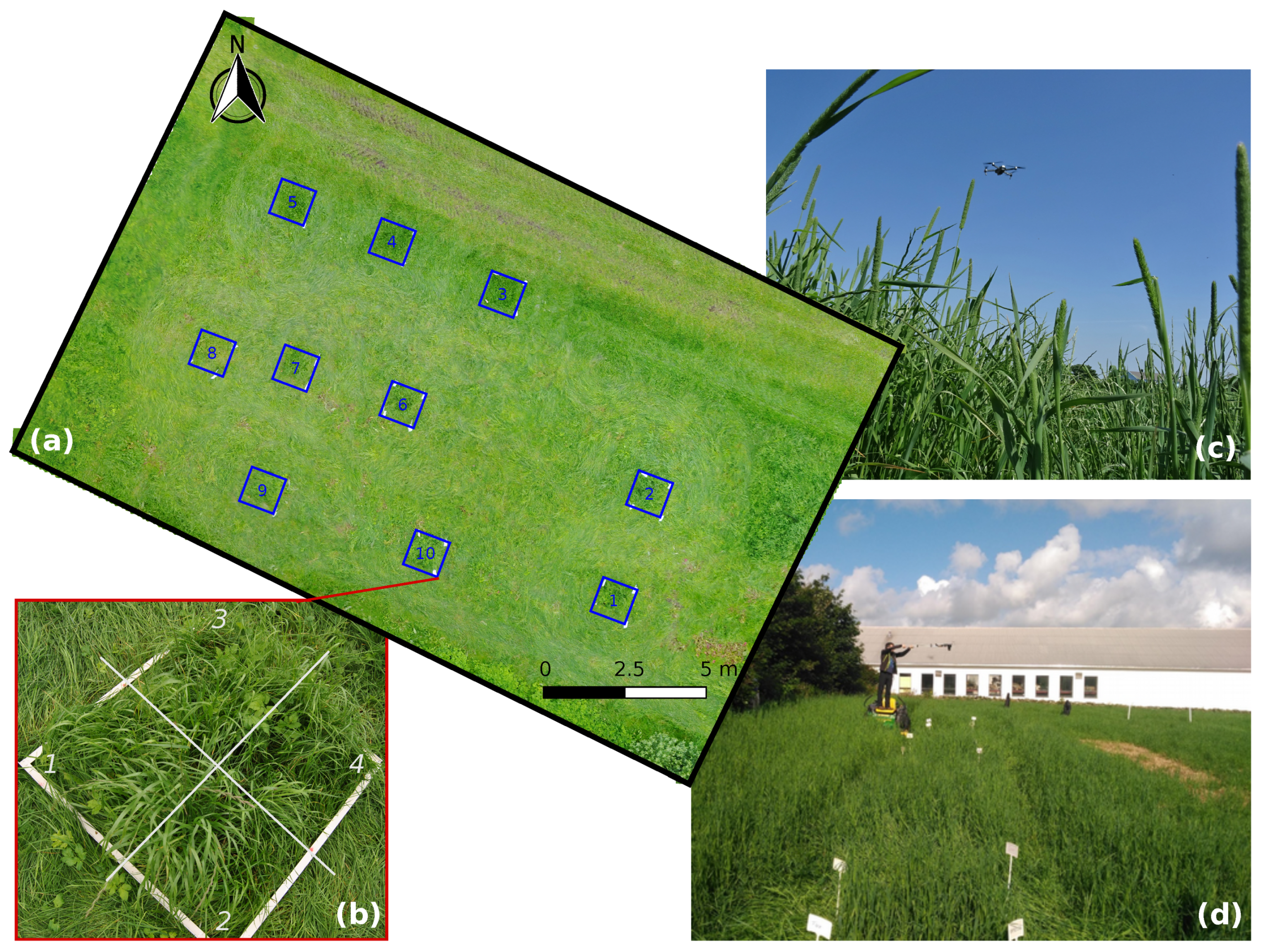

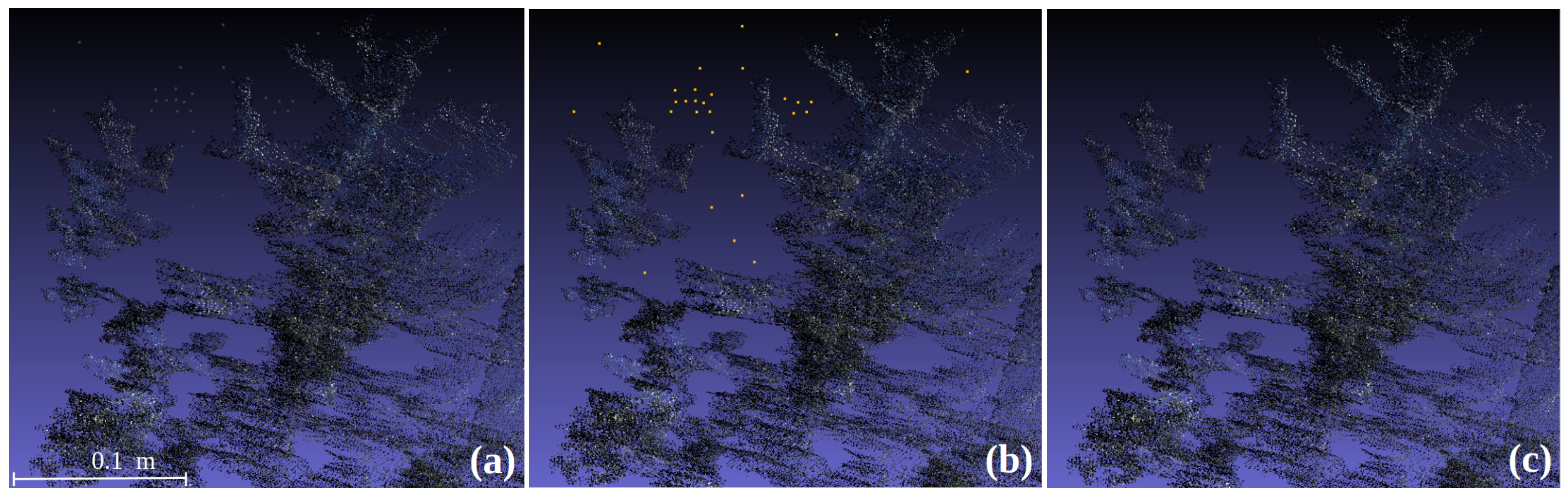

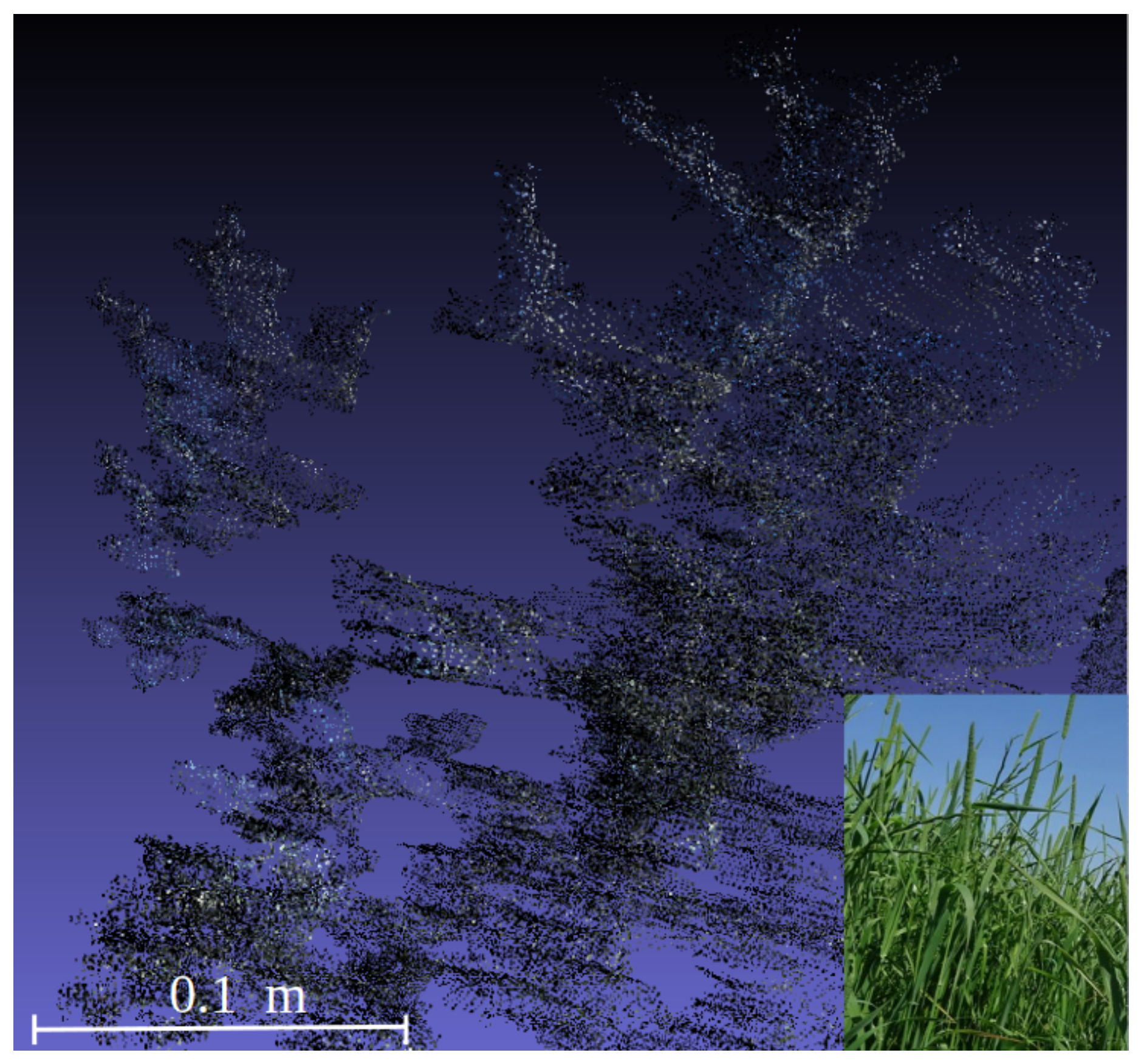

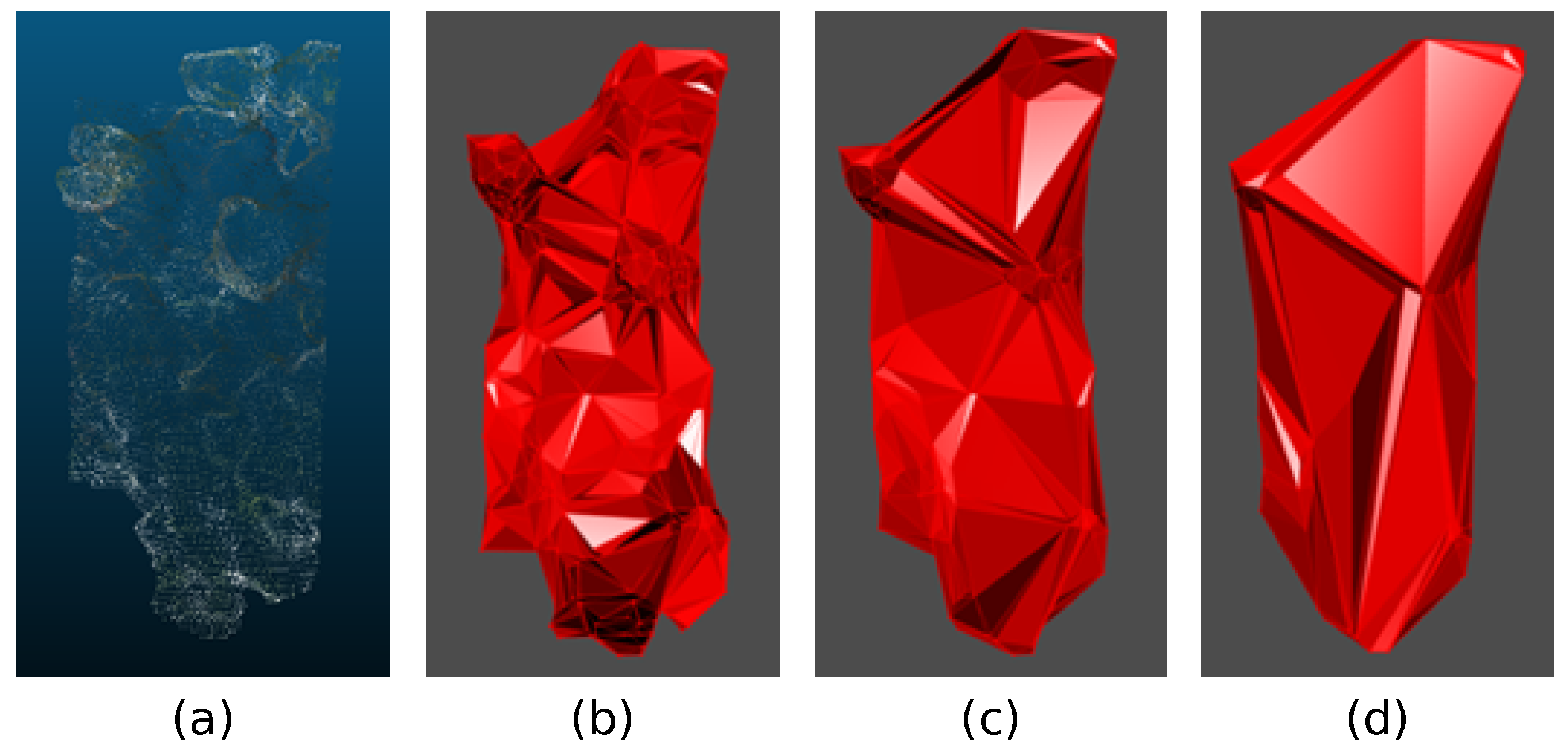

2.1. Experiments and Modeling Systems

2.2. Statistical Analysis

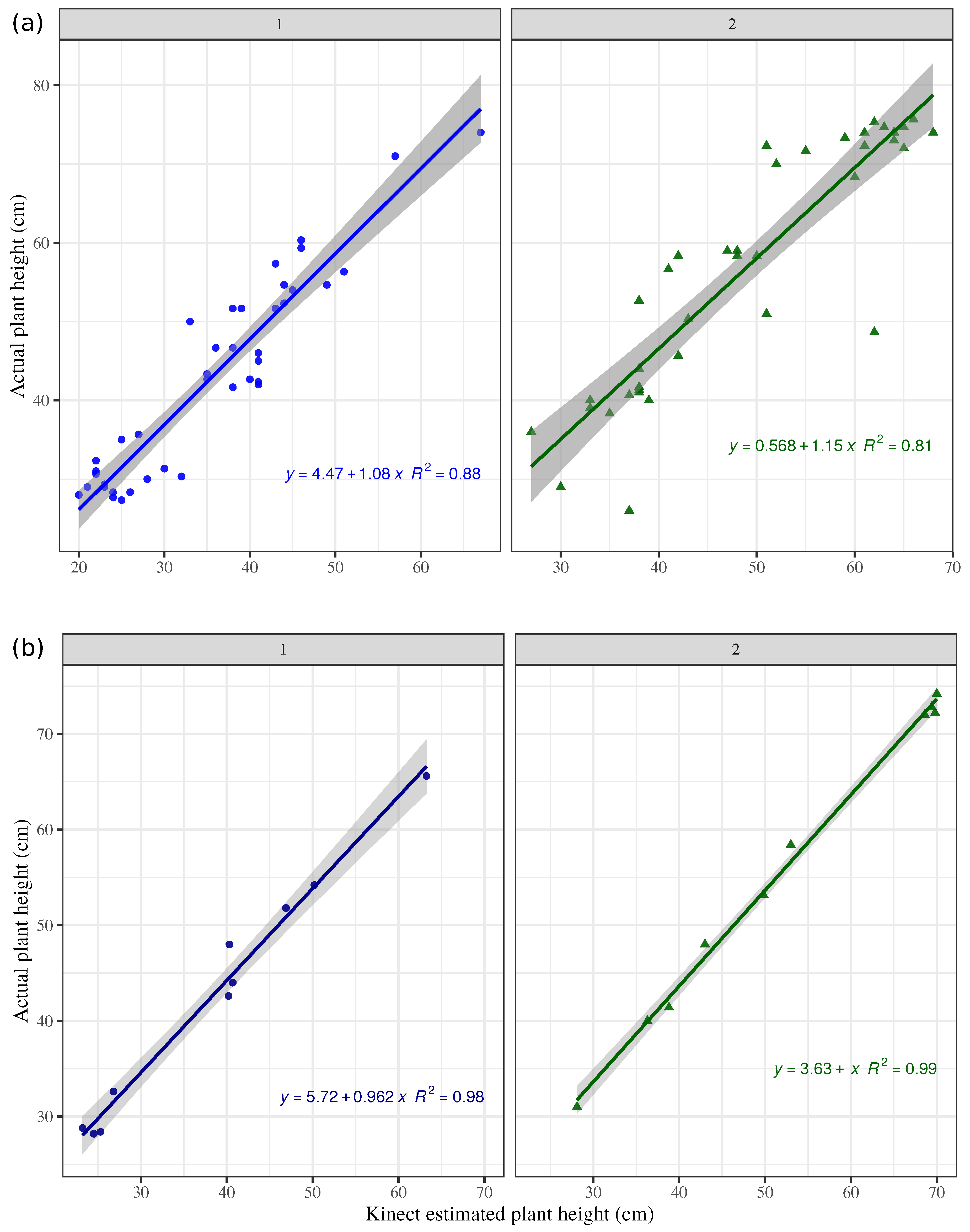

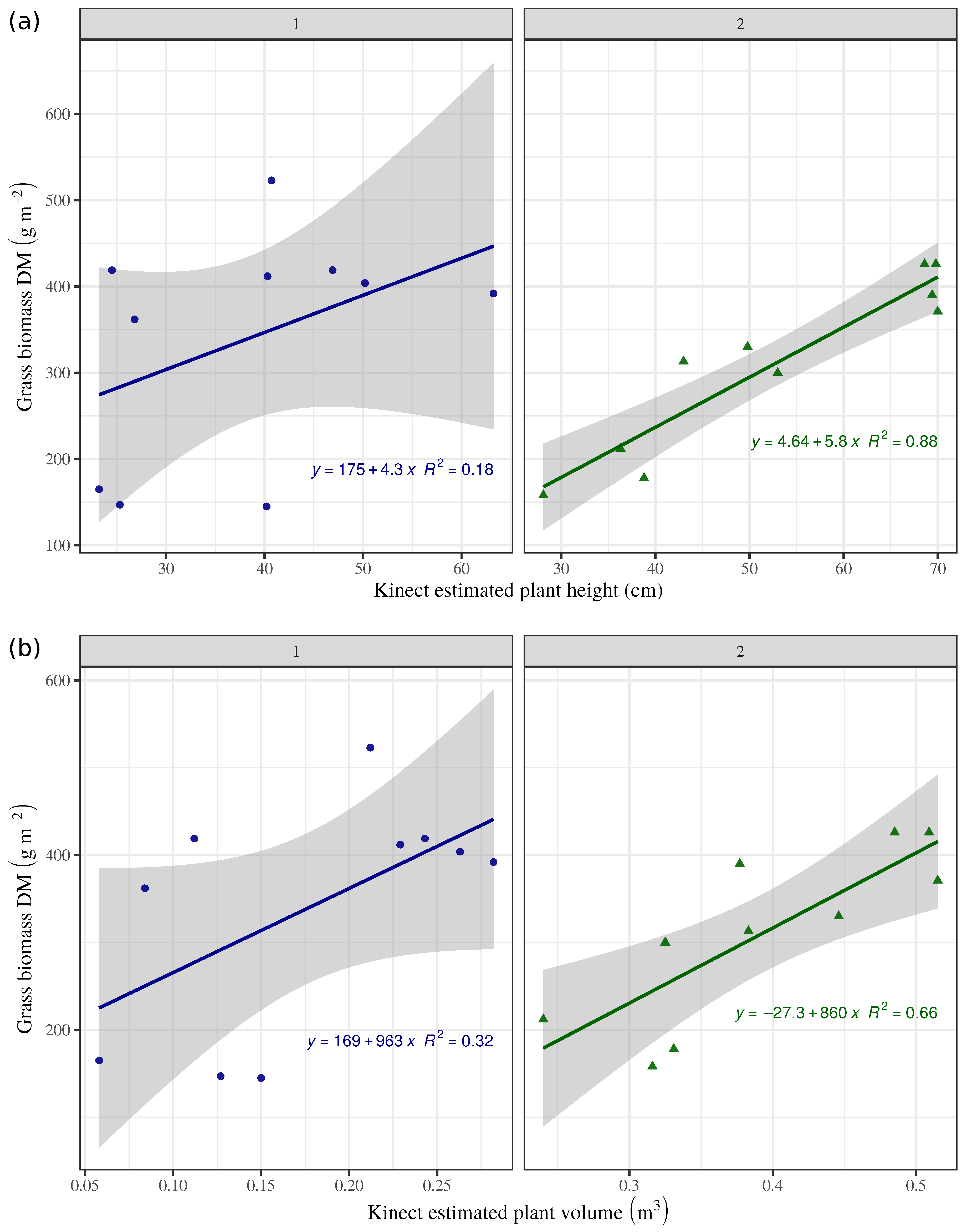

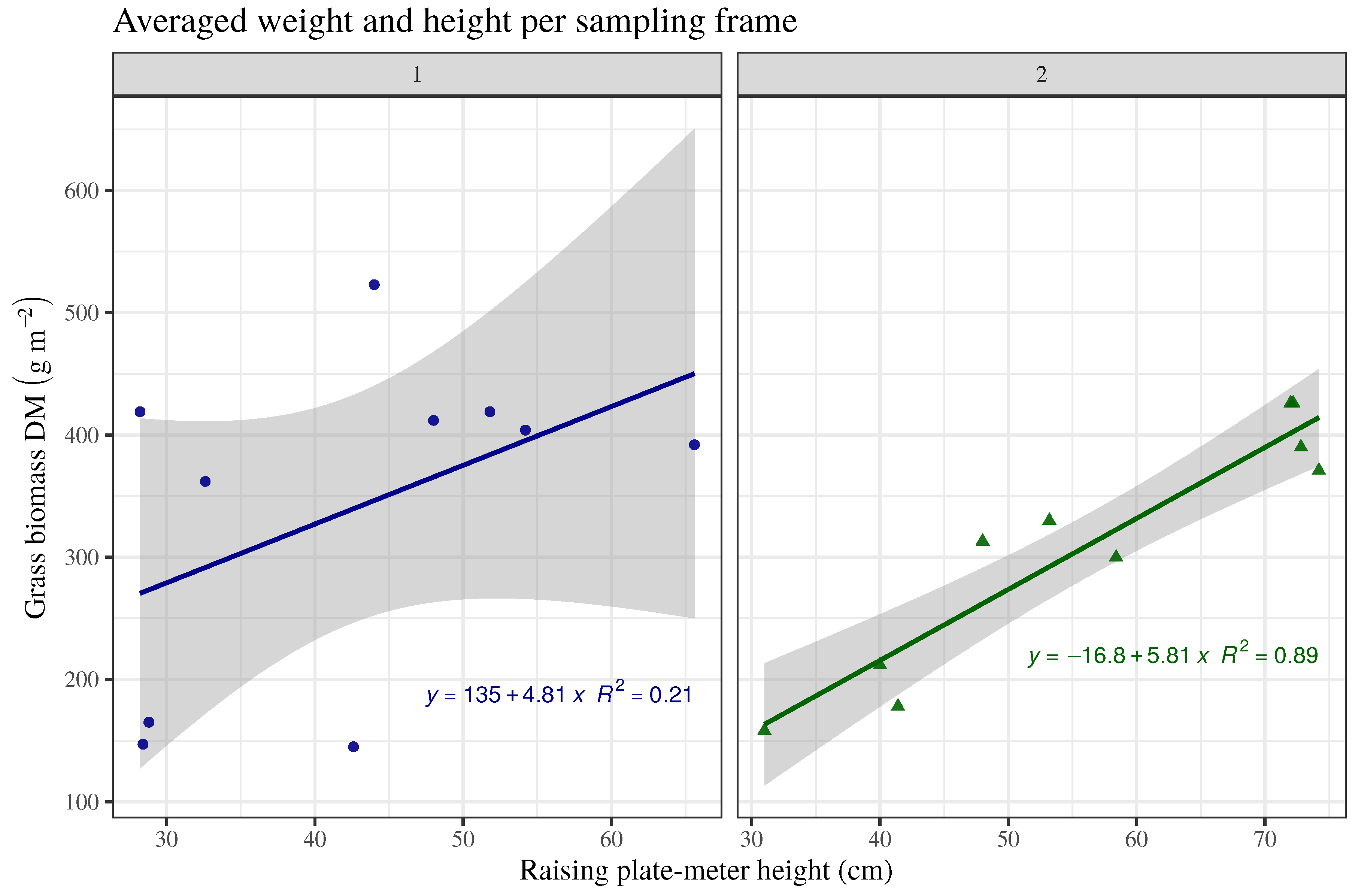

3. Results and Discussion

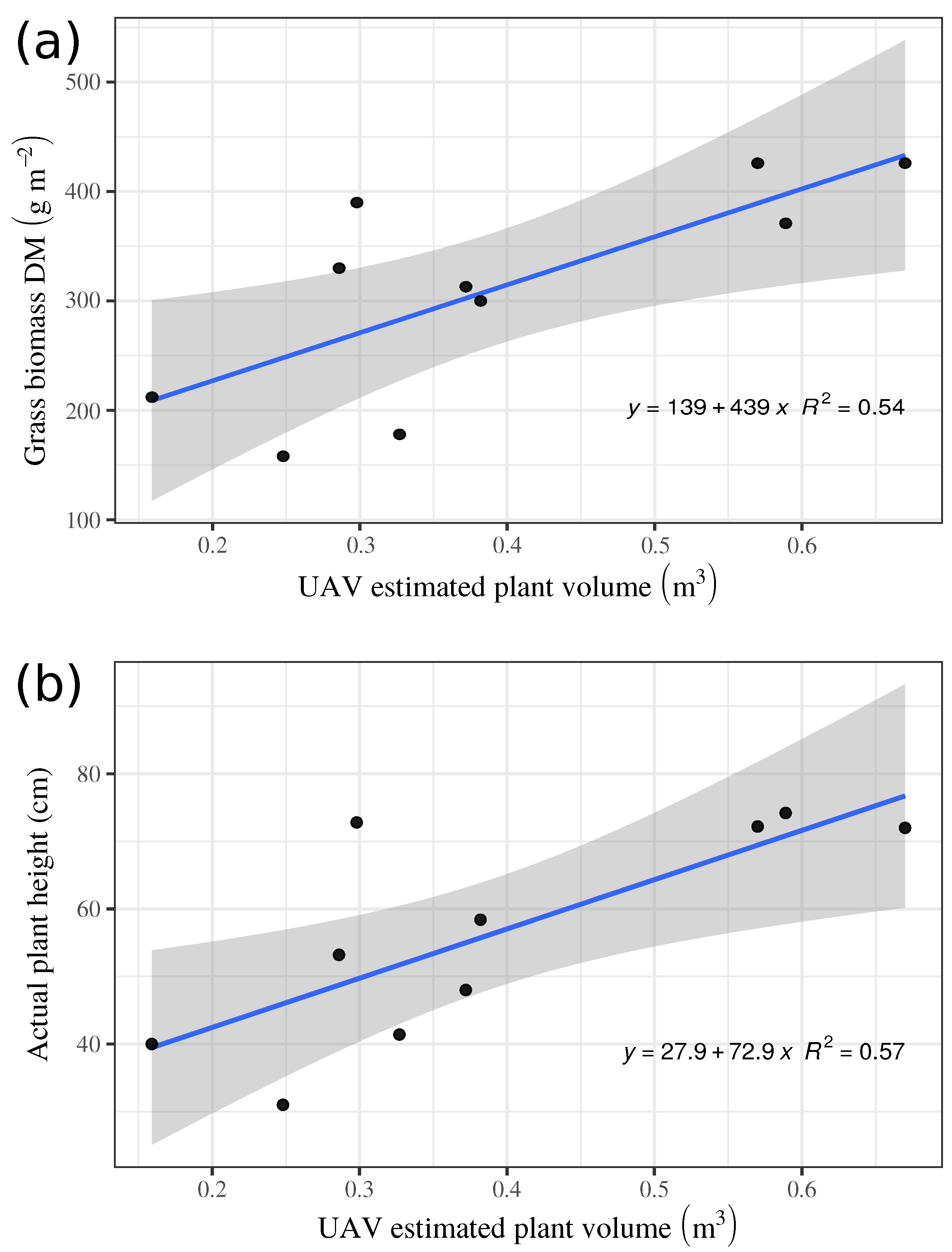

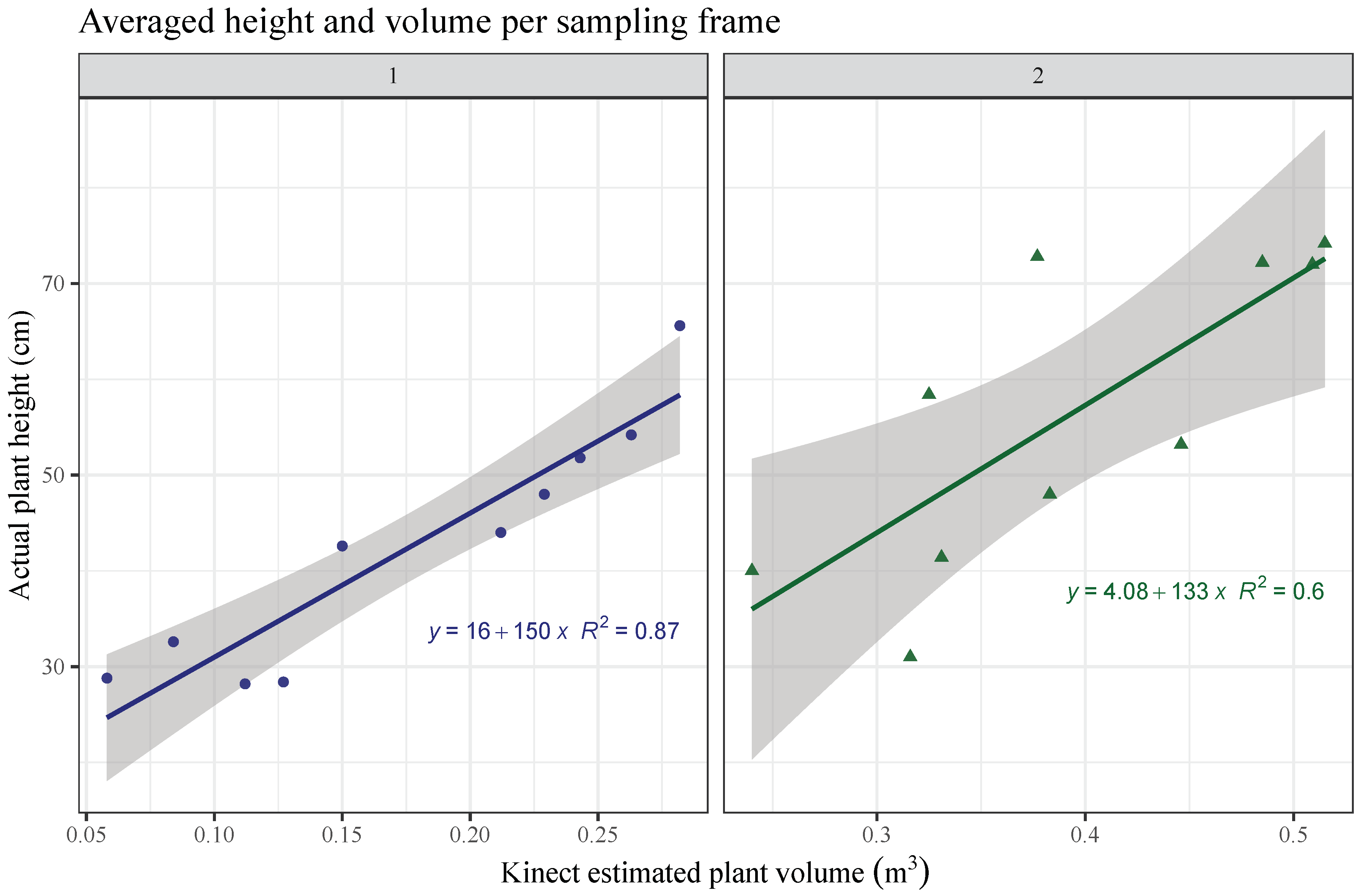

Plant Height, Volume and Biomass

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rueda-Ayala, V.P.; Peña, J.M.; Höglind, M.; Bengochea-Guevara, J.M.; Andújar, D. Comparing UAV-Based Technologies and RGB-D Reconstruction Methods for Plant Height and Biomass Monitoring on Grass Ley. Sensors 2019, 19, 535. https://doi.org/10.3390/s19030535
