Developing a Machine Learning-Based Software Fault Prediction Model Using the Improved Whale Optimization Algorithm †

Abstract

1. Introduction

2. Review of Related Work

3. Proposed Work

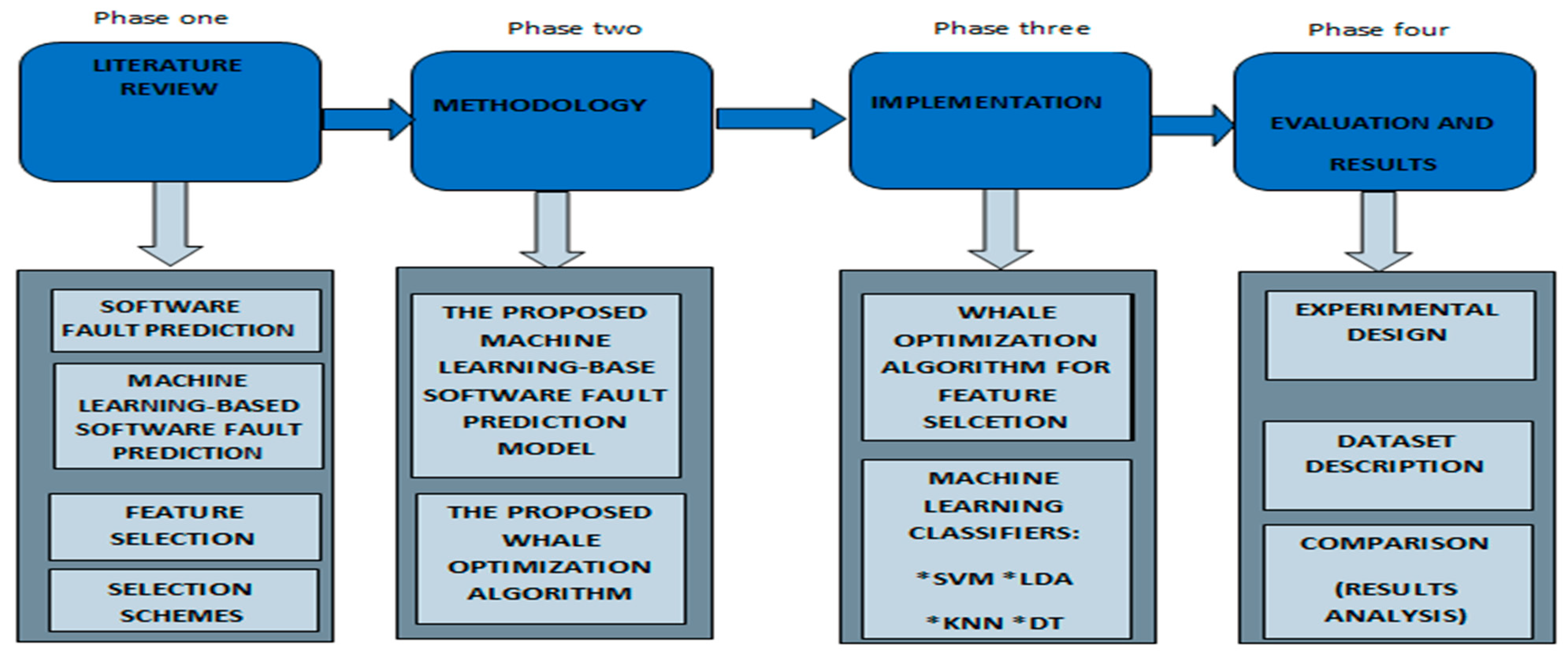

3.1. The Research Workflow

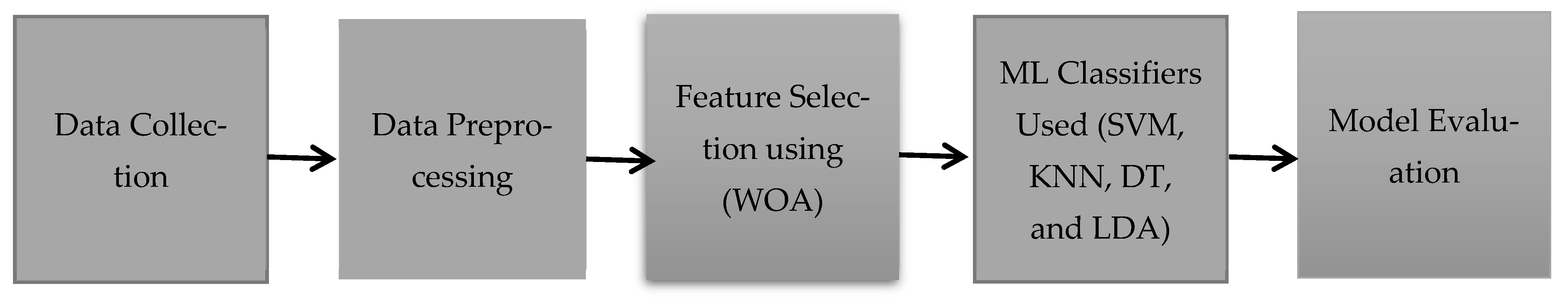

3.2. The Proposed ML-Based SFP Model

3.3. The Proposed Enhanced Whale Optimization Algorithm

- The population is sorted based on each individual’s evaluation scores;

- The poorest-performing fraction of the population is removed;

- The eliminated individuals are replaced by offspring of the top-performing fraction: each of the best individuals creates one offspring, which takes the place of one of the previously removed, lower-performing individuals.
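The three steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation. It assumes that higher scores are better, that the removed fraction is at most half of the population, and that individuals are binary feature masks. The names `truncation_step`, `flip_one_bit`, and the default `fraction=0.25` are hypothetical, and the source does not say how an offspring is derived from its parent, so a plain copy is used unless a mutation function is supplied.

```python
import random


def flip_one_bit(mask, rng):
    """Hypothetical offspring operator: copy the parent and flip one random bit."""
    child = list(mask)
    j = rng.randrange(len(child))
    child[j] = 1 - child[j]
    return child


def truncation_step(population, scores, fraction=0.25, make_offspring=None, rng=None):
    """Sort, drop the worst fraction, and refill with offspring of the best.

    Assumptions (not stated in the source): higher score is better and
    fraction <= 0.5, so every parent is itself among the survivors.
    """
    if not 0.0 <= fraction <= 0.5:
        raise ValueError("fraction must lie in [0, 0.5]")
    rng = rng or random.Random(0)
    n = len(population)
    k = int(n * fraction)  # number of individuals removed and re-created

    # Step 1: sort the population by evaluation score, best first.
    order = sorted(range(n), key=lambda i: scores[i], reverse=True)
    ranked = [population[i] for i in order]

    # Step 2: remove the poorest-performing fraction.
    survivors = ranked[: n - k]

    # Step 3: each of the k best individuals creates exactly one offspring,
    # and each offspring fills one of the k vacated slots.
    parents = ranked[:k]
    if make_offspring is None:
        offspring = [list(p) for p in parents]
    else:
        offspring = [make_offspring(p, rng) for p in parents]
    return survivors + offspring
```

The population size stays constant across the step, because exactly as many offspring are created as individuals are removed. Passing `make_offspring=flip_one_bit` gives each offspring a one-bit difference from its parent, which is one possible way to avoid exact duplicates.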

4. Discussion of Results

4.1. Implementation Environment

4.2. Proposed Model Performance

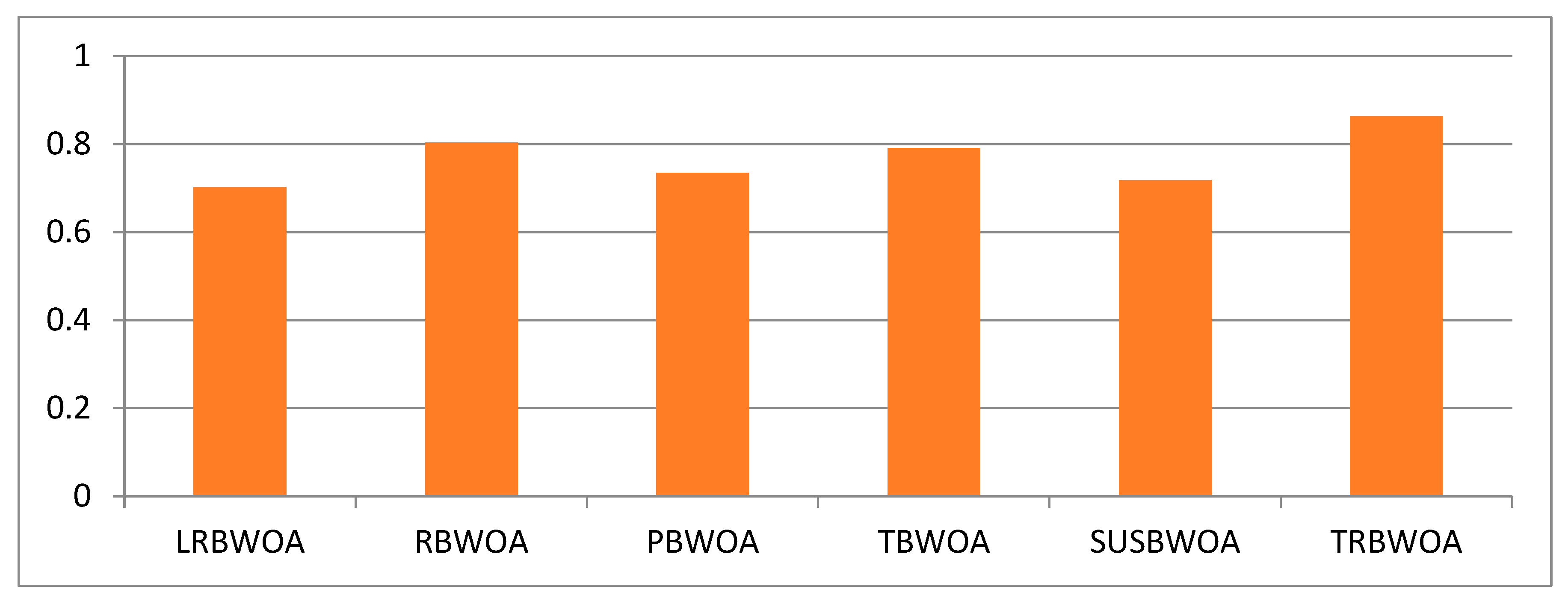

4.3. Results Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Rathore, S.S.; Kumar, S. A study on software fault prediction techniques. Artif. Intell. Rev. 2019, 51, 255–327.

- Singh, P.D.; Chug, A. Software defect prediction analysis using machine learning algorithms. In Proceedings of the 2017 7th International Conference on Cloud Computing, Data Science & Engineering-Confluence, Noida, India, 12–13 January 2017.

- Cai, J.; Luo, J.; Wang, S.; Yang, S. Feature selection in machine learning: A new perspective. Neurocomputing 2018, 300, 70–79.

- Hassouneh, Y.; Turabieh, H.; Thaher, T.; Tumar, I.; Chantar, H.; Too, J. Boosted whale optimization algorithm with natural selection operators for software fault prediction. IEEE Access 2021, 9, 14239–14258.

- Heidari, A.A.; Aljarah, I.; Faris, H.; Chen, H.; Luo, J.; Mirjalili, S. An enhanced associative learning-based exploratory whale optimizer for global optimization. Neural Comput. Appl. 2020, 32, 5185–5211.

- Bowes, D.; Hall, T.; Petrić, J. Software defect prediction: Do different classifiers find the same defects? Softw. Qual. J. 2018, 26, 525–552.

- Pandey, S.K.; Mishra, R.B.; Tripathi, A.K. Machine learning based methods for software fault prediction: A survey. Expert Syst. Appl. 2021, 172, 114595.

- Suryadi, A. Integration of feature selection with data level approach for software defect prediction. Sink. J. Dan Penelit. Tek. Inform. 2019, 4, 51–57.

- Bahaweres, R.B.; Suroso, A.I.; Hutomo, A.W.; Solihin, I.P.; Hermadi, I.; Arkeman, Y. Tackling feature selection problems with genetic algorithms in software defect prediction for optimization. In Proceedings of the 2020 International Conference on Informatics, Multimedia, Cyber and Information System (ICIMCIS), Jakarta, Indonesia, 19–20 November 2020.

- Alkhasawneh, M.S. Software Defect Prediction through Neural Network and Feature Selections. Appl. Comput. Intell. Soft Comput. 2022, 2022, 2581832.

- Chantar, H.; Mafarja, M.; Alsawalqah, H.; Heidari, A.A.; Aljarah, I.; Faris, H. Feature selection using binary grey wolf optimizer with elite-based crossover for Arabic text classification. Neural Comput. Appl. 2020, 32, 12201–12220.

- Goyal, S. Software fault prediction using evolving populations with mathematical diversification. Soft Comput. 2022, 26, 13999–14020.

- Shrivastava, D.; Sanyal, S.; Maji, A.K.; Kandar, D. Bone cancer detection using machine learning techniques. In Smart Healthcare for Disease Diagnosis and Prevention; Elsevier: Amsterdam, The Netherlands, 2020; pp. 175–183.

- Khurma, R.A.; Alsawalqah, H.; Aljarah, I.; Elaziz, M.A.; Damaševičius, R. An enhanced evolutionary software defect prediction method using island moth flame optimization. Mathematics 2021, 9, 1722.

| Dataset | Version | # Instances | # Defective Instances | Fraction of Defective Instances |
|---|---|---|---|---|
| Ant | 1.7 | 745 | 166 | 0.223 |
| Camel | 1.2 | 608 | 216 | 0.355 |
| Camel | 1.4 | 872 | 145 | 0.166 |
| Camel | 1.6 | 965 | 188 | 0.195 |
| Jedit | 3.2 | 272 | 90 | 0.331 |
| Jedit | 4.0 | 306 | 75 | 0.245 |
| Jedit | 4.1 | 312 | 79 | 0.253 |
| Jedit | 4.2 | 367 | 48 | 0.131 |
| Log4j | 1.0 | 135 | 34 | 0.252 |
| Log4j | 1.1 | 109 | 37 | 0.339 |
| Log4j | 1.2 | 205 | 189 | 0.922 |
| Lucene | 2.0 | 195 | 91 | 0.467 |
| Lucene | 2.2 | 247 | 144 | 0.583 |
| Lucene | 2.4 | 340 | 203 | 0.597 |
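The last column of the dataset table can be reproduced from the two count columns: it is the number of defective instances divided by the total number of instances, expressed as a fraction of 1 and rounded to three decimals. The short sketch below checks this for a few rows. The helper name `defect_fraction` is hypothetical, and the counts are copied from the table.

```python
def defect_fraction(n_instances, n_defective):
    """Share of defective instances, as a fraction of 1 rounded to 3 decimals."""
    return round(n_defective / n_instances, 3)


# (dataset, version) -> (instances, defective instances), taken from the table
rows = {
    ("Ant", "1.7"): (745, 166),
    ("Camel", "1.4"): (872, 145),
    ("Jedit", "4.2"): (367, 48),
    ("Log4j", "1.2"): (205, 189),
}

for (name, version), (total, defective) in rows.items():
    print(f"{name}-{version}: {defect_fraction(total, defective)}")
```

The fractions range from about 0.13 (Jedit 4.2) to about 0.92 (Log4j 1.2), so the class balance differs considerably between datasets.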

| Datasets | Accuracy | Precision | Recall | F1 Score | AUC |
|---|---|---|---|---|---|
| Ant-1.7 | 0.839 | 0.650 | 0.533 | 0.519 | 0.687 |
| Camel-1.2 | 0.706 | 0.600 | 0.102 | 0.239 | 0.604 |
| Camel-1.4 | 0.817 | 0.04 | 0.05 | 0.561 | 0.493 |
| Camel-1.6 | 0.877 | 0.433 | 0.224 | 0.440 | 0.605 |
| Jedit-3.2 | 0.763 | 0.900 | 0.528 | 0.680 | 0.699 |
| Jedit-4.0 | 0.922 | 0.800 | 0.386 | 0.421 | 0.734 |
| Jedit-4.1 | 0.730 | 0.875 | 0.486 | 0.513 | 0.819 |
| Jedit-4.2 | 0.978 | 0.0 | 0.500 | 0.500 | 0.892 |
| Log4j-1.0 | 0.740 | 0.433 | 0.661 | 0.322 | 0.736 |
| Log4j-1.1 | 0.829 | 0.725 | 0.625 | 0.625 | 0.705 |
| Log4j-1.2 | 0.902 | 0.902 | 0.950 | 0.948 | 0.500 |
| Lucene-2.0 | 0.767 | 0.300 | 0.873 | 0.518 | 0.676 |
| Lucene-2.2 | 0.580 | 0.650 | 0.850 | 0.701 | 0.850 |
| Lucene-2.4 | 0.806 | 0.588 | 0.753 | 0.740 | 0.849 |
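For reference, the threshold-based metrics reported in these tables (accuracy, precision, recall, F1 score) have standard definitions in terms of confusion-matrix counts. The sketch below states those definitions in Python. It is an illustration only: the source does not say how the metrics were computed or averaged (for example, over folds or repeated runs), so reported rows need not satisfy these identities exactly. The function name and the example counts are hypothetical, and AUC is omitted because it requires ranked prediction scores rather than counts.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard metrics from confusion-matrix counts (defective = positive class)."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    # Precision: share of predicted-defective modules that really are defective.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of truly defective modules that were found.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for illustration only, not taken from the paper.
metrics = classification_metrics(tp=8, fp=2, tn=85, fn=5)
print(metrics)
```

Guarding each division against a zero denominator matters on imbalanced data, where a model may predict no defective modules at all and precision would otherwise be undefined.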

| Datasets | Accuracy | Precision | Recall | F1 Score | AUC |
|---|---|---|---|---|---|
| Ant-1.7 | 0.832 | 0.581 | 0.600 | 0.533 | 0.745 |
| Camel-1.2 | 0.715 | 0.656 | 0.504 | 0.275 | 0.623 |
| Camel-1.4 | 0.834 | 1.0 | 0.333 | 0.365 | 0.517 |
| Camel-1.6 | 0.878 | 0.333 | 0.438 | 0.244 | 0.605 |
| Jedit-3.2 | 0.781 | 0.946 | 0.523 | 0.647 | 0.863 |
| Jedit-4.0 | 0.874 | 0.500 | 0.387 | 0.463 | 0.850 |
| Jedit-4.1 | 0.777 | 0.827 | 0.481 | 0.540 | 0.732 |
| Jedit-4.2 | 0.846 | 1.0 | 0.450 | 0.500 | 0.801 |
| Log4j-1.0 | 0.919 | 0.767 | 0.725 | 0.400 | 0.766 |
| Log4j-1.1 | 0.815 | 0.950 | 0.777 | 0.867 | 0.625 |
| Log4j-1.2 | 0.864 | 0.902 | 0.433 | 0.949 | 0.743 |
| Lucene-2.0 | 0.902 | 0.444 | 0.876 | 0.381 | 0.812 |
| Lucene-2.2 | 0.766 | 0.756 | 0.629 | 0.812 | 0.600 |
| Lucene-2.4 | 0.618 | 0.657 | 0.753 | 0.690 | 0.749 |

| Datasets | LRBWOA | RBWOA | PBWOA | TBWOA | SUSBWOA | TRWOA |
|---|---|---|---|---|---|---|
| Ant-1.7 | 0.690 | 0.687 | 0.664 | 0.688 | 0.664 | 0.987 |
| Camel-1.2 | 0.635 | 0.609 | 0.604 | 0.606 | 0.612 | 0.608 |
| Camel-1.4 | 0.531 | 0.585 | 0.582 | 0.587 | 0.589 | 0.555 |
| Camel-1.6 | 0.593 | 0.575 | 0.575 | 0.567 | 0.576 | 0.679 |
| Jedit-3.2 | 0.803 | 0.744 | 0.735 | 0.736 | 0.722 | 0.955 |
| Jedit-4.0 | 0.569 | 0.560 | 0.571 | 0.550 | 0.567 | 0.655 |
| Jedit-4.1 | 0.569 | 0.641 | 0.634 | 0.625 | 0.620 | 0.855 |
| Jedit-4.2 | 0.782 | 0.718 | 0.701 | 0.725 | 0.730 | 0.665 |
| Log4j-1.0 | 0.777 | 0.644 | 0.640 | 0.653 | 0.657 | 0.638 |
| Log4j-1.1 | 0.562 | 0.605 | 0.713 | 0.704 | 0.712 | 0.777 |
| Log4j-1.2 | 0.597 | 0.486 | 0.643 | 0.660 | 0.627 | 0.925 |
| Lucene-2.0 | 0.504 | 0.560 | 0.499 | 0.496 | 0.762 | 0.751 |
| Lucene-2.2 | 0.533 | 0.650 | 0.528 | 0.551 | 0.507 | 0.654 |
| Lucene-2.4 | 0.642 | 0.634 | 0.615 | 0.634 | 0.536 | 0.761 |

| Datasets | LRBWOA | RBWOA | PBWOA | TBWOA | SUSBWOA | TRBWOA |
|---|---|---|---|---|---|---|
| Ant-1.7 | 0.657 | 0.657 | 0.682 | 0.691 | 0.704 | 0.745 |
| Camel-1.2 | 0.524 | 0.582 | 0.519 | 0.525 | 0.517 | 0.623 |
| Camel-1.4 | 0.555 | 0.499 | 0.511 | 0.517 | 0.511 | 0.517 |
| Camel-1.6 | 0.474 | 0.556 | 0.496 | 0.505 | 0.505 | 0.605 |
| Jedit-3.2 | 0.703 | 0.780 | 0.735 | 0.756 | 0.719 | 0.863 |
| Jedit-4.0 | 0.544 | 0.569 | 0.552 | 0.634 | 0.557 | 0.850 |
| Jedit-4.1 | 0.619 | 0.654 | 0.650 | 0.500 | 0.647 | 0.732 |
| Jedit-4.2 | 0.679 | 0.804 | 0.662 | 0.669 | 0.689 | 0.801 |
| Log4j-1.0 | 0.488 | 0.761 | 0.607 | 0.679 | 0.604 | 0.766 |
| Log4j-1.1 | 0.660 | 0.633 | 0.691 | 0.516 | 0.677 | 0.625 |
| Log4j-1.2 | 0.625 | 0.500 | 0.520 | 0.516 | 0.524 | 0.743 |
| Lucene-2.0 | 0.546 | 0.601 | 0.545 | 0.531 | 0.551 | 0.812 |
| Lucene-2.2 | 0.640 | 0.476 | 0.593 | 0.715 | 0.586 | 0.600 |
| Lucene-2.4 | 0.639 | 0.619 | 0.573 | 0.792 | 0.583 | 0.749 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abubakar, H.; Umar, K.; Auwal, R.; Muhammad, K.; Yusuf, L. Developing a Machine Learning-Based Software Fault Prediction Model Using the Improved Whale Optimization Algorithm. Eng. Proc. 2023, 56, 334. https://doi.org/10.3390/ASEC2023-16307
