Managing the Performance of Asset Acquisition and Operation with Decision Support Tools

Abstract

1. Introduction

2. Background

Decision Support Tools

3. Research Approach

3.1. Case Study: National Grid Electricity Transmission (NGET)

3.2. Defining the Approach Requirements

4. The DST Performance Management Approach

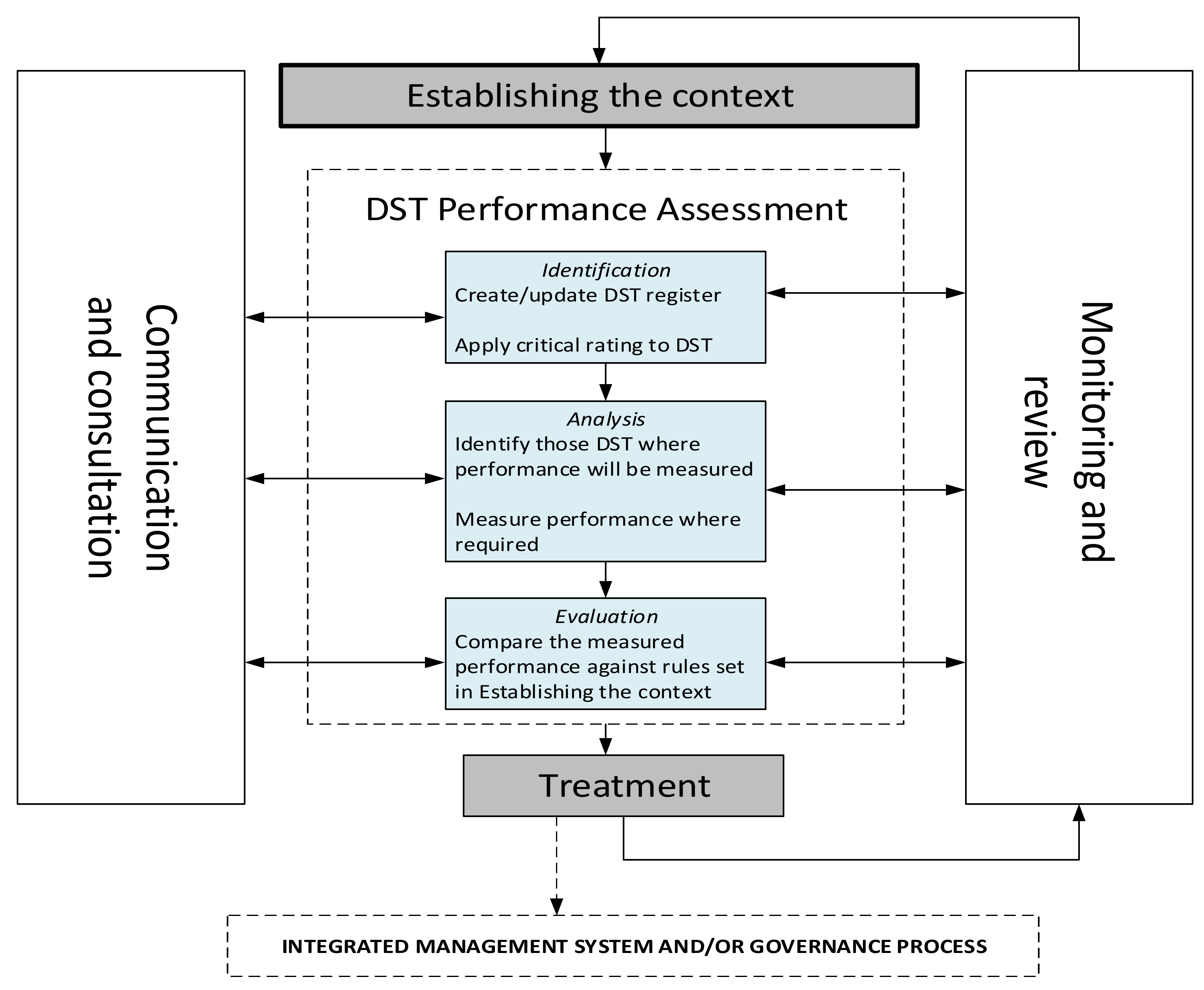

The DST Performance Management Process

- Establishing how critical a DST is;

- Determining which DSTs shall have their performance measured;

- Evaluating DST performance assessments;

- Determining the treatment to apply given a certain performance assessment outcome.
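The four steps listed above can be read as a simple pipeline. The following is a minimal sketch of that reading, not the authors' method: the 1 to 5 criticality scale, the 0 to 1 performance score, the thresholds, the treatment labels, and every name (`DST`, `select_for_measurement`, `evaluate`, `manage`) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# All scales, thresholds and treatment labels below are hypothetical
# illustrations; the source does not specify them.


@dataclass
class DST:
    name: str
    criticality: int                      # step 1: assumed scale, 1 (low) to 5 (high)
    performance: Optional[float] = None   # assumed score in [0, 1]; None = not yet assessed


def select_for_measurement(tools, min_criticality=3):
    """Step 2: keep only the tools critical enough to warrant measurement."""
    return [t for t in tools if t.criticality >= min_criticality]


def evaluate(tool, acceptable=0.8, tolerable=0.5):
    """Step 3: classify an assessed performance score against assumed thresholds."""
    if tool.performance is None:
        return "not assessed"
    if tool.performance >= acceptable:
        return "acceptable"
    if tool.performance >= tolerable:
        return "tolerable"
    return "unacceptable"


# Step 4: assumed mapping from evaluation outcome to treatment.
TREATMENTS = {
    "acceptable": "continue monitoring",
    "tolerable": "plan improvement",
    "unacceptable": "restrict use pending review",
    "not assessed": "schedule assessment",
}


def manage(tools):
    """Run steps 2 to 4 and return {tool name: (evaluation, treatment)}."""
    results = {}
    for tool in select_for_measurement(tools):
        outcome = evaluate(tool)
        results[tool.name] = (outcome, TREATMENTS[outcome])
    return results


if __name__ == "__main__":
    portfolio = [
        DST("asset-risk-model", criticality=5, performance=0.62),
        DST("spares-forecast", criticality=2, performance=0.90),
        DST("replacement-planner", criticality=4),
    ]
    for name, (outcome, treatment) in manage(portfolio).items():
        print(f"{name}: {outcome} -> {treatment}")
```

In this sketch a low-criticality tool is never measured, which mirrors the idea that performance measurement effort is directed by criticality. How criticality and performance would actually be scored is left open here.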

5. Evaluation

- Are there any stakeholders who have been missed/should not be included?

- Are there any stakeholder requirements that have been missed/should not be included?

- Are there any approach requirements which are missing/should not be included?

- Does the process appear to satisfy the ten approach requirements?

6. Conclusions

7. Future Work

- The aim of this research was to create a conceptual approach for measuring DST performance which is both rigorous and practical. The logic and usability of the process have been validated by NGET subject matter experts. However, it is accepted that what may be logical and usable in theory may not be so in practice. NGET have declared an intention to implement the process within their organisation [69]. Future research should conduct field-test validation studies of both the process and its underpinning techniques, within NGET and in other organisations;

- The transferability of the approach to the water sector was evaluated as part of the programme of work [13]. Although this provided some evidence of transferability, further studies across a wider sample of sectors and organisations are required;

- Key to the uptake of the approach by industry is being able to demonstrate that it has value. In this regard, methods and studies to assess the whole life cost versus the benefit of DST performance management are required.

Author Contributions

Funding

Conflicts of Interest

References

- PricewaterhouseCoopers LLP; Oxford Economics. Capital Project and Infrastructure Spending Outlook to 2025. 2015. Available online: https://www.gihub.org/resources/publications/capital-project-and-infrastructure-spending-outlook-to-2025/ (accessed on 7 June 2020).

- McKinsey Global Institute. Infrastructure Productivity: How to Save $1 Trillion a Year; McKinsey Global Institute: New York, NY, USA, 2013.

- Alavi, M.; Joachimsthaler, E.A. Revisiting DSS implementation research: A meta-analysis of the literature and suggestions for researchers. MIS Q. Manag. Inf. Syst. 1992, 16, 95–113.

- Finlay, P.N.; Forghani, M. A classification of success factors for decision support systems. J. Strateg. Inf. Syst. 1998, 7, 52–70.

- Hicks, B.J.; Matthews, J. The barriers to realising sustainable process improvement: A root cause analysis of paradigms for manufacturing systems improvement. Int. J. Comput. Integr. Manuf. 2010, 23, 585–602.

- JISC. Change Management. Available online: https://www.jisc.ac.uk/guides/change-management (accessed on 28 January 2016).

- Salazar, A.J.; Sawyer, S. Information Technology in Organizations and Electronic Markets; World Scientific Publishing Co: Singapore, 2007.

- Sauer, C. Why Information Systems Fail: A Case Study Approach; Alfred Waller Ltd.: Henley-on-Thames, UK, 1993.

- Streit, J.; Pizka, M. Why Software Quality Improvement Fails (and How to Succeed Nevertheless). In Proceedings of the ICSE ’11: Proceedings of the 33rd International Conference on Software Engineering, Honolulu, HI, USA, 21–28 May 2011; ACM: New York, NY, USA, 2011; pp. 726–735.

- Studer, Q. Making process improvement stick. Healthc. Financ. Manag. 2014, 68, 90–96.

- The Standish Group Report. The Chaos Report. Available online: http://www.standishgroup.com/outline (accessed on 26 January 2018).

- Van Dyk, D.J.; Pretorius, L. A Systems Thinking Approach to the Sustainability of Quality Improvement Programmes. S Afr. J. Ind. Eng. 2014, 25, 71.

- Lattanzio, S. Asset Management Decision Support Tools: A Conceptual Approach for Managing their Performance; The University of Bath: Bath, UK, 2018.

- ISO. ISO/TC 251 Managing Assets in the Context of Asset Management; International Organization for Standardization: Geneva, Switzerland, 2017.

- Van Der Lei, T.; Herder, P.; Wijnia, Y. Asset Management: The State of the Art in Europe from A Life Cycle Perspective; Springer Netherlands: Dordrecht, The Netherlands, 2012; ISBN 9789400727243.

- Zuashkiani, A.; Schoenmaker, R.; Parlikad, A.; Jafari, M. A critical examination of asset management curriculum in Europe, North America and Australia. In Proceedings of the Asset Management Conference 2014, London, UK, 27–28 November 2014.

- Too, E.G. A Framework for Strategic Infrastructure Asset Management. In Definitions, Concepts and Scope of Engineering Asset Management Engineering Asset Management Review; Springer: London, UK, 2010; Volume 1, pp. 31–62.

- Ofgem. Refocusing Ofgem’s ARM 2002 Survey to BSI-PAS 55 Certification; 2005. Available online: https://www.ofgem.gov.uk/publications-and-updates/refocusing-ofgems-arm-2002-survey-bsi-pas-55-certification (accessed on 7 June 2020).

- British Standards Institute. PAS 55; British Standards Institute: London, UK, 2008.

- BS ISO 55000 Series: 2014 Asset Management; BSI Group: London, UK, 2014.

- Papacharalampou, C.; McManus, M.; Newnes, L.B.; Green, D. Catchment metabolism: Integrating natural capital in the asset management portfolio of the water sector. J. Clean. Prod. 2017, 142, 1994–2005.

- Gibbons, P. People as Assets. In Assets; Institute of Asset Management: London, UK, 2019; pp. 9–11.

- Kaney, M. Data, data everywhere—But how do we use it? In Assets; Institute of Asset Management: London, UK, 2018; p. 8.

- BS ISO/IEC 19770-1:2017 Information Technology—IT Asset Management—Part 1: IT Asset Management Systems—Requirements. 2017. Available online: https://www.iso.org/obp/ui/#iso:std:iso-iec:19770:-1:ed-3:v1:en (accessed on 7 June 2020).

- Bhamidipati, S. Simulation framework for asset management in climate-change adaptation of transportation infrastructure. Transp. Res. Procedia 2015, 8, 17–28.

- Doorley, R.; Pakrashi, V.; Szeto, W.Y.; Ghosh, B. Designing cycle networks to maximize health, environmental, and travel time impacts: An optimization-based approach. Int. J. Sustain. Transp. 2020, 14, 361–374.

- Havelaar, M.; Jaspers, W.; Wolfert, A.; Van Nederveen, G.A.; Auping, W.L. Long-term planning within complex and dynamic infrastructure systems. In Proceedings of the Life-Cycle Analysis and Assessment in Civil Engineering: Towards an Integrated Vision: 6th International Symposium on Life-Cycle Civil Engineering (IALCCE 2018), Ghent, Belgium, 28–31 October 2018; pp. 993–998.

- Shahtaheri, Y.; Flint, M.M.; de la Garza, J.M. Sustainable Infrastructure Multi-Criteria Preference Assessment of Alternatives for Early Design. Autom. Constr. 2018, 96, 16–28.

- Ouammi, A.; Achour, Y.; Zejli, D.; Dagdougui, H. Supervisory Model Predictive Control for Optimal Energy Management of Networked Smart Greenhouses Integrated Microgrid. IEEE Trans. Autom. Sci. Eng. 2020, 17, 117–128.

- Rahmawati, S.D.; Whitson, C.H.; Foss, B.; Kuntadi, A. Integrated field operation and optimization. J. Pet. Sci. Eng. 2012, 81, 161–170.

- Roberts, K.P.; Turner, D.A.; Coello, J.; Stringfellow, A.M.; Bello, I.A.; Powrie, W.; Watson, G.V.R. SWIMS: A dynamic life cycle-based optimisation and decision support tool for solid waste management. J. Clean. Prod. 2018, 196, 547–563.

- Smith, J.S.; Safferman, S.I.; Saffron, C.M. Development and application of a decision support tool for biomass co-firing in existing coal-fired power plants. J. Clean. Prod. 2019, 236.

- Dunn, R.; Harwood, K. Bridge Asset Management in Hertfordshire-Now and in the future. In Proceedings of the Asset Management Conference 2015, London, UK, 25–26 November 2015.

- Elsawah, H.; Bakry, I.; Moselhi, O. Decision support model for integrated risk assessment and prioritization of intervention plans of municipal infrastructure. J. Pipeline Syst. Eng. Pract. 2016, 7.

- Geelen, C.V.C.; Yntema, D.R.; Molenaar, J.; Keesman, K.J. Monitoring Support for Water Distribution Systems based on Pressure Sensor Data. Water Resour. Manag. 2019, 33, 3339–3353.

- Maniatis, G.; Williams, R.D.; Hoey, T.B.; Hicks, J.; Carroll, W. A decision support tool for assessing risks to above-ground river pipeline crossings. Proc. Inst. Civ. Eng. Water Manag. 2020, 173, 87–100.

- Alian, A. Processing the past, predicting the future. In Assets; Institute of Asset Management: London, UK, 2018; pp. 12–15.

- Black, M. Navigating Data. In Assets; Institute of Asset Management: London, UK, 2018; pp. 14–16.

- DiMatteo, S. From manual to automatic. In Assets; Institute of Asset Management: London, UK, 2019; pp. 16–17.

- Herrin, C. Towering Achievements. In Assets; Institute of Asset Management: London, UK, 2019; p. 22.

- Hobbs, R. A new model for railways. In Assets; Institute of Asset Management: London, UK, 2020; pp. 24–26.

- Jacobs. Sifting the data lake. In Assets; Institute of Asset Management: London, UK, 2020; p. 5.

- IAM. Subject 8: Life Cycle Value Realisation; Institute of Asset Management: London, UK, 2015.

- Institute of Asset Management. Available online: https://theiam.org/knowledge/subject-8-life-cycle-value-realisation/#:~:text=The%20Life%20Cycle%20Value%20Realisation,or%20renewal%2Fdisposal%20of%20assets (accessed on 8 June 2020).

- Arnott, D. Decision support systems evolution: Framework, case study and research agenda. Eur. J. Inf. Syst. 2004, 13, 247–259.

- Courbon, J.-C. User-Centred DSS Design and Implementation. In Implementing Systems for Supporting Management Decisions: Concepts, Methods and Experiences; Chapman & Hall: London, UK, 1996.

- Keen, P.G.W. Adaptive Design for Decision Support Systems. ACM Sigmis Database 1980, 1, 15–25.

- Sprague, R.H. A framework for the development of decision support systems. MIS Q. 1980, 4, 1–26.

- Denscombe, M. The Good Research Guide, 4th ed.; Open University Press: Berkshire, UK, 2010.

- National Grid. National Grid Annual Report and Accounts 2016/17; 2017. Available online: https://investors.nationalgrid.com/news-and-reports/reports/2016-17/plc (accessed on 7 June 2020).

- National Grid. Policy Statement (Transmission), WLVF PS(T); National Grid: Warwick, UK, 2013.

- National Grid. Network Output Measures Methodology; National Grid: Warwick, UK, 2010.

- National Grid. National Grid Analytics and Our SAM Journey So Far; National Grid: Warwick, UK, 2015.

- Alter, S. A taxonomy of Decision Support Systems. Sloan Manag. Rev. 1977, 19, 39.

- National Grid. SAM Benefits Update <Restricted Access>; National Grid: Warwick, UK, 2017.

- National Grid. Strategy Enabler: The Strategic Asset Management (SAM) Programme; National Grid: Warwick, UK, 2015.

- National Grid. Electricity Transmission EUC output <Restricted Output>; National Grid: Warwick, UK, 2017.

- Dunkley, D. Transcription of Semi Structured Interview between Susan Lattanzio (SL) University of Bath and Derrick Dunkley (DD); National Grid plc. Conducted 04/02/2015 <<Restricted Access>>; Lattanzio, S., Ed.; National Grid: Warwick, UK, 2015.

- Callele, D.; Wnuk, K.; Penzenstadler, B. New Frontiers for Requirements Engineering. In Proceedings of the 2017 IEEE 25th International Requirements Engineering Conference (RE), Lisbon, Portugal, 4–8 September 2017; pp. 184–193.

- Freeman, R.E. Strategic Management a Stakeholder Approach; Pitman: Boston, MA, USA, 1984.

- Zowghi, D.; Coulin, C. Requirements Elicitation: A survey of Techniques, Approaches, and Tools. In Engineering and Managing Software Requirements; Springer: Berlin/Heidelberg, Germany, 2005.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- BS EN ISO 9001: 2015 Quality Management System Requirements; BSI Group: London, UK, 2015.

- BS ISO 31000: 2009 Risk Management-Principles and Guidelines; BSI Group: London, UK, 2009.

- BS ISO 31000 Series: 2018 Risk Management—Guidelines; BSI Group: London, UK, 2018.

- BS EN ISO 9000: 2015 Quality Management Systems; BSI Group: London, UK, 2015.

- Bell, J. Doing Your Research Project; Open University Press: Maidenhead, UK, 2010.

- Gray, D.E. Doing Research in the Real World; SAGE Publications: London, UK, 2014.

- ENA. Smarter Networks Portal. Available online: https://www.smarternetworks.org/project/nia_nget0153/documents (accessed on 4 May 2020).

- Lattanzio, S. Making the Right Choice. In Assets; Institute of Asset Management: London, UK, 2017; p. 8.

- Lattanzio, S. Decision Governance. In Qual. World; Chartered Institute of Quality Management: London, UK, 2019.

| Key Concepts | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| Stakeholder Requirements | Process Based | Process Integration | Communication & Consultation | Evolving | Monitoring and Continual Improvement | Life Cycle Approach | Defined Leadership | Contextual | Risk Based |
| Life-cycle value achieving customer requirements and over delivery of regulatory performance | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| Industry compliant conforms to ISO 55000 and ISO 31000 | ✓ | ✓ | | | | | | | |
| Adaptable to asset base, satisfies data requirements and organisation systems | ✓ | ✓ | ✓ | | | | | | |
| Performance to be agile. Accurate tool that produces validated results | ✓ | ✓ | | | | | | | |
| Technical competence reflecting asset position and network risk | ✓ | ✓ | ✓ | ✓ | ✓ | | | | |
| Life-cycle management, safe, credible, economic and efficient. | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| Value, safe environmentally. Adhering to International Standard | ✓ | ✓ | ✓ | | | | | | |
| Delivers credible results | ✓ | ✓ | ✓ | | | | | | |
| Agile can be upgraded | ✓ | ✓ | | | | | | | |
| We know how it works | ✓ | ✓ | ✓ | | | | | | |
| Safe, reliable, and efficient outputs which are understood | ✓ | ✓ | ✓ | ✓ | | | | | |
| Consistent with consumer value mechanistic approach easy to understand translating inputs, process, outputs. | ✓ | ✓ | | | | | | | |
| Transparent, consistent with Scots TO’s | ✓ | | | | | | | | |
| Stable – repeatable and reproducible | ✓ | | | | | | | | |

| Key Concept | Example excerpt from the ISO 5500x:2014 Standard coded to this theme |
|---|---|
| Process based | “The organization should develop processes to provide for the systematic measurement, monitoring, analysis and evaluation of the organization’s assets”. |
| Process integration | “A factor of successful asset management is the ability to integrate asset management processes, activities and data with those of other organizational functions, e.g. quality, accounting, safety, risk and human resources” |
| Consultation and communication | “Failure to both communicate and consult in an appropriate way about asset management activities can in itself constitute a risk, because it could later prevent an organization from fulfilling its objectives”. |
| Evolving | “The organization should outline how it will establish, implement, maintain and improve the system”. |
| Monitoring and continual improvement | It is a “concept that is applicable to the assets, the asset management activities and the asset management system, including those activities or processes which are outsourced”. The identification of “Opportunities for improvement can be determined directly through monitoring the performance of the asset management system, and through monitoring asset performance”. |
| Life cycle approach | The stages of an asset’s life are undefined but “can start with the conception of the need for the asset, through to its disposal, and includes the managing of any potential post disposal liabilities”. |
| Defined leadership | The success in establishing, operating, and improving AM is dependent on the “leadership and commitment from all managerial levels” |
| Contextual | What constitutes value is contextual, it “will depend on these objectives, the nature and purpose of the organization and the needs and expectations of its stakeholders”. |
| Risk based | “Asset management translates the organization’s objectives into asset-related decisions, plans and activities, using a risk-based approach” |

| | | Respondent 1 | Respondent 2 |
|---|---|---|---|
| | Job Title | Information Quality Officer | Analytics Development Leader |
| 1A | Are there any stakeholders identified who you feel should not be included? If so, provide detail and reasoning. | No | No |
| 1B | Are there any approach stakeholders who you feel have not been identified? If so, provide detail and reasoning. | Suppliers, i.e., IBM, Wipro who provide services to build the DST (SAM) platform | Possibly suppliers as they would have their own input to the process. |
| 2A | Are there any stakeholder requirements you feel should not be included? If so, provide the detail and reasoning. | No | No |
| 2B | Are there any stakeholder requirements which you feel have not been identified? If so, provide the detail and reasoning. | No | No |
| 3A | Within the stakeholder requirements it was identified that the approach should conform to ISO 55000 and ISO 31000. Do you agree with that statement? YES/NO. If ‘No’ provide your reasoning. | Yes | Yes |
| 4A | Are there any approach requirements you feel should not be included? If so, provide the detail and justification. | No | No |
| 4B | Are there any approach requirements which you feel have not been identified? If so, provide the detail and justification. | No | No |

| Process Element | Risk Management Process | DST Performance Management Process |
|---|---|---|
| Communication and consultation | ✓ | ✓ |
| Monitoring and review | ✓ | ✓ |
| Establishing the context * | ✓ | ✓ |
| Assessment | Risk assessment | DST performance assessment |
| Recording and Reporting (Added in the 2018 revision of the risk management process [65]) | Separate element within the process | Incorporated within the identification stage of the DST performance assessment |

| | Department | Job Title | Responsibilities |
|---|---|---|---|
| Participant 1 | Asset Policy | Asset Management Development Engineer | Manager of DST users (including tools used within regulatory reporting) |
| Participant 2 | Process and Enablement | Information Quality Manager | Assurance of asset data; governance of asset data and information |
| Participant 3 | Asset Policy | Asset Management Development Engineer | FMEA (failure mode and effects analysis) and risk modelling |
| Participant 4 | Asset Policy | Asset Management Development Engineer | DST modeler |
| Participant 5 | Asset Policy | Asset Management Development Engineer | Asset risk modeler |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lattanzio, S.; Newnes, L.; McManus, M.; Dunkley, D. Managing the Performance of Asset Acquisition and Operation with Decision Support Tools. CivilEng 2020, 1, 10-25. https://doi.org/10.3390/civileng1010002