1. Introduction: The (Elusive) Quest for Debiasing

Cognitive heuristics are a well-documented trait of human cognition. Heuristics are an efficient way of making inferences and decisions that are sufficiently precise and accurate for maximizing utility and achieving goals [1,2]. It has been observed for decades, however, that inference- and decision-making deviate from theoretically desirable, “pure” rationality [3]. There is ample evidence that cognitive heuristics, useful though they are in general, can lead to (sometimes drastically) irrational inferences and decisions [4]. In such instances, cognitive heuristics lead to inferences and behavior that are often referred to as “biases”.

Mitigating biases through targeted “debiasing” interventions could potentially have an enormous positive impact [5], not just for individuals, but for humankind as a whole. After all, even the most consequential decisions in politics or business, to name just two societal domains that deeply affect everyone’s wellbeing, are made by humans who are, by their very nature, bias-prone. Unfortunately, cognitive biases have proven to be more resilient than we might like. One of the main challenges for effective debiasing interventions and strategies was outlined by [6]: There seems to be a correlation between the amount of (cognitive) resources that debiasing interventions require and the scope of their positive impact. Ref. [6] introduced the distinction between procedural and structure modifying debiasing interventions as a framework for distinguishing between two general types of debiasing that differ in their resource requirements and in the breadth of their positive impact.

On the one hand, debiasing interventions that are procedural in nature and affect a specific decision-making process quasi-mechanistically are relatively easy to devise and implement, but they usually apply only to specific decision-making situations. This means that it can be relatively easy to change and improve a specific decision-making procedure, but those changes do not necessarily improve inference- and decision-making in general. Changing the procedure with which a decision is made affects the quality of a decision primarily from “without” (the external decision-making context) rather than from “within” (a person’s cognition). Some examples of debiasing interventions that are procedural in nature are checklists [7,8,9,10], mechanisms for slowing down or pausing decision-making processes [11,12], and consider-the-alternative stimuli [13,14]. Even though there is evidence that such interventions can improve decision-making in the specific contexts in which they are applied, there is no evidence that their positive effects extend or spill over into other decision-making contexts. For example, there is evidence that consider-the-alternative stimuli can help avoid the confirmation bias [15] and related biases in abductive [16] decision-making situations such as clinical diagnostic reasoning [17,18] or intelligence assessment [19], but there is no plausible evidence to suggest that such targeted interventions improve inferences and decision-making beyond the narrow contexts they are applied to.

On the other hand, debiasing interventions that are structure modifying in nature and that allow individuals to modify the internal representation of the decision-making situation have a broader, more generalized scope, but they tend to be more demanding in terms of resources such as time and active cognitive effort. Debiasing interventions that are structure modifying in nature aim to affect inferences and decision-making from “within”: Rather than changing the external inference- and decision-making procedure, structure modifying debiasing interventions aim, generally speaking, to improve cognition. Such debiasing interventions aim to tackle the metaphorical root of the problem, the cognitive biases themselves, rather than to change external decision-making factors in order to reduce the probability of biased decision-making.

Structure modifying debiasing interventions have been described as being aimed at metacognition [20,21], the knowledge or cognition about cognitive phenomena [22]. If we understand structure modifying debiasing interventions as interventions aimed at metacognition, then their goal can be understood as the general triggering of metacognitive experiences. Such experiences should, ideally, improve the quality of beliefs as well as the quality of decision-making. There is some evidence that metacognitive experiences, such as the ease of retrieval of relevant information, can affect the prevalence of cognitive biases [23,24]. More generally, the theoretical perspective of dual process theory [25,26] lends credibility to this metacognitive view on structure modifying debiasing interventions. If there is (an idealized) mode of slower and more deliberate thinking that is less reliant on heuristics, then structure modifying debiasing interventions aimed at inducing metacognitive experiences should be beneficial for entering that cognitive mode.

A considerable part of the existing literature on structure modifying, metacognition-related debiasing interventions makes plausible suggestions for debiasing interventions, but those suggestions are often not directly put to the empirical test [17,27]. There is, however, a growing body of recent empirical explorations of carefully designed interventions suggesting that this type of more demanding and more general metacognition-related debiasing intervention can be effective [28,29,30,31]. However, there are also studies that have found no positive effects [32,33].

One of the main drawbacks of structure modifying debiasing interventions is that they are more costly to implement in terms of time and cognitive effort. The term “cognitive forcing”, which has been proposed as a descriptor for structure modifying debiasing interventions [34], captures that cost figuratively: Structure modifying debiasing interventions usually require decision-makers to learn new information and to subsequently “force” themselves to apply that knowledge in decision-making situations in which they would not normally do so.

Procedural and structure modifying debiasing interventions both have benefits and drawbacks. Given these trade-offs, a “third way” for debiasing interventions seems attractive: Interventions that are easy to implement and apply, but that are not limited to specific and narrow decision-making contexts.

A “Third Way” for Debiasing Interventions?

Procedural debiasing interventions are easy to devise and implement, but their effectiveness is limited. The effectiveness of structure modifying debiasing interventions that target metacognition is probably broader and more generalized, but they are more costly to devise, implement, and apply.

In theory, debiasing interventions that combine some of the benefits of procedural and structure modifying interventions while avoiding some of their disadvantages could be an attractive “third way” for debiasing interventions. A “third way” debiasing intervention would need to be simple and easily deployable in practice while still targeting metacognitive experiences. In a recent study, Ref. [35] tested an intervention that has such “third way” properties. The researchers designed a relatively simple checklist that can be applied in a procedural manner, without the need for time-consuming training and instructions. However, the checklist was designed to induce a form of metacognitive experience by triggering clinicians to actively think about the way they are making inferences and decisions in a given decision-making situation.

Even though the specific intervention was designed for the professional context of clinicians, the “third way” of debiasing interventions, I believe, merits further attention. Debiasing interventions that follow this “third way” approach could potentially prove to be a useful general addition to the repertoire of debiasing interventions. For example, “third way” debiasing interventions could be a viable option in real-world decision-making contexts that do not allow for costly structure modifying interventions, but that still require a form

The goal of the present study is to introduce and test a novel “third way” debiasing intervention. By design, the intervention of interest is compact and almost trivially easy to implement. For that reason, I refer to it as a “micro-intervention”. The micro-intervention that I introduce and test is not merely a compact tool that offers information about cognitive biases (not least because, in one case, such a “third way” intervention that consisted of a checklist with information on cognitive biases failed to reduce error [36]), but rather an intervention that aims to increase epistemic rationality with little to no effort.

2. An Epistemologically Informed Micro-Intervention

2.1. The Importance of Epistemic Rationality

Cognitive biases are an impediment to rationality, and debiasing aims to increase rationality. However, what, exactly, do we mean when we refer to “rationality”? A preliminary, ex negativo answer would be to define rationality as the state of human cognition and action given the absence of cognitive biases. Such a definition, however, is not only lacking, but it is fallacious (This would be a case of denying the antecedent. The absence of cognitive biases does not automatically result in rationality because there are other factors that can cause irrational beliefs and actions.). An imaginary perfectly rational actor might not be prone to cognitive biases, but there is more to rationality than just the absence of biases.

The exact meaning and definition of rationality is not a settled question. In contemporary academic discourse, two types of rationality are generally of interest: instrumental rationality and epistemic rationality. Instrumental rationality means that an actor makes decisions in such a way that they achieve their goals [37]. Instrumental rationality is the foundation of rational choice theory, and research on cognitive heuristics and biases is usually concerned with the discrepancy between theoretically expected rational choice decision-making and the observable real-world decision-making [38]. However, instrumental, goal-oriented decision-making is not all there is to rationality. When we are making conscious and deliberate decisions, for example, those decisions are based on a layer of doxastic (belief-like) attitudes towards reality, most important of which are, arguably, beliefs themselves. The concern with the quality of our beliefs falls into the domain of epistemic rationality.

Epistemic rationality means that an actor is concerned with adopting beliefs about reality that are as accurate and as precise as possible [39]. That does not, however, mean that an imaginary epistemically rational actor is epistemically rational simply because they happen to hold true beliefs. For example, a belief can “accidentally” be true, but the reasons for holding that belief might be quite irrational (Such constellations can generally be described as “epistemic luck” [40]). I might happen to believe that the Earth is spherical (a true belief), but my justification for that belief might be that all celestial bodies are giant tennis balls (an irrational justification). Epistemic rationality, therefore, is concerned with the process of arriving at a belief and not necessarily (only) with the objective veracity of a belief.

Cognitive biases affect both instrumental and epistemic rationality. Biases can prevent us from achieving goals, and they can reduce the quality of our belief-formation. From a cognitive perspective, the root cause of dysfunctional instrumental rationality lies in problems with epistemic rationality; irrational decisions are usually a result of inappropriate doxastic attitudes such as beliefs. “Third way” debiasing interventions that aim to induce beneficial metacognitive experiences should therefore attempt to increase epistemic rationality and not just mitigate deficits of instrumental rationality. It should be noted, for the sake of completeness, that the distinction between instrumental and epistemic rationality might be somewhat moot altogether. Epistemic rationality is the underpinning of instrumental rationality, but it can be argued that epistemic rationality itself is instrumental in nature. After all, by being epistemically rational, an actor pursues the goal of arriving at accurate and precise beliefs [41]. For the purpose of this study, I uphold the distinction between instrumental and epistemic rationality in order to make it clear that the goal of the micro-intervention that is tested is to increase the quality of belief-formation. Ultimately, however, increasing the quality of belief-formation can be regarded as an instrumental goal.

2.2. An Epistemically Rational Actor in Practice

A debiasing intervention aimed at reducing the susceptibility to cognitive biases by increasing epistemic rationality should address two specific dimensions of epistemic rationality: Justification and credence.

Justification means that an actor is rational in holding a belief if the actor has reasons for doing so, for example because there is empirical evidence that points to the veracity of the belief [42,43]. Justificationism in general and evidentialism in particular are not universally accepted in contemporary epistemology [44], but they represent a fairly uncontroversial “mainstream” view. In practical terms, the dimension of justification means that an actor is rational when they have evidence in support of the belief they are holding. For example, person A and person B might both believe that the Earth is spherical, but person A might simply rely on their intuition, whereas person B might cite some astronomical and geographical evidence as a justification. In this case, even though A and B both happen to hold a true belief, we would regard person B as more rational because their reasons for holding the belief they are holding are better (An important ongoing debate in epistemic justificationism is the question of whether rational justification is primarily internal or also external to a person’s cognition [45]. I tend to regard the internalist view proposed by [46] as convincing, since the question of rational belief-formation is, in many demonstrable cases, divorced from the question of whether the beliefs a person arrives at are, in fact, true. However, the internalism vs. externalism debate is not central to the concept of “third way” debiasing interventions that are explored in this paper.).

Credence refers to the idea that an actor should not merely have belief or disbelief in some proposition. Instead, it is more rational for an actor to express their attitude towards a proposition in terms of degrees of belief, depending on the strength of the available evidence [47]. In principle, degrees of belief could be expressed on any arbitrary scale, but the most suitable scale for expressing beliefs is probability. Indeed, one prominent school of thought regards probabilities as ultimately nothing more than expressions of degrees of belief [48]. This view of probability is the foundation of Bayesian flavors of epistemology [49,50]. In practical terms, the dimension of credence means that an epistemically rational actor should probabilistically express how strongly they believe what they believe, given the available evidence. For example, if person A from the example above expressed that they believed the Earth to be round with probability 1, we might suspect that person A is being severely overconfident, given the quality of their justification (merely intuition).

2.3. Designing a “Worst-Case” Micro-Intervention

In the preceding subsection, I have outlined the argument that a debiasing intervention aimed at increasing epistemic rationality should target the dimensions of justification and credence. There are many ways in which that conceptual basis of an intervention could be put into practice. For example, an intervention could consist of a formal apparatus whereby people have to explicitly state reasons for a belief they hold as well as explicitly quantify their belief on a scale from 0 to 1. Such an apparatus might represent an interesting (and attention-worthy) intervention in its own right, but it would hardly qualify as a micro-intervention. A veritable micro-intervention has to, I believe, be so trivially easy to implement in a decision-making situation that it requires zero preparation and essentially zero effort. We can describe such a design principle as a “worst-case” intervention: An intervention that can be used in real-world decision-making situations even when there are no resources to implement interventions and even when the motivation on the part of the decision-makers themselves is low.

For the purpose of the present study, I design the worst-case micro-intervention as a prompt that consists of merely two questions:

- How certain am I?
- Why?

The goal of this micro-intervention is to trigger metacognitive experiences that pertain to the dimensions of credence (How certain am I?) and justification (Why?). If this micro-intervention is effective, it should produce a noticeable improvement of decision-making even in worst-case circumstances (low motivation on the part of the decision-makers paired with no training or formal instructions).

In an experimental setting, the effectiveness of this micro-intervention can be compared against a control group without the micro-intervention. For the sake of completeness, another micro-intervention should be added to the experimental setting: a micro-intervention that consists of a mere stopping intervention. It is possible that the main micro-intervention that I test is effective, but that the true effect is an underlying stopping mechanism and not the epistemological aspects of the micro-intervention. Furthermore, the micro-intervention of interest consists of two separate questions. For the sake of completeness, it is appropriate to test variants of micro-interventions that consist of only one of the two questions.

2.4. The Potential Real-World Benefits of the Proposed Micro-Intervention

The “third way” micro-intervention described above is tested under experimental “laboratory” conditions; the design and results are presented below. However, first, a brief discussion of the potential real-world benefits of such an intervention is in order. If we bother to evaluate the evidence for this intervention (or for “third way” interventions in general), we should be motivated by potential tangible benefits for real-world decision-making. What might those benefits be?

It is unrealistic to expect the proposed micro-intervention to have large overall positive effects. From a purely practical perspective, we can expect problems such as attrition in the usage of the micro-intervention if it is introduced as a veritable micro-intervention (without extensive training or instruction, and without controlling whether it is applied). For example, if an organization were to introduce the proposed micro-intervention as a tool to all employees with management status (for example, in the form of an email memo or an agenda item during a meeting), many of those employees might forget about the micro-intervention after a while, or they might even fail to see its benefit in the first place. On a more conceptual level, we can expect that not all bias-related rationality deficits can be mitigated with the proposed micro-intervention because the strength of specific biases might be orders of magnitude greater than the metacognitive experiences that are induced by the micro-intervention.

I believe that the real-world benefit of the proposed micro-intervention would lie in a small relative reduction of the susceptibility to biases through what I would label as habitualized irritation. It is unrealistic to expect the proposed micro-intervention to produce great ad hoc improvements in epistemic rationality, but prompting oneself to think about one’s confidence in and one’s justification for a judgement or decision might shift some decisions away from gut-level judgement and towards deliberate cognitive effort.

The real-world benefit of the proposed micro-intervention (and of “third way” interventions in general) is probably greatest in organizational contexts in which the intervention is integrated into decision-making processes, whereby decision-makers are regularly prompted to use the intervention as a tool for their own decision-making. In such a setting, the risk of biased decision-making might still only slightly decrease per singular decision, but from a cumulative and aggregate perspective over time, the beneficial effects might be nontrivial.
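The aggregate argument can be made concrete with a small piece of arithmetic. The sketch below assumes independent decisions, a fixed baseline risk of a biased decision, and a fixed relative reduction of that risk; all numbers are hypothetical placeholders chosen for illustration and are not estimates from this study.

```python
def expected_averted(n_decisions: int, base_risk: float, relative_reduction: float) -> float:
    """Expected number of biased decisions avoided over n independent decisions."""
    # Per-decision risk drops from base_risk to base_risk * (1 - relative_reduction),
    # so the expected difference across n decisions is n * base_risk * relative_reduction.
    return n_decisions * base_risk * relative_reduction


# Hypothetical figures, not estimates from this study: 1,000 decisions,
# a 20% baseline risk of a biased decision, and a 5% relative reduction.
print(expected_averted(n_decisions=1000, base_risk=0.20, relative_reduction=0.05))
```

Under these illustrative assumptions, a per-decision change of a single percentage point (from 20% to 19%) corresponds to about ten fewer biased decisions across the 1,000 decisions.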

3. Hypotheses

The effectiveness of the proposed micro-intervention can be tested empirically in an experimental setting by comparing the decision-making quality of an intervention group with that of a control group without any intervention. However, for the sake of completeness, additional comparisons can be made. Given the theoretical background, the micro-intervention that consists of both questions should have a greater effect than just one question on its own (either “How certain am I?” or “Why?”). However, even only one of the two questions should have a positive impact compared to the control group without an intervention. In addition, it is conceivable that the micro-intervention or the partial micro-interventions (that contain only one of the two questions) do have an effect, but that the effect is merely the result of slowing down a bit. Therefore, an additional stopping intervention should be tested against the micro-intervention, the partial micro-interventions, and the control group.

These assumptions lead to the following three hypotheses:

Hypothesis 1 (H1). The combined probability + justification intervention is the most effective intervention.

Hypothesis 2 (H2). The mere stopping intervention is less effective than the combined probability + justification intervention.

Hypothesis 3 (H3). All interventions (mere stopping, probability, justification, probability + justification) will have a positive effect compared to the control group without any interventions.

At first glance, Hypothesis 2 (H2) might seem redundant given Hypothesis 1 (H1), but its purpose is to explicitly describe the expectation that

and not

4. Design, Data, Methods

4.1. Design

In order to test the hypotheses, I conducted three separate experiments. Each experiment was designed to test the effects of the micro-interventions on a different cognitive bias. Experiment 1 was designed around the gambler’s fallacy [51,52], experiment 2 around the base-rate fallacy [52], and experiment 3 around the conjunction fallacy [53]. I chose these three probability-oriented biases because they allow for the creation of clear-cut, yes-or-no scenarios and questions.

Experiment 1 consisted of the following scenario:

John is flipping a coin (It’s a regular, fair coin.). He has flipped the coin four times - and each time, heads came up. John is about to flip the coin a fifth time.

What do you think is about to happen on the fifth coin toss?

(The intervention was placed here)

- Tails is more likely to come up than heads.
- Tails is just as likely to come up as heads.

The correct answer for experiment 1 is that tails is just as likely to come up as heads. The gambler’s fallacy in this case would be to answer that tails is more likely to come up (The probability of tails is unaffected by prior outcomes.). The order of the two answer options was randomized. The interventions that were placed after the question are summarized in Table 1.

Each intervention represents one experimental condition. In total, there were five experimental conditions to which the participants were randomly assigned.
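Why the second answer option is correct can be checked by brute force. The following sketch, assuming a fair coin and independent flips as stated in the scenario, enumerates all 32 equally likely sequences of five flips, keeps those whose first four flips are heads, and counts how often the fifth flip is tails.

```python
from fractions import Fraction
from itertools import product


def p_tails_on_fifth_given_four_heads() -> Fraction:
    # All 2**5 equally likely sequences of five fair coin flips.
    sequences = list(product("HT", repeat=5))
    # Condition on the observed history: the first four flips were all heads.
    consistent = [s for s in sequences if s[:4] == ("H",) * 4]
    # Share of the remaining sequences in which the fifth flip is tails.
    tails = sum(1 for s in consistent if s[4] == "T")
    return Fraction(tails, len(consistent))


print(p_tails_on_fifth_given_four_heads())  # 1/2
```

Only two sequences (HHHHH and HHHHT) are consistent with the history, and they are equally likely, so the streak carries no information about the fifth flip.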

Experiment 2 consisted of the following scenario:

The local university has started using a computer program that detects plagiarism in student essays. The software has a false positive rate of 5%: It mistakes 5% of original, non-plagiarized essays for plagiarized ones. However, the software never fails to detect a truly plagiarized essay: All essays that are really plagiarized are detected - cheaters are always caught.

Plagiarism at the university is not very common. On average, one out of a thousand students will commit plagiarism in their essays. The software has detected that an essay recently handed in by John, a university freshman, is plagiarized.

What do you think is more likely?

(The intervention was placed here)

- John has indeed committed plagiarism.
- John is innocent and the result is a false positive.

The correct answer for experiment 2 is that it is more likely that John is innocent and the result is a false positive. Given the information in the scenario, the actual probability that John is indeed guilty is only about 0.02. The information provided in the scenario of experiment 2 is adapted from a classical test of the base-rate fallacy [54,55]. The correct probability can be calculated by applying Bayes’ rule as

$$P(\mathit{Pl} \mid +) = \frac{P(+ \mid \mathit{Pl})\,P(\mathit{Pl})}{P(+ \mid \mathit{Pl})\,P(\mathit{Pl}) + P(+ \mid \neg\mathit{Pl})\,P(\neg\mathit{Pl})},$$

where $\mathit{Pl}$ stands for plagiarism and $+$ for a positive test result by the plagiarism detection software. When the numbers provided in the scenario are plugged into the equation, we arrive at a probability of around 0.02, or around 2%:

$$P(\mathit{Pl} \mid +) = \frac{1 \times 0.001}{1 \times 0.001 + 0.05 \times 0.999} = \frac{0.001}{0.05095} \approx 0.0196$$

The order of the answer options in experiment 2 was randomized. The interventions (and therefore the conditions) for experiment 2 were the same as for experiment 1, as summarized in Table 1.
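The Bayes’ rule calculation for the plagiarism scenario can be reproduced in a few lines. This is a minimal sketch using only the three quantities stated in the scenario (a base rate of 1 in 1,000, perfect detection, and a 5% false positive rate); the function name is illustrative.

```python
def posterior_plagiarism(base_rate: float, sensitivity: float, false_positive_rate: float) -> float:
    """P(plagiarism | positive result) via Bayes' rule."""
    true_pos = sensitivity * base_rate                 # P(+ | Pl) * P(Pl)
    false_pos = false_positive_rate * (1 - base_rate)  # P(+ | not Pl) * P(not Pl)
    return true_pos / (true_pos + false_pos)


p = posterior_plagiarism(base_rate=0.001, sensitivity=1.0, false_positive_rate=0.05)
print(round(p, 4))  # 0.0196

# The same result in natural frequencies: among 1,000 students, 1 plagiarist is
# flagged, and about 0.05 * 999 = 49.95 innocent students are flagged as well.
print(round(1 / (1 + 0.05 * 999), 4))  # 0.0196
```

The natural-frequency version makes the base rate visible: the one true positive is swamped by roughly fifty false positives.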

Experiment 3 consisted of the following scenario:

Lisa started playing tennis when she was seven years old. She has competed in several amateur tennis tournaments, and she even won twice.

Which of the following descriptions do you think is more likely to be true for Lisa?

(The intervention was placed here)

- Lisa is a lawyer.
- Lisa is a lawyer. She play tennis on the weekends.

The correct answer for experiment 3 is that “Lisa is a lawyer” is more likely to be true, because the conjunction of two statements can never be more probable than either statement on its own. This experiment is a slight adaptation of the famous Linda experiment [53]. The order of the answer options in experiment 3 was randomized, and the interventions (and therefore the conditions) for experiment 3 are, once again, the same as in experiments 1 and 2, as summarized in Table 1.
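The conjunction rule, $P(A \wedge B) \le P(A)$, holds for any probability distribution, however representative the description of Lisa may seem. The sketch below illustrates this by drawing many random joint distributions over the two properties (lawyer, plays tennis); the random weights are arbitrary and are not tied to any real-world base rates.

```python
import random


def conjunction_never_more_likely(trials: int = 10_000, seed: int = 0) -> bool:
    """Check P(lawyer and tennis) <= P(lawyer) for many random joint distributions."""
    rng = random.Random(seed)
    for _ in range(trials):
        # Four cells of a joint distribution over (lawyer?, plays tennis?), normalized to 1.
        weights = [rng.random() for _ in range(4)]
        total = sum(weights)
        lawyer_tennis, lawyer_no_tennis, _, _ = (w / total for w in weights)
        p_lawyer = lawyer_tennis + lawyer_no_tennis
        # "Lawyer who plays tennis" is one part of "lawyer", so it cannot be larger.
        if lawyer_tennis > p_lawyer:
            return False
    return True


print(conjunction_never_more_likely())  # True
```

The check can never fail, because the conjunction is always one of the two cells that add up to the probability of being a lawyer; the second answer option adds detail but can only remove probability.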

4.2. Participants

Participants for the three experiments were recruited via the online crowdworking platform Clickworker [56,57]. For each experiment, the participation of 500 residents of the United States was commissioned on the crowdworking platform. Each participant was remunerated with 0.15 Euro. The mean completion time for experiment 1 was 54.4 s, for experiment 2 was 72.5 s, and for experiment 3 was 57.2 s.

In total, there were 508 participants in experiment 1, 501 participants in experiment 2, and 500 participants in experiment 3. The participants in all three experiments were similar in terms of age (means of 34.2, 33.8, and 33.8 years and standard deviations of 12.4, 12.6, and 12.3 years for experiments 1, 2, and 3) and sex ratio (62.6%, 63.1%, and 62.8% female for experiments 1, 2, and 3). The experiments were conducted with the survey software LimeSurvey [58]. In each experiment, participants were randomly assigned to one of the five experimental conditions. The number of participants per experimental condition in each experiment is summarized in Table 2.

The data was collected between 8 August and 9 August 2018. The data was not manipulated after the collection; all data that was collected is part of the data analysis.

4.3. Main Data Analysis

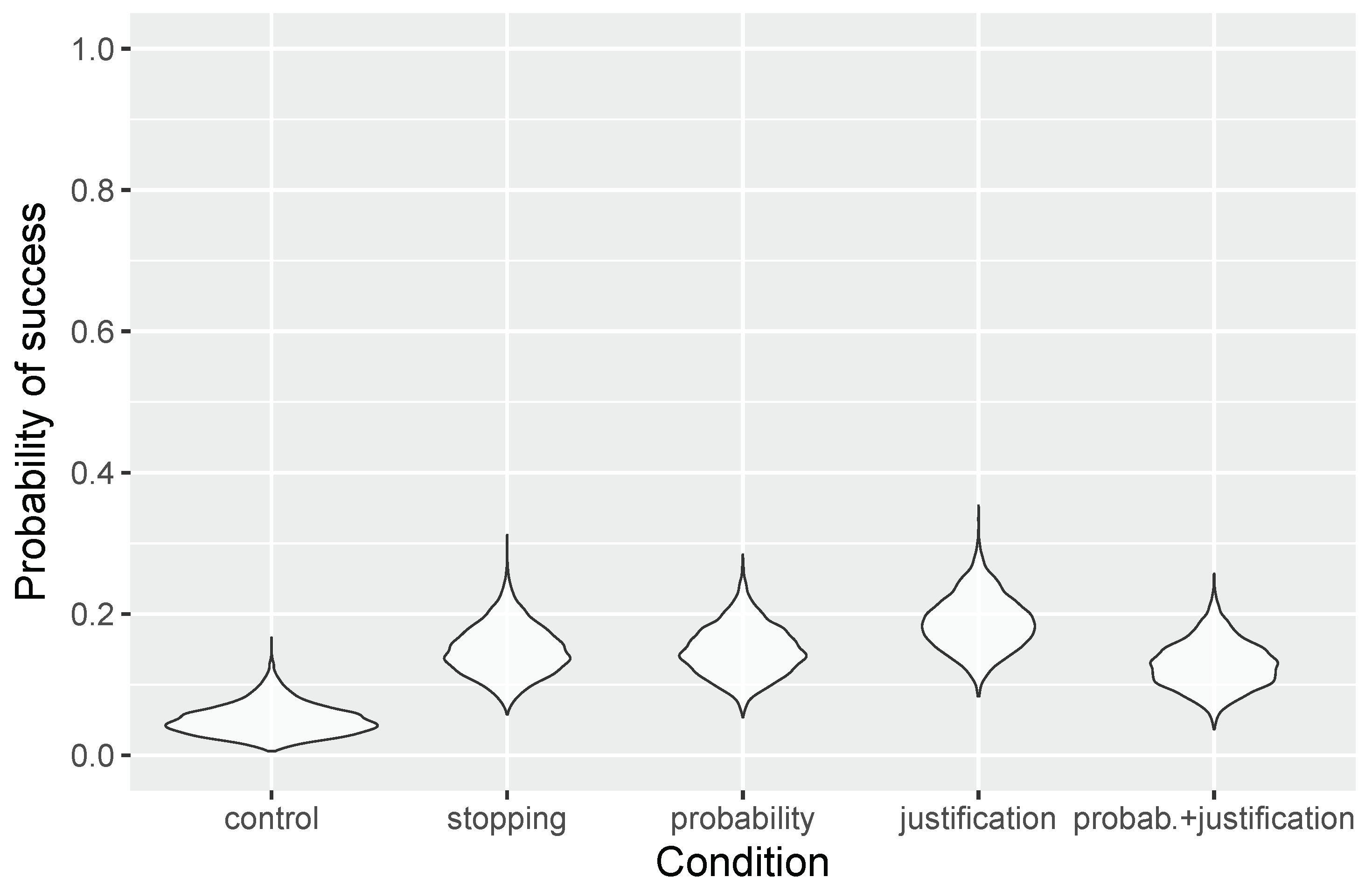

The experiments represent a between-subjects design with five levels. Since the participants in each experiment could either choose the correct or the incorrect answer, the observed data can be thought of as being generated by an underlying binomial distribution. The probability of a “success” (correct answer) is the unobserved variable of interest. I estimate this parameter with a simple Bayesian model in the following manner:

$$x \sim \operatorname{Binomial}(n, \theta), \qquad \theta \sim \operatorname{Uniform}(0, 1)$$

In this model, $x$ stands for the number of successes, $n$ for the number of trials, and $\theta$ for the probability of success. The prior is a flat prior, declaring that any value between 0 and 1 is equally probable for $\theta$. In practical terms, a flat prior means that the posterior distribution is proportional to the likelihood and is therefore shaped entirely by the observed data; for this model, the posterior is the $\operatorname{Beta}(x + 1,\, n - x + 1)$ distribution.
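Because a flat prior is conjugate to the binomial likelihood, the posterior for a single condition can be written down directly. The sketch below uses that closed form, $\operatorname{Beta}(x + 1, n - x + 1)$, as a swapped-in shortcut to the MCMC estimation described in the text; it is not the study's Stan code, and the counts (70 correct answers out of 100) are hypothetical, not data from the experiments.

```python
import random


def binomial_flat_prior_posterior(x: int, n: int, draws: int = 20_000, seed: int = 1):
    """Posterior for theta under x ~ Binomial(n, theta), theta ~ Uniform(0, 1).

    With a flat prior the posterior is Beta(x + 1, n - x + 1), so no MCMC is needed.
    """
    a, b = x + 1, n - x + 1
    mean = a / (a + b)  # equals (x + 1) / (n + 2)
    rng = random.Random(seed)
    samples = sorted(rng.betavariate(a, b) for _ in range(draws))
    # Central 95% credible interval read off the sorted Monte Carlo draws.
    lower = samples[int(0.025 * draws)]
    upper = samples[int(0.975 * draws) - 1]
    return mean, (lower, upper)


# Hypothetical counts, not the study's data: 70 correct answers out of 100.
mean, interval = binomial_flat_prior_posterior(x=70, n=100)
print(round(mean, 3), [round(v, 3) for v in interval])
```

An MCMC sampler such as Stan should recover essentially the same posterior for this model; the closed form is mainly useful as a sanity check.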

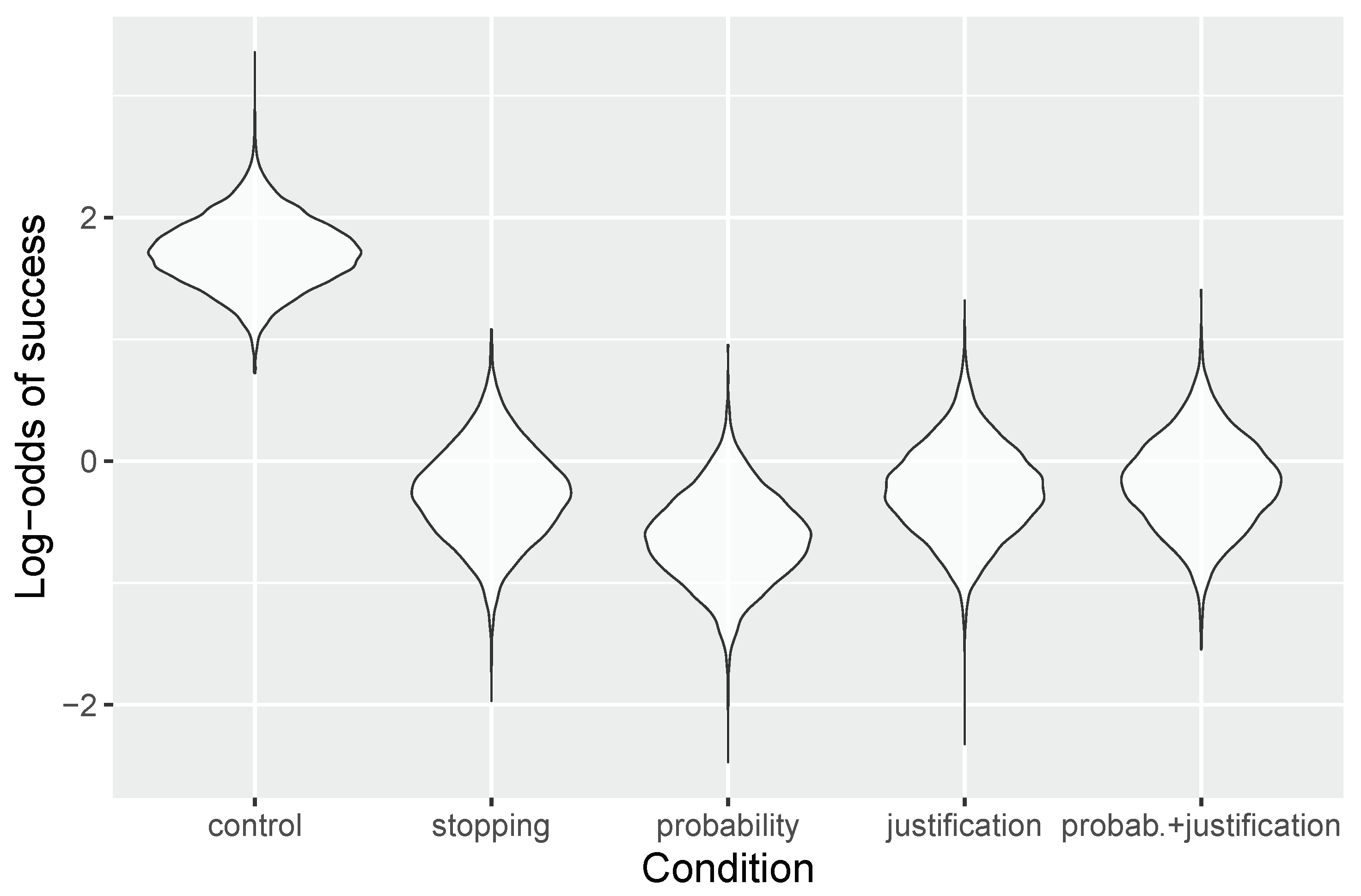

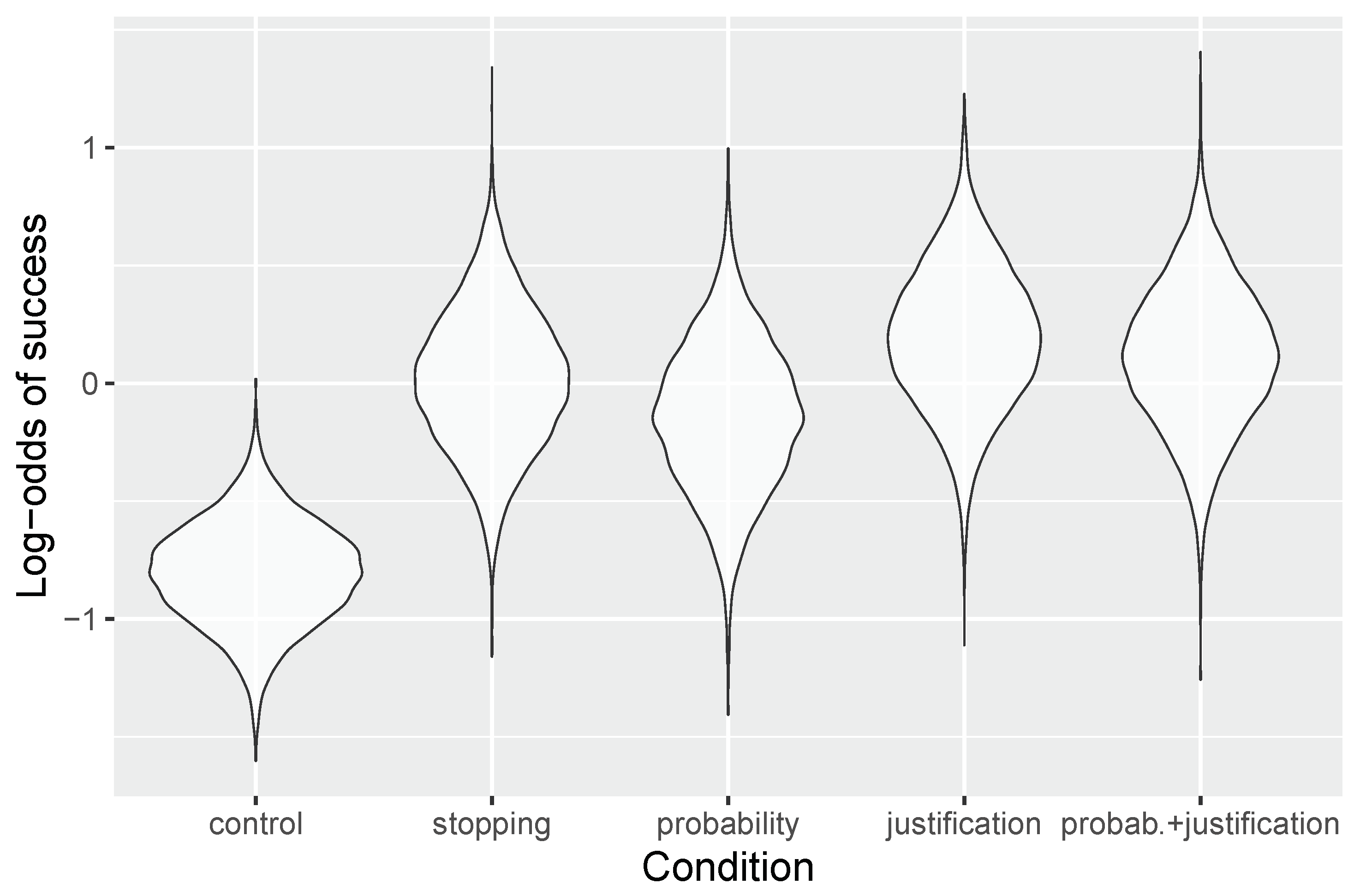

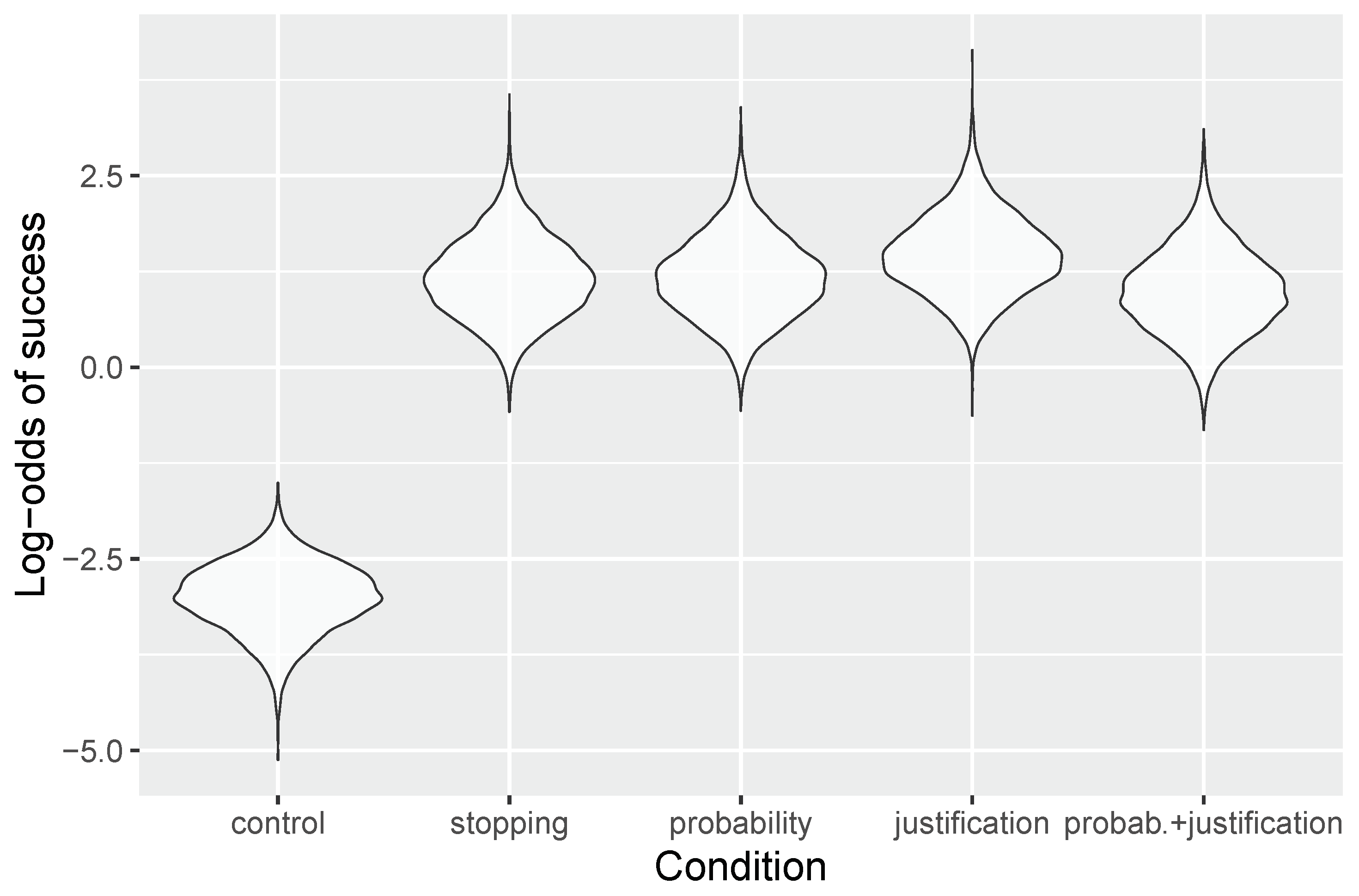

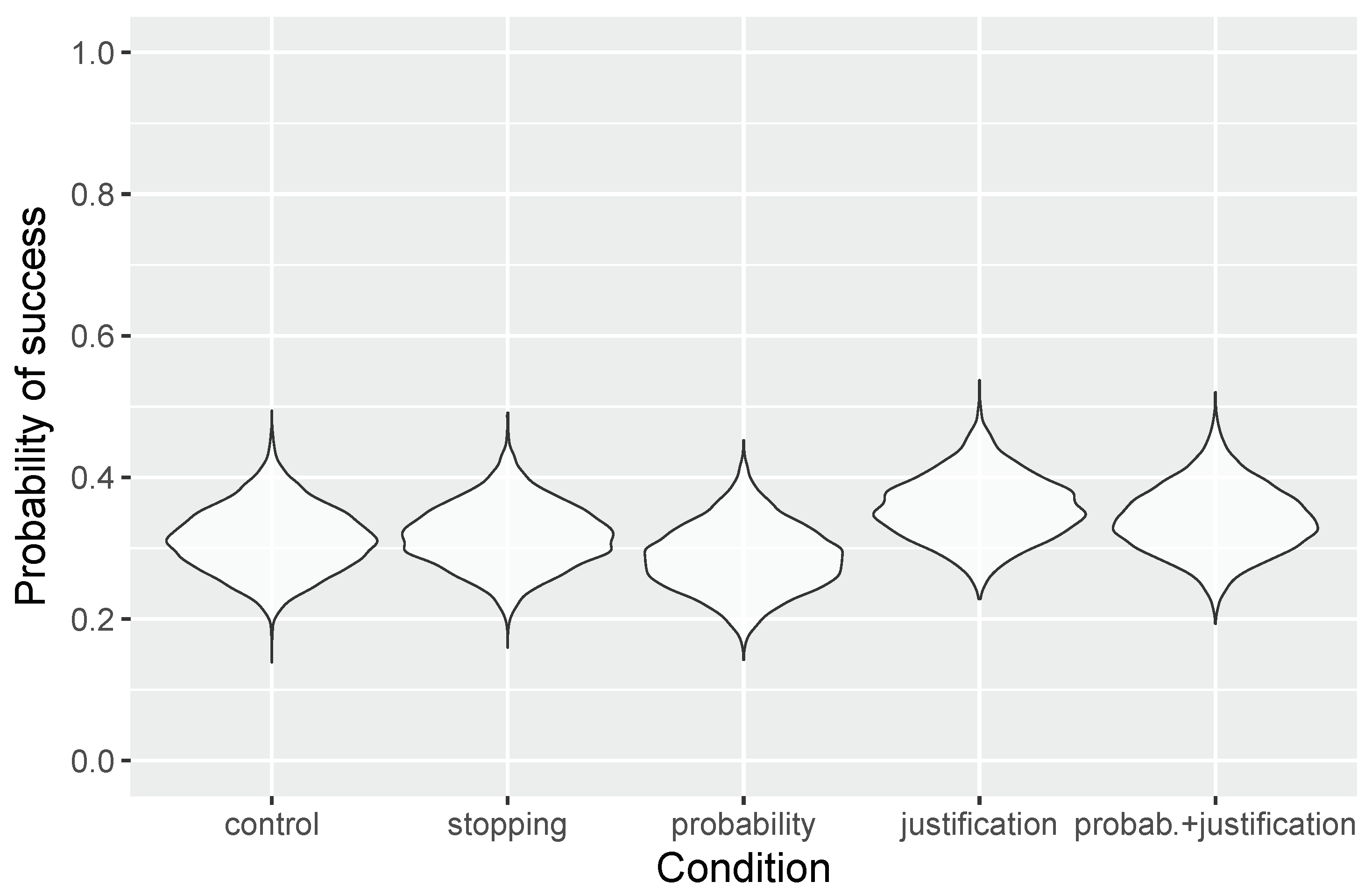

I estimate $\theta$ for every experimental condition in the three experiments, resulting in a total of 15 model estimates (five per experiment). The resulting posterior distributions show whether and how $\theta$ differs between experimental conditions. This approach is not a statistical test but a parameter estimate that quantifies uncertainty given the data.
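One way to read two separately estimated posteriors side by side is to ask how probable it is that one condition’s $\theta$ exceeds another’s. The sketch below does this by Monte Carlo, assuming independent flat-prior posteriors per condition; the function name and the counts are hypothetical, and this summary is offered as an illustration rather than as the procedure reported in the results.

```python
import random


def prob_theta_a_exceeds_b(x_a, n_a, x_b, n_b, draws=20_000, seed=2):
    """Monte Carlo estimate of P(theta_A > theta_B) under independent flat-prior posteriors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # One draw from each condition's Beta(x + 1, n - x + 1) posterior.
        theta_a = rng.betavariate(x_a + 1, n_a - x_a + 1)
        theta_b = rng.betavariate(x_b + 1, n_b - x_b + 1)
        wins += theta_a > theta_b
    return wins / draws


# Hypothetical counts for two conditions (not the study's data).
print(prob_theta_a_exceeds_b(x_a=75, n_a=100, x_b=65, n_b=100))
```

A value near 0.5 indicates that the data do not distinguish the two conditions; values near 0 or 1 indicate a clear ordering.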

The model estimations were performed with the probabilistic programming language Stan [59] called from within the statistical environment R [60]. For each model estimate, four Markov chain Monte Carlo (MCMC) chains were sampled, with 1000 warmup and 1000 sampling iterations per chain. All model estimates converged well, as indicated by potential scale reduction factors [61] of

for all parameters in all models. The model estimates are reported in the following results section as graphical representations of the full posterior distributions and as numeric summaries in tabular form.
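For readers unfamiliar with the potential scale reduction factor, the sketch below implements the classic, non-split Gelman-Rubin formula, which compares between-chain and within-chain variance. Stan itself reports a split, rank-normalized refinement of this statistic, so the numbers are not identical to Stan’s output; the simulated chains are purely illustrative.

```python
import random
from statistics import mean, variance


def gelman_rubin_rhat(chains: list[list[float]]) -> float:
    """Classic (non-split) potential scale reduction factor for equal-length chains."""
    n = len(chains[0])
    # B: between-chain variance, scaled by chain length.
    b = n * variance([mean(chain) for chain in chains])
    # W: average of the within-chain sample variances.
    w = mean([variance(chain) for chain in chains])
    # Pooled estimate of the marginal posterior variance.
    var_plus = (n - 1) / n * w + b / n
    return (var_plus / w) ** 0.5


rng = random.Random(3)
# Four chains drawn from the same distribution: R-hat should be close to 1.
same = [[rng.gauss(0, 1) for _ in range(1000)] for _ in range(4)]
# One chain stuck in a different region: R-hat should be clearly above 1.
shifted = [[rng.gauss(mu, 1) for _ in range(1000)] for mu in (0, 0, 0, 3)]
print(round(gelman_rubin_rhat(same), 3), round(gelman_rubin_rhat(shifted), 3))
```

Values close to 1 indicate that the chains are exploring the same distribution, which is the sense in which the models above are said to have converged.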

4.4. Complementary Data Analysis

For the complementary data analysis, the results of the experiments are estimated in the form of the following Bayesian logistic regression:

The benefit of estimating this regression model is that all parameter estimates (per experiment) are contained within a single model (They are not estimated separately, as in the main data analytic procedure.). The resulting model estimates allow for a more direct comparison of the effects of the different interventions. In addition, as a step towards methodological triangulation, comparing the primary and the complementary data analysis, including any discrepancies between them, yields a more complete picture.
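The regression equation itself is not reproduced in this section, so the following is only a sketch of how such coefficients are typically interpreted, assuming the conditions enter as dummy variables with the control group as the reference level. In that coding, the intercept is the control group’s log-odds of a correct answer and each coefficient is a difference in log-odds; the proportions below are hypothetical, and the sketch shows interpretation only, not Bayesian estimation.

```python
import math


def logit(p: float) -> float:
    return math.log(p / (1 - p))


def inv_logit(eta: float) -> float:
    return 1 / (1 + math.exp(-eta))


# Hypothetical proportions of correct answers per condition (not the study's data).
p = {"control": 0.80, "stopping": 0.78, "probability": 0.76,
     "justification": 0.82, "probability_justification": 0.75}

# Intercept: log-odds in the reference (control) group.
beta0 = logit(p["control"])
# Each dummy coefficient: difference in log-odds relative to the control group.
betas = {k: logit(v) - beta0 for k, v in p.items() if k != "control"}

# Transforming back recovers each condition's probability of a correct answer.
for k, b in betas.items():
    print(k, round(b, 3), round(inv_logit(beta0 + b), 3))
```

A positive coefficient thus means a higher probability of a correct answer than in the control group, and a negative coefficient a lower one.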

In the logistic regression models, the priors for the parameter estimates are vague, non-committal priors that give large weight to the data [62,63]. For the noise distribution, I use Student’s t-distribution rather than a normal distribution because the former is more robust against outliers [64].

The three model estimations (one per experiment) are performed in the same manner as the model estimation of the primary data analysis: For each model, four MCMC chains were sampled, with 1000 warmup and 1000 sampling iterations per chain. All estimated parameters in all three models have potential scale reduction factors of

, indicating that the models converged well. The model estimations were again performed with the probabilistic programming language Stan from within the statistical environment R, but in this instance, the analysis in Stan was executed through the package brms within R [65] (Packages such as brms do not change how Stan works. They are interfaces that make the model and data specification in Stan easier.).

4.5. Pre-Registration

The hypotheses, the research design, and the data analysis procedure were pre-registered with the Open Science Framework prior to the collection of the data [66]. The complementary data analysis presented in Appendix A has not been pre-registered.

6. Discussion

6.1. Too Good to Be True

The title of this paper asks the question whether a micro-intervention can debias in a minute or less, or whether such a proposition is too good to be true. Given the experimental results, the answer seems clear: A trivially simple micro-intervention that still has a non-trivial effect is too good to be true.

In experiment 1, the micro-intervention of interest, the combined probability + justification intervention, actually caused harm by slightly decreasing the probability of choosing the correct answer. In experiments 2 and 3, the combined probability + justification intervention did not cause harm, but it was also not the most effective intervention. The isolated justification intervention worked best in experiments 2 and 3: Asking “Why?” worked noticeably better than asking “How certain am I?” and “Why?” at the same time. Overall, these results mean that all three hypotheses are clearly rejected.

6.2. What Can Be Salvaged?

The results of experiments 2 and 3 were surprising, given the theoretical background. The fact that the micro-intervention “Why?” seems to have worked best in these two experiments could indicate that triggering a metacognitive experience surrounding justification works better than triggering probabilistic reasoning. Justifying a belief might be a more natural, intuitive process than quantifying one’s degree of belief.

It would not be altogether surprising if probabilistic reasoning as a metacognitive experience were indeed difficult to induce ad hoc. After all, numerous cognitive biases are related to errors in probabilistic reasoning, among them the fallacies used in the three experiments of this study (the gambler’s fallacy, the base-rate fallacy, and the conjunction fallacy). If engaging in probabilistic reasoning were that easy, these probability-related cognitive biases might not be as prevalent as research suggests they are. Expressing confidence in, or the strength of, one’s beliefs in probabilistic terms might not be suitable for micro-intervention debiasing formats because that kind of probabilistic reasoning might require some prior instruction and training.

6.3. Limitations of the Present Research Design

The research design of this study has several limitations and flaws. In experiment 1, all interventions had a negative effect; the control group did best. The interventions therefore seem to have caused what can be described as a backfire effect. Backfire effects of debiasing interventions have been observed before [68]. However, given that the overall prevalence of correct answers across all groups was rather high, there might be a problem with the experiment itself. The experimental scenario might have been poorly phrased, or the participants might have been familiar with the gambler’s fallacy through prior exposure to similar experiments.

More generally, the research design of this study is a “worst-case” design. Participants in the different experimental conditions were presented with a prompt (the respective intervention), but it remains unknown whether they actually followed the prompt. This was an intentional design choice, because including formal measurements for the prompts would have transformed the micro-intervention into a more complex intervention. However, that design choice has its downsides. The participants in this study had an incentive to simply click through to the end of the experiment in order to collect their reward. They had no “skin in the game”: Whether or not they followed the prompts had no impact on their reward, so they had no incentive to engage with the experiments in a serious and attentive manner; the outcome was the same even if they clicked through the experiment mindlessly. Given that material reward is generally the primary motivation for crowdworking [69], such inattentive participation seems likely.

Of course, since the experiments in this study are randomized, these design downsides can be expected to be evenly distributed across the five experimental groups. However, the “worst-case” design of this study probably means that there is more noise in the data than there would have been if the prompts had been less subtle, for example if participants had been required to at least acknowledge having read the prompts (by clicking “OK”).

Another limitation of the research design is the choice of biases for the three experiments. I opted for probability-related biases mainly for the sake of convenience (Probability-related biases with clear correct answers are easy to implement in experimental settings.). However, as has long been argued, different kinds of biases might require different kinds of debiasing interventions [70]. In theory, any positive effect of the tested micro-intervention should extend to other types of biases, since the “third way” logic of the intervention is one of universal applicability (The intervention aims to increase epistemic rationality in general rather than to reduce the risk of specific biases.). However, the present research design, with its “monoculture” of probability-related biases, does not allow for any inferences about the effects when other types of biases are at play.

6.4. The Present Results and the Overall Debiasing Literature

The contribution of the present study to the debiasing literature is twofold. In empirical terms, it adds little. In conceptual terms, however, it might add some perspective.

All hypotheses that I set out to test were clearly refuted by the experimental evidence, and the main positive effect across all interventions (the mere justification intervention showed a potential improvement in two out of three experiments) is modest. These empirical findings fit the overall picture that the debiasing literature paints: Debiasing is probably not a hopeless effort, but there is no debiasing silver bullet that can easily mitigate the risks of cognitive biases.

The conceptual perspective might be the greater contribution of this study. The debiasing literature is mostly fragmented, without strong conceptual underpinnings. In a sense, of course, that is a good thing: Empirical trial-and-error is useful and necessary, given that there is a plethora of cognitive biases that can decrease decision-making quality in many real-world decision-making contexts. At the same time, however, greater conceptual clarity could help prioritize and guide future research efforts. In this study, I have applied the distinction between procedural and structure modifying interventions as proposed by [6], and I have added the category of “third way” interventions, which aim to combine the generalizability of structure modifying interventions with the simplicity of procedural interventions. More importantly, I regard structure modifying as well as “third way” interventions as being concerned mainly with increasing epistemic rationality, whereas procedural interventions are concerned with increasing instrumental rationality. Nearly all existing studies on debiasing (implicitly) focus on instrumental rationality and rational choice theory. A greater appreciation of the conceptual distinction between instrumental and epistemic rationality could add more structure to future research, and it could serve as a conceptual foundation for the development of novel debiasing strategies.

6.5. Directions for Future Research

Even though the empirical results of this study paint a sobering picture, future research on “third way” debiasing interventions is still in order. Research on such interventions is relatively scarce, and the failure of the specific intervention tested in this study should not be interpreted as a failure of “third way” debiasing in general.

Perhaps the major lesson of the present study is that trivially simple micro-interventions that require no learning or instruction but still have a meaningful positive impact might be little more than wishful thinking. “Third way” interventions probably need to have some non-trivial level of cognitive or other cost in order to have the potential for meaningfully inducing beneficial metacognitive experiences. That does not mean that we have to abandon the idea of small “third way” interventions altogether, but small debiasing interventions might be more effective when they are not quite as “micro” as the one tested in this study. For example, the intervention(s) tested in this study could be combined with a brief instruction on how to use the tool at hand and why doing so is worthwhile. That way, the intervention might be more salient, and potential effects could be more easily detectable.

The failure of the credence component of the tested micro-intervention is another area that merits further attention. Quantifying beliefs does not seem to come naturally or intuitively, which makes an instruction-less debiasing intervention containing such a prompt ineffective or, as in this study, counterproductive. However, that does not mean that people have no useful probabilistic intuitions at all. For example, common verbal expressions of probability and uncertainty map roughly, but plausibly, onto the numeric probability spectrum from 0 to 1 [71]. Prompts for expressing credence might therefore be more successful if they are designed to tap into that latent probabilistic intuition.

Another important general question for future research is whether and how epistemologically informed debiasing interventions can be devised and implemented. Epistemologically informed interventions do not primarily aim to mitigate rationality deficits in the form of susceptibility to (some) cognitive biases; instead, their goal is to increase epistemic rationality in general. That increase in epistemic rationality, in turn, should result in reduced susceptibility to cognitive biases. In other words: Interventions that increase epistemic rationality are not tools for avoiding specific mistakes that arise for specific reasons, but tools that should universally increase the capacity for arriving at justified beliefs. Future research should explore how such debiasing interventions can be designed, and how effective they are at achieving the goal of debiasing. Doing so would result in greater theoretical diversity. The debiasing literature is currently (implicitly) mostly concerned with instrumental rationality and rational choice theory, but the epistemological perspective on the quality of belief formation is just as important and just as deserving of scientific attention.