UAV Detection of Sinapis arvensis Infestation in Alfalfa Plots Using Simple Vegetation Indices from Conventional Digital Cameras

Abstract

1. Introduction

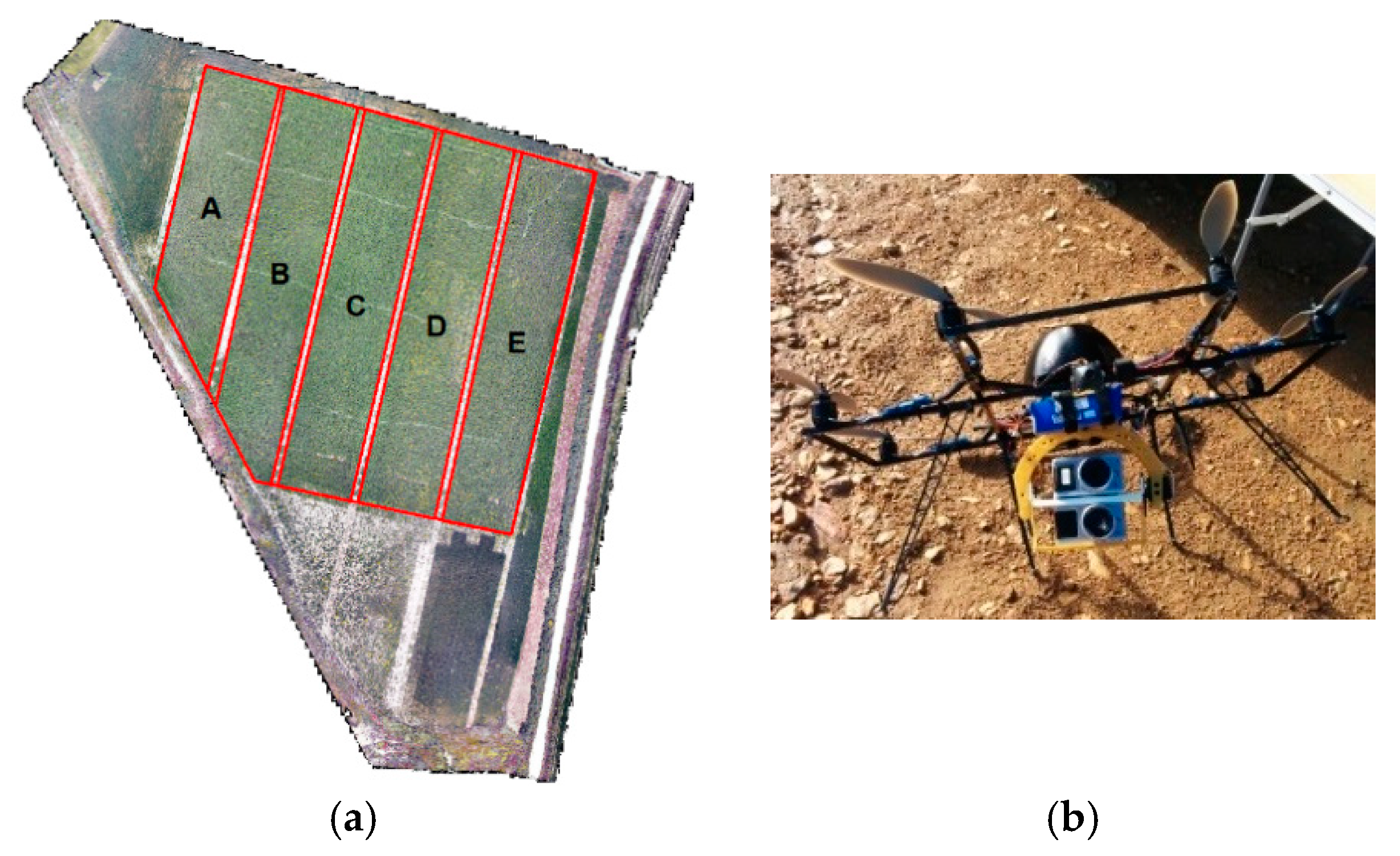

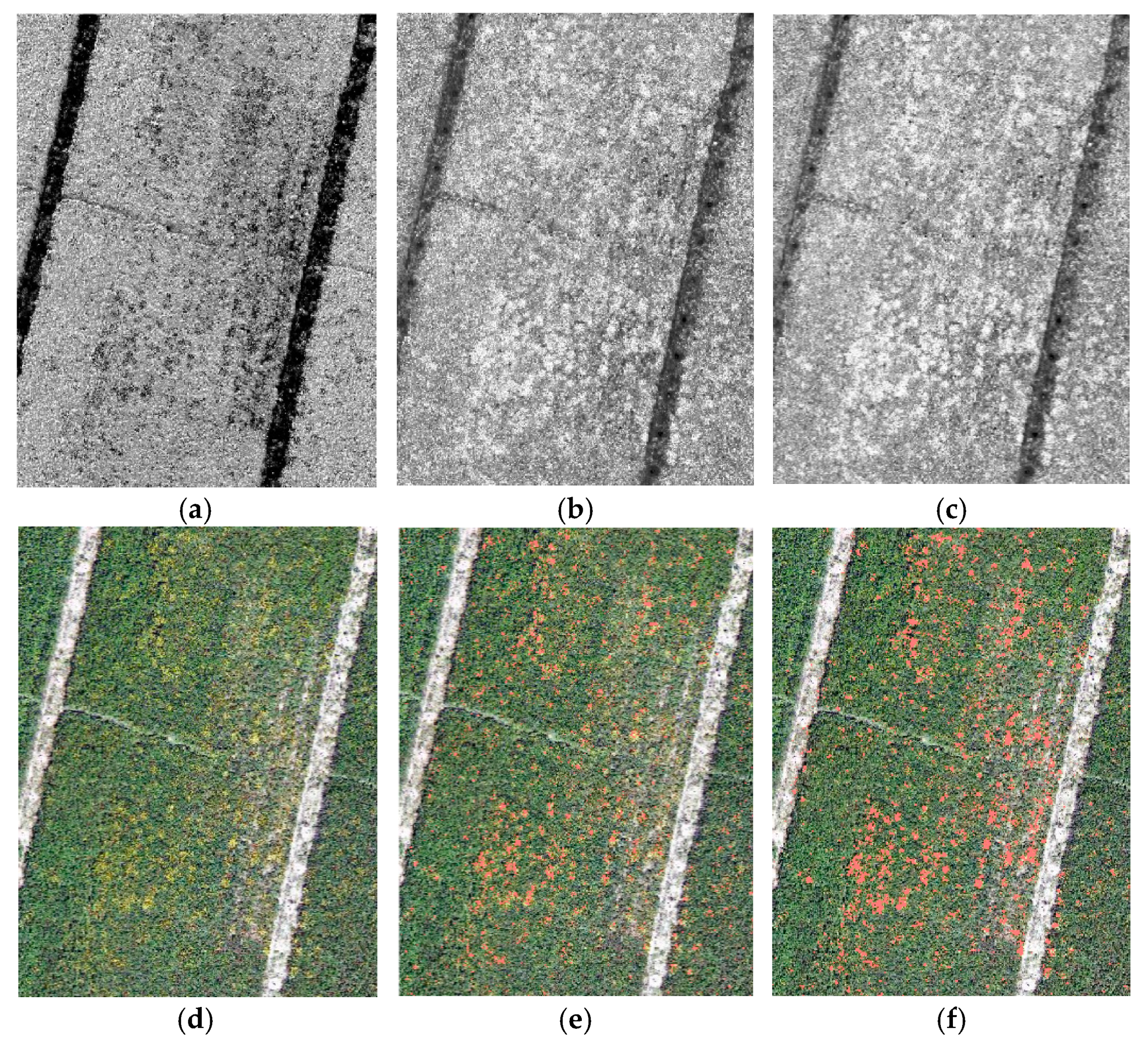

2. Materials and Methods

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Eisenbeiß, H. UAV Photogrammetry; ETH Zurich: Zurich, Switzerland, 2009.

2. Teoh, C.; Hassan, D.A.; Radzali, M.M.; Jafni, J. Prediction of SPAD chlorophyll meter readings using remote sensing technique. J. Trop. Agric. Food Sci. 2012, 40, 127–136.

3. Kerle, N.; Heuel, S.; Pfeifer, N. Real-time data collection and information generation using airborne sensors. In Geospatial Information Technology for Emergency Response; Li, J., Zlatanova, S., Eds.; CRC Press: London, UK, 2008; pp. 59–90.

4. Hunt, E.R.; Cavigelli, M.; Daughtry, C.S.T.; McMurtrey, J.E.; Walthall, C.L. Evaluation of Digital Photography from Model Aircraft for Remote Sensing of Crop Biomass and Nitrogen Status. Precis. Agric. 2005, 6, 359–378.

5. Saberioon, M.M.; Amin, M.S.M.; Anuar, A.R.; Gholizadeh, A.; Wayayok, A.; Khairunniza-Bejo, S. Assessment of rice leaf chlorophyll content using visible bands at different growth stages at both the leaf and canopy scale. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 35–45.

6. Moorthy, I.; Miller, J.R.; Berni, J.A.J.; Zarco-Tejada, P.; Hu, B.; Chen, J. Field characterization of olive (Olea europaea L.) tree crown architecture using terrestrial laser scanning data. Agric. For. Meteorol. 2011, 151, 204–214.

7. Mercado-Luna, A.; Rico-García, E.; Lara-Herrera, A.; Soto-Zarazúa, G.; Ocampo-Velázquez, R.; Guevara-González, R.; Herrera-Ruiz, G.; Torres-Pacheco, I. Nitrogen determination on tomato (Lycopersicon esculentum Mill.) seedlings by color image analysis (RGB). Afr. J. Biotechnol. 2010, 9, 5326–5332.

8. Sakamoto, T.; Gitelson, A.A.; Nguy-Robertson, A.L.; Arkebauer, T.J.; Wardlow, B.D.; Suyker, A.E.; Verma, S.B.; Shibayama, M. An alternative method using digital cameras for continuous monitoring of crop status. Agric. For. Meteorol. 2012, 154–155, 113–126.

9. Hillnhütter, C.; Mahlein, A.K.; Sikora, R.A.; Oerke, E.C. Remote sensing to detect plant stress induced by Heterodera schachtii and Rhizoctonia solani in sugar beet fields. Field Crops Res. 2011, 122, 70–77.

10. Motohka, T.; Nasahara, K.N.; Oguma, H.; Tsuchida, S. Applicability of Green-Red Vegetation Index for Remote Sensing of Vegetation Phenology. Remote Sens. 2010, 2, 2369–2387.

11. Kazmi, W.; Garcia-Ruiz, F.J.; Nielsen, J.; Rasmussen, J.; Jørgen Andersen, H. Detecting creeping thistle in sugar beet fields using vegetation indices. Comput. Electron. Agric. 2015, 112, 10–19.

12. Garcia-Ruiz, F.J.; Wulfsohn, D.; Rasmussen, J. Sugar beet (Beta vulgaris L.) and thistle (Cirsium arvensis L.) discrimination based on field spectral data. Biosyst. Eng. 2015, 139, 1–15.

13. Bunce, J.A.; Ziska, L.H. Crop ecosystem responses to climatic change: Crop/weed interactions. In Climate Change and Global Crop Productivity; Reddy, K.R., Hodges, H., Eds.; CABI Pub: Wallingford, Oxon, UK, 2000; pp. 333–352.

14. Oerke, E.C. Crop losses to pests. J. Agric. Sci. 2005, 144, 31–43.

15. Pérez-Ortiz, M.; Peña, J.M.; Gutiérrez, P.A.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. A semi-supervised system for weed mapping in sunflower crops using unmanned aerial vehicles and a crop row detection method. Appl. Soft Comput. 2015, 37, 533–544.

16. Abbott, D.; Torres-Sánchez, J.; López-Granados, F.; De Castro, A.I.; Peña-Barragán, J.M. Configuration and specifications of an unmanned aerial vehicle (UAV) for early site specific weed management. PLoS ONE 2013, 8, e58210.

17. López-Granados, F.; Torres-Sánchez, J.; Serrano-Pérez, A.; de Castro, A.I.; Mesas-Carrascosa, F.J.; Peña, J.-M. Early season weed mapping in sunflower using UAV technology: Variability of herbicide treatment maps against weed thresholds. Precis. Agric. 2015, 17, 183–199.

18. De Castro, A.; Torres-Sánchez, J.; Peña, J.; Jiménez-Brenes, F.; Csillik, O.; López-Granados, F. An automatic random forest-OBIA algorithm for early weed mapping between and within crop rows using UAV imagery. Remote Sens. 2018, 10, 285.

19. Mateen, A.; Zhu, Q. Weed detection in wheat crop using UAV for precision agriculture. Pak. J. Agric. Sci. 2019, 56, 809–817.

20. Hameed, S.; Amin, I. Detection of weed and wheat using image processing. In Proceedings of the 2018 IEEE 5th International Conference on Engineering Technologies and Applied Sciences (ICETAS), Bangkok, Thailand, 22–23 November 2018; pp. 1–5.

21. Huang, Y.; Reddy, K.N.; Fletcher, R.S.; Pennington, D. UAV low-altitude remote sensing for precision weed management. Weed Technol. 2017, 32, 2–6.

22. López-Granados, F.; Torres-Sánchez, J.; De Castro, A.-I.; Serrano-Pérez, A.; Mesas-Carrascosa, F.-J.; Peña, J.-M. Object-based early monitoring of a grass weed in a grass crop using high resolution UAV imagery. Agron. Sustain. Dev. 2016, 36, 67.

23. Barrero, O.; Perdomo, S.A. RGB and multispectral UAV image fusion for Gramineae weed detection in rice fields. Precis. Agric. 2018, 19, 809–822.

24. Stroppiana, D.; Villa, P.; Sona, G.; Ronchetti, G.; Candiani, G.; Pepe, M.; Busetto, L.; Migliazzi, M.; Boschetti, M. Early season weed mapping in rice crops using multi-spectral UAV data. Int. J. Remote Sens. 2018, 39, 5432–5452.

25. Lottes, P.; Khanna, R.; Pfeifer, J.; Siegwart, R.; Stachniss, C. UAV-based crop and weed classification for smart farming. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3024–3031.

26. Louargant, M.; Jones, G.; Faroux, R.; Paoli, J.-N.; Maillot, T.; Gée, C.; Villette, S. Unsupervised classification algorithm for early weed detection in row-crops by combining spatial and spectral information. Remote Sens. 2018, 10, 761.

27. Pflanz, M.; Nordmeyer, H.; Schirrmann, M. Weed mapping with UAS imagery and a Bag of Visual Words based image classifier. Remote Sens. 2018, 10, 1530.

28. Sánchez-Sastre, L.F.; Alte da Veiga, N.M.S.; Ruiz-Potosme, N.M.; Carrión-Prieto, P.; Marcos-Robles, J.L.; Navas-Gracia, L.M.; Martín-Ramos, P. Assessment of RGB vegetation indices to estimate chlorophyll content in sugar beet leaves in the final cultivation stage. AgriEngineering 2020, 2, 128–149.

29. Kawashima, S.; Nakatani, M. An Algorithm for Estimating Chlorophyll Content in Leaves Using a Video Camera. Ann. Bot. 1998, 81, 49–54.

30. Murphy, R.J.; Underwood, A.J.; Jackson, A.C. Field-based remote sensing of intertidal epilithic chlorophyll: Techniques using specialized and conventional digital cameras. J. Exp. Mar. Biol. Ecol. 2009, 380, 68–76.

31. Penuelas, J.; Gamon, J.A.; Griffin, K.L.; Field, C.B. Assessing community type, plant biomass, pigment composition, and photosynthetic efficiency of aquatic vegetation from spectral reflectance. Remote Sens. Environ. 1993, 46, 110–118.

32. Rouse, J.; Haas, R.; Schell, J.; Deering, D. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

33. Fuentes-Peñailillo, F.; Ortega-Farias, S.; Rivera, M.; Bardeen, M.; Moreno, M. Comparison of vegetation indices acquired from RGB and multispectral sensors placed on UAV. In Proceedings of the 2018 IEEE International Conference on Automation/XXIII Congress of the Chilean Association of Automatic Control (ICA-ACCA), Concepcion, Chile, 17–19 October 2018; pp. 1–6.

34. Hassanein, M.; El-Sheimy, N. An efficient weed detection procedure using low-cost UAV imagery system for precision agriculture applications. In Proceedings of the ISPRS TC I Mid-Term Symposium “Innovative Sensing—From Sensors to Methods and Applications”, Karlsruhe, Germany, 10–12 October 2018; pp. 181–187.

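| Name | Equation | Reference |
|---|---|---|
| Normalized Pigment Chlorophyll Ratio Index (NPCI) | (R−B)/(R+B) | [31] |
| | (R−B)/(R+G+B) | [29] |
| Normalized Difference Vegetation Index (NDVI) | (NIR−R)/(NIR+R) | [32] |

R, G and B are the red, green and blue channel values of the image, and NIR is the near-infrared band value.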
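The indices in the table above are simple per-pixel band ratios. The following is a minimal sketch of how they could be computed, assuming pixel values are passed as plain numbers and an image as nested lists of (R, G, B) tuples. The function names `npci`, `rb_over_rgb`, `ndvi` and `rgb_index_map` are hypothetical and not taken from the authors' processing chain, and returning 0.0 for a zero denominator (an all-black pixel) is likewise an assumption made here.

```python
def _ratio(numerator, denominator):
    # Assumption: a zero denominator (e.g. an all-black pixel) yields 0.0.
    return numerator / denominator if denominator != 0 else 0.0


def npci(r, g, b):
    """(R - B)/(R + B); g is accepted only so all RGB indices share one signature."""
    return _ratio(r - b, r + b)


def rb_over_rgb(r, g, b):
    """(R - B)/(R + G + B)."""
    return _ratio(r - b, r + g + b)


def ndvi(nir, r):
    """(NIR - R)/(NIR + R); needs a near-infrared band in addition to red."""
    return _ratio(nir - r, nir + r)


def rgb_index_map(image, index_fn):
    """Apply an RGB index to an image given as rows of (R, G, B) tuples."""
    return [[index_fn(r, g, b) for (r, g, b) in row] for row in image]


if __name__ == "__main__":
    pixel = (200, 100, 50)  # made-up 8-bit digital numbers, for illustration only
    print(npci(*pixel))                   # (200 - 50)/(200 + 50) = 0.6
    print(round(rb_over_rgb(*pixel), 4))  # 150/350, about 0.4286
```

Because both RGB ratios divide a band difference by a band sum, multiplying all three channels by the same gain leaves them unchanged, so 8-bit digital numbers and values rescaled to [0, 1] give identical results. NDVI, by contrast, requires a near-infrared band, which a conventional RGB camera does not record.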

| Obtained from | Subplot A (m²) | Subplot A (%) | Subplot B (m²) | Subplot B (%) | Subplot C (m²) | Subplot C (%) | Subplot D (m²) | Subplot D (%) | Subplot E (m²) | Subplot E (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| (R−B)/(R+B+G) | 29 | 1.1 | 54 | 1.6 | 60 | 1.6 | 158 | 4.3 | 68 | 1.9 |
| NDVI and (R−B)/(R+B+G) | 29 | 1.1 | 42 | 1.2 | 27 | 0.8 | 158 | 4.3 | 46 | 1.3 |
| (R−B)/(R+B) | 28 | 1.1 | 55 | 1.6 | 77 | 2.1 | 131 | 3.5 | 54 | 1.5 |
| NDVI and (R−B)/(R+B) | 25 | 1.0 | 38 | 1.1 | 21 | 0.6 | 142 | 3.8 | 40 | 1.1 |
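The table above reports, for each index or index combination, an area in m² and a percentage for subplots A–E. A minimal sketch of how such figures can be derived from a classified index map is given below, assuming square pixels with a known ground sampling distance, a hypothetical threshold rule (`value > threshold`) and percentages taken relative to subplot area; the threshold values, pixel size and function names are illustrative, not the authors'. The pixelwise logical AND in `combine_and` is only one possible reading of "NDVI and (R−B)/(R+B)": in subplot D the combined result (142 m²) exceeds the (R−B)/(R+B) result alone (131 m²), which a strict intersection of the same masks could not produce, so the authors' combination rule may differ.

```python
def threshold_mask(index_map, threshold):
    """Flag pixels whose index value exceeds a threshold (hypothetical rule)."""
    return [[value > threshold for value in row] for row in index_map]


def combine_and(mask_a, mask_b):
    """Pixelwise logical AND of two equally sized boolean masks."""
    if len(mask_a) != len(mask_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(mask_a, mask_b)
    ):
        raise ValueError("masks must have the same shape")
    return [[a and b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(mask_a, mask_b)]


def flagged_area(mask, gsd_m):
    """Ground area (m^2) of flagged pixels, assuming square pixels of side gsd_m metres."""
    flagged = sum(1 for row in mask for value in row if value)
    return flagged * gsd_m ** 2


def percent_of_plot(area_m2, plot_area_m2):
    """Express an area as a percentage of the (assumed) subplot area."""
    return 100.0 * area_m2 / plot_area_m2


if __name__ == "__main__":
    # Toy 2 x 3 index maps with made-up values, for illustration only.
    rgb_index = [[0.10, 0.35, 0.50], [0.40, 0.05, 0.45]]
    ndvi_map = [[0.70, 0.20, 0.80], [0.60, 0.90, 0.10]]
    mask = combine_and(threshold_mask(rgb_index, 0.3), threshold_mask(ndvi_map, 0.5))
    area = flagged_area(mask, 0.5)            # 2 flagged pixels x 0.25 m^2
    print(area, percent_of_plot(area, 2.0))   # prints 0.5 25.0
```

Keeping the thresholding, the combination step and the area conversion as separate functions makes it easy to swap in a different combination rule without touching the area bookkeeping.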

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sánchez-Sastre, L.F.; Casterad, M.A.; Guillén, M.; Ruiz-Potosme, N.M.; Veiga, N.M.S.A.d.; Navas-Gracia, L.M.; Martín-Ramos, P. UAV Detection of Sinapis arvensis Infestation in Alfalfa Plots Using Simple Vegetation Indices from Conventional Digital Cameras. AgriEngineering 2020, 2, 206-212. https://doi.org/10.3390/agriengineering2020012


Chicago/Turabian Style: Sánchez-Sastre, Luis Fernando, Mª Auxiliadora Casterad, Mónica Guillén, Norlan Miguel Ruiz-Potosme, Nuno M. S. Alte da Veiga, Luis Manuel Navas-Gracia, and Pablo Martín-Ramos. 2020. "UAV Detection of Sinapis arvensis Infestation in Alfalfa Plots Using Simple Vegetation Indices from Conventional Digital Cameras" AgriEngineering 2, no. 2: 206-212. https://doi.org/10.3390/agriengineering2020012