3.1. Forecast Generation

A multivariate normal distribution is used to model the one-step-ahead probability distribution of the VAR model innovation process. Latin hypercube samples from the multivariate normal distribution in conjunction with the VAR model parameter estimates and historical data are used to compute one-step-ahead probability distributions for the exchange rate returns.
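The sampling step for the VAR model can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes a VAR(1) with intercept `c`, coefficient matrix `A` and innovation covariance `sigma` (all hypothetical names), and it builds correlated normal draws by mapping Latin hypercube points through the normal quantile function and a Cholesky factor, which is one common construction.

```python
import numpy as np
from scipy.stats import norm, qmc

def var_one_step_samples(c, A, y_last, sigma, n_samples=1000, seed=0):
    """Sample y_{t+1} = c + A y_t + e with e ~ N(0, sigma) (assumed VAR(1))."""
    y_last = np.asarray(y_last, dtype=float)
    k = y_last.size
    # Latin hypercube points in the unit cube, one column per series
    u = qmc.LatinHypercube(d=k, seed=seed).random(n_samples)
    # Map each column to a standard normal via the inverse CDF
    z = norm.ppf(u)
    # Impose the innovation covariance with its Cholesky factor
    e = z @ np.linalg.cholesky(sigma).T
    # Add the conditional mean; each row is one draw of next-period returns
    return c + A @ y_last + e
```

Each column of the returned array is a sample from the one-step-ahead predictive distribution of one series; its empirical CDF approximates the predictive cdf.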

An estimate of the independent factor process of the VAR-LiNGAM model is obtained from its estimated innovation process. Kernel density estimation with a normal probability window is used to estimate the probability distributions of the VAR-LiNGAM independent factor processes. Latin hypercube samples from the independent factor process distributions are transformed into one-step-ahead distributions of the VAR-LiNGAM innovation processes. The innovation process distribution samples plus the VAR-LiNGAM model parameter estimates and historical data are used to compute one-step-ahead probability distributions for the exchange rate returns.

Kernel density estimation with a normal probability window is used to estimate the probability distribution of each AR innovation process. Latin hypercube samples from the innovation process distributions plus the AR model estimates and historical data are used to compute one-step-ahead probability distributions for the independent components. The forecasted probability distributions of the independent components are transformed into forecasted probability distributions of the exchange rate returns as described in Equation (23).
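The kernel density step for a single innovation series can be sketched as below. Several details are assumptions rather than statements from the text: the bandwidth uses Silverman's rule of thumb, the KDE is sampled by numerically inverting its CDF on a grid, and the AR model is taken to be an AR(1) with hypothetical parameters `phi0` and `phi1`. The transformation of Equation (23) is not reproduced here.

```python
import numpy as np
from scipy.stats import norm, qmc

def kde_lhs_samples(residuals, n_samples=1000, seed=0):
    """Stratified samples from a Gaussian-kernel density estimate."""
    residuals = np.asarray(residuals, dtype=float)
    n = residuals.size
    # Silverman's rule-of-thumb bandwidth (assumed; not stated in the text)
    h = 1.06 * residuals.std(ddof=1) * n ** (-1 / 5)
    # A Gaussian KDE is an equal-weight mixture of normals, so its CDF
    # is the average of the component normal CDFs
    grid = np.linspace(residuals.min() - 5 * h, residuals.max() + 5 * h, 2000)
    cdf = norm.cdf((grid[:, None] - residuals[None, :]) / h).mean(axis=1)
    # One-dimensional Latin hypercube: one uniform draw per stratum
    u = qmc.LatinHypercube(d=1, seed=seed).random(n_samples).ravel()
    # Invert the CDF by linear interpolation on the grid
    return np.interp(u, cdf, grid)

def ar1_one_step(phi0, phi1, s_last, innovation_samples):
    """One-step-ahead samples for an assumed AR(1) component."""
    return phi0 + phi1 * s_last + innovation_samples
```

The same `kde_lhs_samples` helper would apply to each estimated independent factor of the VAR-LiNGAM model before the samples are mapped back to innovations.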

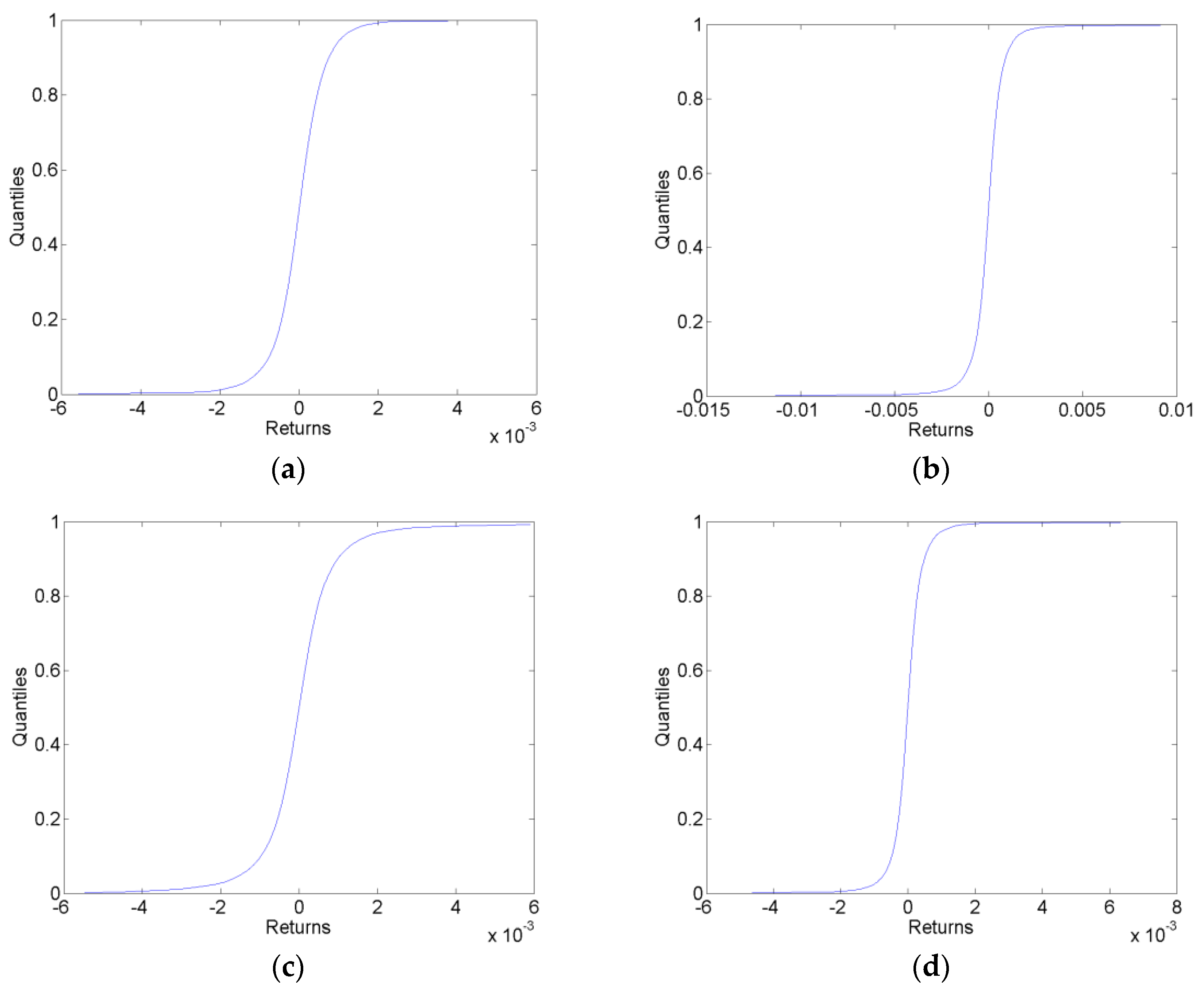

Sample one-step-ahead cumulative predictive distributions in each of the forecast data sets for the VAR-LiNGAM model are shown in Figure 1. These sample predictive cdfs are similar to those generated by the VAR and AR models.

3.2. Forecast Evaluation

The only forecasts considered here are those for the CHF/EUR exchange rate; the forecasts of other currencies are not evaluated. For the computation of calibration functions, the fractile of each outcome is determined by comparing the outcome to the estimated cumulative predictive distribution. These fractiles are used in conjunction with the estimated cumulative predictive distributions to compute the calibration functions. The calibration functions are both plotted and used to compute goodness-of-fit test statistics.
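The fractile and calibration computations can be illustrated with a short sketch. It assumes that each predictive distribution is represented by a set of samples and that the calibration function is the observed relative frequency of fractiles at or below each nominal probability; this is one common construction and may differ in detail from the one used here. The function names are hypothetical.

```python
import numpy as np

def fractile(outcome, forecast_samples):
    """Empirical predictive CDF evaluated at the realized outcome."""
    return np.mean(np.asarray(forecast_samples) <= outcome)

def calibration_function(fractiles, probs):
    """Observed relative frequency of fractiles <= each nominal probability."""
    fractiles = np.asarray(fractiles, dtype=float)
    return np.array([(fractiles <= p).mean() for p in probs])
```

For a well calibrated forecaster the fractiles are approximately uniform on [0, 1], so the calibration function lies close to the 45-degree line.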

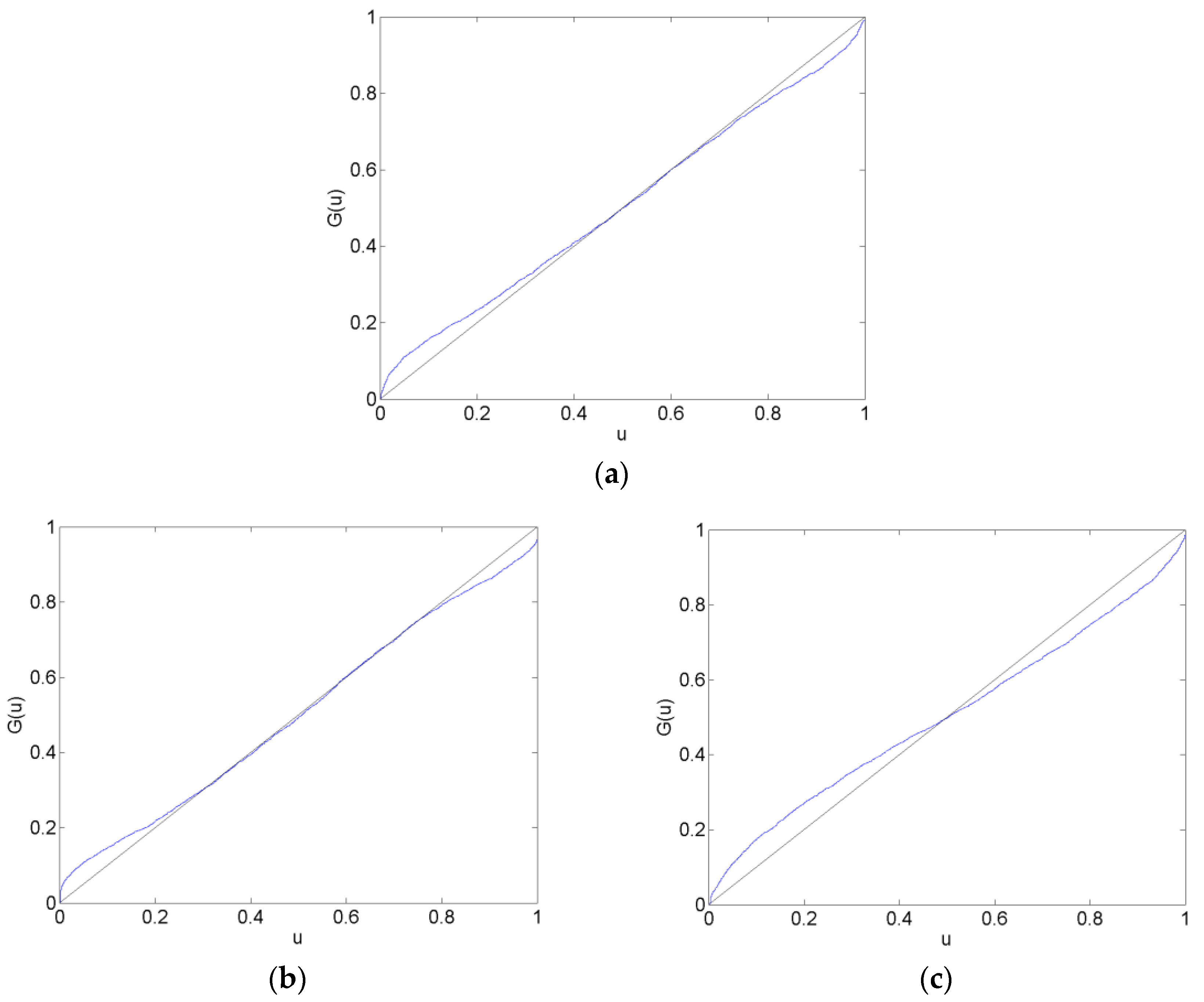

Calibration plots of the CHF/EUR for the before, surrounding, after and long after forecast data sets are in

Figure 2,

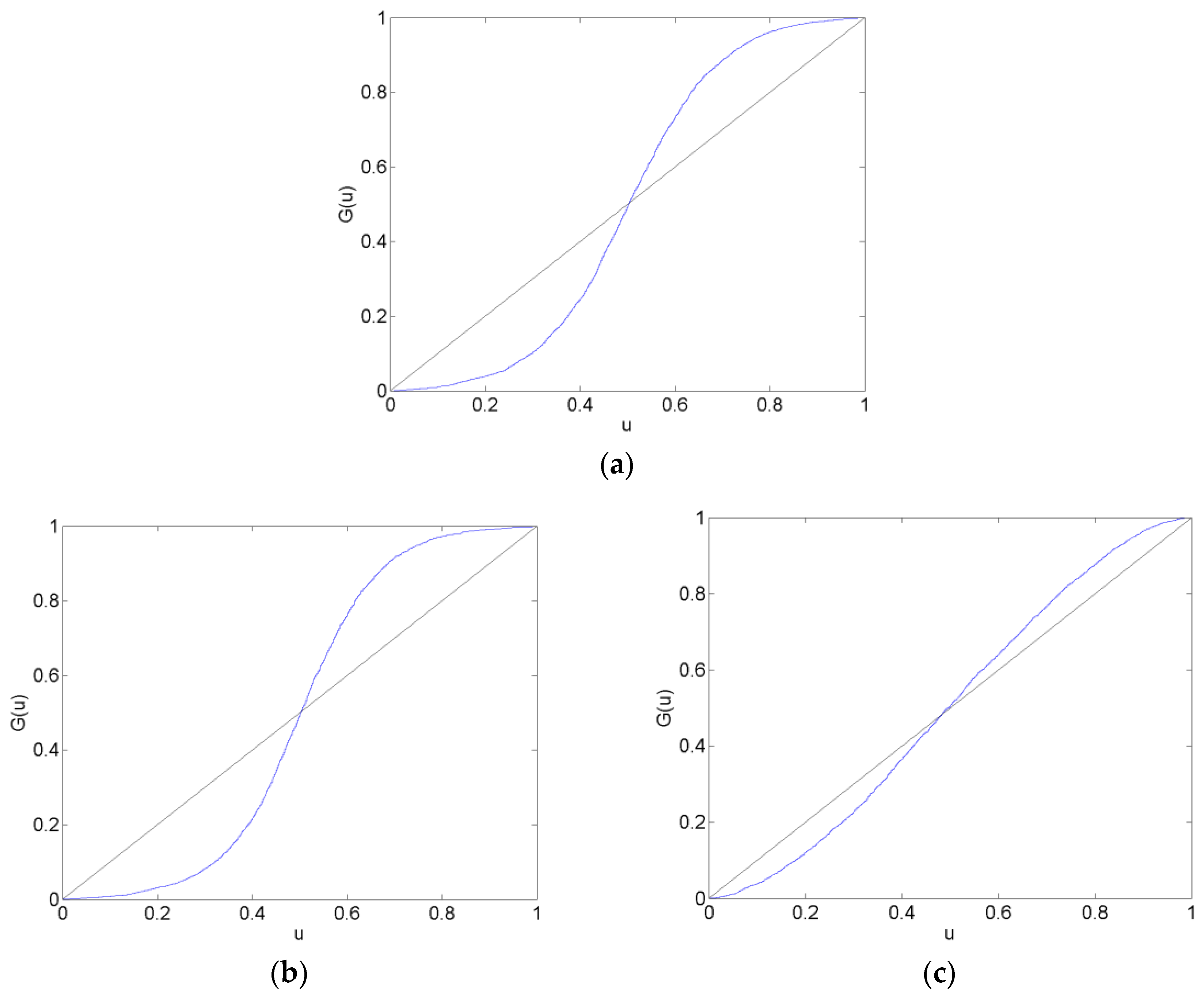

Figure 3,

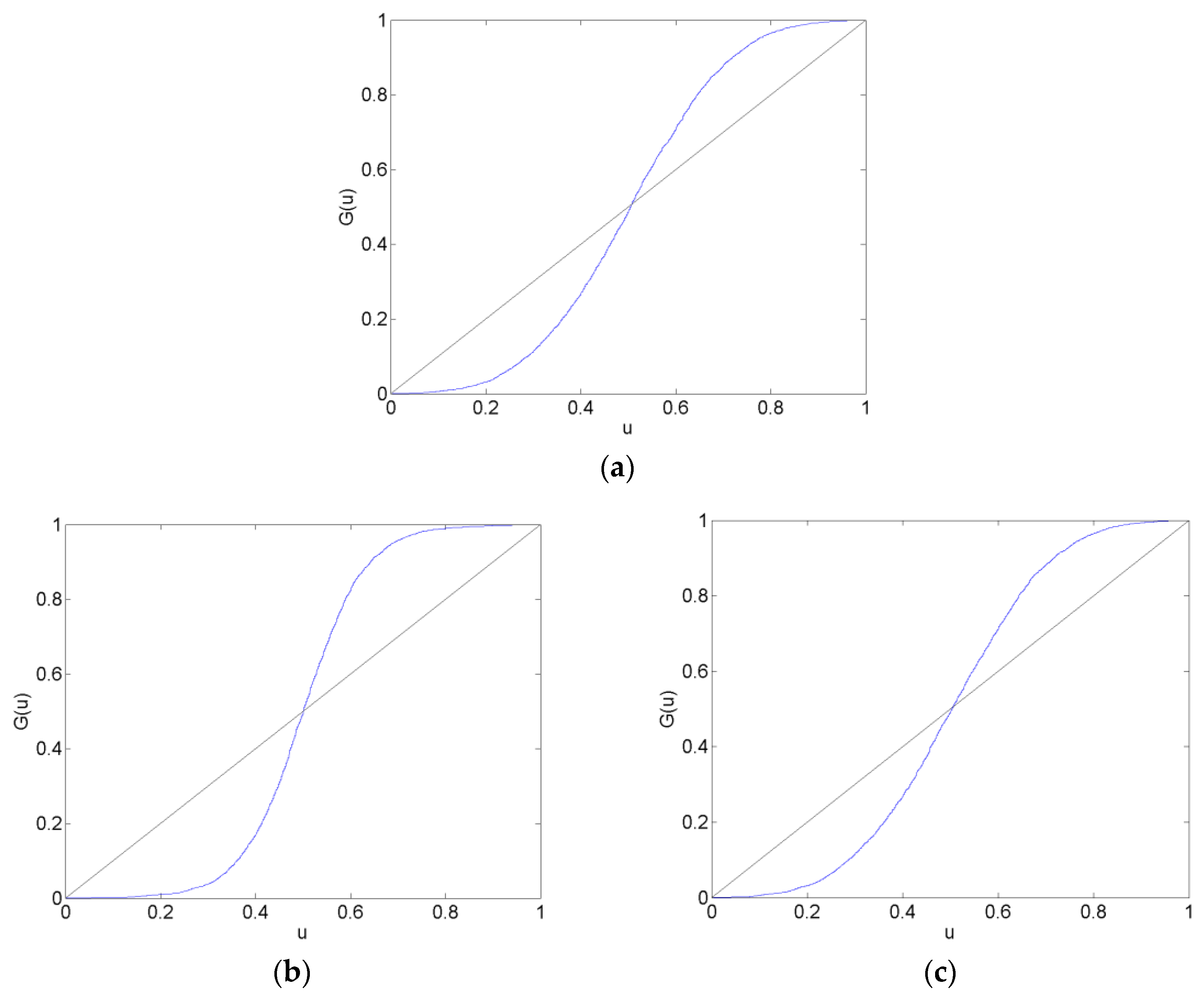

Figure 4 and

Figure 5. The calibration plots for the AR, VAR and VAR-LiNGAM models in a particular forecast data set in addition to a 45-degree line for reference are shown in each figure. Underconfidence in probability assessments is indicated where the calibration function maps above the 45-degree line, while overconfidence in assessments is indicated where the calibration function maps below the 45-degree line.

For the before forecast data set, each model exhibits underconfidence on the lower end of the calibration function and overconfidence on the upper end. For the surrounding forecast data set, the AR and VAR models exhibit overconfidence on the lower end of the calibration function and underconfidence on the upper end; the extreme ends of both of these calibration functions show the opposite behavior. The calibration function for the VAR-LiNGAM model on the surrounding data set displays the opposite behavior of the AR and VAR models with underconfidence on the lower end and overconfidence on the upper end. For the after and long after data sets, the calibration functions for all models exhibit a large degree of overconfidence on the lower end and a large degree of underconfidence on the upper end.

Overall, the calibration plots show that all models are better calibrated (i.e., map closer to the 45-degree line) in the before and surrounding data sets than in the after and long after data sets. Forecasts are less calibrated after the placement of the floor on the CHF/EUR exchange rate; it appears that the Swiss National Bank’s market intervention had a negative effect on the calibration of the time-series models in the longer run.

Chi-squared goodness-of-fit tests are performed to test each time-series model for calibration during each forecast data set. The null hypothesis that the forecasts are well calibrated is rejected with a p-value near zero in every data set for every time-series model; no time-series model forecasts are well calibrated in any of the time periods under consideration. Some of the calibration functions appear to map closely to the 45-degree reference line, such as in Figure 2a,b. Nevertheless, none of the calibration functions shown in any of Figure 2, Figure 3, Figure 4 and Figure 5 reflect forecasts that are well calibrated according to the goodness-of-fit test.
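A chi-squared test of this kind can be sketched as follows, assuming the fractiles are binned into equal-width intervals on [0, 1] and compared with the equal counts expected under perfect calibration. The number of bins (`n_bins`) is a hypothetical choice; the text does not state the binning used.

```python
import numpy as np
from scipy.stats import chisquare

def calibration_chi2(fractiles, n_bins=20):
    """Chi-squared test of the fractiles against a uniform distribution."""
    # Count fractiles in equal-width bins covering [0, 1]
    counts, _ = np.histogram(fractiles, bins=n_bins, range=(0.0, 1.0))
    # With no expected frequencies given, chisquare assumes equal counts
    stat, pvalue = chisquare(counts)
    return stat, pvalue
```

A small p-value rejects the null hypothesis that the fractiles are uniform, that is, that the forecasts are well calibrated.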

In some of Figure 2, Figure 3, Figure 4 and Figure 5, the calibration problems appear to be in the tails of the distributions, such as in Figure 2a,b. Generating forecasts with distributions estimated via kernel density estimation with a normal probability window might be the source of this bad tail behavior. In the calibration plots that show bad tail behavior, the miscalibration of each tail is in the opposite direction; for example, in Figure 2b, the calibration function shows underconfidence on the low end and overconfidence on the upper end. If the normal probability window were to blame for this poor tail performance, it would likely produce tails that were too heavy or too light at both ends of the distribution. For instance, if kernel density estimation with a normal probability window produced a distribution with tails that were too light to reflect the distribution of returns, then the corresponding calibration function would show underconfidence at both ends of the plot. Additionally, since other figures show that the problem with calibration is more in the central part of the distribution than in the tails, such as Figure 3a,b, it is unlikely that the normal probability window is the culprit for bad calibration.

In addition to the calibration tests, the mean-squared error (MSE) and the probability score metrics are used to rank the probability forecasting systems. The mean-squared errors of each model’s forecasts are reported in Table 2, and the probability scores of each model’s forecasts are reported in Table 3. The VAR and VAR-LiNGAM models both have the same MSE on each data set because they are both driven by the innovations of the VAR model (see Equation (26)).

The MSE results indicate that no model consistently outperforms the others. The VAR and VAR-LiNGAM models perform the best in the before and long after data sets, while the AR model performs the best in the surrounding and after data sets. This may indicate that all models have roughly the same forecasting performance or that the VAR and VAR-LiNGAM models perform better in periods isolated from structural change.

In contrast, the probability score rankings show that the VAR model outperforms the other models in all but the long after data set, in which the VAR-LiNGAM model’s performance is slightly better. Because the simple VAR model outperforms the other models that are built using independent components, the probability score results indicate that there is no gain in forecasting performance when using independent components. Additionally, the probability score ranks the AR forecasts higher than the VAR-LiNGAM forecasts in all periods but the last; this may indicate that in some cases the multivariate VAR-LiNGAM model provides no advantage over the univariate AR model.

The VAR and VAR-LiNGAM models generate better forecasts in the long after period according to the MSE and the probability score. This is some indication that the VAR-LiNGAM model performs better than the AR model after market intervention has been in effect for some period of time.

3.3. Change in the Causal Structure

The results from the LiNGAM algorithm provide evidence that the causal relationships among the exchange rates changed after the intervention by the Swiss National Bank.

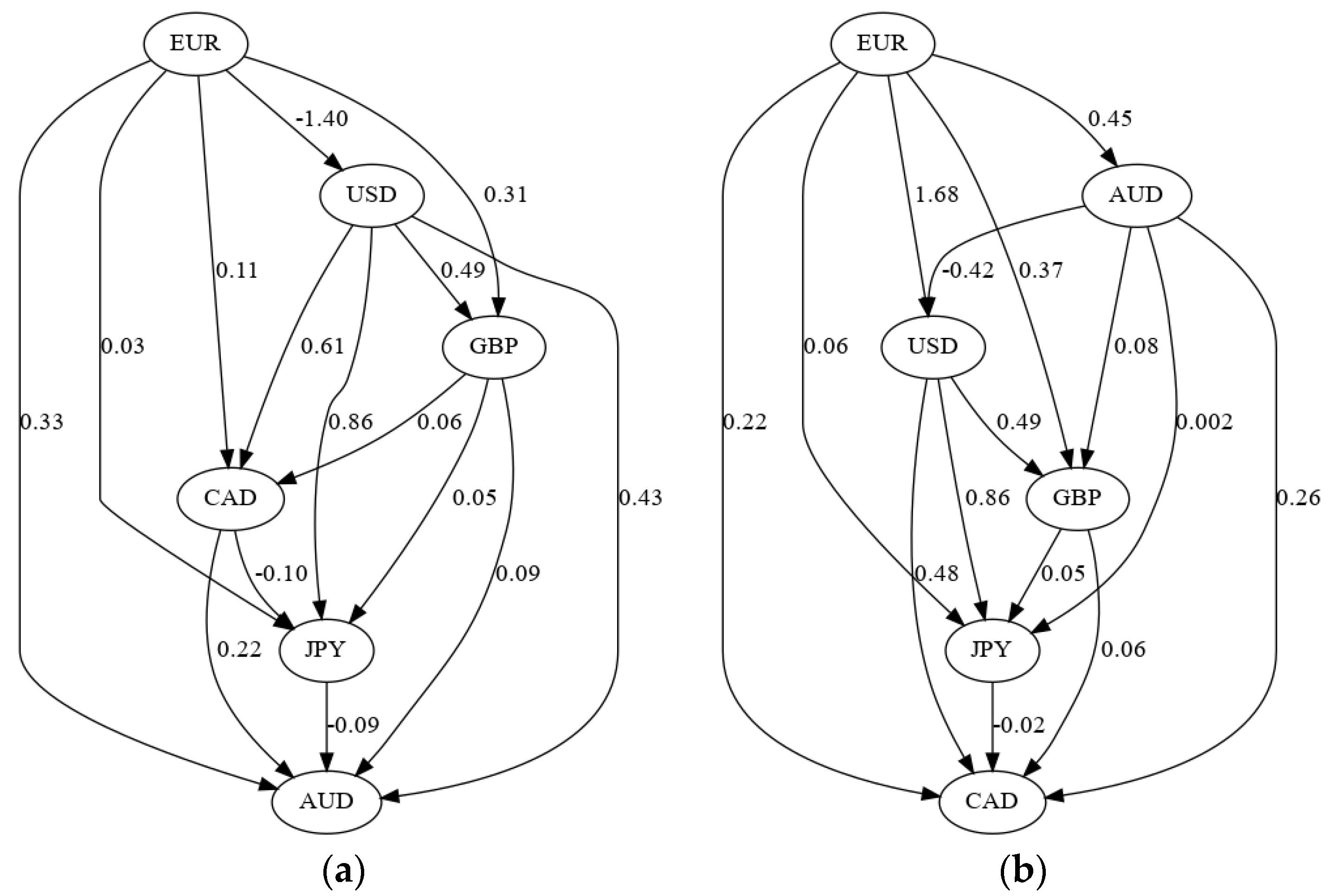

Table A5 reports the causal effect matrices for the different estimation data sets. These matrices show the causal effects from currencies listed in the columns to the currencies listed in the rows. For example, the first row of Table A5a shows that the AUD exchange rate is positively affected by the CAD, EUR, GBP and USD and negatively affected by the JPY. The causal effects contained in these matrices can be represented graphically by directed acyclic graphs. The causal structure of the currencies before the SNB intervention is shown in Figure 6a and the structure after the intervention is shown in Figure 6b.

Figure 6 shows that several causal relationships reversed direction following the SNB intervention:

CAD → AUD changed to AUD → CAD

GBP → AUD changed to AUD → GBP

JPY → AUD changed to AUD → JPY

USD → AUD changed to AUD → USD

CAD → JPY changed to JPY → CAD

and the EUR → USD relationship changed sign from negative to positive. These graphs show that the policy change by the SNB altered the causal structure underlying the six major currencies. This result adds supporting evidence to the Lucas critique [28], wherein Lucas hypothesized that a policy change could change the structure of an econometric model.
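Reading directed edges off a causal effect matrix, and detecting reversals between two such matrices, can be sketched as below. The sketch follows the column-to-row convention described above; the function names and the tolerance `tol` are hypothetical, and the small matrices in the usage example are placeholder values chosen for illustration, not the entries of Table A5.

```python
import numpy as np

def causal_edges(B, names, tol=1e-8):
    """Map (source, target) -> effect, where B[i, j] is the effect of j on i."""
    B = np.asarray(B, dtype=float)
    edges = {}
    for i, target in enumerate(names):
        for j, source in enumerate(names):
            # Skip the diagonal and entries that are numerically zero
            if i != j and abs(B[i, j]) > tol:
                edges[(source, target)] = B[i, j]
    return edges

def reversed_edges(before, after):
    """Edges present before whose direction is flipped afterwards."""
    return [(s, t) for (s, t) in before
            if (t, s) in after and (s, t) not in after]

# Placeholder matrices for three currencies (illustrative values only)
names = ["AUD", "CAD", "JPY"]
B_before = [[0.0, 0.4, -0.2], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
B_after = [[0.0, 0.0, 0.0], [0.3, 0.0, 0.0], [0.1, 0.0, 0.0]]
flipped = reversed_edges(causal_edges(B_before, names),
                         causal_edges(B_after, names))
```

With these placeholder inputs, `flipped` lists the CAD → AUD and JPY → AUD edges, since both point the other way in the second matrix.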

It is difficult to say why this causal change occurred. One possible explanation is that the Swiss franc is a safe haven currency and a funding currency for currency carry trades [29], so the changes could be due to a change in the risk of the Swiss franc that affects its usefulness in either of these roles. A typical carry trade in 2011–2012 would have invested in the Australian dollar (a high-yielding currency) and been funded by the Swiss franc (a low-yielding currency) [29]. Thus, the changing causal relationships with the Australian dollar could be due to a change in the risk characteristics of the carry trade when the SNB imposed its floor on the CHF/EUR.