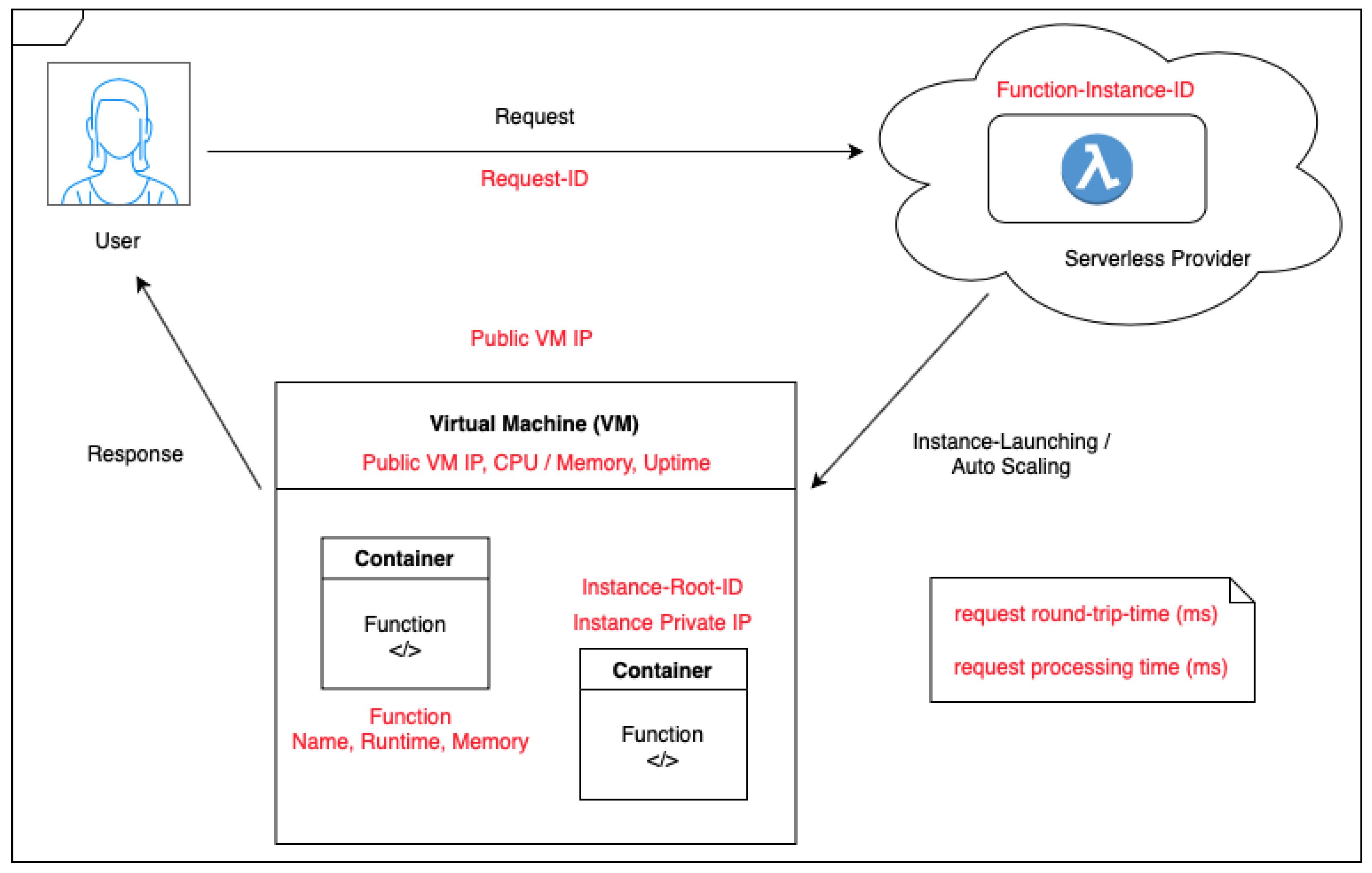

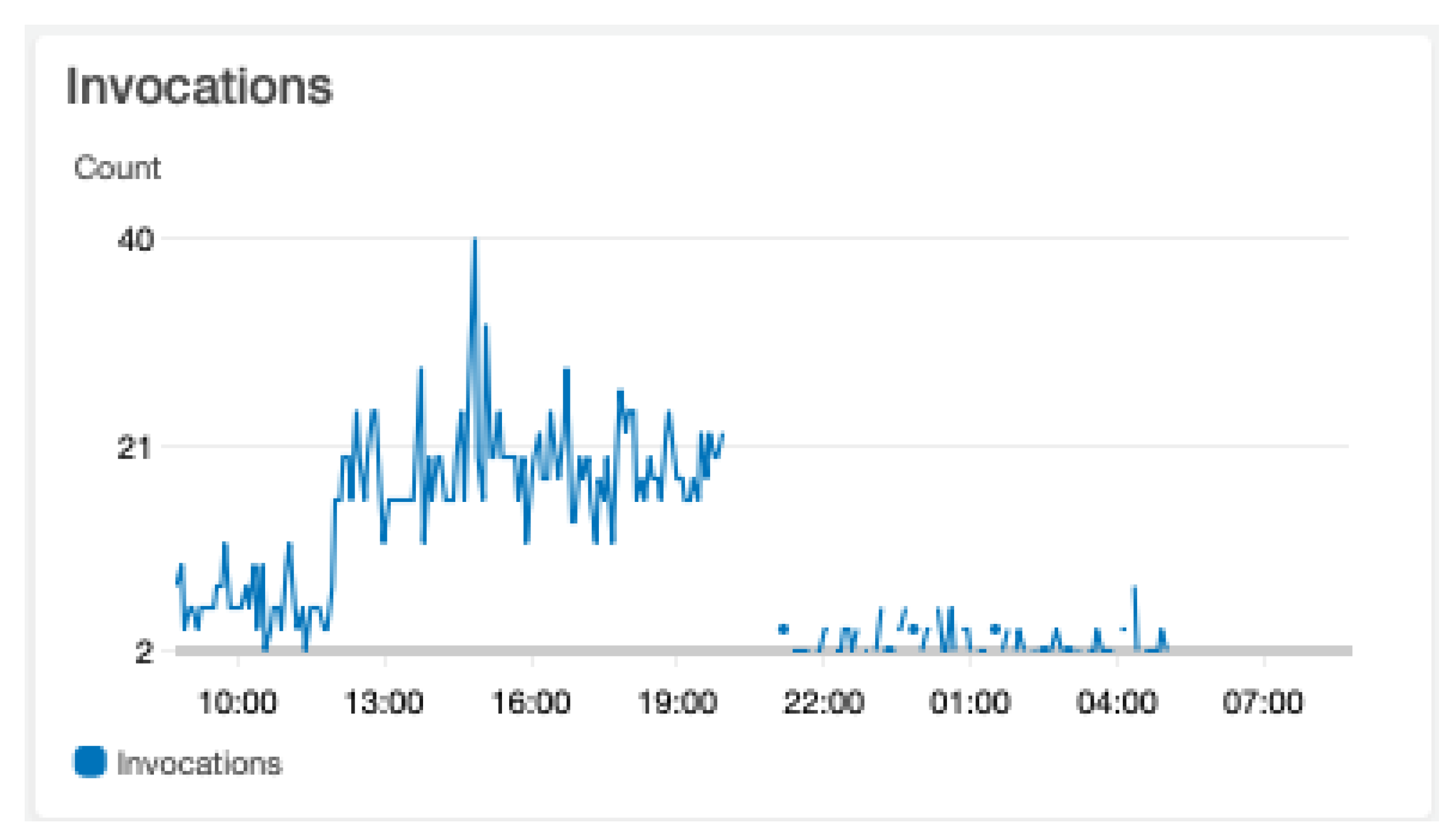

The second set of experiments focused on the networking aspects of the scaling practices, measuring performance directly through the request-response rate and the allocation of VMs to a single function instance. The results from the measurement functions were used to identify private instance IPs and public VM IPs and thus to determine, at large scale, how incoming workloads were distributed across the backend infrastructure. Lambda was invoked through HTTP calls to an AWS API Gateway address, and the performance of the underlying TCP stack was tested as well. A higher invocation rate challenges the network and requires tighter latency bounds, as concurrency and contention play an important part in performance. The data collected over a 24-h run on AWS Lambda infrastructure traffic and performance are visualised as distributions of the IP addresses and requests over the interval considered.

4.2.2. Findings

Further processing of the dataset was carried out in RStudio to determine results regarding auto-scaling and its impact on load balancing. As concluded from the previous tests, a simultaneous change of the private and public VM IP addresses indicates that a different instance handled the request. The private instance IPs were therefore isolated from the logs and subjected to a time series analysis to determine how the VM changed with the incoming request rate.
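The change detection described above was carried out in R; purely as an illustration, it can be sketched in Python. The sketch assumes that each log entry has already been parsed into a timestamp, a private IP and a public IP (the `Record` layout and the function name `vm_changes` are hypothetical) and that requests arrive as a single stream. Following the criterion above, a VM change is counted only when both IPs differ from the previous record.

```python
from datetime import datetime
from typing import NamedTuple


class Record(NamedTuple):
    """One parsed log entry (hypothetical layout)."""
    timestamp: datetime
    private_ip: str
    public_ip: str


def vm_changes(records: list[Record]) -> list[datetime]:
    """Return the timestamps at which a different VM starts serving requests.

    A change is counted only when the private and the public IP both differ
    from the previous record, mirroring the criterion used in the text.
    """
    ordered = sorted(records, key=lambda r: r.timestamp)
    changes = []
    for prev, curr in zip(ordered, ordered[1:]):
        if curr.private_ip != prev.private_ip and curr.public_ip != prev.public_ip:
            changes.append(curr.timestamp)
    return changes
```

With concurrent requests, two VMs are active at every timestamp, so the records would first have to be split into one stream per concurrent request before applying this check.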

For Interval 1, with a request rate of one invocation every 1–5 min over 5 h, only one IP address was identified across 50 observations. Subsequent requests were therefore sent to the same VM, and it can be concluded that the instance remained warm over the entire period. The result is displayed in Figure 10.

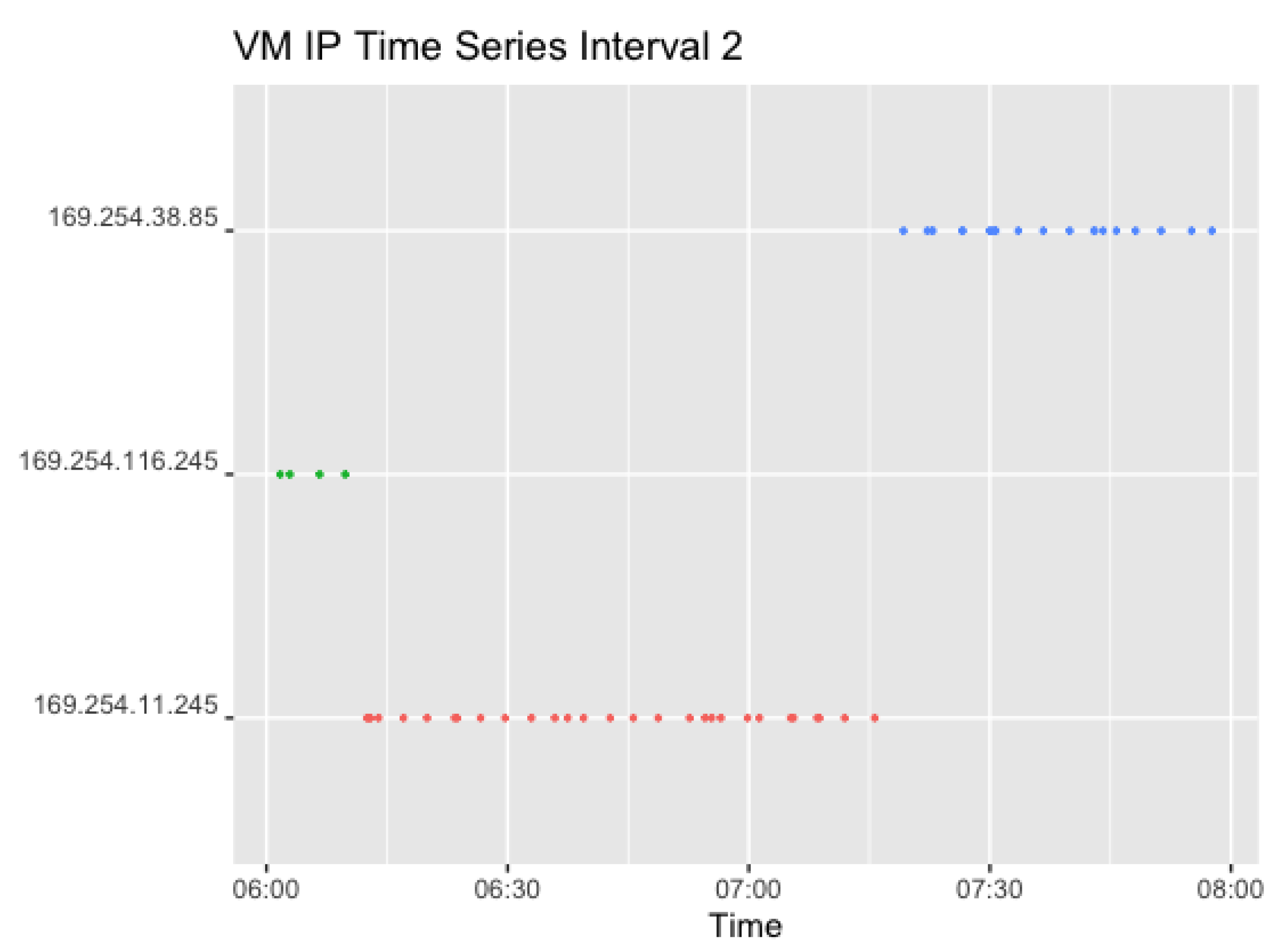

Figure 11 shows the corresponding plots for Interval 2. Interval 2 sends a request with a random sleep time of 0–4 min, which resulted in 54 observations. Each color indicates a different VM; the VM changes twice in the given interval. The first IP (169.254.116.245) was carried over from Interval 1.

Figure 12 shows the corresponding plots for Interval 3. Interval 3 sends two concurrent requests with a random sleep time of 0–3 min, which resulted in 306 observations. Here, the graph shows two IPs for each timestamp.

The IPs in Interval 3 changed three times within the given time frame, although Interval 3 also lasted 2 h longer than Interval 2. Only one VM was replaced at a time, as can first be seen at around 9:30 pm. Furthermore, the IP at the end of Interval 2 (169.254.38.85), and thus its VM, continued to be used in Interval 3 and changed after a total of around 2 h of usage. The second VM also changed after around 2 h.

Figure 13 and

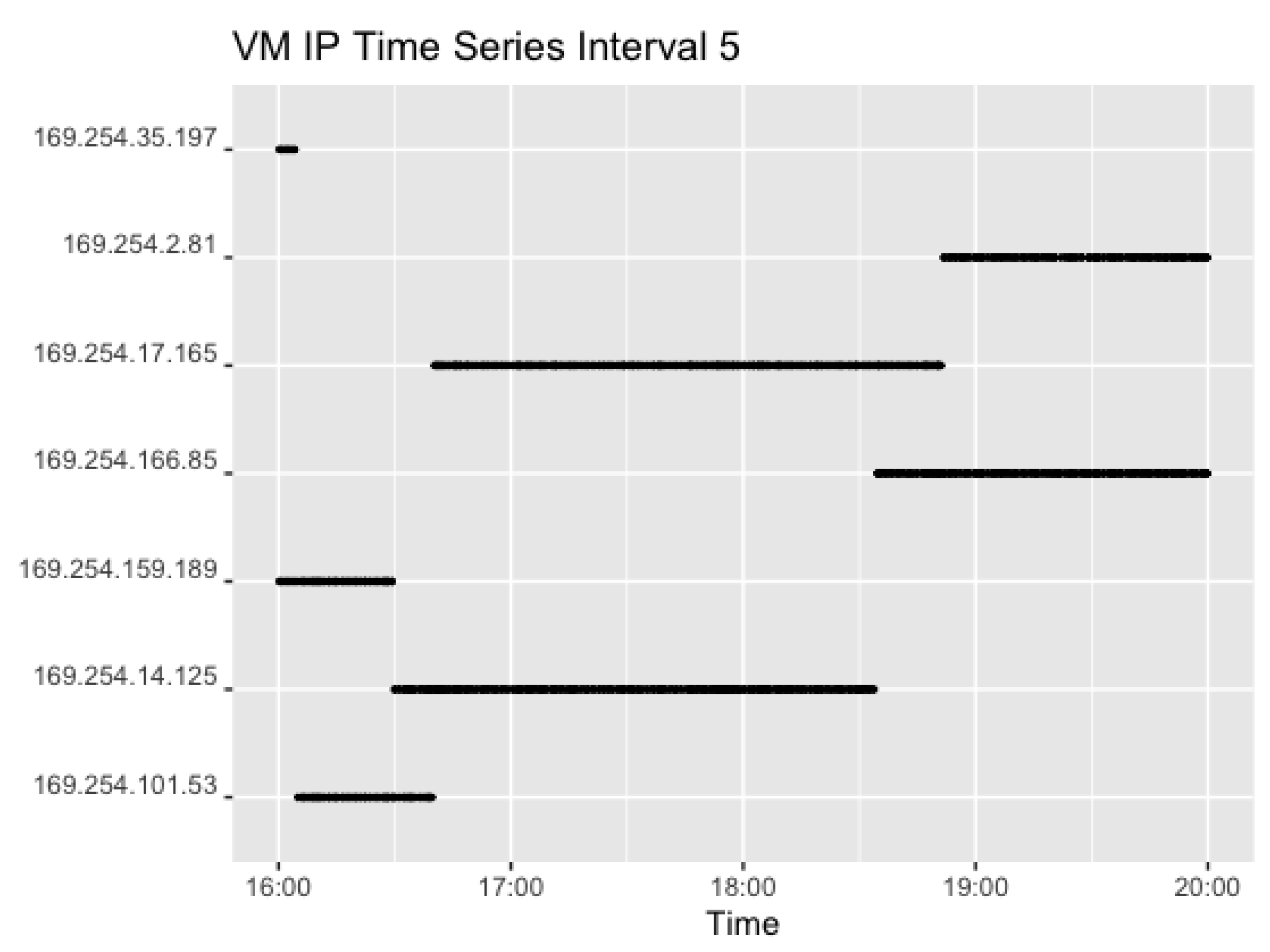

Figure 14 show the corresponding plots for Interval 4 and 5. Interval 4 sent four concurrent requests in a 0–3-min interval while Interval 5 sent two concurrent requests in an interval of 0–2 min. Hence,

Figure 14 shows two IPs for each timestamp. That resulted in 930 observations for Interval 4 and 920 observations for Interval 5.

Interval 4 shows the use of the set of IP addresses following the end of Interval 3, but added two extra as the number of concurrent requests rose. Again, the set of VMs changed after around 2 h. In Interval 5, however, all four IPs previously used in Interval 4 stayed warm, but seem to have been reused after pausing. This is most likely caused by the high request rate, where the instances are needed when the previous requests have not yet returned. Lastly,

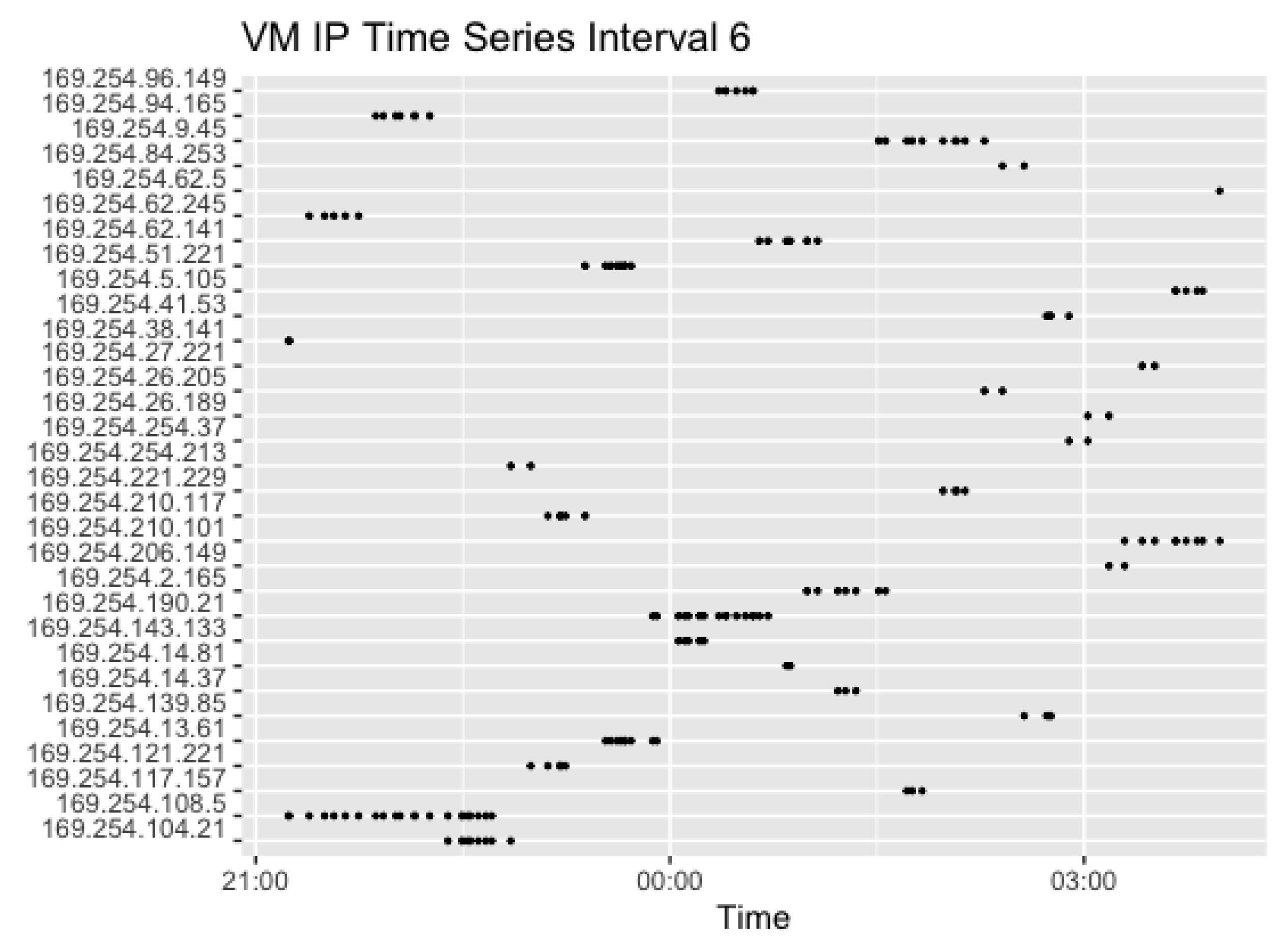

Figure 15 shows the corresponding plot for Interval 6. In the last interval, the request rate of two concurrent requests with a random sleep time of 0–10 min resulted in 174 observations overall.

In this interval, the IP address changed more frequently. This is likely caused by the low request rate, with gaps of up to 10 min between requests. With a shorter randomly generated sleep time, the VM may still be idle and available for reuse. With a longer wait period, however, the likelihood that the VM goes cold and a new VM has to be launched increases.

Counting the overall usage of instance IP addresses, Table 10 gives an overview, for each interval, of the number of invocations, indicated by the number of instances used, and of the number of VMs, indicated by the public IPs used.

There are 51 distinct IP addresses in the dataset. Their distribution shows that, unlike in the Measurement Framework results, VMs were reused more than twice and were reused immediately. During Interval 2 (0–2 min sleep time), Interval 3 (0–3 min sleep time), Interval 4 (0–4 min sleep time) and Interval 5 (0–1 min sleep time), the IP addresses remained stable for a certain period before changing to another set. This happened after around 2 h, regardless of the continuing request rate. In Interval 6, however, the addresses changed with almost every invocation. This indicates that AWS tends to schedule subsequent requests to the same warm VMs for the most part. However, the longer the wait time between requests, the more often the IP addresses change, which indicates that instances go cold after around 10 min. Between intervals, the VMs used for the last invocations of the previous interval continued to be used, and more instances were launched when the request rate was higher. In addition, AWS was not observed to reuse VMs after the settings were changed, and it does not appear to place more than one instance on a VM, which confirms the results from the measurement test.
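A per-interval count like the one in Table 10 can be produced by grouping the parsed records by interval and counting distinct addresses. The following is a minimal sketch, assuming records are `(interval, private_ip, public_ip)` tuples; the function name and the tuple layout are hypothetical, and the addresses in the test are placeholders (203.0.113.0/24 is reserved for documentation).

```python
from collections import defaultdict


def ip_usage_per_interval(records):
    """Count invocations and distinct private/public IPs per interval.

    `records` is an iterable of (interval, private_ip, public_ip) tuples,
    a hypothetical simplification of the parsed logs.
    """
    invocations = defaultdict(int)
    private_ips = defaultdict(set)
    public_ips = defaultdict(set)
    for interval, private_ip, public_ip in records:
        invocations[interval] += 1
        private_ips[interval].add(private_ip)
        public_ips[interval].add(public_ip)
    return {
        interval: {
            "invocations": invocations[interval],
            "instances": len(private_ips[interval]),  # distinct private IPs
            "vms": len(public_ips[interval]),          # distinct public IPs
        }
        for interval in invocations
    }
```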

A major finding of the time series analysis is that VMs appear to change regardless of changes in the request rate. That is, AWS was observed to allocate subsequent requests to another VM automatically after a certain time, independently of changes in the incoming request frequency. This also appears to hold for concurrent requests, where only one VM IP changes at a time. When traffic is allocated to another resource, the previous instance goes cold and a new instance must be launched on the new VM. This increases the execution time and thus explains the changing duration displayed in Figure 9. However, the data do not provide enough detail on the exact timing of the changes, which remains subject to further investigation. It can be concluded that each instance has not only an idle time but also a lifetime.

Architecture: As in the previous results from the measurement framework tests, the Traffic Distribution Analysis logs again show the same private instance IP for the Lambda functions. As the address belongs to a link-local network, it is possible that the IP is nevertheless reassigned within clusters, since it can be allocated independently within each network domain. This would suggest that AWS allocates the IP not to an instance but to an account. However, this requires further investigation.
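The private addresses reported in this section (for example 169.254.116.245, 169.254.38.85 and 169.254.76.1) all fall into the IPv4 link-local block 169.254.0.0/16 defined in RFC 3927, whose addresses are only meaningful on the local network segment. Python's standard `ipaddress` module can confirm this; the helper name `is_link_local` is hypothetical, and 203.0.113.10 is a documentation address used as a non-link-local contrast.

```python
import ipaddress


def is_link_local(ip: str) -> bool:
    """True if `ip` lies in a link-local range (169.254.0.0/16 for IPv4)."""
    return ipaddress.ip_address(ip).is_link_local


for ip in ["169.254.116.245", "169.254.38.85", "169.254.76.1", "203.0.113.10"]:
    print(ip, is_link_local(ip))
```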

In terms of the underlying hardware, all 2432 observations were analysed for CPU and memory usage. In line with the findings from the Function Distribution Measurement, the majority of requests were served by the same type of server, with an Intel(R) Xeon(R) Processor @ 2.50GHz and 2 vCPUs. However, 16 observations were placed on an Intel(R) Xeon(R) Processor @ 3.00GHz.

Table 11 summarises the range of the collected data.
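Inside the function's Linux environment, the CPU model and the number of logical CPUs can be read from /proc/cpuinfo. A minimal sketch, assuming the usual `key : value` layout of that file; the function name is hypothetical, and this is not necessarily how the measurement function parsed the file.

```python
from collections import Counter
from pathlib import Path


def cpu_summary(cpuinfo_text: str) -> tuple[str, int]:
    """Return (most common model name, number of logical CPUs) from /proc/cpuinfo text."""
    models = Counter()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "model name":
            models[value.strip()] += 1  # one "model name" line per logical CPU
    if not models:
        return ("unknown", 0)
    model, _ = models.most_common(1)[0]
    return model, sum(models.values())


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():  # only available on Linux
        print(cpu_summary(path.read_text()))
```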

According to AWS, CPU time is shared in fractions of milliseconds among the running instances. The share itself is proportional to memory, meaning that, in theory, a function configured with more memory receives more CPU power [4]. In the case of the file upload stream, however, the share is tied to the allocated 128 MB of memory, of which no more than 77 MB is used, as described previously. The cached memory variable shows that, across all executions, usage ranged between 71 kB and 77 MB.
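Under the proportional model described above, the CPU share can be estimated from the configured memory. Current AWS Lambda documentation states that a function configured with 1,769 MB has the equivalent of one vCPU; that figure is used here as an assumption and may not reflect the allocation in place when these experiments were run. The helper below is a hypothetical illustration and ignores the upper memory limit.

```python
# Assumption: ~1,769 MB of configured memory corresponds to one full vCPU
# (figure from current AWS Lambda documentation; it may change over time).
MB_PER_VCPU = 1769


def estimated_vcpu_share(memory_mb: int) -> float:
    """Estimate the fraction of a vCPU available to a function, assuming the
    CPU share scales linearly with configured memory."""
    if memory_mb <= 0:
        raise ValueError("memory_mb must be positive")
    return memory_mb / MB_PER_VCPU


share = estimated_vcpu_share(128)
print(f"128 MB -> {share:.3f} vCPU ({share:.1%})")
```

Under this assumption, the 128 MB configuration of the file upload stream would receive roughly 7% of one vCPU.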

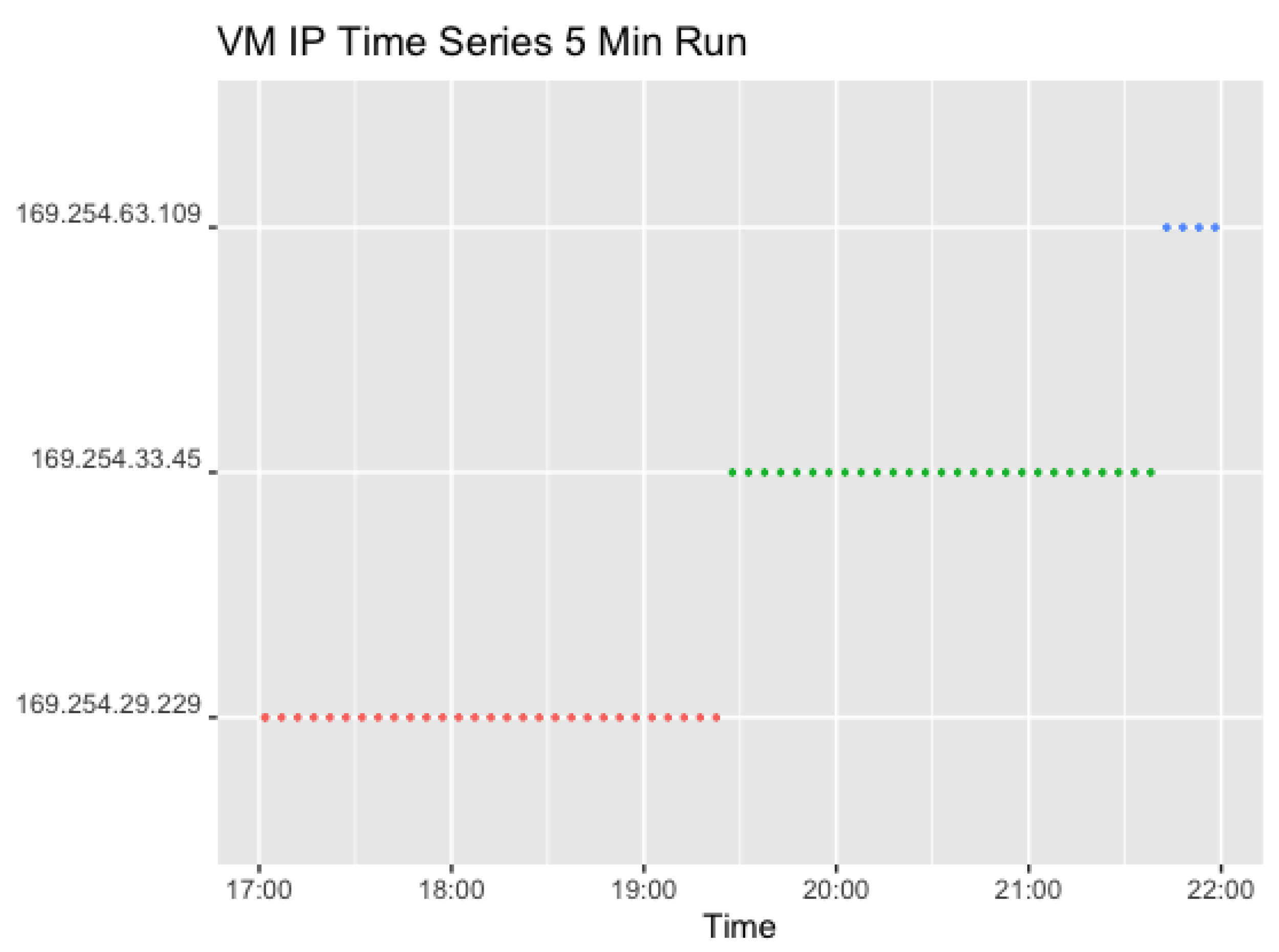

Additional Interval Run: To explore these findings further, a second test run was carried out, specifically targeting the automatic change of VMs. Two sets over 4 h, with requests sent at fixed intervals of either 5 or 12 min, were used to investigate resource allocation with warm and cold VMs and the handling of subsequent calls without the influence of randomisation. The test found three distinct IPs for the 5-min run with single requests and 51 distinct IPs for the 12-min run with two concurrent requests. There were 61 invocations in the first run and 51 in the second.

As the request settings did not contain any randomised values, the data can be used to further validate the hypothesis from the previous experiment that AWS automatically changes the VM after a certain period, despite a steady request rate and correspondingly warm instances. The results for the 5-min run are illustrated in Figure 16.

Figure 16 again indicates a change of VM after a little over 2 h, which occurs twice in the dataset. Accordingly, the initDuration variable logged by AWS Lambda appeared twice. As the public and private IPs change at the same time, this further supports the conclusion from previous experiments that each private VM IP is attached to a single public IP, and that the public IP does not simply change through random IP allocation.

The first change in IP addresses can be observed after the first 30 invocations, and the second after the following 27 invocations. The experiment started on 27 July 2020 at 17:01:43 (local time); it was ensured that there had been no invocations in the 30 min before the start. The first change of IP addresses can be identified at 19:27:32 (local time) on the same day. Subtracting these times gives a period of 2 h 25 min 49 s. For the second change, the same calculation shows that the VM was running for 2 h 15 min 31 s before changing.
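The elapsed time can be checked with Python's standard `datetime` module using the two timestamps given above. Both are treated as naive local times, which is sufficient here because they fall on the same day with no clock change in between.

```python
from datetime import datetime

start = datetime(2020, 7, 27, 17, 1, 43)          # experiment start (local time)
first_change = datetime(2020, 7, 27, 19, 27, 32)  # first change of IP addresses

elapsed = first_change - start  # a timedelta
print(elapsed)  # prints 2:25:49
```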

Table 12 summarises the “REPORT” variables of the previous request and of the request where the VM first changes.

In the example in Table 12, the total execution duration for the new-VM event increased by around 364.23%, which increased the billed duration as well. The initDuration made up 17.21% of the total duration, which is similar to the percentage obtained in the measurement framework tests. For the subsequent request, the execution duration dropped back to a normal level (374.34 ms).
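The two percentages follow the usual definitions of relative change and share. Written out, with $d_{\mathrm{prev}}$ the total duration of the request before the VM change, $d_{\mathrm{new}}$ the total duration of the first request on the new VM and $d_{\mathrm{init}}$ its initDuration (symbols introduced here for illustration only), they read:

```latex
\Delta d = \frac{d_{\mathrm{new}} - d_{\mathrm{prev}}}{d_{\mathrm{prev}}} \times 100\,\%,
\qquad
s_{\mathrm{init}} = \frac{d_{\mathrm{init}}}{d_{\mathrm{new}}} \times 100\,\%
```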

For the 12-min run, in line with the findings of Interval 6, the data show 51 distinct IP addresses, i.e. a new pair at each invocation. The longer wait time between workloads therefore leads to the instances going cold, and a new instance is initialised every time.

“Hello World” Run: A separate function that only returns a simple Hello World message was deployed for comparison with the File Upload Stream. According to CloudWatch Insights, the execution time of the Hello World function lay between 15 and 79 ms, with a mean of 36.6 ms. The File Upload Stream's mean execution time of 346.83 ms is around 848% higher, owing to the integration of the API Gateway and S3 and the calculations on the underlying /proc files.
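The relative increase can be reproduced from the two mean execution times reported above. A small sketch; the function name `percent_increase` is hypothetical.

```python
def percent_increase(baseline_ms: float, new_ms: float) -> float:
    """Relative increase of `new_ms` over `baseline_ms`, in percent."""
    return (new_ms - baseline_ms) / baseline_ms * 100


hello_world_mean = 36.6      # ms, Hello World function
upload_stream_mean = 346.83  # ms, File Upload Stream
print(f"{percent_increase(hello_world_mean, upload_stream_mean):.1f} %")  # prints "847.6 %"
```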

To further investigate the automatic change of VMs, the request interval was set to 1 and 10 min for this test run, resulting in 288 observations overall. The results again confirm the previous finding that VMs change automatically after around 2 h. However, with the 10-min interval the VM did not change with every request. A separate test with an 11-min interval again showed no change, whereas a test with a 12-min interval did. It can therefore be concluded that an instance has an idle time of around 12 min, i.e. it stays warm for more than 11 min without requests.

Figure 17 summarises the results.
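The two effects observed so far, an idle timeout of roughly 11 to 12 min and an automatic VM change after around 2 h, can be combined into a simple model that predicts which requests of a fixed-rate schedule would hit a cold start. This is a hypothetical sketch: the parameter values (an idle timeout of 11.5 min and a lifetime of 2 h) are assumptions chosen to fall within the ranges reported here, and the rule that a VM is replaced at the first request after its lifetime has elapsed is a simplification, not documented AWS behaviour.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceModel:
    idle_timeout_s: float  # hypothetical: idle time after which an instance goes cold
    lifetime_s: float      # hypothetical: age after which the VM is replaced


def cold_start_indices(request_times_s: list[float], model: InstanceModel) -> list[int]:
    """Return the indices of requests predicted to need a new instance/VM."""
    cold = []
    launched_at = last_request = None
    for i, t in enumerate(request_times_s):
        if (
            launched_at is None                         # very first request
            or t - last_request > model.idle_timeout_s  # instance went cold
            or t - launched_at > model.lifetime_s       # VM reached its lifetime
        ):
            cold.append(i)
            launched_at = t
        last_request = t
    return cold


model = InstanceModel(idle_timeout_s=11.5 * 60, lifetime_s=2 * 3600)
every_5_min = [i * 300 for i in range(61)]
print(cold_start_indices(every_5_min, model))  # prints [0, 25, 50]
```

With 10-min gaps the model keeps a single warm instance, whereas 12-min gaps make every request cold, matching the qualitative pattern described above.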

The Hello World function was also deployed in a different region, eu-west-2, to investigate possible changes in traffic. As for all IPs collected in the study, the public IP addresses identified lay within the AWS ranges 3.x, 18.x, 32.x, 35.x, 52.x and 54.x, but were located in London, UK. However, the private instance IP remained the same as in the us-west-1 region (169.254.76.1).

Postman Results: With the REST API Gateway for Lambda, an HTTP POST call can be made over TCP, where a three-way handshake is completed before the request is sent through the web server. In this way, the function can be triggered directly from the client’s browser, including a data transfer. A sub-experiment with Postman [18] was carried out to further break down the network protocol stack for the TCP packets of the File Upload Stream. It determines the time taken to complete the three-way handshake (SYN-ACK time) and the Round-Trip time for different request timings, in order to identify the impact of the gateway on load balancing and latency. In addition, these results may serve to determine the effect of Cold-Start and the client socket on network latency.
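Postman reports the TCP handshake as a separate phase. Outside Postman, the connect time can be approximated in Python by resolving the address first and then timing `socket.connect`, which returns once the three-way handshake has completed. A minimal sketch; the function name is hypothetical and the host in the usage comment is a placeholder, not the endpoint used in the experiments.

```python
import socket
import time


def tcp_connect_time_ms(host: str, port: int = 443, timeout: float = 5.0) -> float:
    """Approximate the TCP handshake time in milliseconds (DNS lookup excluded)."""
    # Resolve the address up front so that DNS resolution is not timed.
    family, socktype, proto, _, address = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    )[0]
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        sock.connect(address)  # returns once the handshake has completed
        return (time.perf_counter() - start) * 1000


# Usage (placeholder host):
# print(tcp_connect_time_ms("<api-id>.execute-api.us-west-1.amazonaws.com"))
```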

Table 13 summarises the results for the Round-Trip time for the wait periods of 20, 15, 12, 10, 5 and 1 min, as well as 1 s.

The decreasing Round-Trip time with more frequent requests indicates the use of a warm instance. However, the decrease flattens slightly for request intervals of 1–10 min. For the 15-min interval, a peak can be observed, which is likely caused by normal fluctuations. Overall, the most drastic change can be observed between 12 and 10 min of sleep time, where the Round-Trip time decreases by over 1 ms. The idle period must therefore lie in that range, which matches the previous findings.

Compared with the execution time on AWS Lambda for the Traffic Distribution Analysis, which averaged around 346 ms, the Postman test shows Round-Trip times between 323.11 and 2110.79 ms, with an average of 1027.36 ms. The difference between the execution duration and the Round-Trip time must be caused by provisioning the VM, the connection time and the data exchange. This accounts for 1–1.5 s, which also appears to be in line with the previous results.

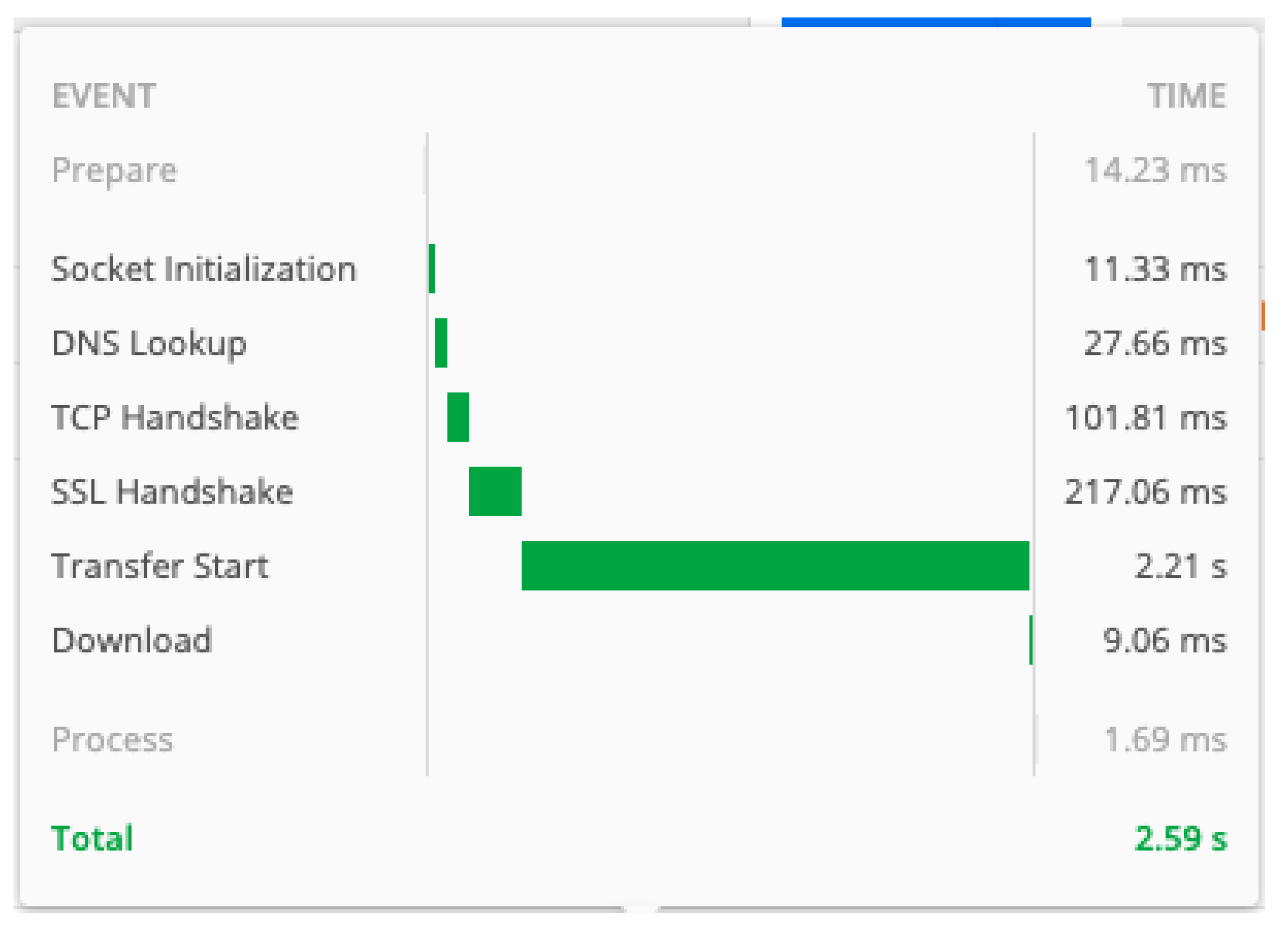

For the initial cold run, the time required to complete each phase of the request is shown in Figure 18.

The Postman results show that the transfer time makes up most of the request time. It varies depending on the interval, while the TCP handshake time remains stable. It is likely that socket initialisation, DNS, TCP and SSL are handled by a separate proxy, as these operations are expensive and AWS would benefit from offloading them to faster hardware. Figure 19, which compares the TCP and Data Transfer Time, confirms that the time for the TCP stack remains steady.

To sum up, the Postman tests provide evidence that the increased latency after long idle times is due to a longer Data Transfer Time, presumably caused by the Cold-Start. The network TCP stack time, however, remains stable, so the longer Data Transfer Time must be due to a delay in data availability, pointing to cache availability at the server as a factor. This in turn is most likely influenced by the connection to AWS S3. As the applied API Gateway is a REST service, its stateless nature and session affinity allow for a wait time during request processing, which, however, leads to a decrease in performance.