gEYEded: Subtle and Challenging Gaze-Based Player Guidance in Exploration Games

Abstract

1. Introduction

- Introduction of gaze-based player guidance for exploration games

- Investigation of gaze-based guidance compared with a crosshair variant in exploration games

2. Related Work

2.1. Subtle Gaze Direction

2.2. Overt Gaze Direction

2.3. Gaze Direction in Games

3. Our Approach

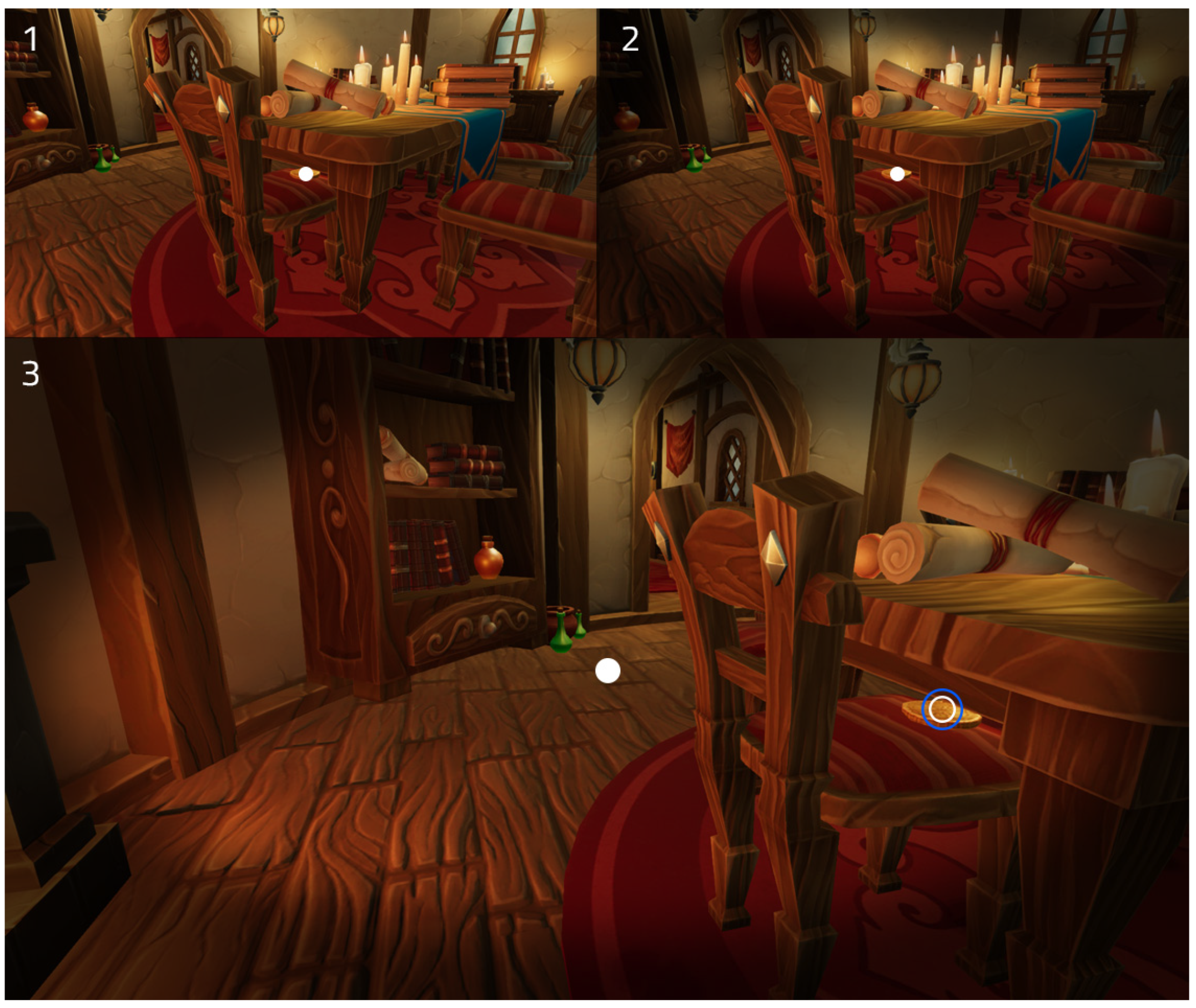

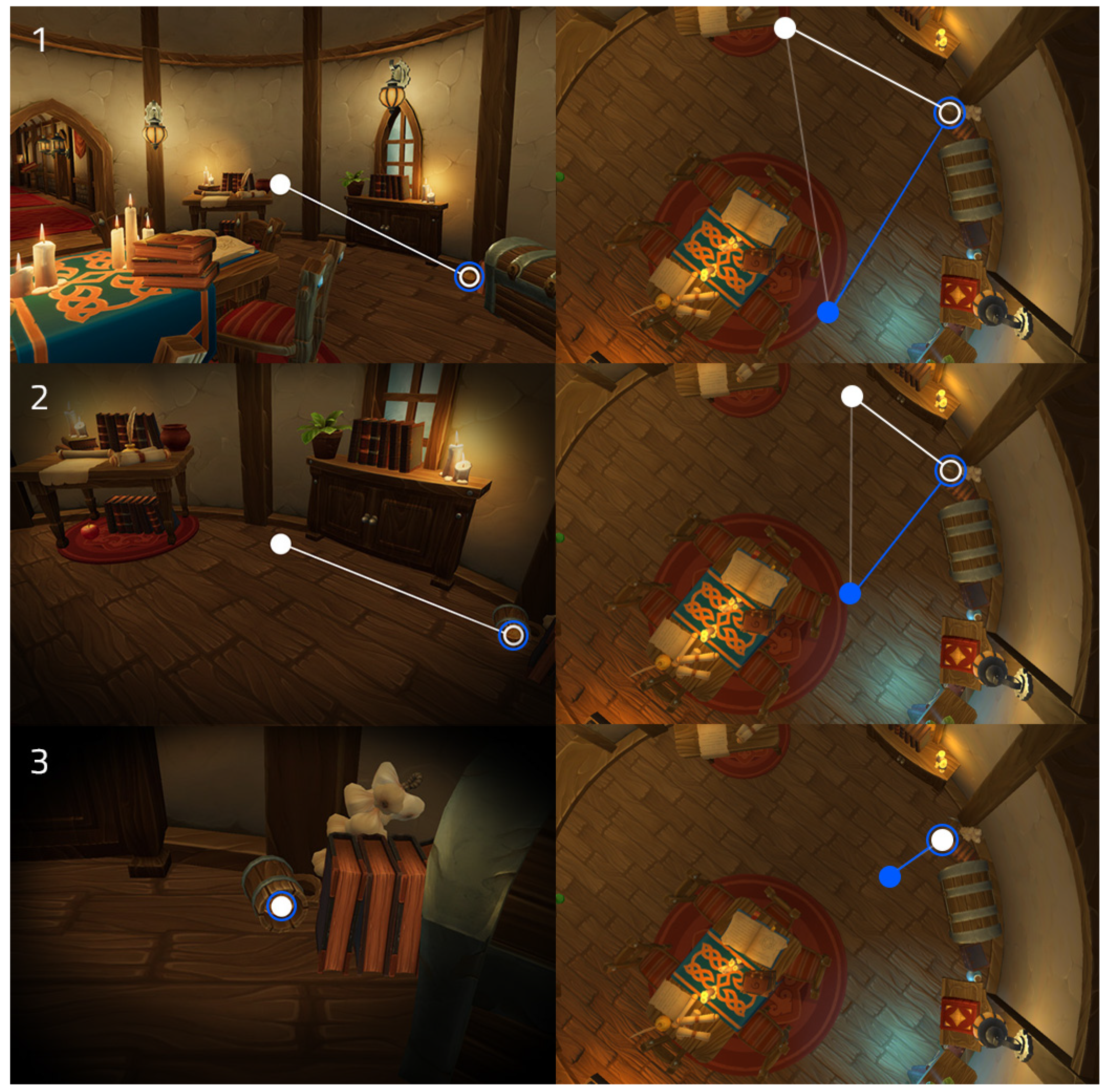

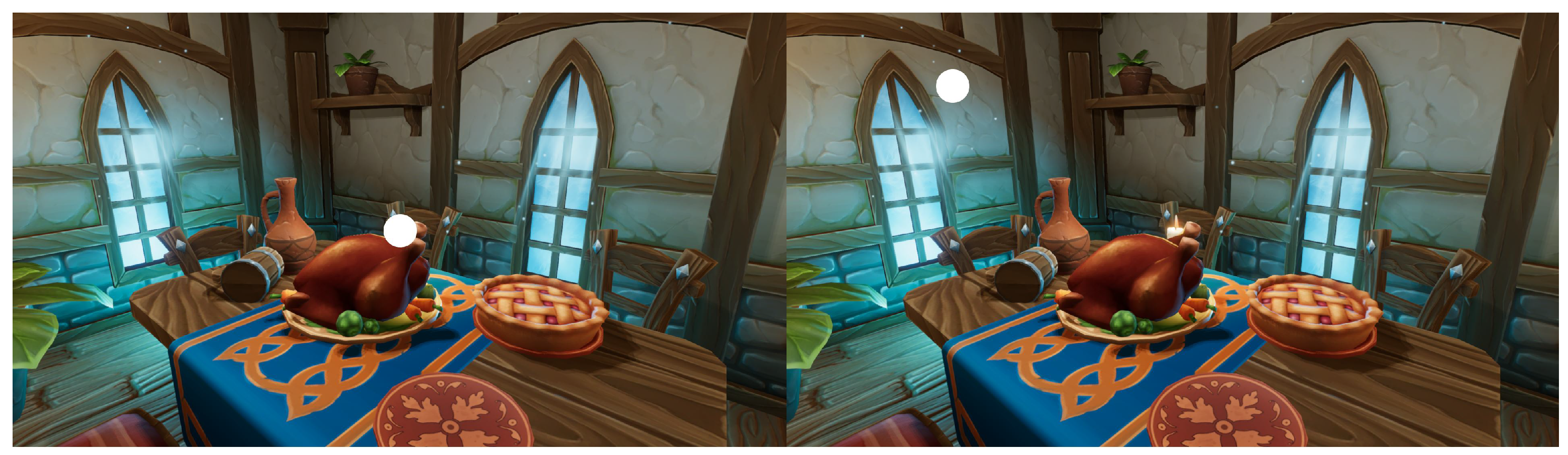

3.1. Game Prototype

4. Comparative Study

4.1. Conditions

- the distance between the player’s avatar and the gaze-sensitive area (i.e., the effect only kicks in when the player is close to the object; in our case, within 2 m in Unity)

- the distance between the gaze position and the coin in screen space within a gaze-sensitive area (i.e., the closer the gaze position is to a coin, the stronger the effect; in the game prototype, a gradual intensity transition was implemented, ranging from 0% effect strength at a gaze-to-coin distance of half the screen width to 100% effect strength at a distance of 0)
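The two factors above can be combined into a single effect-strength value. The Python sketch below is illustrative only: it assumes that 1 Unity unit equals 1 m, treats the 2 m threshold as a hard cut-off, and assumes that the "gradual intensity transition" is a linear ramp. The function and constant names are hypothetical and are not taken from the prototype's code.

```python
from __future__ import annotations

import math

# Avatar-to-area distance within which the effect is active (2 m in the text;
# assumes 1 Unity unit = 1 m).
ACTIVATION_RANGE_M = 2.0


def gaze_effect_strength(avatar_to_area_m: float,
                         gaze_px: tuple[float, float],
                         coin_px: tuple[float, float],
                         screen_width_px: float) -> float:
    """Hypothetical helper: effect strength in [0, 1], i.e., 0% to 100%."""
    # Factor 1: no effect unless the avatar is close to the gaze-sensitive area.
    if avatar_to_area_m > ACTIVATION_RANGE_M:
        return 0.0
    # Factor 2: screen-space distance between the gaze position and the coin.
    distance_px = math.dist(gaze_px, coin_px)
    zero_effect_px = screen_width_px / 2.0  # half screen width -> 0% strength
    # Assumed linear ramp: 0 px -> 1.0, half screen width or more -> 0.0.
    return max(0.0, 1.0 - distance_px / zero_effect_px)
```

A linear ramp is the simplest reading of "gradual"; an eased curve would be an equally plausible alternative.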

4.2. Technical Setup

4.3. Hypotheses

4.4. Participants and Procedure

4.5. Measures

- Emotional involvement (EmIn): “To what extent did you feel that the game was something fun you were experiencing, rather than something you were just doing?” (rated on a seven-point Likert scale ranging from “not at all” to “very much so”).

- Challenge (Chal): “To what extent did you find the game challenging?” (rated on a seven-point Likert scale ranging from “not at all” to “very difficult”).

4.6. Data Analysis

5. Results

5.1. Analysis of Quantitative Data

5.1.1. H1: Game Experience

5.1.2. H2: Game Performance

5.1.3. H3: Game Difficulty

5.2. Analysis of Qualitative Data

5.2.1. Gaze as a Special Skill

5.2.2. Challenge and Novelty

5.2.3. The Meaning and Use of Gaze

6. Discussion

6.1. Game Experience

6.2. Game Performance

6.3. Game Challenge

6.4. General Applicability

6.5. Limitations

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Term | Abbreviation |
|---|---|
| Multidisciplinary Digital Publishing Institute | MDPI |
| Crosshair Guidance | CrossG |
| Gaze guidance | GazeG |
| No Guidance | NoG |
| Overt Gaze Direction | OGD |
| Subtle Gaze Direction | SGD |
| Virtual reality | VR |
| Augmented reality | AR |
| Playful Interactive Environments | PIE |
| Immersive Experience Questionnaire | IEQ |
| Cognitive involvement | Coin |
| Emotional involvement | EmIn |
| Control | Cont |
| Challenge | Chal |
| Total immersion | Toim |
| Repeated-measures analysis of variance | rANOVA |

References

- Velloso, E.; Fleming, A.; Alexander, J.; Gellersen, H. Gaze-supported gaming: MAGIC techniques for first person shooters. In Proceedings of the 2015 Annual Symposium on Computer-Human Interaction in Play, CHI PLAY ’15, London, UK, 5–7 October 2015; ACM: New York, NY, USA, 2015; pp. 343–347. [Google Scholar] [CrossRef]

- Pfeuffer, K.; Alexander, J.; Gellersen, H. GazeArchers: Playing with individual and shared attention in a two-player look&shoot tabletop game. In Proceedings of the 15th International Conference on Mobile and Ubiquitous Multimedia, MUM ’16, Rovaniemi, Finland, 12–15 December 2016; ACM: New York, NY, USA, 2016; pp. 213–216. [Google Scholar] [CrossRef]

- Menges, R.; Kumar, C.; Wechselberger, U.; Schaefer, C.; Walber, T.; Staab, S. Schau genau! A gaze-controlled 3D game for entertainment and education. J. Eye Mov. Res. 2017, 10, 220. [Google Scholar]

- Lankes, M.; Rammer, D.; Maurer, B. Eye contact: Gaze as a connector between spectators and players in online games. In Entertainment Computing—ICEC 2017; Munekata, N., Kunita, I., Hoshino, J., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 310–321. [Google Scholar]

- Lankes, M.; Newn, J.; Maurer, B.; Velloso, E.; Dechant, M.; Gellersen, H. EyePlay revisited: Past, present and future challenges for eye-based interaction in games. In Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts, CHI PLAY ’18 Extended Abstracts, Melbourne, VIC, Australia, 28–31 October 2018; ACM: New York, NY, USA, 2018; pp. 689–693. [Google Scholar] [CrossRef]

- Antunes, J.; Santana, P. A study on the use of eye tracking to adapt gameplay and procedural content generation in first-person shooter games. Multimodal Technol. Interact. 2018, 2, 23. [Google Scholar] [CrossRef]

- Navarro, D.; Sundstedt, V. Simplifying game mechanics: Gaze as an implicit interaction method. In Proceedings of the SIGGRAPH Asia 2017 Technical Briefs, SA ’17, Bangkok, Thailand, 27–30 November 2017; ACM: New York, NY, USA, 2017; pp. 4:1–4:4. [Google Scholar] [CrossRef]

- Duchowski, A.T. Serious gaze. In Proceedings of the 2017 9th International Conference on Virtual Worlds and Games for Serious Applications (VS-Games), Athens, Greece, 6–8 September 2017; pp. 276–283. [Google Scholar] [CrossRef]

- Abbaszadegan, M.; Yaghoubi, S.; MacKenzie, I.S. TrackMaze: A comparison of head-tracking, eye-tracking, and tilt as input methods for mobile games. In Human-Computer Interaction. Interaction Technologies; Kurosu, M., Ed.; Springer International Publishing: Cham, Switzerland, 2018; pp. 393–405. [Google Scholar]

- Dechant, M.; Heckner, M.; Wolff, C. Den Schrecken im Blick: Eye tracking und survival horrorspiele. In Mensch & Computer 2013 Workshopband; Boll, S., Maaß, S., Malaka, R., Eds.; Oldenbourg Verlag: München, Germany, 2013; pp. 539–542. [Google Scholar]

- Ubisoft Montreal. Far Cry 5; Game [Microsoft Windows, PS4, XboxOne]; Ubisoft: Rennes, France, 2018; Last played February 2019. [Google Scholar]

- Ubisoft Montreal. Assassin’s Creed Odyssey; Game [Microsoft Windows, PS4, XboxOne]; Ubisoft: Rennes, France, 2018; Last played February 2019. [Google Scholar]

- Tobii. Tobii Gaming, PC Games with Eye Tracking, Top Games from Steam, Uplay. 2018. Available online: https://tobiigaming.com/games/ (accessed on 2 April 2019).

- HTC. HTC Vive Pro Eye. 2019. Available online: https://www.vive.com/eu/pro-eye/ (accessed on 15 May 2019).

- Velloso, E.; Carter, M. The emergence of EyePlay: A survey of eye interaction in games. In Proceedings of the 2016 Annual Symposium on Computer-Human Interaction in Play, CHI PLAY ’16, Austin, TX, USA, 16–19 October 2016; ACM: New York, NY, USA, 2016; pp. 171–185. [Google Scholar] [CrossRef]

- Tobii. How to Play Assassin’s Creed Origins with Tobii Eye Tracking. 2018. Available online: https://www.youtube.com/watch?time_continue=2&v=ZSoDSiI0mZw (accessed on 25 February 2019).

- Lintu, A.; Carbonell, N. Gaze Guidance through Peripheral Stimuli; Centre de recherche INRIA Nancy: Rocquencourt, France, 2009. [Google Scholar]

- De Koning, B.B.; Jarodzka, H. Attention guidance strategies for supporting learning from dynamic visualizations. In Learning from Dynamic Visualization; Springer: Cham, Switzerland, 2017; pp. 255–278. [Google Scholar]

- Lin, Y.C.; Chang, Y.J.; Hu, H.N.; Cheng, H.T.; Huang, C.W.; Sun, M. Tell me where to look: Investigating ways for assisting focus in 360 video. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; ACM: New York, NY, USA, 2017; pp. 2535–2545. [Google Scholar]

- Cole, F.; DeCarlo, D.; Finkelstein, A.; Kin, K.; Morley, R.K.; Santella, A. Directing gaze in 3D models with stylized focus. Render. Technol. 2006, 2006, 17. [Google Scholar]

- Barth, E.; Dorr, M.; Böhme, M.; Gegenfurtner, K.; Martinetz, T. Guiding the mind’s eye: improving communication and vision by external control of the scanpath. In Proceedings of Human Vision and Electronic Imaging XI; International Society for Optics and Photonics: Washington, DC, USA, 2006; Volume 6057, p. 60570D. [Google Scholar]

- Bailey, R.; McNamara, A.; Sudarsanam, N.; Grimm, C. Subtle gaze direction. ACM Trans. Graph. (TOG) 2009, 28, 100. [Google Scholar] [CrossRef]

- Hata, H.; Koike, H.; Sato, Y. Visual guidance with unnoticed blur effect. In Proceedings of the International Working Conference on Advanced Visual Interfaces, Bari, Italy, 7–10 June 2016; ACM: New York, NY, USA, 2016; pp. 28–35. [Google Scholar]

- Grogorick, S.; Stengel, M.; Eisemann, E.; Magnor, M. Subtle gaze guidance for immersive environments. In Proceedings of the ACM Symposium on Applied Perception, Cottbus, Germany, 16–17 September 2017; ACM: New York, NY, USA, 2017; p. 4. [Google Scholar]

- Schell, J. The Art of Game Design: A Book of Lenses; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2008. [Google Scholar]

- The Astronauts. The Vanishing of Ethan Carter; Game [Microsoft Windows], 2014; The Astronauts: Warsaw, Poland, 2014; Last played June 2018. [Google Scholar]

- Brandse, M.; Tomimatsu, K. Using color guidance to improve on usability in interactive environments. In HCI International 2014—Posters’ Extended Abstracts; Stephanidis, C., Ed.; Springer International Publishing: Cham, Switzerland, 2014; pp. 3–8. [Google Scholar]

- Gibson, J. Introduction to Game Design, Prototyping, and Development: From Concept to Playable Game with Unity and C#, 1st ed.; Addison-Wesley Professional: Boston, MA, USA, 2014. [Google Scholar]

- Totten, C. An Architectural Approach to Level Design; Taylor & Francis: Abingdon-on-Thames, UK, 2014. [Google Scholar]

- Giant Sparrow. What Remains of Edith Finch; Game [Microsoft Windows, PS4, XboxOne], 2017; Giant Sparrow: Santa Monica, CA, USA, 2017; Last played February 2019. [Google Scholar]

- Rogers, S. Level Up! The Guide to Great Video Game Design, 2nd ed.; Wiley Publishing: Hoboken, NJ, USA, 2014. [Google Scholar]

- Castillo, T.; Novak, J. Game Development Essentials: Game Level Design, 1st ed.; Delmar Learning: Clifton Park, NY, USA, 2008. [Google Scholar]

- Isokoski, P.; Joos, M.; Spakov, O.; Martin, B. Gaze controlled games. Univers. Access Inf. Soc. 2009, 8, 323–337. [Google Scholar] [CrossRef]

- Sundstedt, V.; Bernhard, M.; Stavrakis, E.; Reinhard, E.; Wimmer, M. Visual attention and gaze behavior in games: An object-based approach. In Game Analytics: Maximizing the Value of Player Data; Seif El-Nasr, M., Drachen, A., Canossa, A., Eds.; Springer: London, UK, 2013; pp. 543–583. [Google Scholar] [CrossRef]

- Sheikh, A.; Brown, A.; Watson, Z.; Evans, M. Directing Attention in 360-Degree Video. In Proceedings of the International Broadcasting Convention, IBC 2016, Amsterdam, The Netherlands, 8–12 September 2016; IBC: London, UK, 2016. [Google Scholar]

- Khan, A.; Matejka, J.; Fitzmaurice, G.; Kurtenbach, G. Spotlight: Directing users’ attention on large displays. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Portland, OR, USA, 2–7 April 2005; ACM: New York, NY, USA, 2005; pp. 791–798. [Google Scholar]

- Sato, Y.; Sugano, Y.; Sugimoto, A.; Kuno, Y.; Koike, H. Sensing and controlling human gaze in daily living space for human-harmonized information environments. In Human-Harmonized Information Technology; Springer: Cham, Switzerland, 2016; Volume 1, pp. 199–237. [Google Scholar]

- Vig, E.; Dorr, M.; Barth, E. Learned saliency transformations for gaze guidance. In Human Vision and Electronic Imaging XVI; International Society for Optics and Photonics: Washington, DC, USA, 2011; Volume 7865, p. 78650W. [Google Scholar]

- Kosara, R.; Miksch, S.; Hauser, H. Focus+Context taken literally. IEEE Comput. Graph. Appl. 2002, 22, 22–29. [Google Scholar] [CrossRef]

- Ben-Joseph, E.; Greenstein, E. Gaze Direction in Virtual Reality Using Illumination Modulation and Sound; research report; Leland Stanford Junior University: Stanford, CA, USA, 2016. [Google Scholar]

- Team Bondi. L.A. Noire; Game [Microsoft Windows], 2011; Team Bondi: Sydney, Australia, 2011; Last played February 2018. [Google Scholar]

- Creative Assembly. Alien: Isolation; Game [Microsoft Windows], 2014; Creative Assembly: Horsham, UK, 2014; Last played January 2018. [Google Scholar]

- MercurySteam; Nintendo EPD. Metroid: Samus Returns; Game [Nintendo 3DS], 2017; MercurySteam and Nintendo EPD: Kyoto, Japan, 2017; Last played May 2018. [Google Scholar]

- Nintendo EAD. Zelda: A Link between Worlds; Game [Nintendo 3DS], 2013; Nintendo EAD: Kyoto, Japan, 2013; Last played September 2018. [Google Scholar]

- Galactic Cafe. The Stanley Parable; Game [Microsoft Windows], 2013; Galactic Cafe: Austin, TX, USA, 2013; Last played February 2018. [Google Scholar]

- The Chinese Room; SCE Santa Monica Studio. Everybody’s Gone to the Rapture; Game [Microsoft Windows], 2016; The Chinese Room and SCE Santa Monica Studio: Brighton, UK, 2016; Last played May 2018. [Google Scholar]

- Fagerholt, E.; Lorentzon, M. Beyond the HUD—User Interfaces for Increased Player Immersion in FPS Games. Master’s Thesis, Chalmers University of Technology, Göteborg, Sweden, 2009; p. 118. [Google Scholar]

- Unity. Vignette. 2019. Available online: https://docs.unity3d.com/Manual/PostProcessing-Vignette.html (accessed on 24 May 2019).

- Lutteroth, C.; Penkar, M.; Weber, G. Gaze vs. mouse: A fast and accurate gaze-only click alternative. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, UIST ’15, Charlotte, NC, USA, 11–15 November 2015; ACM: New York, NY, USA, 2015; pp. 385–394. [Google Scholar] [CrossRef]

- Bednarik, R.; Tukiainen, M. Gaze vs. Mouse in Games: The Effects on User Experience; research report; University of Joensuu: Joensuu, Finland, 2008. [Google Scholar]

- Kasprowski, P.; Harezlak, K.; Niezabitowski, M. Eye movement tracking as a new promising modality for human computer interaction. In Proceedings of the 17th International Carpathian Control Conference (ICCC), Tatranska Lomnica, Slovakia, 29 May–1 June 2016. [Google Scholar] [CrossRef]

- Isokoski, P.; Martin, B. Eye Tracker Input in First Person Shooter Games. In Proceedings of the COGAIN, Turin, Italy, 4–5 September 2006; pp. 78–81. [Google Scholar]

- Dorr, M.; Pomarjanschi, L.; Barth, E. Gaze beats mouse: A case study on a gaze-controlled breakout. PsychNology J. 2009, 7, 197–211. [Google Scholar]

- Dechant, M.; Stavness, I.; Mairena, A.; Mandryk, R.L. Empirical Evaluation of Hybrid Gaze-Controller Selection Techniques in a Gaming Context. In Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play, CHI PLAY ’18, Melbourne, VIC, Australia, 28–31 October 2018; ACM: New York, NY, USA, 2018; pp. 73–85. [Google Scholar] [CrossRef]

- Hild, J.; Gill, D.; Beyerer, J. Comparing Mouse and MAGIC Pointing for Moving Target Acquisition. In Proceedings of the Symposium on Eye Tracking Research and Applications, ETRA ’14, Safety Harbor, FL, USA, 26–28 March 2014; ACM: New York, NY, USA, 2014; pp. 131–134. [Google Scholar] [CrossRef]

- Unity. Unity. 2018. Available online: https://unity3d.com/ (accessed on 13 January 2019).

- Medieval Cartoon Furniture Pack. 2019. Available online: https://assetstore.unity.com/packages/3d/environments/fantasy/medieval-cartoon-furniture-pack-15094 (accessed on 22 April 2019).

- Tobii Gaming. Tobii Eye Tracker 4C. 2018. Available online: https://tobiigaming.com/product/tobii-eye-tracker-4c/ (accessed on 22 March 2019).

- Tobii Gaming. Tobii Unity SDK for Desktop. 2018. Available online: http://developer.tobii.com/tobii-unity-sdk/ (accessed on 22 March 2019).

- Entertainment Software Association. Essential Facts about the Computer and Video Game Industry. 2018. Available online: https://www.theesa.com/wp-content/uploads/2019/03/ESA_EssentialFacts_2018.pdf (accessed on 10 February 2019).

- Tobii. Tobii SDK Guide. 2019. Available online: https://developer.tobii.com/tobii-sdk-guide/ (accessed on 3 May 2019).

- Jennett, C.; Cox, A.L.; Cairns, P.; Dhoparee, S.; Epps, A.; Tijs, T.; Walton, A. Measuring and defining the experience of immersion in games. Int. J. Hum.-Comput. Stud. 2008, 66, 641–661. [Google Scholar] [CrossRef]

- Iacovides, I.; Cox, A.L. Moving Beyond Fun: Evaluating Serious Experience in Digital Games. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, CHI ’15, Seoul, Korea, 18–23 April 2015; ACM: New York, NY, USA, 2015; pp. 2245–2254. [Google Scholar] [CrossRef]

- Rigby, J.M.; Brumby, D.P.; Cox, A.L.; Gould, S.J.J. Watching movies on Netflix: Investigating the effect of screen size on viewer immersion. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct, MobileHCI ’16, Florence, Italy, 6–9 September 2016; ACM: New York, NY, USA, 2016; pp. 714–721. [Google Scholar] [CrossRef]

- Iacovides, I.; Cox, A.; Kennedy, R.; Cairns, P.; Jennett, C. Removing the HUD: The Impact of Non-Diegetic Game Elements and Expertise on Player Involvement. In Proceedings of the 2015 Annual Symposium on Computer-Human Interaction in Play, CHI PLAY ’15, London, UK, 5–7 October 2015; ACM: New York, NY, USA, 2015; pp. 13–22. [Google Scholar] [CrossRef]

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101. [Google Scholar] [CrossRef]

- Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience; Harper Perennial: New York, NY, USA, 1991. [Google Scholar]

- Sweetser, P.; Wyeth, P. GameFlow: A model for evaluating player enjoyment in games. Comput. Entertain. 2005, 3, 3. [Google Scholar] [CrossRef]

- Piumsomboon, T.; Lee, G.; Lindeman, R.W.; Billinghurst, M. Exploring natural eye-gaze-based interaction for immersive virtual reality. In Proceedings of the 2017 IEEE Symposium on 3D User Interfaces (3DUI), Los Angeles, CA, USA, 18–19 March 2017; pp. 36–39. [Google Scholar] [CrossRef]

- Menges, R.; Kumar, C.; Sengupta, K.; Staab, S. eyeGUI: A novel framework for eye-controlled user interfaces. In Proceedings of the 9th Nordic Conference on Human-Computer Interaction, NordiCHI ’16, Gothenburg, Sweden, 23–27 October 2016; ACM: New York, NY, USA, 2016; pp. 1–6. [Google Scholar] [CrossRef]

- Kumar, C.; Menges, R.; Staab, S. Eye-Controlled Interfaces for Multimedia Interaction. IEEE MultiMedia 2016, 23, 6–13. [Google Scholar] [CrossRef]

- Lankes, M.; Mirlacher, T.; Wagner, S.; Hochleitner, W. Whom are you looking for?: The effects of different player representation relations on the presence in gaze-based games. In Proceedings of the First ACM SIGCHI Annual Symposium on Computer-Human Interaction in Play, CHI PLAY ’14, Toronto, ON, Canada, 19–21 October 2014; ACM: New York, NY, USA, 2014; pp. 171–179. [Google Scholar] [CrossRef]

- Bayliss, P. Beings in the game-world: Characters, avatars, and players. In Proceedings of the 4th Australasian Conference on Interactive Entertainment, IE’07, Melbourne, Australia, 3–5 December 2007; RMIT University: Melbourne, Australia, 2007; pp. 1–6. [Google Scholar]

- Horti, S. Eye Tracking for Gamers: Seeing is Believing. 2018. Available online: https://www.techradar.com/news/eye-tracking-for-gamers-seeing-is-believing (accessed on 23 July 2018).

- Frictional Games. Amnesia: The Dark Descent; Game [Microsoft Windows], 2010; Frictional Games: Helsingborg, Sweden, 2010; Last played October 2013. [Google Scholar]

- Rollings, A.; Adams, E. Andrew Rollings and Ernest Adams on Game Design; New Riders Publishing: Indianapolis, IN, USA, 2003. [Google Scholar]

- Nintendo EAD. Super Mario World; Game [SNES], 1992; Nintendo EAD: Kyoto, Japan, 1992; Last played May 2014. [Google Scholar]

- Blizzard Entertainment. Warcraft 3; Game [Microsoft Windows], 2002; Blizzard Entertainment: Irvine, CA, USA, 2002; Last played March 2012. [Google Scholar]

- CD Projekt Red. Witcher 3: Wild Hunt; Game [Microsoft Windows], 2015; CD Projekt: Warsaw, Poland, 2015; Last played October 2017. [Google Scholar]

- EA Romania. FIFA 18; Game [Microsoft Windows], 2017; EA Sports: San Mateo, CA, USA, 2017; Last played January 2018. [Google Scholar]

- ACES Game Studio. Microsoft Flight Simulator X; Game [Microsoft Windows], 2006; Microsoft Studios: Redmond, WA, USA, 2006; Last played April 2015. [Google Scholar]

- Maxis. SimCity 2000; Game [Microsoft Windows], 1993; Maxis: Redwood, CA, USA, 1993; Last played March 2016. [Google Scholar]

- Revolution Software. Broken Sword: The Shadow of the Templars; Game [Microsoft Windows], 1996; Virgin Interactive Entertainment: London, UK, 1996; Last played June 2017. [Google Scholar]

- Magic Leap. Magic Leap One: Creator Edition. 2018. Available online: https://www.magicleap.com/magic-leap-one (accessed on 9 June 2019).

- Nacke, L.E.; Bateman, C.; Mandryk, R.L. BrainHex: Preliminary results from a neurobiological gamer typology survey. In Entertainment Computing—ICEC 2011; Anacleto, J.C., Fels, S., Graham, N., Kapralos, B., Saif El-Nasr, M., Stanley, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 288–293. [Google Scholar]

| Measure | GazeG M (SD) | CrossG M (SD) | NoG M (SD) | F | p | Effect size |
|---|---|---|---|---|---|---|
| IEQ: Control | 5.15 (0.50) | 4.57 (0.78) | 4.88 (0.63) | 6.96 | 0.00 | 0.31 |
| IEQ: Emotional Involvement | 4.08 (0.73) | 3.51 (0.79) | 3.56 (0.99) | 16.11 | 0.00 | 0.41 |
| IEQ: Total Immersion | 7.42 (1.28) | 6.46 (1.56) | 6.25 (1.94) | 4.78 | 0.01 | 0.27 |
| Metric: Coins collected | 6.79 (1.50) | 5.88 (1.78) | 4.25 (1.39) | 26.46 | 0.00 | 0.54 |
| IEQ: Challenge | 5.01 (0.52) | 5.06 (0.66) | 5.49 (0.63) | 9.00 | 0.00 | 0.28 |
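The F values in the table come from repeated-measures ANOVAs (rANOVA). As a sketch of how such statistics can be computed, the Python function below performs a one-way repeated-measures ANOVA with the three guidance conditions as the within-subjects factor. The one-way design is an assumption, the function name is hypothetical, no sphericity correction is applied, and the effect size it returns is partial eta squared, which may not be the measure reported in the table.

```python
from typing import Sequence, Tuple


def rm_anova_oneway(data: Sequence[Sequence[float]]) -> Tuple[float, float, int, int]:
    """One-way repeated-measures ANOVA (hypothetical helper).

    data[i][j] is the score of participant i in condition j
    (e.g., columns GazeG, CrossG, NoG). Returns
    (F, partial eta squared, df_conditions, df_error).
    No sphericity correction is applied.
    """
    n = len(data)     # participants
    k = len(data[0])  # conditions
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a complete n x k matrix with n, k >= 2")

    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Removing between-participant variance leaves the
    # condition x participant interaction as the error term.
    ss_err = ss_total - ss_cond - ss_subj

    df_cond = k - 1
    df_err = (n - 1) * (k - 1)
    f_value = (ss_cond / df_cond) / (ss_err / df_err)
    partial_eta_sq = ss_cond / (ss_cond + ss_err)
    return f_value, partial_eta_sq, df_cond, df_err
```

On the toy matrix `[[2, 4, 6], [3, 5, 6], [4, 6, 9]]` (made-up numbers, not study data) the function returns F(2, 4) = 36 and a partial eta squared of 18/19 (about 0.95). A p value can then be obtained from the F distribution, e.g., with `scipy.stats.f.sf(f_value, df_cond, df_err)`.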

| Genre & Game Example | Gaze-Based Guidance Function & Gameplay Example |
|---|---|
| Action Games: Super Mario World [77] | Power-Ups & Strategies indicator: Via gaze, players could be made aware of strategies to overcome obstacles (e.g., an indication of the enemies’ weak spots) and of the location of power-ups (e.g., mushrooms, fire flowers) that are hidden in a level area. |
| Strategy Games: Warcraft III: Reign of Chaos [78] | Fog of war add-on: Although players cannot directly see through the fog of war, they could be informed about enemy movement in a particular area through a gaze-based vignetting effect (without revealing the exact position and type of units), enabling them to develop a counter-strategy. |
| Role-Playing Games: Witcher 3: Wild Hunt [79] | Extended witcher senses: In the game, the player has sharpened senses (i.e., the Witcher senses, a visual highlighting of objects) that help him/her identify the game objects relevant to solving a quest; integrating gaze could offer a more challenging and more rewarding experience by only indicating the objects’ location. |
| Sports Games: FIFA 18 [80] | Team coordinator: In soccer, players could be guided via gaze to look at a teammate who wants to interact with them (e.g., pass the ball), which would have a beneficial effect on team coordination. |
| Vehicle Sims: MS Flight Simulator [81] | Advanced cockpit tutorial: In a tutorial for players with intermediate skills (e.g., starting the engines and taking off), players could be guided to relevant areas of the cockpit to complete the assignment (without revealing the exact location). |
| Management Sims: SimCity 2000 [82] | Silent counsel: Players could be made aware of positive situations (e.g., population growth), negative situations (e.g., fire), and strategies (e.g., lower taxes), not by pointing at them directly but by guiding players and indicating that something relevant is happening, or could be done, in a segment of the city. This would give players the opportunity to develop their own interpretations of the given situation. |
| Adventure Games: Assassin’s Creed Origins [12] | Gaze-based waypoint beacons: Instead of using map markers to reach the next quest, players could be guided by a gaze-based waypoint system. When a player looks in the direction of a waypoint (the gaze-sensitive area), he/she gets feedback via a vignetting effect (similar to Lost & Found). The closer he/she gets to the waypoint, the stronger the feedback. |
| Puzzle Games: Broken Sword [83] | Hint system for puzzle-solving: In point-and-click adventures such as Broken Sword, players can turn on a hint system (3 hints per puzzle) when they are unable to solve a given challenge. This system could offer a more interesting experience by using gaze guidance to indicate, but not reveal, the solution (the location of a puzzle object). |
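The waypoint-beacon idea for adventure games combines a direction check with distance-dependent feedback. The Python sketch below shows one way such feedback could be computed. The cone half-angle, the maximum range, the linear falloff, and all names are hypothetical choices for illustration, and the sketch assumes the game can supply the player's gaze as a 3D direction vector (e.g., a ray cast from the camera through the on-screen gaze point).

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _length(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))


def beacon_feedback(player_pos: Vec3, gaze_dir: Vec3, waypoint_pos: Vec3,
                    cone_half_angle_deg: float = 15.0,
                    max_range: float = 100.0) -> float:
    """Hypothetical waypoint-beacon feedback strength in [0, 1]."""
    to_waypoint = _sub(waypoint_pos, player_pos)
    dist = _length(to_waypoint)
    gaze_len = _length(gaze_dir)
    if gaze_len == 0.0 or dist >= max_range:
        return 0.0  # no valid gaze direction, or waypoint out of range
    if dist == 0.0:
        return 1.0  # player is standing on the waypoint
    # Gaze-sensitive area: a cone around the direction to the waypoint.
    cos_angle = _dot(gaze_dir, to_waypoint) / (gaze_len * dist)
    if cos_angle < math.cos(math.radians(cone_half_angle_deg)):
        return 0.0
    # The closer the player is to the waypoint, the stronger the feedback
    # (assumed linear falloff).
    return 1.0 - dist / max_range
```

The returned strength could then drive a vignetting effect, as the table entry describes; a cone test is used here because it makes the gaze-sensitive area independent of screen resolution.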

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lankes, M.; Haslinger, A.; Wolff, C. gEYEded: Subtle and Challenging Gaze-Based Player Guidance in Exploration Games. Multimodal Technol. Interact. 2019, 3, 61. https://doi.org/10.3390/mti3030061

Lankes M, Haslinger A, Wolff C. gEYEded: Subtle and Challenging Gaze-Based Player Guidance in Exploration Games. Multimodal Technologies and Interaction. 2019; 3(3):61. https://doi.org/10.3390/mti3030061

Chicago/Turabian Style: Lankes, Michael, Andreas Haslinger, and Christian Wolff. 2019. "gEYEded: Subtle and Challenging Gaze-Based Player Guidance in Exploration Games" Multimodal Technologies and Interaction 3, no. 3: 61. https://doi.org/10.3390/mti3030061