1. Introduction

The breakthroughs and advances in industrial technology have made modern industrial production processes more automated and productive, with complex operational functionalities. These improvements have enhanced product quality and expanded the production scale during the past decades. The associated risk of potential failures of various components increases with the complexity and functionality of modern industrial processes. Seemingly insignificant faults could lead to colossal damage if they remain undetected, and faults are difficult to eliminate completely in the chemical industry. Undetected faults in an industrial production process can carry large hidden risks, which may cause serious consequences and subsequent indirect impacts. Degradation in product quality, a quintessential consequence, can ruin brand reputation and corporate identity. For small and medium-sized enterprises in particular, this can lead to a rupture of the capital chain and disrupt the development of the enterprise. Environmental contamination is also a typical effect of chemical process accidents: the credibility of local authorities, which are responsible for environmental conservation, may be challenged, and enterprises may face heavy penalties. Disastrous consequences such as casualties usually bring inestimable losses and should be completely avoided. In general, with the advancement of industrial technology, growing social environmental awareness and increasing demand for high-quality products, industrial process monitoring is becoming increasingly important. This paper aims to propose a novel fault diagnosis system to improve industrial process monitoring.

The various proposed fault detection and diagnosis approaches can broadly be classified into three main categories: model-based approaches, knowledge-based approaches and data analysis-based approaches [1,2,3,4]. Currently, big data analytics and machine learning are widely used in developing new fault diagnosis techniques. Previous works have proposed many diagnosis strategies based on multivariate statistical data analysis [5,6]. Qin [7] gave a detailed survey on data-driven industrial process monitoring and diagnosis using multivariate statistical data analysis techniques. Ge [8] reviewed data-driven modelling and monitoring techniques for plant-wide industrial processes. Wang et al. [9] reviewed theoretical research and engineering applications of multivariate statistical process monitoring algorithms for the period 2008–2017. Numerous research works have shown that various neural networks, as excellent classifiers, can provide helpful fault diagnosis results in online process monitoring. Zhang and Morris [10] presented a fuzzy neural network for on-line process fault diagnosis, in which the on-line measurements are converted into fuzzy sets in the fuzzification layer of the network. Zhang [11] used multiple neural networks to improve fault diagnosis performance and investigated different approaches for aggregating them. Jiang et al. [12] applied deep learning to the fault diagnosis of rotating machinery. Li et al. [13] presented an approach for nonlinear industrial process fault diagnosis using autoencoder embedded dictionary learning. Li et al. [14] integrated the wavelet transform with a convolutional neural network for the monitoring of a large-scale fluorochemical process. The advantage of using neural networks is that multiple independent and dependent variables can be handled simultaneously, without the need for deep process knowledge such as mechanistic models [15,16]. Process modelling and fault diagnosis using recent developments in neural networks, such as the gated recurrent neural network [17], deep belief networks [18] and the autoencoder gated recurrent neural network [19], have also been reported recently.

Hybrid diagnosis strategies combining neural networks with multivariate statistical analysis techniques have also had positive impacts on process industries [20]. Wen and Xu [21] integrated ReliefF, principal component analysis and a deep neural network for the fault diagnosis of a wind turbine. Shang et al. [22] used slow feature analysis to develop soft sensors from process operation data. Yu and Zhang [23] presented a manifold regularized stacked autoencoder-based feature learning approach for industrial process fault diagnosis. Chen et al. [24] used one-dimensional convolutional auto-encoder-based feature learning for the fault diagnosis of multivariate processes. Bao et al. [25] presented a combined deep learning approach for chemical process fault diagnosis.

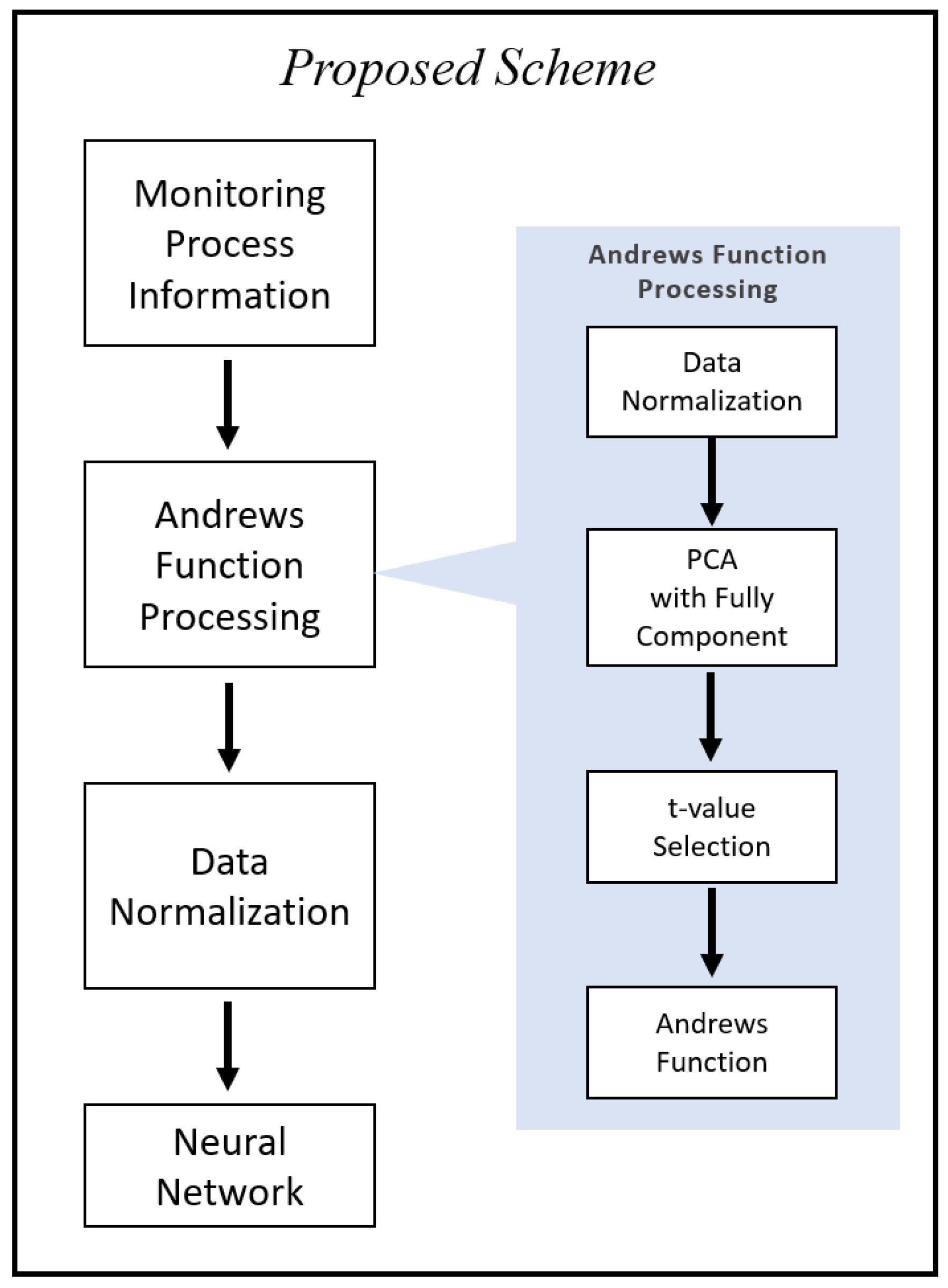

A neural network-based fault diagnosis system typically consists of two sub-systems. The first is a data pre-processing system that extracts relevant features from online monitoring measurements using mathematical approaches, mainly multivariate statistical data analysis techniques. The second is a process state analysis system that obtains the diagnosis results via a classifier, generally a neural network, capable of handling multi-dimensional data. Using extracted features as the neural network inputs helps the network classify the various faults better than using the monitored process measurements directly as inputs. This paper explores the effectiveness of Andrews plot in extracting effective features for enhanced on-line process fault diagnosis.
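The two-subsystem structure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the system proposed in this paper: the class `TwoStageDiagnoser` and its `feature_fn` argument are hypothetical, the pre-processing stage standardizes the measurements before applying a pluggable feature map, and a nearest-centroid rule stands in for the neural network classifier purely to keep the example short.

```python
import numpy as np


class TwoStageDiagnoser:
    """Hypothetical two-stage fault diagnosis pipeline (illustrative only).

    Stage 1 (pre-processing): standardize raw measurements, then map them to features.
    Stage 2 (process state analysis): classify the features. A nearest-centroid
    rule is used here as a simple stand-in for a neural network classifier.
    """

    def __init__(self, feature_fn=lambda Z: Z):
        # feature_fn maps standardized samples (rows) to feature vectors (rows)
        self.feature_fn = feature_fn

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        # Standardize with training statistics so that large-unit variables do not dominate
        self.mean_ = X.mean(axis=0)
        self.std_ = X.std(axis=0) + 1e-12
        F = self.feature_fn((X - self.mean_) / self.std_)
        # One centroid per process state (normal operation or a fault class)
        self.classes_ = np.unique(y)
        self.centroids_ = np.vstack([F[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X):
        Z = (np.asarray(X, dtype=float) - self.mean_) / self.std_
        F = self.feature_fn(Z)
        # Squared Euclidean distance from every sample to every class centroid
        d = ((F[:, None, :] - self.centroids_[None, :, :]) ** 2).sum(axis=2)
        return self.classes_[d.argmin(axis=1)]


# Toy example: two process states in a two-variable measurement space
X_train = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
y_train = ["normal", "normal", "fault", "fault"]
model = TwoStageDiagnoser().fit(X_train, y_train)
print(model.predict([[0.05, 0.0], [5.0, 5.05]]))
```

Any feature extractor with the signature `feature_fn(Z) -> F` (rows are samples) can be plugged into the first stage, which is where a method such as Andrews plot would sit; the second stage would then be replaced by a trained neural network.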

The proposed data-driven intelligent fault diagnosis strategy in this paper uses a neural network as the classifier and integrates Andrews plot to preliminarily process the monitoring information, with the aims of accelerating diagnosis and enhancing its reliability. Andrews plot is a very useful visualization method for analyzing multivariate data. It has been applied in many areas, including the analysis of data from the 2001 Parliamentary General Election in the United Kingdom [26,27]. One of its distinctive advantages is that the dimension of the extracted feature space can be set conveniently. Andrews plot is also efficient in dealing with a large number of correlated variables. A difficulty with Andrews plot is the proper selection of the number of features. Nevertheless, Andrews plot has great potential for improving fault detection and diagnosis performance by extracting useful features from the original monitored process measurements. This paper presents a method for determining the important features in the Andrews function that give good separation between classes. The proposed fault diagnosis method is applied to a simulated continuous stirred tank reactor (CSTR) system. To demonstrate its superiority, it is compared with two traditional neural network-based diagnosis schemes.
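For readers unfamiliar with the technique, Andrews plot maps each p-dimensional observation x = (x1, ..., xp) to the curve f_x(t) = x1/√2 + x2 sin t + x3 cos t + x4 sin 2t + x5 cos 2t + ..., for −π < t < π. The minimal NumPy sketch below evaluates this curve at a chosen number of t values, so the length of the resulting feature vector can be set freely, which illustrates the convenience of setting the feature dimension noted above. The helper names `andrews_curve` and `andrews_features` and the use of evenly spaced t values are assumptions for illustration only; they are not the feature-selection method developed in this paper.

```python
import numpy as np


def andrews_curve(x, t):
    """Evaluate the Andrews function f_x(t) of one observation x at the points t.

    f_x(t) = x1/sqrt(2) + x2*sin(t) + x3*cos(t) + x4*sin(2t) + x5*cos(2t) + ...
    """
    x = np.asarray(x, dtype=float)
    t = np.asarray(t, dtype=float)
    f = np.full(t.shape, x[0] / np.sqrt(2.0))  # constant term x1/sqrt(2)
    for j in range(1, x.size):
        k = (j + 1) // 2  # harmonic order: x2, x3 -> 1; x4, x5 -> 2; ...
        # x2, x4, ... (odd 0-based index j) use sin; x3, x5, ... use cos
        f += x[j] * (np.sin(k * t) if j % 2 == 1 else np.cos(k * t))
    return f


def andrews_features(X, n_features):
    """Hypothetical feature map: sample each observation's curve at n_features points."""
    t = np.linspace(-np.pi, np.pi, n_features, endpoint=False)
    return np.vstack([andrews_curve(x, t) for x in np.atleast_2d(X)])


# Example: three observations of five process variables each
X = np.array([[1.0, 2.0, 3.0, 0.5, -1.0],
              [0.9, 2.1, 2.8, 0.4, -1.2],
              [3.0, -1.0, 0.0, 2.0, 1.0]])
F = andrews_features(X, n_features=8)
print(F.shape)  # (3, 8)
```

Because 1/√2, sin t, cos t, sin 2t, cos 2t, ... are mutually orthogonal on (−π, π), the integrated squared distance between two Andrews curves equals π times the squared Euclidean distance between the original observations, so classes that are well separated in the measurement space remain separated in the curve representation.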

The paper is organized as follows. Section 2 presents the proposed diagnosis system and details of parameter selection. Section 3 introduces the case study, a CSTR system and two traditional neural network-based fault diagnosis schemes. Section 4 presents the comparison of diagnosis performance. The determination of important features is also demonstrated. Section 5 concludes the paper.