Nighttime Mobile Laser Scanning and 3D Luminance Measurement: Verifying the Outcome of Roadside Tree Pruning with Mobile Measurement of the Road Environment

Abstract

1. Introduction

2. Materials and Methods

2.1. Measurement Area and Conditions

2.2. Measurement System and Data Processing

3. Results

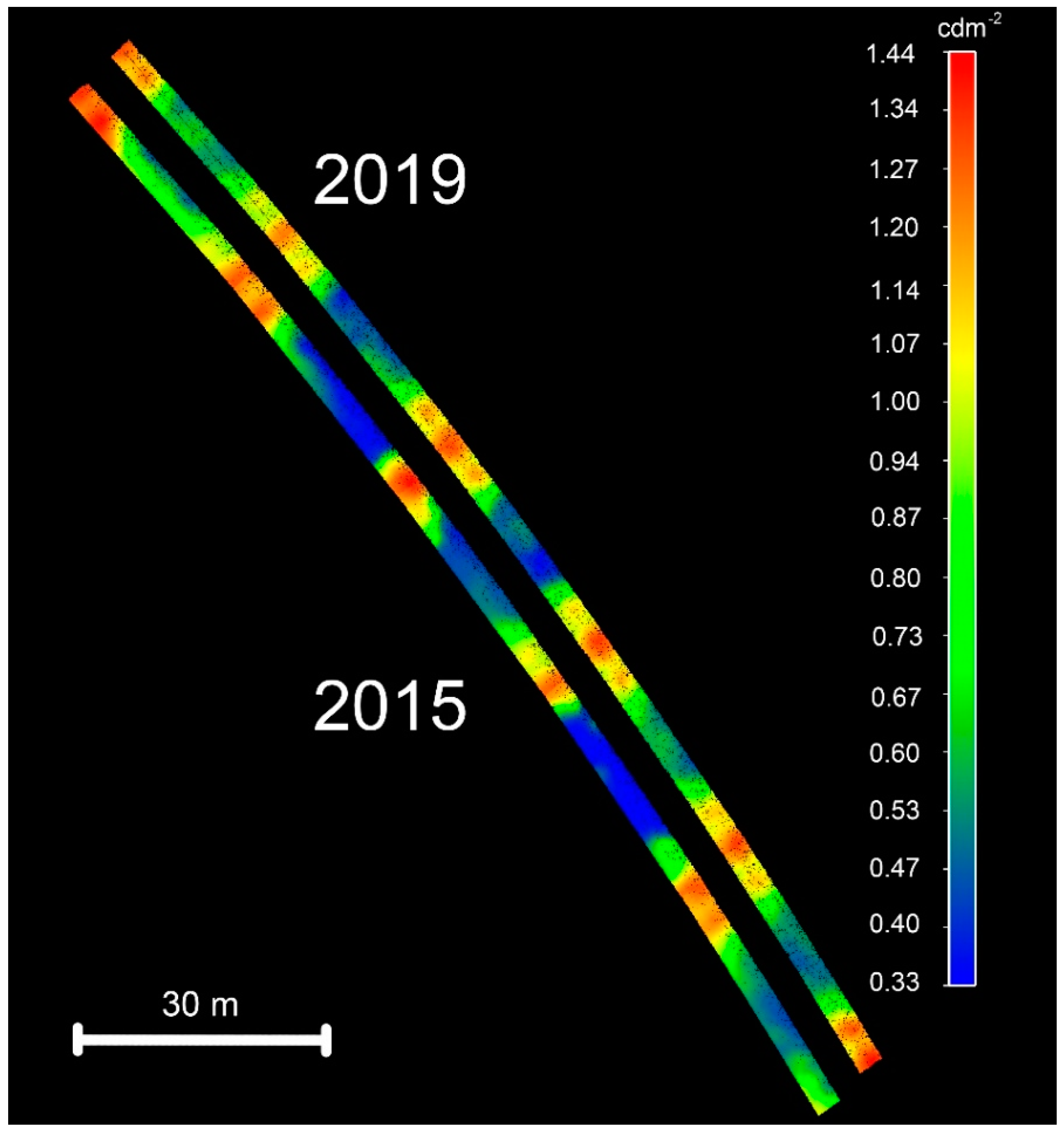

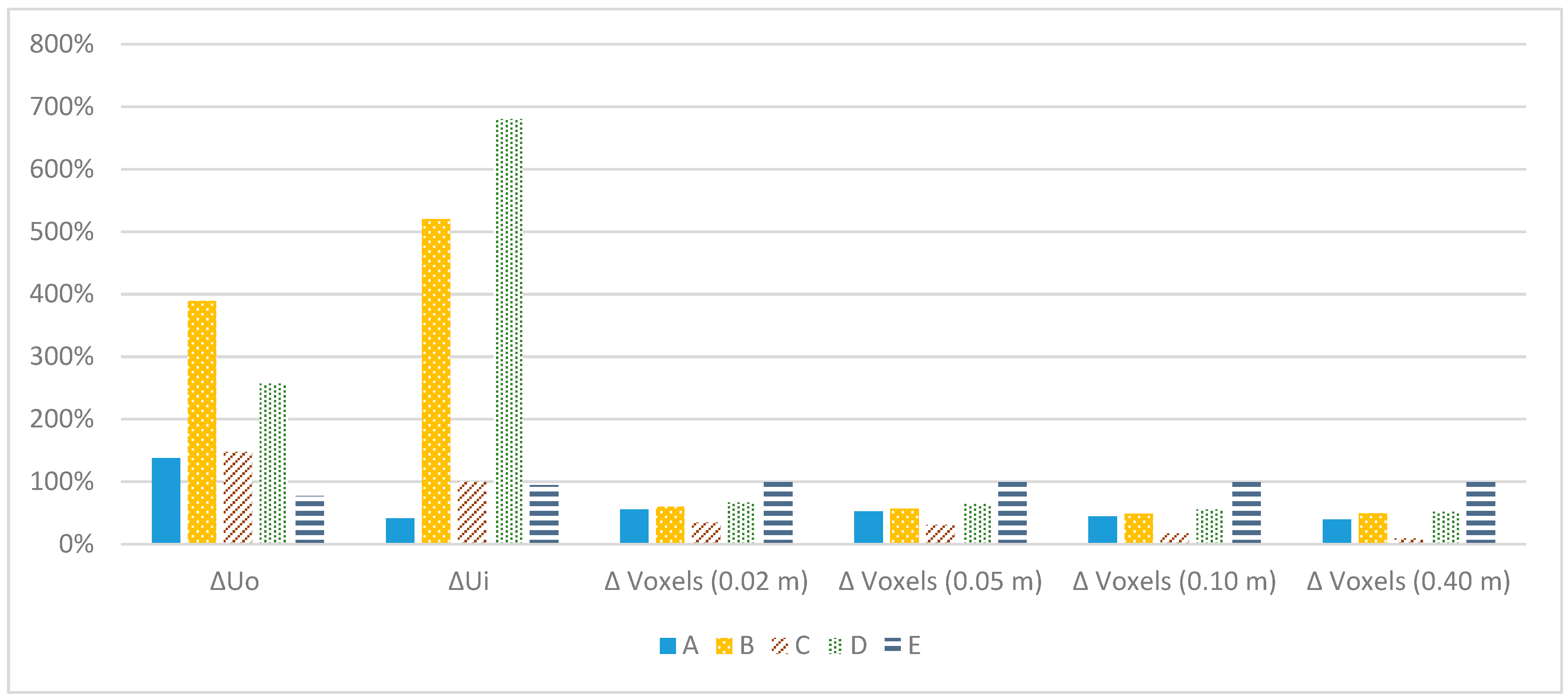

3.1. Overall and Longitudinal Luminance Uniformities
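In the EN 13201 convention, overall uniformity Uo is the ratio of the minimum to the average luminance over the evaluation field. Longitudinal uniformity (presumably the Ui columns in the results tables) is the ratio of the minimum to the maximum luminance along the centre line of each lane, and the lowest lane value is reported. The sketch below assumes those definitions and a luminance grid that has already been sampled from the luminance point cloud. The grid values, the `centre_rows` argument and the function names are hypothetical, and how the grid was extracted in this study is not reproduced.

```python
from statistics import mean


def overall_uniformity(grid):
    """Uo = L_min / L_avg over every point of the evaluation grid."""
    values = [v for row in grid for v in row]
    return min(values) / mean(values)


def longitudinal_uniformity(grid, centre_rows):
    """Ul = L_min / L_max along each lane centre line; the lowest lane value is returned."""
    return min(min(grid[r]) / max(grid[r]) for r in centre_rows)


# Hypothetical luminance grid in cd/m^2: each row is one transverse position,
# each column one longitudinal sample point along the road.
grid = [
    [1.0, 0.8, 0.5, 0.9],  # centre line of lane 1 (hypothetical)
    [1.2, 1.0, 0.6, 1.1],
    [0.9, 0.7, 0.3, 0.8],  # centre line of lane 2 (hypothetical)
]

print(f"Uo = {overall_uniformity(grid):.2f}")
print(f"Ul = {longitudinal_uniformity(grid, centre_rows=[0, 2]):.2f}")
```

Because both ratios are driven by the darkest sample, a single shadowed patch on the road surface can lower them considerably.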

3.2. Occluding Voxel Analysis
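The occluding-voxel counts in the voxel table are reported for voxel sizes of 0.02, 0.05, 0.10 and 0.40 m. A minimal sketch of counting occupied voxels at several sizes follows. It assumes the input is a list of (x, y, z) points in metres that have already been selected as occluding vegetation; the selection criterion used in the study is not reproduced, and the function names and sample points are hypothetical. Each voxel is counted once regardless of how many points fall inside it, which is why the counts shrink as the voxel size grows.

```python
import math


def occupied_voxels(points, voxel_size):
    """Integer (i, j, k) indices of every voxel that contains at least one point.

    math.floor (rather than int) keeps negative coordinates in the correct voxel.
    """
    return {
        (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        for x, y, z in points
    }


def voxel_counts(points, sizes=(0.02, 0.05, 0.10, 0.40)):
    """Number of occupied voxels for each voxel edge length in metres."""
    return {size: len(occupied_voxels(points, size)) for size in sizes}


# Hypothetical occluding-vegetation points (x, y, z) in metres.
points = [(0.0, 0.0, 0.0), (0.01, 0.01, 0.01), (0.03, 0.0, 0.0), (0.51, 0.51, 0.51)]
print(voxel_counts(points))
```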

3.3. Occluding Voxels and Luminance Uniformity Comparison

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Segment | Uo before | Uo after | Uo rel. difference | Ui before | Ui after | Ui rel. difference |
|---|---|---|---|---|---|---|
| A | 0.24 | 0.57 | 137.5% | 0.29 | 0.41 | 41.4% |
| B | 0.09 | 0.44 | 388.9% | 0.05 | 0.31 | 520.0% |
| C | 0.17 | 0.42 | 147.1% | 0.13 | 0.26 | 100.0% |
| D | 0.14 | 0.50 | 257.1% | 0.05 | 0.39 | 680.0% |
| E | 0.30 | 0.53 | 76.7% | 0.17 | 0.33 | 94.1% |
| Average | 0.19 | 0.49 | 157.9% | 0.14 | 0.34 | 142.9% |
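The relative differences in the uniformity table can be recomputed from the before and after values as 100 × (after − before) / before. For the Average row, the tabulated 157.9% and 142.9% match the relative difference between the averaged (and rounded) uniformities rather than the mean of the five segment percentages, which would be roughly 201% for Uo. The sketch below checks this; the values are copied from the table and the function names are illustrative.

```python
from statistics import mean


def rel_diff(before, after):
    """Relative change in percent: 100 * (after - before) / before."""
    return 100.0 * (after - before) / before


# (before, after) pairs copied from the uniformity table.
UO = {"A": (0.24, 0.57), "B": (0.09, 0.44), "C": (0.17, 0.42), "D": (0.14, 0.50), "E": (0.30, 0.53)}
UI = {"A": (0.29, 0.41), "B": (0.05, 0.31), "C": (0.13, 0.26), "D": (0.05, 0.39), "E": (0.17, 0.33)}


def average_row(pairs):
    """Average the uniformities (rounded to two decimals, as tabulated),
    then take the relative difference of those averages."""
    before = round(mean(b for b, _ in pairs.values()), 2)
    after = round(mean(a for _, a in pairs.values()), 2)
    return before, after, rel_diff(before, after)


for name, pairs in (("Uo", UO), ("Ui", UI)):
    for seg, (b, a) in pairs.items():
        print(f"{name} {seg}: {rel_diff(b, a):.1f}%")
    b, a, d = average_row(pairs)
    print(f"{name} average: {b:.2f} -> {a:.2f} ({d:.1f}%)")
```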

| Segment (year) | 0.02 m | 0.05 m | 0.10 m | 0.40 m |
|---|---|---|---|---|
| A (2015) | 11,972 | 10,335 | 6584 | 634 |
| A (2019) | 5364 | 4949 | 3672 | 387 |
| A, rel. difference | −55.2% | −52.1% | −44.2% | −39.0% |
| B (2015) | 79,148 | 68,023 | 43,503 | 3909 |
| B (2019) | 31,990 | 29,664 | 22,295 | 1999 |
| B, rel. difference | −59.6% | −56.4% | −48.8% | −48.9% |
| C (2015) | 22,412 | 19,602 | 12,412 | 933 |
| C (2019) | 14,661 | 13,599 | 10,331 | 849 |
| C, rel. difference | −34.6% | −30.6% | −16.8% | −9.0% |
| D (2015) | 91,272 | 78,677 | 46,808 | 3836 |
| D (2019) | 30,482 | 28,433 | 20,903 | 1864 |
| D, rel. difference | −66.6% | −63.9% | −55.3% | −51.4% |
| E (2015) | 18,063 | 15,856 | 10,233 | 944 |
| E (2019) | 0 | 0 | 0 | 0 |
| E, rel. difference | −100.0% | −100.0% | −100.0% | −100.0% |
| Average (2015) | 44,573.4 | 38,498.6 | 23,908.0 | 2051.2 |
| Average (2019) | 16,499.4 | 15,329.0 | 11,440.2 | 1019.8 |
| Average rel. difference | −63.2% | −60.6% | −53.0% | −49.6% |
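In the voxel table, by contrast, the averaged relative differences at the bottom (for example −63.2% at 0.02 m) equal the mean of the five segment percentages; the relative difference between the 2015 and 2019 average counts would instead give about −63.0% at that size. The sketch below computes both from the tabulated counts. The data are copied from the table; the function names are illustrative.

```python
from statistics import mean

# Occluding-voxel counts copied from the voxel table:
# segment -> (2015 counts, 2019 counts) for voxel sizes 0.02, 0.05, 0.10 and 0.40 m.
SIZES_M = (0.02, 0.05, 0.10, 0.40)
COUNTS = {
    "A": ((11972, 10335, 6584, 634), (5364, 4949, 3672, 387)),
    "B": ((79148, 68023, 43503, 3909), (31990, 29664, 22295, 1999)),
    "C": ((22412, 19602, 12412, 933), (14661, 13599, 10331, 849)),
    "D": ((91272, 78677, 46808, 3836), (30482, 28433, 20903, 1864)),
    "E": ((18063, 15856, 10233, 944), (0, 0, 0, 0)),
}


def rel_diff(before, after):
    """Relative change in percent."""
    return 100.0 * (after - before) / before


def mean_of_segment_changes(i):
    """Mean of the five per-segment relative differences for voxel size index i."""
    return mean(rel_diff(b[i], a[i]) for b, a in COUNTS.values())


def change_of_mean_counts(i):
    """Relative difference between the 2015 and 2019 mean counts (alternative)."""
    return rel_diff(mean(b[i] for b, _ in COUNTS.values()),
                    mean(a[i] for _, a in COUNTS.values()))


for i, size in enumerate(SIZES_M):
    print(f"{size:.2f} m: mean of segment changes {mean_of_segment_changes(i):.1f}%, "
          f"change of mean counts {change_of_mean_counts(i):.1f}%")
```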

| Segment | A | B | C | D | E |
|---|---|---|---|---|---|
| ΔUo | 138% | 389% | 147% | 257% | 77% |
| ΔUi | 41% | 520% | 100% | 680% | 94% |
| Voxel reduction (0.02 m) | 55% | 60% | 35% | 67% | 100% |
| Voxel reduction (0.05 m) | 52% | 56% | 31% | 64% | 100% |
| Voxel reduction (0.10 m) | 44% | 49% | 17% | 55% | 100% |
| Voxel reduction (0.40 m) | 39% | 49% | 9% | 51% | 100% |
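One simple way to compare the rows of the summary table is a rank correlation across the five segments. The sketch below computes Spearman's coefficient with the textbook formula 1 − 6Σd²/(n(n² − 1)), which is valid here because no row contains tied values. It is an illustration, not the analysis reported in the study, and with only five segments any such coefficient is highly uncertain. The variable and function names are hypothetical.

```python
# Rows copied from the summary table (segments A to E, in percent).
DELTA_UO = [138, 389, 147, 257, 77]
DELTA_UI = [41, 520, 100, 680, 94]
VOXEL_REDUCTION = {
    0.02: [55, 60, 35, 67, 100],
    0.05: [52, 56, 31, 64, 100],
    0.10: [44, 49, 17, 55, 100],
    0.40: [39, 49, 9, 51, 100],
}


def ranks(values):
    """1-based ascending ranks; assumes all values are distinct (no tie handling)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        result[i] = rank
    return result


def spearman(x, y):
    """Spearman rank correlation, 1 - 6*sum(d^2) / (n*(n^2 - 1)); ties not handled."""
    n = len(x)
    d2 = sum((rx - ry) ** 2 for rx, ry in zip(ranks(x), ranks(y)))
    return 1 - 6 * d2 / (n * (n * n - 1))


for size, reduction in VOXEL_REDUCTION.items():
    print(f"{size:.2f} m: rho(Uo) = {spearman(DELTA_UO, reduction):+.2f}, "
          f"rho(Ui) = {spearman(DELTA_UI, reduction):+.2f}")
```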

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Maksimainen, M.; Vaaja, M.T.; Kurkela, M.; Virtanen, J.-P.; Julin, A.; Jaalama, K.; Hyyppä, H. Nighttime Mobile Laser Scanning and 3D Luminance Measurement: Verifying the Outcome of Roadside Tree Pruning with Mobile Measurement of the Road Environment. ISPRS Int. J. Geo-Inf. 2020, 9, 455. https://doi.org/10.3390/ijgi9070455
