Creating Choropleth Maps by Artificial Intelligence—Case Study on ChatGPT-4

Abstract

1. Introduction

2. Geo-Artificial Intelligence

3. The Current State of Large Language Models in Cartography

Prompt Engineering

- (1) Giving instructions: When prompted with only basic commands, the model tends to return broad answers. A comprehensive description is therefore necessary to obtain more accurate and relevant results.

- (2) Be clear and precise: This approach entails crafting prompts that are unambiguous and specific. When faced with vague prompts, the model tends to produce outputs that are broadly applicable and may not fit the particular situation. A prompt that is both detailed and precise, on the other hand, allows the model to produce content that closely matches the specific demands.

- (3) Try several times: Because LLM output is unpredictable, running the same prompt several times helps explore the variation in the model’s responses and raises the probability of obtaining a high-quality result.

- (4) Role-prompting: In role-prompting, the model is assigned a specific persona. This strategy helps the model’s response match the expected outcome. For example, when prompted to assume the role of a historian, the model becomes more likely to offer responses that are both detailed and contextually precise regarding historical events.
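The four strategies above can be combined in a few lines of Python. The following is only a minimal sketch, not the prompts or tooling used in the study: `build_prompt`, `best_of`, `fake_generate`, and `count_keywords` are hypothetical names, and the canned replies stand in for real model responses so that the example runs without any API access.

```python
from typing import Callable, Iterable


def build_prompt(role: str, task: str, details: Iterable[str]) -> str:
    """Combine a persona (role-prompting) with an instruction and precise requirements."""
    lines = [f"You are {role}.", task, "Requirements:"]
    lines += [f"- {detail}" for detail in details]
    return "\n".join(lines)


def best_of(generate: Callable[[str], str], prompt: str,
            score: Callable[[str], float], attempts: int = 3) -> str:
    """Send the same prompt several times and keep the highest-scoring reply."""
    candidates = [generate(prompt) for _ in range(attempts)]
    return max(candidates, key=score)


prompt = build_prompt(
    role="an experienced cartographer",
    task="Write Python code that draws a choropleth map.",
    details=[
        "Use the GeoPandas library.",
        "Place the legend in the lower left corner.",
    ],
)

# Stand-in for a real LLM call (assumption): returns canned replies in order.
_canned = iter([
    "import geopandas",
    "import geopandas\n# legend in lower left",
    "print('hi')",
])


def fake_generate(_prompt: str) -> str:
    return next(_canned)


def count_keywords(reply: str) -> int:
    """Toy quality score: how many expected keywords the reply mentions."""
    return sum(keyword in reply for keyword in ("geopandas", "legend"))


best = best_of(fake_generate, prompt, count_keywords, attempts=3)
```

In practice the scoring step would more likely be a human inspection of the generated map than a keyword count; the toy score is used here only to make the "try several times" idea concrete.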

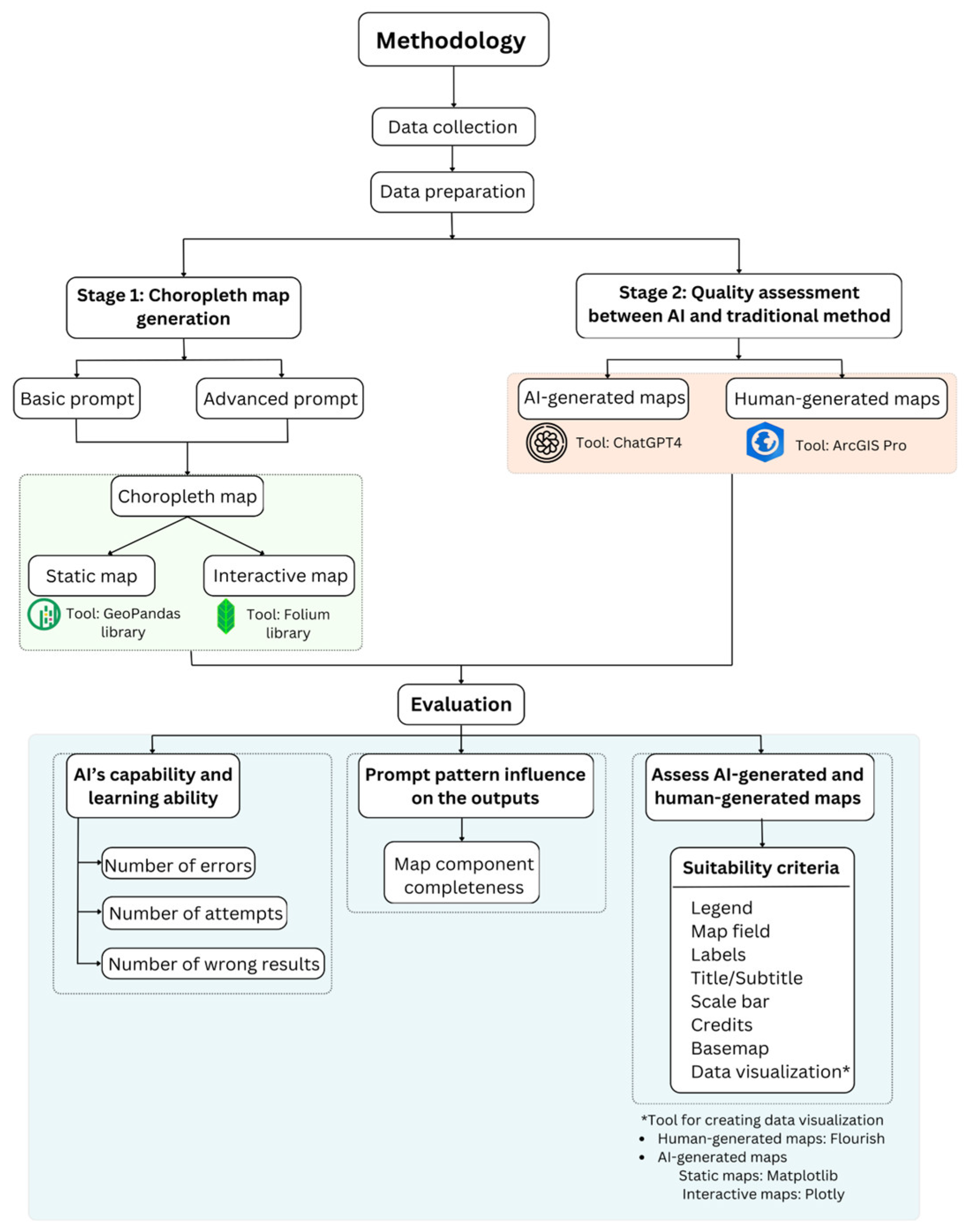

4. Methodology

4.1. Overview

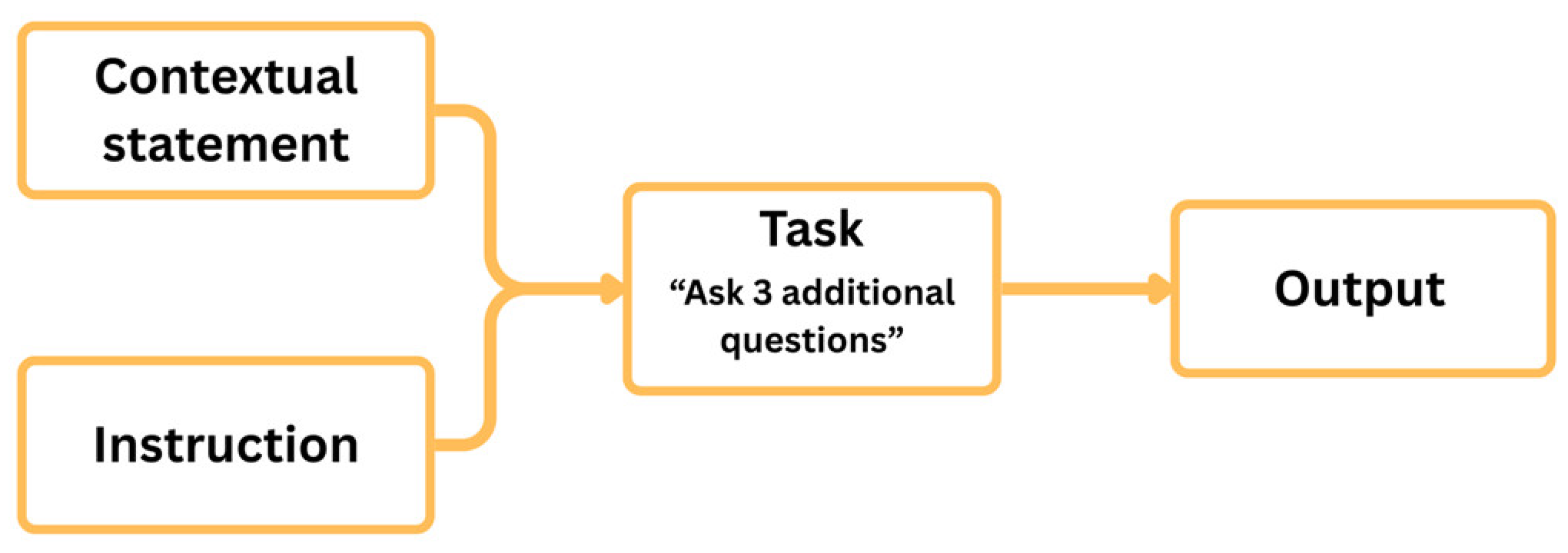

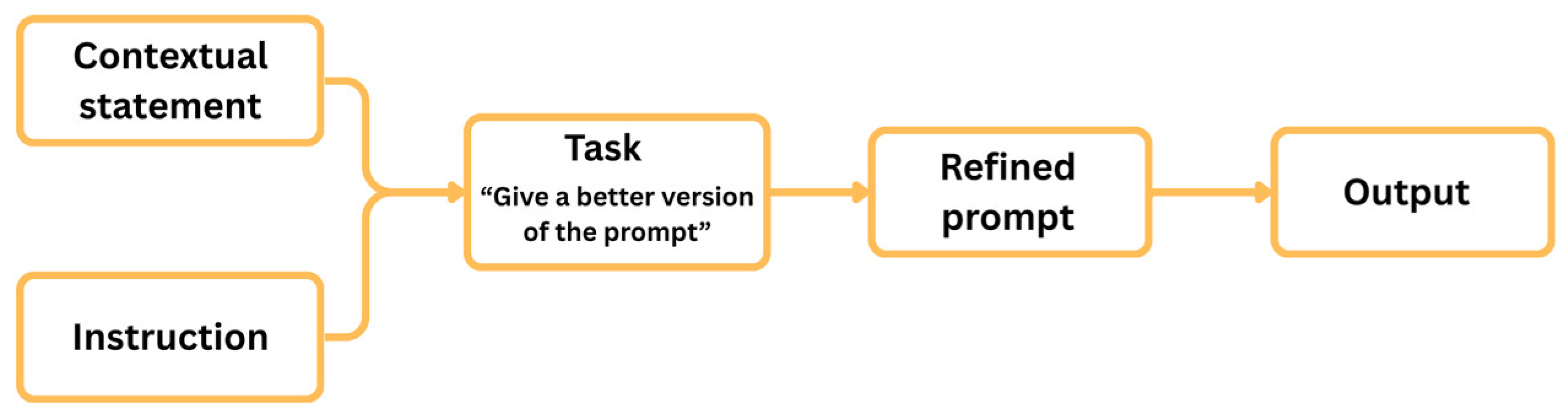

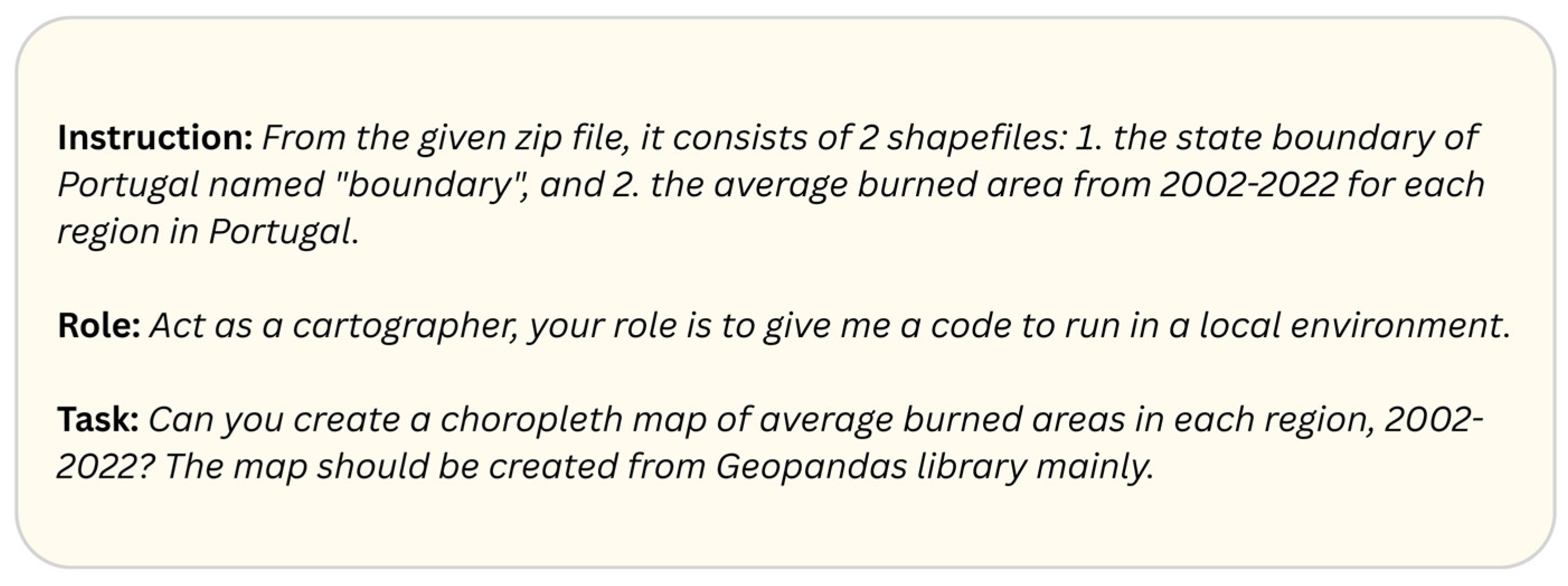

4.2. Prompt Patterns

4.2.1. Basic Prompt Pattern

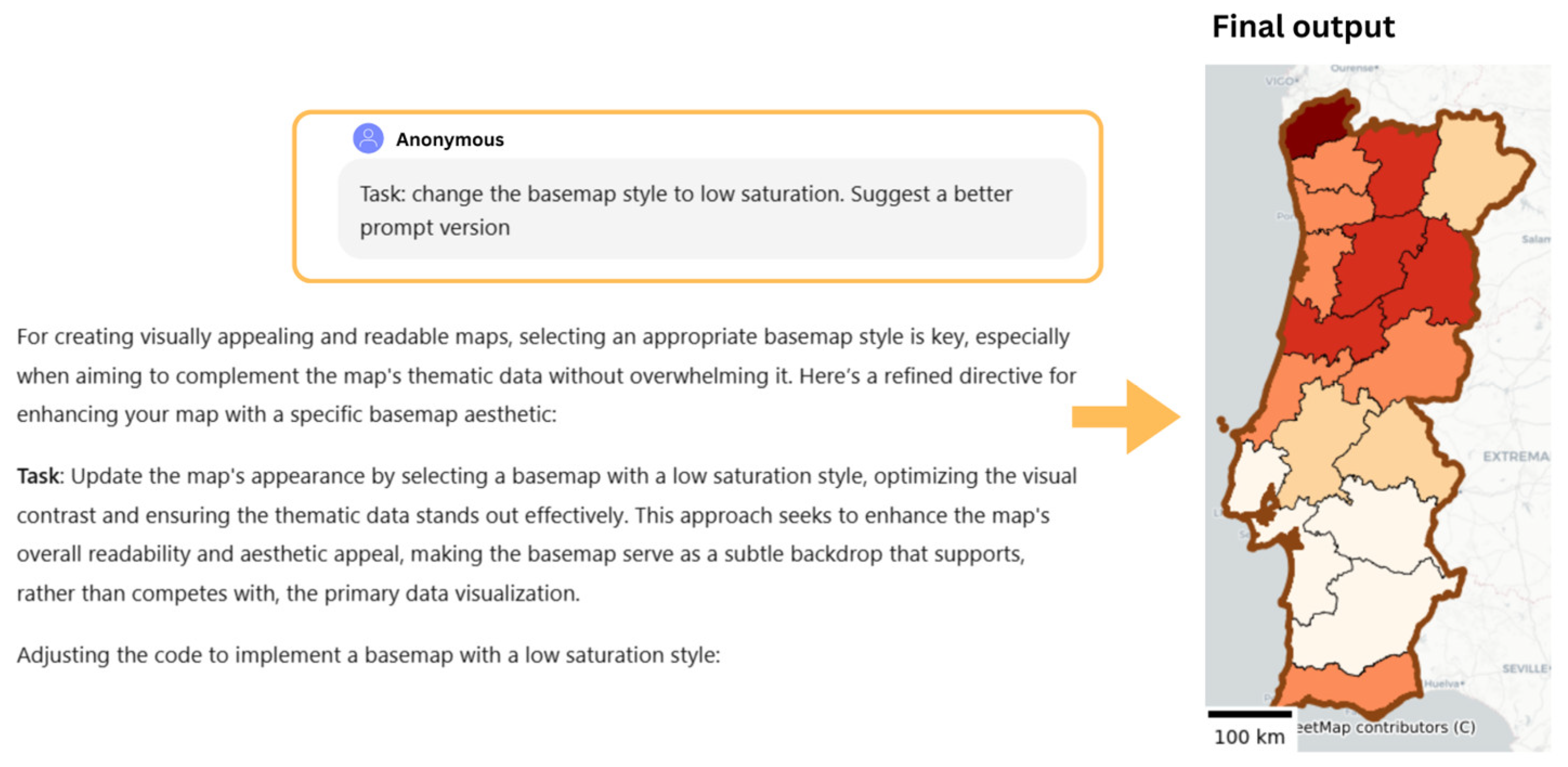

4.2.2. Advanced Prompt Pattern

4.3. Software and Technical Tools

- ChatGPT-4: A generative AI model trained to produce human-like text responses to given prompts. Because it interprets natural-language input, users can refine their requests interactively within the context of the conversation. In this study, ChatGPT-4 is used as an AI tool for generating code snippets for both static and interactive maps from textual prompts. In the context of geovisualization, libraries such as Folium and GeoPandas play a crucial role in this research.

- Folium: Folium is a Python library for creating interactive maps, built on top of the Leaflet JavaScript mapping library. To maintain consistency in the outputs, Folium is used to generate all interactive maps.

- GeoPandas: GeoPandas is used to produce the static map versions. It is an open-source Python library that extends pandas to handle geospatial data and plots maps by leveraging Matplotlib and several other libraries.

- ArcGIS Pro: ArcGIS Pro is GIS software developed by Esri for creating maps, managing geospatial data, and performing spatial analysis. For the human-generated maps, ArcGIS Pro was used as the traditional GIS tool for comparison with the AI-generated outputs.

1. AI-generated data visualization

   - Matplotlib: Matplotlib is a popular Python library for creating static and interactive data visualizations, such as charts or diagrams. In this study, the data visualizations in the static maps were generated with Matplotlib.

   - Plotly: Plotly is an open-source library for creating interactive data visualizations. It offers a range of visualization types, from basic charts to more complex plots. In this study, the data visualizations in the interactive maps were generated with Plotly.

2. Human-generated data visualization

   - Flourish: Flourish is a platform for creating interactive data visualizations, including bar charts, pie charts, scatter plots, and more. The charts for the human-generated maps were created in and exported from Flourish.

5. AI Map Generation

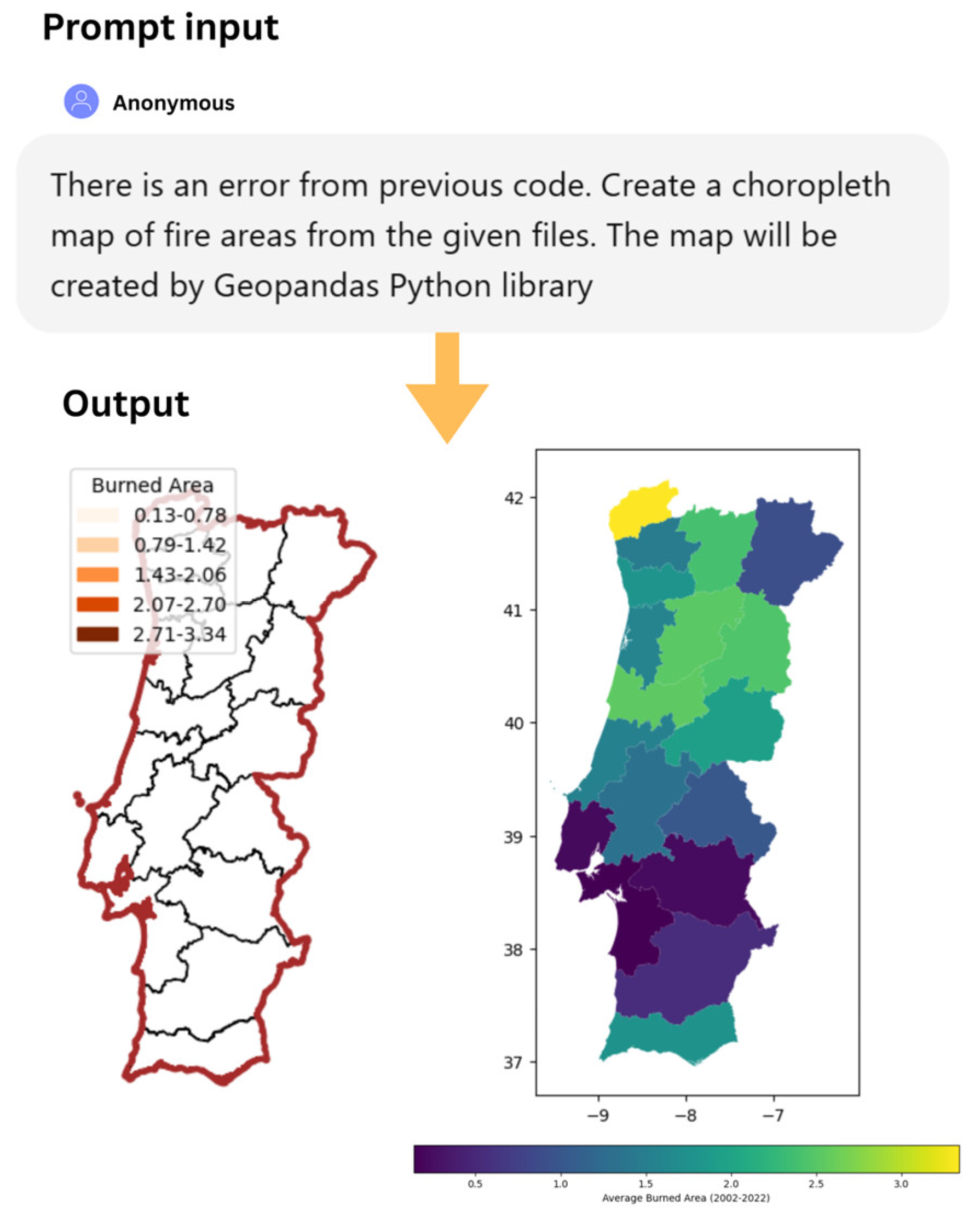

5.1. Basic Prompt on the Static Map

5.1.1. Map Field

5.1.2. Legend

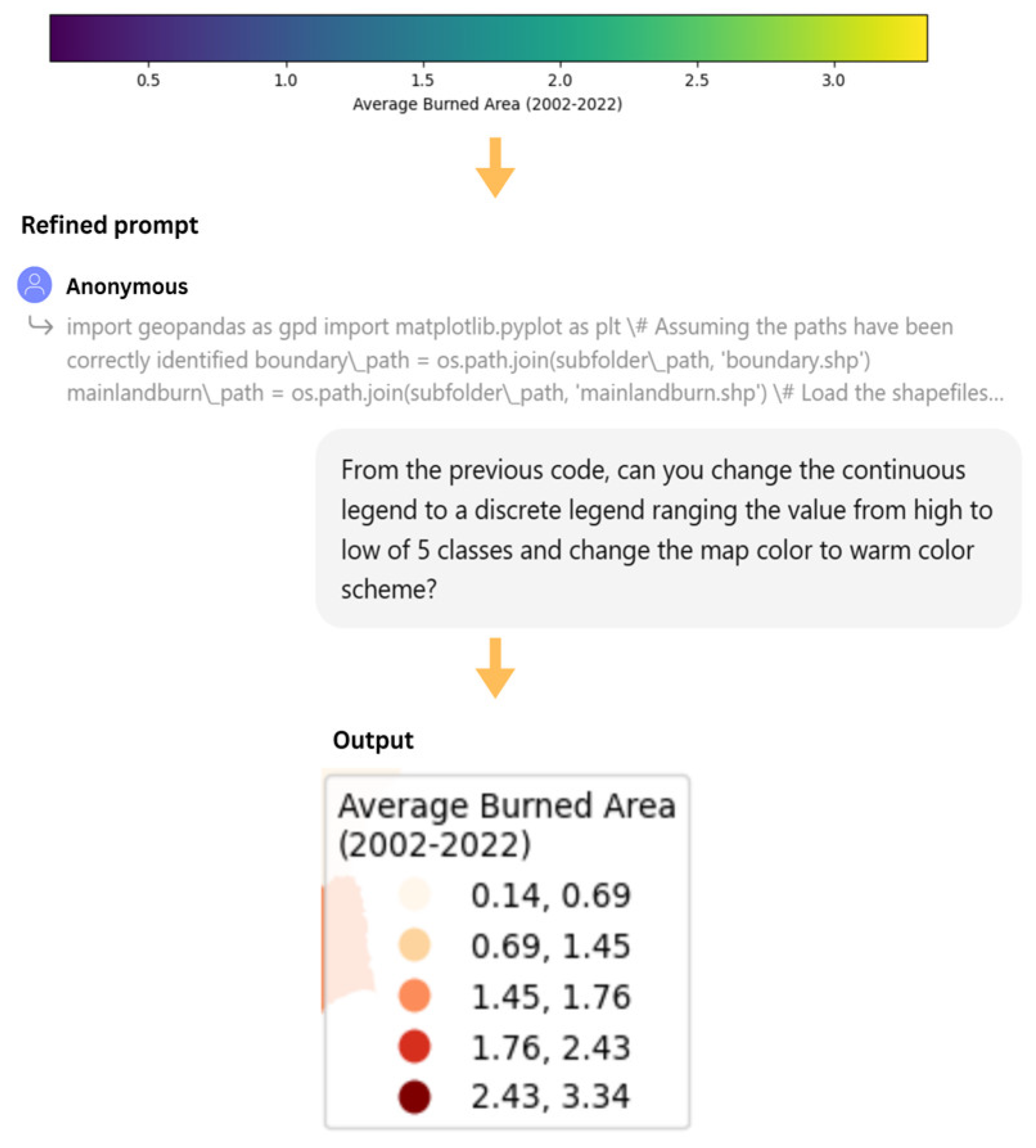

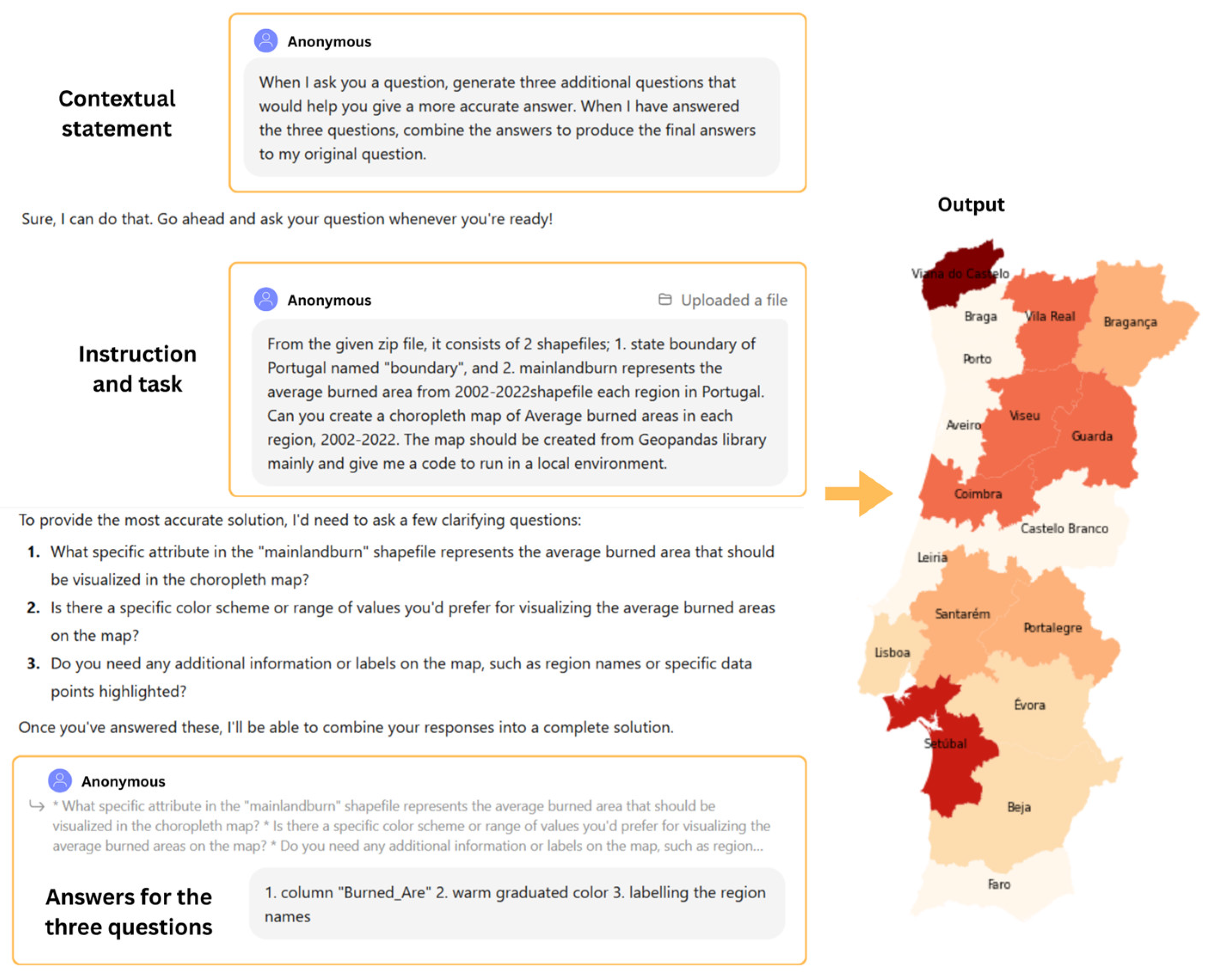

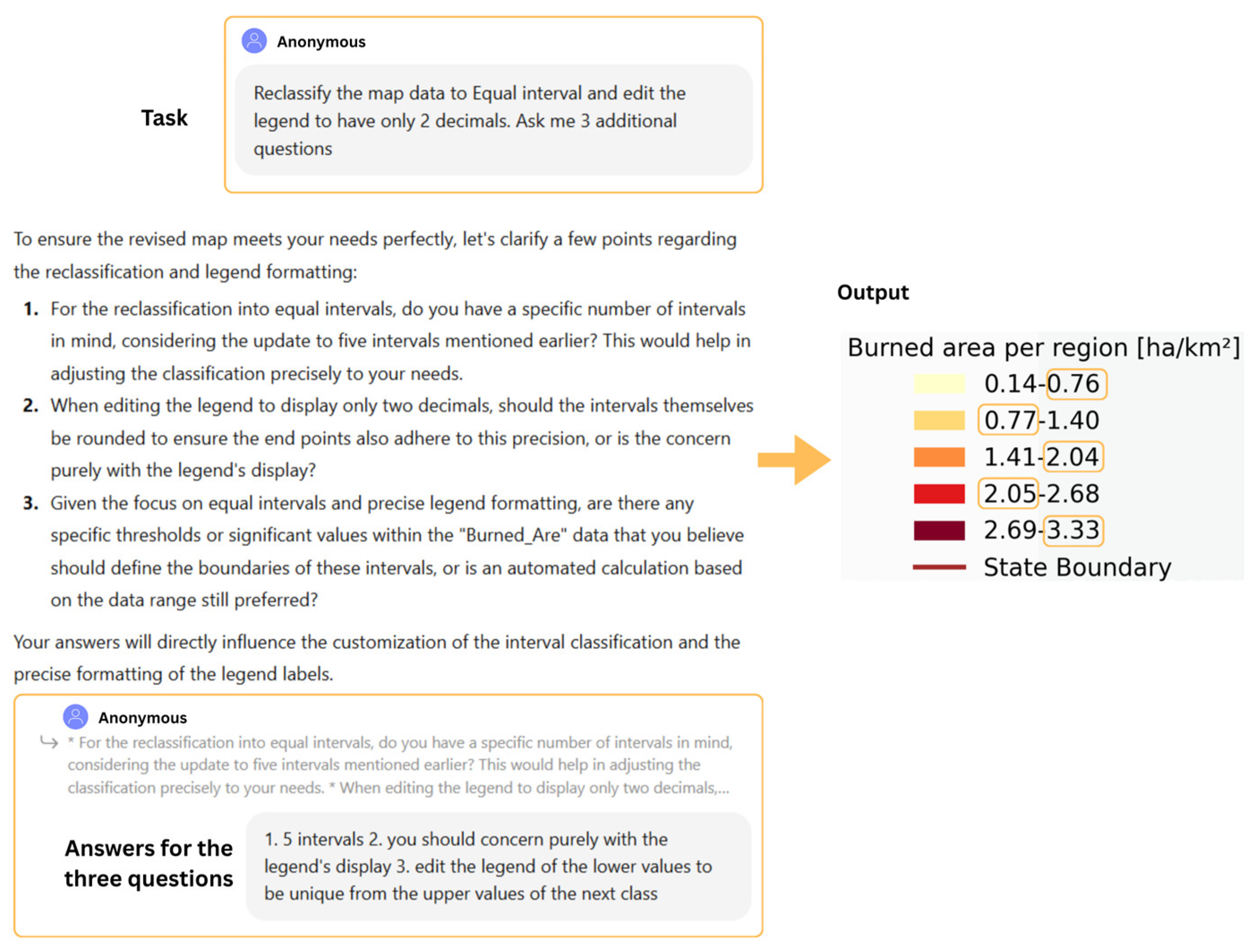

5.2. Advanced Prompt on the Static Map

5.2.1. Map Field

5.2.2. Legend

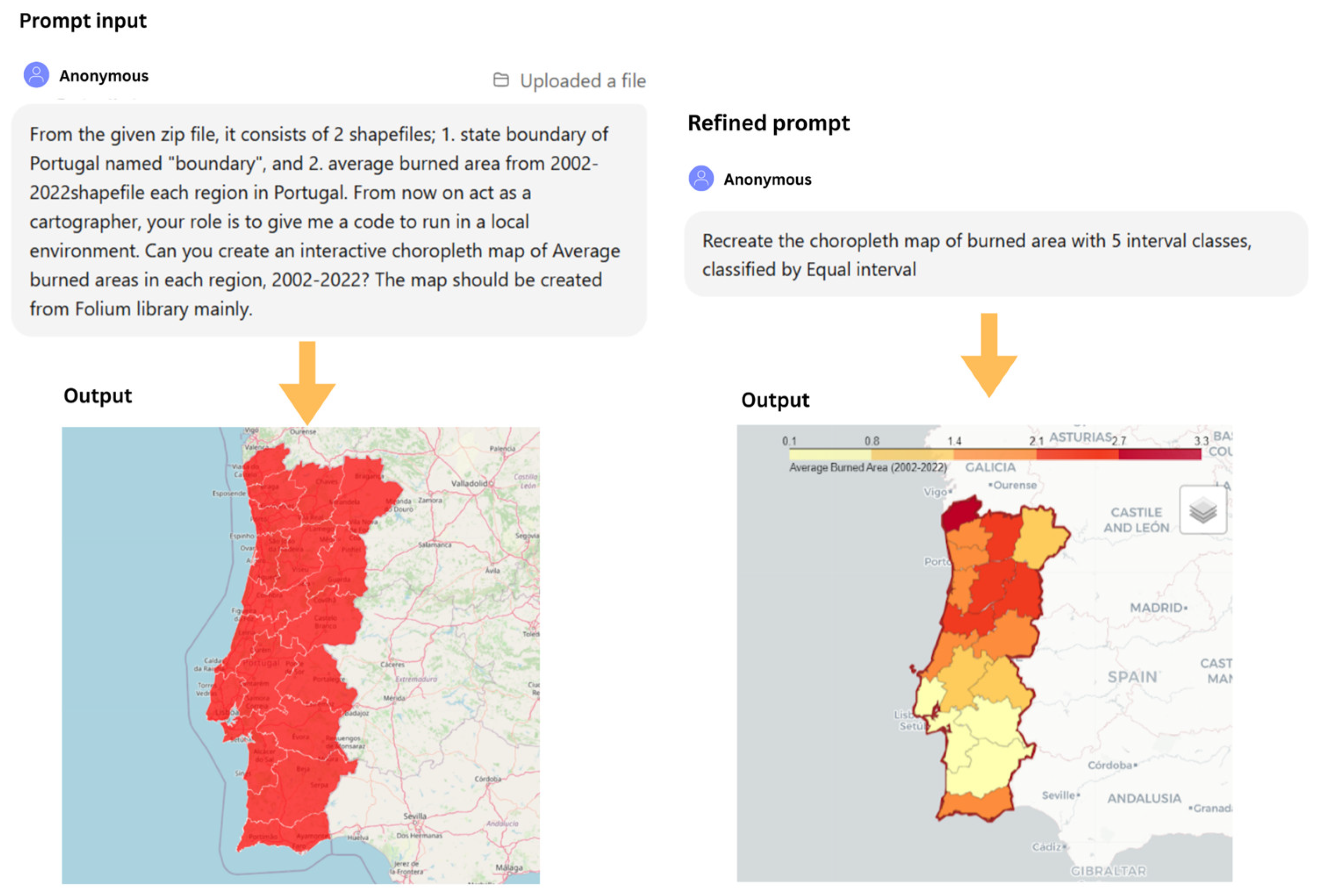

5.3. Basic Prompt on the Interactive Map

5.3.1. Map Field

5.3.2. Legend

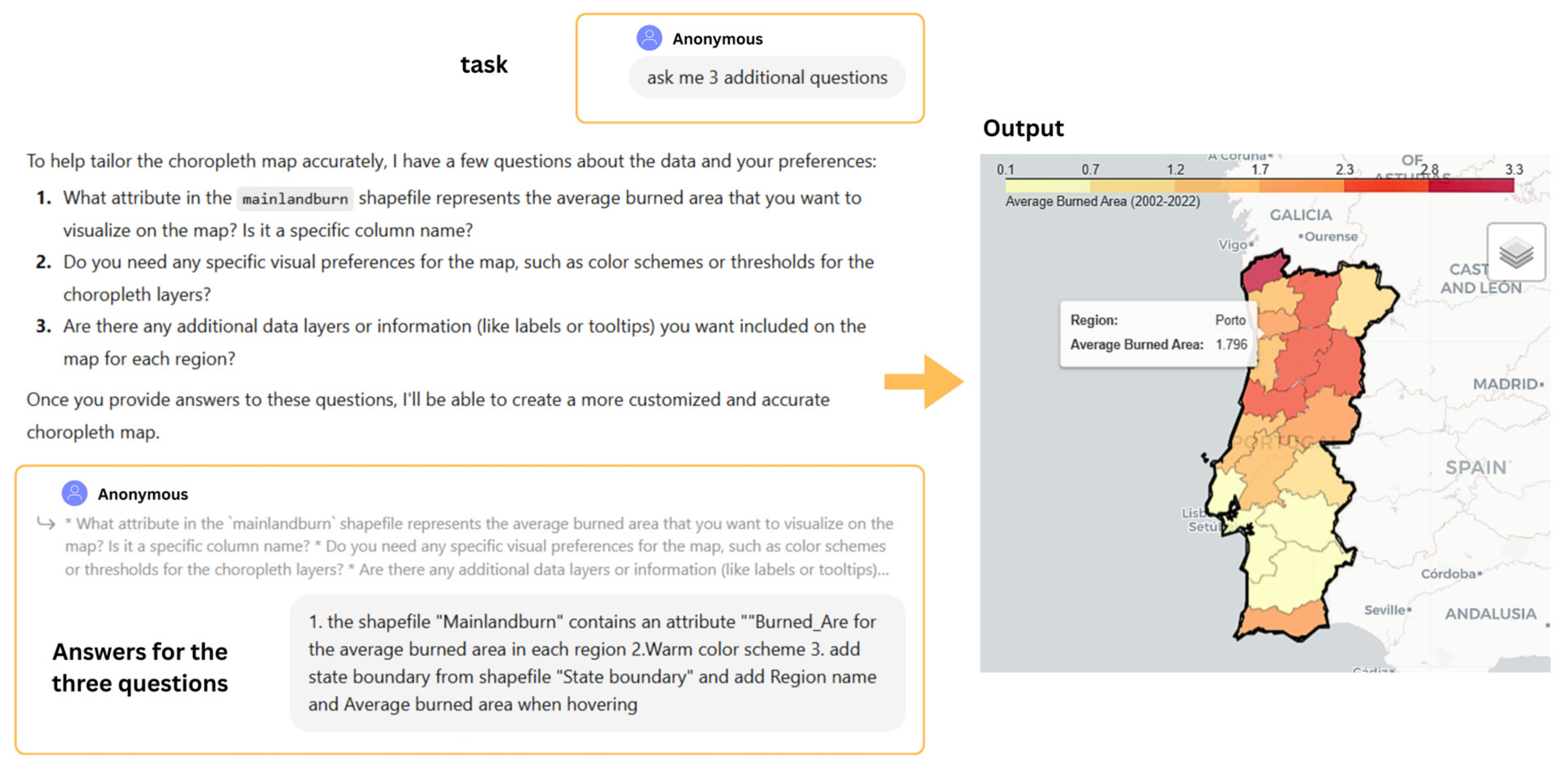

5.4. Advanced Prompt on the Interactive Map

5.4.1. Map Field

5.4.2. Legend

5.5. Map Compositions

5.5.1. Title and Subtitle

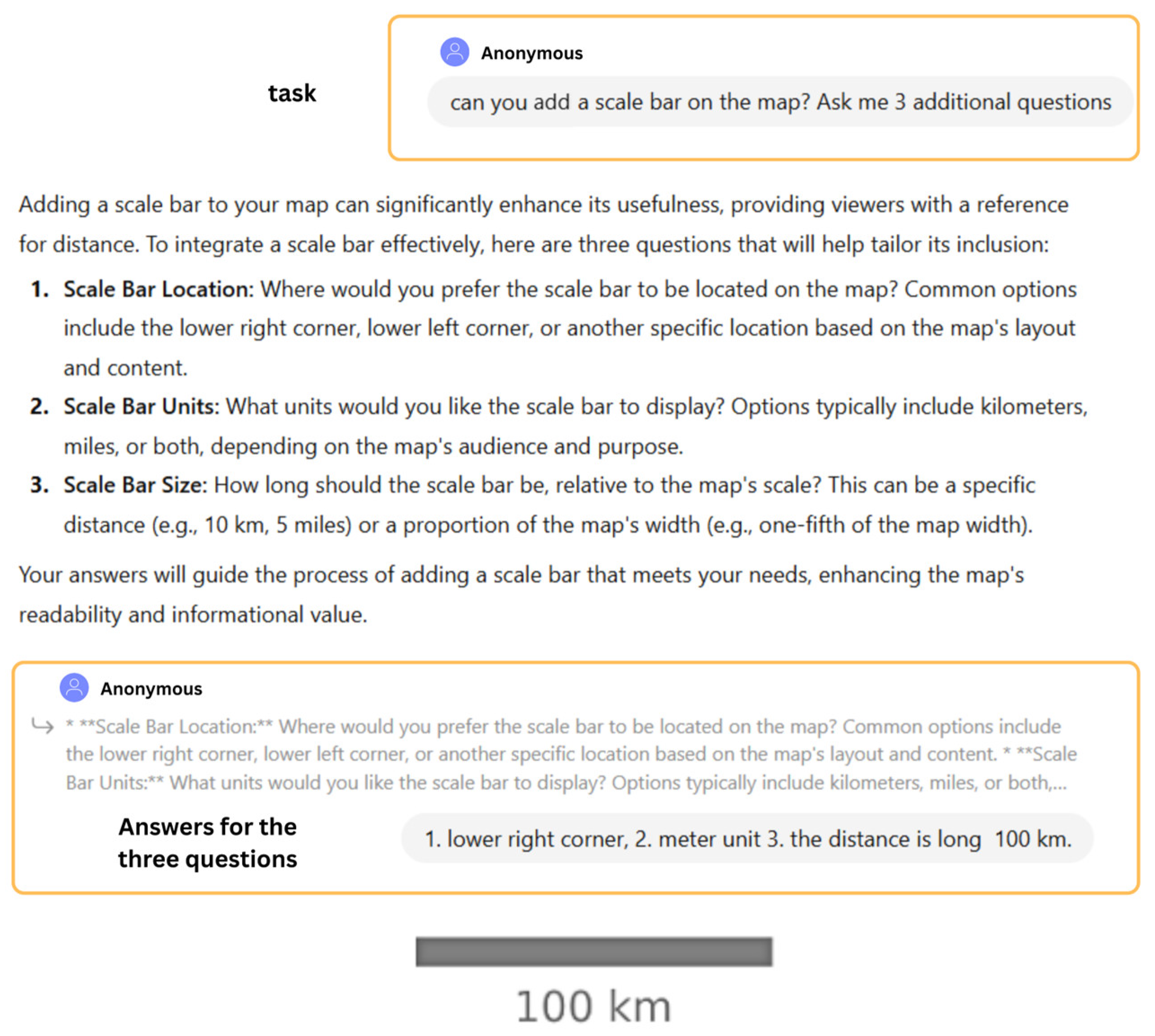

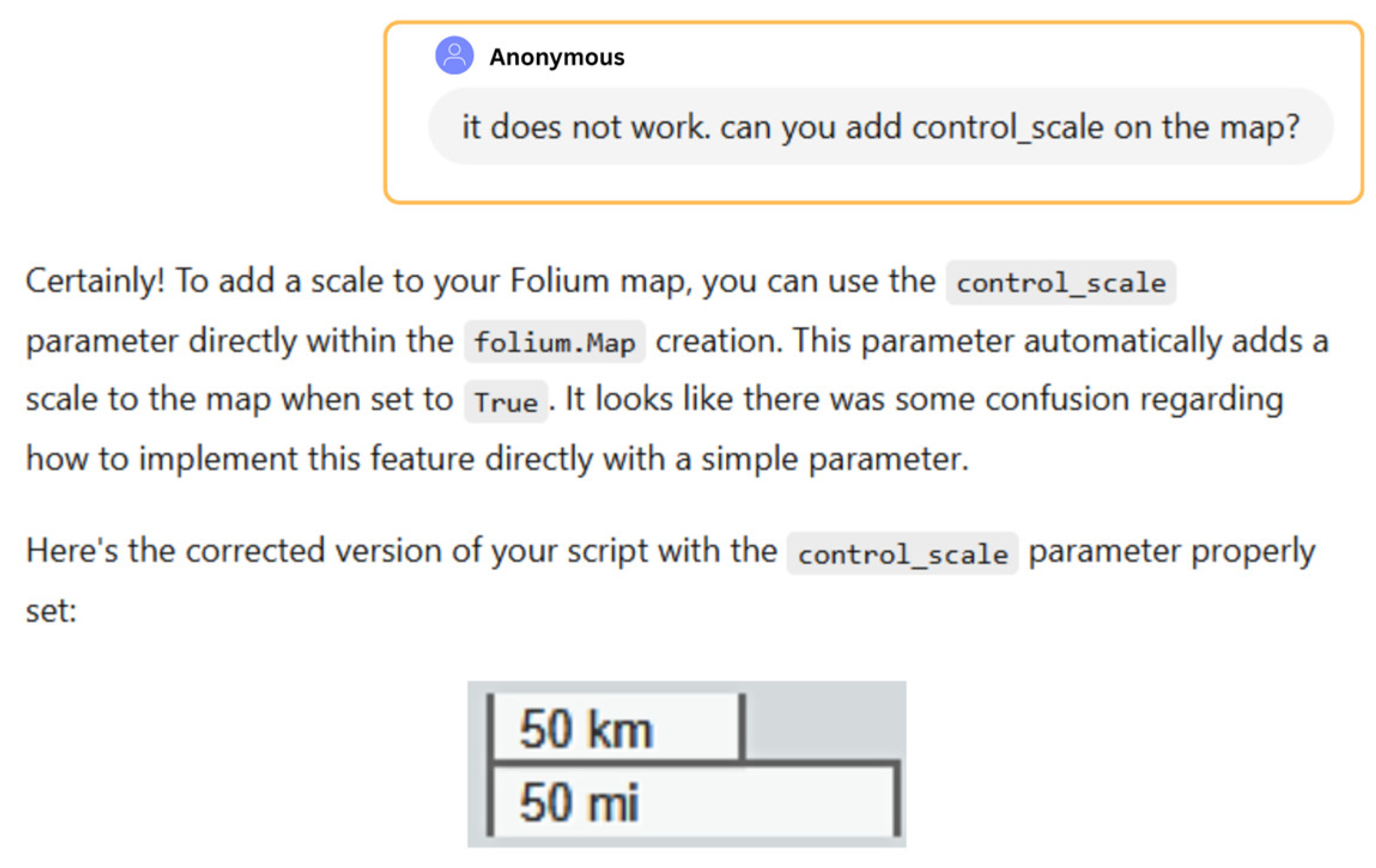

5.5.2. Scale Bar

- Scale Bar Placement: Without guidance, the scale bar may be placed in an inappropriate location where it overlaps other elements. Common positions to request in the prompt are the lower right, lower left, and upper left corners, where the bar does not obscure map details.

- Scale Bar Unit of Measurement: The scale bar uses the ‘matplotlib-scalebar’ package, which provides a choice of measurement units (e.g., kilometers or miles). The prompt also includes the ‘Scale Bar Length’, from which the length of the scale bar in pixels is calculated so that the bar accurately represents the stated distance in kilometers.

- Scale Bar Style: To make the scale bar less dominant than the map field, the prompt can customize the color of both the bar and its numeric text.
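The pixel-length calculation mentioned above comes down to simple proportional arithmetic. The sketch below illustrates it under the assumption that the map scale is uniform across the image, which holds only approximately depending on the projection and extent; the function names and the example map dimensions are hypothetical and are not taken from the study's prompts.

```python
KM_PER_MILE = 1.609344  # exact, by definition of the international mile


def scale_bar_length_px(bar_km: float, map_width_km: float, map_width_px: int) -> float:
    """Pixels needed to draw a bar that represents `bar_km` on the ground.

    Assumes a uniform scale: every pixel covers the same ground distance.
    """
    if map_width_km <= 0 or map_width_px <= 0:
        raise ValueError("map extent and pixel width must be positive")
    km_per_px = map_width_km / map_width_px
    return bar_km / km_per_px


def km_to_miles(km: float) -> float:
    """Convert kilometers to miles for a scale bar labeled in miles."""
    return km / KM_PER_MILE


# Hypothetical map: 1000 km of ground distance drawn across 2000 pixels.
bar_px = scale_bar_length_px(bar_km=100, map_width_km=1000, map_width_px=2000)
print(f"A 100 km bar is {bar_px:.0f} px long")  # prints "A 100 km bar is 200 px long"
```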

5.5.3. Credits

5.5.4. Basemaps

5.5.5. Data Visualization

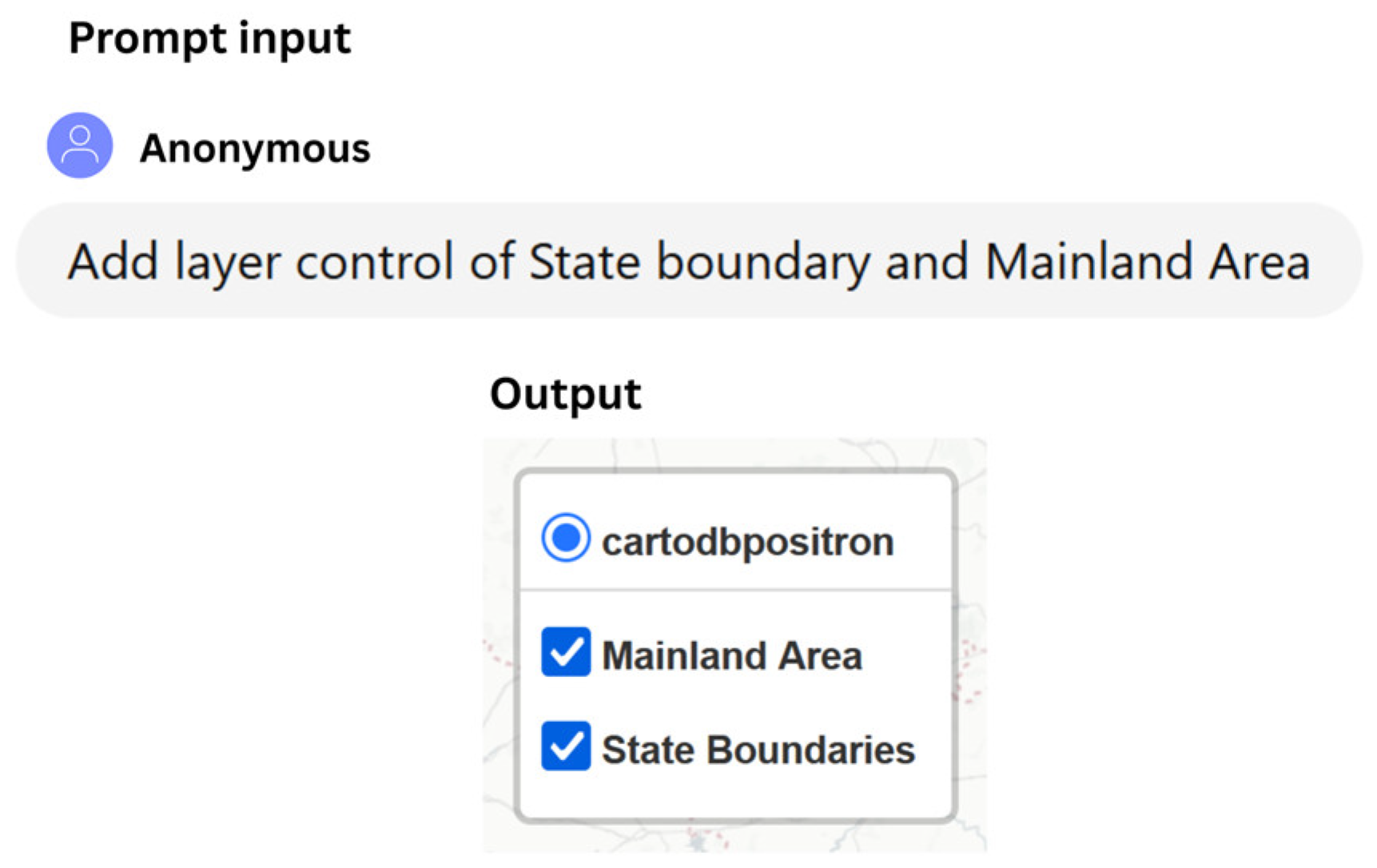

5.5.6. Tooltips and Layer Control

6. Quality Assessment

7. Discussion

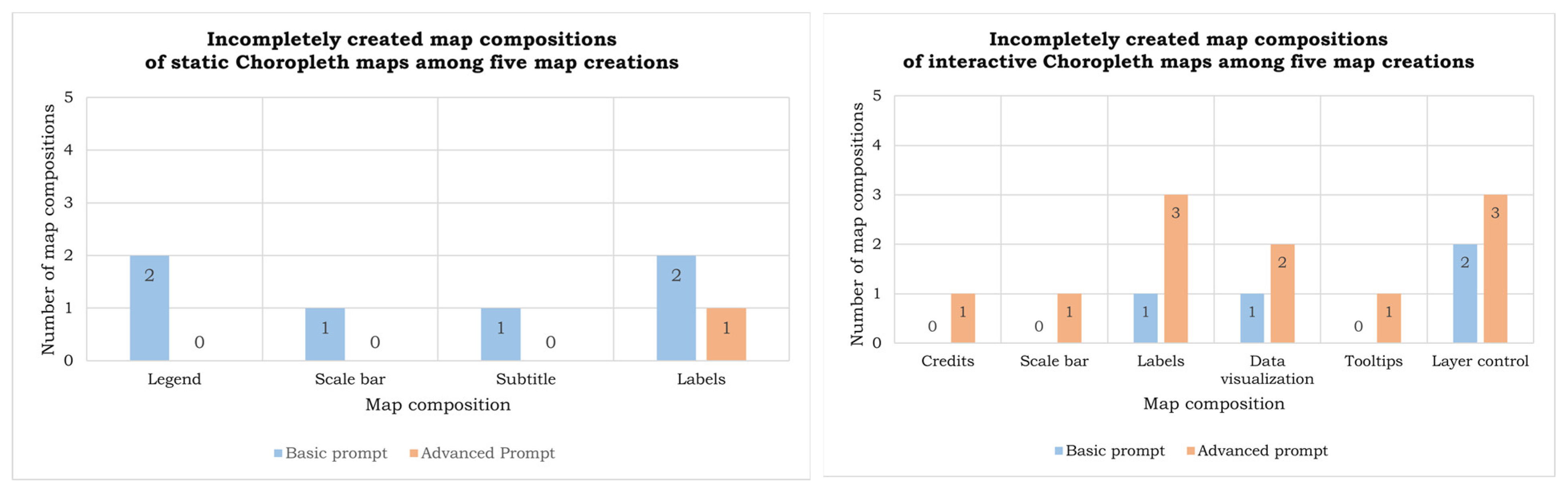

7.1. Cartographic Completeness (RQ1)

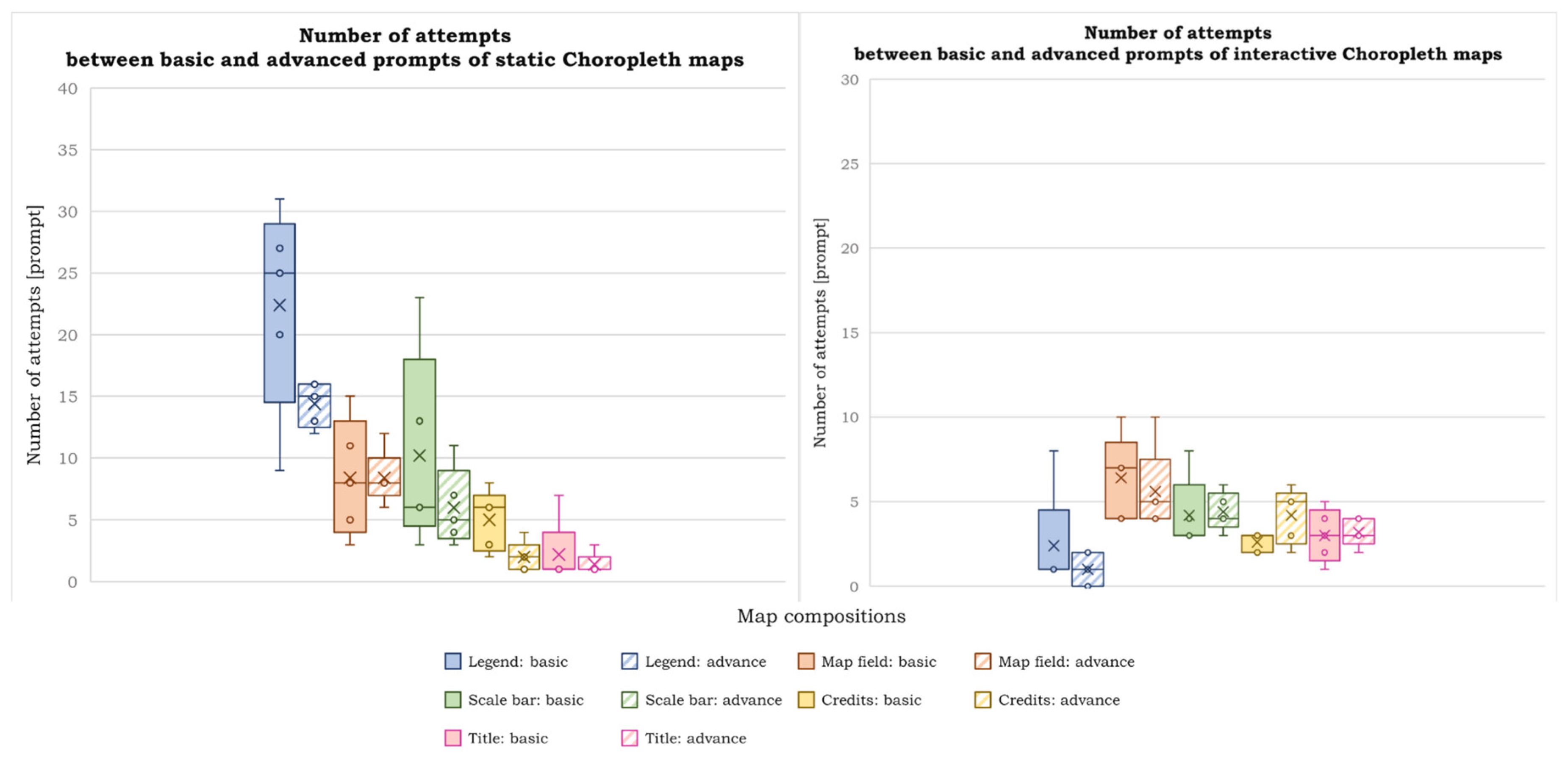

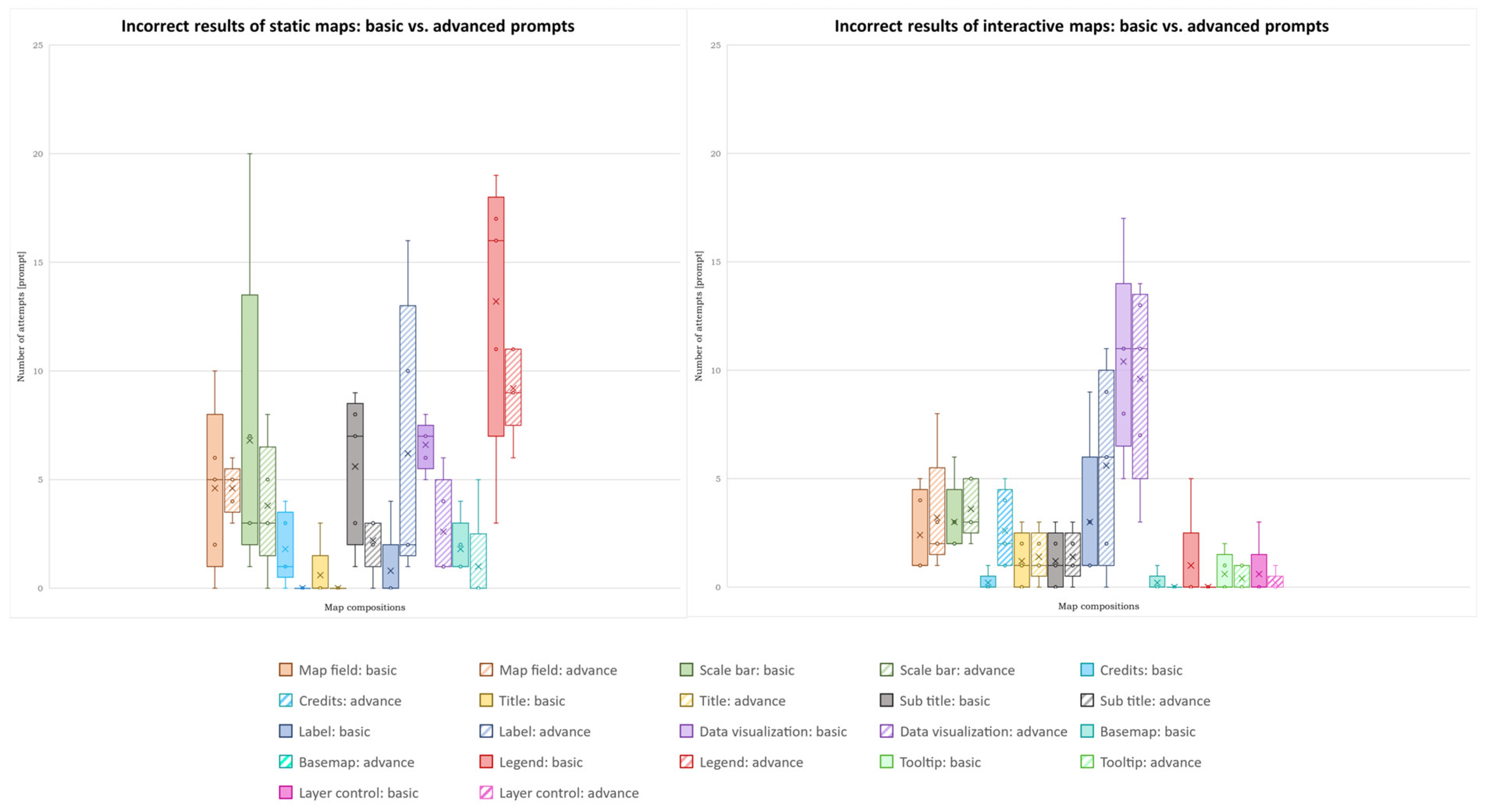

7.2. AI’s Capability in Map Generation Across Two Prompt Patterns (RQ2)

7.2.1. Number of Attempts

7.2.2. Number of Incorrect Results

7.2.3. Number of Error Messages

7.3. Map Quality: AI vs. Traditional Method (RQ3)

7.4. Comparison with Recent Work on ChatGPT-4

7.5. Time Efficiency

8. Limitations and Future Work

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

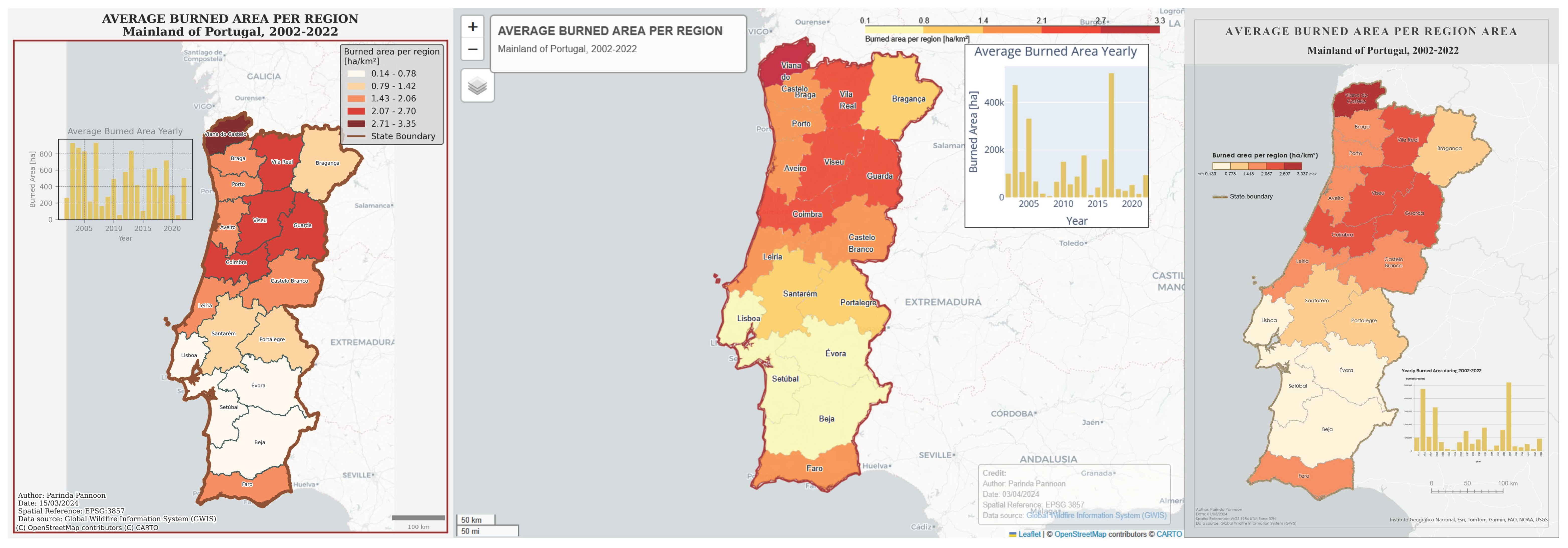

Appendix B. Static Maps

Appendix B.1. Basic Prompt Pattern

Appendix B.2. Advanced Prompt Pattern

Appendix C. Interactive Maps

Appendix C.1. Basic Prompt Pattern

Appendix C.2. Advanced Prompt Pattern


Suitability Levels

| Map Compositions | Least Suitable | Intermediate | Most Suitable |
|---|---|---|---|
| 1. Legend | | | |
| 2. Map field | | | |

Error Messages Occurred During Code Generation

| Map Compositions | Static Map: Basic Prompt | Static Map: Advanced Prompt | Interactive Map: Basic Prompt | Interactive Map: Advanced Prompt |
|---|---|---|---|---|
| Legend | 1 | 0 | 0 | 0 |
| Map field | 12 | 0 | 3 | 6 |
| Scale bar | 4 | 0 | 1 | 0 |
| Credits | 0 | 0 | 1 | 0 |
| Data visualization | 3 | 3 | 8 | 15 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pannoon, P.; Netek, R. Creating Choropleth Maps by Artificial Intelligence—Case Study on ChatGPT-4. ISPRS Int. J. Geo-Inf. 2025, 14, 486. https://doi.org/10.3390/ijgi14120486
