PestOOD: An AI-Enabled Solution for Advancing Grain Security via Out-of-Distribution Pest Detection

Abstract

1. Introduction

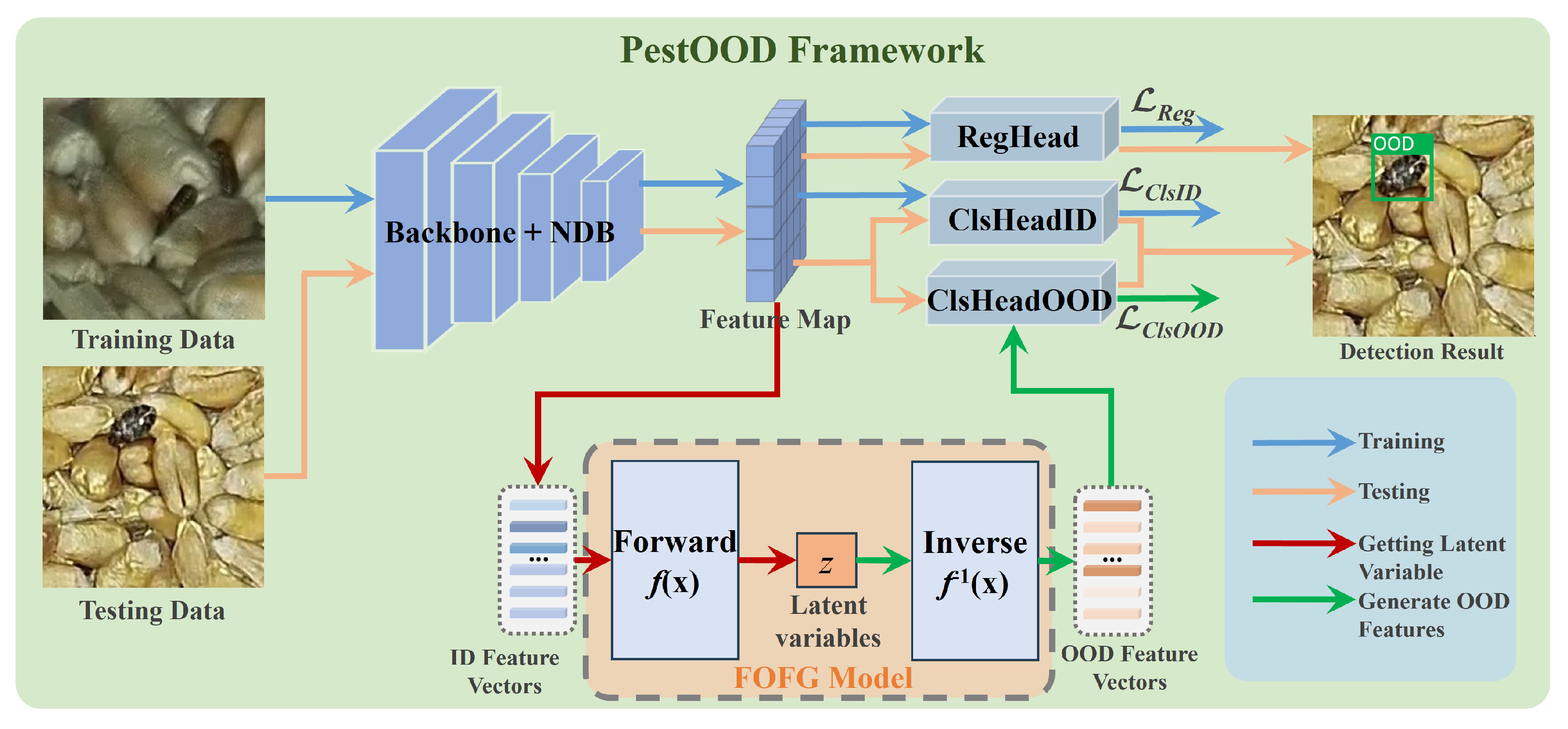

- We propose PestOOD, a framework for out-of-distribution (OOD) stored-grain pest detection via flow-based feature reconstruction, aimed at robust grain security.

- We propose Flow-Based OOD Feature Generation (FOFG) to generate OOD features for detector training, introduce Noisy DropBlock (NDB) into the backbone network to prevent overfitting, and propose a Stage-Wise Training Strategy (STS) for effective network convergence.

- Extensive experiments demonstrate that PestOOD effectively detects OOD objects and outperforms other state-of-the-art (SOTA) methods in OOD stored-grain pest detection.
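
The flow-based generation named in the contributions builds on invertible transforms with a tractable likelihood. As a rough illustration only, the sketch below shows a generic affine coupling layer of the kind used in normalizing flows. It is not the authors' FOFG: the function names, the toy conditioner, and the feature values are all hypothetical, and the input is assumed to have even length.

```python
import math

def _scale_shift(x_a):
    """Toy conditioner (hypothetical): maps the untouched half to (log-scale, shift)."""
    s = [math.tanh(0.5 * v) for v in x_a]  # bounded log-scale
    t = [0.1 * v for v in x_a]             # shift
    return s, t

def coupling_forward(x):
    """Map features x to latent z; the first half passes through unchanged."""
    assert len(x) % 2 == 0, "this sketch assumes an even feature length"
    half = len(x) // 2
    x_a, x_b = x[:half], x[half:]
    s, t = _scale_shift(x_a)
    z_b = [b * math.exp(si) + ti for b, si, ti in zip(x_b, s, t)]
    log_det = sum(s)  # log|det J| of the affine transform
    return x_a + z_b, log_det

def coupling_inverse(z):
    """Map latent z back to features x by undoing the scale and shift."""
    assert len(z) % 2 == 0, "this sketch assumes an even latent length"
    half = len(z) // 2
    z_a, z_b = z[:half], z[half:]
    s, t = _scale_shift(z_a)
    x_b = [(b - ti) * math.exp(-si) for b, si, ti in zip(z_b, s, t)]
    return z_a + x_b

def log_likelihood(x):
    """log p(x) = log N(z; 0, I) + log|det J| under a standard Gaussian prior."""
    z, log_det = coupling_forward(x)
    log_pz = sum(-0.5 * (v * v + math.log(2 * math.pi)) for v in z)
    return log_pz + log_det

# Round trip on a hypothetical 4-dimensional feature vector.
x = [0.3, -1.2, 0.8, 2.0]
z, _ = coupling_forward(x)
x_rec = coupling_inverse(z)
```

Because the layer is exactly invertible, features far from the training distribution receive a low `log_likelihood`, which is the property a flow-based generator can exploit when it looks for low-density features.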

2. Related Work

2.1. Research on Pest Detection

2.2. Out-of-Distribution Object Detection

3. Methodology

3.1. Preliminaries

3.2. Flow-Based OOD Feature Generation (FOFG)

3.2.1. Objective of the FOFG

3.2.2. Method of OOD Feature Generation

3.3. Noisy DropBlock (NDB)

3.4. Stage-Wise Training Strategy (STS)

Algorithm 1: Stage-Wise Training Strategy (STS) for PestOOD.

4. Experiments

4.1. Datasets and Preprocessing

4.2. Implementation Details and Evaluation Metrics

4.3. Comparison Experiments

4.4. Ablation Studies of PestOOD

4.4.1. Impact of Temperature on Detector Performance

4.4.2. Ablative Study of Noisy DropBlock

4.5. Visualization and Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Notation | Description | Notation | Description |
|---|---|---|---|
| x | Original features | | Backbone of detector |
| z | Latent variable | | Detection head of detector |
| | Distribution of latent variable | | Objective function of FOFG |
| | Flow block | | Objective function of detector |
| | Training data | | Latent variable space |
| | Temperature | | FOFG module |
| | Bounding boxes | | ID categories |
| | Feature maps | | |

| Method | FPR95 ↓ | AUROC ↑ | mAP(ID) ↑ |
|---|---|---|---|
| YOLOv10 | - | - | 0.812 |
| RT-DETR | - | - | 0.768 |
| FFS | 0.979 | 0.162 | 0.653 |
| DFDD | 0.757 | 0.277 | 0.676 |
| NAP | 0.579 | 0.421 | 0.667 |
| CSI | 0.507 | 0.507 | 0.650 |
| Ours | 0.425 | 0.595 | 0.727 |
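
For readers unfamiliar with the two OOD metrics in the table, the sketch below shows one common way to compute them from per-detection scores. It is an illustration under stated assumptions, not the paper's evaluation code: it assumes that a higher score means "more in-distribution", that ID detections are the positive class, and the score lists are made up.

```python
import math

def auroc(id_scores, ood_scores):
    """Probability that a random ID score exceeds a random OOD score (ties count 0.5)."""
    wins = 0.0
    for s_id in id_scores:
        for s_ood in ood_scores:
            if s_id > s_ood:
                wins += 1.0
            elif s_id == s_ood:
                wins += 0.5
    return wins / (len(id_scores) * len(ood_scores))

def fpr_at_95_tpr(id_scores, ood_scores):
    """Fraction of OOD samples accepted as ID at the threshold that keeps at least 95% of ID samples."""
    ranked = sorted(id_scores, reverse=True)
    k = math.ceil(0.95 * len(ranked))  # number of ID samples that must be kept
    threshold = ranked[k - 1]
    accepted = sum(1 for s in ood_scores if s >= threshold)
    return accepted / len(ood_scores)

# Hypothetical scores, for illustration only.
id_scores = [0.9, 0.8, 0.85, 0.7, 0.95, 0.6, 0.75, 0.88, 0.92, 0.65]
ood_scores = [0.3, 0.5, 0.62, 0.1, 0.72]

print(fpr_at_95_tpr(id_scores, ood_scores))  # 0.4
print(auroc(id_scores, ood_scores))          # 0.92
```

Lower FPR95 and higher AUROC both indicate better separation of ID and OOD detections, which matches the arrows in the table header.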

| Temperature | FPR95 ↓ | AUROC ↑ | mAP(ID) ↑ |
|---|---|---|---|
| 0.9 | 0.878 | 0.298 | 0.302 |
| 1.1 | 0.422 | 0.595 | 0.727 |
| 1.3 | 0.763 | 0.480 | 0.711 |
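
This text does not state how the temperature is applied. One common convention in flow models, assumed here purely for illustration, is to scale the standard deviation of the latent Gaussian by the temperature before sampling. Under that assumption, the sketch below estimates how many samples fall outside the region that holds 95% of unit-temperature samples. All names and parameter values are hypothetical.

```python
import random

def sample_latent(dim, temperature, rng):
    """Draw z ~ N(0, temperature^2 * I) (assumed convention, not confirmed by the source)."""
    return [rng.gauss(0.0, temperature) for _ in range(dim)]

def squared_norm(z):
    return sum(v * v for v in z)

def tail_fraction(dim, temperature, n=2000, seed=0):
    """Fraction of temperature-scaled samples lying outside the squared radius
    that contains 95% of unit-temperature samples (estimated empirically)."""
    rng = random.Random(seed)
    base = sorted(squared_norm(sample_latent(dim, 1.0, rng)) for _ in range(n))
    radius_sq = base[int(0.95 * n) - 1]
    scaled = [squared_norm(sample_latent(dim, temperature, rng)) for _ in range(n)]
    return sum(1 for r in scaled if r > radius_sq) / n

for temperature in (0.9, 1.1, 1.3):
    print(temperature, tail_fraction(dim=16, temperature=temperature))
```

In this toy setting, a temperature below 1 concentrates samples near the mode, while values above 1 push a growing share into the low-density tail. If the paper's temperature plays a similar role, that would explain why the choice of value matters so much in the ablation.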

| Block Size | FPR95 ↓ | AUROC ↑ | mAP(ID) ↑ |
|---|---|---|---|
| 0 | 0.853 | 0.196 | 0.291 |
| 0.2 | 0.448 | 0.526 | 0.740 |
| 0.4 | 0.422 | 0.595 | 0.727 |
| 0.4 ¹ | 0.412 | 0.527 | 0.713 |
| 0.6 | 0.413 | 0.552 | 0.753 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tian, J.; Ma, C.; Li, J.; Zhou, H. PestOOD: An AI-Enabled Solution for Advancing Grain Security via Out-of-Distribution Pest Detection. Electronics 2025, 14, 2868. https://doi.org/10.3390/electronics14142868
