Department of Electrical Engineering, University of Engineering and Technology Taxila, Taxila 47050, Pakistan

Electronics 2022, 11(21), 3596; https://doi.org/10.3390/electronics11213596 - 3 Nov 2022

Cited by 10 | Viewed by 2787

Abstract

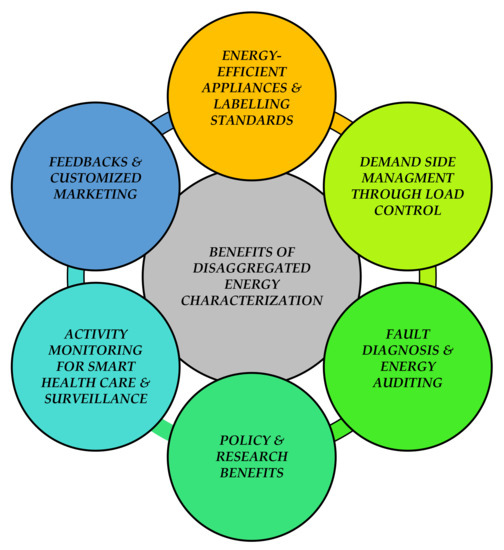

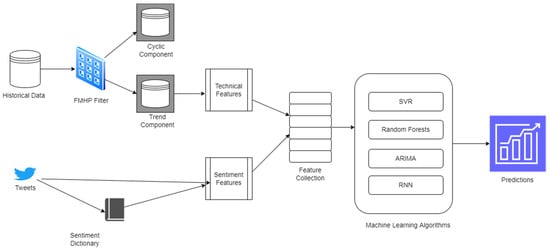

Energy disaggregation algorithms decompose aggregate demand into appliance-level demands. Among the various energy disaggregation approaches, non-intrusive load monitoring (NILM) algorithms, which require only a single sensor, have gained much attention in recent years. Various machine learning and optimization-based NILM approaches are available in the literature, but they require bulk training data and high computational time, respectively. Considering these drawbacks, we devised an event matching energy disaggregation algorithm (EMEDA) for NILM of multistate household appliances using smart meter data. Using only limited training data, we employed K-means clustering to estimate appliance power states. These power states were combined to generate an event database (EVD) containing all combinations of appliance operations in their various states. Prior to matching, the number of test samples of aggregate demand events was reduced by event-driven data compression to improve computational efficiency. The compressed test events were matched against the sorted EVD to assess the contribution of each appliance to the aggregate demand. To counter the effects of transient spikes and/or dips that occur during appliance state transitions, a post-processing algorithm was also developed. The proposed approach was validated using the low-rate data of the Reference Energy Disaggregation Dataset (REDD). While achieving better energy disaggregation performance, the proposed EMEDA reduced computational time by 97.5% and 61.7% compared with the recent smart event-based optimization and optimization-based load disaggregation approaches, respectively.
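
The event database and matching steps described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the appliance names, power states, change threshold, and function names are hypothetical, the power states are assumed to have already been estimated (for example by K-means clustering), nearest-total matching stands in for whatever matching rule the paper uses, and the post-processing of transients is omitted.

```python
from bisect import bisect_left
from itertools import product


def build_event_database(states):
    """Enumerate every combination of appliance states.

    `states` maps an appliance name to its power states in watts
    (0 meaning "off"). Returns (total_power, combination) pairs
    sorted by total power. Hypothetical helper, for illustration only.
    """
    names = list(states)
    events = [
        (sum(combo), dict(zip(names, combo)))
        for combo in product(*(states[name] for name in names))
    ]
    events.sort(key=lambda event: event[0])
    return events


def compress_events(samples, threshold):
    """Event-driven compression (assumed rule): keep a sample only when
    it differs from the last kept sample by more than `threshold` watts."""
    kept = []
    for index, power in enumerate(samples):
        if not kept or abs(power - kept[-1][1]) > threshold:
            kept.append((index, power))
    return kept


def match_event(evd, totals, power):
    """Return the combination whose total power is nearest to `power`,
    found by binary search in the sorted totals."""
    pos = bisect_left(totals, power)
    candidates = [j for j in (pos - 1, pos) if 0 <= j < len(totals)]
    best = min(candidates, key=lambda j: abs(totals[j] - power))
    return evd[best][1]


def disaggregate(samples, states, threshold):
    """Compress the aggregate signal, then match each retained event."""
    evd = build_event_database(states)
    totals = [total for total, _ in evd]
    return [
        (index, match_event(evd, totals, power))
        for index, power in compress_events(samples, threshold)
    ]


# Hypothetical power states (watts) and aggregate readings.
example_states = {
    "fridge": [0, 120],
    "microwave": [0, 1500],
    "washer": [0, 200, 500],
}
example_samples = [0, 2, 121, 119, 122, 318, 322, 1821, 1819, 324, 1]

for index, combo in disaggregate(example_samples, example_states, threshold=30):
    print(index, combo)
```

Sorting the database once lets each compressed event be matched with a binary search rather than a scan over all combinations. Distinct combinations can share the same total power; this sketch does not resolve such ties.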

(This article belongs to the Special Issue Power System Dynamics, Operation, and Control including Renewable Energy Systems and Smart Grid: Technology and Applications)
