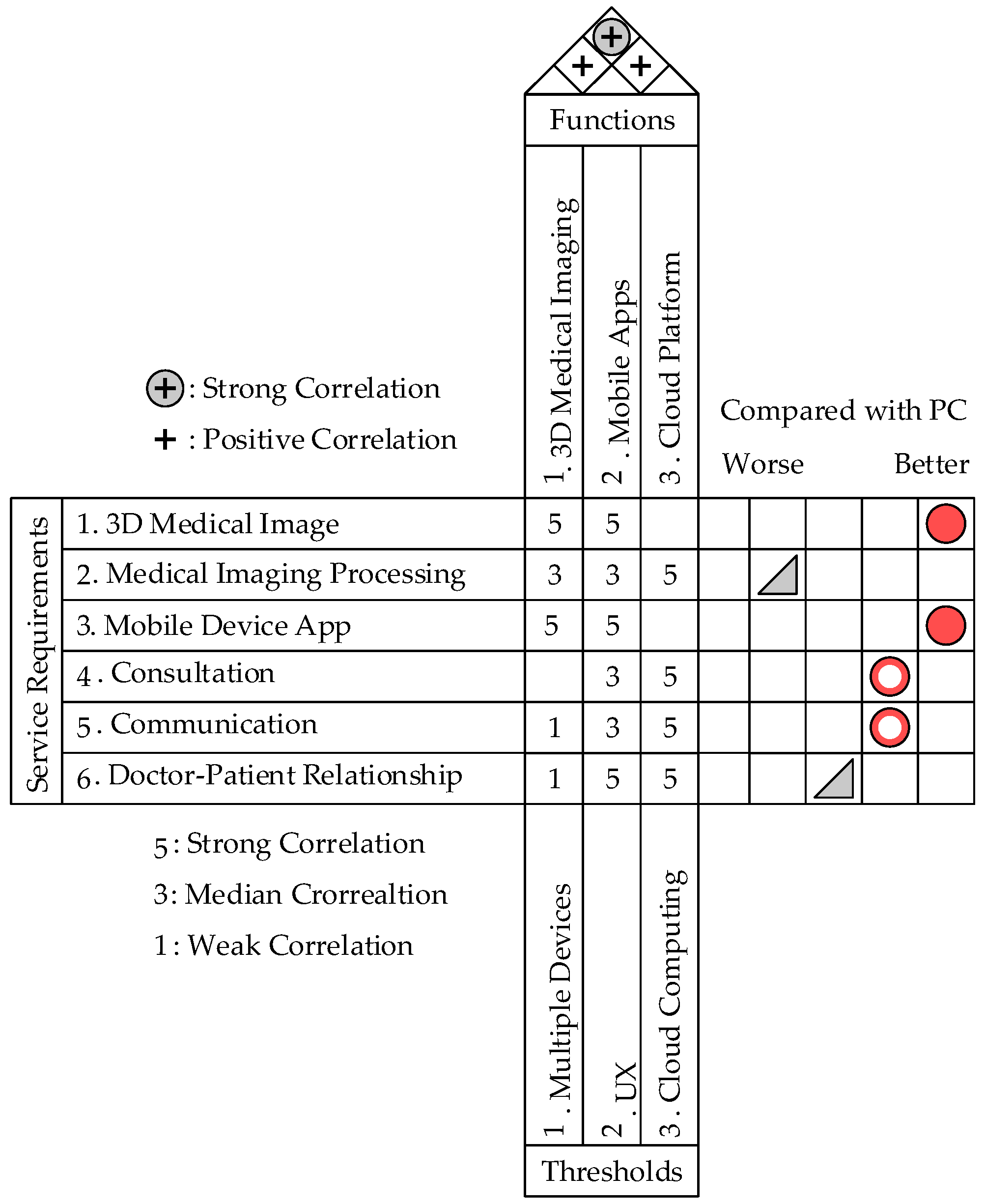

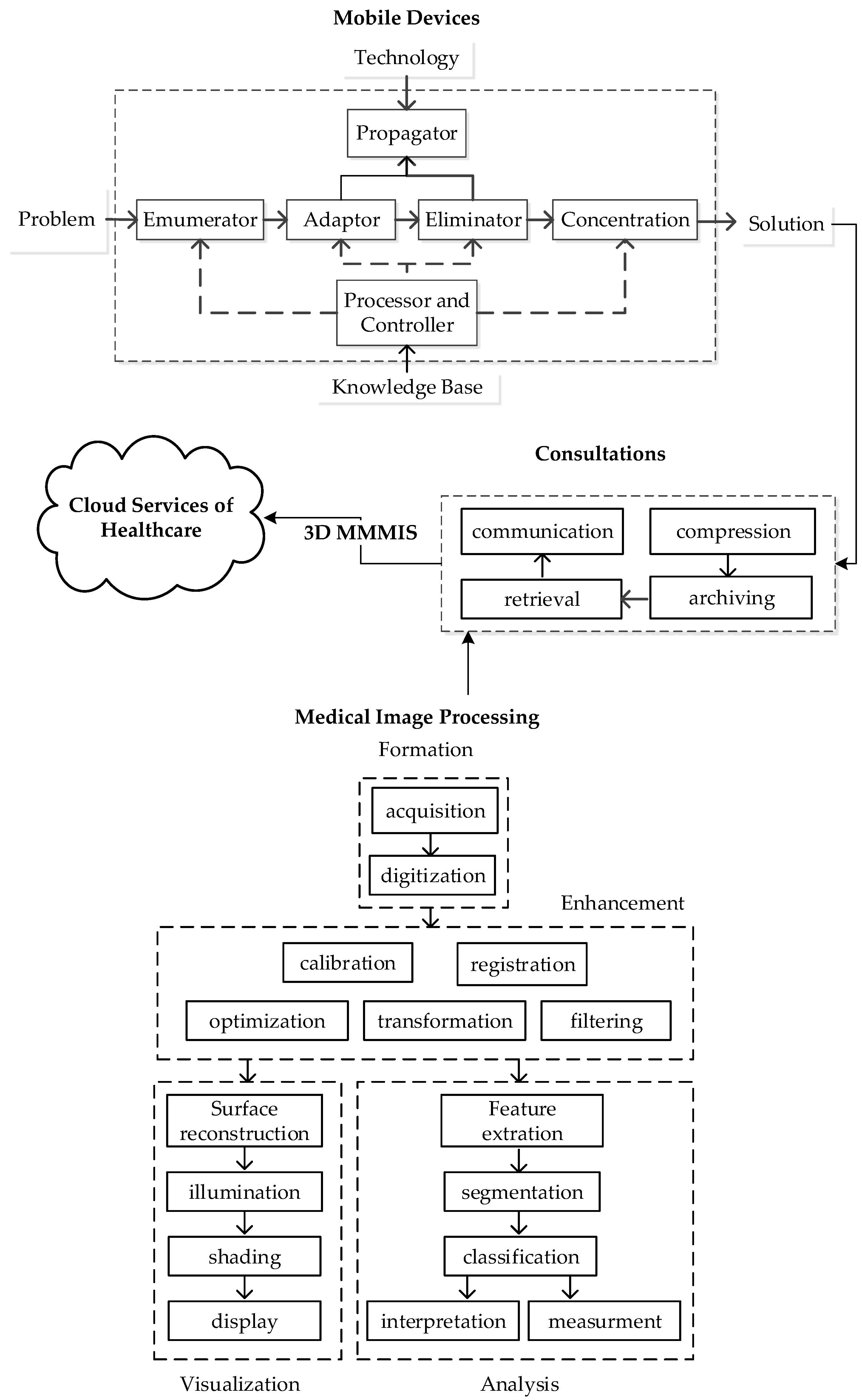

4.1. The Practical Result: Construction of the 3D MMMIS

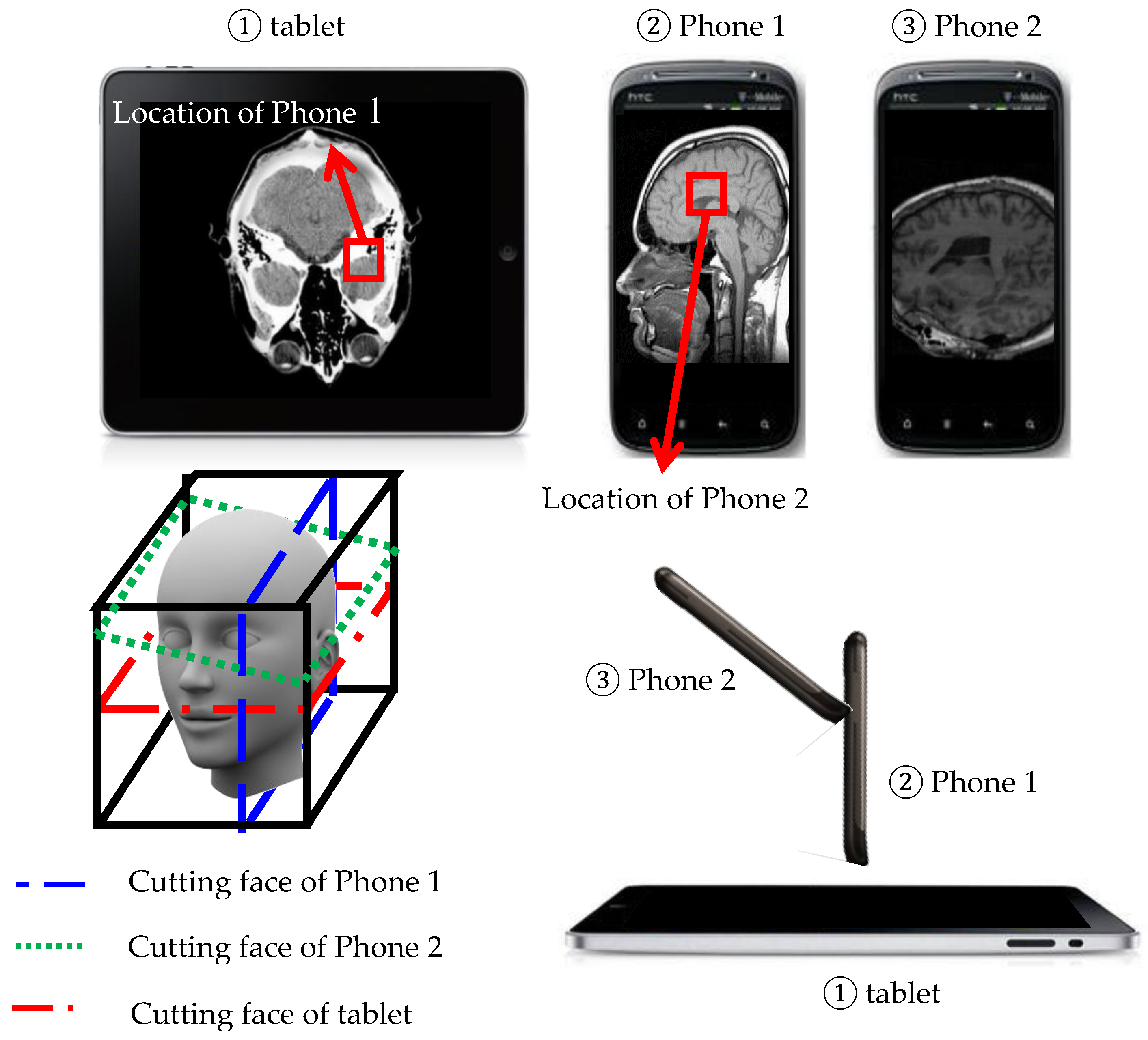

Compared with existing media, we present a novel method for interacting with a 3D object using handheld devices. The 3D object is placed in front of the users as a virtual 3D image in the air, and users can use handheld devices, such as tablet personal computers or smartphones, to interact with it and view its cutting faces. As shown in Figure 4, we use a 3D human head as an example, with one tablet and two smartphones in the environment. First, the user places a tablet on a desk to see a horizontal cutting face of the human head. The user then stands another handheld device, a smartphone (Figure 4②), on the tablet to see a vertical cutting face relative to the cutting face on the tablet; the smartphone obtains its location from the tablet’s screen. After that, the user can use a second smartphone (Figure 4③) to see further cutting faces at different geographical orientations, using the location obtained from Smartphone 1. The system was deployed on HTC phones and an HTC tablet running the Android operating system (HTC, New Taipei City, Taiwan), which were capable of image processing and wireless streaming. The key specifications for image processing were a quad-core 2.3 GHz CPU and a Super LCD3 capacitive touchscreen with 16 M colors. In the experiments in this study, the wireless network supported a data rate of up to 866.7 Mbit/s per stream.

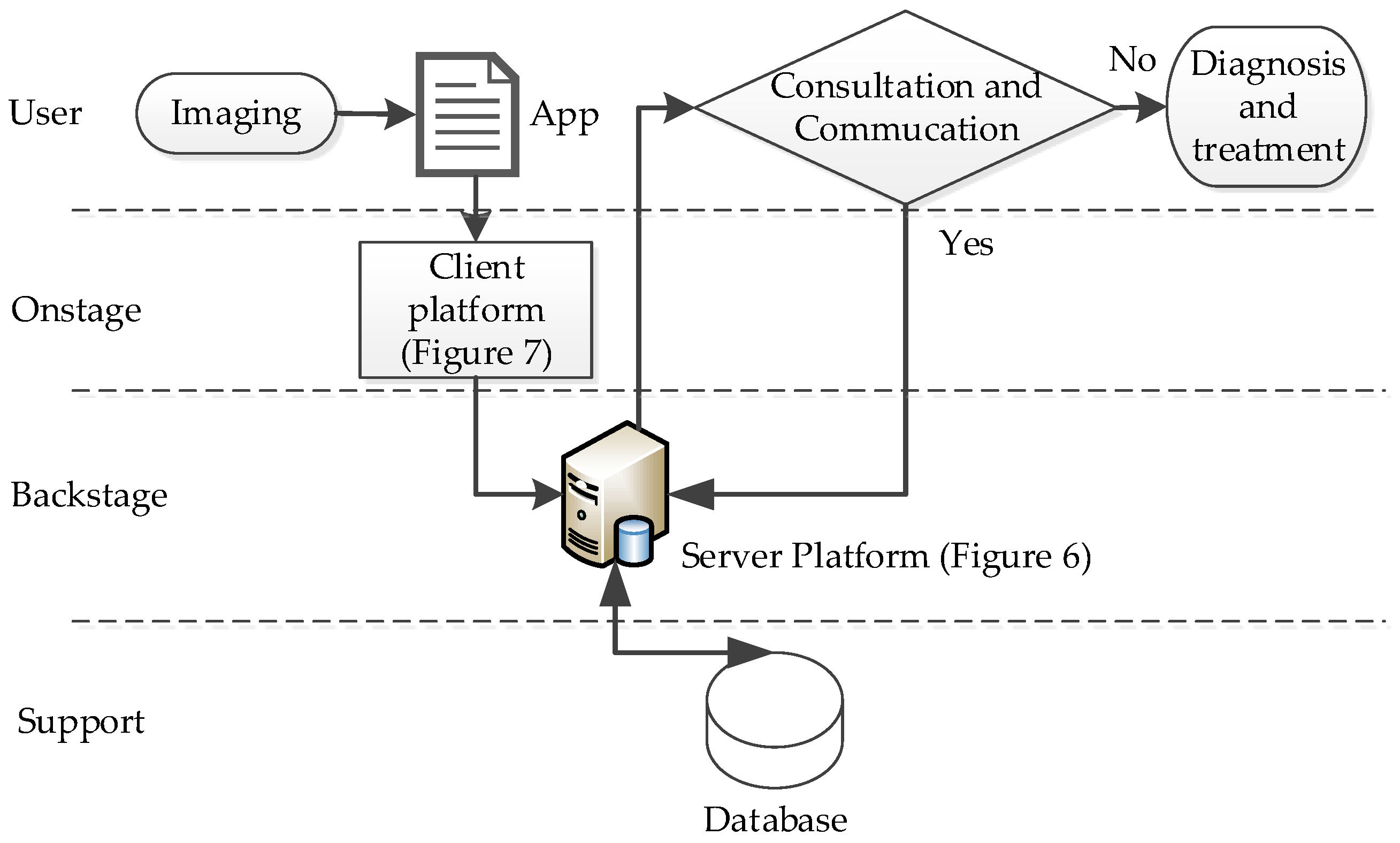

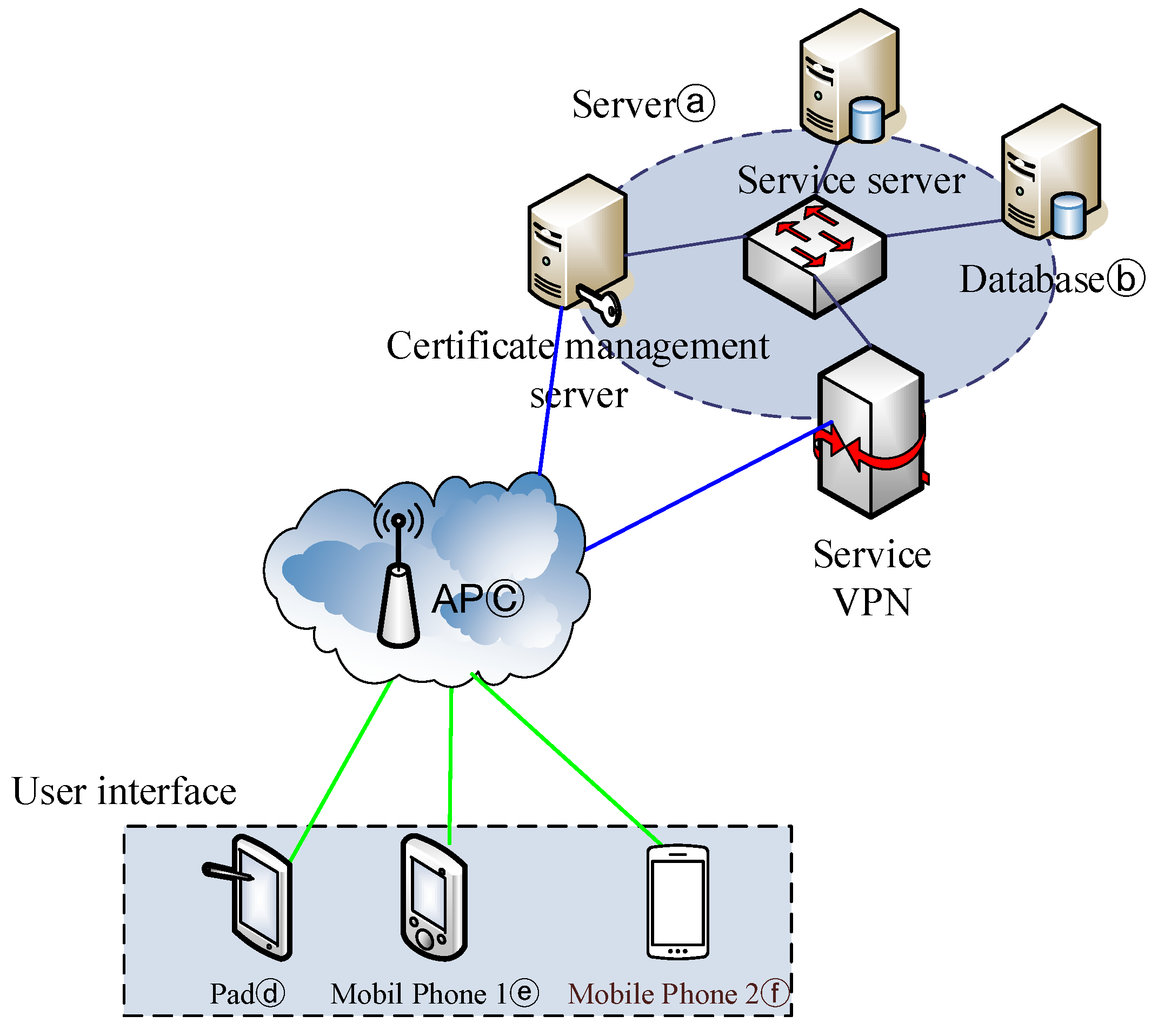

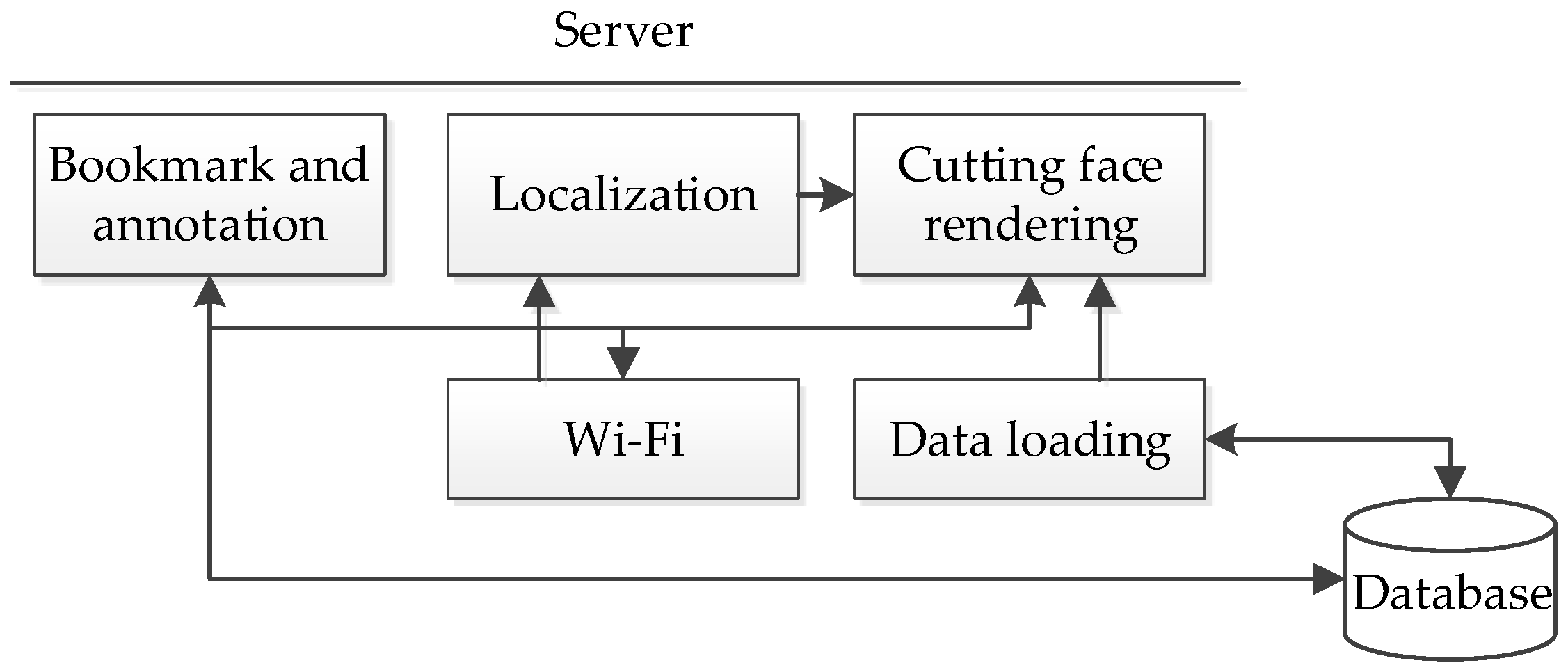

To achieve this novel 3D visualization and interaction with the 3D image via multiple handheld devices, we propose a multi-plane 3D display system.

Figure 5 shows our system architecture, which follows a basic client-server model. The server side contains a server (Figure 5ⓐ) and a database (Figure 5ⓑ). The server is responsible for all computing work, including processing the raw data of the 3D object, constructing cutting faces and computing their locations. The database stores the raw data of the 3D image, bookmarks and annotations. The client side consists of a tablet PC (Figure 5ⓓ) or smartphones (Figure 5ⓔ, Figure 5ⓕ); each handheld device connects to the Internet through a wireless access point (AP; Figure 5ⓒ) and communicates with our server. The mobile devices on the client side are responsible for motion capture, to detect the orientation of cutting faces, and for obtaining the cutting faces’ locations; they also provide a user interface for displaying cutting faces and entering commands, such as requesting a cutting face.

Following the hardware architecture, the software architecture of our system consists of two parts: the server platform and the client platform. Each platform is divided into three layers: the hardware layer, the middleware layer and the application layer. For clarity, modules in different layers are drawn in different colors: hardware-layer modules are blue, middleware-layer modules are orange and application-layer modules are blue-green. The software architecture of the server platform is shown in Figure 6. Our system provides three major functionalities: rendering cutting faces, locating cutting faces, and storing cutting faces as bookmarks and annotating them. These are implemented, respectively, by the “cutting face rendering” module, the “localization” module and the “bookmark and annotation” module in the application layer. In the middleware layer, the “data loading” module loads the raw data of the 3D object from the database and constructs the 3D image used for rendering cutting faces. For example, in the medical imaging case, 2D medical images are stored in the database, and the “data loading” module loads them and constructs the 3D medical image when the system starts up. In the hardware layer, the “Wi-Fi” module establishes and maintains client connections and handles network communication, including accepting requests and sending the corresponding responses. More specifically, the “Wi-Fi” module classifies the requests sent from clients, forwards them to the appropriate application module for further handling and sends back the result in its response. For instance, when a client requests a cutting face, the “Wi-Fi” module forwards the request to the “cutting face rendering” module and, after rendering, sends the cutting face back to the client.
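The request classification and forwarding performed by the server’s “Wi-Fi” module can be sketched as a small dispatcher. This is a minimal illustration, not the authors’ implementation: the message format (a dictionary with a `type` field), the `WifiDispatcher` class and the `render_cutting_face` handler are hypothetical names introduced here.

```python
from typing import Callable, Dict

# Hypothetical request format: a dict with a "type" field plus payload fields.
Handler = Callable[[dict], dict]


class WifiDispatcher:
    """Classifies client requests and forwards them to application modules."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, request_type: str, handler: Handler) -> None:
        # Associate a request type with the module that handles it.
        self._handlers[request_type] = handler

    def handle(self, request: dict) -> dict:
        # Classify by "type", forward to the matching module, wrap the result.
        handler = self._handlers.get(request.get("type", ""))
        if handler is None:
            return {"status": "error", "reason": "unknown request type"}
        return {"status": "ok", "result": handler(request)}


def render_cutting_face(request: dict) -> dict:
    # Stand-in for the "cutting face rendering" module.
    return {"face_for": request["client_id"]}


dispatcher = WifiDispatcher()
dispatcher.register("cutting_face", render_cutting_face)
print(dispatcher.handle({"type": "cutting_face", "client_id": "d2"}))
```

Keeping the routing table separate from the handlers mirrors the layering described above: the hardware-layer module only classifies and forwards, while each application module does its own work.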

The diagram of the client platform is shown in Figure 7. We provide the user with a graphical user interface that displays not only the cutting face but also a map of the cutting face and other control inputs, such as requests for bookmarks or commands for storing annotations. Accordingly, the application layer contains a “user interface” module with three sub-modules. The first is the “mini map” module, which updates the map of the cutting face when its orientation or location changes. The second is the “cutting face display” module, which updates the cutting face image sent from the server. The third is the “bookmark and annotation” module, which handles the input commands for storing and retrieving bookmarks or annotations, sends them to the server and then displays the corresponding results, such as the bookmark list sent from the server. Another application module, the “localization” module, manages localization events when the user wants to locate or relocate cutting faces. Depending on the localization method, the “localization” module acquires location inputs in different formats from the middleware layer or the hardware layer, converts them into one common format and then sends them to the server for location computation. The “QR code recognition” module in the middleware layer is used only when the user chooses the barcode-based localization method; it decodes the quick response code image captured by the camera and forwards the decoded location information to the “localization” module. Another middleware module, the “orientation” module, computes the new rotation matrix when the orientation changes and passes it to the “mini map” module to update the map of the cutting face. At the same time, the “orientation” module sends the rotation matrix to the server to acquire the new cutting face.

In the hardware layer, the combination of the triaxial accelerometer and the magnetic sensor detects changes in orientation and passes the orientation angles of the handheld device to the upper layer for further processing. The “Wi-Fi” module builds the connection with the server when the client program starts and is responsible for sending requests to the server and receiving the corresponding responses. The “touchscreen” module senses the user’s touches and forwards the touch coordinates to the appropriate upper-layer modules according to their purpose. The last hardware-layer module, the “camera” module, is used when the user wants to capture QR code images.

In our system, we do not perform traditional 3D rendering that converts 3D models into 2D images; instead, we propose a special rendering method that constructs the cutting faces of the 3D image. In the following discussion of cutting face construction, we define a cutting face and introduce our core rendering method, which is executed by the “cutting face rendering” module. In addition, in our scenario the user sees cutting faces at different orientation angles by holding handheld devices in different geographical orientations; this raises an orientation issue, and we propose two orientation control methods to solve it.

For clarity of cutting face construction, first, we give the definition of a cutting face. A cutting face

is constructed by two vectors

and

and their start point

where

,

,

and

is the center of the cutting face, which also represents the location of the cutting face. Besides, the observer’s optical direction is orthogonal to both

and

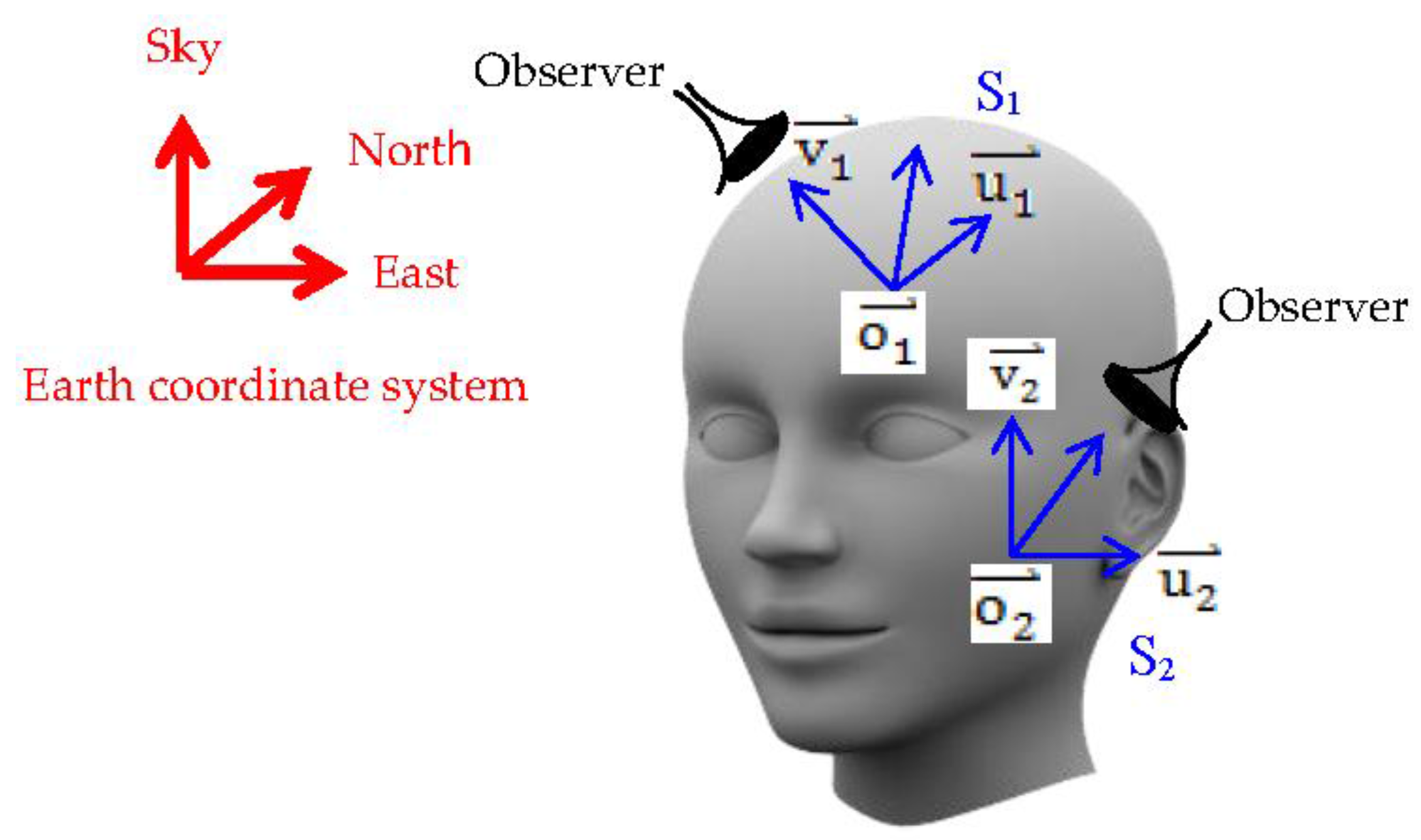

. As shown in

Figure 8, continuing with using the 3D human head as example,

and

are cutting faces with different orientations and locations. Second, we give the definition of the 3D object. The 3D object is composed of pixels, so we consider it as a pixel matrix, termed

, and we define that the 3D object is in the Earth coordinate system; therefore, each pixel of the 3D object has its exact location

in Earth coordinates, which are represented by a row vector, i.e.,

.

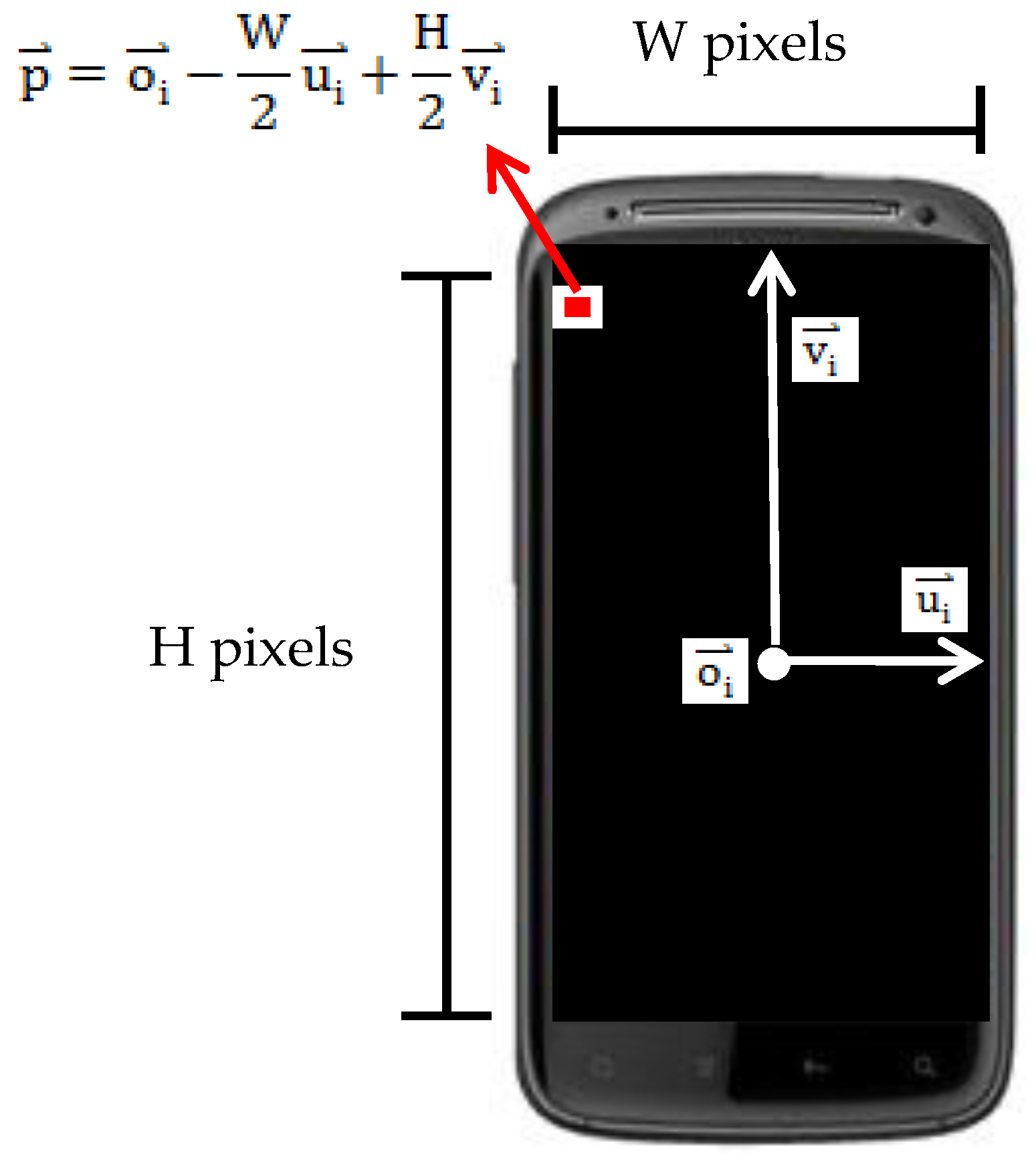

To construct the 2D image of a cutting face, we must find the locations of all of its pixels in the 3D object cube. To achieve this goal, we calculate the location $p_{i,j}$ of each pixel of the cutting face with a simple formula:

$$p_{i,j} = P_c + i\,\vec{u} + j\,\vec{v},$$

where $i$ and $j$ are integer scale factors that identify which pixel of the cutting face is currently being calculated. $i$ and $j$ have lower and upper bounds, $-\frac{W}{2} \le i < \frac{W}{2}$ and $-\frac{H}{2} \le j < \frac{H}{2}$, where $W$ represents the pixel width of the image and $H$ represents the pixel height of the image, as shown in Figure 9.
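A minimal NumPy sketch of cutting face construction, in which each face pixel lies at $P_c + i\,\vec{u} + j\,\vec{v}$ (center $P_c$, in-plane vectors $\vec{u}$ and $\vec{v}$, integer offsets $i$ and $j$). It rests on assumptions not stated in the text: the 3D object is a NumPy array indexed as `volume[x, y, z]`, the center and vectors are given in those index coordinates, voxels are sampled by nearest-neighbour rounding, and points outside the volume are set to 0. The function name `cutting_face` is hypothetical.

```python
import numpy as np


def cutting_face(volume: np.ndarray, center, u, v, height: int, width: int) -> np.ndarray:
    """Sample a height x width cutting face from a 3D pixel matrix.

    Face pixel (j, i) is taken from p = center + i*u + j*v, with integer
    i in [-W/2, W/2) and j in [-H/2, H/2). Nearest-neighbour sampling;
    points outside the volume become 0.
    """
    center = np.asarray(center, dtype=float)
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    # Integer scale factors centred on the face centre.
    i = np.arange(width) - width // 2
    j = np.arange(height) - height // 2
    jj, ii = np.meshgrid(j, i, indexing="ij")                  # (H, W) each
    points = center + ii[..., None] * u + jj[..., None] * v     # (H, W, 3)
    idx = np.rint(points).astype(int)
    inside = np.all((idx >= 0) & (idx < np.array(volume.shape)), axis=-1)
    face = np.zeros((height, width), dtype=volume.dtype)
    valid = idx[inside]                                         # (K, 3)
    face[inside] = volume[valid[:, 0], valid[:, 1], valid[:, 2]]
    return face


volume = np.arange(27).reshape(3, 3, 3)
face = cutting_face(volume, (1, 1, 1), (1, 0, 0), (0, 1, 0), height=3, width=3)
```

With $\vec{u}$ and $\vec{v}$ along the x- and y-axes, the face is the horizontal slice through the center; rows follow $\vec{v}$ and columns follow $\vec{u}$, so it equals `volume[:, :, 1].T`.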

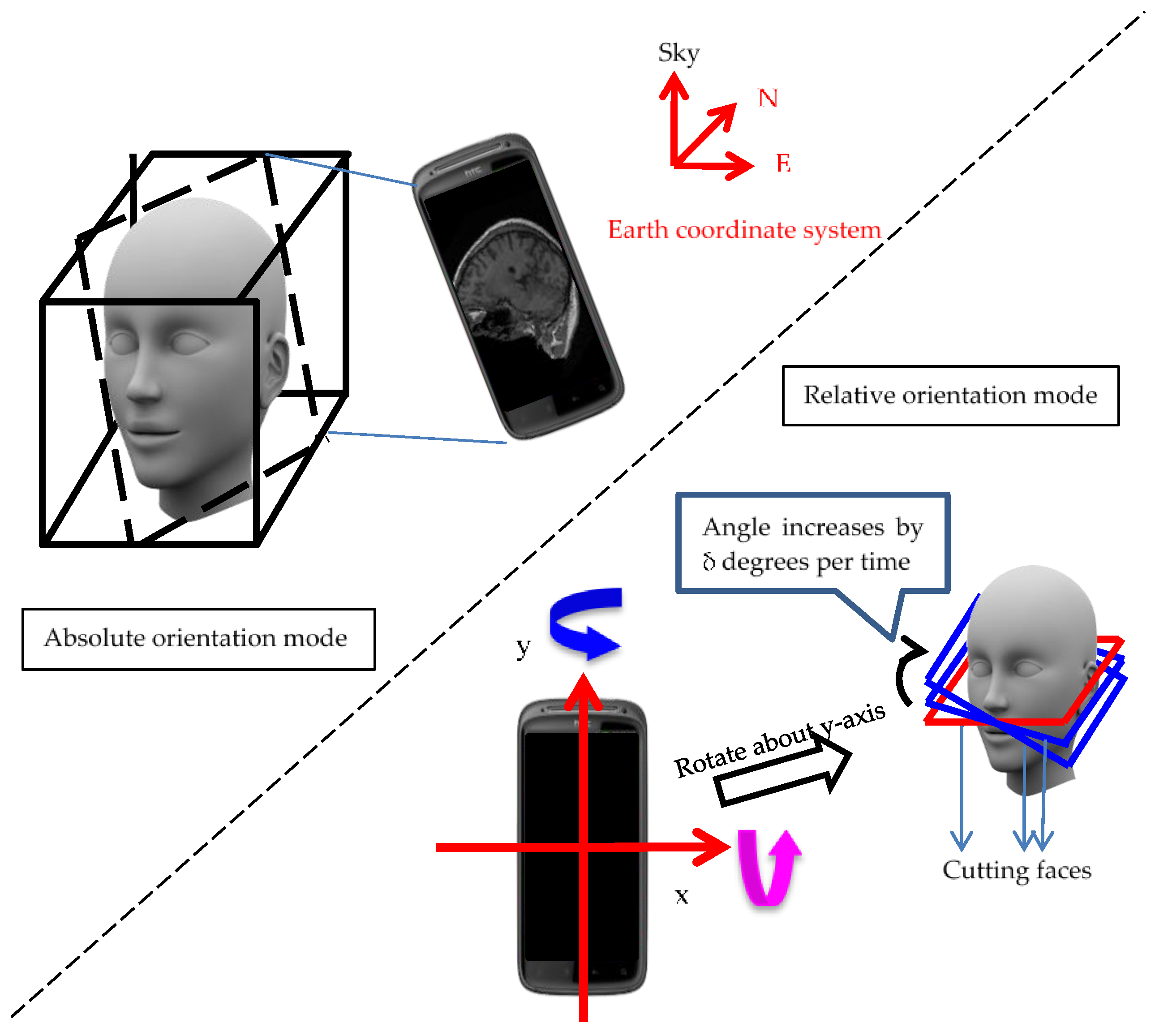

In our design of orientation control methods, the user can see any cutting face at any orientation by holding the handheld device in the geographical orientation of interest. In principle, users could therefore see cutting faces through 360 degrees by rotating handheld devices about any axis. However, because handheld devices have no display on their back face, viewing cutting faces through 360 degrees is not possible without moving one’s head or body. Hence, we designed two control modes to solve this problem, and users can switch between them according to their preference. The first mode is the “absolute orientation mode”, in which the orientation angles of cutting faces follow the geographic orientations of the handheld devices. The geographic orientations are detected by combining an embedded magnetic sensor and a triaxial accelerometer, so users can simply rotate handheld devices to a preferred orientation, as shown in Figure 8. The second mode is the “relative orientation mode”, in which users tilt handheld devices about the device’s x-axis or y-axis; once the embedded triaxial accelerometer detects the rotation and the rotated axis, the orientation angle of the cutting face slowly increases or decreases by δ degrees per time unit about that axis, depending on the rotation direction, as shown in Figure 10. The advantage of this mode is that when the user finds the display’s viewing angle uncomfortable, the user can switch from the “absolute orientation mode” to the “relative orientation mode” instead of moving his/her head or body to adapt to the display.

The means of obtaining $\vec{u}$ and $\vec{v}$, defined above, depend on the orientation mode. In absolute orientation mode, the embedded sensors, a magnetic sensor and a triaxial accelerometer, give the rotation angles α (roll), β (pitch) and γ (yaw) when they detect that a handheld device has been rotated. The orientation module then computes a rotation matrix from these angles. Composing the elementary rotations in yaw-pitch-roll order, the rotation matrix is:

$$R = R_z(\gamma)\,R_y(\beta)\,R_x(\alpha) = \begin{bmatrix} \cos\gamma\cos\beta & \cos\gamma\sin\beta\sin\alpha - \sin\gamma\cos\alpha & \cos\gamma\sin\beta\cos\alpha + \sin\gamma\sin\alpha \\ \sin\gamma\cos\beta & \sin\gamma\sin\beta\sin\alpha + \cos\gamma\cos\alpha & \sin\gamma\sin\beta\cos\alpha - \cos\gamma\sin\alpha \\ -\sin\beta & \cos\beta\sin\alpha & \cos\beta\cos\alpha \end{bmatrix}$$

The first column of $R$ is the transposed vector of $\vec{u}$, and the second column is the transposed vector of $\vec{v}$. In relative orientation mode, by contrast, the embedded triaxial accelerometer gives gravity values along three axes, and we analyze the measured values to determine the axis about which the handheld device is rotating. We then increase or decrease the rotation angle of the cutting face by δ degrees per time unit about the detected rotation axis. The rotation matrix is computed as:

$$R_t = R_{t-1}\,R_{\delta},$$

where $t$ represents time and $R_{\delta}$ is the rotation matrix that rotates the cutting face by δ degrees per time unit. The first column of $R_t$ is the transposed vector of $\vec{u}$, and the second column of $R_t$ is the transposed vector of $\vec{v}$.
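The two orientation modes can be sketched with NumPy as follows. The Z-Y-X (yaw-pitch-roll) composition in `absolute_rotation` and the right-multiplication by the step rotation in `relative_step` are assumed conventions, since sensor frameworks differ on axis order; all function names are hypothetical. In both modes, $\vec{u}$ and $\vec{v}$ are read off as the first two columns of the matrix.

```python
import numpy as np


def rot_x(a: float) -> np.ndarray:
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a: float) -> np.ndarray:
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a: float) -> np.ndarray:
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def absolute_rotation(roll: float, pitch: float, yaw: float) -> np.ndarray:
    # Absolute mode (assumed Z-Y-X convention); angles in radians.
    return rot_z(yaw) @ rot_y(pitch) @ rot_x(roll)


def relative_step(r_prev: np.ndarray, axis: str, delta_deg: float, direction: int) -> np.ndarray:
    # Relative mode, one time unit: rotate by +/- delta degrees about the
    # detected device axis ("x" or "y"); right-multiplying uses the local axis.
    step = np.radians(direction * delta_deg)
    r_delta = rot_x(step) if axis == "x" else rot_y(step)
    return r_prev @ r_delta


def face_vectors(r: np.ndarray):
    # u and v are the first and second columns of the rotation matrix.
    return r[:, 0], r[:, 1]


r = np.eye(3)
for _ in range(9):  # nine steps of 10 degrees about the device x-axis
    r = relative_step(r, "x", 10.0, +1)
u, v = face_vectors(r)
```

Because every step multiplies orthonormal matrices, $\vec{u}$ and $\vec{v}$ stay unit length and mutually orthogonal, which the pixel formula relies on for uniform pixel spacing.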

In contrast with $\vec{u}$ and $\vec{v}$, the center $P_c$ is obtained in the same way in both modes. We provide two localization methods, introduced in the next section, to obtain $P_c$.

The methods introduced above all concern how to render cutting faces, but the location of a cutting face is just as important as its rendering. Therefore, we provide two localization methods, a touch-based method and a barcode-based method, to locate cutting faces, and one relocation method to adjust cutting faces after a localization method has been applied. All methods introduced in this section are implemented through the cooperation of the “localization” modules on the client and server sides.

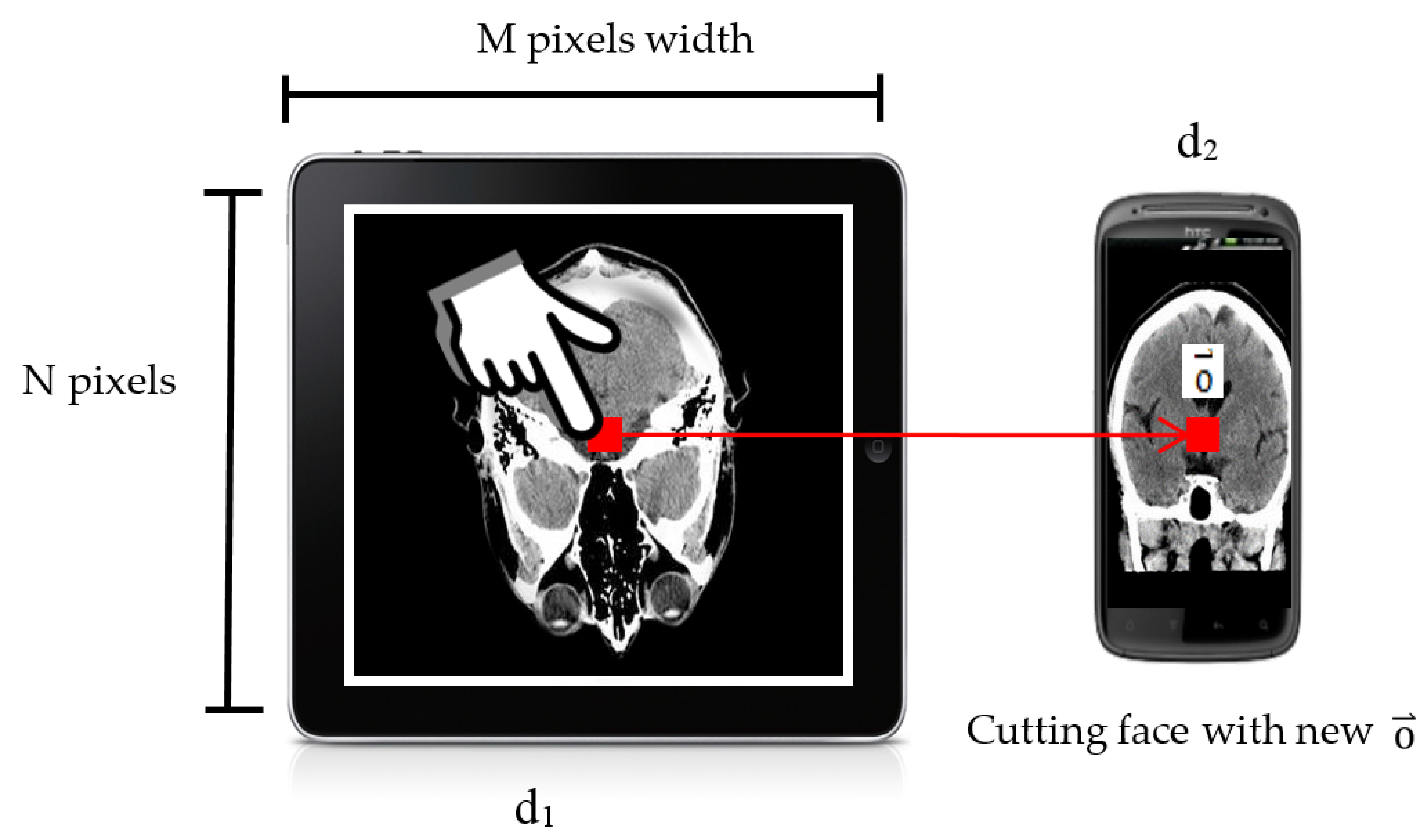

Basically, the user just has to touch the location he/she wants to the new cutting face on one handheld device, such as a tablet, and the new cutting face will show on the screen of another handheld device, such as a smartphone, as shown in

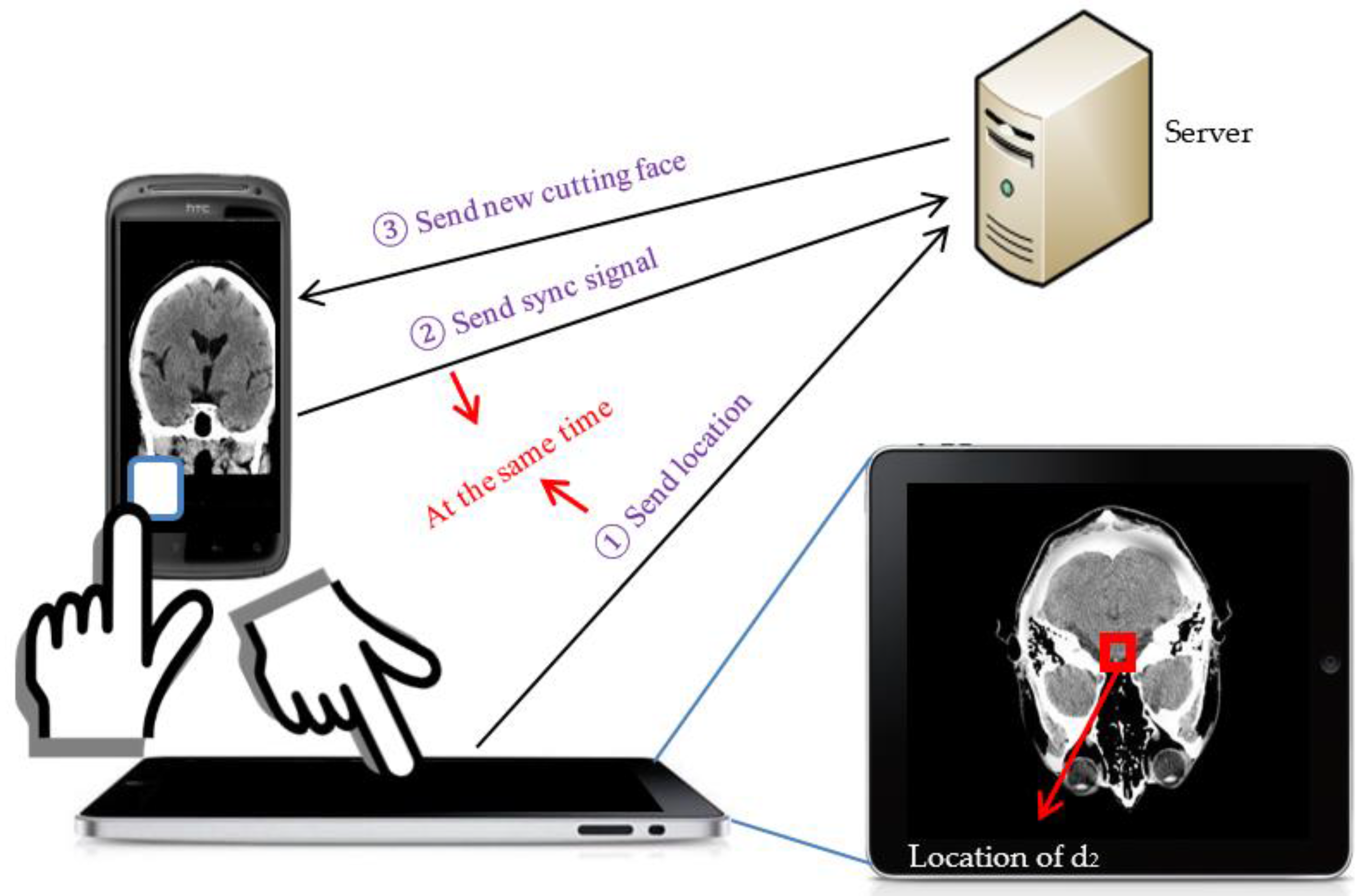

Figure 11. However, in a more than two handheld devices environment, there exist some identification issues. When we have more than two handheld devices in our environment, we have to identify the locating handheld device, which will show the new cutting face, and the to be-located handheld device, which is responsible for providing a location. Therefore, we provide an identification scheme to identify and pair these two handheld devices. We use a figure to illustrate the operations of the touch-based localization method with the identification phase. In

Figure 12, we have a server that is in charge of constructing cutting faces, one tablet, d

1, for location selection and one smartphone, d

2, for showing the cutting face with the location obtained from the tablet. First, the user touches d

1 to select and send the new location to the server. In the meantime, the user touches the synchronization button on d

2 to send a synchronization signal to the server. Then, the server will record timestamps when it receives the location and synchronization signal. After that, the server will compare the timestamps to see whether they are synchronous, and if they are synchronous, the computing server will construct the new cutting face with the new location and send it back to the smartphone.
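The timestamp comparison in the touch-based identification scheme can be sketched as follows, assuming that “synchronous” means the two server-side timestamps differ by at most a fixed window. The 0.5 s window, the `Event` record and the `pair_touch_events` function are hypothetical; the text does not give a tolerance.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

SYNC_WINDOW_S = 0.5  # hypothetical tolerance for "synchronous" timestamps


@dataclass
class Event:
    device_id: str
    timestamp: float                            # server receive time, seconds
    location: Optional[Tuple[int, int]] = None  # None for sync signals


def pair_touch_events(
    locations: List[Event], syncs: List[Event], window: float = SYNC_WINDOW_S
) -> List[Tuple[str, str, Tuple[int, int]]]:
    """Pair each sync signal with the closest unused location event within window.

    Returns (locating device, to-be-located device, location) triples.
    """
    pairs = []
    used = set()
    for sync in syncs:
        best: Optional[Tuple[float, int]] = None
        for k, loc in enumerate(locations):
            gap = abs(loc.timestamp - sync.timestamp)
            if k not in used and gap <= window and (best is None or gap < best[0]):
                best = (gap, k)
        if best is not None:
            used.add(best[1])
            loc = locations[best[1]]
            pairs.append((loc.device_id, sync.device_id, loc.location))
    return pairs


locs = [Event("d1", 10.00, (120, 45))]
print(pair_touch_events(locs, [Event("d2", 10.20)]))
```

Choosing the closest unused location event, rather than the first one in the window, reduces mis-pairing when two users touch at nearly the same time.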

The barcode-based localization method is designed for situations in which the user holds handheld devices in both hands, so that the touch-based localization method is inconvenient. For this situation, we provide a method that uses image recognition to recognize a location by capturing an image of the screen. Initially, we wanted to achieve pixel-level localization, as the touch-based localization method does, but because recognizing arbitrary images without geometric pattern matching took too long, we ultimately chose to employ a 2D code, the quick response (QR) code. The quick response code has three main advantages. First, it can directly encode literal information, such as location information, so no additional data access is needed. Second, decoding a quick response code takes so little time that it is almost a real-time action. Third, quick response codes can be generated at any size. This third advantage allows us to generate small quick response codes, so that more codes can represent locations on the screen, which makes the location accuracy more acceptable.

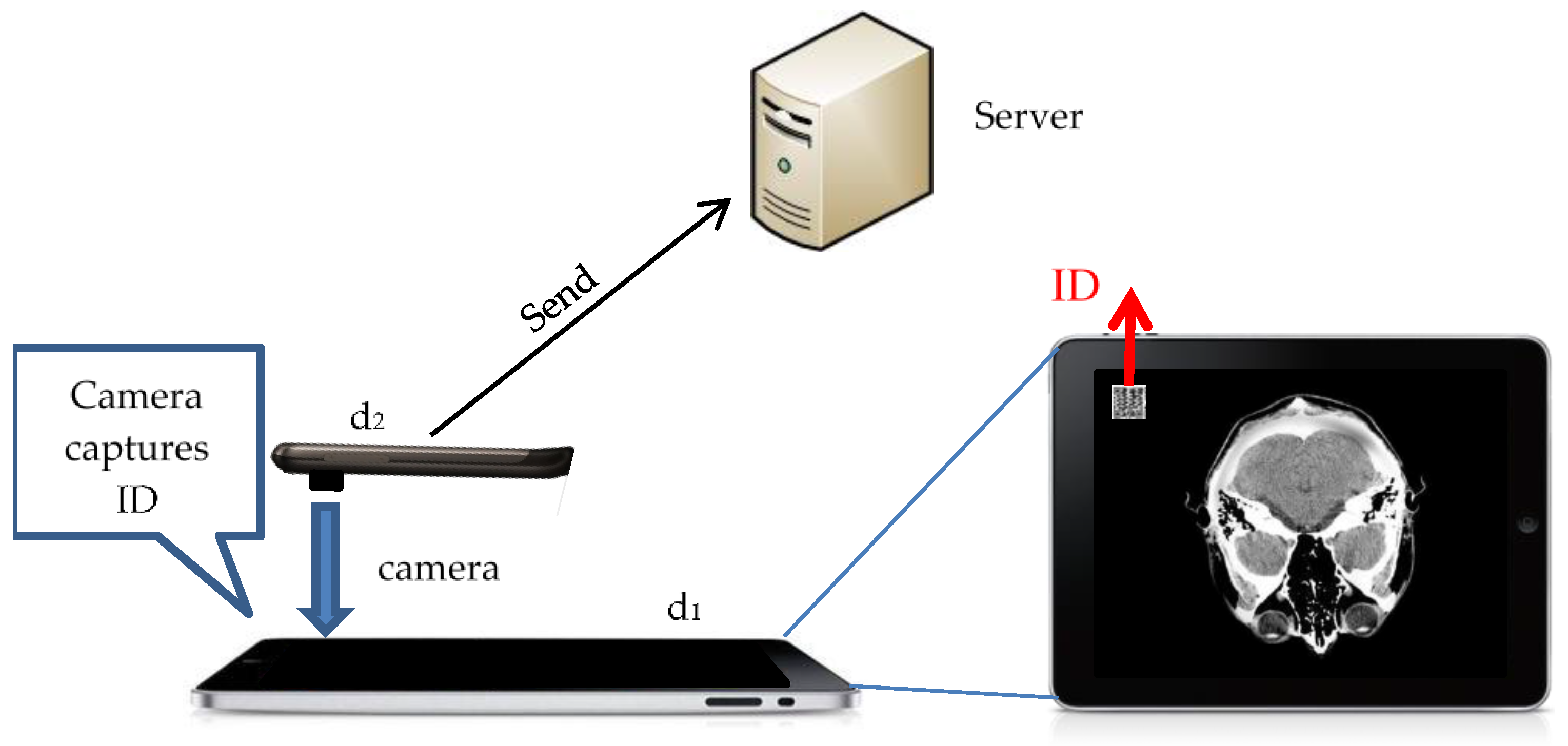

The main idea of the barcode-based localization method is to show quick response codes on the screen, each representing one location. The user then captures a quick response code on the screen with a camera, and the location is obtained by decoding it. However, like the touch-based localization method, the barcode-based localization method has an identification issue. We therefore provide a barcode version of the identification scheme, as shown in Figure 13. In the figure, we have a server in charge of constructing cutting faces, one tablet, $d_1$, for location selection, and one smartphone, $d_2$, for showing the cutting face at the location obtained from the tablet. First, before the localization phase, the user uses the camera on $d_2$ to capture the ID quick response code on the screen of $d_1$. Then, $d_2$ sends the decoded ID to the server, and the server pairs $d_1$ and $d_2$. After that, each time the user wants to obtain a location with $d_2$, our system knows that the location is supposed to come from $d_1$.

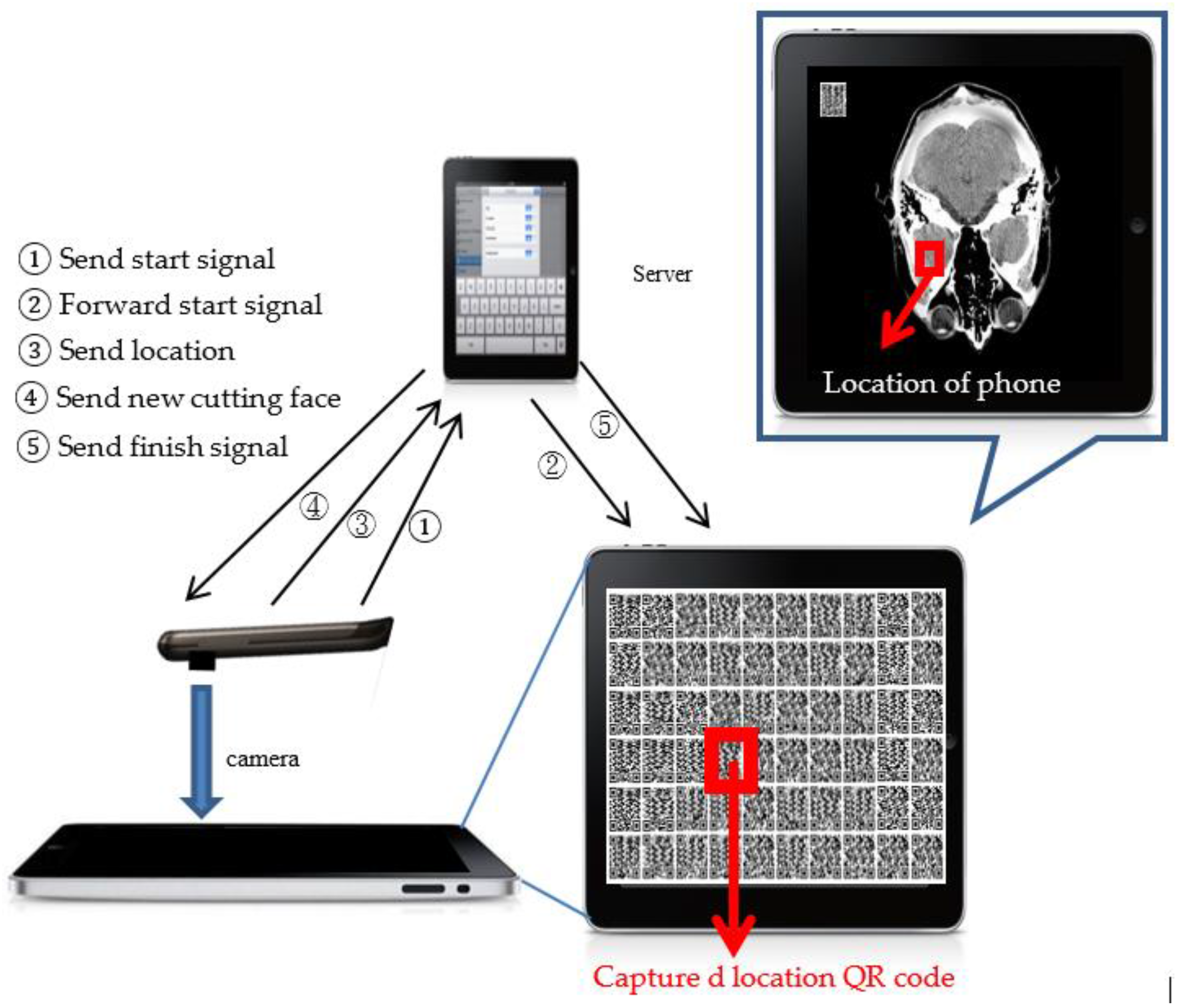

Figure 14 illustrates the localization operations in detail. First, $d_2$ sends a start signal to the server, and the server forwards it to $d_1$ to notify $d_1$ to open the quick response code map. The user then uses the camera on $d_2$ to capture the quick response code at the location of interest on the screen of $d_1$, and $d_2$ sends the decoded location to the server. After receiving the location, the server constructs a new cutting face at the new location and sends it back to $d_2$; at the same time, the server sends a finish signal to notify $d_1$ to close the quick response code map. This completes one localization.
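The server-side pairing and message flow of the barcode-based method can be sketched as a small session object. The message names (`open_qr_map`, `cutting_face`, `close_qr_map`) and the `BarcodeSession` class are hypothetical, and sending is modelled as appending to an outbox list rather than real network I/O.

```python
from typing import Dict, List, Optional, Tuple

Message = Tuple[str, str, Optional[object]]  # (recipient, message name, payload)


class BarcodeSession:
    """Server-side pairing and localization flow for the barcode-based method."""

    def __init__(self) -> None:
        self.source_of: Dict[str, str] = {}  # to-be-located device -> device showing the QR map
        self.outbox: List[Message] = []      # messages the server would send

    def pair(self, viewer: str, decoded_id: str) -> None:
        # The viewer scanned the ID QR code shown on decoded_id's screen.
        self.source_of[viewer] = decoded_id

    def start(self, viewer: str) -> None:
        # Forward the start signal: the paired device opens its QR code map.
        self.outbox.append((self.source_of[viewer], "open_qr_map", None))

    def location(self, viewer: str, qr_id: Tuple[int, int]) -> None:
        # Stand-in for rendering: send the new cutting face (here only its QR ID)
        # to the viewer, then tell the paired device to close its map.
        self.outbox.append((viewer, "cutting_face", qr_id))
        self.outbox.append((self.source_of[viewer], "close_qr_map", None))


session = BarcodeSession()
session.pair("d2", "d1")
session.start("d2")
session.location("d2", (3, 1))
```

Storing the pairing once, at scan time, means later start and location messages need to carry only the sender’s own ID.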

In our system, the location sent from $d_2$ to the server represents only a location on the screen, not a location on the cutting face. Hence, we need to map the location on the screen to the location on the cutting face. Below, we introduce our mapping method.

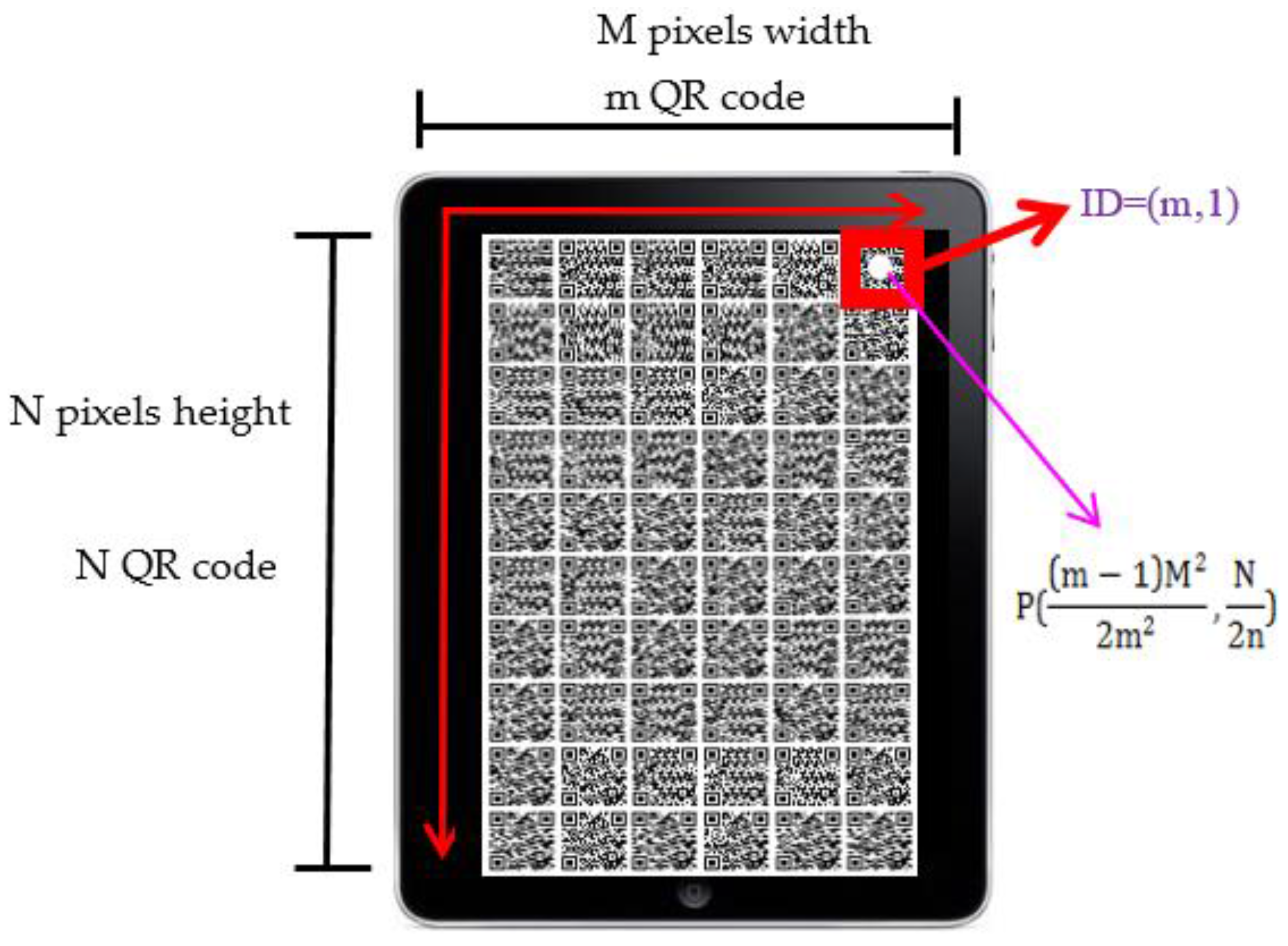

Figure 15 shows the layout of quick response codes on the screen. The total number of quick response codes depends on the dimensions of the cutting face: we calculate the ratio of the cutting face’s height to its width and then estimate the number of quick response codes. In Figure 15, we assume that the cutting face is $M$ pixels wide and $N$ pixels high and that it can accommodate $m$ quick response codes in a row and $n$ quick response codes in a column. Furthermore, we give each quick response code an ID to distinguish it. The ID combines the quick response code’s positions along the x-axis and y-axis of the map, i.e., $\mathrm{ID} = (q_x, q_y)$, with $0 \le q_x \le m-1$ and $0 \le q_y \le n-1$. For example, in Figure 15, the quick response code at the bottom right of the screen has $\mathrm{ID} = (m-1, n-1)$. The basic idea of our mapping method is to overlap the quick response code map and the cutting face; the pixel point $(x, y)$ that maps exactly to the center of a quick response code is the location that the code represents. The pixel point’s location on the cutting face, $(x, y)$, can be calculated by the following equations:

$$x = \left(q_x + \tfrac{1}{2}\right)\frac{M}{m}, \qquad y = \left(q_y + \tfrac{1}{2}\right)\frac{N}{n}$$

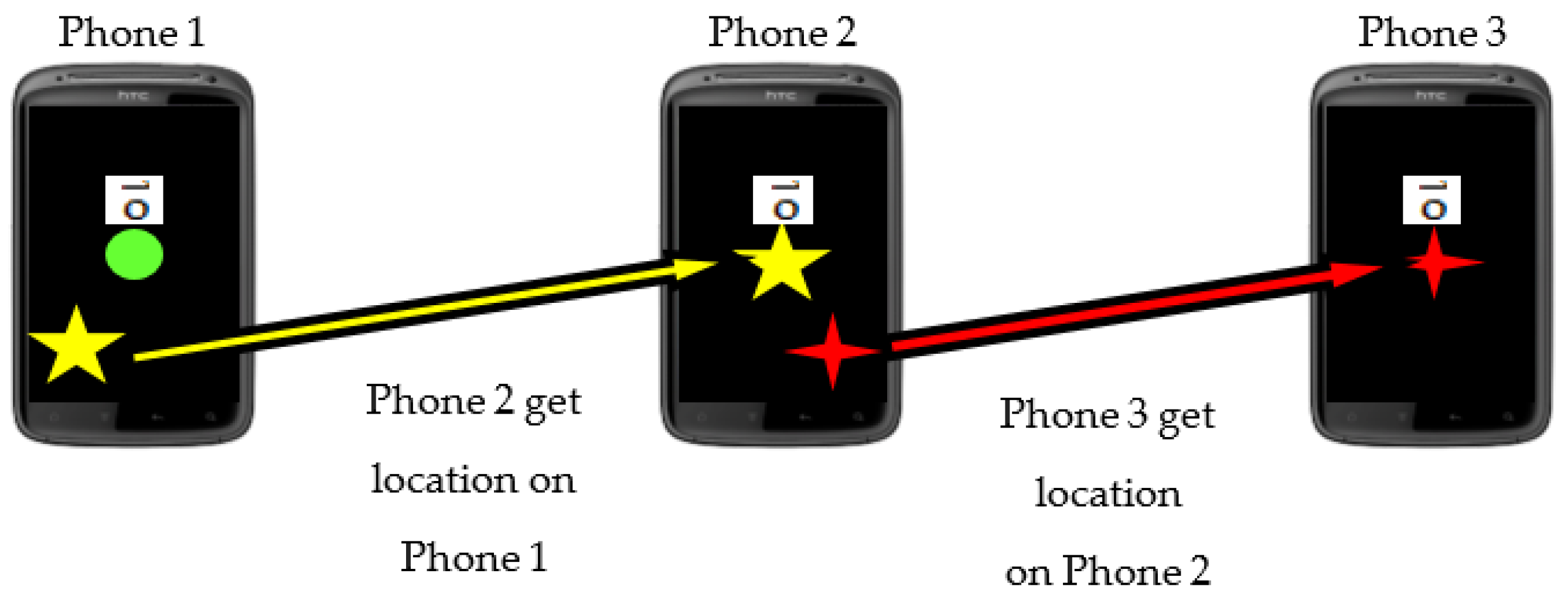
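The grid estimate and the screen-to-face mapping can be sketched in Python as follows: a code with ID $(q_x, q_y)$ on an $M \times N$ face tiled by $m \times n$ codes maps to the center of its cell. The rounding used to derive the number of codes per column from the aspect ratio, the zero-based IDs and the function names are assumptions made for illustration.

```python
from typing import Tuple


def qr_grid(width_px: int, height_px: int, codes_per_row: int) -> Tuple[int, int]:
    # Choose codes per column so the grid follows the face's aspect ratio.
    codes_per_col = max(1, round(codes_per_row * height_px / width_px))
    return codes_per_row, codes_per_col


def qr_center_pixel(qx: int, qy: int, width_px: int, height_px: int,
                    m: int, n: int) -> Tuple[int, int]:
    # Centre of QR cell (qx, qy) on an M x N face tiled by m x n codes.
    x = (qx + 0.5) * width_px / m
    y = (qy + 0.5) * height_px / n
    return int(x), int(y)


m, n = qr_grid(512, 256, 8)
print(m, n, qr_center_pixel(m - 1, n - 1, 512, 256, m, n))
```

Smaller codes give a finer grid and thus finer location steps, which is the trade-off behind the third advantage of quick response codes noted earlier.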

It is worth noting that, owing to the characteristics of our localization methods, an interesting phenomenon called the cascade event can occur: localization events can be chained, so that one handheld device obtains a location from another handheld device and offers a location to yet another handheld device, as shown in Figure 16.

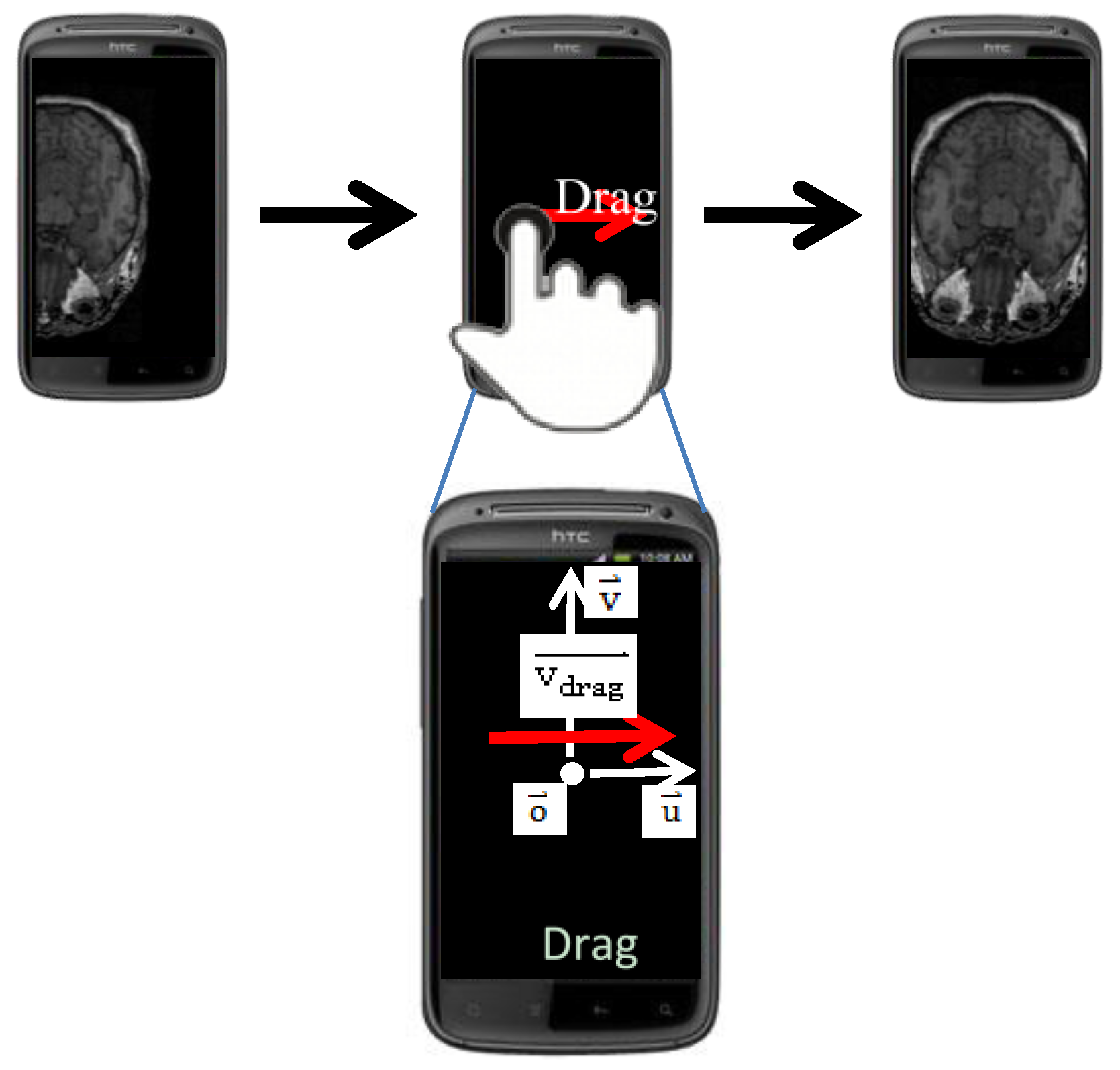

The localization methods mentioned above let users locate cutting faces in an absolute manner. In addition, we provide a relocation method that lets users make minor adjustments to the location of cutting faces in a relative manner. In the relocation method, the user can use touch gestures to move $P_c$, the location of the cutting face. We designed two categories of touch gestures to move $P_c$ in different dimensions. The first category contains only one gesture, called drag, which moves $P_c$ on the plane spanned by $\vec{u}$ and $\vec{v}$, as shown in Figure 17. The moving vector $\vec{d}$ defines the direction and amount of movement. The new location of the cutting face, $P_c'$, can be calculated by the following equation:

$$P_c' = P_c + \vec{d}_u + \vec{d}_v,$$

where $\vec{d}_u$ is the projection of $\vec{d}$ onto $\vec{u}$, and $\vec{d}_v$ is the projection of $\vec{d}$ onto $\vec{v}$.
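A minimal sketch of the drag update $P_c' = P_c + \vec{d}_u + \vec{d}_v$, assuming the drag has already been expressed as a 3D vector $\vec{d}$ in the object’s coordinate system and that $\vec{u}$ and $\vec{v}$ are orthogonal. Projecting onto $\vec{u}$ and $\vec{v}$ discards any component of $\vec{d}$ normal to the cutting face, so the center stays in the plane of the face. Function names are hypothetical.

```python
import numpy as np


def project(d: np.ndarray, axis) -> np.ndarray:
    # Orthogonal projection of d onto the direction of axis.
    axis = np.asarray(axis, dtype=float)
    return (np.dot(d, axis) / np.dot(axis, axis)) * axis


def drag_center(center, u, v, d) -> np.ndarray:
    # P_c' = P_c + d_u + d_v; the component of d along the face normal is dropped.
    d = np.asarray(d, dtype=float)
    return np.asarray(center, dtype=float) + project(d, u) + project(d, v)


new_center = drag_center((10, 10, 10), (1, 0, 0), (0, 1, 0), (3, -2, 5))
```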

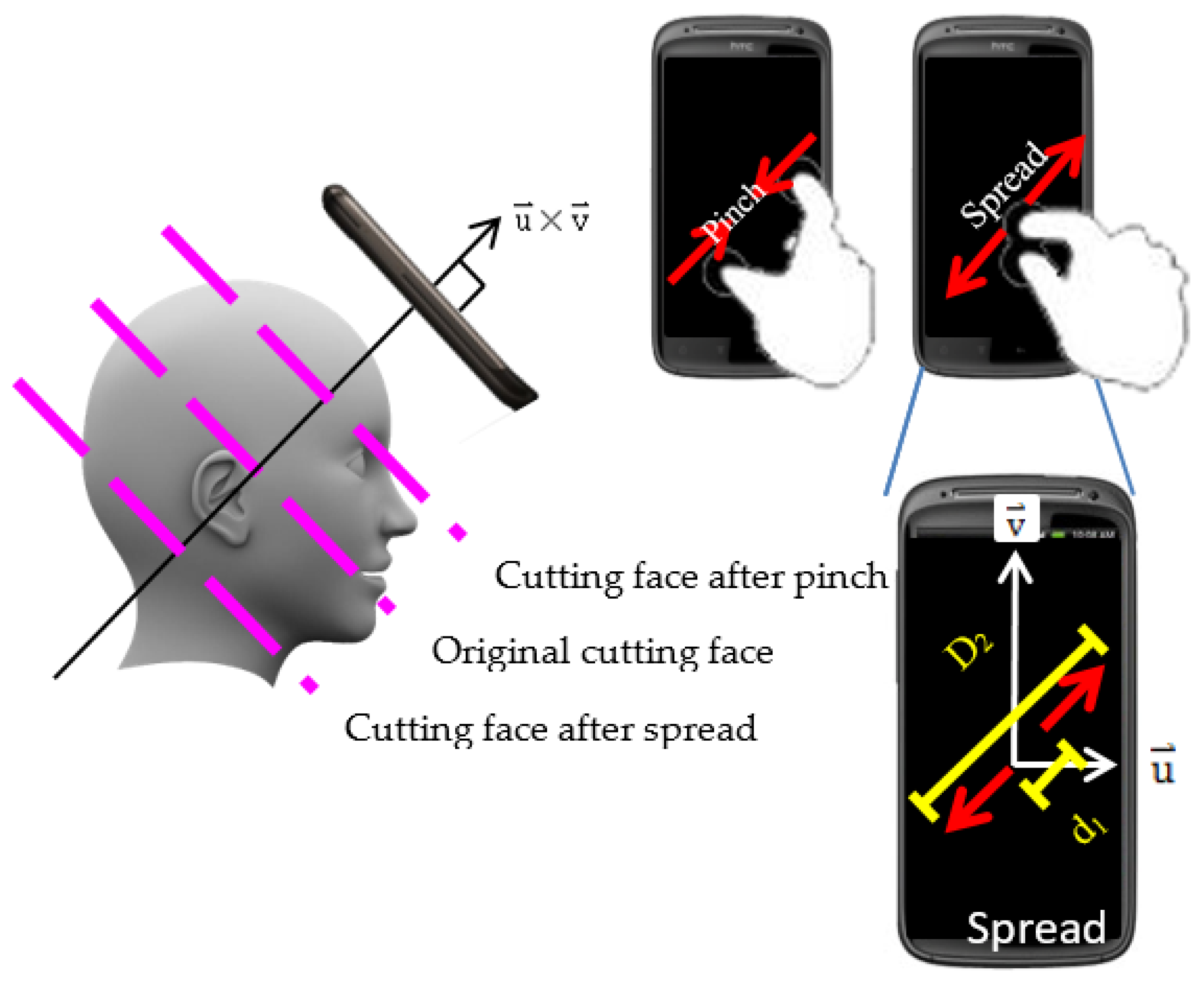

The other category contains two gestures, pinch and spread, which move $P_c$ along the axis of $\vec{n}$, as shown in Figure 18. Pinch moves $P_c$ in the direction of $\vec{n}$, and spread moves it in the opposite direction. The new location of the cutting face, $P_c'$, can be calculated by the following equation:

$$P_c' = P_c + k\,\vec{n},$$

where $k$ is a scalar factor that controls the amount of $P_c$’s movement. $k$ is defined as $k = D_s - D_f$, where $D_s$ is the distance between the two fingers before the gesture starts and $D_f$ is the distance between the two fingers after the gesture finishes. $\vec{n}$ is the notation for $\vec{u} \times \vec{v}$.
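The pinch/spread update $P_c' = P_c + k\,\vec{n}$ with $\vec{n} = \vec{u} \times \vec{v}$ can be sketched as below. The `gain` parameter, which converts finger distance in screen pixels into movement along $\vec{n}$, is a hypothetical addition; with `gain=1.0` it reduces to $k = D_s - D_f$.

```python
import numpy as np


def pinch_center(center, u, v, d_start: float, d_finish: float,
                 gain: float = 1.0) -> np.ndarray:
    # n = u x v is the viewing axis. Pinch (d_finish < d_start) gives k > 0
    # and moves along +n; spread gives k < 0 and moves along -n.
    n = np.cross(np.asarray(u, dtype=float), np.asarray(v, dtype=float))
    k = gain * (d_start - d_finish)
    return np.asarray(center, dtype=float) + k * n


pinched = pinch_center((5, 5, 5), (1, 0, 0), (0, 1, 0), 200.0, 150.0, gain=0.1)
spread = pinch_center((5, 5, 5), (1, 0, 0), (0, 1, 0), 150.0, 200.0, gain=0.1)
```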

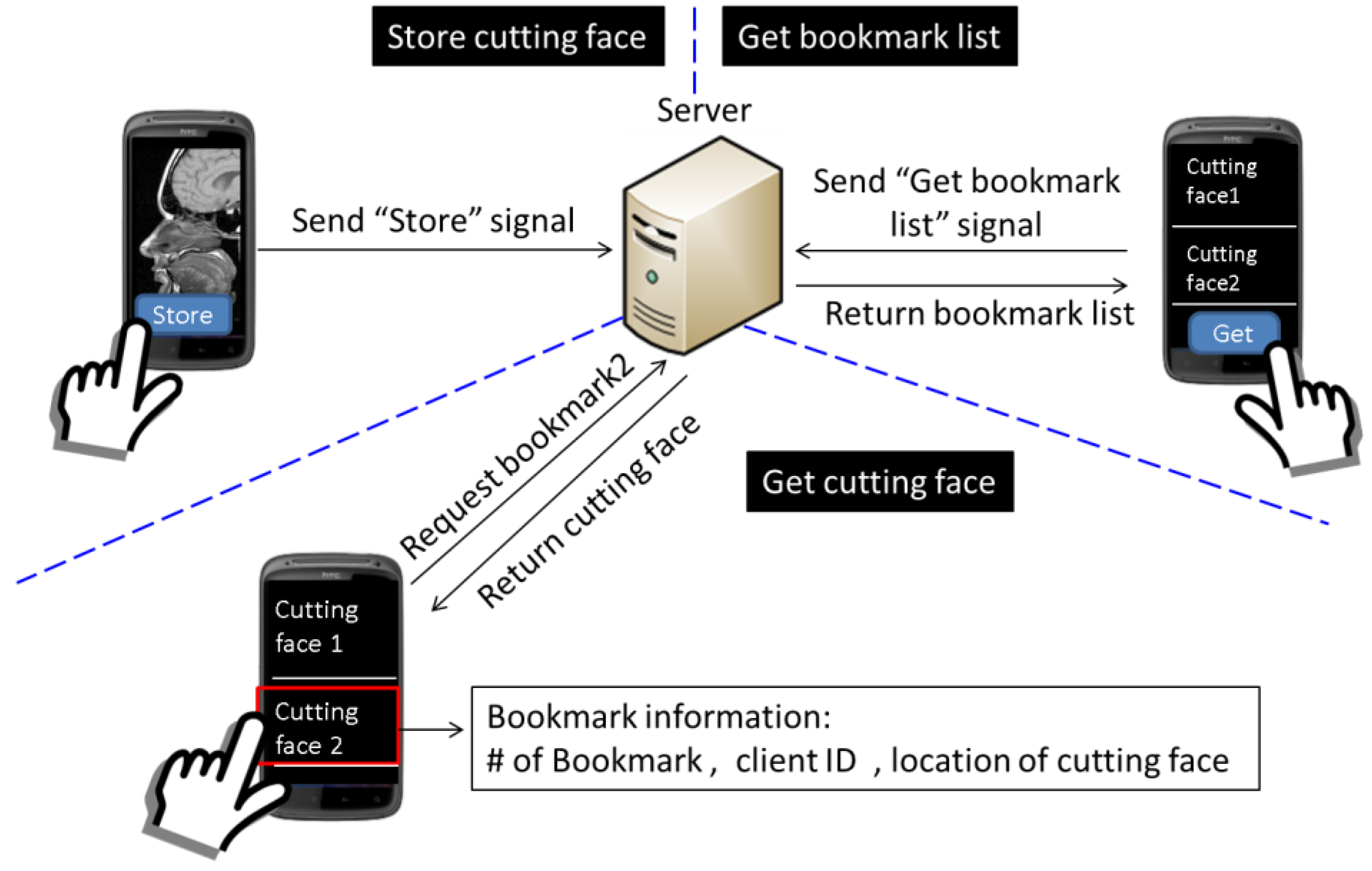

Considering user experience, it may be difficult for the user to return to a specific cutting face that she/he accessed earlier with exactly the same orientation. We therefore designed a function called bookmark that lets users record any cutting face they consider important or interesting and may want to revisit later. The processes of storing and retrieving cutting faces are shown in Figure 19. To store the current cutting face as a bookmark, the user sends a “store” signal to the server; the server then records the metadata of the cutting face needed to reconstruct it and produces the corresponding bookmark information. The bookmark information includes the sequence number of the new bookmark, the client ID, which indicates the owner of the cutting face, and the location of the cutting face in the 3D image. To re-access the cutting face, the user sends a “get bookmark list” signal to the server to retrieve the bookmark information and then selects the desired bookmark to get the cutting face.
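A minimal in-memory sketch of the bookmark store, assuming that the reconstruction metadata consists of the face center and rotation matrix and that annotation points are kept with the bookmark. The `Bookmark` and `BookmarkStore` names are hypothetical; the described system keeps bookmarks in its server-side database.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Bookmark:
    seq: int                           # sequence number of the bookmark
    client_id: str                     # owner of the cutting face
    center: Vec3                       # location of the cutting face
    rotation: Tuple[Vec3, Vec3, Vec3]  # assumed reconstruction metadata
    annotation: List[Tuple[float, float]] = field(default_factory=list)


class BookmarkStore:
    def __init__(self) -> None:
        self._items: Dict[int, Bookmark] = {}
        self._seq = itertools.count(1)

    def store(self, client_id: str, center: Vec3, rotation,
              annotation: Optional[List[Tuple[float, float]]] = None) -> int:
        # Handle a "store" signal and return the new sequence number.
        seq = next(self._seq)
        self._items[seq] = Bookmark(seq, client_id, tuple(center), rotation,
                                    list(annotation or []))
        return seq

    def bookmark_list(self) -> List[Tuple[int, str, Vec3]]:
        # Handle "get bookmark list": bookmarks are visible to every client.
        return [(b.seq, b.client_id, b.center) for b in self._items.values()]

    def get(self, seq: int) -> Bookmark:
        return self._items[seq]


identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
bookmarks = BookmarkStore()
first = bookmarks.store("d2", (64.0, 64.0, 40.0), identity)
second = bookmarks.store("d3", (10.0, 20.0, 30.0), identity, [(0.0, 0.0), (5.0, 5.0)])
```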

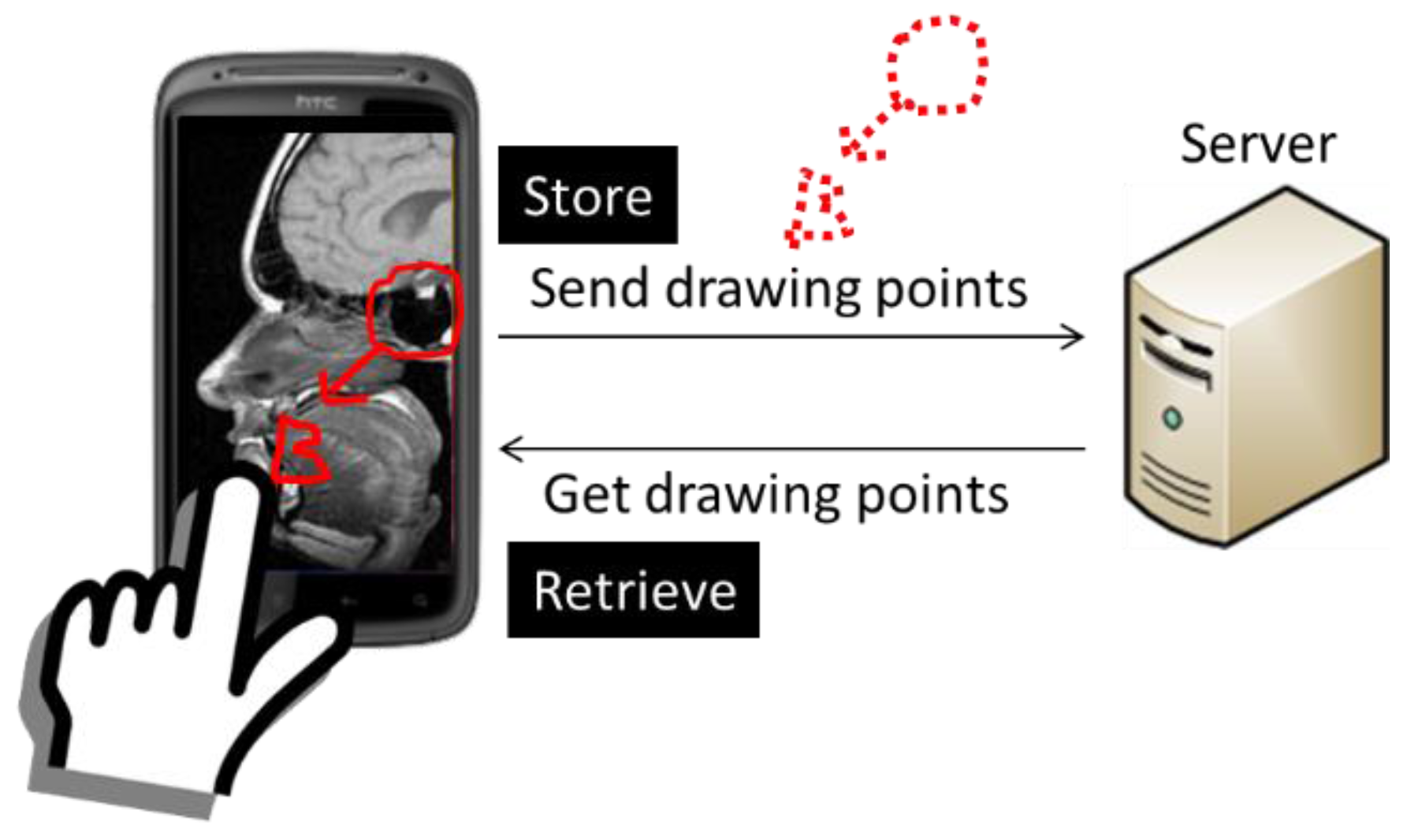

Furthermore, the user may want to put marks on cutting faces as reminders or write notes on them to indicate important information. We therefore designed another function, annotation, which allows the user to annotate directly on cutting faces. For example, if the 3D object is a medical 3D image, such as a computed tomography image, doctors may want to mark the diseased region, which the annotation function lets them do easily. As shown in Figure 20, the user touches the screen to annotate the cutting face, and the client program sends the drawing points to the server. The server then stores the cutting face, with the annotation on it, as a new bookmark. When the user re-accesses this cutting face, the drawing points are sent to the user together with the cutting face, and the client program rebuilds the annotation by drawing a quadratic Bézier curve between each pair of consecutive points.
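The text states only that a quadratic Bézier curve is drawn between consecutive points. One common way to do this, assumed here, is midpoint smoothing: each recorded point serves as a control point, and consecutive curves join at the midpoints between recorded points. The function names and the sampling density `steps` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def quad_bezier(p0: Point, c: Point, p1: Point, steps: int) -> List[Point]:
    # Sample B(t) = (1-t)^2 p0 + 2(1-t)t c + t^2 p1 at steps+1 values of t.
    out = []
    for s in range(steps + 1):
        t = s / steps
        a, b, d = (1 - t) ** 2, 2 * (1 - t) * t, t ** 2
        out.append((a * p0[0] + b * c[0] + d * p1[0],
                    a * p0[1] + b * c[1] + d * p1[1]))
    return out


def rebuild_stroke(points: Sequence[Point], steps: int = 8) -> List[Point]:
    # Midpoint smoothing: curves join at midpoints, recorded points act as controls.
    if len(points) < 3:
        return [tuple(p) for p in points]
    mids = [((x0 + x1) / 2, (y0 + y1) / 2)
            for (x0, y0), (x1, y1) in zip(points, points[1:])]
    path: List[Point] = [tuple(points[0])]
    for k in range(1, len(points) - 1):
        path.extend(quad_bezier(mids[k - 1], points[k], mids[k], steps))
    path.append(tuple(points[-1]))
    return path


stroke = rebuild_stroke([(0, 0), (2, 2), (4, 0)], steps=2)
```

Joining curves at midpoints keeps the rebuilt stroke smooth at every joint, which straight segments between the raw points would not.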

In our system, bookmark information is open to everyone, which means users can access others’ bookmarks. Users can therefore easily share cutting faces or other information through bookmarks. The bookmark and annotation functions are implemented through the cooperation of the “bookmark and annotation” modules on the client and server sides.

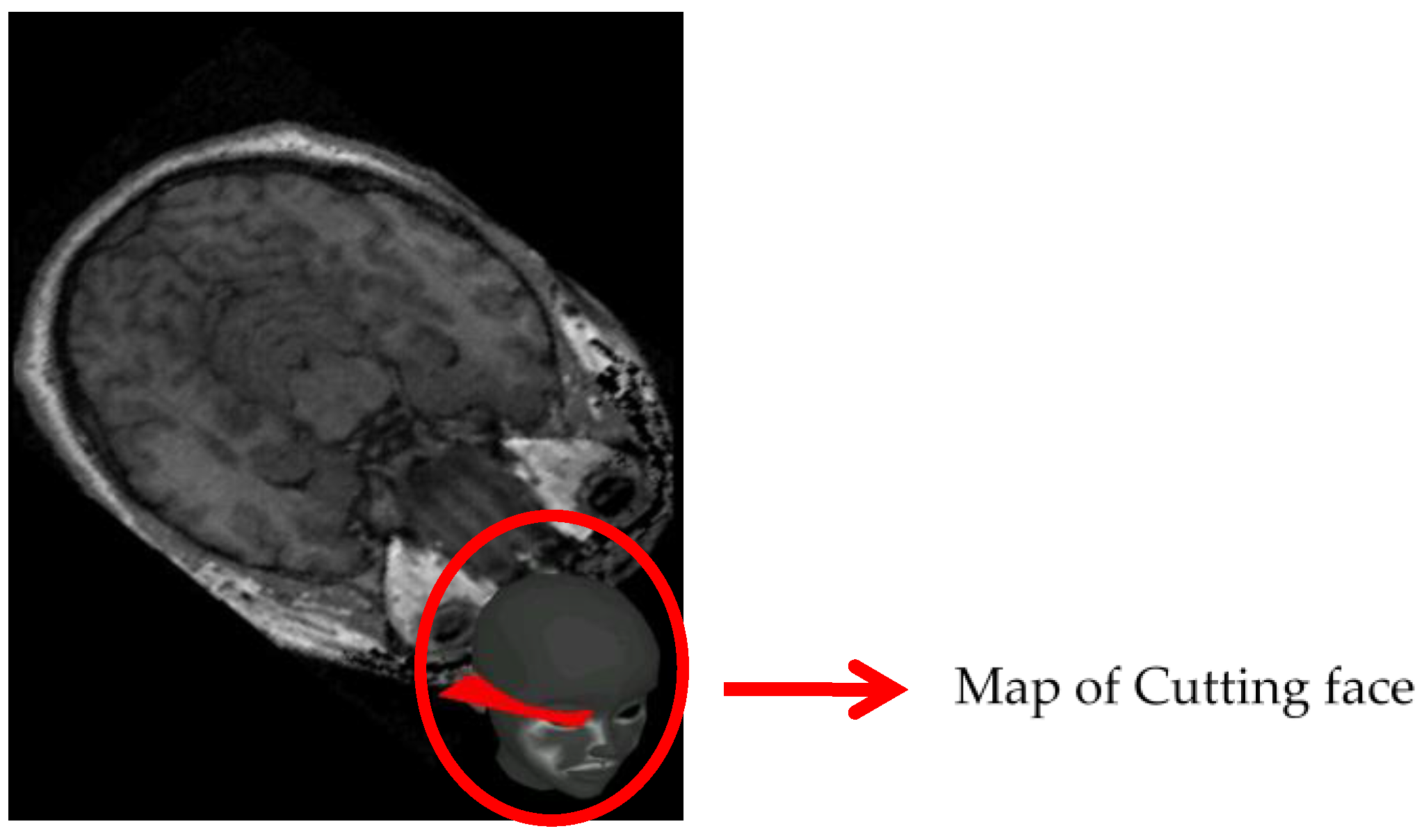

In our system, the 3D object is virtual, so the user cannot see it directly; instead, the user sees cutting faces of the 3D object by interacting with it through a handheld device. However, from the cutting faces alone, without other information on the screen, the user cannot clearly perceive the positions and orientations of the cutting faces relative to the 3D object. We therefore provide a small map that is always shown on the screen and updates the position and orientation of the cutting face relative to the 3D object in real time. As a result, the user can easily imagine the relationship between the cutting face and the 3D object. Figure 21 gives an example of the map. This function is implemented by the “mini map” module on the client side.