1. Introduction

Federated learning (FL) represents a transformative paradigm in distributed ML, enabling multiple organizations to collaboratively train models while maintaining data sovereignty and privacy [1]. This approach has gained significant traction across critical domains including healthcare, finance, and telecommunications, where data sharing faces stringent regulatory constraints and institutional policies [2]. However, the deployment of FL systems in real-world environments reveals fundamental challenges that extend beyond traditional ML concerns. Contemporary FL frameworks primarily focus on algorithmic efficiency and basic privacy preservation through techniques such as differential privacy and secure aggregation [3]. While these approaches address core technical requirements, they inadequately address the complex web of trust, compliance, and security concerns that govern modern data-driven organizations. Healthcare institutions must comply with HIPAA regulations, financial organizations operate under PCI DSS requirements, and research institutions increasingly adopt FAIR (Findability, Accessibility, Interoperability, Reusability) principles for data management [4]. These requirements create a multifaceted compliance landscape that existing FL systems struggle to navigate systematically.

The challenge becomes more pronounced when considering the heterogeneous nature of FL participants. Each organization brings distinct security policies, data governance frameworks, and compliance requirements that must be harmonized without compromising the collaborative learning process [5]. Traditional approaches attempt to address these concerns through ad-hoc modifications to FL algorithms or external compliance checking systems, resulting in fragmented solutions that lack systematic integration and coverage.

Recent developments in trustworthy AI have highlighted the critical importance of incorporating ethical, legal, and social considerations directly into ML systems [6]. The IEEE 3187-2024 standard [7] for trustworthy federated ML establishes guidelines for developing federated systems that maintain trust, transparency, and accountability throughout the learning lifecycle. Similarly, the FUTURE-AI initiative represents an effort to establish international guidelines for trustworthy AI development [8,9,10].

Aspect-oriented programming (AOP) emerges as a compelling paradigm for addressing these cross-cutting concerns in FL systems [11]. AOP enables the modular implementation of concerns that span multiple components of a system, allowing developers to separate core business logic from cross-cutting aspects such as security, logging, and compliance [12]. In the context of FL, AOP provides a natural framework for integrating trust and compliance requirements without modifying core learning algorithms, thereby maintaining algorithmic integrity while ensuring coverage of regulatory and institutional requirements.

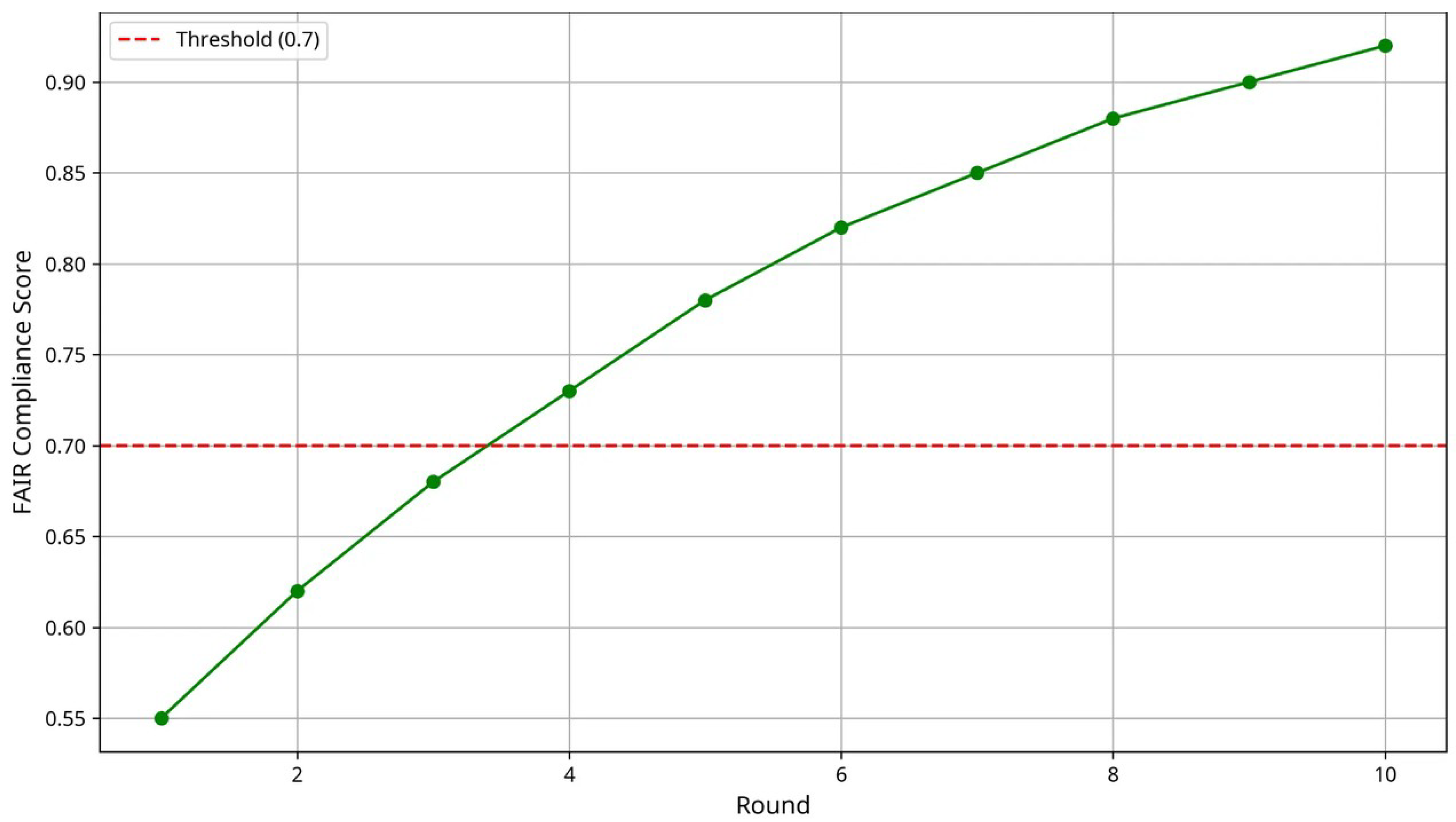

This paper introduces AspectFL, an AOP framework specifically designed for trustworthy and compliant FL systems. AspectFL addresses the fundamental challenge of integrating multiple cross-cutting concerns into FL through an aspect weaving mechanism that intercepts execution at critical joinpoints throughout the learning lifecycle. Our framework implements four core aspects that collectively address the primary trust and compliance challenges in FL environments. The FAIR Compliance Aspect (FAIRCA) ensures that FL processes adhere to FAIR principles by continuously monitoring and enforcing findability, accessibility, interoperability, and reusability requirements. This aspect implements automated metadata generation, endpoint availability monitoring, standard format validation, and documentation tracking to maintain FAIR compliance throughout the FL process.

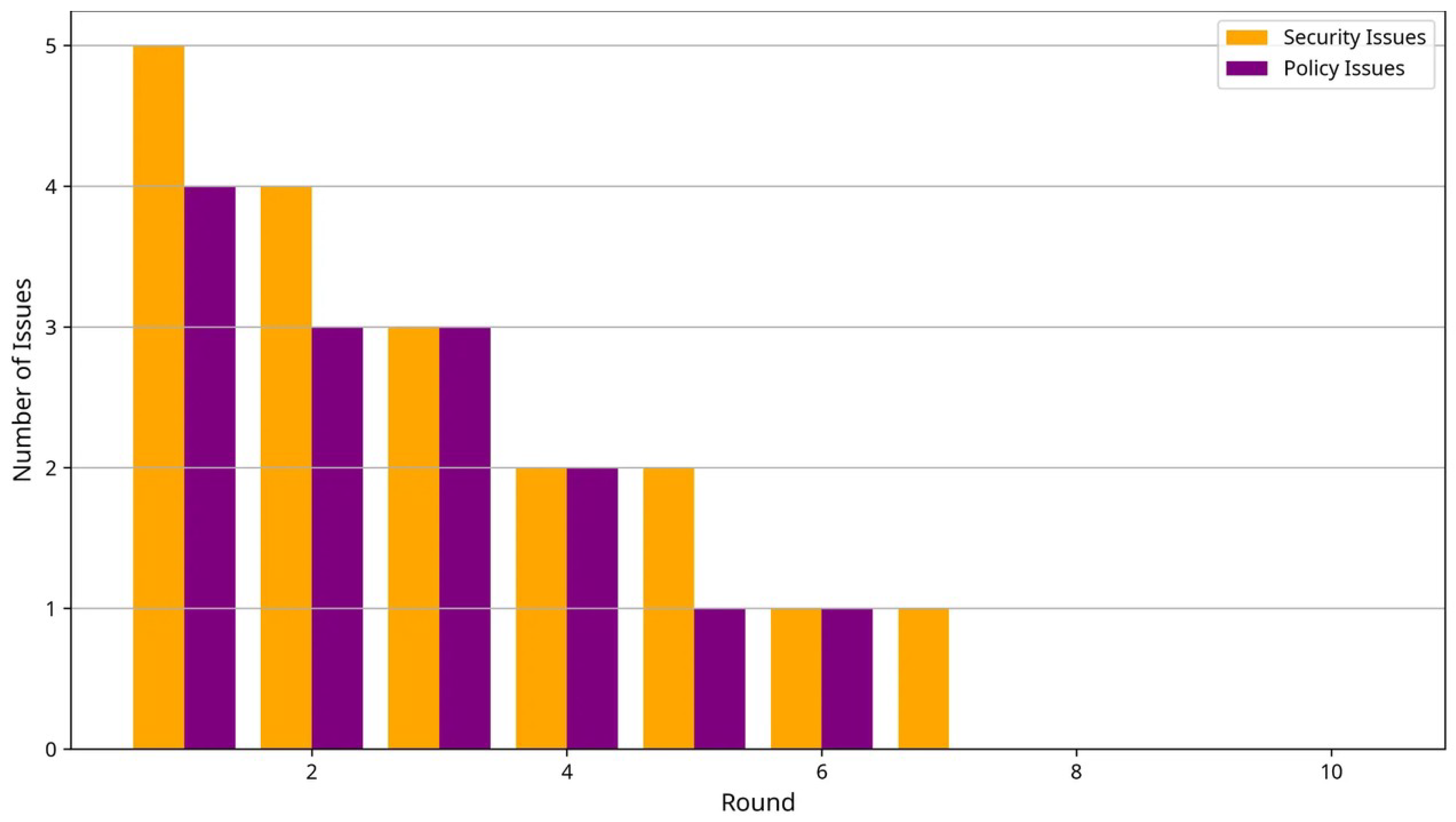

The Security Aspect provides threat detection and mitigation capabilities through real-time anomaly detection, integrity verification, and privacy-preserving mechanisms. This aspect employs advanced statistical methods to identify potential attacks, implements differential privacy mechanisms for enhanced privacy protection, and maintains continuous security monitoring throughout the FL lifecycle. The Provenance Aspect establishes audit trails and lineage tracking for all FL activities, enabling complete traceability of data usage, model evolution, and decision-making processes. This aspect implements a sophisticated provenance graph that captures fine-grained information about data sources, processing steps, and model updates, providing the foundation for accountability and reproducibility in FL systems [12,13].

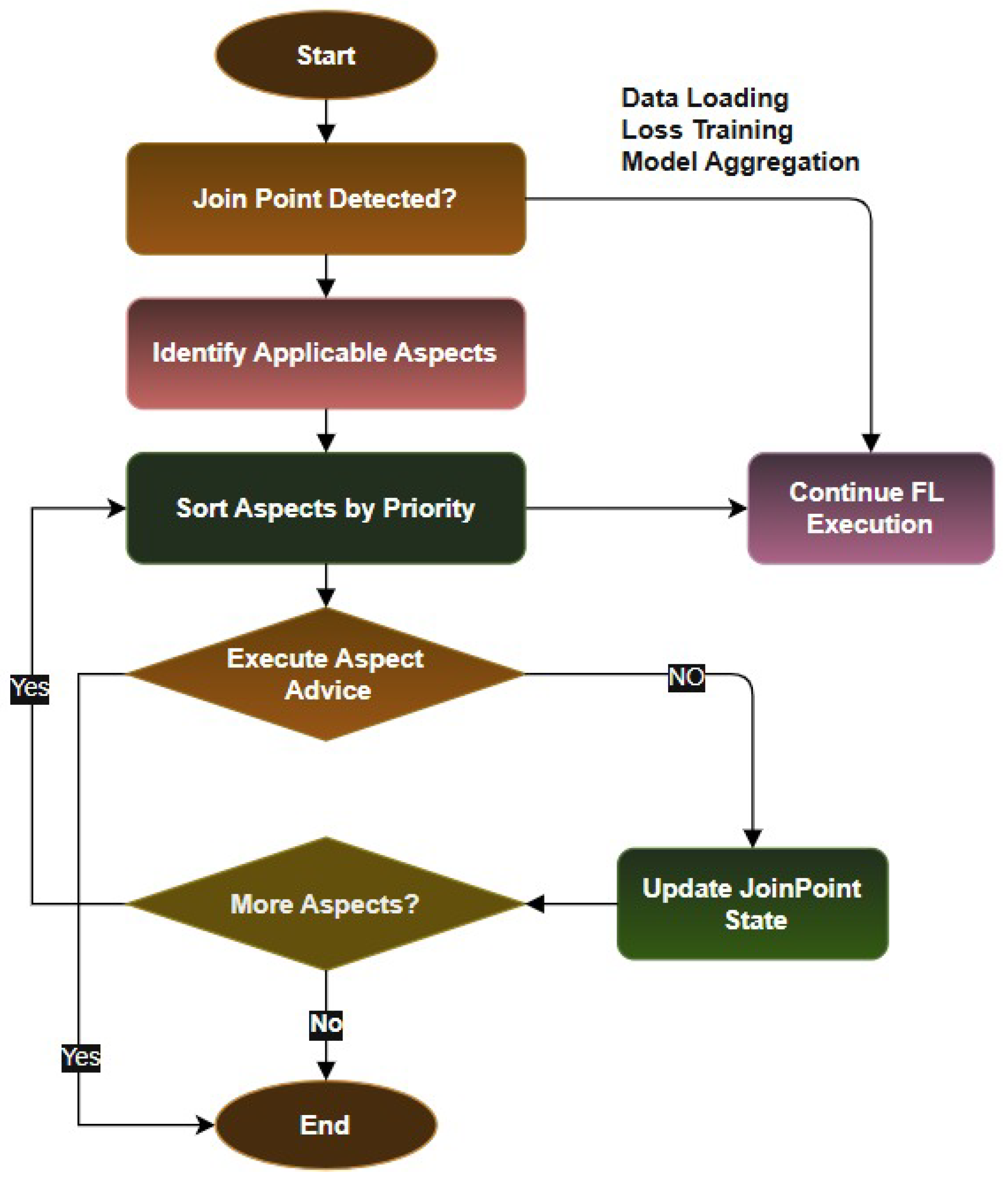

The Institutional Policy Aspect (IPA) enables dynamic enforcement of organization-specific policies and regulatory requirements through a flexible policy engine that supports complex policy hierarchies, conflict resolution mechanisms, and real-time compliance monitoring. This aspect allows organizations to define and enforce custom policies while participating in FL collaborations, ensuring that institutional requirements are maintained throughout the learning process. AspectFL’s aspect weaver employs a priority-based execution model that ensures proper ordering of aspect execution while maintaining system performance and scalability. The weaver intercepts FL execution at predefined joinpoints corresponding to critical phases such as data loading, local training, model aggregation, and result distribution [14]. At each joinpoint, applicable aspects are identified, sorted by priority, and executed in sequence, with each aspect having the opportunity to modify the execution context and enforce relevant policies.

Our mathematical framework provides formal guarantees for the security, privacy, and compliance properties of AspectFL. We prove that the aspect weaving process preserves the convergence properties of underlying FL algorithms while providing enhanced security and compliance capabilities. The framework includes formal definitions of trust metrics, compliance scores, and security properties, enabling quantitative assessment of system trustworthiness. We demonstrate AspectFL’s effectiveness through experiments on healthcare and financial datasets, representing two critical domains with stringent compliance requirements. Our experiments include a detailed and reproducible validation on the real-world MIMIC-III dataset to demonstrate external validity and robustness under realistic data distributions. Our healthcare experiments simulate a consortium of hospitals collaborating on medical diagnosis tasks while maintaining HIPAA compliance and institutional data governance policies. The financial experiments model a banking consortium developing fraud detection capabilities while adhering to PCI DSS requirements and financial regulations.

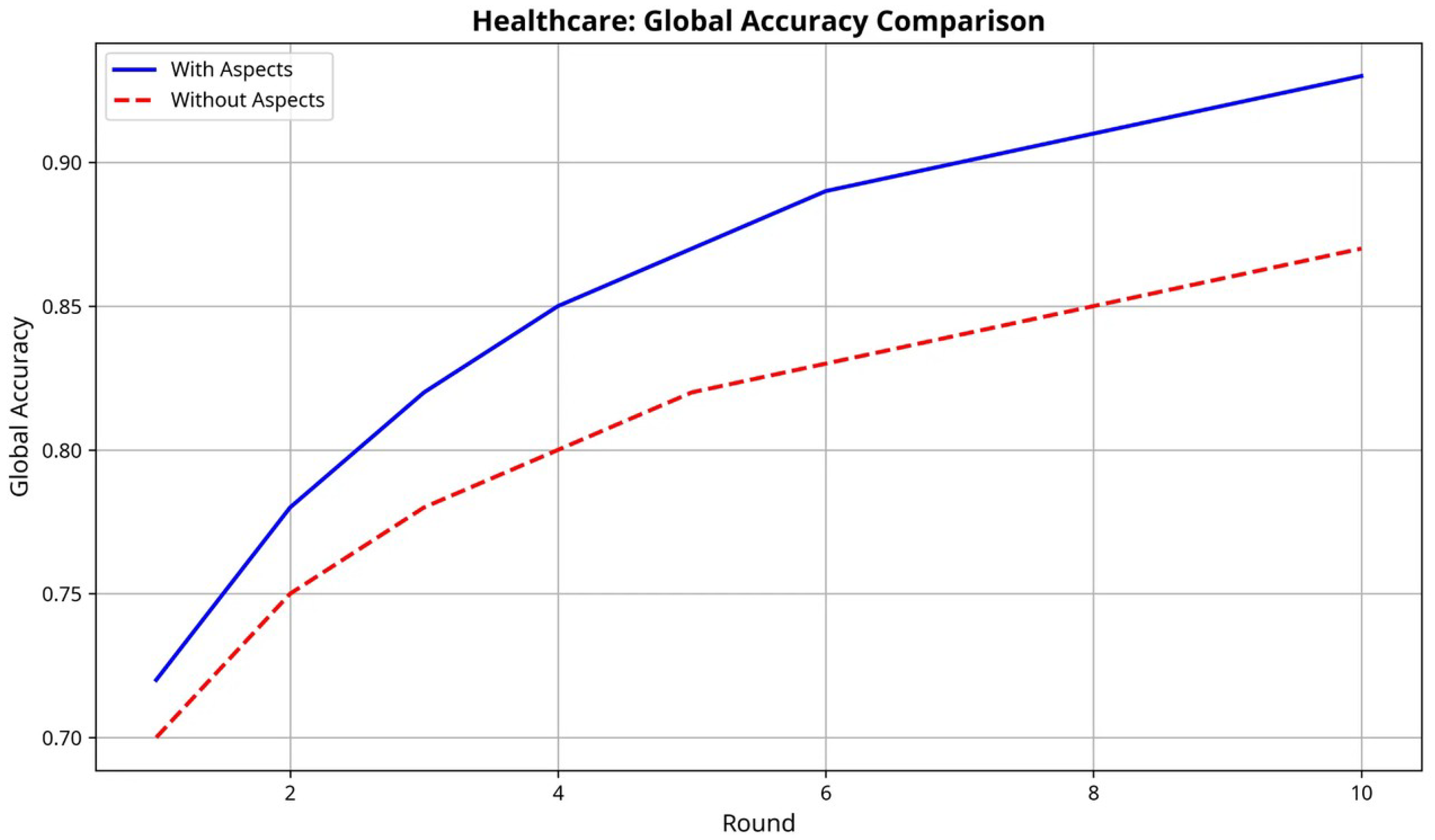

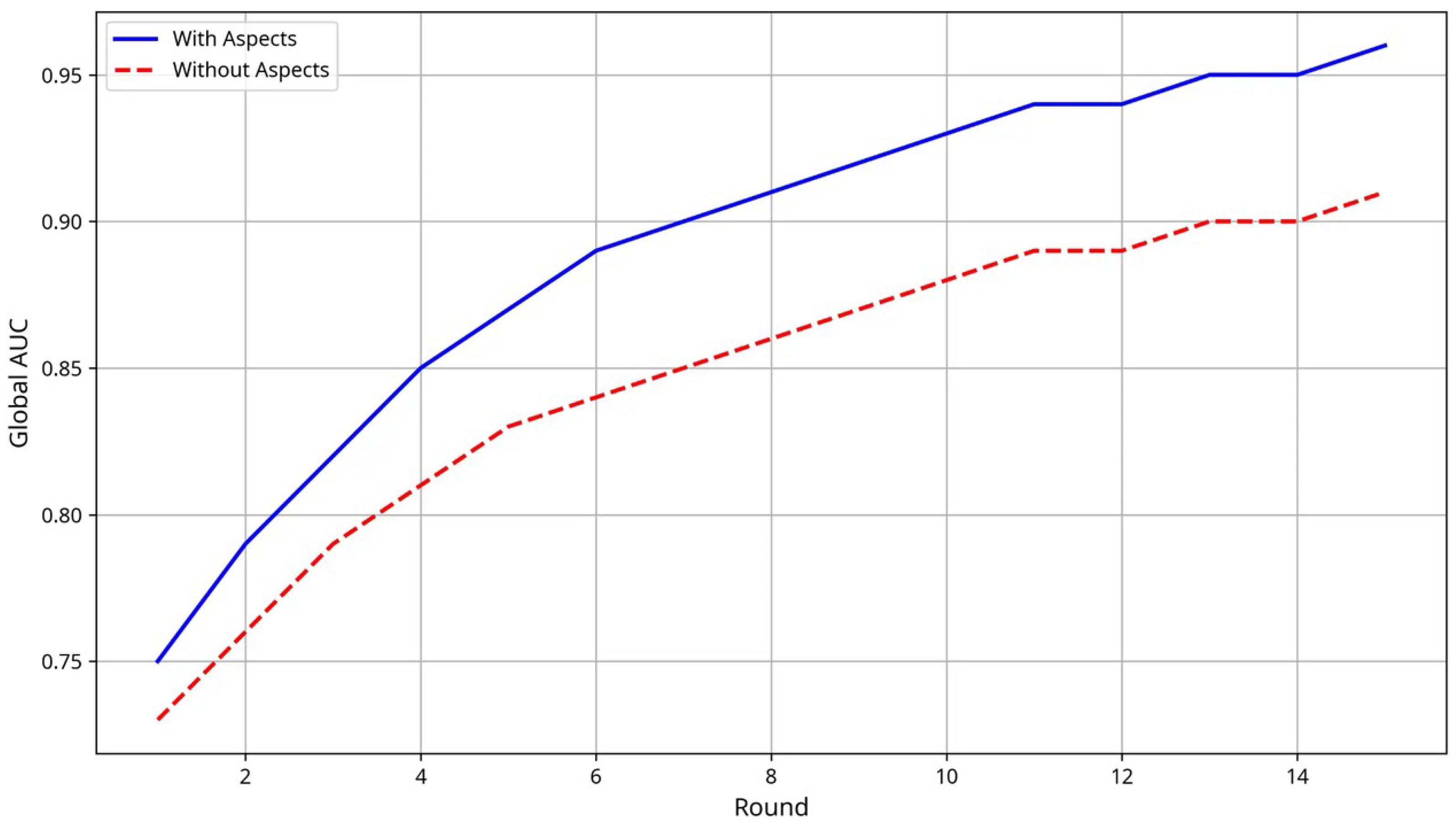

Experimental results demonstrate that AspectFL achieves significant improvements in both learning performance and compliance metrics compared to traditional FL approaches. In healthcare scenarios, AspectFL shows 4.52% AUC improvement while maintaining FAIR compliance scores of 0.762, security scores of 0.798, and policy compliance rates of 84.3%. Financial experiments show 0.90% AUC improvement with FAIR compliance scores of 0.738, security scores of 0.806, and policy compliance rates of 84.3%.

The contributions of this work include (1) the first AOP framework for FL that systematically addresses trust, compliance, and security concerns; (2) a novel aspect weaving mechanism specifically designed for FL environments with formal guarantees for security and compliance properties; (3) implementation of FAIR principles, security mechanisms, provenance tracking, and policy enforcement in FL contexts; (4) extensive experimental validation demonstrating improved performance and compliance across healthcare and financial domains; and (5) an open-source implementation with complete code, datasets, and deployment tools for community adoption to ensure full reproducibility.

4. Implementation and Core Aspects

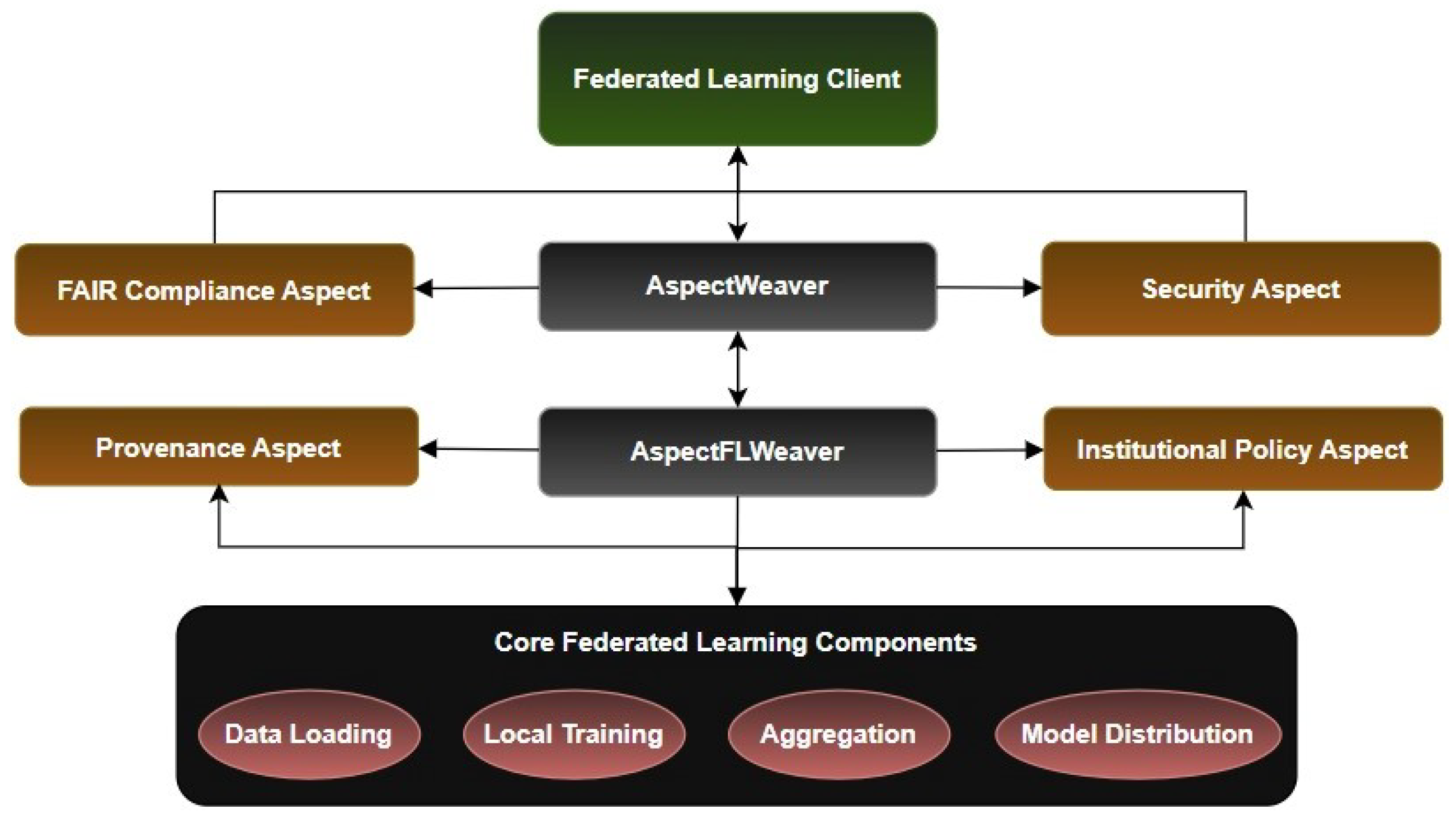

Figure 2 shows core FL components (data loading, local training, aggregation, and model distribution) that are intercepted by AspectFLWeaver, which orchestrates execution of four modular aspects: FAIR Compliance, Security, Provenance, and Institutional Policy. This design enables dynamic and scalable integration of trust, compliance, and traceability mechanisms. Each aspect addresses specific cross-cutting concerns while maintaining modularity and reusability across different FL scenarios.
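The interception and priority ordering performed by the weaver can be illustrated with a minimal Python sketch. It is not the AspectFL implementation: the `Aspect`, `Weaver`, and `tracing_advice` names, the joinpoint strings, and the convention that a lower priority value runs first are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, List

Context = Dict[str, object]


@dataclass(frozen=True)
class Aspect:
    name: str
    priority: int                      # assumption: lower value runs first
    joinpoints: FrozenSet[str]         # joinpoints this aspect applies to
    advice: Callable[[Context], Context]


class Weaver:
    """Hypothetical stand-in for a priority-based aspect weaver."""

    def __init__(self) -> None:
        self._aspects: List[Aspect] = []

    def register(self, aspect: Aspect) -> None:
        self._aspects.append(aspect)

    def intercept(self, joinpoint: str, context: Context) -> Context:
        # Identify applicable aspects, sort by priority, execute in sequence.
        applicable = [a for a in self._aspects if joinpoint in a.joinpoints]
        # sorted() is stable, so equal priorities keep registration order.
        for aspect in sorted(applicable, key=lambda a: a.priority):
            context = aspect.advice(context)
        return context


def tracing_advice(name: str) -> Callable[[Context], Context]:
    """Build an illustrative advice that records its name in the context."""
    def advice(context: Context) -> Context:
        updated = dict(context)
        updated["trace"] = list(updated.get("trace", [])) + [name]
        return updated
    return advice


weaver = Weaver()
weaver.register(Aspect("provenance", 30, frozenset({"aggregation"}),
                       tracing_advice("provenance")))
weaver.register(Aspect("security", 10,
                       frozenset({"local_training", "aggregation"}),
                       tracing_advice("security")))
result = weaver.intercept("aggregation", {"round": 1})
```

In this sketch each advice returns a new context instead of mutating the one it receives, which keeps the effect of every aspect explicit. A joinpoint with no registered aspects leaves the context unchanged.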

4.3. Security Aspect Implementation

The Security Aspect provides threat detection, prevention, and mitigation capabilities through real-time monitoring and adaptive response mechanisms. This aspect employs multiple security techniques to address the diverse threat landscape in FL environments. The anomaly detection component implements statistical and ML-based methods to identify potentially malicious behavior from FL participants. The aspect maintains baseline profiles for each participating organization based on historical data characteristics, model update patterns, and communication behaviors. During each FL round, the aspect compares current behavior against established baselines to identify significant deviations that may indicate malicious activity. The security threat detection algorithm (Algorithm 4) combines statistical and ML approaches to identify potential security threats, providing adaptive protection against evolving attack strategies.

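| Algorithm 4 Security Threat Detection Algorithm |
| --- |
| **Require:** model update, historical updates, context |
| **Ensure:** threat assessment |
| 1: mean ← ComputeMean(historical updates) |
| 2: standard deviation ← ComputeStdDev(historical updates) |
| 3: statistical score ← deviation of the model update from the mean, relative to the standard deviation {Statistical anomaly score} |
| 4: ML score ← MLAnomalyDetector applied to the model update {ML-based anomaly score} |
| 5: combined score ← combination of the statistical score and the ML score |
| 6: **if** the combined score exceeds the high-severity threshold **then** |
| 7: threat assessment ← {severity: HIGH, action: EXCLUDE, confidence} |
| 8: **else if** the combined score exceeds the medium-severity threshold **then** |
| 9: threat assessment ← {severity: MEDIUM, action: MONITOR, confidence} |
| 10: **else** |
| 11: threat assessment ← {severity: LOW, action: ACCEPT, confidence} |
| 12: **end if** |
| 13: RecordThreatAssessment(model update, threat assessment, context) |
| 14: **return** threat assessment |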
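The control flow of Algorithm 4 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the statistical score is taken to be a z-score of the update's L2 norm against the historical norms, the ML-based score is passed in as a number in [0, 1] instead of being computed by a real detector, and the equal weighting and the thresholds 0.8 and 0.5 are hypothetical values.

```python
import math
from statistics import mean, pstdev
from typing import Dict, Sequence


def l2_norm(vector: Sequence[float]) -> float:
    return math.sqrt(sum(x * x for x in vector))


def assess_update(
    update: Sequence[float],
    history: Sequence[Sequence[float]],
    ml_score: float,                 # assumed to lie in [0, 1]
    stat_weight: float = 0.5,        # hypothetical weighting
    high_threshold: float = 0.8,     # hypothetical threshold
    medium_threshold: float = 0.5,   # hypothetical threshold
) -> Dict[str, object]:
    # Steps 1-2: baseline statistics over historical update norms.
    norms = [l2_norm(h) for h in history]
    mu = mean(norms)
    sigma = pstdev(norms)

    # Step 3: statistical anomaly score, a z-score squashed into [0, 1]
    # (three standard deviations or more map to 1.0).
    z = abs(l2_norm(update) - mu) / sigma if sigma > 0 else 0.0
    stat_score = min(z / 3.0, 1.0)

    # Steps 4-5: combine with the externally supplied ML-based score.
    combined = stat_weight * stat_score + (1.0 - stat_weight) * ml_score

    # Steps 6-12: map the combined score to a severity and an action.
    if combined > high_threshold:
        severity, action = "HIGH", "EXCLUDE"
    elif combined > medium_threshold:
        severity, action = "MEDIUM", "MONITOR"
    else:
        severity, action = "LOW", "ACCEPT"
    return {"severity": severity, "action": action, "confidence": combined}


history = [[1.0, 0.0], [0.0, 1.1], [0.9, 0.0], [0.0, 1.0]]
benign = assess_update([1.0, 0.0], history, ml_score=0.1)
suspicious = assess_update([10.0, 0.0], history, ml_score=0.2)
malicious = assess_update([10.0, 0.0], history, ml_score=0.9)
```

Reporting the combined score as the confidence value is also an assumption of this sketch.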

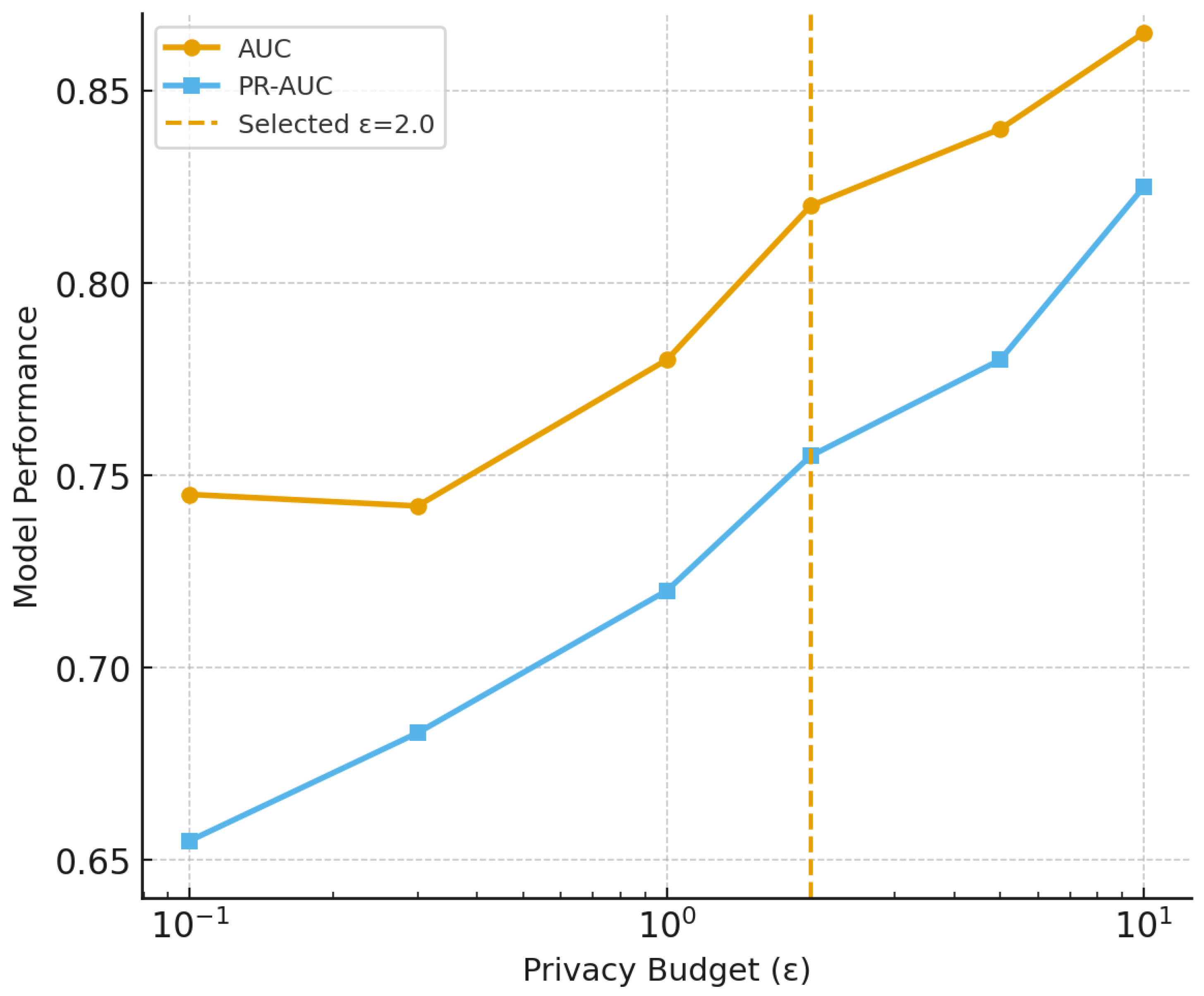

Our differential privacy implementation employs local differential privacy using the Gaussian mechanism, where each client adds noise before transmitting its model updates. We specify separate privacy parameters $\varepsilon$ and $\delta$ for healthcare scenarios and for financial applications, with the financial setting reflecting the higher privacy requirements of financial contexts. The sensitivity parameter was empirically estimated for normalized model updates through calibration runs.

The aspect employs multiple anomaly detection algorithms, including isolation forests, one-class support vector machines, and autoencoder-based approaches, to capture different types of anomalous behavior. For model updates, the aspect analyzes parameter magnitudes, gradient directions, and update frequencies to identify potential model poisoning attacks. For data-related anomalies, the aspect examines statistical properties, distribution characteristics, and quality metrics to detect data poisoning attempts.
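Client-side noise addition with the Gaussian mechanism can be sketched as below. The sketch uses the classical calibration $\sigma = \Delta \sqrt{2 \ln(1.25/\delta)} / \varepsilon$, which is valid for $0 < \varepsilon < 1$. The function names and the example parameter values are assumptions, not the settings used in AspectFL, and the privacy guarantee only holds if the supplied sensitivity really bounds the L2 sensitivity of the update.

```python
import math
import random
from typing import List, Sequence


def gaussian_sigma(epsilon: float, delta: float, sensitivity: float) -> float:
    """Noise scale of the classical Gaussian mechanism (needs 0 < epsilon < 1)."""
    if not 0.0 < epsilon < 1.0:
        raise ValueError("classical calibration requires 0 < epsilon < 1")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def privatize(
    update: Sequence[float],
    epsilon: float,
    delta: float,
    sensitivity: float,
    rng: random.Random,
) -> List[float]:
    """Add independent Gaussian noise to every coordinate of a local update."""
    sigma = gaussian_sigma(epsilon, delta, sensitivity)
    return [x + rng.gauss(0.0, sigma) for x in update]


# Illustrative values only; they are not the paper's parameters.
noisy = privatize([0.1, 0.2, 0.3], epsilon=0.5, delta=1e-5,
                  sensitivity=1.0, rng=random.Random(0))
```

A smaller $\varepsilon$ yields a larger noise scale, which is the privacy and utility trade-off the privacy budget has to manage.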

The threat assessment component implements a threat modeling framework that evaluates the severity and impact of detected anomalies. The aspect maintains a threat intelligence database that includes known attack patterns, vulnerability signatures, and mitigation strategies. When anomalies are detected, the aspect correlates them with known threat patterns to assess the likelihood and potential impact of security incidents. The aspect implements adaptive threat response mechanisms that automatically adjust security measures based on assessed threat levels. For low-severity threats, the aspect may increase monitoring frequency or request additional validation information. For high-severity threats, the aspect can temporarily exclude suspicious participants, require additional authentication, or trigger incident response procedures.

The privacy preservation component implements differential privacy mechanisms that add calibrated noise to model updates and aggregated statistics. The aspect supports multiple privacy models including central differential privacy, local differential privacy, and personalized differential privacy to accommodate different privacy requirements and trust models. The aspect implements adaptive privacy budget allocation mechanisms that optimize the trade-off between privacy protection and model utility. The privacy budget is dynamically allocated across FL rounds based on data sensitivity, participant trust levels, and utility requirements. The aspect also implements privacy accounting mechanisms that track cumulative privacy expenditure and ensure that privacy guarantees are maintained throughout the FL process.
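Privacy accounting across rounds can be illustrated with a small budget tracker. The sketch below assumes basic sequential composition, under which the $\varepsilon$ and $\delta$ values of successive releases simply add up, and a uniform split of the budget across rounds. The `PrivacyAccountant` class and `uniform_allocation` helper are hypothetical names, and the adaptive allocation described above would replace the uniform split.

```python
import math
from typing import List, Tuple


class PrivacyAccountant:
    """Tracks cumulative (epsilon, delta) under basic sequential composition."""

    def __init__(self, total_epsilon: float, total_delta: float) -> None:
        self.total_epsilon = total_epsilon
        self.total_delta = total_delta
        self.spent_epsilon = 0.0
        self.spent_delta = 0.0

    @staticmethod
    def _within(spent: float, limit: float) -> bool:
        # Tolerate floating-point rounding when the budget is used up exactly.
        return spent <= limit or math.isclose(spent, limit, rel_tol=1e-9)

    def can_spend(self, epsilon: float, delta: float) -> bool:
        return (self._within(self.spent_epsilon + epsilon, self.total_epsilon)
                and self._within(self.spent_delta + delta, self.total_delta))

    def spend(self, epsilon: float, delta: float) -> bool:
        """Charge one release; refuse it if the budget would be exceeded."""
        if not self.can_spend(epsilon, delta):
            return False
        self.spent_epsilon += epsilon
        self.spent_delta += delta
        return True


def uniform_allocation(total_epsilon: float, total_delta: float,
                       rounds: int) -> List[Tuple[float, float]]:
    """Split the budget evenly over the planned FL rounds."""
    return [(total_epsilon / rounds, total_delta / rounds)] * rounds


accountant = PrivacyAccountant(total_epsilon=1.0, total_delta=1e-5)
granted = [accountant.spend(eps, dlt)
           for eps, dlt in uniform_allocation(1.0, 1e-5, rounds=4)]
extra_round_allowed = accountant.spend(0.01, 0.0)
```

Basic composition is the simplest accounting rule and gives loose bounds; tighter accounting methods exist but are outside the scope of this sketch.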

The integrity verification component implements cryptographic mechanisms to ensure the authenticity and integrity of all FL communications. The aspect generates and verifies digital signatures for model updates, data summaries, and control messages using an established public key infrastructure. For data integrity, the aspect computes and validates cryptographic hashes of datasets and model artifacts. The aspect implements secure communication protocols that provide end-to-end encryption for all FL communications. The protocols support perfect forward secrecy, mutual authentication, and protection against man-in-the-middle attacks. The aspect also implements secure aggregation protocols that enable computation of aggregate statistics without revealing individual contributions.
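Hashing and authenticating a model update can be sketched with the Python standard library. One substitution is made and should be read as such: the text describes digital signatures backed by a public key infrastructure, whereas this sketch uses an HMAC with a shared secret key as a stand-in, because the standard library offers no asymmetric signing. The function names and the JSON-based canonical serialization are assumptions.

```python
import hashlib
import hmac
import json
from typing import Dict, List

Update = Dict[str, List[float]]


def canonical_bytes(update: Update) -> bytes:
    """Serialize deterministically so equal updates give equal bytes."""
    return json.dumps(update, sort_keys=True, separators=(",", ":")).encode("utf-8")


def digest(update: Update) -> str:
    """SHA-256 hash of a model artifact, used for integrity checks."""
    return hashlib.sha256(canonical_bytes(update)).hexdigest()


def sign(update: Update, key: bytes) -> str:
    """HMAC-SHA256 tag; a shared-key stand-in for a digital signature."""
    return hmac.new(key, canonical_bytes(update), hashlib.sha256).hexdigest()


def verify(update: Update, key: bytes, tag: str) -> bool:
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(sign(update, key), tag)


key = b"demo-shared-key"            # illustrative key, not a real secret
update = {"layer1": [0.1, 0.2], "layer2": [0.3]}
tag = sign(update, key)
tampered = {"layer1": [0.1, 0.25], "layer2": [0.3]}
```

With asymmetric signatures, only the sender could produce a valid tag, which is what attribution to a specific participant requires; an HMAC only shows that the sender knew the shared key.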

4.4. Provenance Aspect Implementation

The Provenance Aspect establishes audit trails and lineage tracking for all FL activities, enabling complete traceability and accountability throughout the learning process. This aspect implements a sophisticated provenance model based on the W3C PROV standard, adapted for FL environments. The provenance data model captures three primary types of entities: data entities representing datasets, model artifacts, and computed results; activity entities representing FL operations such as training, aggregation, and evaluation; and agent entities representing organizations, individuals, and software systems involved in the FL process. The provenance-aware aggregation algorithm (Algorithm 5) represents a key innovation of AspectFL, incorporating data quality, trust scores, security assessments, and policy compliance into the model aggregation process. This approach ensures that the global model reflects not only the statistical properties of participant contributions but also their trustworthiness and compliance characteristics.

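| Algorithm 5 Provenance-Aware Aggregation Algorithm |
| --- |
| **Require:** model updates, context |
| **Ensure:** aggregated model |
| 1: weights ← empty {Initialize weights} |
| 2: **for each** update **do** |
| 3: data quality ← ComputeDataQuality(update, context) |
| 4: trust score ← ComputeTrustScore(update, context) |
| 5: security score ← ComputeSecurityScore(update, context) |
| 6: policy compliance ← ComputePolicyCompliance(update, context) |
| 7: combined score ← combination of the data quality, trust, security, and policy compliance scores |
| 8: weight of the update ← combined score |
| 9: record the update, its scores, and its weight via RecordProvenance |
| 10: **end for** |
| 11: Normalize weights so that they sum to one |
| 12: aggregated model ← weighted combination of the updates under the normalized weights |
| 13: record the aggregation via RecordAggregationProvenance |
| 14: **return** aggregated model |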
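The weighting idea behind Algorithm 5 can be sketched as follows. The way the four scores are combined is not recoverable from the text here, so the sketch assumes a simple product of scores in [0, 1]; the function name, the score keys, and the shape of the provenance records are likewise hypothetical.

```python
from typing import Dict, List, Tuple

Scores = Dict[str, float]  # keys assumed: quality, trust, security, policy


def provenance_aware_aggregate(
    updates: Dict[str, List[float]],
    scores: Dict[str, Scores],
) -> Tuple[List[float], Dict[str, float], List[Dict[str, object]]]:
    # Per-participant raw weight: product of the four scores (assumption).
    raw = {
        pid: s["quality"] * s["trust"] * s["security"] * s["policy"]
        for pid, s in scores.items()
    }
    total = sum(raw[pid] for pid in updates)
    if total <= 0:
        raise ValueError("no participant has a positive weight")

    # Normalize so that the weights sum to one.
    weights = {pid: raw[pid] / total for pid in updates}

    # Weighted combination of the updates, coordinate by coordinate.
    dim = len(next(iter(updates.values())))
    model = [
        sum(weights[pid] * updates[pid][k] for pid in updates)
        for k in range(dim)
    ]

    # Minimal provenance records linking each contribution to its weight.
    provenance = [
        {"participant": pid, "scores": dict(scores[pid]), "weight": weights[pid]}
        for pid in updates
    ]
    return model, weights, provenance


updates = {"hospital_a": [3.0, 0.0], "hospital_b": [0.0, 3.0]}
scores = {
    "hospital_a": {"quality": 1.0, "trust": 1.0, "security": 1.0, "policy": 1.0},
    "hospital_b": {"quality": 1.0, "trust": 0.5, "security": 1.0, "policy": 1.0},
}
model, weights, provenance = provenance_aware_aggregate(updates, scores)
```

In this example the participant with the lower trust score receives one third of the total weight, so the aggregated model is pulled toward the more trusted contribution.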

The aspect maintains a distributed provenance graph that captures fine-grained relationships between provenance entities. The graph includes derivation relationships that track how model updates are derived from training data, attribution relationships that identify responsible agents for specific activities, and temporal relationships that establish the chronological order of FL events. During the data loading phase, the aspect captures metadata about data sources, including data collection procedures, preprocessing steps, quality assessments, and access permissions. The aspect generates unique identifiers for all data entities and establishes provenance links to source systems and responsible agents.

During local training phases, the aspect records detailed information about training procedures, including hyperparameter settings as shown in Table 1, optimization algorithms, convergence criteria, and computational resources utilized. The aspect captures the relationship between input data, training algorithms, and resulting model updates, enabling complete reconstruction of the training process.

During aggregation phases, the aspect tracks the combination of individual model updates into global models, recording aggregation algorithms, weighting schemes, and quality control measures. The aspect maintains provenance links between individual contributions and aggregated results, enabling attribution of global model properties to specific participants.

The aspect implements provenance query mechanisms that enable stakeholders to trace the lineage of specific model predictions, identify the data sources that contributed to particular model components, and assess the impact of individual participants on global model performance. These capabilities support accountability requirements and enable detailed analysis of FL outcomes.
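A lineage query over derivation and attribution relationships can be illustrated with a small in-memory graph. The method names echo the W3C PROV relations `wasDerivedFrom` and `wasAttributedTo`, but the `ProvenanceGraph` class, its storage layout, and the identifiers in the example are assumptions made for this sketch and do not describe AspectFL's distributed provenance graph.

```python
from collections import defaultdict
from typing import Dict, Set


class ProvenanceGraph:
    """Toy provenance store with derivation and attribution edges."""

    def __init__(self) -> None:
        self._derived_from: Dict[str, Set[str]] = defaultdict(set)
        self._attributed_to: Dict[str, str] = {}

    def add_entity(self, entity_id: str, agent_id: str) -> None:
        """Register an entity and the agent responsible for it."""
        self._attributed_to[entity_id] = agent_id

    def was_derived_from(self, derived_id: str, source_id: str) -> None:
        self._derived_from[derived_id].add(source_id)

    def lineage(self, entity_id: str) -> Set[str]:
        """All entities the given entity was directly or indirectly derived from."""
        seen: Set[str] = set()
        stack = [entity_id]
        while stack:
            current = stack.pop()
            for source in self._derived_from.get(current, ()):
                if source not in seen:
                    seen.add(source)
                    stack.append(source)
        return seen

    def contributors(self, entity_id: str) -> Set[str]:
        """Agents attributed to the entity or to anything in its lineage."""
        entities = self.lineage(entity_id) | {entity_id}
        return {self._attributed_to[e] for e in entities if e in self._attributed_to}


graph = ProvenanceGraph()
graph.add_entity("dataset:h1", "hospital_1")
graph.add_entity("dataset:h2", "hospital_2")
graph.add_entity("update:h1:r1", "hospital_1")
graph.add_entity("update:h2:r1", "hospital_2")
graph.add_entity("global:r1", "aggregator")
graph.was_derived_from("update:h1:r1", "dataset:h1")
graph.was_derived_from("update:h2:r1", "dataset:h2")
graph.was_derived_from("global:r1", "update:h1:r1")
graph.was_derived_from("global:r1", "update:h2:r1")
sources = graph.lineage("global:r1")
agents = graph.contributors("global:r1")
```

Tracing a global model back to the datasets and agents behind it is the kind of query that supports the accountability requirements described above.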

The provenance quality assessment component evaluates the completeness and accuracy of captured provenance information. The aspect implements automated validation mechanisms that verify the consistency of provenance relationships, identify missing provenance information, and assess the reliability of provenance sources.

5. Experimental Evaluation