SP-TeachLLM: An LLM-Driven Framework for Personalized and Adaptive Programming Education

Abstract

1. Introduction

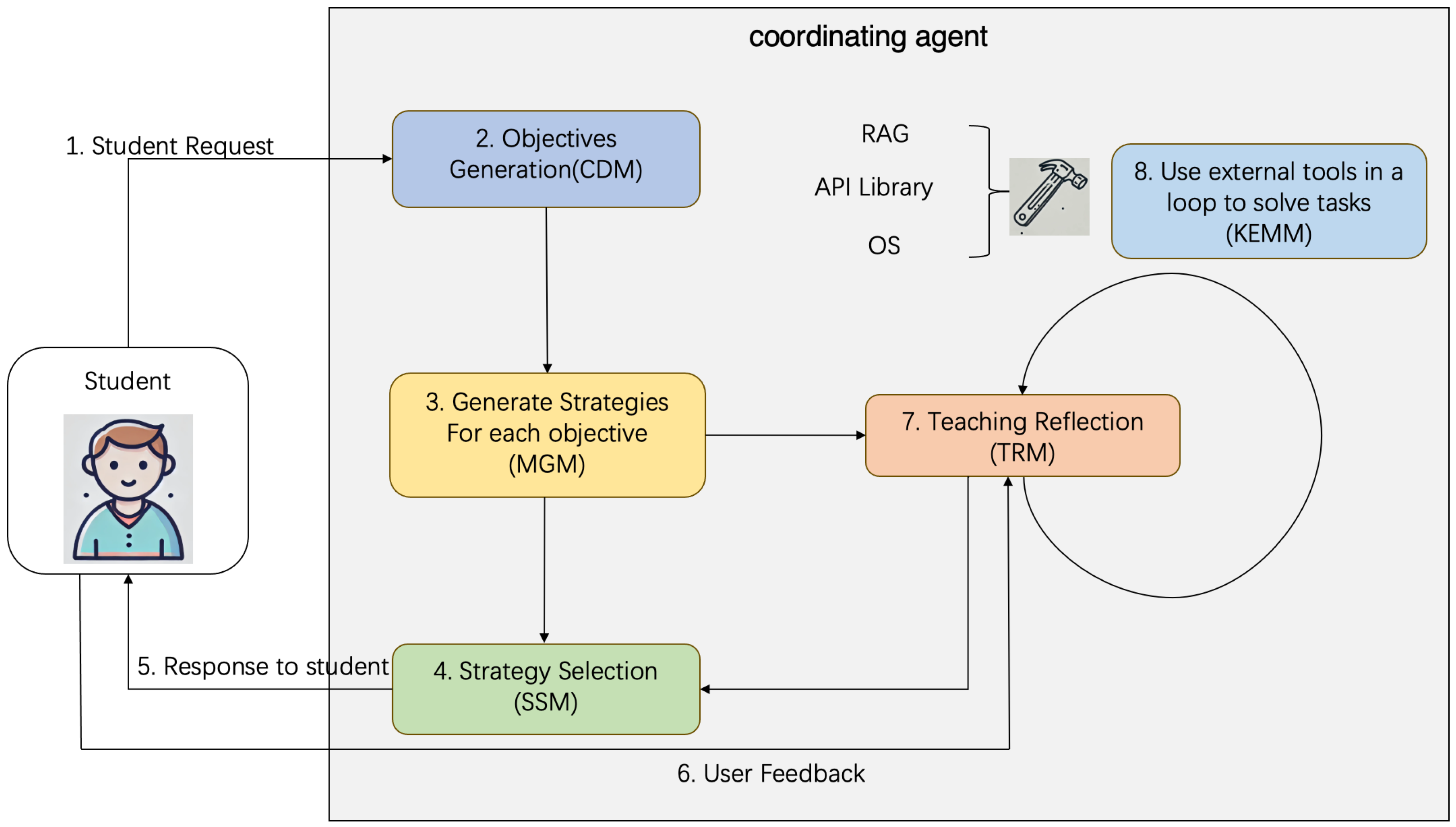

- We propose SP-TeachLLM, a novel multi-module LLM-based teaching assistance framework that integrates cognitive theories with adaptive learning mechanisms.

- We design a comprehensive set of collaborative modules—including task decomposition, multi-strategy generation, reflective learning, and memory enhancement—to achieve dynamic, explainable, and context-aware instructional planning.

- We introduce a reinforcement learning-based optimization mechanism that continuously improves strategy selection and personalization over time.

- We validate SP-TeachLLM on two code-generation benchmarks, HumanEval and MBPP, where it demonstrates superior adaptability and pedagogical performance compared with existing LLM-based tutoring systems.

2. Related Work

2.1. Intelligent Tutoring Systems (ITS)

2.2. Large Language Models for Intelligent Tutoring Systems

2.3. Educational Data Mining and Learning Analytics Technologies

2.4. Reinforcement Learning and Knowledge Graph Technologies

- Systematic Instructional Planning: End-to-end support from learning objective decomposition to teaching strategy optimization ensures process coherence.

- Deep Personalization: By combining LLM-based reasoning with learner modeling, the system delivers adaptive and student-centered teaching.

2.5. Planning Capabilities of LLM Agents

3. Method

3.1. Problem Formulation

3.2. SP-TeachLLM Framework Overview

3.3. Pedagogical Foundations of SP-TeachLLM

3.4. Curriculum Decomposition Module (CDM)

3.5. Multi-Strategy Generation Module (MGM)

3.6. Strategy Selection Module (SSM)

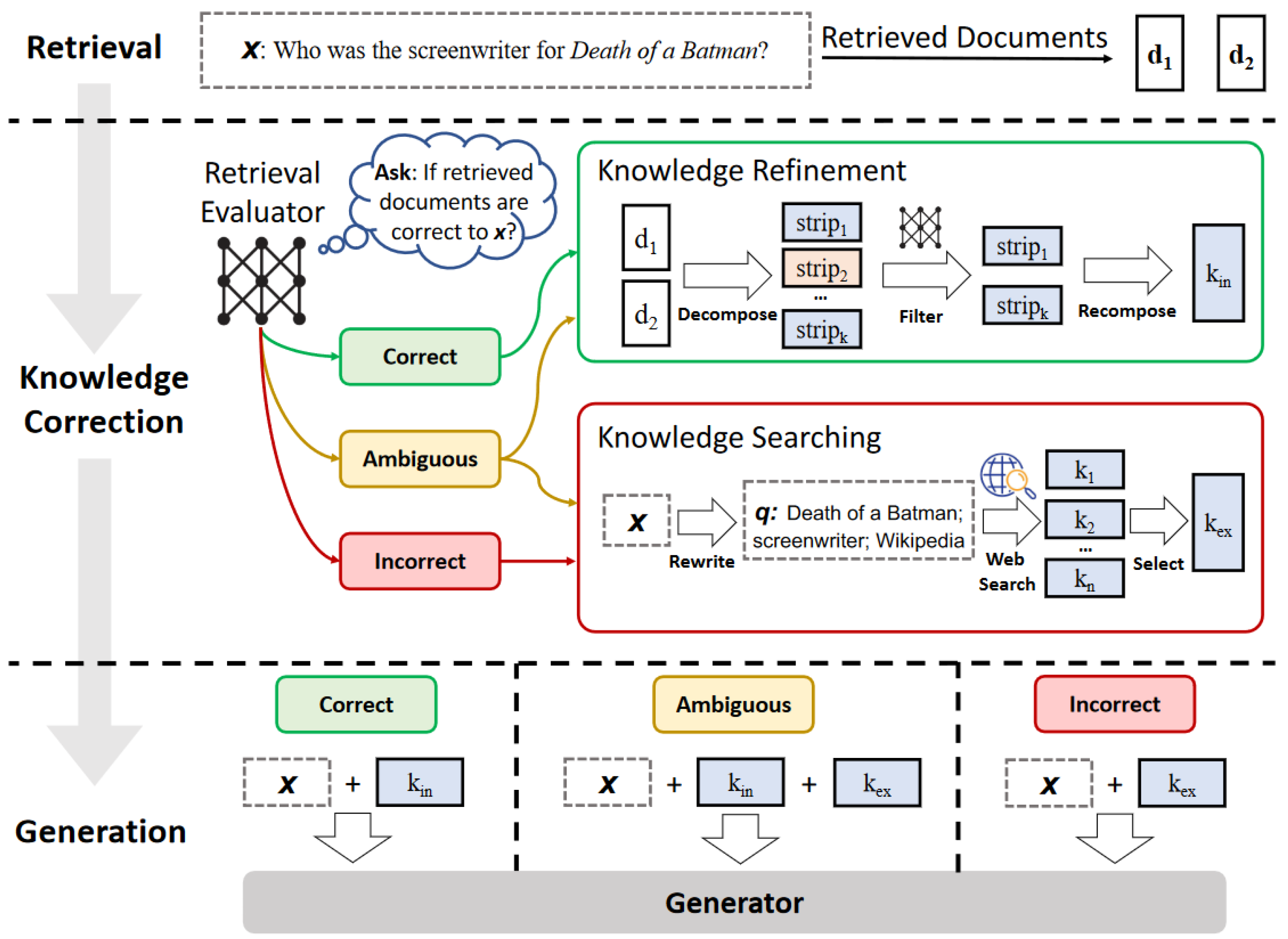

3.7. Knowledge and Experience Memory Module (KEMM)

3.8. Framework Implementation for Computer Science Education

3.9. Integration of Educational Theories

4. Experiments

4.1. Benchmarks for Code Generation

4.1.1. HumanEval

4.1.2. MBPP (Mostly Basic Programming Problems)

4.2. Experimental Setup

4.2.1. AI Tutor Models

4.2.2. Student Models

4.2.3. Experimental Procedure

4.3. Experimental Results

4.4. Ablation Study

- Without Strategy Selection Module (SSM): The reinforcement learning-based strategy selection mechanism was disabled, and a fixed non-adaptive strategy was applied to all students.

- Without Curriculum Decomposition Module (CDM): Learning objectives were not decomposed into sub-objectives, forcing the system to teach the entire concept in a single, monolithic session.

- Without Knowledge and Experience Memory Module (KEMM): The system did not retain knowledge from previous teaching sessions, eliminating adaptive behavior based on learning history.

- Without Multi-Strategy Generation Module (MGM): Only a single, predefined teaching strategy was generated for each sub-objective, removing strategic diversity.

- Without Teaching Reflection Module (TRM): No post-session reflection or adjustment was performed, preventing iterative improvement of teaching strategies.
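The five ablated configurations above can be pictured as one switch per module, each turned off in isolation. The sketch below is illustrative only: `AblationConfig`, its field names, and `ablation_variants` are hypothetical and do not come from the SP-TeachLLM implementation, which is not shown here.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class AblationConfig:
    """Hypothetical on/off switches, one per SP-TeachLLM module."""

    use_ssm: bool = True   # RL-based strategy selection; off = one fixed strategy
    use_cdm: bool = True   # objective decomposition; off = one monolithic session
    use_kemm: bool = True  # cross-session memory; off = no learning history
    use_mgm: bool = True   # several candidate strategies; off = one predefined strategy
    use_trm: bool = True   # post-session reflection; off = no iterative adjustment


def ablation_variants(full: AblationConfig = AblationConfig()) -> dict[str, AblationConfig]:
    """Return the full system plus one variant per disabled module."""
    variants = {"Baseline": full}
    for f in fields(full):
        # "use_ssm" -> "No SSM", matching the labels used in the ablation study
        label = f"No {f.name.removeprefix('use_').upper()}"
        variants[label] = replace(full, **{f.name: False})
    return variants


if __name__ == "__main__":
    for label, cfg in ablation_variants().items():
        print(label, cfg)
```

Disabling exactly one module per variant keeps each accuracy change attributable to a single component, which is the design the list above describes.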

5. Discussion

6. Study Limitations and Scope

7. Conclusions and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CDM | Curriculum Decomposition Module |
| CRAG | Corrective Retrieval-Augmented Generation |
| CGA | Code Generation Accuracy |
| DSA | Data Structures and Algorithms Agent |
| KEMM | Knowledge and Experience Memory Module |
| LLM | Large Language Model |
| MGM | Multi-Strategy Generation Module |
| MLA | Machine Learning Agent |
| PFA | Programming Fundamentals Agent |
| PPO | Proximal Policy Optimization |
| RL | Reinforcement Learning |
| SSM | Strategy Selection Module |
| TRM | Teaching Reflection Module |
| SP-TeachLLM | Self-Planning Teaching Framework with Large Language Models |

| Model | Architecture | Training Emphasis |
|---|---|---|
| GPT-4o | Multimodal Transformer | Web corpus + code + multimodal reasoning |
| GPT-4 | Transformer | Diverse web corpus |
| LLaMA-3-70B | Transformer | Diverse web corpus |
| Claude 3 Opus | Transformer | Conversational and reasoning data |
| TeachLM | Post-trained Transformer | Authentic student–tutor dialogues |

| Model | Architecture | Training Data |
|---|---|---|
| Claude 3 Haiku | Transformer | Conversational and reasoning data |
| Claude 3 Sonnet | Transformer | Conversational and reasoning data |
| GPT-3.5 Turbo | Transformer | Diverse web corpus |
| LLaMA-3-8B | Transformer | Diverse web corpus |

| Teacher Model with SP-TeachLLM Framework | Claude 3 Haiku (Student Model) | Claude 3 Sonnet (Student Model) | GPT-3.5-Turbo (Student Model) | LLaMA-3-8B (Student Model) |
|---|---|---|---|---|
| Claude 3 Opus | 81.9 (+6.0%) | 79.3 (+6.3%) | 68.8 (+11.5%) | 71.7 (+9.5%) |
| GPT-4 | 79.4 (+3.5%) | 77.8 (+4.8%) | 67.3 (+10.0%) | 69.9 (+7.7%) |
| LLaMA-3-70B | 83.6 (+7.7%) | 82.1 (+9.1%) | 71.5 (+14.2%) | 75.2 (+13.0%) |
| GPT-4o | 84.6 (+8.1%) | 82.9 (+8.3%) | 72.5 (+13.9%) | 76.1 (+12.4%) |
| TeachLM | 80.8 (+5.2%) | 79.6 (+5.5%) | 70.1 (+11.7%) | 73.4 (+10.3%) |

| Teacher Model with SP-TeachLLM Framework | Claude 3 Haiku (Student Model) | Claude 3 Sonnet (Student Model) | GPT-3.5-Turbo (Student Model) | LLaMA-3-8B (Student Model) |
|---|---|---|---|---|
| Claude 3 Opus | 85.2 (+4.8%) | 84.7 (+5.3%) | 66.4 (+14.2%) | 72.5 (+15.7%) |
| GPT-4 | 83.7 (+3.3%) | 83.1 (+3.7%) | 64.9 (+12.7%) | 71.0 (+14.2%) |
| LLaMA-3-70B | 86.3 (+5.9%) | 85.7 (+6.3%) | 69.3 (+17.1%) | 75.9 (+19.1%) |
| GPT-4o | 87.5 (+7.1%) | 86.8 (+7.8%) | 70.4 (+16.2%) | 77.8 (+18.4%) |
| TeachLM | 84.5 (+4.1%) | 84.1 (+4.6%) | 67.8 (+13.5%) | 73.2 (+14.8%) |

| Configuration | Claude 3 Haiku | Claude 3 Sonnet | GPT-3.5-Turbo | LLaMA-3-8B |
|---|---|---|---|---|
| Baseline | 83.6% | 82.1% | 71.5% | 75.2% |
| No SSM | 77.5% (−6.1%) | 76.3% (−5.8%) | 65.4% (−6.1%) | 69.0% (−6.2%) |
| No CDM | 79.2% (−4.4%) | 78.1% (−4.0%) | 66.8% (−4.7%) | 70.8% (−4.4%) |
| No KEMM | 80.3% (−3.3%) | 79.0% (−3.1%) | 68.0% (−3.5%) | 72.0% (−3.2%) |
| No MGM | 81.2% (−2.4%) | 80.1% (−2.0%) | 69.0% (−2.5%) | 73.0% (−2.2%) |
| No TRM | 82.1% (−1.5%) | 81.0% (−1.1%) | 70.3% (−1.2%) | 74.2% (−1.0%) |
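The parenthesised deltas in the ablation table are consistent with simple differences from the Baseline row, in percentage points. As a rough worked check, the sketch below recomputes those drops from the tabulated scores and ranks the modules by mean drop across the four student models. The scores are copied from the table; the function names and the choice of an unweighted mean as the summary are assumptions made for illustration, not part of the reported analysis.

```python
# Accuracy (%) copied from the ablation table, in column order:
# Claude 3 Haiku, Claude 3 Sonnet, GPT-3.5-Turbo, LLaMA-3-8B.
BASELINE = [83.6, 82.1, 71.5, 75.2]
ABLATED = {
    "No SSM": [77.5, 76.3, 65.4, 69.0],
    "No CDM": [79.2, 78.1, 66.8, 70.8],
    "No KEMM": [80.3, 79.0, 68.0, 72.0],
    "No MGM": [81.2, 80.1, 69.0, 73.0],
    "No TRM": [82.1, 81.0, 70.3, 74.2],
}


def mean_drop(scores, baseline=BASELINE):
    """Mean accuracy drop versus baseline, in percentage points."""
    drops = [b - s for b, s in zip(baseline, scores)]
    return sum(drops) / len(drops)


def rank_modules(ablated=ABLATED):
    """Sort configurations from largest to smallest mean drop."""
    ranked = ((name, mean_drop(scores)) for name, scores in ablated.items())
    return sorted(ranked, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, drop in rank_modules():
        print(f"{name}: {drop:.2f} points below baseline on average")
```

With these numbers, removing the SSM costs the most accuracy on average and removing the TRM the least, with CDM, KEMM, and MGM in between, in that order.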

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, S.; Sun, Y.; Yu, X. SP-TeachLLM: An LLM-Driven Framework for Personalized and Adaptive Programming Education. Information 2025, 16, 1045. https://doi.org/10.3390/info16121045
