Multimodal Models in Healthcare: Methods, Challenges, and Future Directions for Enhanced Clinical Decision Support

Abstract

1. Introduction

2. Related Literature

3. Methodology

- RQ1: What are the current state-of-the-art multimodal models used in healthcare applications, and how are they designed?

- RQ2: Which data modalities are most commonly integrated, and what fusion techniques are utilized?

- RQ3: What datasets and evaluation metrics are used to assess the performance of these models?

- RQ4: What are the key challenges and future research directions in developing multimodal models for healthcare?

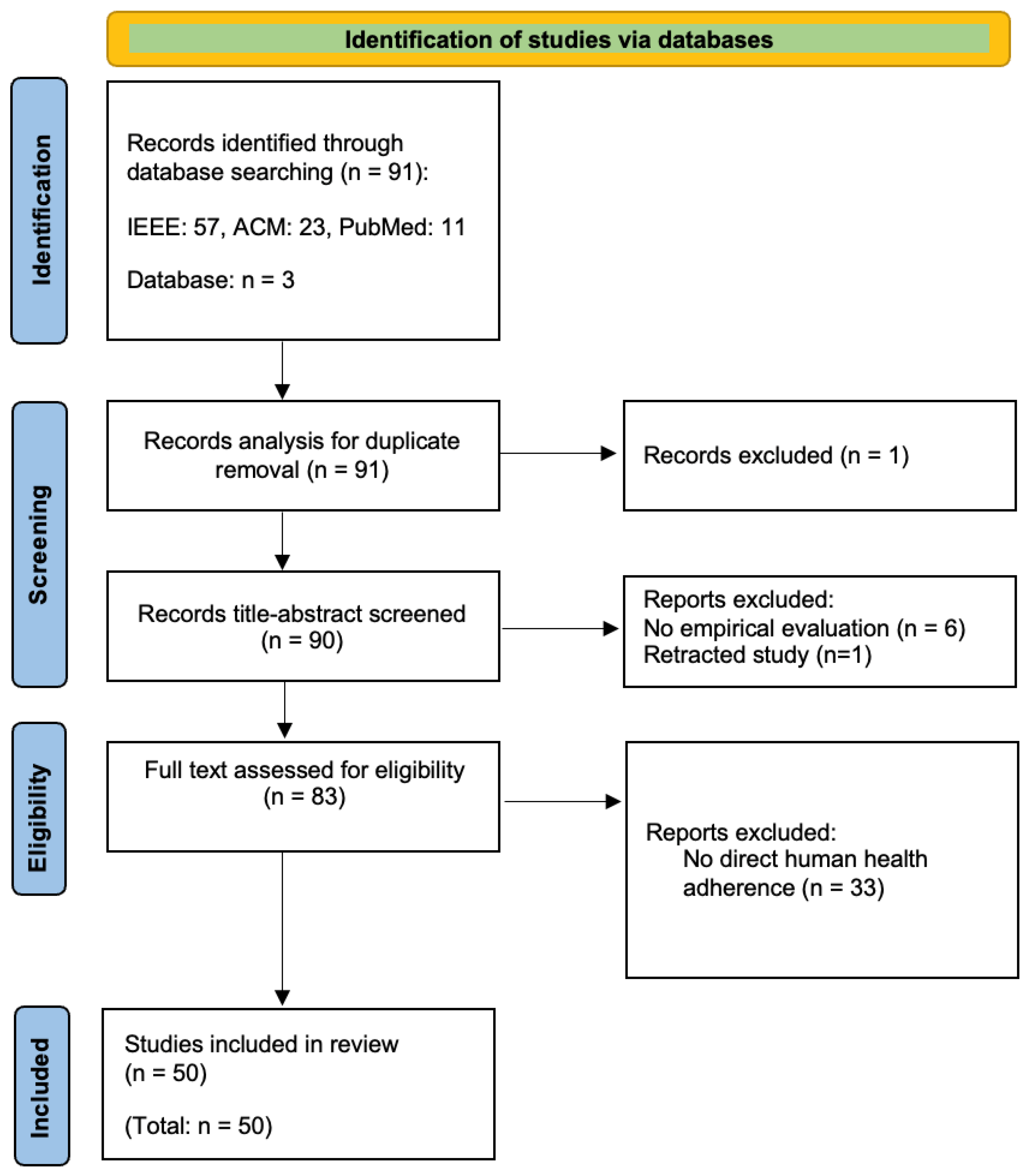

3.1. Search Databases and Queries

3.1.1. IEEE Xplore

("Document Title": Multimodal model) AND (("All Metadata": health) OR (("All Metadata": medical image) AND ("All Metadata": diagnosis)))

3.1.2. ACM Digital Library

("Document Title": Multimodal model) AND (("All Metadata": health) OR (("All Metadata": medical image) AND ("All Metadata": diagnosis)))

3.1.3. PubMed

("multimodal model"[Title]) AND (("health") OR ("medical image") OR ("diagnosis"))
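The PubMed query can also be issued programmatically. The sketch below is not the authors' documented procedure. It builds an NCBI E-utilities ESearch request URL for the same Boolean query and restricts publication dates to the review window given in the inclusion criteria. The `retmax` value is an arbitrary assumption, and the function name is hypothetical.

```python
from urllib.parse import urlencode

# Public NCBI E-utilities ESearch endpoint for querying PubMed.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

def build_pubmed_search_url(retmax: int = 500) -> str:
    """Return an ESearch URL for the review's PubMed query (illustrative sketch)."""
    # Same Boolean structure as the query above, written with straight quotes.
    term = ('("multimodal model"[Title]) AND '
            '(("health") OR ("medical image") OR ("diagnosis"))')
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "pdat",       # filter on publication date
        "mindate": "2020/01/01",  # start of the review window (assumed granularity)
        "maxdate": "2024/12/31",  # end of the review window
        "retmax": retmax,         # maximum number of PMIDs returned (assumed value)
        "retmode": "json",
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"

print(build_pubmed_search_url())
```

Fetching the URL returns a JSON list of PMIDs. Those records would still need the manual screening described in the next subsection.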

3.2. Inclusion and Exclusion Criteria

- Inclusion Criteria: This review includes peer-reviewed articles, conference papers, and early access articles published between January 2020 and December 2024, focusing on multimodal models applied to healthcare, particularly in health monitoring, medical imaging, and diagnostics.

- Exclusion Criteria: This review excludes non-peer-reviewed publications (e.g., keynote speeches, editorials, magazine articles) and studies unrelated to multimodal models or healthcare applications.
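Applied to the retrieved records, these criteria act as a simple filter. The minimal sketch below makes that filter explicit. The `Record` fields, publication-type labels, and example records are hypothetical. The two topical flags stand in for reviewer judgements and are not computed automatically.

```python
from dataclasses import dataclass

# Peer-reviewed publication types admitted by the inclusion criteria; anything
# else (keynotes, editorials, magazine articles, ...) is excluded.
INCLUDED_TYPES = {"journal", "conference", "early_access"}

@dataclass
class Record:
    """Hypothetical bibliographic record; field names are illustrative."""
    title: str
    year: int
    pub_type: str      # e.g. "journal", "conference", "editorial"
    multimodal: bool   # reviewer judgement: is a multimodal model studied?
    healthcare: bool   # reviewer judgement: is the application healthcare?

def passes_screening(r: Record) -> bool:
    """Keep peer-reviewed 2020-2024 records on multimodal healthcare models."""
    if r.pub_type not in INCLUDED_TYPES:
        return False
    if not (2020 <= r.year <= 2024):
        return False
    return r.multimodal and r.healthcare

# Made-up records to show each exclusion path.
records = [
    Record("Multimodal model for stroke outcome", 2023, "conference", True, True),
    Record("Editorial on AI in radiology", 2023, "editorial", False, True),
    Record("Multimodal sentiment analysis", 2022, "journal", True, False),
    Record("Multimodal MRI biomarkers", 2012, "journal", True, True),
]
kept = [r.title for r in records if passes_screening(r)]
print(kept)  # ['Multimodal model for stroke outcome']
```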

3.3. Search Outcomes

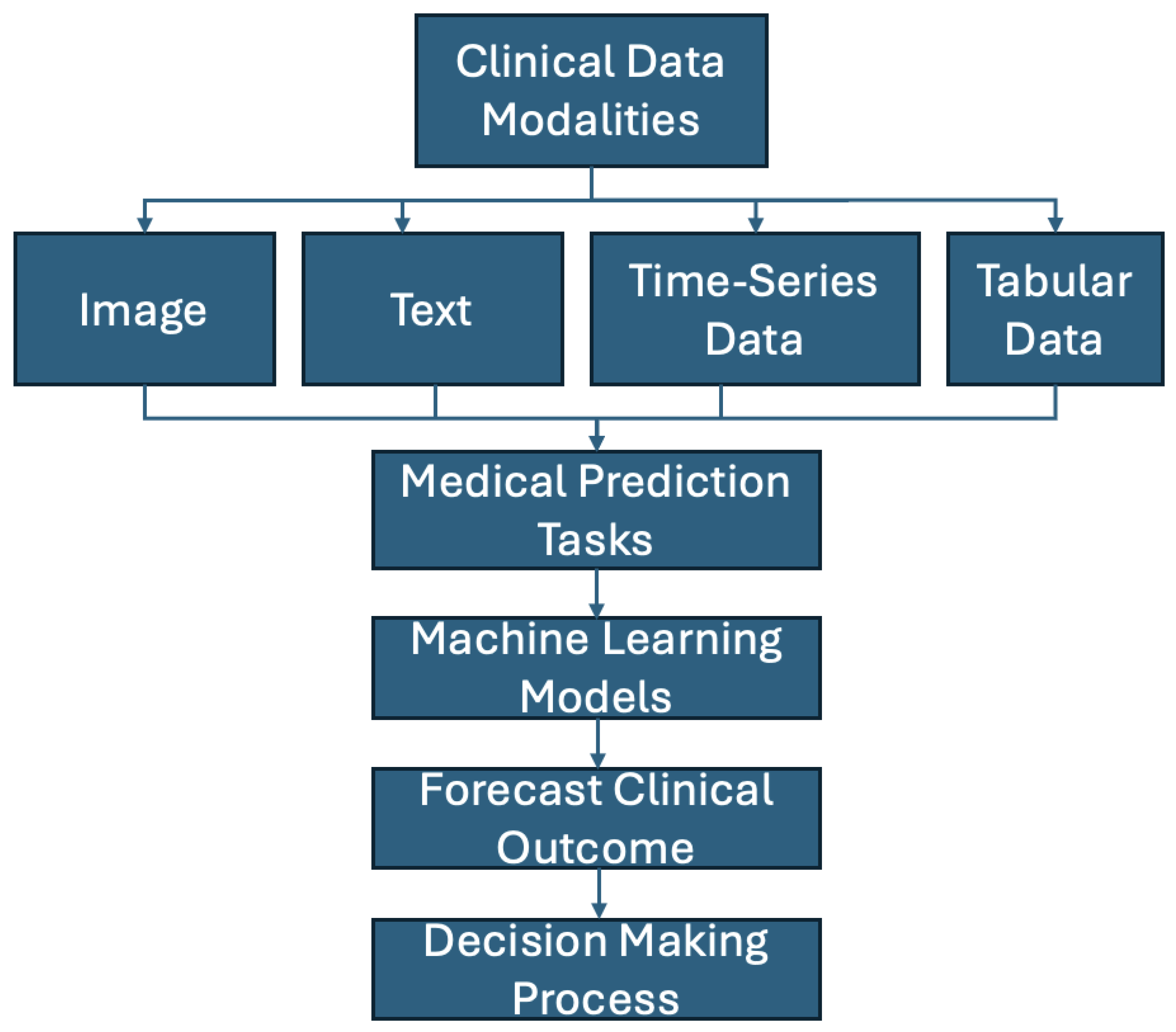

4. Data Modalities

4.1. Image Data

4.1.1. Magnetic Resonance Imaging (MRI)

4.1.2. X-Ray Imaging

4.1.3. Computed Tomography (CT)

4.1.4. Ultrasound

4.1.5. Positron Emission Tomography (PET)

4.1.6. Single Photon Emission Computed Tomography (SPECT)

4.2. Text Data

4.3. Time-Series Data

4.4. Tabular Data

5. Results

- RQ1: What are the current state-of-the-art multimodal models used in healthcare applications, and how are they designed?

- RQ2: What modalities are commonly integrated in these models, and what fusion techniques are employed?

- RQ3: What datasets and evaluation metrics are used to assess the performance of these models?

- RQ4: What are the key challenges and future research directions in developing multimodal models for healthcare?

Model Comparisons

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, Y.; Yin, C.; Zhang, P. Multimodal risk prediction with physiological signals, medical images and clinical notes. Heliyon 2024, 10, e26772.

- Yuan, M.; Bao, P.; Yuan, J.; Shen, Y.; Chen, Z.; Xie, Y.; Zhao, J.; Li, Q.; Chen, Y.; Zhang, L.; et al. Large language models illuminate a progressive pathway to artificial intelligent healthcare assistant. Med. Plus 2024, 1, 100030.

- Henriksson, A.; Pawar, Y.; Hedberg, P.; Nauclér, P. Multimodal fine-tuning of clinical language models for predicting COVID-19 outcomes. Artif. Intell. Med. 2023, 146, 102695.

- Behrad, F.; Saniee Abadeh, M. An overview of deep learning methods for multimodal medical data mining. Expert Syst. Appl. 2022, 200, 117006.

- Meskó, B. The impact of multimodal large language models on health care’s future. J. Med. Internet Res. 2023, 25, e52865.

- Xu, X.; Li, J.; Zhu, Z.; Zhao, L.; Wang, H.; Song, C.; Chen, Y.; Zhao, Q.; Yang, J.; Pei, Y. A comprehensive review on synergy of multi-modal data and AI technologies in medical diagnosis. Bioengineering 2024, 11, 219.

- Shetty, S.; Ananthanarayana, V.S.; Mahale, A. Comprehensive review of multimodal medical data analysis: Open issues and future research directions. Acta Inform. Pragensia 2022, 11, 423–457.

- Tian, D.; Jiang, S.; Zhang, L.; Lu, X.; Xu, Y. The role of large language models in medical image processing: A narrative review. Quant. Imaging Med. Surg. 2024, 14, 1108–1121.

- Cai, Q.; Wang, H.; Li, Z.; Liu, X. A survey on multimodal data-driven smart healthcare systems: Approaches and applications. IEEE Access 2019, 7, 133583–133599.

- Shaik, T.; Tao, X.; Li, L.; Xie, H.; Velásquez, J.D. A survey of multimodal information fusion for smart healthcare: Mapping the journey from data to wisdom. Inf. Fusion 2024, 102, 102040.

- Wang, X.; He, F.; Jin, S.; Yang, Y.; Wang, Y.; Qu, H. M2Lens: Visualizing and explaining multimodal models for sentiment analysis. IEEE Trans. Vis. Comput. Graph. 2022, 28, 802–812.

- Haleem, M.S.; Ekuban, A.; Antonini, A.; Pagliara, S.; Pecchia, L.; Allocca, C. Deep-learning-driven techniques for real-time multimodal health and physical data synthesis. Electronics 2023, 12, 1989.

- Liu, Z.; Ding, L.; He, B. Integration of EEG/MEG with MRI and fMRI. IEEE Eng. Med. Biol. Mag. 2006, 25, 46–53.

- Lin, A.L.; Laird, A.R.; Fox, P.T.; Gao, J.H. Multimodal MRI neuroimaging biomarkers for cognitive normal adults, amnestic mild cognitive impairment, and Alzheimer’s disease. Neurol. Res. Int. 2012, 2012, 907409.

- Zhou, Y.; Song, Z.; Han, X.; Li, H.; Tang, X. Prediction of Alzheimer’s disease progression based on magnetic resonance imaging. ACS Chem. Neurosci. 2021, 12, 4209–4223.

- Esteva, A.; Chou, K.; Yeung, S.; Naik, N.; Madani, A.; Mottaghi, A.; Liu, Y.; Topol, E.; Dean, J.; Socher, R. Deep learning-enabled medical computer vision. NPJ Digit. Med. 2021, 4, 5.

- Thion Ming, C.; Omar, Z.; Mahmood, N.H.A.; Kadiman, S. A literature survey of ultrasound- and computed-tomography-based cardiac image registration. J. Teknol. Sci. Eng. 2015, 74, 93–101.

- Huang, S.C.; Pareek, A.; Zamanian, R.; Banerjee, I.; Lungren, M.P. Multimodal fusion with deep neural networks for leveraging CT imaging and electronic health record: A case-study in pulmonary embolism detection. Sci. Rep. 2020, 10, 22147.

- Mouches, P.; Wilms, M.; Rajashekar, D.; Langner, S.; Forkert, N.D. Multimodal biological brain age prediction using magnetic resonance imaging and angiography with the identification of predictive regions. Hum. Brain Mapp. 2022, 43, 2554–2566.

- Wei, L.; Osman, S.; Hatt, M.; El Naqa, I. Machine learning for radiomics-based multimodality and multiparametric modeling. Q. J. Nucl. Med. Mol. Imaging 2019, 63, 353–360.

- Lv, J.; Roy, S.; Xie, M.; Yang, X.; Guo, B. Contrast agents of magnetic resonance imaging and future perspective. Nanomaterials 2023, 13, 2003.

- Jing, B.; Xie, P.; Xing, E.P. On the Automatic Generation of Medical Imaging Reports. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Volume 1: Long Papers, pp. 2577–2586.

- Norgeot, B.; Glicksberg, B.S.; Trupin, L.; Lituiev, D.; Gianfrancesco, M.; Oskotsky, B.; Schmajuk, G.; Yazdany, J.; Butte, A.J. Assessment of a Deep Learning Model Based on Electronic Health Record Data to Forecast Clinical Outcomes in Patients with Rheumatoid Arthritis. JAMA Netw. Open 2019, 2, e190606.

- Zambrano Chaves, J.M.; Wentland, A.L.; Desai, A.D.; Banerjee, I.; Kaur, G.; Correa, R.; Boutin, R.D.; Maron, D.J.; Rodriguez, F.; Sandhu, A.T.; et al. Opportunistic assessment of ischemic heart disease risk using abdominopelvic computed tomography and medical record data: A multimodal explainable artificial intelligence approach. Sci. Rep. 2023, 13, 21034.

- Sharma, H.; Drukker, L.; Chatelain, P.; Droste, R.; Papageorghiou, A.T.; Noble, J.A. Knowledge representation and learning of operator clinical workflow from full-length routine fetal ultrasound scan videos. Med. Image Anal. 2021, 69, 101973.

- Mansour, N.; Bas, M.; Stock, K.; Strassen, U.; Hofauer, B.; Knopf, A. Multimodal Ultrasonographic Pathway of Parotid Gland Lesions. Ultraschall Med.-Eur. J. Ultrasound 2015, 38, 166–173.

- Sargsyan, A.E.; Hamilton, D.R.; Jones, J.A.; Melton, S.L.; Whitson, P.A.; Kirkpatrick, A.W.; Martin, D.S.; Dulchavsky, S.A. FAST at MACH 20: Clinical Ultrasound Aboard the International Space Station. J. Trauma Acute Care Surg. 2005, 58, 35–39.

- Cherry, S.R.; Jones, T.; Karp, J.S.; Qi, J.; Moses, W.W.; Badawi, R.D. Total-Body PET: Maximizing Sensitivity to Create New Opportunities for Clinical Research and Patient Care. J. Nucl. Med. Off. Publ. Soc. Nucl. Med. 2018, 59, 3–12.

- Barbas, A.S.; Li, Y.; Zair, M.; Van Den Boom, P.; Famure, O.; Dib, M.; Laurence, J.M.; Kim, S.J.; Ghanekar, A. CT volumetry is superior to nuclear renography for prediction of residual kidney function in living donors. Clin. Transplant. 2016, 30, 1028–1035.

- Ye, J.; Hai, J.; Song, J.; Wang, Z. Multimodal Data Hybrid Fusion and Natural Language Processing for Clinical Prediction Models. medRxiv 2023.

- Lin, W.C.; Chen, A.X.; Song, J.; Weiskopf, N.G.; Chiang, M.F.; Hribar, M.R. Prediction of multiclass surgical outcomes in glaucoma using multimodal deep learning based on free-text operative notes and structured EHR data. J. Am. Med. Inform. Assoc. 2024, 31, 456–464.

- Deng, Y.; Liu, S.; Wang, Z.; Wang, Y.; Jiang, Y.; Liu, B. Explainable time-series deep learning models for the prediction of mortality, prolonged length of stay and 30-day readmission in intensive care patients. Front. Med. 2022, 9, 933037.

- Bandara, K.; Bergmeir, C.; Hewamalage, H. LSTM-MSNet: Leveraging Forecasts on Sets of Related Time Series with Multiple Seasonal Patterns. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 1586–1599.

- Fan, C.; Zhang, Y.; Pan, Y.; Li, X.; Zhang, C.; Yuan, R.; Wu, D.; Wang, W.; Pei, J.; Huang, H. Multi-Horizon Time Series Forecasting with Temporal Attention Learning. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2527–2535.

- Jia, F.; Wang, K.; Zheng, Y.; Cao, D.; Liu, Y. GPT4MTS: Prompt-based Large Language Model for Multimodal Time-Series Forecasting. Proc. AAAI Conf. Artif. Intell. 2024, 38, 23343–23351.

- Yang, H.; Kuang, L.; Xia, F. Multimodal temporal-clinical note network for mortality prediction. J. Biomed. Semant. 2021, 12, 3.

- Wang, Z.; Gao, C.; Xiao, C.; Sun, J. MediTab: Scaling Medical Tabular Data Predictors via Data Consolidation, Enrichment, and Refinement. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, Jeju, Republic of Korea, 3–9 August 2024.

- Soenksen, L.R.; Ma, Y.; Zeng, C.; Boussioux, L.; Villalobos Carballo, K.; Na, L.; Wiberg, H.M.; Li, M.L.; Fuentes, I.; Bertsimas, D. Integrated multimodal artificial intelligence framework for healthcare applications. NPJ Digit. Med. 2022, 5, 149.

- Singh, S.; Anand, R.S. Multimodal medical image sensor fusion model using sparse K-SVD dictionary learning in nonsubsampled shearlet domain. IEEE Trans. Instrum. Meas. 2019, 69, 593–607.

- Rawls, E.; Kummerfeld, E.; Zilverstand, A. An integrated multimodal model of alcohol use disorder generated by data-driven causal discovery analysis. Commun. Biol. 2021, 4, 435.

- Dong, L.; Wang, Z.; Zhang, M.; Gao, B. A study on a 3D multimodal magnetic resonance brain tumor image segmentation model. In Proceedings of the 2021 IEEE 23rd International Conference on High Performance Computing & Communications/7th International Conference on Data Science & Systems/19th International Conference on Smart City/7th International Conference on Dependability in Sensor, Cloud & Big Data Systems & Application (HPCC/DSS/SmartCity/DependSys), Haikou, China, 20–22 December 2021; pp. 2139–2143.

- Zhong, S.; Ren, J.X.; Yu, Z.P.; Peng, Y.D.; Yu, C.W.; Deng, D.; Xie, Y.; He, Z.Q.; Duan, H.; Wu, B.; et al. Predicting glioblastoma molecular subtypes and prognosis with a multimodal model integrating convolutional neural network, radiomics, and semantics. J. Neurosurg. 2022, 139, 305–314.

- Chen, D.; Zhang, L.; Ma, C. A multimodal diagnosis predictive model of Alzheimer’s disease with few-shot learning. In Proceedings of the 2020 International Conference on Public Health and Data Science (ICPHDS), Guangzhou, China, 20–22 November 2020; pp. 273–277.

- Sekhar, U.S.; Vyas, N.; Dutt, V.; Kumar, A. Multimodal Neuroimaging Data in Early Detection of Alzheimer’s Disease: Exploring the Role of Ensemble Models and GAN Algorithm. In Proceedings of the 2023 International Conference on Circuit Power and Computing Technologies (ICCPCT), Kollam, India, 10–11 August 2023; pp. 1664–1669.

- Wu, S.; Chen, C.; Luo, H.; Xu, Y. GIRUS-net: A Multimodal Deep Learning Model Identifying Imaging and Genetic Biomarkers Linked to Alzheimer’s Disease Severity. In Proceedings of the 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023; pp. 1–4.

- Falakshahi, H.; Rokham, H.; Miller, R.; Liu, J.; Calhoun, V.D. Network Differential in Gaussian Graphical Models from Multimodal Neuroimaging Data. In Proceedings of the 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023; pp. 1–6.

- Chang, H.H. Multimodal Image Registration Using a Viscous Fluid Model with the Bhattacharyya Distance. In Proceedings of the 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023; pp. 1–4.

- Zhou, Q.; Zou, H.; Luo, F.; Qiu, Y. RHViT: A Robust Hierarchical Transformer for 3D Multimodal Brain Tumor Segmentation Using Biased Masked Image Modeling Pre-training. In Proceedings of the 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Istanbul, Turkey, 5–8 December 2023; pp. 1784–1791.

- Rondinella, A.; Chen, C.; Jiang, Q.; Gu, Y.; Xu, F. Enhancing Multiple Sclerosis Lesion Segmentation in Multimodal MRI Scans with Diffusion Models. In Proceedings of the 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Istanbul, Turkey, 5–8 December 2023; pp. 3733–3740.

- Hatami, N.; Mechtouff, L.; Rousseau, D.; Cho, T.-H.; Eker, O.; Berthezene, Y.; Frindel, C. A Novel Autoencoders-LSTM Model for Stroke Outcome Prediction Using Multimodal MRI Data. In Proceedings of the 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI), Cartagena, Colombia, 18–21 April 2023; pp. 1–5.

- Chen, Z.; Liu, Y.; Wang, J.; Ma, Y.; Liu, Y.; Ye, H.; Liu, B. Enhanced Multimodal Low-Rank Embedding-Based Feature Selection Model for Multimodal Alzheimer’s Disease Diagnosis. IEEE Trans. Med. Imaging 2024, 44, 815–827.

- Bouzara, A.; Kermi, A. Automated Brain Tumor Segmentation in Multimodal MRI Scans Using a Multi-Encoder U-Net Model. In Proceedings of the 2024 8th International Conference on Image and Signal Processing and their Applications (ISPA), Biskra, Algeria, 21–22 April 2024; pp. 1–8.

- Ahmad, N.; Chen, Y.T. Enhanced Deep Learning Model Performance in 3D Multimodal Brain Tumor Segmentation with Gabor Filter. In Proceedings of the 2024 10th International Conference on Applied System Innovation (ICASI), Kyoto, Japan, 17–21 April 2024; pp. 406–408.

- Jana, M.; Das, A. Multimodal Medical Image Fusion Using Deep-Learning Models in Fuzzy Domain. In Proceedings of the 2023 IEEE 5th International Conference on Cybernetics, Cognition and Machine Learning Applications (ICCCMLA), Hamburg, Germany, 7–8 October 2023; pp. 514–519.

- Ouyang, Y.; Wu, Y.; Wang, S.; Qu, H. Leveraging Historical Medical Records as a Proxy via Multimodal Modeling and Visualization to Enrich Medical Diagnostic Learning. IEEE Trans. Vis. Comput. Graph. 2023, 30, 1238–1248.

- Liu, X.; Qiu, H.; Li, M.; Yu, Z.; Yang, Y.; Yan, Y. Application of Multimodal Fusion Deep Learning Model in Disease Recognition. In Proceedings of the 2024 IEEE 2nd International Conference on Sensors, Electronics and Computer Engineering (ICSECE), Jinzhou, China, 29–31 August 2024; pp. 1246–1250.

- Junior, G.V.M.; Santos, R.L.d.S.; Vogado, L.H.; de Paiva, A.C.; dos Santos Neto, P.d.A. XRaySwinGen: Automatic Medical Reporting for X-ray Exams with Multimodal Model. Heliyon 2024, 10, e27516.

- Cui, K.; Changrong, S.; Maomin, Y.; Hui, Z.; Xiuxiang, L. Development of an Artificial Intelligence-Based Multimodal Model for Assisting in the Diagnosis of Necrotizing Enterocolitis in Newborns: A Retrospective Study. Front. Pediatr. 2024, 12, 1388320.

- Yin, M.; Lin, J.; Wang, Y.; Liu, Y.; Zhang, R.; Duan, W.; Zhou, Z.; Zhu, S.; Gao, J.; Liu, L.; et al. Development and Validation of a Multimodal Model in Predicting Severe Acute Pancreatitis Based on Radiomics and Deep Learning. Int. J. Med. Inform. 2024, 184, 105341.

- Qiu, X.; Wang, C.; Li, B.; Tong, H.; Tan, X.; Yang, L.; Tao, J.; Huang, J. An Audio-Semantic Multimodal Model for Automatic Obstructive Sleep Apnea-Hypopnea Syndrome Classification via Multi-Feature Analysis of Snoring Sounds. Front. Neurosci. 2024, 18, 1336307.

- Chen, X.; Ke, J.; Zhang, Y.; Gou, J.; Shen, A.; Wan, S. Multimodal Distillation Pre-Training Model for Ultrasound Dynamic Images Annotation. IEEE J. Biomed. Health Inform. 2024, 29, 3124–3136.

- Thota, P.; Veerla, J.P.; Guttikonda, P.S.; Nasr, M.S.; Nilizadeh, S.; Luber, J.M. Demonstration of an Adversarial Attack Against a Multimodal Vision Language Model for Pathology Imaging. In Proceedings of the 2024 IEEE International Symposium on Biomedical Imaging (ISBI), Athens, Greece, 27–30 May 2024; pp. 1–5.

- Hassan, A.; Sirshar, M.; Akram, M.U.; Farooq, M.U. Analysis of Multimodal Representation Learning Across Medical Images and Reports Using Multiple Vision and Language Pre-Trained Models. In Proceedings of the 2022 19th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 12–16 January 2022; pp. 337–342.

- Fahmy, G.A.; Abd-Elrahman, E.; Zorkany, M. COVID-19 Detection Using Multimodal and Multi-Model Ensemble-Based Deep Learning Technique. In Proceedings of the 2022 39th National Radio Science Conference (NRSC), Cairo, Egypt, 29 November–1 December 2022; Volume 1, pp. 241–253.

- Chen, W.; Li, Y.; Ou, B.; Tan, P. Collaborative Multimodal Diagnostic: Fusion of Pathological Labels and Vision-Language Model. In Proceedings of the 2023 2nd International Conference on Health Big Data and Intelligent Healthcare (ICHIH), Zhuhai, China, 27–29 October 2023; pp. 119–126.

- Silva, L.E.V.; Shi, L.; Gaudio, H.A.; Padmanabhan, V.; Morgan, R.W.; Slovis, J.M.; Forti, R.M.; Morton, S.; Lin, Y.; Laurent, G.H.; et al. Prediction of Return of Spontaneous Circulation in a Pediatric Swine Model of Cardiac Arrest Using Low-Resolution Multimodal Physiological Waveforms. IEEE J. Biomed. Health Inform. 2023, 27, 4719–4727.

- Owais, M.; Zubair, M.; Seneviratne, L.D.; Werghi, N.; Hussain, I. Unified Synergistic Deep Learning Framework for Multimodal 2-D and 3-D Radiographic Data Analysis: Model Development and Validation. IEEE Access 2024, 12, 159688–159705.

- Li, C.-Y.; Wu, J.-T.; Hsu, C.; Lin, M.-Y.; Kang, Y. Understanding eGFR Trajectories and Kidney Function Decline via Large Multimodal Models. In Proceedings of the 2024 IEEE 7th International Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, 7–9 August 2024; pp. 667–673.

- Chen, L.; Zhang, T.; Li, Z.; Deng, J.; Li, S.; Hu, J. A Multimodal Deep Learning Model for Preoperative Risk Prediction of Follicular Thyroid Carcinoma. In Proceedings of the 2023 IEEE International Conference on E-health Networking, Application & Services (Healthcom), Chongqing, China, 15–17 December 2023; pp. 188–193.

- Souza, L.A.; Pacheco, A.G.C.; de Angelo, G.C.; de Lacerda, G.B.; da Silva, J.S.; Sampaio, F.N.; Velozo, B.M.; de Albuquerque, C.S.; Ribeiro, P.L.; Lins, I.D. LiwTERM: A Lightweight Transformer-Based Model for Dermatological Multimodal Lesion Detection. In Proceedings of the 2024 37th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Manaus, Brazil, 30 September–3 October 2024; pp. 1–6.

- Vo, H.Q.; Wang, L.; Wang, X.; Liu, R.; Sun, Y.; Li, X.; Chen, Z.; Li, Z. Frozen Large-Scale Pretrained Vision-Language Models Are the Effective Foundational Backbone for Multimodal Breast Cancer Prediction. IEEE J. Biomed. Health Inform. 2024, 29, 3234–3246.

- Yin, H.; Tao, L.; Zuo, R.; Yin, P.; Li, Y.; Xu, Y.; Liu, M. A Multimodal Fusion Model for Breast Tumor Segmentation in Ultrasound Images. In Proceedings of the 2024 IEEE International Symposium on Biomedical Imaging (ISBI), Athens, Greece, 27–30 May 2024; pp. 1–4.

- Wei, C.; Wang, H.; Shi, X.; Li, X.; Wang, K.; Zhou, G. A Finetuned Multimodal Deep Learning Model for X-ray Image Diagnosis. In Proceedings of the 2024 6th International Conference on Communications, Information System and Computer Engineering (CISCE), Guangzhou, China, 10–12 May 2024; pp. 810–813.

- Cerdá-Alberich, L.; Veiga-Canuto, D.; Garcia, V.; Blasco, L.; Martinez-Sanchis, S. Harnessing Multimodal Clinical Predictive Models for Childhood Tumors. In Proceedings of the 2023 IEEE EMBS Special Topic Conference on Data Science and Engineering in Healthcare, Medicine and Biology, Julians, Malta, 6–9 December 2023; pp. 71–72.

- Sakhovskiy, A.; Tutubalina, E. Multimodal Model with Text and Drug Embeddings for Adverse Drug Reaction Classification. J. Biomed. Inform. 2022, 135, 104182.

- Li, Y.; Mamouei, M.; Salimi-Khorshidi, G.; Rao, S.; Hassaine, A.; Canoy, D.; Lukasiewicz, T.; Rahimi, K. Hi-BEHRT: Hierarchical Transformer-Based Model for Accurate Prediction of Clinical Events Using Multimodal Longitudinal Electronic Health Records. IEEE J. Biomed. Health Inform. 2022, 27, 1106–1117.

- Zhang, K.; Niu, K.; Zhou, Y.; Tai, W.; Lu, G. MedCT-BERT: Multimodal Mortality Prediction Using Medical ConvTransformer-BERT Model. In Proceedings of the 2023 IEEE 35th International Conference on Tools with Artificial Intelligence (ICTAI), Atlanta, GA, USA, 6–8 November 2023; pp. 700–707.

- Duong, T.T.; Uher, D.; Su, H.; Küçükdeveci, A.A.; Almusalihi, R.; Hahne, F.; Schiessl, M.; Dürkop, K.; Bleser, G. Accurate COP Trajectory Estimation in Healthy and Pathological Gait Using Multimodal Instrumented Insoles and Deep Learning Models. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 4801–4811.

- Bhattacharjee, A.; Anwar, S.; Whitinger, L.; Loghmani, M.T. Multimodal Sequence Classification of Force-Based Instrumented Hand Manipulation Motions Using LSTM-RNN Deep Learning Models. In Proceedings of the 2023 IEEE EMBS International Conference on Biomedical and Health Informatics (BHI), Pittsburgh, PA, USA, 15–18 October 2023; pp. 1–6.

- Liu, S.; Wang, X.; Hou, Y.; Li, G.; Wang, H.; Xu, H.; Xiang, Y.; Tang, B. Multimodal Data Matters: Language Model Pre-Training over Structured and Unstructured Electronic Health Records. IEEE J. Biomed. Health Inform. 2022, 27, 504–514.

- Winston, C.; Winston, C.N.; Winston, C.; Winston, C.; Winston, C. Multimodal Clinical Prediction with Unified Prompts and Pretrained Large-Language Models. In Proceedings of the 2024 IEEE 12th International Conference on Healthcare Informatics (ICHI), Orlando, FL, USA, 3–6 June 2024; pp. 679–683.

- Pawar, Y.; Henriksson, A.; Hägglund, M. Leveraging Clinical BERT in Multimodal Mortality Prediction Models for COVID-19. In Proceedings of the 2022 IEEE 35th International Symposium on Computer-Based Medical Systems (CBMS), Shenzhen, China, 21–23 July 2022; pp. 199–204.

- Niu, S.; Yin, Q.; Wang, C.; Feng, J. Label Dependent Attention Model for Disease Risk Prediction Using Multimodal Electronic Health Records. In Proceedings of the 2021 IEEE International Conference on Data Mining (ICDM), Auckland, New Zealand, 7–10 December 2021; pp. 449–458.

- Barbosa, S.P.; Marques, L.; Caldas, A.; Pimentel, R.; Cruz, J.G. Predictors of the Health-Related Quality of Life (HRQOL) in SF-36 in Knee Osteoarthritis Patients: A Multimodal Model with Moderators and Mediators. Cureus 2022, 14, e27339.

- Gupta, R.; Bhongade, A.; Gandhi, T.K. Multimodal Wearable Sensors-Based Stress and Affective States Prediction Model. In Proceedings of the 2023 9th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 17–18 March 2023; Volume 1, pp. 30–35.

- Dharia, S.Y.; Valderrama, C.E.; Camorlinga, S.G. Multimodal Deep Learning Model for Subject-Independent EEG-Based Emotion Recognition. In Proceedings of the 2023 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Regina, SK, Canada, 24–27 September 2023; pp. 105–110.

- Singh, A.; Holzer, N.; Götz, T.; Wittenberg, T.; Göb, S.; Sawant, S.S.; Salman, M.-M.; Pahl, J. Bio-Signal-Based Multimodal Fusion with Bilinear Model for Emotion Recognition. In Proceedings of the 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Istanbul, Turkey, 5–8 December 2023; pp. 4834–4839.

- Yamamoto, M.S.; Sadatnejad, K.; Tanaka, T.; Islam, M.R.; Dehais, F.; Tanaka, Y.; Lotte, F. Modeling Complex EEG Data Distribution on the Riemannian Manifold Toward Outlier Detection and Multimodal Classification. IEEE Trans. Biomed. Eng. 2024, 71, 377–387.

| Disease | Fusion Technique | Key Metrics | Strengths | Weaknesses | Ref. |
|---|---|---|---|---|---|
| Thyroid Cancer | Intermediate | Accuracy: 93% (Multimodal) | High overall accuracy with robust multimodal metrics | Limited to 323 patient observations | [69] |
| Skin Cancer | Late | PAD-UFES-20: BACC = 0.63 ± 0.02, ISIC 2019: BACC = 0.52 ± 0.02 | Moderate accuracy across large datasets with diverse modalities | Relatively lower accuracy compared to other models | [70] |
| Breast Cancer (Mammograms & EHR) | Intermediate | CBIS-DDSM: AUC = 0.830, EMBED: AUC = 0.809 | Strong AUC values with reliable multimodal fusion | Accuracy is not the highest among all models | [71] |
| Breast Cancer (B-mode & Elastography Ultrasound) | Intermediate | Dice: 89.96%, Sensitivity: 90.37%, Specificity: 97.87% | High specificity and sensitivity; excellent for detection tasks | Limited observations (287 image pairs) | [72] |
| Cancer Diagnosis (X-ray & Reports) | Intermediate | ROUGE-1: 0.6544, ROUGE-2: 0.4188, ROUGE-L: 0.6228 | Good performance in text-image alignment tasks | Lower accuracy metrics compared to classification models | [73] |
| Childhood Cancer (Neuroblastoma) | Intermediate | Accuracy: +15%, Precision: +20% | Combines imaging, clinical, and molecular data | Limited to neuroblastoma cases | [74] |
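Most of the scores in the table above follow standard definitions: Dice overlap for segmentation masks, sensitivity and specificity, balanced accuracy (BACC, the mean per-class recall), and ROC AUC. The minimal NumPy sketch below shows how they are usually computed. It is a generic illustration, not the evaluation code of the cited studies, and the example labels and scores are made up.

```python
import numpy as np

def confusion_counts(y_true, y_pred):
    """Return (TP, FP, TN, FN) for binary labels or binary masks of any shape."""
    t = np.asarray(y_true, dtype=bool).ravel()
    p = np.asarray(y_pred, dtype=bool).ravel()
    tp = int(np.sum(t & p))
    fp = int(np.sum(~t & p))
    tn = int(np.sum(~t & ~p))
    fn = int(np.sum(t & ~p))
    return tp, fp, tn, fn

def dice(y_true, y_pred):
    """Dice = 2TP / (2TP + FP + FN); undefined if both inputs are empty."""
    tp, fp, _, fn = confusion_counts(y_true, y_pred)
    return 2 * tp / (2 * tp + fp + fn)

def sensitivity_specificity(y_true, y_pred):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return tp / (tp + fn), tn / (tn + fp)

def balanced_accuracy(y_true, y_pred):
    """Mean per-class recall; for two classes this is (sensitivity + specificity) / 2."""
    t, p = np.asarray(y_true), np.asarray(y_pred)
    return float(np.mean([np.mean(p[t == c] == c) for c in np.unique(t)]))

def roc_auc(y_true, scores):
    """AUC = P(random positive scores higher than random negative); ties count 1/2."""
    t = np.asarray(y_true, dtype=bool)
    s = np.asarray(scores, dtype=float)
    pos, neg = s[t], s[~t]
    wins = (pos[:, None] > neg[None, :]).sum() + 0.5 * (pos[:, None] == neg[None, :]).sum()
    return float(wins / (pos.size * neg.size))

# Made-up example: 3 positives, 5 negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.1, 0.6, 0.5]

print(dice(y_true, y_pred))                     # 0.666...
print(sensitivity_specificity(y_true, y_pred))  # (0.666..., 0.8)
print(balanced_accuracy(y_true, y_pred))        # 0.733...
print(roc_auc(y_true, scores))                  # 0.866...
```

Dice and the confusion-matrix rates depend on a decision threshold, whereas AUC summarizes ranking quality across all thresholds. This is one reason the table's rows are not directly comparable.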

| Disease/Application | Fusion Technique | Key Metrics | Strengths | Weaknesses | Ref. |
|---|---|---|---|---|---|
| Neurological Disorders | Intermediate | Entropy, Mutual Information, etc. | Combines multiple imaging modalities for robust analysis. | Performance metrics not explicitly provided. | [39] |
| Alcohol Use Disorder | Early | RMSEA: 0.06, TLI: 0.91 | Significant network connectivity insights. | Limited to fMRI data; lacks multimodal fusion. | [40] |
| Brain Tumor (Gliomas) | Intermediate | Dice Similarity Coefficient (DSC) | High segmentation accuracy for multiple tumor types. | Focuses only on MRI imaging. | [41] |
| Glioblastoma | Early | Precision, Recall, Accuracy, F1-score, AUC | High accuracy for mutation and prognosis prediction. | Limited dataset size; requires validation on diverse populations. | [42] |
| Alzheimer’s Diagnosis (AD vs. NC) | Intermediate | Accuracy: 92.3%, AUC: 0.94 | Effective classification of Alzheimer’s vs. normal controls. | Does not address early diagnosis challenges. | [43] |
| Alzheimer’s Diagnosis (MCI) | Intermediate | Accuracy: 76.4%, AUC: 0.82 | Focus on mild cognitive impairment classification. | Lower accuracy compared to AD vs. NC. | [44] |
| Alzheimer’s Diagnosis (Ensemble Model) | Intermediate | F1: 82%, AUC-ROC: 0.93 | Ensemble model enhances classification accuracy. | Complex architecture may require extensive computational resources. | [45] |
| Schizophrenia | Intermediate | Graph Metrics | Graph modeling provides unique insights into connectivity patterns. | Detailed performance metrics are unavailable. | [46] |
| Brain Tumor Segmentation | Intermediate | Dice Score | High accuracy for tumor segmentation tasks. | Limited evaluation for different tumor types. | [47] |
| Multiple Sclerosis | Early | DSC: 0.7425, TPR: 0.7622, PPV: 0.7505 | Good segmentation performance with uncertainty handling. | Small dataset size limits generalizability. | [48] |
| Stroke Prediction | Intermediate | AUC: 0.71, ACC: 0.72, F1: 0.68 | Intermediate fusion improves prediction performance. | Limited by small cohort size. | [49] |
| Alzheimer’s Diagnosis (Severity) | Intermediate | 2-class F1: 0.86, 3-class F1: 0.50, 4-class F1: 0.40 | Focus on severity prediction with ordinal regression. | Performance decreases with increasing class complexity. | [50] |
| Emotion Recognition | Intermediate | SEED-IV: 67.33%, SEED-V: 72.36% | Fusion of EEG and eye-tracking data enhances emotion recognition. | Lower accuracy compared to other domains. | [51] |
| Brain Tumor (Gabor Filters) | Intermediate | Accuracy: 99.16%, mIoU: 0.804 | Gabor filters improve feature representation and accuracy. | May require domain-specific tuning. | [52] |
| General Brain Tasks | Intermediate | 4.27% improvement over baseline | Improved performance with Riemannian-based features. | Performance gains are relatively small. | [53] |
| Brain Imaging Registration | N/A | MI: 1.2527 ± 0.0510 | Numerical optimization ensures robust registration. | Not a learning-based approach; limited adaptability. | [86] |
| Alzheimer’s (NC vs. AD) | Intermediate | AUC: 0.962 | High classification accuracy for NC vs. AD. | Does not address multi-class scenarios. | [88] |
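Two rows in the table above ([39], [86]) report entropy or mutual information (MI) between images. MI is large when intensities in one image predict those in the other, which is why it serves as a similarity measure for multimodal registration. The sketch below uses a common joint-histogram (plug-in) estimator and rests on several assumptions: two co-registered images of the same shape, 32 bins, and natural logarithms. The cited studies may use different bin counts, log bases, or estimators, so absolute MI values are not directly comparable across papers. The synthetic images are made up.

```python
import numpy as np

def mutual_information(img_a, img_b, bins=32):
    """Joint-histogram (plug-in) estimate of MI, in nats, between two same-shape images."""
    joint, _, _ = np.histogram2d(np.ravel(img_a), np.ravel(img_b), bins=bins)
    p_ab = joint / joint.sum()               # joint probability table
    p_a = p_ab.sum(axis=1, keepdims=True)    # marginal of A, shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)    # marginal of B, shape (1, bins)
    nz = p_ab > 0                            # empty bins contribute 0; skip log(0)
    return float(np.sum(p_ab[nz] * np.log(p_ab[nz] / (p_a * p_b)[nz])))

# Synthetic example: a random "image", an aligned copy, and a shifted copy.
rng = np.random.default_rng(0)
fixed = rng.random((64, 64))
aligned = fixed.copy()
misaligned = np.roll(fixed, 5, axis=1)       # shift columns by 5 pixels

print(mutual_information(fixed, aligned))    # at most log(32), about 3.47
print(mutual_information(fixed, misaligned)) # much smaller; nonzero due to estimator bias
```

A registration method searches for the spatial transform that maximizes this quantity. For an identical copy, MI equals the binned entropy of the image.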

| Disease/Application | Fusion Technique | Key Metrics | Strengths | Weaknesses | Ref. |
|---|---|---|---|---|---|
| Adverse Drug Reactions (ADR) | Intermediate | F1: 0.61 (English), 0.57 (Russian), 8% gain (French) | Uses social media data for real-time ADR detection. | Limited dataset size; language-dependent performance. | [75] |
| General Disease Risk | Early | ROC AUC: 0.9036, Precision: 0.7906 | Effective multimodal fusion with time-series data. | Complex model architecture; high computational requirements. | [83] |
| Knee Osteoarthritis (KOA) | Intermediate | R² values | Integrates neurophysiological and demographic data. | Small sample size; limited generalizability. | [84] |
| Clinical Event Prediction | Early | N/A | Large cohort size; integrates primary and secondary care data. | Performance metrics not provided. | [76] |
| General Diagnostic Applications | Intermediate | QS: 0.999 | Performs well across multiple metrics; robust feature fusion. | Limited details on dataset diversity. | [54] |
| In-Hospital Mortality Prediction | Intermediate | AUC-ROC: 0.8921, AUC-PR: 0.5635 | Strong performance for ICU patient outcomes. | Limited to a specific patient group; recall is low. | [77] |
| Neuromuscular Conditions | Intermediate | RMSE: 0.51–0.59 cm (Healthy), 1.44–1.53 cm (Condition) | Accurate gait analysis using IMU data. | Small sample size; specific to neuromuscular disorders. | [78] |
| Soft Tissue Manipulation | Intermediate | Accuracy: 93.2%, AUC: 0.9876 | High classification accuracy for multimodal data. | Limited to specific motions and therapists. | [79] |
| Stress and Affective States | Late | Accuracy: 97.15%, F1: N/A | High accuracy with chest-worn sensors. | Limited by sensor dependency; small cohort size. | [85] |
| Clinical Prediction | Early | Weighted F1: +0.02 to +0.16 (vs. unimodal data) | Demonstrates benefits of multimodal fusion. | Limited improvement in some tasks. | [80] |
| Cervical Spine Disorder | Late | Accuracy: 92.1%, F1: 0.92 | Effective decision- and feature-level fusion. | Limited to cervical spine disorders. | [55] |
| Necrotizing Enterocolitis (NEC) | Early | Accuracy: 94.2%, Sensitivity: 93.65% | High sensitivity and specificity. | Limited to neonatal intensive care data. | [81] |
| Severe Acute Pancreatitis | Intermediate | AUC: 0.874–0.916, Accuracy: 85–94.6% | Integrates clinical and imaging data effectively. | Relatively small dataset size. | [56] |
| Sleep Apnea-Hypopnea Syndrome | Intermediate | Accuracy: 77.6%, AUC: 0.709 | Audio features combined with semantic representations. | Limited sample size; moderate accuracy. | [57] |
| General Ultrasound-Based Diseases | Intermediate | Accuracy: 93.08% | Accurate annotation for multiple diseases. | Limited to ultrasound imaging. | [58] |
| Pathological Tissue Classification | Intermediate | Success Rate: 100% | Effective adversarial attack detection. | Focuses solely on pathology data. | [59] |
| Radiological Imaging Diagnosis | Intermediate | BLEU-4: 0.661, ROUGE-L: 0.748 | Generates high-quality radiological reports. | Limited to radiology; text accuracy is dataset-dependent. | [60] |
| Sepsis Prediction | Early | ROC AUC: 0.87, F1: 0.81 | Strong predictive performance for early sepsis detection. | Highly specific to ICU patient data. | [61] |
| Breast Cancer Diagnosis | Intermediate | F1: 0.93, Accuracy: 94.5% | Robust performance across multiple modalities. | Focuses only on breast cancer imaging. | [62] |
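ROC AUC is the most frequently reported metric in the table above. One way to read it, shown here as a minimal sketch rather than the procedure used by any cited study, is the rank-based (Mann-Whitney) interpretation: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The function name `roc_auc` is an illustrative assumption, and the pairwise loop is O(P·N), chosen for readability; library routines such as scikit-learn's `roc_auc_score` compute the same quantity more efficiently.

```python
from itertools import product
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as P(score of a random positive > score of a random negative).

    Hypothetical helper for illustration; labels are 1 (positive) or 0
    (negative), and tied scores contribute half a concordant pair.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    # Count concordant (positive, negative) pairs over all combinations.
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg)
    )
    return wins / (len(pos) * len(neg))


# Toy scores: 3 of the 4 positive/negative pairs are ranked correctly.
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))  # 0.75
```

Because ROC AUC does not depend on class prevalence while AUC-PR does, the two can diverge substantially on imbalanced outcomes, which is consistent with an AUC-ROC of 0.8921 appearing alongside an AUC-PR of 0.5635 for in-hospital mortality [77].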

| Disease/Application | Fusion Technique | Key Metrics | Strengths | Weaknesses | Ref. |
|---|---|---|---|---|---|
| Thoracic Diseases | Intermediate | AUC: 0.985 (VisualBERT), ROUGE: 0.65, BLEU: 0.35 | Effective for disease classification and report summarization. | Focuses only on chest X-rays; dataset-specific performance. | [63] |
| COVID-19 | Intermediate | Accuracy: 98.5% (CT scan), 98.6% (X-ray) | High accuracy using diverse datasets. | Limited to respiratory diseases; no fusion of text. | [64] |
| COVID-19 (Karolinska Hospital) | Early | AUROC: 0.87, AUPRC: 0.45 (Multimodal) | Demonstrates benefits of multimodal fine-tuning. | Private dataset limits generalizability. | [82] |
| Neurological Disorders (ECG & GSR) | Intermediate | Accuracy: 68.7%, F1: 67.38% (Arousal) | Arousal recognition from combined ECG and GSR signals. | Small dataset (27 subjects) limits applicability. | [87] |
| Chest Radiograph Diagnosis | Intermediate | Precision: 0.67, Recall: 0.75, F1: 0.71 | Uses LangChain to integrate vision-language models. | Text generation metrics show moderate performance. | [65] |
| Cardiac Arrest Prediction | Intermediate | AUC: 0.93 (Combined Waveforms) | High AUC when physiological waveforms are combined. | Data limited to swine models. | [66] |
| Lung Diseases | Intermediate | Accuracy: 96.67%, F1: 96.88%, TNR: 97.02% | Robust performance using Vision Transformers. | Focused solely on infectious/non-infectious lung diseases. | [67] |
| Chronic Kidney Disease (CKD) | Intermediate | MAE: 1.03 (Training), MAPE: 3.59% (Training) | Uses multimodal prompts with LMMs for effective predictions. | Limited testing data for validation. | [68] |
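The CKD entry [68] reports two regression errors, MAE and MAPE. As a hedged sketch, assuming paired lists of true and predicted values for a continuous target, MAE is expressed in the target's own units, whereas MAPE is a unit-free percentage that is undefined whenever a true value is zero. The function names and the example values below are hypothetical and are not taken from [68].

```python
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    # Both metrics require non-empty, equal-length inputs.
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error, in the same units as the target."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent."""
    _check(y_true, y_pred)
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a true value is zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical values: absolute errors 2, 2, 1 -> MAE = 5/3;
# relative errors 4%, 5%, 5% -> MAPE = 14/3 %.
y_true, y_pred = [50.0, 40.0, 20.0], [48.0, 42.0, 21.0]
print(round(mae(y_true, y_pred), 3), round(mape(y_true, y_pred), 3))
```

Since MAPE divides by each true value, the same absolute error weighs more heavily for small targets; reporting both metrics, as [68] does, therefore gives complementary views of error, although the table notes that both were computed on training data.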

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Siam, M.K.; Hossain Faruk, M.J.; He, B.; Cheng, J.Q.; Gu, H. Multimodal Models in Healthcare: Methods, Challenges, and Future Directions for Enhanced Clinical Decision Support. Information 2025, 16, 971. https://doi.org/10.3390/info16110971

Siam MK, Hossain Faruk MJ, He B, Cheng JQ, Gu H. Multimodal Models in Healthcare: Methods, Challenges, and Future Directions for Enhanced Clinical Decision Support. Information. 2025; 16(11):971. https://doi.org/10.3390/info16110971

Chicago/Turabian Style: Siam, Md Kamrul, Md Jobair Hossain Faruk, Bofan He, Jerry Q. Cheng, and Huanying Gu. 2025. "Multimodal Models in Healthcare: Methods, Challenges, and Future Directions for Enhanced Clinical Decision Support" Information 16, no. 11: 971. https://doi.org/10.3390/info16110971

APA Style: Siam, M. K., Hossain Faruk, M. J., He, B., Cheng, J. Q., & Gu, H. (2025). Multimodal Models in Healthcare: Methods, Challenges, and Future Directions for Enhanced Clinical Decision Support. Information, 16(11), 971. https://doi.org/10.3390/info16110971