Deep Forest-Based Monocular Visual Sign Language Recognition

Abstract

1. Introduction

2. Related Work

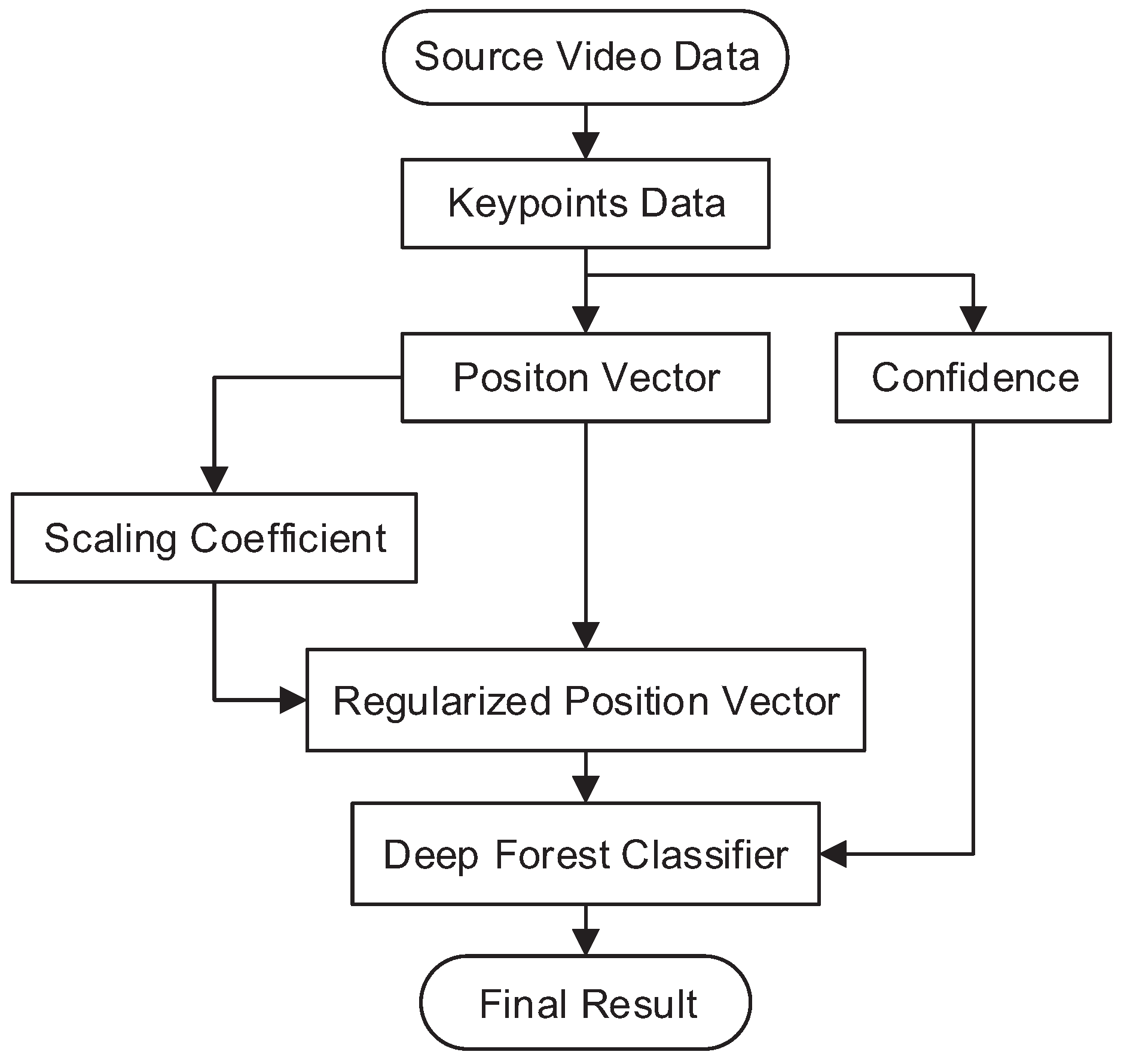

3. Framework

3.1. Problem Formulation

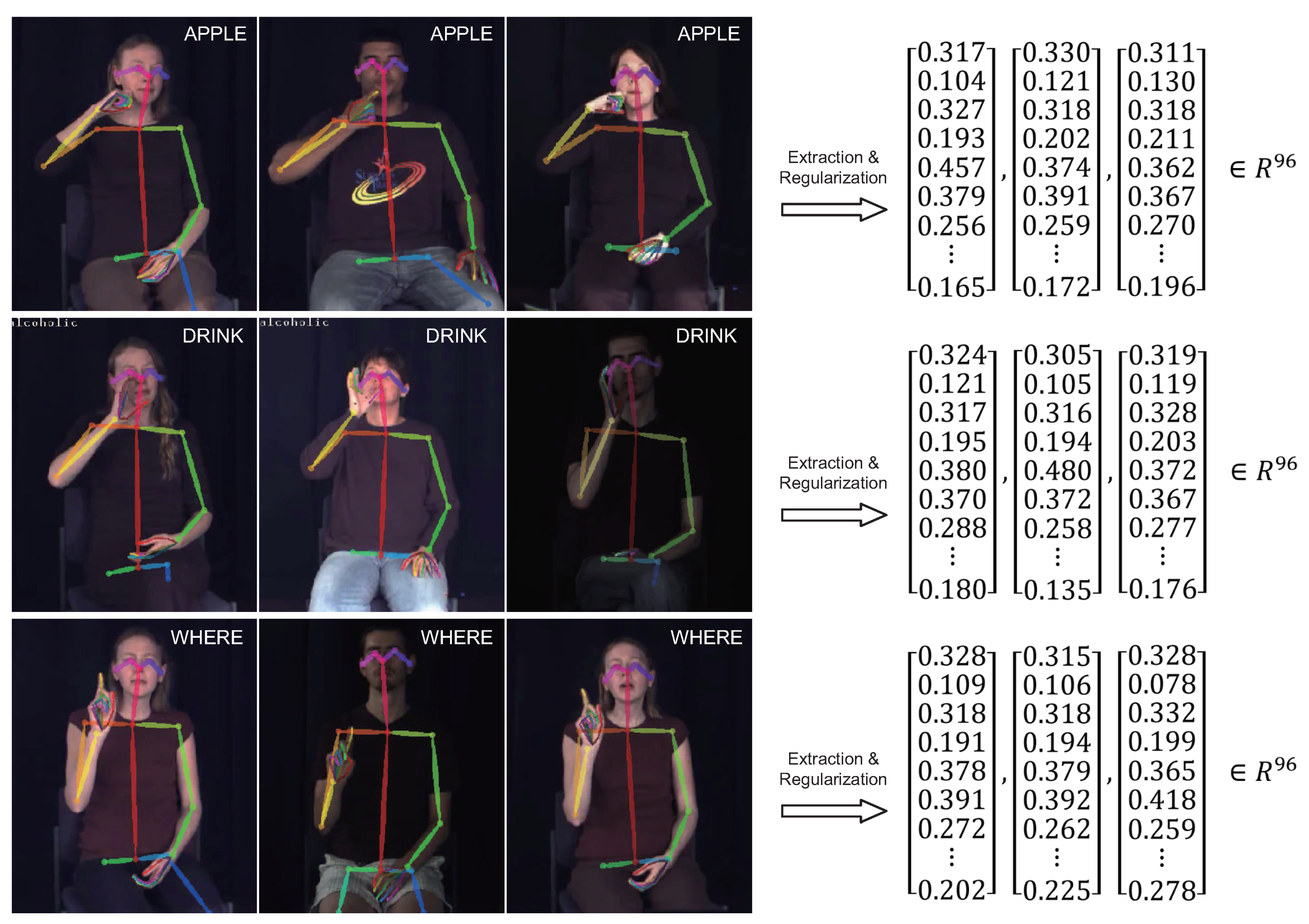

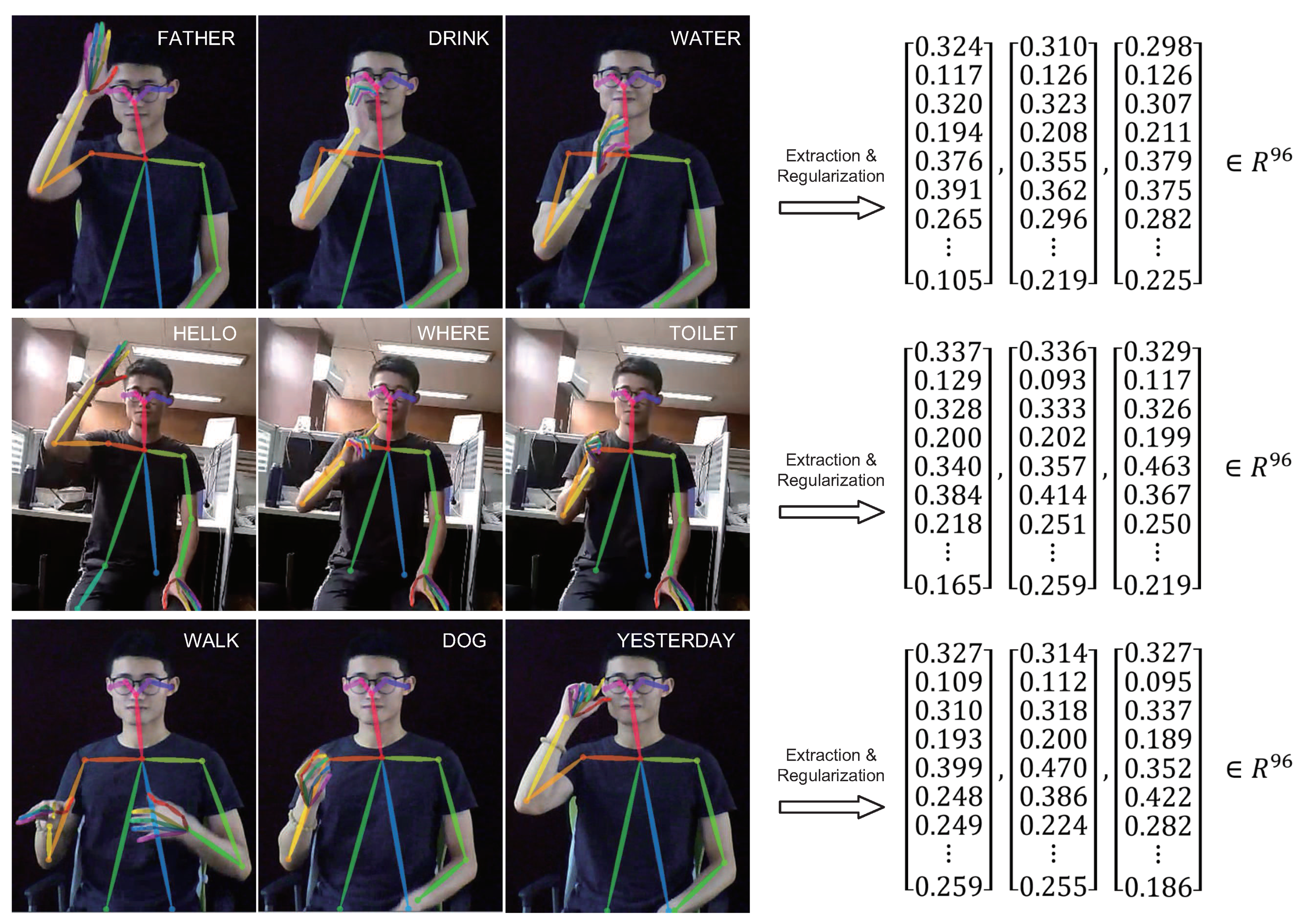

3.2. Feature Extraction

3.3. Regularization

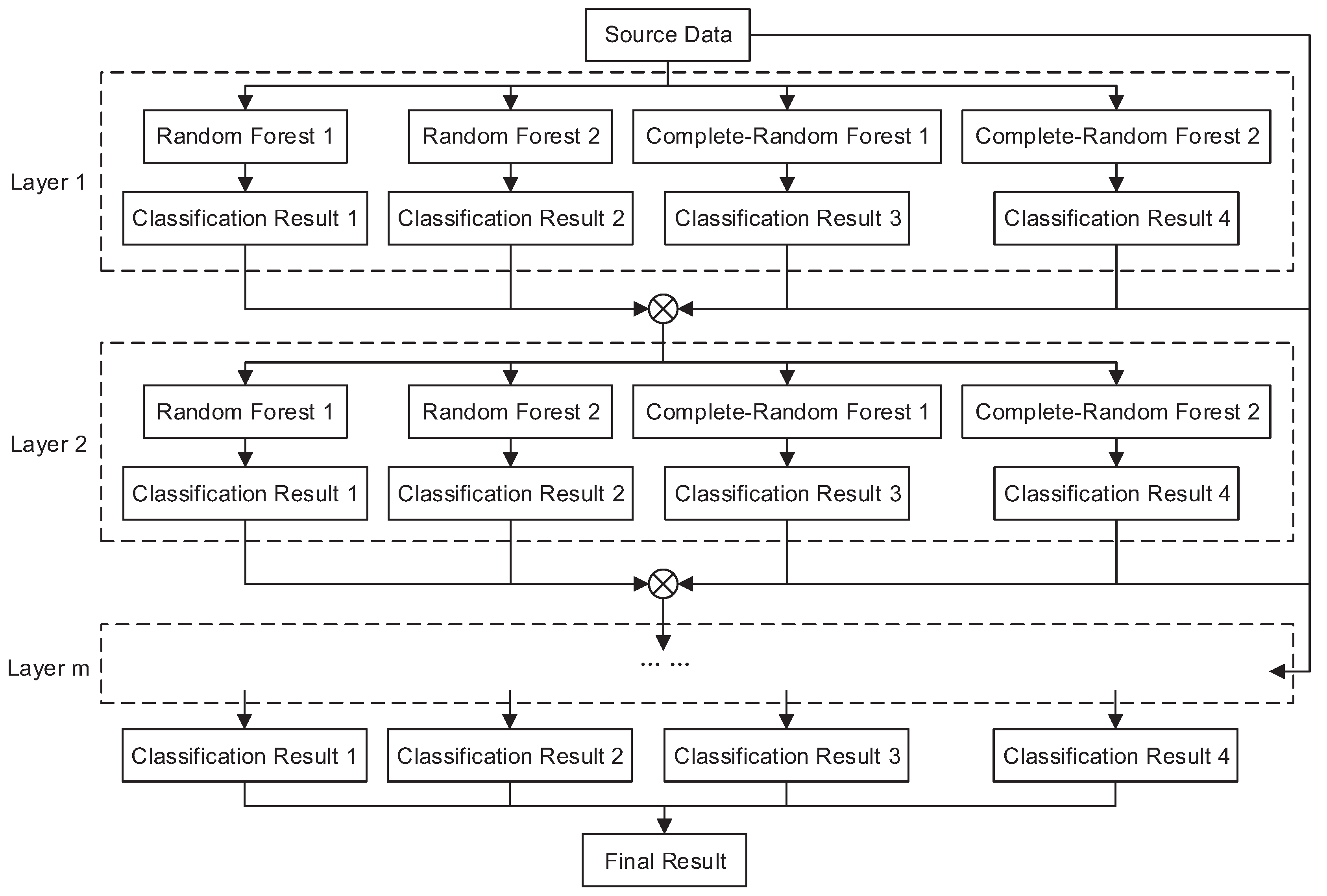

3.4. Semantic Classification

3.5. Voting Mechanism

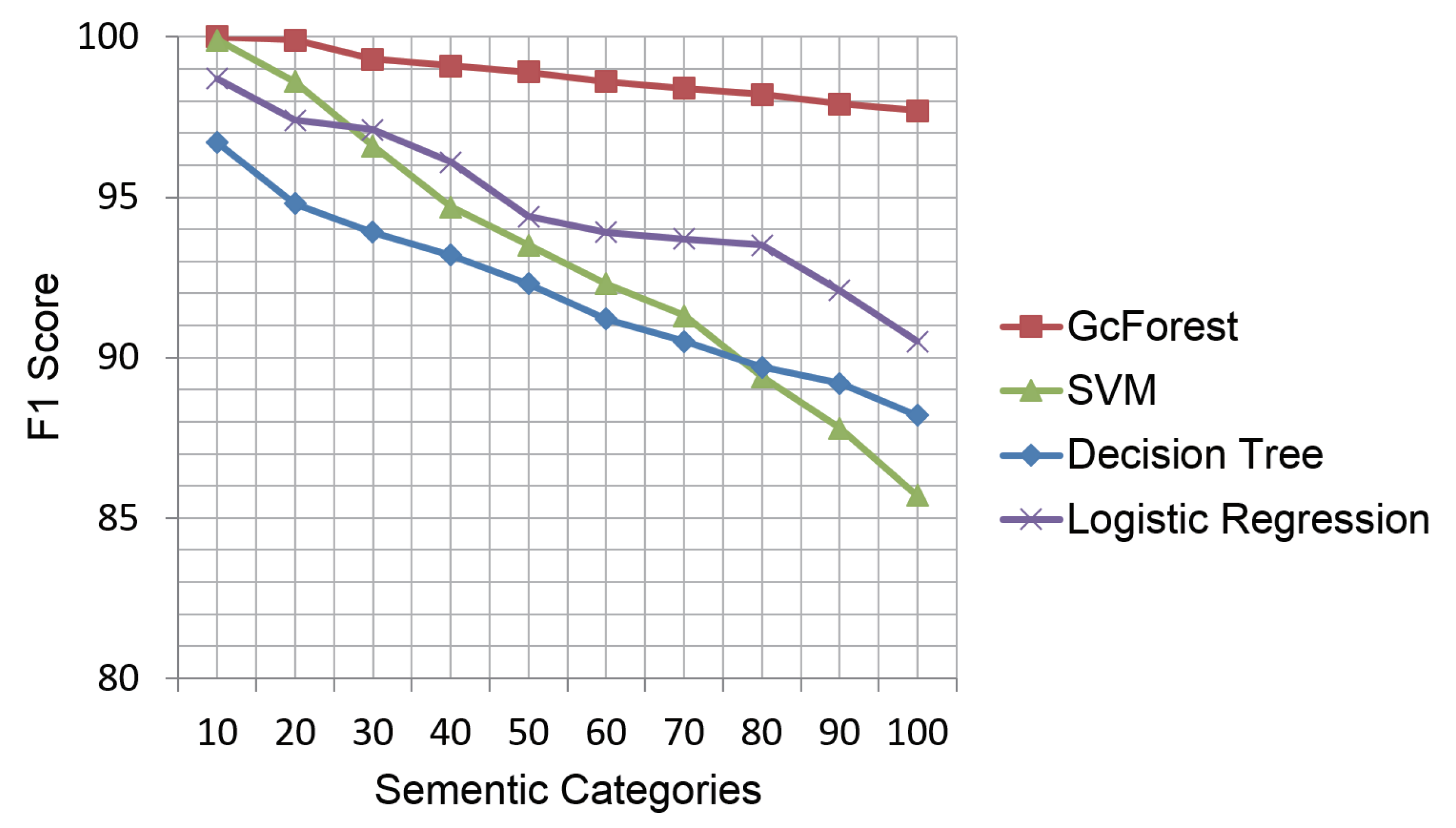

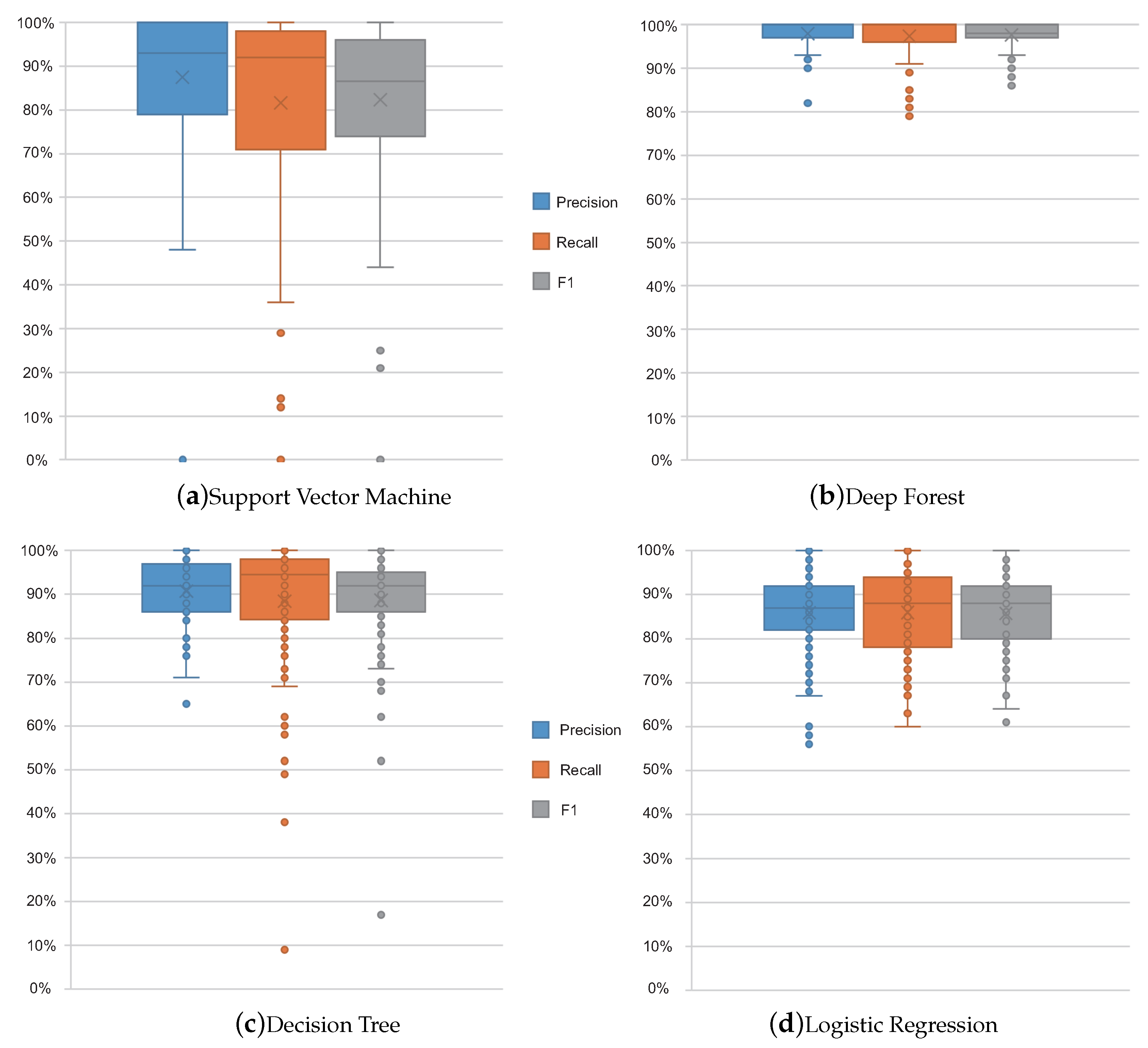

4. Experiments and Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Index | Dataset | Country | Number of Signs | Number of Samples |
|---|---|---|---|---|
| 1 | DGS Kinect 40 | Germany | 40 | 3000 |
| 2 | SIGNUM | Germany | 25 | 33,210 |
| 3 | Boston ASLLVD | USA | 3300 | 9800 |
| 4 | ASL-LEX | USA | 1000 | 1000 |
| 5 | LSA64 signs | Argentina | 64 | 3200 |

| Sign | Number | Precision (SVM) | Recall (SVM) | F1 (SVM) | Precision (Deep Forest) | Recall (Deep Forest) | F1 (Deep Forest) |
|---|---|---|---|---|---|---|---|
| None | 396 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Same | 124 | 0.91 | 0.94 | 0.93 | 0.98 | 0.99 | 0.99 |
| Walk | 109 | 0.77 | 0.95 | 0.85 | 0.93 | 0.98 | 0.96 |
| Man | 108 | 0.54 | 0.72 | 0.62 | 0.95 | 0.89 | 0.92 |
| Happy | 107 | 0.90 | 0.97 | 0.94 | 0.98 | 0.99 | 0.99 |
| Excuse | 102 | 1.00 | 1.00 | 1.00 | 0.96 | 0.98 | 0.97 |
| Run | 97 | 0.97 | 0.99 | 0.98 | 0.96 | 0.99 | 0.97 |
| Workout | 94 | 0.95 | 1.00 | 0.97 | 1.00 | 1.00 | 1.00 |
| Again | 89 | 0.92 | 0.93 | 0.93 | 0.92 | 0.93 | 0.93 |
| Live | 84 | 0.93 | 0.92 | 0.92 | 1.00 | 0.98 | 0.99 |
| Look | 80 | 0.66 | 0.97 | 0.78 | 0.94 | 1.00 | 0.97 |
| Hamburger | 79 | 0.90 | 0.94 | 0.92 | 0.82 | 0.95 | 0.88 |
| Hurt | 75 | 0.93 | 0.99 | 0.95 | 0.99 | 1.00 | 0.99 |
| Bird | 74 | 0.86 | 0.99 | 0.92 | 1.00 | 0.96 | 0.98 |
| Cat | 73 | 0.87 | 0.90 | 0.89 | 1.00 | 0.96 | 0.98 |
| Old | 66 | 0.79 | 0.58 | 0.67 | 0.98 | 0.98 | 0.98 |
| Cold | 65 | 0.93 | 0.98 | 0.96 | 0.97 | 0.98 | 0.98 |
| Banana | 64 | 1.00 | 1.00 | 1.00 | 0.97 | 1.00 | 0.98 |
| Church | 63 | 1.00 | 0.98 | 0.99 | 0.98 | 0.98 | 0.98 |
| Sleep | 63 | 0.73 | 0.92 | 0.82 | 1.00 | 1.00 | 1.00 |
| … | … | … | … | … | … | … | … |
| Average | - | 0.88 | 0.87 | 0.86 | 0.98 | 0.98 | 0.98 |
| Total | 4877 | - | - | - | - | - | - |
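The F1 columns in the per-sign table are the harmonic mean of precision and recall, F1 = 2PR / (P + R), so a low value on either side pulls F1 down sharply (for example, the SVM's precision of 0.54 on "Man" limits its F1 to 0.62 despite a recall of 0.72). The sketch below recomputes F1 for three SVM rows; `f1_from_pr` is a hypothetical helper name, and because the table reports values already rounded to two decimals, a recomputed score can differ from the tabulated one by 0.01.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        # Convention: F1 is 0 when both precision and recall are 0.
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the SVM columns of the table above.
svm_rows = {
    "Man": (0.54, 0.72),
    "Old": (0.79, 0.58),
    "Look": (0.66, 0.97),
}

for sign, (p, r) in svm_rows.items():
    print(f"{sign}: F1 = {f1_from_pr(p, r):.2f}")
```

Running it prints 0.62 for Man and 0.67 for Old, matching the table, and 0.79 for Look, where the table lists 0.78; the gap comes from computing with rounded inputs rather than the unrounded precision and recall.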

| Index | Signs | Word/Sentence |
|---|---|---|
| 1 | Apple | Apple |
| 2 | Banana | Banana |
| 3, 4 | Drink, Water | Drink-Water |
| 5, 6 | Father, Walk | Father-Walk |
| 7, 8, 9 | Hello, Where, Toilet | Hello-Where-Toilet |
| 10, 11 | Dog, Yesterday | Walk-Dog-Yesterday |

| Word/Sentence | Prediction 1 | Prediction 2 | Prediction 3 | Prediction 4 | Prediction 5 | Total | Classifier | PoF | RoS |
|---|---|---|---|---|---|---|---|---|---|
| Apple | Apple(40) | - | - | - | - | 40 | Deep Forest | 1.00 | 1/1 |
| | Apple(34) | Hello(6) | - | - | - | | SVM | 0.85 | 1/1 |
| Banana | Banana(60) | None(25) | Again(16) | Come(14) | Walk(7) | 158 | Deep Forest | 0.45 | 1/1 |
| | Banana(63) | None(25) | Hamburger(19) | Friend(16) | Come(15) | | SVM | 0.47 | 1/1 |
| Drink-Water | Drink(11) | None(6) | Water(4) | Come(4) | Home(3) | 37 | Deep Forest | 0.48 | 1/2 |
| | Drink(9) | Eat(8) | None(4) | Come(4) | Water(3) | | SVM | 0.27 | 1/2 |
| Father-Walk | Walk(11) | None(8) | Father(4) | Again(4) | Egg(4) | 50 | Deep Forest | 0.36 | 1/2 |
| | Walk(10) | None(8) | Know(8) | Hello(6) | Father(5) | | SVM | 0.24 | 1/2 |
| Hello-Where-Toilet | Toilet(36) | Home(28) | Hello(19) | Finish(15) | Drink(8) | 124 | Deep Forest | 0.44 | 2/3 |
| | Toilet(61) | Boy(18) | Man(10) | Hearing(7) | Hello(7) | | SVM | 0.49 | 1/3 |
| Walk-Dog-Yesterday | Walk(14) | Milk(9) | Dog(8) | Hello(6) | Other(4) | 53 | Deep Forest | 0.40 | 2/3 |
| | Walk(12) | Egg(8) | Milk(8) | Dog(7) | Hello(6) | | SVM | 0.23 | 1/3 |
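The counts in parentheses in the prediction table look like per-frame votes: for "Apple", for instance, the SVM's 34 Apple frames plus 6 Hello frames add up to the 40-frame total. Assuming the voting mechanism of Section 3.5 works this way (one classifier label per frame, tallied over the clip), a minimal sketch of the tally might look like the following. The helper names `tally_votes` and `winning_sign`, and the choice to treat "None" as a background label that cannot win, are illustrative assumptions rather than the paper's implementation.

```python
from collections import Counter


def tally_votes(frame_labels, k=5):
    """Return the k most-voted per-frame labels and the number of frames.

    Hypothetical helper: assumes the classifier emits one label per frame.
    """
    counts = Counter(frame_labels)
    return counts.most_common(k), len(frame_labels)


def winning_sign(frame_labels, background="None"):
    """Most-voted label, ignoring frames assigned to the background label."""
    counts = Counter(label for label in frame_labels if label != background)
    if not counts:
        return None  # every frame was background
    return counts.most_common(1)[0][0]


# Synthetic frame streams whose label counts mirror the two "Apple" rows;
# the frame order is made up, since only the counts are reported.
deep_forest_frames = ["Apple"] * 40
svm_frames = ["Apple"] * 34 + ["Hello"] * 6

for name, frames in [("Deep Forest", deep_forest_frames), ("SVM", svm_frames)]:
    top, total = tally_votes(frames)
    print(name, top, total, winning_sign(frames))
```

Both streams elect "Apple"; the SVM's tally additionally shows the 6 stray "Hello" votes that make up the second prediction column.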

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xue, Q.; Li, X.; Wang, D.; Zhang, W. Deep Forest-Based Monocular Visual Sign Language Recognition. Appl. Sci. 2019, 9, 1945. https://doi.org/10.3390/app9091945


Chicago/Turabian Style: Xue, Qifan, Xuanpeng Li, Dong Wang, and Weigong Zhang. 2019. "Deep Forest-Based Monocular Visual Sign Language Recognition." Applied Sciences 9, no. 9: 1945. https://doi.org/10.3390/app9091945