Parameter Efficient Asymmetric Feature Pyramid for Early Wildfire Detection

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

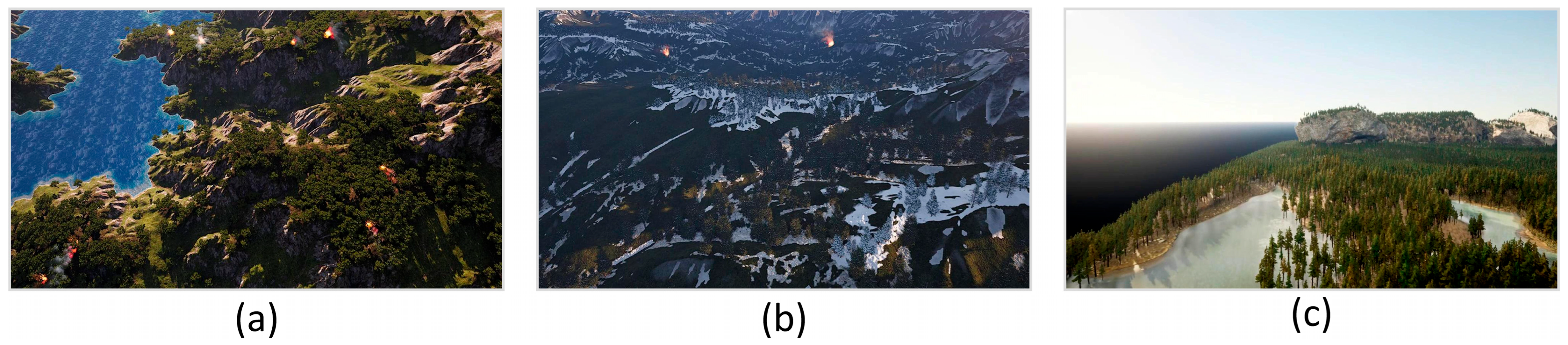

2.1.1. Data Source and Composition

2.1.2. Dataset Characteristics and Challenges

2.1.3. Dataset Split and Formatting

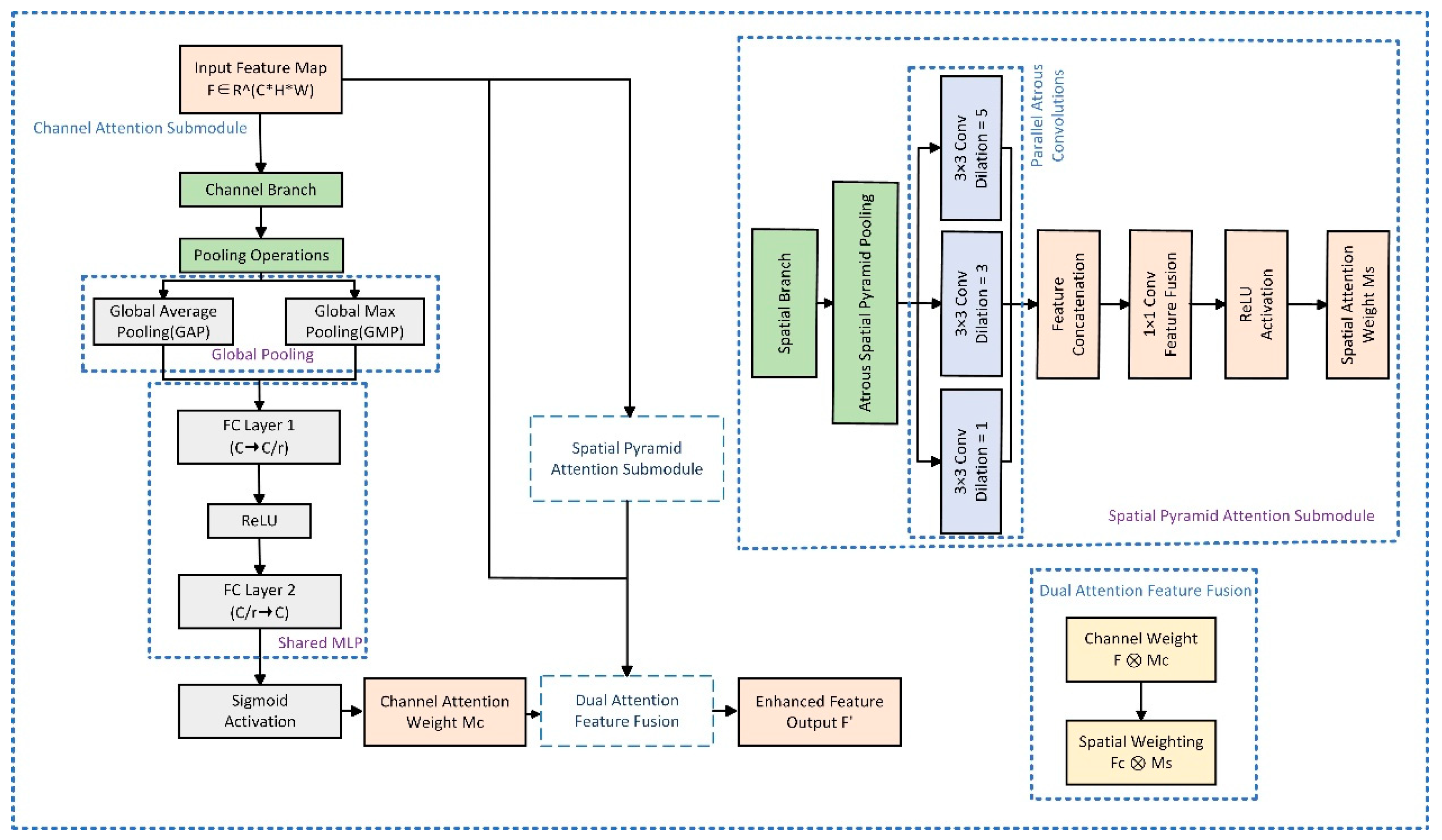

2.2. Proposed Method

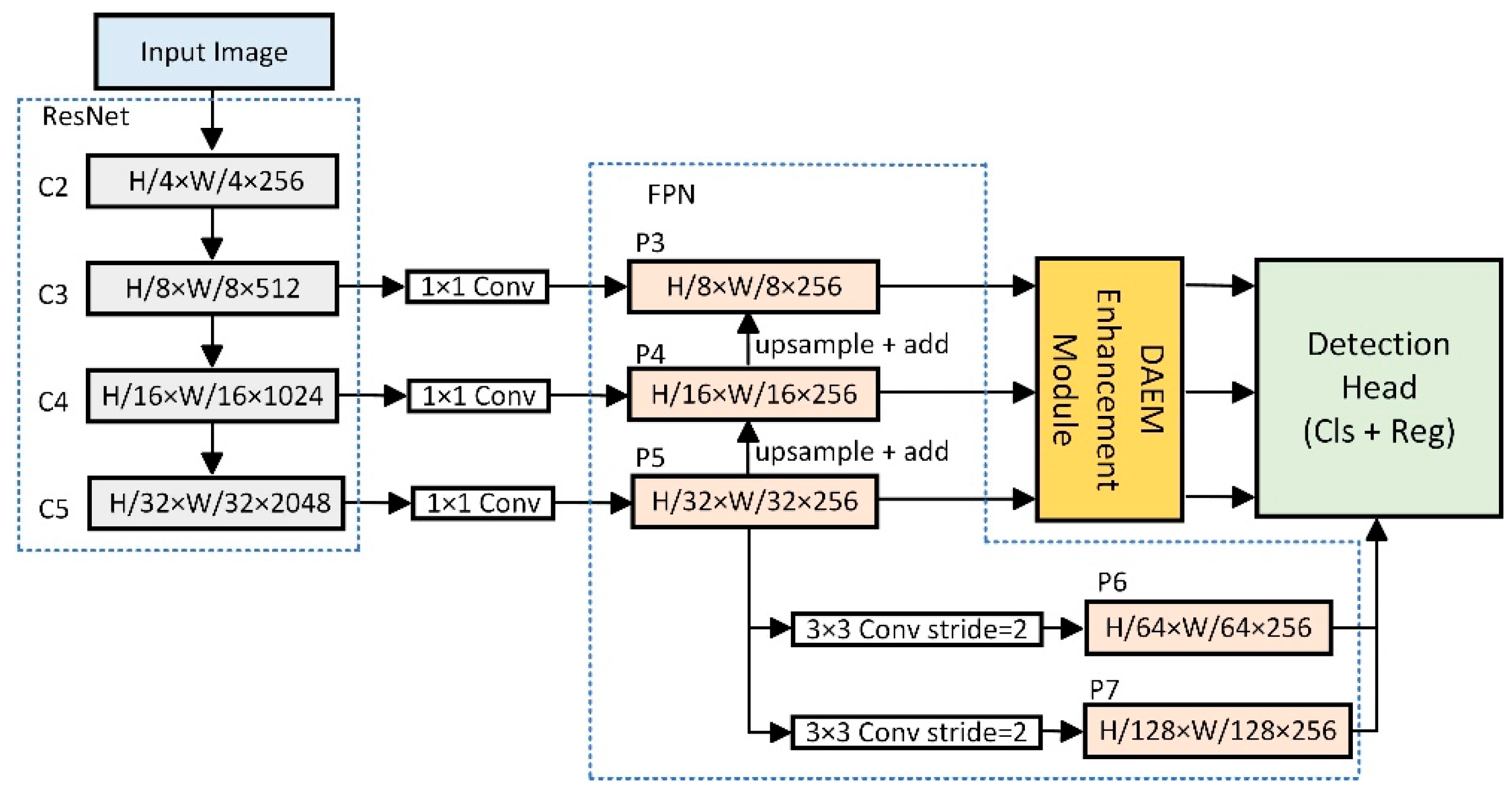

2.2.1. Baseline Model

2.2.2. Optimization of Bounding-Box Regression Loss

2.2.3. Iterative Design of an Asymmetric Feature Pyramid Network

2.3. Experimental Setup

2.3.1. Hardware and Software

2.3.2. Reproducibility Details

2.4. Evaluation Metrics

3. Results

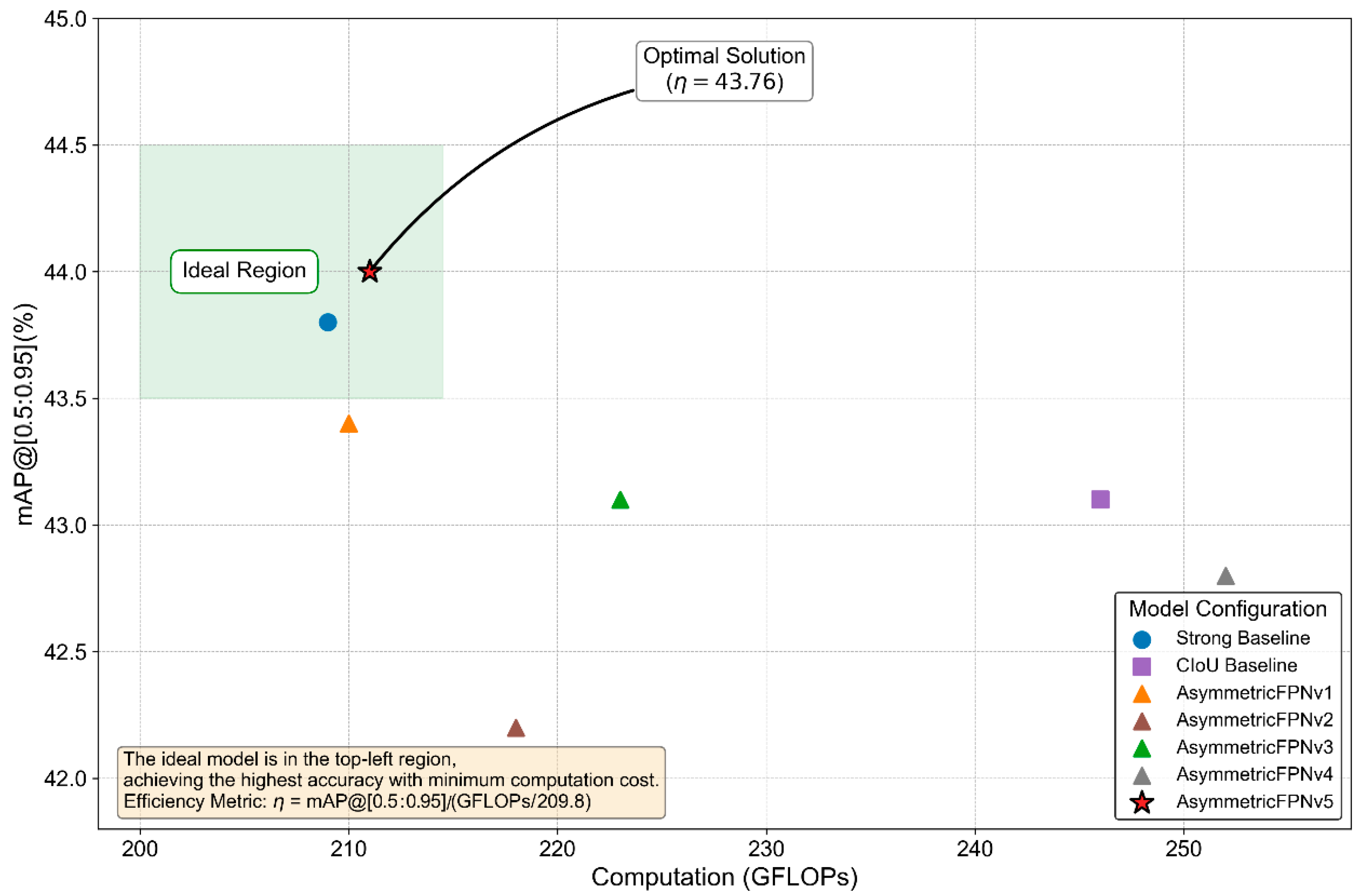

3.1. Ablation Study and Architectural Evolution

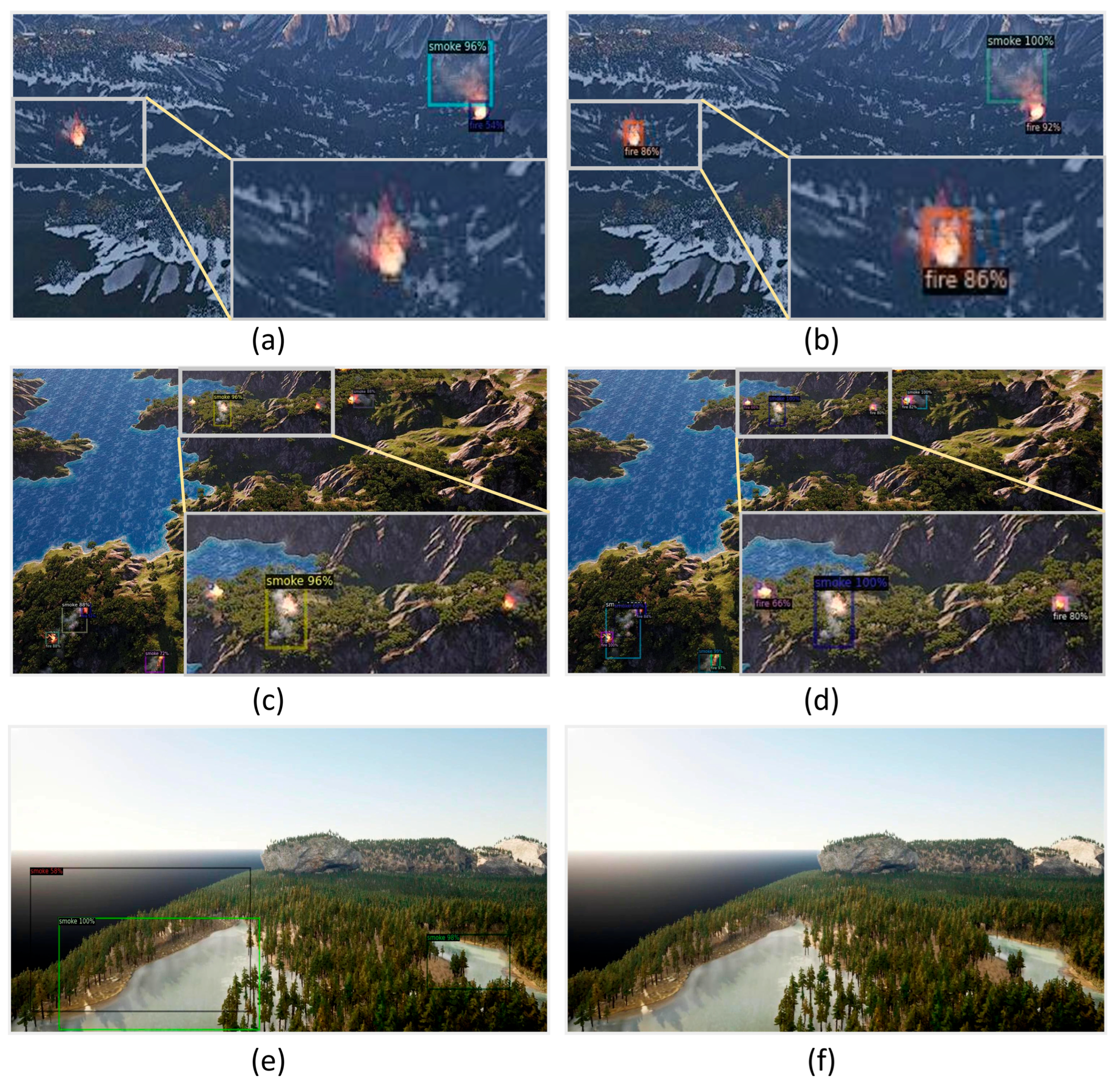

3.2. Qualitative Analysis

3.3. Comparison with State-of-the-Art Models

4. Discussion

4.1. Practical Applicability and Deployment

4.2. Attributing the Success of AsymmetricFPNv5 and the Path to an Efficiency Optimum

4.3. Comparison and Reflection on SOTA Models: Rebalancing Accuracy, Speed, and Efficiency

4.4. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| APs | Average Precision for small objects |
| BiFPN | Bidirectional Feature Pyramid Network |
| CIoU | Complete Intersection over Union |
| DPPM | Dense Pyramid Pooling Module |
| DyFPN | Dynamic Feature Pyramid Network |
| EMA | Exponential Moving Average |
| FAM | Feature Alignment Module |
| FPN | Feature Pyramid Network |
| FPS | Frames Per Second |
| GFLOPs | Giga Floating-Point Operations |
| GN | Group Normalization |
| IoU | Intersection over Union |
| LEB | Lightweight Enhancement Block |
| MCCL | Multiscale Contrastive Context Learning |
| NMS | Non-Maximum Suppression |
| SiLU | Sigmoid Linear Unit |
| SPPF | Spatial Pyramid Pooling-Fast |
| UAV | Unmanned Aerial Vehicle |

| Model Configuration | mAP@[0.5:0.95] (%) | mAP@0.5 (%) | APs (%) | Params (M) | GFLOPs |
|---|---|---|---|---|---|
| Strong baseline | 43.8 | 82.7 | 24.4 | 36.3 | 209.8 |
| CIoU baseline | 43.1 | 82.4 | 25.2 | 36.3 | 210.0 |
| AsymFPNv1 | 43.4 | 83.1 | 24.1 | 36.9 | 209.8 |
| AsymFPNv2 | 42.3 | 84.3 | 19.4 | 37.5 | 218.8 |
| AsymFPNv3 | 42.8 | 85.4 | 26.6 | 38.2 | 251.6 |
| AsymFPNv4 | 43.1 | 85.3 | 24.8 | 41.9 | 223.0 |
| AsymFPNv5 | 44.0 | 85.5 | 25.3 | 36.5 | 211.0 |

| Model Configuration | mAP@[0.5:0.95] (%) | mAP@0.5 (%) | Recall (%) |
|---|---|---|---|
| Faster R-CNN | 14.0 | 43.6 | 39.8 |
| RetinaNet (R-50) | 34.8 | 77.9 | 74.3 |
| YOLOX-l | 48.2 | 84.9 | 80.5 |
| YOLOX-x | 48.6 | 84.8 | 80.7 |
| YOLOv5l | 48.6 | 85.3 | 81.6 |
| YOLOv5x | 48.5 | 86.2 | 82.0 |
| YOLOv8l | 49.4 | 85.5 | 81.5 |
| YOLOv8x | 48.1 | 84.9 | 80.9 |
| AsymmetricFPNv5 | 44.0 | 85.5 | 81.2 |

| Model Configuration | Params (M) | GFLOPs | FPS | η | η@[0.5:0.95] |
|---|---|---|---|---|---|
| Faster R-CNN | 41.5 | 246.3 | 30.28 | 1.05 | 0.34 |
| RetinaNet (R-50) | 37.9 | 246.0 | 34.45 | 2.06 | 0.92 |
| YOLOX-l | 54.2 | 155.6 | 62.68 | 1.57 | 0.89 |
| YOLOX-x | 99.1 | 281.9 | 35.36 | 0.86 | 0.49 |
| YOLOv5l | 46.1 | 107.7 | 77.83 | 1.85 | 1.05 |
| YOLOv5x | 86.1 | 203.8 | 42.51 | 1.00 | 0.56 |
| YOLOv8l | 43.6 | 164.8 | 59.36 | 1.96 | 1.13 |
| YOLOv8x | 68.1 | 257.4 | 38.94 | 1.25 | 0.71 |
| AsymmetricFPNv5 | 36.5 | 211.0 | 26.10 | 2.34 | 1.21 |
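The η columns are not defined in this excerpt. The tabulated values are, however, reproduced to two decimals by dividing each model's mAP (in percent) by its parameter count (in millions): η from mAP@0.5 and η@[0.5:0.95] from mAP@[0.5:0.95]. The sketch below recomputes both columns under that assumption; the function names `efficiency` and `efficiency_table` are hypothetical and the input figures are copied from the comparison tables above.

```python
# Recompute the parameter-efficiency columns from the comparison tables.
# ASSUMPTION: eta = mAP (%) / parameters (M). This is inferred from the
# tabulated values, not taken from a definition in the text.

# name -> (params in millions, mAP@0.5 in %, mAP@[0.5:0.95] in %)
MODELS = {
    "Faster R-CNN": (41.5, 43.6, 14.0),
    "RetinaNet (R-50)": (37.9, 77.9, 34.8),
    "YOLOX-l": (54.2, 84.9, 48.2),
    "YOLOX-x": (99.1, 84.8, 48.6),
    "YOLOv5l": (46.1, 85.3, 48.6),
    "YOLOv5x": (86.1, 86.2, 48.5),
    "YOLOv8l": (43.6, 85.5, 49.4),
    "YOLOv8x": (68.1, 84.9, 48.1),
    "AsymmetricFPNv5": (36.5, 85.5, 44.0),
}


def efficiency(map_percent: float, params_m: float) -> float:
    """Accuracy points (%) obtained per million parameters (assumed definition)."""
    if params_m <= 0:
        raise ValueError("parameter count must be positive")
    return map_percent / params_m


def efficiency_table(models: dict) -> dict:
    """Return name -> (eta at IoU 0.5, eta at IoU 0.5:0.95), rounded to 2 decimals."""
    table = {}
    for name, (params_m, map50, map5095) in models.items():
        table[name] = (
            round(efficiency(map50, params_m), 2),
            round(efficiency(map5095, params_m), 2),
        )
    return table


if __name__ == "__main__":
    for name, (eta50, eta5095) in efficiency_table(MODELS).items():
        print(f"{name:<18} eta={eta50:.2f}  eta@[0.5:0.95]={eta5095:.2f}")
```

Read this way, η rewards models that reach a given accuracy with fewer parameters. It says nothing about throughput, so the FPS column has to be considered separately.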

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Cheng, X.; Bian, J.; Kang, Y.; Xie, X.; Deng, Y.; Lu, Q.; Tang, J.; Shi, Y.; Zhao, J. Parameter Efficient Asymmetric Feature Pyramid for Early Wildfire Detection. Appl. Sci. 2025, 15, 12086. https://doi.org/10.3390/app152212086
